Abstract

In this paper, a linear combination of quadratic modified hat functions is proposed to solve a stochastic Itô–Volterra integral equation with multi-stochastic terms. All known and unknown functions are expanded in terms of modified hat functions and substituted into the original equation. The operational matrices are calculated and embedded in the equation to obtain a linear system of equations whose solution gives the expansion coefficients of the unknown. Under some conditions, the error of the method is \(O(h^3)\). The accuracy and reliability of the method are studied and compared with those of block pulse functions and generalized hat functions in some examples.

Introduction

Modelling problems in many areas of science leads to stochastic equations [1]. Such equations arise in mathematics and statistics [2,3,4,5,6,7], finance [8,9,10], physics [11,12,13], mechanics [14, 15], biology [16,17,18], and medicine [19, 20]. Because most of them do not have an exact solution, finding reliable and accurate numerical approximations has become increasingly important [21].

In recent years, various orthogonal basis functions and polynomials have been used to find numerical solutions of integral equations, such as block pulse functions [2, 21, 22], hat functions [23], hybrid functions [24, 25], wavelet methods [26,27,28], triangular functions [3, 29], and Bernstein polynomials [30]. In this paper, modified hat functions (MHFs) are applied to find an approximate solution of the following stochastic Itô–Volterra integral equation with multi-stochastic terms,

\[X(t)=f(t)+\int _0^t\mu (s,t)X(s)\,ds+\sum _{j=1}^{n}\int _0^t\sigma _j(s,t)X(s)\,dB_j(s),\]

where \(t\in D=[0,T), X, f, \mu\) and \(\sigma _j,\,j=1,2,\dots ,n\), for \(s,t\in D\) are stochastic processes defined on the same probability space (\(\Omega ,F,P\)) and X is unknown. Also \(\int _0^t\sigma _j(s,t)X(s)\,dB_j(s)\,,\,j=1,2,\dots ,n\) are Itô integrals and \(B_1(t), B_2(t),\dots , B_n(t)\) are Brownian motion processes [31, 32].

The paper is organized as follows. In the “MHFs and their properties” section, the MHFs and their properties are described. In the “Operational matrices” section, the operational matrices are derived. In the “Solving stochastic Itô–Volterra integral equation with multi-stochastic terms by the MHFs” section, the MHFs and their operational matrices are applied to the above equation and the approximate solution is found. In the “Error analysis” section, the error of the present method is discussed. In the “Numerical examples” section, some numerical examples are solved by this method. Finally, the last section concludes the paper.

MHFs and their properties

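In this section, we recall the definition and properties of modified hat functions [33]. Let \(m\ge 2\) be an even integer and \(h=\frac{T}{m}\). Assume that the interval [0, T) is divided into \(\frac{m}{2}\) equal subintervals \([ih,(i+2)h], i=0, 2,\dots , (m-2)\), and let \(X_m\) be the set of all continuous functions that are quadratic polynomials when restricted to each of these subintervals. Because each element of \(X_m\) is completely determined by its values at the \((m+1)\) nodes \(ih, i=0, 1,\dots , m\), the dimension of \(X_m\) is \((m+1)\). A function \(f\in \chi =C^3(D)\) can be approximated by its expansion with respect to the following set of \((m+1)\) MHFs, which are defined over D as

\[h_0(t)=\begin{cases}\frac{1}{2h^2}(t-h)(t-2h), & 0\le t\le 2h,\\ 0, & \text{otherwise}.\end{cases}\]

If i is odd and \(1\le i\le (m-1)\),

\[h_i(t)=\begin{cases}\frac{-1}{h^2}\big (t-(i-1)h\big )\big (t-(i+1)h\big ), & (i-1)h\le t\le (i+1)h,\\ 0, & \text{otherwise}.\end{cases}\]

If i is even and \(2 \le i \le (m-2)\),

\[h_i(t)=\begin{cases}\frac{1}{2h^2}\big (t-(i-1)h\big )\big (t-(i-2)h\big ), & (i-2)h\le t\le ih,\\ \frac{1}{2h^2}\big (t-(i+1)h\big )\big (t-(i+2)h\big ), & ih\le t\le (i+2)h,\\ 0, & \text{otherwise},\end{cases}\]

and

\[h_m(t)=\begin{cases}\frac{1}{2h^2}\big (t-(T-h)\big )\big (t-(T-2h)\big ), & T-2h\le t\le T,\\ 0, & \text{otherwise}.\end{cases} \tag{1}\]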
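To make the definition concrete, the following sketch evaluates all \((m+1)\) MHFs at once. It assumes the standard nodal quadratic form of the MHFs on each double-width panel \([2kh,(2k+2)h]\); the function name `mhf_basis` and the array layout are illustrative choices and are not taken from the paper.

```python
import numpy as np

def mhf_basis(t, m, T=1.0):
    """Return an (m+1, len(t)) array whose row i holds h_i evaluated at t.

    Assumes the nodal quadratic form of the MHFs: on each panel
    [2k*h, (2k+2)*h] only the three functions attached to the panel's
    left, middle and right nodes are non-zero.
    """
    if m < 2 or m % 2:
        raise ValueError("m must be an even integer >= 2")
    t = np.atleast_1d(np.asarray(t, dtype=float))
    h = T / m
    # Index of the panel containing each t (the last panel also takes t = T).
    k = np.clip(np.floor(t / (2 * h)).astype(int), 0, m // 2 - 1)
    u = t / h - 2 * k                        # local coordinate in [0, 2]
    H = np.zeros((m + 1, t.size))
    cols = np.arange(t.size)
    H[2 * k, cols] = 0.5 * (u - 1) * (u - 2)     # left node of the panel
    H[2 * k + 1, cols] = -u * (u - 2)            # middle node
    H[2 * k + 2, cols] = 0.5 * u * (u - 1)       # right node
    return H
```

Evaluating `mhf_basis` at the nodes \(jh\) should return the identity matrix up to rounding, because each MHF equals one at its own node and zero at all others.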

Properties of the MHFs

By considering the above definition, the following properties come as a result.

3) They are linearly independent.

Suppose

\[\sum _{i=0}^{m}c_ih_i(t)=0,\quad t\in D;\]

by applying the second property and considering definition (1), we obtain

\[c_i=0,\quad i=0,1,\dots ,m.\]

7) Let \(\mathbf {A}\) be an \((m+1)\times (m+1)\) matrix and \({\mathbf {H}}(t)\) be the vector of the \((m+1)\) MHFs defined in (2). Then \({\mathbf {H}}(t)^T\mathbf {A}{\mathbf {H}}(t)\simeq {\mathbf {H}}(t)^T\tilde{\mathbf {A}}\), where \(\tilde{\mathbf {A}}\) is a column vector with \((m+1)\) entries equal to the diagonal entries of the matrix \(\mathbf {A}\).
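Property 7 can be read as nodal interpolation of the quadratic form: at a node \(kh\) the vector \({\mathbf {H}}(kh)\) is the k-th unit vector, so \({\mathbf {H}}^T\mathbf {A}{\mathbf {H}}\) equals \(A_{kk}\) there. The sketch below measures the gap between the two sides on a sample grid. It assumes the nodal quadratic form of the MHFs; `mhf_basis` and `diag_collapse_gap` are hypothetical helper names.

```python
import numpy as np

def mhf_basis(t, m, T=1.0):
    """Rows are h_0..h_m evaluated at the points t (m even, m >= 2)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    h = T / m
    k = np.clip(np.floor(t / (2 * h)).astype(int), 0, m // 2 - 1)  # panel index
    u = t / h - 2 * k                        # local coordinate in [0, 2]
    H = np.zeros((m + 1, t.size))
    cols = np.arange(t.size)
    H[2 * k, cols] = 0.5 * (u - 1) * (u - 2)     # left node of the panel
    H[2 * k + 1, cols] = -u * (u - 2)            # middle node
    H[2 * k + 2, cols] = 0.5 * u * (u - 1)       # right node
    return H

def diag_collapse_gap(A, m, T=1.0, samples=401):
    """Largest |H(t)^T A H(t) - H(t)^T diag(A)| over an even sample grid."""
    t = np.linspace(0.0, T, samples)
    H = mhf_basis(t, m, T)
    full = np.einsum("ik,ij,jk->k", H, A, H)     # quadratic form at each t
    collapsed = np.diag(A) @ H                   # expansion with A's diagonal
    return np.max(np.abs(full - collapsed))

def sample_matrix(m, T=1.0):
    """Coefficient matrix of the smooth test kernel exp(s + t) (illustrative)."""
    nodes = np.arange(m + 1) * T / m
    return np.exp(nodes[:, None] + nodes[None, :])
```

When the grid consists of the nodes only, the gap is zero up to rounding; between nodes it is an interpolation error that shrinks as \(m\) grows for a smooth kernel.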

Function approximation

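An arbitrary real function f on D can be expanded by these functions as [34]

\[f(t)\simeq \sum _{i=0}^{m}f_ih_i(t)=\mathbf F^T\mathbf H (t)=\mathbf H ^T(t)\mathbf F, \tag{3}\]

where \(\mathbf F = [f_0 , f_1 , \dots , f_m]^T\), \(\mathbf H (t)=[h_0(t),h_1(t),\dots ,h_m(t)]^T\) is the MHFs vector of relation (2), and the coefficients in (3) are given by \(f_i=f(ih), i=0,1,\dots ,m.\)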

Similarly, an arbitrary real function of two variables g(s, t) on \(D \times D\) can be expanded by these basis functions as

\[g(s,t)\simeq \mathbf H ^T(s)\,\mathbf G\,\mathbf I (t), \tag{4}\]

where \(\mathbf H (s)\) and \(\mathbf I (t)\) are, respectively, \((m_1+1)\)- and \((m_2+1)\)-dimensional MHFs vectors, and \(\mathbf G\) is the \((m_1+1)\times (m_2+1)\) MHFs coefficient matrix with entries \(G_{ij}=g(ih,jk)\), \(i=0,1,2,\dots ,m_1\), \(j=0,1,2,\dots ,m_2\), where \(h=\frac{T}{m_1}\) and \(k=\frac{T}{m_2}.\) For convenience, we put \(m_1=m_2=m\).
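Because the expansion coefficients are plain nodal samples, building \(\mathbf F\) and \(\mathbf G\) needs no integration. The sketch below forms both and evaluates the expansions on a grid, with \(m_1=m_2=m\). The test functions and the helper names `expand_1d` and `expand_2d` are illustrative assumptions, as is the nodal quadratic form used in `mhf_basis`.

```python
import numpy as np

def mhf_basis(t, m, T=1.0):
    """Rows are h_0..h_m evaluated at the points t (m even, m >= 2)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    h = T / m
    k = np.clip(np.floor(t / (2 * h)).astype(int), 0, m // 2 - 1)  # panel index
    u = t / h - 2 * k                        # local coordinate in [0, 2]
    H = np.zeros((m + 1, t.size))
    cols = np.arange(t.size)
    H[2 * k, cols] = 0.5 * (u - 1) * (u - 2)     # left node of the panel
    H[2 * k + 1, cols] = -u * (u - 2)            # middle node
    H[2 * k + 2, cols] = 0.5 * u * (u - 1)       # right node
    return H

def expand_1d(f, m, T=1.0):
    """Coefficient vector F with f_i = f(ih)."""
    nodes = np.arange(m + 1) * T / m
    return f(nodes)

def expand_2d(g, m, T=1.0):
    """Coefficient matrix G with G_ij = g(ih, jh)."""
    nodes = np.arange(m + 1) * T / m
    return g(nodes[:, None], nodes[None, :])

m, T = 8, 1.0
t = np.linspace(0.0, T, 201)
H = mhf_basis(t, m, T)

F = expand_1d(np.exp, m, T)
f_m = F @ H                                   # F^T H(t) on the grid

g = lambda s, u: np.sin(s) * np.exp(u)        # illustrative smooth kernel
G = expand_2d(g, m, T)
g_m = H.T @ G @ H                             # H(s)^T G H(t) on the grid
```

Here `g_m[a, b]` approximates \(g(t_a,t_b)\), so the first index runs over \(s\) and the second over \(t\), matching the index convention of \(G_{ij}\).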

Operational matrices

In this section, we present both the operational matrix of integration of the vector \({\mathbf {H}}(t)\), denoted by \(\mathbf P\), and the stochastic operational matrix of Itô integration of the vector \({\mathbf {H}}(t)\), denoted by \(\mathbf P _s\). By integrating the vector \({\mathbf {H}}(t)\) defined in (2), we have [34, 35]

\[\int _0^t{\mathbf {H}}(s)\,ds\simeq \mathbf P \,{\mathbf {H}}(t), \tag{5}\]

where \(\mathbf P\) is the following \((m+1)\times (m+1)\) operational matrix of integration of MHFs
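Since MHF coefficients are nodal values, one way to obtain the entries of \(\mathbf P\) is \(P_{ij}=\int _0^{jh}h_i(s)\,ds\). The sketch below computes them with two-point Gauss-Legendre quadrature on each step of width \(h\), which is exact because every \(h_i\) is a single quadratic there. This construction is an assumption made for illustration and should be compared with the matrix given in the paper; `mhf_basis` and `mhf_integration_matrix` are hypothetical names.

```python
import numpy as np

def mhf_basis(t, m, T=1.0):
    """Rows are h_0..h_m evaluated at the points t (m even, m >= 2)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    h = T / m
    k = np.clip(np.floor(t / (2 * h)).astype(int), 0, m // 2 - 1)  # panel index
    u = t / h - 2 * k                        # local coordinate in [0, 2]
    H = np.zeros((m + 1, t.size))
    cols = np.arange(t.size)
    H[2 * k, cols] = 0.5 * (u - 1) * (u - 2)     # left node of the panel
    H[2 * k + 1, cols] = -u * (u - 2)            # middle node
    H[2 * k + 2, cols] = 0.5 * u * (u - 1)       # right node
    return H

def mhf_integration_matrix(m, T=1.0):
    """P[i, j] = integral of h_i over [0, j*h], accumulated step by step."""
    h = T / m
    x, w = np.polynomial.legendre.leggauss(2)    # exact for cubics on [-1, 1]
    P = np.zeros((m + 1, m + 1))
    for j in range(1, m + 1):
        a, b = (j - 1) * h, j * h
        s = 0.5 * (b - a) * x + 0.5 * (a + b)    # quadrature nodes in [a, b]
        step = mhf_basis(s, m, T) @ (0.5 * (b - a) * w)
        P[:, j] = P[:, j - 1] + step             # running integral up to j*h
    return P
```

For \(m=4\), a hand calculation under the same assumption gives \(\frac{h}{12}\) times the rows \([0,5,4,4,4]\), \([0,8,16,16,16]\), \([0,-1,4,9,8]\), \([0,0,0,8,16]\) and \([0,0,0,-1,4]\). Each column \(j\) sums to \(jh\), because the MHFs sum to one.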

Theorem 1

Let \({\mathbf {H}}(t)\) be the vector defined in (2). Then the Itô integral of \({\mathbf {H}}(t)\) can be expressed as

\[\int _0^t{\mathbf {H}}(s)\,dB(s)\simeq \mathbf P _s\,{\mathbf {H}}(t), \tag{6}\]

where \(\mathbf P _s\) is the following \(\,(m+1)\times (m+1)\,\) stochastic operational matrix of integration

with

and

Proof

By considering the definitions of \(h_i(t), i= 0, 1,\dots , m\) and integrating by parts, we have

\[\int _0^t h_i(\tau )\,dB(\tau )=h_i(t)B(t)-\int _0^t h_i'(\tau )B(\tau )\,d\tau ,\]

expanding \(\int _0^t h_i(\tau )dB(\tau )\) in terms of MHFs yields

and

so we obtain

If i is odd and \(1\le i\le (m-1)\)

If i is even and \(2\le i\le (m-2)\),

and

Putting the obtained components in the matrix form ends the proof. \(\square\)
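As a simulation-based stand-in for the closed-form matrix of Theorem 1, the nodal values \(\int _0^{jh}h_i(\tau )\,dB(\tau )\) can be approximated by left-point Itô sums on a finer grid. The sketch below does this for one sampled Brownian path. The refinement factor `fine`, the helper names, and the nodal quadratic form in `mhf_basis` are assumptions for illustration; this is not the construction used in the proof.

```python
import numpy as np

def mhf_basis(t, m, T=1.0):
    """Rows are h_0..h_m evaluated at the points t (m even, m >= 2)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    h = T / m
    k = np.clip(np.floor(t / (2 * h)).astype(int), 0, m // 2 - 1)  # panel index
    u = t / h - 2 * k                        # local coordinate in [0, 2]
    H = np.zeros((m + 1, t.size))
    cols = np.arange(t.size)
    H[2 * k, cols] = 0.5 * (u - 1) * (u - 2)     # left node of the panel
    H[2 * k + 1, cols] = -u * (u - 2)            # middle node
    H[2 * k + 2, cols] = 0.5 * u * (u - 1)       # right node
    return H

def mhf_ito_matrix(m, T=1.0, fine=200, rng=None):
    """Approximate Ps[i, j] = int_0^{jh} h_i dB along one simulated path.

    Returns the matrix and the path values B(jh) at the nodes.
    """
    rng = np.random.default_rng() if rng is None else rng
    h = T / m
    dt = h / fine
    tau = np.arange(m * fine) * dt               # left endpoints of fine steps
    dB = rng.normal(0.0, np.sqrt(dt), tau.size)  # Brownian increments
    running = np.cumsum(mhf_basis(tau, m, T) * dB, axis=1)
    Ps = np.zeros((m + 1, m + 1))
    Ps[:, 1:] = running[:, fine - 1::fine]       # Ito sums up to each node jh
    B_nodes = np.concatenate(([0.0], np.cumsum(dB)[fine - 1::fine]))
    return Ps, B_nodes
```

Because the MHFs sum to one, the column sums of the returned matrix reproduce the path values \(B(jh)\), which gives a quick consistency check on any implementation of \(\mathbf P _s\).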

Solving stochastic Itô–Volterra integral equation with multi-stochastic terms by the MHFs

Our problem is to determine the MHFs coefficients of X(t) in the following linear stochastic Itô–Volterra integral equation with several independent white noise sources,

\[X(t)=f(t)+\int _0^t\mu (s,t)X(s)\,ds+\sum _{j=1}^{n}\int _0^t\sigma _j(s,t)X(s)\,dB_j(s),\quad t\in D, \tag{8}\]

where \(X,f,\mu\) and \(\sigma _j, j=1,2,\dots ,n\) for \(s,t\in D\), are stochastic processes defined on the same probability space \((\Omega ,F,P)\). Also \(B_1(t), B_2(t),\dots , B_n(t)\) are Brownian motion processes, and \(\int _0^t \sigma _j(s,t)X(s)\,dB_j(s)\,,j = 1,2,\dots ,n\) are the Itô integrals.

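We replace \(X(t),f(t),\mu (s,t)\) and \(\sigma _j(s,t)\,,j=1,2,\dots ,n\) by their approximations which are obtained by MHFs:

\[X(t)\simeq {\mathbf {X}}^T{\mathbf {H}}(t)={\mathbf {H}}^T(t){\mathbf {X}}, \tag{9}\]

\[f(t)\simeq \mathbf {F}^T{\mathbf {H}}(t)={\mathbf {H}}^T(t)\mathbf {F}, \tag{10}\]

\[\mu (s,t)\simeq {\mathbf {H}}^T(s){\mu }{\mathbf {H}}(t)={\mathbf {H}}^T(t){\mu }^T{\mathbf {H}}(s), \tag{11}\]

\[\sigma _j(s,t)\simeq {\mathbf {H}}^T(s)\mathbf {\Delta }_j{\mathbf {H}}(t)={\mathbf {H}}^T(t)\mathbf {\Delta }_j^T{\mathbf {H}}(s),\quad j=1,2,\dots ,n, \tag{12}\]

where \({\mathbf {X}}\) and \(\mathbf {F}\) are stochastic MHFs coefficient vectors and \({\mu }\) and \(\mathbf {\Delta }_j\,,j=1,2,\dots ,n\) are stochastic MHFs coefficient matrices. Substituting (9)–(12) in relation (8), we obtain

\[{\mathbf {H}}^T(t){\mathbf {X}}\simeq {\mathbf {H}}^T(t)\mathbf {F}+{\mathbf {H}}^T(t){\mu }^T\int _0^t{\mathbf {H}}(s){\mathbf {H}}^T(s){\mathbf {X}}\,ds+\sum _{j=1}^{n}{\mathbf {H}}^T(t)\mathbf {\Delta }_j^T\int _0^t{\mathbf {H}}(s){\mathbf {H}}^T(s){\mathbf {X}}\,dB_j(s). \tag{13}\]

Using the 6-th property in relation (13), we get

\[{\mathbf {H}}^T(t){\mathbf {X}}\simeq {\mathbf {H}}^T(t)\mathbf {F}+{\mathbf {H}}^T(t){\mu }^T\operatorname{diag}({\mathbf {X}})\int _0^t{\mathbf {H}}(s)\,ds+\sum _{j=1}^{n}{\mathbf {H}}^T(t)\mathbf {\Delta }_j^T\operatorname{diag}({\mathbf {X}})\int _0^t{\mathbf {H}}(s)\,dB_j(s). \tag{14}\]

Utilizing operational matrices defined in relations (5) and (6) in (14), we have

\[{\mathbf {H}}^T(t){\mathbf {X}}\simeq {\mathbf {H}}^T(t)\mathbf {F}+{\mathbf {H}}^T(t){\mu }^T\operatorname{diag}({\mathbf {X}})\mathbf {P}{\mathbf {H}}(t)+\sum _{j=1}^{n}{\mathbf {H}}^T(t)\mathbf {\Delta }_j^T\operatorname{diag}({\mathbf {X}})\mathbf {P}_s{\mathbf {H}}(t). \tag{15}\]

Let \(\mathbf {A}={\mu }^T\operatorname{diag}({\mathbf {X}})\mathbf {P}\) and \(\mathbf {B}_j=\mathbf {\Delta }_j^T\operatorname{diag}({\mathbf {X}})\mathbf {P}_s, j=1,2,\dots ,n.\) Applying property (7) in relation (15) yields

\[{\mathbf {H}}^T(t){\mathbf {X}}\simeq {\mathbf {H}}^T(t)\mathbf {F}+{\mathbf {H}}^T(t)\tilde{\mathbf {A}}+\sum _{j=1}^{n}{\mathbf {H}}^T(t)\tilde{\mathbf {B}}_j,\]

therefore, by using the third property and replacing \(\simeq\) by \(=\), we have

\[{\mathbf {X}}=\mathbf {F}+\tilde{\mathbf {A}}+\sum _{j=1}^{n}\tilde{\mathbf {B}}_j,\]

which is a linear system of equations, because the diagonal entries collected in \(\tilde{\mathbf {A}}\) and \(\tilde{\mathbf {B}}_j\) depend linearly on \({\mathbf {X}}\), and it gives the approximation of X with the help of MHFs.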
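The final system can be assembled explicitly. With \(\mathbf {A}={\mu }^T\operatorname{diag}({\mathbf {X}})\mathbf {P}\), the k-th diagonal entry is \(\sum _i\mu _{ik}P_{ik}X_i\), so \(\tilde{\mathbf {A}}=({\mu }\circ \mathbf {P})^T{\mathbf {X}}\) with \(\circ\) the entrywise product, and likewise for each \(\tilde{\mathbf {B}}_j\). The sketch below solves the resulting system \((\mathbf I-\mathbf K){\mathbf {X}}=\mathbf F\). Several parts are assumptions: the closed-form pattern in `mhf_P` was derived by hand from the nodal quadratic form of the MHFs, one stochastic matrix per Brownian motion \(B_j\) is expected in `Ps_list`, and all function names are hypothetical.

```python
import numpy as np

def mhf_P(m, T=1.0):
    """Hand-derived pattern for P[i, j] = integral of h_i over [0, j*h]."""
    h = T / m
    M = np.zeros((m + 1, m + 1))
    M[0, 1], M[0, 2:] = 5, 4
    for i in range(1, m, 2):                     # odd rows
        M[i, i], M[i, i + 1:] = 8, 16
    for i in range(2, m, 2):                     # interior even rows
        M[i, i - 1], M[i, i], M[i, i + 1], M[i, i + 2:] = -1, 4, 9, 8
    M[m, m - 1], M[m, m] = -1, 4
    return h / 12 * M

def solve_mhf(F, Mu, P, Deltas=(), Ps_list=()):
    """Solve X = F + A~ + sum_j B~_j for the MHF coefficient vector X.

    Mu[i, k] = mu(ih, kh) and Deltas[j][i, k] = sigma_j(ih, kh).
    Ps_list[j] is the stochastic operational matrix built from B_j.
    """
    K = (Mu * P).T                               # A~ = K X (entrywise product)
    for Delta, Ps in zip(Deltas, Ps_list):
        K = K + (Delta * Ps).T                   # add each B~_j = (Delta o Ps)^T X
    return np.linalg.solve(np.eye(len(F)) - K, F)

# Deterministic check: X(t) = 1 + int_0^t X(s) ds has the solution exp(t).
m, T = 8, 1.0
nodes = np.arange(m + 1) * T / m
X = solve_mhf(np.ones(m + 1), np.ones((m + 1, m + 1)), mhf_P(m, T))
max_err = np.max(np.abs(X - np.exp(nodes)))
```

The noise-free check is chosen because its exact solution is known; for the stochastic terms, each `Ps_list[j]` would come from the path of the corresponding \(B_j\).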

Error analysis

In this section, the error analysis is studied. We propose some conditions to show that the rate of convergence for this method is \(O(h^3)\).

Theorem 2

[34] Let \(t_j=jh , j=0,1,\dots ,m , f\in \chi\) and \(f_m\) be the MHFs expansion of f defined as \(f_m(t)=\sum _{j=0}^{m}f(t_j)h_j(t)\) and also assume that \(e_m(t)=f(t)-f_m(t)\) , for \(t \in D\) , then we have

and hence \(\Vert e_m\Vert =O(h^3)\), where \(\Vert \cdot \Vert\) denotes the sup-norm, defined for any continuous function f on the interval [0, T) by

\[\Vert f\Vert =\sup _{t\in [0,T)}|f(t)|.\]

Theorem 3

[34] Let \(s_i=t_i=ih , i=0,1,\dots ,m , \mu \in C^3(D \times D)\) and \(\mu _m(s,t)=\sum _{i=0}^{m}\sum _{j=0}^{m}\mu (s_i,t_j)h_i(s)h_j(t),\) be the MHFs expansion of \(\mu (s,t),\) and also assume that \(e_m(s,t)=\mu (s,t)-\mu _m(s,t),\) then we have

and so \(\Vert e_m\Vert = O(h^3).\)
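The \(O(h^3)\) rate of Theorems 2 and 3 can be observed numerically: halving \(h\) should reduce the sup-norm error by a factor of about eight. The sketch below measures this for \(f(t)=\sin t\) on \([0,1]\). It also compares the error with the classical bound \(\frac{h^3}{9\sqrt{3}}\Vert f'''\Vert\) for quadratic interpolation at equally spaced nodes, which is assumed here to be the kind of estimate the theorems refer to. The helper names and the nodal quadratic form in `mhf_basis` are illustrative.

```python
import numpy as np

def mhf_basis(t, m, T=1.0):
    """Rows are h_0..h_m evaluated at the points t (m even, m >= 2)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    h = T / m
    k = np.clip(np.floor(t / (2 * h)).astype(int), 0, m // 2 - 1)  # panel index
    u = t / h - 2 * k                        # local coordinate in [0, 2]
    H = np.zeros((m + 1, t.size))
    cols = np.arange(t.size)
    H[2 * k, cols] = 0.5 * (u - 1) * (u - 2)     # left node of the panel
    H[2 * k + 1, cols] = -u * (u - 2)            # middle node
    H[2 * k + 2, cols] = 0.5 * u * (u - 1)       # right node
    return H

def sup_error(f, m, T=1.0, samples=4001):
    """Sampled sup-norm of f - f_m, where f_m is the MHF expansion of f."""
    t = np.linspace(0.0, T, samples)
    nodes = np.arange(m + 1) * T / m
    f_m = f(nodes) @ mhf_basis(t, m, T)
    return np.max(np.abs(f(t) - f_m))

e8 = sup_error(np.sin, 8)
e16 = sup_error(np.sin, 16)
observed_order = np.log2(e8 / e16)               # close to 3 for an O(h^3) method
bound8 = (1.0 / 8) ** 3 / (9 * np.sqrt(3))       # uses |f'''| = |cos| <= 1
```

The same experiment can be repeated for a two-variable kernel by sampling \(\mathbf H ^T(s)\mathbf G\mathbf H (t)\) on a grid.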

Theorem 4

Let X be the exact solution of (8) and \(X_m\) be the MHFs series approximate solution of (8) , and also assume that

then

and \(\Vert X-X_m\Vert =O(h^3)\,,\) where

Proof

From relation (8), we have

now the following relation is concluded

where

and

using Theorems 2 and 3, we also have

and

j= 1, 2, ..., n.

By substituting (17) and (18) in relation (16), we obtain

and so

which means \(\Vert X-X_m\Vert =O(h^3)\,\). Thus, the proof is complete. \(\square\)

Numerical examples

In this section, we use our algorithm to solve the stochastic Itô–Volterra integral equation with multi-stochastic terms stated in the “Solving stochastic Itô–Volterra integral equation with multi-stochastic terms by the MHFs” section. To compare it with the methods proposed in [22, 23], we consider some examples. The computations associated with the examples were performed using Matlab 7 and [36].

Example 1

Consider the following linear stochastic Itô–Volterra integral equation with multi-stochastic terms [22]

with the exact solution

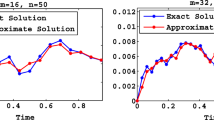

for \(0\le t< 1\) where X is the unknown stochastic process, defined on the probability space \((\Omega ,F,P)\) and \(B_1(t), B_2(t),\dots , B_n(t)\) are the Brownian motion processes. The numerical results for \(X_0=\frac{1}{200}, r=\frac{1}{20}, \alpha _1=\frac{1}{50}, \alpha _2=\frac{2}{50}, \alpha _3=\frac{4}{50}, \alpha _4 = \frac{9}{50}\) are shown in Table 1. Also curves in Figs. 1 and 2 show the exact and approximate solutions computed by this method for \(m=10\) and \(m=40\). Figures 3 and 4 represent the errors of the present method.
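For comparisons of this kind, a reference path is needed along the same Brownian motions that drive the approximation. The display equations of this example are not reproduced in this text, so the sketch below makes a loud assumption: that the test problem is the constant-coefficient linear equation \(X(t)=X_0+r\int _0^tX(s)\,ds+\sum _j\alpha _j\int _0^tX(s)\,dB_j(s)\), whose closed-form solution is \(X_0\exp \big ((r-\frac{1}{2}\sum _j\alpha _j^2)t+\sum _j\alpha _jB_j(t)\big )\). The function name `exact_path` is hypothetical, and the parameter values are those quoted above.

```python
import numpy as np

def exact_path(t, X0, r, alphas, rng):
    """Sample the assumed closed-form solution on the grid t (t[0] must be 0).

    Returns the path X(t) and the simulated Brownian motions B_j(t),
    one row per noise source.
    """
    t = np.asarray(t, dtype=float)
    alphas = np.asarray(alphas, dtype=float)
    # Independent Gaussian increments for each Brownian motion B_j.
    dB = rng.normal(0.0, np.sqrt(np.diff(t)), size=(alphas.size, t.size - 1))
    B = np.concatenate([np.zeros((alphas.size, 1)), np.cumsum(dB, axis=1)], axis=1)
    drift = (r - 0.5 * np.sum(alphas ** 2)) * t  # Ito correction included
    return X0 * np.exp(drift + alphas @ B), B

t = np.linspace(0.0, 1.0, 11)
X, B = exact_path(t, X0=1 / 200, r=1 / 20,
                  alphas=[1 / 50, 2 / 50, 4 / 50, 9 / 50],
                  rng=np.random.default_rng(1))
```

The rows of `B` can then be reused to build the stochastic operational matrices, so that exact and approximate solutions refer to the same sample path.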

Example 2

Let [22]

be a linear stochastic Itô–Volterra integral equation with multi-stochastic terms with the exact solution

for \(0\le t< 1,\) where X is the unknown stochastic process defined on the probability space \((\Omega ,F,P)\) and \(B_1(t), B_2(t),\dots , B_n(t)\) are the Brownian motion processes. The numerical results for \(X_0=\frac{1}{12}, r=\frac{1}{30}, \alpha _1=\frac{1}{10}, \alpha _2(s)=s^2, \alpha _3(s)=\frac{\sin (s)}{3}\) are inserted in Table 2. Also curves in Figs. 5 and 6 show the exact and approximate solutions computed by this method for \(m=10\) and \(m=40\). Figures 7 and 8 represent the errors of the present method.

Conclusion

Finding an exact analytical solution of a stochastic equation is usually impossible, so numerical methods are needed to obtain approximate solutions. In this paper, the MHFs, a simple and suitable basis, are adopted to solve stochastic Itô–Volterra integral equations with multi-stochastic terms. With this choice, the coefficient vectors and matrices are found easily, and the method results in a linear system of equations that can be solved simply. Numerical results of the examples show that the MHFs yield more accurate solutions than block pulse functions (BPFs) and generalized hat functions (GHFs) do.

References

Kloeden, P., Platen, E.: Numerical Solution of Stochastic Differential Equations. Springer, Berlin (1999)

Maleknejad, K., Khodabin, M., Rostami, M.: Numerical solution of stochastic Volterra integral equations by a stochastic operational matrix based on block pulse functions. Math. Comput. Model. 55, 791–800 (2012)

Khodabin, M., Maleknejad, K., Hosseini Shekarabi, F.: Application of triangular functions to numerical solution of stochastic Volterra integral equations. Int. J. Appl. Math. 43, 1–9 (2013)

Mirzaee, F., Hamzeh, A.: A computational method for solving nonlinear stochastic Volterra integral equations. J. Comput. Appl. Math. 306, 166–178 (2016)

Farnoosh, R., Rezazadeh, H., Sobhani, A., Behboudi, M.: Analytical solutions for stochastic differential equations via Martingale processes. Math. Sci. 9, 87–92 (2015)

Ahmadi, N., Vahidi, A.R., Allahviranloo, T.: An efficient approach based on radial basis functions for solving stochastic fractional differential equations. Math. Sci. 11, 113–118 (2017)

Abdelghani, M.N., Melnikov, A.V.: On linear stochastic equations of optional semimartingales and their applications. Stat. Probab. Lett. 125, 207–214 (2017)

Fathi Vajargah, K., Shoghi, M.: Simulation of stochastic differential equation of geometric Brownian motion by quasi-Monte Carlo method and its application in prediction of total index of stock market and value at risk. Math. Sci. 9, 115–125 (2015)

Thakoor, N., Tangman, Y., Bhuruth, M.: Numerical pricing of financial derivatives using Jain's high-order compact scheme. Math. Sci. 6, 72 (2012). https://doi.org/10.1186/2251-7456-6-72

Nouri, K., Abbasi, B., Omidi, F., Torkzadeh, L.: Digital barrier options pricing: an improved Monte Carlo algorithm. Math. Sci. 10, 65–70 (2016)

Nitta, K., Li, C.: A stochastic equation for predicting tensile fractures in ductile polymer solids. Physica A 490, 1076–1086 (2018)

Sousedík, B., Elman, H.C.: Stochastic Galerkin methods for the steady-state Navier–Stokes equations. J. Comput. Phys. 316, 435–452 (2016)

Heydari, M.H., Hooshmandasl, M.R., Cattani, C., Maalek Ghaini, F.M.: An efficient computational method for solving nonlinear stochastic Ito-integral equations: application for stochastic problems in physics. J. Comput. Phys. 283, 148–168 (2015)

Luo, R., Shi, H., Teng, W., Song, C.: Prediction of wheel profile wear and vehicle dynamics evolution considering stochastic parameters for high speed train. Wear 392–393, 126–138 (2017)

Jin, S., Shu, R.: A stochastic asymptotic-preserving scheme for a Kinetic-fluid model for disperse two-phase flows with uncertainty. J. Comput. Phys. 335, 905–924 (2017)

Oroji, A., Omar, M., Yarahmadian, S.: An Ito stochastic differential equations model for the dynamics of the MCF-7 breast cancer cell line treated by radiotherapy. J. Theor. Biol. 407, 128–137 (2016)

Mastrolia, T.: Density analysis of non-Markovian BSDEs and applications to biology and finance. Stoch. Process. Appl. 128, 897–938 (2018)

Vidurupola, S.W.: Analysis of deterministic and stochastic mathematical models with resistant bacteria and bacteria debris for bacteriophage dynamics. Appl. Math. Comput. 316, 215–228 (2018)

Emvudu, Y., Bongor, D., Koïna, R.: Mathematical analysis of HIV/AIDS stochastic dynamic models. Appl. Math. Model. 40, 9131–9151 (2016)

Madani Tonekabony, S.A., Dhawan, A., Kohandel, M.: Mathematical modelling of plasticity and phenotype switching in cancer cell populations. Math. Biosci. 283, 30–37 (2017)

Khodabin, M., Maleknejad, K., Rostami, M., Nouri, M.: Numerical approach for solving stochastic Volterra-Fredholm integral equations by stochastic operational matrix. Comput. Math. Appl. 64, 1903–1913 (2012)

Khodabin, M., Maleknejad, K., Rostami, M.: A numerical method for solving m-dimensional stochastic Ito-Volterra integral equations by stochastic operational matrix. Comput. Math. Appl. 63, 133–143 (2012)

Heydari, M.H., Hooshmandasl, M.R., Maalek Ghaini, F.M., Cattani, C.: A computational method for solving stochastic Ito-Volterra integral equations based on stochastic operational matrix for generalized hat basis functions. J. Comput. Phys. 270, 402–415 (2014)

Maleknejad, K., Tavassoli Kajani, M.: Solving second kind integral equations by Galerkin methods with hybrid Legendre and block pulse functions. Appl. Math. Comput. 145, 623–629 (2003)

Maleknejad, K., Mahmoudi, Y.: Numerical solution of linear Fredholm integral equation by using hybrid Taylor and block pulse functions. Appl. Math. Comput. 149, 799–806 (2004)

Mohammadi, F.: A wavelet-based computational method for solving stochastic Ito-Volterra integral equations. J. Comput. Phys. 298, 254–265 (2015)

Mohammadi, F.: Numerical solution of stochastic Ito-Volterra integral equations using Haar wavelets. Numer. Math. Theor. Meth. Appl. 9, 416–431 (2016)

Heydari, M.H., Mahmoudi, M.R., Shakiba, A., Avazzadeh, Z.: Chebyshev cardinal wavelets and their application in solving nonlinear stochastic differential equations with fractional Brownian motion. Commun. Nonlinear Sci. Numer. Simul. 64, 98–121 (2018)

Mirzaee, F., Hadadiyan, E.: A collocation technique for solving nonlinear stochastic Itô-Volterra integral equations. Appl. Math. Comput. 247, 1011–1020 (2014)

Asgari, M., Hashemizadeh, E., Khodabin, M., Maleknejad, K.: Numerical solution of nonlinear stochastic integral equation by stochastic operational matrix based on Bernstein polynomials. Bull. Math. Soc. Sci. Math. Roumanie 1, 3–12 (2014)

Oksendal, B.K.: Stochastic Differential Equations: An Introduction with Applications, 4th edn. Springer, Berlin (1995)

Arnold, L.: Stochastic Differential Equations: Theory and Applications. Wiley, New York (1974)

Atkinson, K.E.: The Numerical Solution of Integral Equations of the Second Kind. Cambridge University Press, Cambridge (1997)

Mirzaee, F., Hadadiyan, E.: Numerical solution of Volterra-Fredholm integral equations via modification of hat functions. Appl. Math. Comput. 280, 110–123 (2016)

Mirzaee, F., Hadadiyan, E.: Solving system of linear Stratonovich Volterra integral equations via modification of hat functions. Appl. Math. Comput. 293, 254–264 (2017)

Higham, D.J.: An algorithmic introduction to numerical simulation of stochastic differential equations. SIAM Rev. 43(3), 525–546 (2001)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Momenzade, N., Vahidi, A.R. & Babolian, E. A computational method for solving stochastic Itô–Volterra integral equation with multi-stochastic terms. Math Sci 12, 295–303 (2018). https://doi.org/10.1007/s40096-018-0269-x
