Abstract

The use of human-level semantic information to aid robotic tasks has recently become an important area for both Computer Vision and Robotics. This has been enabled by advances in Deep Learning that allow consistent and robust semantic understanding. Leveraging this semantic vision of the world has allowed human-level understanding to naturally emerge from many different approaches. Particularly, the use of semantic information to aid in localisation and reconstruction has been at the forefront of both fields. Like robots, humans also require the ability to localise within a structure. To aid this, humans have designed high-level semantic maps of our structures called floorplans. We are extremely good at localising in them, even with limited access to the depth information used by robots. This is because we focus on the distribution of semantic elements, rather than geometric ones. Evidence of this is that humans are normally able to localise in a floorplan that has not been scaled properly. In order to grant this ability to robots, it is necessary to use localisation approaches that leverage the same semantic information humans use. In this paper, we present a novel method for semantically enabled global localisation. Our approach relies on the semantic labels present in the floorplan. Deep Learning is leveraged to extract semantic labels from RGB images, which are compared to the floorplan for localisation. While our approach is able to use range measurements if available, we demonstrate that they are unnecessary as we can achieve results comparable to state-of-the-art without them.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Localisation, the process of finding a robot’s pose within a pre-existing map, is one of the most important aspects of both Computer Vision and Robotic systems. A globally consistent, well localised sensor can substantially reduce the complexity of problems like Multi-View Stereo (MVS) (Agarwal et al. 2011; Mendez et al. 2016), Autonomous Navigation (von Stumberg et al. 2016), 3D Reconstruction (Dai et al. 2017; Mendez et al. 2017) and even Deep Learning (Kendall et al. 2015). While all of these problems can estimate their own sensor poses, such as MVS using a Bundle Adjustment (BA) and Autonomous Navigation using Simultaneous Localisation and Mapping (SLAM). Unfortunately, both BA and SLAM suffer from the same limitation: they can only ever guarantee global pose consistency internally. This means that while pose estimates are globally consistent, they are only valid within the context of the localisation system. There are no guarantees, at least in vision-only systems, that the reconstruction can be directly mapped to the real world, or between agents (without explicit alignment). This paper will attempt to address these limitations with a localisation approach that is efficient, accurate and, most importantly, globally consistent with the real-world.

For a robotic system, it should be clear that offline batch approaches are of limited use (Furukawa and Ponce 2010; Galliani and Schindler 2015). This leaves traditional SLAM systems as the only viable approach for localisation. However, SLAM systems are liable to drift in terms of both pose and scale. They can also become globally inconsistent (even internally) in the case of failed loop closures.

This problem is normally addressed by having a localisation system that can relate the pose of the robot to a pre-existing map. Examples of global localisation frameworks include the Global Positioning System (GPS) and traditional Monte-Carlo Localisation (MCL). MCL has the ability to localise within an existing floorplan (which can be safely assumed to be available for most indoor scenarios). This is a highly desirable trait, as it implicitly eliminates drift, is globally consistent and provides a way for the 3D reconstructions to be related to the real world without having to perform expensive post-hoc optimisations. Traditionally, the range-based scans required by MCL have been produced by expensive sensors such as Light Detection And Ranging (LiDAR). These sensors are capable of producing high density measurements at high rates with low noise, making them ideal for range-based MCL. However, as a sensor they are expensive, are physically large and have high power requirements which is an issue for small mobile platforms.

As a response to this, modern low-budget robotic platforms have used RGB-D cameras as a cheap and low-footprint alternative. This has made vision-based floorplan localisation an active topic in the literature. However, while many approaches have been proposed, they normally use heuristics to lift the 2D plan into the 3D coordinate system of SLAM. These heuristics include techniques like assuming the height of doors and walls (Liu et al. 2015; Winterhalter et al. 2015). Making assumptions about the world allows full 6-Degrees of Freedom (DoF) pose estimations to be computed (by using the assumed geometry). However, this also increases the computational cost and makes algorithms unsuitable for environments that do not conform to these assumptions. Other examples include (Liu et al. 2015), who use visual cues such as Vanishing Points (VPs) or (Chu et al. 2015) who perform piecemeal 3D reconstructions that can then be fitted back to an extruded floorplan. These approaches use innovative ways to extract 3D information from images, however, the data extracted from the image is normally not contained in the floorplan that the sensor is meant to localise in. Fundamentally, this means assumptions must be made about the floorplan. More explicitly, assumptions are made about information not present in the floorplan (e.g. ceiling and door height). It also does not fully exploit the floorplan, ignoring the semantic information that humans use to localise.

In order to find a robust solution to MCL, inspiration can be drawn from the way humans localise within a floorplan. People do not explicitly measure depths to every visible surface and try to match them against different pose estimates in the floorplan. However, this is exactly how most robotic scan-matching algorithms operate. Similarly, humans do not extrude the 2D geometry present in the floorplan into 3D, as is done in most vision-based approaches. Humans do the exact opposite. Instead of depth, people use high level semantic cues. Instead of extruding the floorplan up into the third dimension, humans collapse the 3D world into a 2D representation. Evidence of this is that many of the floorplans used in everyday life are not strictly accurate or in 3D. Instead, floorplans designed for people opt instead for high levels of discriminative landmarks on a 2D map.

Therefore, this paper proposes a fundamentally different approach that is inspired by how humans perform the task. Instead of discarding valuable semantic information, a Convolutional Neural Network (CNN) based encoder-decoder is used to extract high-level semantic information. All semantic information is then collapsed into 2D, in order to reduce the assumptions about the environment. A state-of-the-art sensing and localisation framework is then introduced, which uses these labels (along with image geometry and, optionally, depth) to localise within a semantically labelled floorplan. It is important to note that this paper explicitly avoids the 3D case because the information necessary for indoor navigation is present in the 2D representation. Therefore, we aim for a fast and efficient localisation approach that does not require 3D information.

Semantic Detection and Ranging (SeDAR) is an innovative human-inspired framework that combines new semantic sensing capabilities with a novel semantic Monte-Carlo Localisation (MCL) approach. As an example, Fig. 1 shows a sample SeDAR scan localised in the floorplan. SeDAR has the ability to surpass LiDAR-based MCL approaches. SeDAR also has the ability to perform drift-free local, as well as global, localisation. Furthermore, experimental results show that the semantic labels are sufficiently strong visual cues that depth estimates are no longer needed. Not only does this vision-only approach perform comparably to depth-based methods, it is also capable of coping with floorplan inaccuracies more gracefully than strictly depth-based approaches. Furthermore, this approach relies on high-level semantic cues making it robust to repetitive and texture-less regions.

This paper presents several important extensions to our preliminary work (Mendez et al. 2018) presented at the International Conference on Robotics and Automation (ICRA). Firstly, we extend our method to operate on all SUN3D labels (rather than wall, door and window) and add the ability to create semantic floorplans from a known pose and a SeDAR scan. Secondly, to assist in reproducing the work, we add a significant amount of detail to the methodology, including a complete formalisation of MCL and the SeDAR sensing modality. Thirdly, we create an expansive new dataset for semantic localisation, make it publicly available and add comparison against state-of-the-art SLAM algorithms. Fourthly, we use this extended dataset to explore new properties of the proposed algorithm including results on a hand-drawn map. Finally, an evaluation on the TUM_RGB-D dataset is performed. This evaluation includes the creation of new semantic floorplans and a comparison against state-of-the-art SLAM (2D and 3D) and MCL algorithms

This paper describes the process by which SeDAR is used as a human-inspired sensing and localisation framework. To do this, a generic definition and formalisation of MCL is presented first. Following this, the semantically salient elements are extracted from a floorplan and an RGB image is parsed into a SeDAR scan. The three main novelties of this paper are then presented. In the first, the semantic information present in the floorplan is used to define a new motion model. In the second, the SeDAR scan is used to define a novel sensor model using a combination of range and label information. In the third, an additional sensor model is presented that only depends on label information (an RGB image). Finally, we present localisation results on several datasets and modalities.

2 Literature Review

The field of SLAM is predicated on the simple idea that the pose of a sensor and the reconstructed landmarks are conditioned on each other (Durrant-Whyte 1988; Smith and Cheeseman 1987). This idea is not limited to raw features, but can also be done at the level of objects, as shown by McCormac et al. (2018). However, if one of them is known a priori, it is possible to marginalise the other (Murphy 2000). In the same way that independent reconstruction algorithms (Furukawa and Ponce 2010; Galliani and Schindler 2015) can provide more robust representations of the world, independent localisation algorithms can also provide more robust and consistent pose estimates. In fact, recent work by Schneider et al. (2018) explore the idea that a pre-existing SLAM map is an extremely useful asset for further mapping sessions. However, in each of these cases the environment must be navigated a-priori. Instead we propose to use pre-existing, human-readable (and therefore innacurate) 2D floorplans to localise, requiring no initial mapping session.

It is clear that an accurate map will yield an accurate localisation, and scan-matching localisation approaches (Fox et al. 1999; Dellaert et al. 1999) use this fact successfully. However, independent localisation algorithms can also be extremely useful when only inaccurate maps are available. A clear example of this is the way humans localise within “theme-park”-like maps that encode coarse information using high-level landmarks. While it might not be possible to localise within these maps with millimetre accuracy, these maps (and the techniques that use them) are ideal for solving problems such as loop closure, global localisation, etc. This paper attempts to use this idea by combining pre-existing floorplans with image-based semantic segmentation to provide high-accuracy localisation in 2D.

While it might be desirable to estimate full 3D poses, recent work by Sattler et al. (2017) demonstrates that large-scale 3D models are not strictly necessary for accurate vision-based localisation. Sattler et al. further conclude that 2D-based localisation approaches using coarse maps can be a good first step towards highly accurate localisation. This insight is important to this paper, where the aim is to localise within a 2D floorplan without making assumptions about the 3D structure of the building.

2.1 Monte-Carlo Localisation

MCL can be considered the state-of-the-art for mobile robot localisation today. Introduced by Dellaert et al. (1999), MCL is a form of Particle Filter (PF) where each particle is a pose estimate (and the map is known). It uses a motion model to propagate particles which in turn causes the weights to become the observation likelihood given the pose (Thrun et al. 2006). Re-sampling based on the weights then focuses computation in areas with more probable pose estimates.

Monte-Carlo Localisation (MCL) was made possible by the arrival of accurate range-based sensors such as Sound Navigation And Ranging (SoNAR) and LiDAR. These Range-Based Monte-Carlo Localisation (RMCL) approaches are robust, reliable and still considered state-of-the-art in many robotic applications. As such, they will be discussed first below.

Recent advances in computer vision have made vision-based approaches possible. These approaches, called Vision-Based Monte-Carlo Localisation (VMCL), typically use RGB cameras to avoid expensive sensors and will be discussed second.

Finally, the recent rise in Deep Learning has made semantic-based approaches possible. These approaches rely on neural networks to extract semantic information from the world, and use it to localise. Semantic sensing modalities, such as the one presented in this paper, have the ability to revolutionise MCL.

2.1.1 Range-Based Monte-Carlo Localisation (RMCL)

RMCL was first introduced by Fox et al. (1999) and Dellaert et al. (1999). RMCL improved the Kalman Filter based state-of-the-art by allowing multi-modal distributions to be represented. It also solved the computational complexity of grid-based Markov approaches. More recent approaches, such as those proposed by Kanai et al. (2015), have moved the focus of RMCL into 3D. Kanai et al. focus on a pre-existing 3D reconstruction and simulate 3D depth readings at each particle. In what is probably the closest approach to ours, Bedkowski and Röhling (2017) use a 3D LiDAR scanner, extract normals and use them to segment floors, walls, doors and edges between labels. They then use an approach based on Iterative Closest Point (ICP), with added label constraints, to estimate the observation likelihood. While this seems like a very promising approach, Bedowski et al. use very simple heuristics to classify their points (surface normals, point height, etc.). This work differs from these approaches by using techniques based on Deep Learning to provide better estimates of semantic labels and more robust observation likelihoods.

2.1.2 Vision-Based Monte-Carlo Localisation (VMCL)

RMCL-based approaches require expensive LiDAR and/or SoNAR sensors to operate reliably. Instead, Dellaert et al. (1999) extended their approach to operate using vision-based sensor models.

VMCL allowed the use of rich visual features and low-cost sensors, but had limited performance compared to the more robust LiDAR-based systems. However, with the rising popularity of RGB-D sensors, more robust vision-based MCL approaches became possible. Fallon et al. (2012) presented a robust MCL approach that used a low fidelity a priori map to localise, but required the space to be traversed by a depth sensor beforehand. Brubaker et al. (2013) removed the need to traverse a map with a sensor, and instead used visual odometry, pre-existing roadmaps and a joint MCL/closed-form approach in order to localise a moving car. More recently, visual approaches began to resemble traditional MCL by localising in an extruded floorplan. Winterhalter et al. (2015) performed MCL using an RGB-D camera, basing the observation likelihood on the normals of an extruded floorplan. Chu et al. (2015) removed the RGB-D requirement, by creating piecemeal reconstructions and basing the observation likelihood on direct ICP between these reconstructions and the extruded floorplan. Similar work by Neubert et al. (2017) also removed the RGB-D requirement, using synthesised depth images from the floorplan and comparing the gradient information against an RGB image, allowing purely monocular localisation. However, these approaches all rely on geometric information to provide an observation likelihood.

MCL-based approaches tend to be robust, but they operate entirely on the geometric information present in the floorplan. Therefore, they require depth images directly from sensors and/or local SLAM-based reconstructions. By contrast, our approach aims to use non-geometric semantic information present in the floorplan in order to perform the localisation.

The use of semantic information for indoor localisation has been enabled by advances in Deep Learning, such as the approaches of Badrinarayanan et al. (2015), Kendall et al. (2015) and Shelhamer et al. (2017). More importantly, approaches like that of Holder et al. (2016) have begun to take these approaches outdoors. Poschmann et al. (2017), and the work presented in this paper, attempt to use semantic information in an MCL context. Poschmann et al. follow a very similar approach to Neurbert et al. but synthesise semantic images (rather than depth ones) and base the observation likelihood on photometric consistency with a CNN-based segmentation method (on an RGB image). However, the work presented in this paper does not synthesise semantic images but rather uses the semantic segmentation of the real observation to augment traditional LiDAR-like sensors. Furthermore, we make no assumptions about the 3D environment, and instead rely on RGB observations and a 2D floorplan.

2.2 Closed-Form Localisation Approaches

While the field of MCL evolved in the robotics community, non-MCL-based approaches became more popular in the vision community. Shotton et al. (2013) used regression forests to predict the correspondences of every pixel in the image to a known 3D scene, they then combined this in a RANdom SAmple and Consensus (RANSAC) approach in order to solve the camera pose. Melbouci et al. (2016) used extruded floorplans, but performed local bundle adjustments instead of MCL. Caselitz et al. (2016) use a local SLAM system to create reconstructions that are then aligned using ICP to a LiDAR-built 3D map. However, instead of MCL they optimise the correspondences with a non-linear least squares approach.

More recent approaches have begun to also look at semantic information. Wang et al. (2015) use text detection from shop fronts as semantic cues to localise in the floorplan of a shopping centre. Liu et al. (2015) who use floorplans as a source of geometric and semantic information, combined with vanishing points, to localise monocular cameras. These vision-based approaches tend to use more of the non-geometric information present in the floorplan. However, a common trend is that assumptions must be made about geometry not present in the floorplan (e.g. ceiling height). The floorplan is then extruded out into the 3rd dimension to allow approaches to use the information present in the image.

The proposed approach differs from the approach of Poschmann et al. (2017), Wang et al. (2015) and Liu et al. (2015) in two important ways. Firstly, it does not require an extruded floorplan, opting instead to project the sensory information down to 2D and localise there. This makes our approach be able to run in real time. Secondly, it has the capability of augmenting traditional LiDAR sensors making it a more generic solution.

We use a CNN-based semantic segmentation (that is understandable to humans) in order to extract labels that are inherently present in human-readable floorplans. This allows us to take all that information and collapse it into a 3-DoF problem, making our approach more tractable than competing 6-DoF approaches while avoiding additional assumptions.

3 Problem Definition

While there exist many approaches to perform MCL, Range-Based Monte-Carlo Localisation (RMCL) (Winterhalter et al. 2015; Chu et al. 2015) is widely considered to be the state-of-the-art localisation method for pre-existing maps. RMCL is a scan-matching algorithm, it assumes the presence of a sensor that provides range and bearing tuples across a scanline. The problem then becomes one of finding the pose of the robot that makes the sensor observations match the floorplan. Figure 2a shows a case of the scan being correctly matched for a correctly localised robot. Conversely, Fig. 2b shows an incorrectly matched scan for an incorrect pose.

State-of-the-art localisation performs this matching in a Sequential Monte-Carlo (SMC) (Dellaert et al. 1999) framework, which can be broadly summarised as follows. Firstly, there is a prediction stage where particles are propagated using a motion-model, which is normally odometry from the robot (with Gaussian noise). Secondly, an update phase where each particle is weighted according to how accurately the observations align to the map. Finally, a re-sampling step is performed proportional to the weight of each particle and the process is then repeated.

More formally, the current pose  can be estimated as a set of possible pose samples

can be estimated as a set of possible pose samples  given odometry measurements

given odometry measurements

, sensor measurements

, sensor measurements  and a 2D map

and a 2D map  . Under the assumption that all odometry measurements are equally likely, the posterior is calculated as

. Under the assumption that all odometry measurements are equally likely, the posterior is calculated as

which implies that only the most recent odometry and observations are used (Dellaert et al. 1999). This means that at each iteration the particles from  are: propagated using a motion model

are: propagated using a motion model  , weighted using a sensor model

, weighted using a sensor model  and resampled according to the posterior

and resampled according to the posterior  . Algorithm 1 describes this process in more detail.

. Algorithm 1 describes this process in more detail.

Laser scan matching, the robot is correctly localised when the observations match the geometry of the map (Thrun 2002)

As stated previously, in an MCL context the prediction stage is performed using a motion model. The motion model is defined by the odometry received from the robot ( ). This odometry can be used to “shift” the particles, assigning a likelihood based on the probability of the final position given the measured odometry. More formally, particles are propagated according to

). This odometry can be used to “shift” the particles, assigning a likelihood based on the probability of the final position given the measured odometry. More formally, particles are propagated according to  with Gaussian noise applied such that

with Gaussian noise applied such that

where  is the covariance of the odometry, and the symbol \(\sim \) implies

is the covariance of the odometry, and the symbol \(\sim \) implies  is distributed as

is distributed as  meaning Gaussian noise is applied to the linear and angular components of the odometry. This means the motion model allows MCL-based approaches to reason about the noise characteristics of their odometry. While it would be impossible to fully account for noise in the odometry (due to wheel slippage, changing model parameters, etc.), a well tuned motion model allows for a robust estimate. In Sect. 6.1, the traditional definition of a motion-model is augmented to include a “ghost factor” that uses semantic information to influence how particles move through occupied space.

meaning Gaussian noise is applied to the linear and angular components of the odometry. This means the motion model allows MCL-based approaches to reason about the noise characteristics of their odometry. While it would be impossible to fully account for noise in the odometry (due to wheel slippage, changing model parameters, etc.), a well tuned motion model allows for a robust estimate. In Sect. 6.1, the traditional definition of a motion-model is augmented to include a “ghost factor” that uses semantic information to influence how particles move through occupied space.

The sensor model is defined by each range-scanner observation. The probability of each full range-scan ( ) can be estimated under the assumption that each measurement in the scan is independent of each other. That is,

) can be estimated under the assumption that each measurement in the scan is independent of each other. That is,

is the likelihood of the putative particle  , where

, where

is the set of range and bearing tuples that make up each scan. Calculating the likelihood can be done two ways, using a beam model (Thrun et al. 2001) or a likelihood field model (Thrun 2001).

In the beam model, a raycasting operation is performed. Starting from the pose of the current particle, a ray is cast along the bearing angle  . The raycasting operation terminates when an occupied cell is reached and the likelihood is estimated as

. The raycasting operation terminates when an occupied cell is reached and the likelihood is estimated as

where  is the range obtained from the sensor and

is the range obtained from the sensor and  is the distance travelled by the ray.

is the distance travelled by the ray.

In the likelihood field model, a distance map is used in order to avoid the expensive raycasting operation. The distance map is a Lookup Table (LUT) of the same size as the floorplan, where each cell contains the distance to the nearest geometry. This map is estimated similar to a Chamfer distance (Borgefors 1986), where a search is performed in a window around each cell and the distance to the closest occupied cell in the floorplan is stored. When queried, this distance is converted into a likelihood using Eq. 5. Figure 3 shows the estimated distance map for a floorplan, the creation of which will be explored further in Sect. 6.2. This distance map is only estimated once during initialisation. During runtime, the endpoint of each measurement can be estimated directly from the pose, bearing and range. The probability is then simply related to the distance reported by the LUT.

The raycasting method is (strictly speaking) more closely related to the sensing modality, as the closest geometry may not lie along the ray. However, in practice, most robotics systems use the likelihood field model as it is both faster and tends to provide better results. This is because the raycasting operation can report incorrect measurements due to small pose errors. An example of this is when looking through an open door, an error of a few centimetres can make the rays miss the door. This makes the distribution inherently less smooth.

4 Methodology

The problem with state-of-the-art approaches is that they only use the range information from the sensor, fundamentally limiting how discriminative each reading can be.

Instead, this paper presents a semantic sensing and localisation framework called SeDAR. SeDAR introduces a likelihood field model that incorporates semantically salient information into the traditional range-enabled approach. In an alternative approach, SeDAR combines the raycasting and likelihood field approaches in a novel formulation which allows localisation without range measurements. Experimental evaluation shows that SeDAR outperforms traditional RMCL when using both semantic and depth measurements. When using semantic-only measurements, it is shown that SeDAR can perform comparably to depth-enabled approaches.

5 Semantic Labelling and Sensing

Before using the semantic labels to aid in floorplan localisation, it is necessary to extract them. To do this, a floorplan is labelled in order to identify semantically salient elements. These salient elements are then identified in the camera of the robot by using a state-of-the-art CNN-based semantic segmentation algorithm (Kendall et al. 2015).

5.1 Floorplan

RMCL requires a floorplan and/or previously created range-scan map that is accurate in scale and globally consistent, this presents a number of challenges. A previously created range-scan map requires a robust SLAM algorithm such as GMapping (Grisetti et al. 2007) to be run. This is not ideal as it forces the robot to perform an initial exploration to construct a map before localisation can be performed. Moreover, the SLAM algorithm is also sensitive to noise and the resulting map is difficult to interpret by humans. Instead of using a metric-accurate reconstruction, a more flexible and feasible alternative is using a human-readable floorplan.

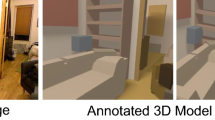

RMCL is not robust to differences between the floorplan and what the robot can observe (e.g. inaccuracies, scale variation and furniture). To overcome these issues, the localisation is augmented with semantic labels extracted from an existing floorplan. For the remainder of this section, and without loss of generality, the labels will be limited to walls, doors and windows. The reason for this limitation is twofold. Firstly, they are salient pieces of information that humans naturally use to localise and are therefore easy to discuss. Secondly, they are simple to automatically extract from a floorplan using image processing. In practice, we use simple image processing techniques along with manual labeling to create a labeled floorplan. As can be seen in Fig. 3c, these semantically salient elements have been colour coded to highlight the different labels. It should be noted, that this limitation will be lifted in Sect. 7.4, where all the labels in the CNN-based semantic segmentation algorithm (Kendall et al. 2015) are used to both construct the floorplan and localise within it.

In order to make a labelled floorplan readable by the robot, it must first be converted into an occupancy grid. An occupancy grid is a 2D representation of the world, in which each cell in the grid has an occupancy probability attached to it. Any cell that is above a threshold is then considered as being occupied. Estimating the occupancy of an existing floorplan is done by taking the normalised greyscale value from the floorplan image.

The map can then be defined as a set of voxels

where  is a set of integer 2D positions. Assuming \(\mathcal {L} = \left\rbrace a, d, w \right\lbrace \) is the set of possible cell labels (wall, door, window), each cell is defined as

is a set of integer 2D positions. Assuming \(\mathcal {L} = \left\rbrace a, d, w \right\lbrace \) is the set of possible cell labels (wall, door, window), each cell is defined as

where  is the occupancy likelihood and

is the occupancy likelihood and  , where

, where  , denotes the label likelihood. The semantic floorplans presented in this work maintain occupancy and label likelihoods, which can then be either thresholded (as in Eq. 14) or used directly.

, denotes the label likelihood. The semantic floorplans presented in this work maintain occupancy and label likelihoods, which can then be either thresholded (as in Eq. 14) or used directly.

Having incorporated the semantic labels into the standard occupancy grid, it is now necessary to use them in sensing.

5.2 SeDAR Sensor

Extracting semantic labels from a robot-mounted sensor is one of the most important parts of SeDAR. It is theoretically possible to directly label range-scans from a LiDAR-based scanner. In fact, there is a wide range of landmark-based SLAM systems that use range sensors (Durrant-Whyte et al. 1999). However, there are limitations on the amount of information that can be extracted from a range-scan.

Beyond the structure of the environment, the additional information contained in floorplans pertains to important architectural features (such as doors and windows). These architectural features are well defined in terms of their appearance. Therefore, they are ideally suited to semantic segmentation of the image.

In SeDAR, labels are extracted from the RGB image only. This is by design, as it allows the use of cameras that cannot sense depth. In the following sections, this sensing modality will be used in a novel MCL framework that does not require range-based measurements. However, it should be noted that SeDAR is capable of using range measurements, should they be available.

If they are used, SeDAR is completely agnostic to the source of the depth measurements. They can come from a deep learning-based depth estimation (Laina et al. 2016) or a dense Structure from Motion (SfM) system (Engel et al. 2013). However, for the purposes of this paper, a simple RGB-D sensor is used. Either way, the method for parsing an RGB-D image into a SeDAR scan is the same.

5.2.1 RGB-D to SeDAR

For a low-cost robotic system that uses an RGB-D image as a proxy for a more expensive LiDAR scanner, a horizontal depth scanline is typically extracted from the depth image as

where  is the angle around the vertical axis and

is the angle around the vertical axis and  is the corresponding range. This can be accomplished by looking exclusively at the depth image.

is the corresponding range. This can be accomplished by looking exclusively at the depth image.

The angle around the vertical axis,  , can be calculated by

, can be calculated by

where \((u,v)\), \((c_x, c_y)\), \((f_x,f_y)\) are the pixel coordinates, principal point and focal length, respectively, of the camera. While it is possible to estimate a second angle along the vertical axis, this is unnecessary in the case of floorplan localisation. More importantly, incorporating this information into the localisation framework requires assumptions to be made about the floorplan (e.g. ceiling height). The underlying assumption is that the centre scanline corresponds to casting rays parallel to the floorplan. This implies the camera must be parallel to the ground plane. However, cameras mounted at an arbitrary Special Orthogonal Space (SO(3)) orientation can still be used assuming that an appropriate scanline is used. In practice, small errors in the orientation of the camera are negligible.

The range measurement  can be calculated as

can be calculated as

where  is the current depth measurement at pixel

is the current depth measurement at pixel  . At this point, a traditional range-scan can be emulated. Notice that in a standard range-scan, all the visible information present in the RGB image is being discarded.

. At this point, a traditional range-scan can be emulated. Notice that in a standard range-scan, all the visible information present in the RGB image is being discarded.

On the other hand, a SeDAR-scan consists of a set of bearing, range and label tuples,

where  is the semantic label. While the scanline still discards a large amount of information in the RGB image, it is important to note that the methods used to estimate the label have already used the context the image provides. It would also possible to look at wider scanlines and provide likelihoods for each label (rather than a single label). In our experiments, this has been unnecessary.

is the semantic label. While the scanline still discards a large amount of information in the RGB image, it is important to note that the methods used to estimate the label have already used the context the image provides. It would also possible to look at wider scanlines and provide likelihoods for each label (rather than a single label). In our experiments, this has been unnecessary.

In order to estimate the labels, a CNN-based encoder-decoder network is used, trained on the SUN3D (Xiao et al. 2013) dataset, that can reliably detect doors, walls, floors, ceilings, furniture and windows. This state-of-the-art semantic segmentation runs at frame-rate on an NVIDIA Titan Xp, which allows images to be parsed into a SeDAR-scan with negligible latency. The label  is then simply the label at pixel

is then simply the label at pixel  .

.

It is important to note that the CNN has not been fine-tuned to any specific task. In fact, this is an important limitation of the approach presented in this paper. When the semantic segmentation fails, the observations become unreliable. This means that correct particles can given low scores and removed from the filter. However, in practice, the CNN appears to generalise well to most indoor environments.

Figure 4 shows the input images and the resulting SeDAR scan. Figure 4a shows the RGB image from which the label image in Fig. 4b is extracted. Figure 4c shows the depth image. In all of these, the scanline shown in the middle of the image denotes specific pixel locations where  and

and  are extracted from the label and depth image, respectively. Finally, Fig. 4d shows the resulting SeDAR scan, where the scanline can be seen localised within a floorplan. A localised range-less SeDAR scanline would look similar to this, as every (

are extracted from the label and depth image, respectively. Finally, Fig. 4d shows the resulting SeDAR scan, where the scanline can be seen localised within a floorplan. A localised range-less SeDAR scanline would look similar to this, as every ( ) tuple would perform ray-tracing until it hit an obstacle. Without ray-tracing, the scanline would simply have no depth. Now that the semantic labels are added into the map and the sensor, they can be used in a novel MCL algorithm.

) tuple would perform ray-tracing until it hit an obstacle. Without ray-tracing, the scanline would simply have no depth. Now that the semantic labels are added into the map and the sensor, they can be used in a novel MCL algorithm.

6 Semantic Monte-Carlo Localisation

It has been shown that there is a large amount of easily-attainable semantic information present in both the floorplan and the image. This information has been largely ignored in the MCL literature in favour of range-based approaches.

In this Section, this semantic information is combined into a novel semantic MCL approach. In the motion model, the semantic information is used to inform collision models. In the sensor model two approaches are presented. The first introduces a likelihood field model that incorporates semantically salient information into the traditional approach. The second approach combines the raycasting and likelihood field approaches into a method which allows localisation without range measurements.

6.1 Motion Model

Equation 2 formalised the motion model as  . However, it is well understood in the literature that the actual distribution being approximated is

. However, it is well understood in the literature that the actual distribution being approximated is  . This encodes the idea that certain motions are more or less likely depending on the map (e.g. through walls).

. This encodes the idea that certain motions are more or less likely depending on the map (e.g. through walls).

Under the assumption that the motion of the robot is small, it can be shown that

(see e.g. (Thrun 2002)) where \(\kappa \) is a normalising factor and

is the set containing every cell in the map. This allows the two likelihoods to be treated independently.

is the set containing every cell in the map. This allows the two likelihoods to be treated independently.

In an occupancy map, the motion  is defined in the same way as Eq. 2. The prior

is defined in the same way as Eq. 2. The prior  is simply the occupancy likelihood of the cell that contains

is simply the occupancy likelihood of the cell that contains  , that is

, that is

which is an elegant solution in the case where the “floorplan” was previously built by the robot.

However, this approach becomes problematic when using human-made floorplans. Human-made floorplans typically have binary edges (when they are made on a computer) or edges with image artefacts (when they are scanned into a computer). This does not reflect what the robot can observe and can cause issues with localisation. Therefore, most approaches tend to assume a binary interpretation of the occupancy. This is done by setting the probability to

where  is a user defined threshold. This thresholding operation is necessary when the floorplan is not created by the robot (e.g. using scan-matching). While this makes depth-based methods perform reliably, it is a crude estimate of reality. For instance, most humans would not even notice if a door is a few centimetres away from where it should be. Issues like this present real problems when particles propagate though doors, as it is possible that the filter will discard particles as they collide with the edge of the door frame.

is a user defined threshold. This thresholding operation is necessary when the floorplan is not created by the robot (e.g. using scan-matching). While this makes depth-based methods perform reliably, it is a crude estimate of reality. For instance, most humans would not even notice if a door is a few centimetres away from where it should be. Issues like this present real problems when particles propagate though doors, as it is possible that the filter will discard particles as they collide with the edge of the door frame.

Instead, the motion model presented here uses semantic information to augment this with a ghost factor that allows particles more leeway in these scenarios. Therefore the proposed prior is

where  is the distance to the nearest door. While other labels such as windows can be used, in the case of a ground-based robot doors are sufficient. The distance,

is the distance to the nearest door. While other labels such as windows can be used, in the case of a ground-based robot doors are sufficient. The distance,  , can be efficiently estimated using a lookup table as defined in Sect. 6.2.

, can be efficiently estimated using a lookup table as defined in Sect. 6.2.

More importantly, \(\epsilon _{ \tiny \textsc {g} }\) is a user defined factor that determines how harshly this penalty is applied. Setting \(\epsilon _{ \tiny \textsc {g} } = 0\) allows particles to navigate through walls with no penalty, while very high values approximate Eq. 14. The effects of \(\epsilon _{ \tiny \textsc {g} }\) will be explored in Sect. 7.1.1. This motion model is more probabilistically accurate than the occupancy model used in most RMCL approaches, and has the added advantage of leveraging the high-level semantic information present in the map.

Having presented a semantically enabled motion model, it is now necessary to give the sensor model the same treatment.

6.2 Sensor Model

The naïve way of incorporating semantic measurements into the sensor model would be to use the beam model. In this modality, the raycasting operation would provide not only the distance travelled by the ray, but also the label of the cell the ray hit. If the label of the cell and the observation match, the likelihood of that particle being correct is increased. However, this approach suffers from the same limitations as the traditional beam model: it has a distinct lack of smoothness. On the other hand, the likelihood field model is significantly smoother, as it provides a gradient between each of the cells. By contrast, the approach presented here uses a joint method that can use likelihood fields to incorporate semantic information in the presence of semantic labels. More importantly, it can also use raycasting within a likelihood field in order to operate without range measurements.

As described in Sect. 3, the likelihood field model calculates a distance map. For each cell  , the distance to the nearest occupied cell

, the distance to the nearest occupied cell

is calculated and stored. When a measurement  is received, the endpoint is estimated and used as an index to the distance map. Assuming a Gaussian error distribution, the weight of each particle

is received, the endpoint is estimated and used as an index to the distance map. Assuming a Gaussian error distribution, the weight of each particle  can then be estimated as

can then be estimated as

where  is the value obtained from the distance map and

is the value obtained from the distance map and  is dictated by the noise characteristics of the sensor. However, this model has three main limitations. Firstly, it makes no use of the semantic information present in the map. Secondly, the parameter

is dictated by the noise characteristics of the sensor. However, this model has three main limitations. Firstly, it makes no use of the semantic information present in the map. Secondly, the parameter  must be estimated by the user and assumes all measurements within a scan have the same noise parameters. Thirdly, it is incapable of operating in the absence of range measurements.

must be estimated by the user and assumes all measurements within a scan have the same noise parameters. Thirdly, it is incapable of operating in the absence of range measurements.

Instead, as mentioned in Sect. 5.1, this work uses the semantic labels present in the map to create multiple likelihood fields. For each label present in the floorplan, a distance map is calculated. This distance map stores the shortest distance to a cell with the same label.

Formally, for each map cell  the distance to the nearest cell of each label is estimated as

the distance to the nearest cell of each label is estimated as

where  are distances to the nearest wall, door and window, respectively. Figure 5 shows the distance maps for each label. For clarity, the argument (

are distances to the nearest wall, door and window, respectively. Figure 5 shows the distance maps for each label. For clarity, the argument ( ), is omitted for the remainder of the paper.

), is omitted for the remainder of the paper.

This approach overcomes the three limitations of the state-of-the-art. Firstly, the use of semantic information (Chu et al. 2015; Dellaert et al. 1999; Kanai et al. 2015; Liu et al. 2015; Winterhalter et al. 2015). Secondly, adapting the sensor noise parameters to the map (Dellaert et al. 1999; Kanai et al. 2015; Winterhalter et al. 2015). Thirdly, operation in the absence of range measurements (Bedkowski and Röhling 2017; Dellaert et al. 1999; Kanai et al. 2015; Winterhalter et al. 2015). These points will now be discussed.

6.2.1 Semantic Information

Most localisation approaches (Chu et al. 2015; Dellaert et al. 1999; Kanai et al. 2015; Liu et al. 2015; Winterhalter et al. 2015) do not use any semantic information present in the map. While approaches such as that of Bedkowski and Röhling (2017) and Poschmann et al. (2017) have begun to use this information, they either rely on geometric primitives for their semantic segmentation approach (Bedkowski and Röhling 2017) or rely on synthetic 3D reconstructions of the map (Poschmann et al. 2017). Contrary to this, SeDAR uses the semantic information present in the map. When an observation  is received, the bearing

is received, the bearing  and range

and range  information are used to estimate the endpoint of the scan. The label

information are used to estimate the endpoint of the scan. The label  is then used to decide which semantic likelihood field to use. Using the endpoint from the previous step, the label-likelihood can be estimated similarly to Eq. 17,

is then used to decide which semantic likelihood field to use. Using the endpoint from the previous step, the label-likelihood can be estimated similarly to Eq. 17,

where  is the distance to the nearest cell of the relevant label and

is the distance to the nearest cell of the relevant label and  is the standard deviation (which will be defined using the label prior). The probability of an observation given the map and pose can then be estimated as

is the standard deviation (which will be defined using the label prior). The probability of an observation given the map and pose can then be estimated as

where \(\epsilon _{\scriptscriptstyle {o}}\) and  are user defined weights. When

are user defined weights. When  the likelihood is the same as standard RMCL. On the other hand, when \(\epsilon _{\scriptscriptstyle {o}} = 0\) the approach uses only the semantic information present in the floorplan. These weights are explored and defined in Sect. 7.1.1. Unlike range scanners,

the likelihood is the same as standard RMCL. On the other hand, when \(\epsilon _{\scriptscriptstyle {o}} = 0\) the approach uses only the semantic information present in the floorplan. These weights are explored and defined in Sect. 7.1.1. Unlike range scanners,  cannot be related to the physical properties of the sensor. Instead, this standard deviation is estimated directly from the prior of each label on the map. Defining

cannot be related to the physical properties of the sensor. Instead, this standard deviation is estimated directly from the prior of each label on the map. Defining  this way has the benefit of not requiring tuning. However, there is a much more important effect that must be discussed.

this way has the benefit of not requiring tuning. However, there is a much more important effect that must be discussed.

6.2.2 Semantically Adaptive Standard Deviation

Most approaches (Dellaert et al. 1999; Kanai et al. 2015; Winterhalter et al. 2015) rely on hand-tuned parameters for the standard deviation of the observation likelihood  . However, when a human reads a floorplan, unique landmarks are the most discriminative features. The more unique a landmark, the easier it is to localise using it (because there are not many areas in the map that contain it). It then follows that the more rare a landmark, the more discriminative it is for the purpose of localisation. Indeed, it is easier for a person to localise in a floorplan by the configuration of doors and windows than it is by the configuration of walls. This translates into the simple insight: lower priors are more discriminative. Therefore,

. However, when a human reads a floorplan, unique landmarks are the most discriminative features. The more unique a landmark, the easier it is to localise using it (because there are not many areas in the map that contain it). It then follows that the more rare a landmark, the more discriminative it is for the purpose of localisation. Indeed, it is easier for a person to localise in a floorplan by the configuration of doors and windows than it is by the configuration of walls. This translates into the simple insight: lower priors are more discriminative. Therefore,  is tied to the prior of each label not only because it is one less parameter to tune, but because it implicitly makes observing rare landmarks more beneficial than common landmarks.

is tied to the prior of each label not only because it is one less parameter to tune, but because it implicitly makes observing rare landmarks more beneficial than common landmarks.

Relating  to the label prior

to the label prior  controls how smoothly the distribution decays w.r.t. distance from the cell. We make the likelihoods more spatially lenient on sparser labels: the smaller

controls how smoothly the distribution decays w.r.t. distance from the cell. We make the likelihoods more spatially lenient on sparser labels: the smaller  is, the smoother the decay. In essence, this allows more discriminative landmarks to contribute towards the localisation from further away.

is, the smoother the decay. In essence, this allows more discriminative landmarks to contribute towards the localisation from further away.

6.2.3 Range-Less Semantic Scan-Matching

The final, and most important, strength of this approach is the ability to perform all of the previously described methodology in the complete absence of range measurements. Most approaches (Bedkowski and Röhling 2017; Dellaert et al. 1999; Kanai et al. 2015; Winterhalter et al. 2015) are incapable of operating without the use of range measurements. Those that are capable of range-less performance (Chu et al. 2015; Liu et al. 2015), rely on strong assumptions about the geometry (Liu et al. 2015) and/or estimate a proxy for depth measurements (Chu et al. 2015). Both these cases have important limitations that are avoided by our semantic scan-matching.

The approach has so far been formalised on the assumption of either  tuples (existing approaches) or

tuples (existing approaches) or  tuples (SeDAR-based approach). However, our approach is capable of operating directly on

tuples (SeDAR-based approach). However, our approach is capable of operating directly on  tuples. In other words, depth measurements are explicitly not added or used.

tuples. In other words, depth measurements are explicitly not added or used.

Incorporating range-less measurements is simple. The beam and likelihood field models are combined in a novel approach that avoids the degeneracies that would happen in traditional RMCL approaches. In Eq. 5, the likelihood of a ray is estimated using the difference between the range ( ) obtained from the sensor and the range (

) obtained from the sensor and the range ( ) obtained from the raycasting operation. Unfortunately, in the absence of a range-based measurement (

) obtained from the raycasting operation. Unfortunately, in the absence of a range-based measurement ( ) this is impossible. Using the standard distance map is also impossible, since the endpoint of the ray cannot be estimated. Using raycasting in the distance map also fails similarly: the raycasting terminates on an occupied cell, implying

) this is impossible. Using the standard distance map is also impossible, since the endpoint of the ray cannot be estimated. Using raycasting in the distance map also fails similarly: the raycasting terminates on an occupied cell, implying  for every ray cast.

for every ray cast.

On the other hand, the semantic likelihood fields can still be used as  will still have a meaningful and discriminative value. This operation is called semantic raycasting. For every

will still have a meaningful and discriminative value. This operation is called semantic raycasting. For every  , the raycasting is performed as described in Sect. 3. However, instead of comparing

, the raycasting is performed as described in Sect. 3. However, instead of comparing  and

and  or using

or using  , the label

, the label  is used to decide what likelihood field to use. The cost can then be estimated as

is used to decide what likelihood field to use. The cost can then be estimated as

where  is defined in equation 19. This method is essentially a combination of the beam-model and the likelihood field model. More explicitly, a ray is cast along the bearing of every observation

is defined in equation 19. This method is essentially a combination of the beam-model and the likelihood field model. More explicitly, a ray is cast along the bearing of every observation  tuple. Once the raycasting hits an occupied cell, we use the occupied cell’s location to perform a lookup into the likelihood field corresponding to the observation’s label. This gives us a distance to the nearest cell with the same label. If the sensor is correctly localised, every distance should be zero. If it isn’t, the likelihood fields provide a smooth cost-function towards the correct pose.

tuple. Once the raycasting hits an occupied cell, we use the occupied cell’s location to perform a lookup into the likelihood field corresponding to the observation’s label. This gives us a distance to the nearest cell with the same label. If the sensor is correctly localised, every distance should be zero. If it isn’t, the likelihood fields provide a smooth cost-function towards the correct pose.

It would be possible to assign binary values (i.e. label matches or not) to Eq. 21. This approach would make the observation likelihood directly proportional to the series of labels along the scanline (i.e. how closely the bearing/label tuples match what is observable from each particle’s pose). However, this would be a naïve solution that provides no smooth gradient to the correct solution. Instead, this approach uses the angular distribution of labels, combined with distances from the likelihood field, to provide a smooth cost-function that converges reliably.

The previous Sections have presented a series of methods to localise a ground-based robot on a pre-existing floorplan. In the following Section, it will be shown that these methods are capable of outperforming standard RMCL approaches when using range-measurements. Moreover, it will be shown that they provide comparable performance when operating exclusively on bearing/label tuples from RGB images without range information.

7 Evaluation

This section will evaluate the strengths of the approach. As an initial step, an evaluation and parameter exploration will be performed on a dataset consisting of a robot driving around a building. As a second step, our approach will be benchmarked on the popular TUM-RGBD dataset (Sturm et al. 2012).

7.1 Human-Readable Floorplans

Evaluation on our the first dataset, which will be relased along with the paper, will focus on three main experiments. Firstly, a thorough evaluation of the performance of the system for a single trajectory is performed along with a parameter exploration of SeDAR. This is done in order to give an insight into the intrinsic characteristics of SeDAR mentioned in Sect. 4. Secondly, a repeatability experiment is undertaken, where the performance of multiple similar trajectories is evaluated. This is done in order to demonstrate the robustness and performance of SeDAR. Finally, an evaluation on a more challenging hand-drawn map is performed. This experiment allows us to demonstrate that SeDAR can localise in geometrically inaccurate maps.

In order to evaluate the approach, a dataset will ideally have several important characteristics. The dataset should consist of a robot navigating within a human-readable floorplan. Human-readability is required to ensure semantic information is present. The trajectory should be captured with an RGB-D camera. This is in order to easily extract all the possible tuple combinations (range, bearing and label). Finally, the trajectory of the robot should be on the same plane as the floorplan.

To satisfy these constraints, we created a new dataset to evaluate our approach on. The floorplan in Fig. 3a is used because it is large enough for meaningful tests and has human-readable floorplans available. The dataset was collected using the popular TurtleBot platform http://www.turtlebot.com/, as it has a front-facing Kinect that can be used for emulating both LiDAR and SeDAR. The dataset, will be released along with the publication of this paper.

Normally, the ground-truth trajectory for floorplan localisation is either manually estimated [as in Winterhalter et al. (2015)] or estimated using external Motion Capture (MoCap) systems [as in Sturm et al. (2012)]. However, both of these approaches are limited in scope. Manual ground-truth estimation is time-consuming and impractical. MoCap is expensive and difficult to calibrate, especially over the large public areas required for floorplan localisation. In order to overcome these limitations, a well established RGB-D SLAM system (Labbe and Michaud 2014) is used to provide an initial estimate. This estimate is then manually refined, using both a computationally expensive global optimisation and judicious manual intervention. While it does not localise within a floorplan, it does provide an accurate reconstruction and trajectory for the robot, which can then be registered with the floorplan. Figure 6a shows a sample trajectory and map estimated by Labbe and Michaud (2014), while Fig. 6b shows them overlaid on the floorplan.

To quantitatively evaluate SeDAR against ground truth, the Absolute Trajectory Error (ATE) metric presented by Sturm et al. (2012) is used. ATE is estimated by first registering the two trajectories using the closed form solution of Horn (1987), who find a rigid transformation  that registers the trajectory

that registers the trajectory  to the ground truth

to the ground truth  . At every time step

. At every time step  , the ATE can then be estimated as

, the ATE can then be estimated as

where  and

and  are the current time-aligned poses of the ground truth and estimated trajectory, respectively. The Root Mean Square Error (RMSE), mean and median values of this error metric are reported, as these are indicative of performance over coarse room-level initialisation. In order to visualise the global localisation process, the error of each successive pose is shown (error as it varies with time). These metrics are sufficient to objectively demonstrate the systems ability to globally localise in a floorplan, while also being able to measure room-level initialisation performance.

are the current time-aligned poses of the ground truth and estimated trajectory, respectively. The Root Mean Square Error (RMSE), mean and median values of this error metric are reported, as these are indicative of performance over coarse room-level initialisation. In order to visualise the global localisation process, the error of each successive pose is shown (error as it varies with time). These metrics are sufficient to objectively demonstrate the systems ability to globally localise in a floorplan, while also being able to measure room-level initialisation performance.

The work presented here is compared against the extremely popular MCL approach present in the Robot Operating System (ROS), called Adaptive Monte Carlo Localisation (AMCL) (Dellaert et al. 1999). AMCL is the standard MCL approach used in the robotics community. Any improvements over this approach are therefore extremely valuable. Furthermore, Adaptive Monte Carlo Localisation (AMCL) (Dellaert et al. 1999) is considered to be the state-of-the-art and is representative of the expected performance of the RMCL approaches detailed in Sect. 2.1, such as Kanai et al. (2015), Bedkowski and Röhling (2017), Winterhalter et al. (2015) and Chu et al. (2015). To demonstrate that our algorithm can outperform SLAM, we also compare against 2D scan-matching (Grisetti et al. 2007), monocular (Mur-Artal et al. 2015) and RGB-D (Mur-Artal and Tardós 2017) approaches in the coarse (room-level) scenario.

In all experiments, any common parameters (such as  ) are kept the same. The only parameters varied are

) are kept the same. The only parameters varied are  , \(\epsilon _{\scriptscriptstyle {o}}\) and \(\epsilon _{ \tiny \textsc {g} }\).

, \(\epsilon _{\scriptscriptstyle {o}}\) and \(\epsilon _{ \tiny \textsc {g} }\).

7.1.1 Detailed Analysis of a Single Trajectory

In order to establish a baseline of performance, and to explore the characteristics of SeDAR discussed in this paper, we first present a thorough evaluation of a single trajectory on a clean floorplan. This trajectory, seen in Fig. 6, covers multiple rooms and corridors and is therefore a representative sample to evaluate on.

As a first experiment, a room-level initialisation is given to both AMCL and the proposed approach. This means that the uncertainty of the pose estimate, roughly corresponds to telling the robot what room in the floorplan it is in. More explicitly, the standard deviations on the pose estimate are of 2.0 m in \((x,y)\) and 2.0 rad in \(\theta \). The systems then ran with a maximum of \(1000\) particles (minimum \(250\)) placed around the covariance ellipse. The error is recorded as each new image in the dataset is added. For the SLAM approaches, it is not necessary to define an initialisation. However, it was necessary to increase the number of features on ORB-SLAM2 so that the tracking could be perfomed successfully. Apart from this, all SLAM algorithms ran with their default parameters.

Figure 7 compares four distinct scenarios against AMCL. Of these four scenarios, two use the range measurements from the Microsoft Kinect (blue lines) and two use only the RGB image (red lines).

The first range-enabled scenario uses the range measurements to estimate the endpoint of the measurement (and therefore the index in the distance map) and sets the range and label weights to \((\epsilon _{\scriptscriptstyle {o}} = 0.0\) and  ), respectively. This means that while the range information is used to inform the lookup in the distance map, the costs are only computed using the labels. The second range-enabled scenario performs a weighted combination

), respectively. This means that while the range information is used to inform the lookup in the distance map, the costs are only computed using the labels. The second range-enabled scenario performs a weighted combination  of both the semantic and traditional approaches. It is interesting to note that this performs slightly worse than the label-only approach, likely because the geometric cues in a hallway environment are relatively weak compared to semantic cues.

of both the semantic and traditional approaches. It is interesting to note that this performs slightly worse than the label-only approach, likely because the geometric cues in a hallway environment are relatively weak compared to semantic cues.

In terms of the ray-based version of this approach, Eq. 21 is used. This means there are no parameters to set. Instead, a mild ghost factor (\(\epsilon _{ \tiny \textsc {g} } = 3.0\)) and a harsh one (\(\epsilon _{ \tiny \textsc {g} } = 7.0\)) are shown.

Since coarse room-level initialisation is an easier problem than global initialisation, the advantages of the range-enabled version of this approach are harder to see compared to state-of-the-art. However, it is important to note how closely the ray-based version of the approach performs to the rest of the scenarios despite using no depth data. Apart from a couple of peaks, the ray-based method essentially performs at the same level as AMCL. This becomes even more noticeable in Table 1, where it is clear that range-based semantic MCL (using only the labels) outperforms state of the art, while the ray-based \(\epsilon _{ \tiny \textsc {g} } = 3.0\) version lags closely behind. The reason \(\epsilon _{ \tiny \textsc {g} } = 3.0\) performs better than \(\epsilon _{ \tiny \textsc {g} } = 7.0\) is because small errors in the pose can cause the robot to “clip” a wall as it goes through the door. Since \(\epsilon _{ \tiny \textsc {g} } = 3.0\) is more lenient on these scenarios, it is able to outperform the harsher ghost factors.

Table 1 also shows comparison against three SLAM algorithms. It is clear that monocular SLAM does not perform well in this scenario. This is because there are large areas of plain textureless regions where tracking is lost. RGB-D SLAM performs better in this scenario, as it can rely on depth cues to maintain tracking. Finally, 2D SLAM also performs well (although slightly worse than RGB-D). However, in all cases, SeDAR outperforms the performance of SLAM algorithms. Not only does the range-enabled SeDAR significantly outperform SLAM, but the ray-based approach also manages to outperform both 2D and 3D depth-enabled SLAM. These results present a clear indication that SeDAR-based localisation approaches are capable of outperforming SLAM methods.

In order to give further context to these results, the results of state-of-the-art approaches by Winterhalter et al. (2015) and Chu et al. (2015) are mentioned here. These approaches are chosen as they present the most comparable methods in the literature. Although direct comparison is not possible (due to differences in the approach, and the availability of code and datasets) an effort has been made to present meaningful metrics. Winterhalter et al. (2015) report (in their paper) an error of 0.2–0.5 m. Winterhalter et al. are estimating a 6-DoF pose, which might make this seem like an unfair comparison. However, they do this on a much smaller room-sized dataset meaning the error is relatively large. While they perform experiments on larger floorplan-level datasets, the errors reported are much noisier ranging between 0.2 and 2 m on the coarse initialisation and 0.2–8 m on the global initialisation. Chu et al. (2015) report (in their paper) a mean error of 0.53 m on the TUMindoor dataset (Huitl et al. 2012), which is similar to the one presented here. These results present further evidence that the SeDAR-based localisation approach can outperform the state-of-the-art localisation approaches.

In terms of qualitative evaluation, both the convergence behaviour and the estimated path of MCL-based approaches is shown.

The convergence behaviour can be seen in Fig. 8. Here, Fig. 8a shows how the filter is initialised to roughly correspond to the room the robot is in. As the robot starts moving, it can be seen that AMCL (Fig. 8b), the range-based version of SeDAR (Fig. 8c) and the ray-based version (Fig. 8d) converge. Notice that while the ray-based approach has a predictably larger variance on the particles, the filter has successfully localised. This can be seen from the fact that the Kinect point cloud is properly aligned with the floorplan. It is important to note that although the Kinect point cloud is present for visualisation in the ray-based method, the depth is not used.

The estimated paths can be seen in Fig. 9, where the red path is the estimated path and green is the ground truth. Figure 9a shows the state-of-the-art, which struggles to converge at the beginning of the sequence (marked by a blue circle). It can be seen that the range-based approach in Fig. 9b (combined label and range), converges more quickly and maintains a similar performance to AMCL. It only slightly deviates from the path at the end of the ambiguous corridor on the left, which also happens to AMCL. It can also be seen that the ray-based approach performs very well. While it takes longer to converge, as can be seen by the estimated trajectory in Fig. 9c, it corrects itself and only deviates from the path in areas of large uncertainty (like long corridors).

These experiments show that SeDAR-based MCL is capable of operating when initialised at the coarse room-level. It is now important to discuss how discriminative SeDAR is when there is no initial pose estimate provided to the system.

Having evaluated against SLAM-based approaches in a local initialisation scenario, the focus will now be on the ability of SeDAR-based MCL to perform global localisation. In these experiments, the system is given no indication of where in the map the robot is. Instead, a maximum of \(50,000\) particles (minimum \(15,000\)) are placed over the entire floorplan. Figure 10 shows the same four scenarios as in the previous section. The thin line is the state-of-the-art method, the two lines above it represent range-based methods, while the two below represent ray-based methods. For the range-based scenarios (blue, bottom two lines) it can be seen that using only the label information  consistently outperforms the state of the art, both in terms of how quickly the values converge to a final result and the actual error on convergence. This shows that SeDAR used in an MCL context is more discriminative than the standard occupancy maps in RMCL. The second range-based measurement

consistently outperforms the state of the art, both in terms of how quickly the values converge to a final result and the actual error on convergence. This shows that SeDAR used in an MCL context is more discriminative than the standard occupancy maps in RMCL. The second range-based measurement  significantly outperforms all other approaches. In this case, the strong geometric cues present in the dataset are helping the particle filter converge faster (therefore skewing the result in favour of the combined method).

significantly outperforms all other approaches. In this case, the strong geometric cues present in the dataset are helping the particle filter converge faster (therefore skewing the result in favour of the combined method).

In terms of the ray-based version of the approach (red lines), two scenarios are compared. A mild ghost factor (\(\epsilon _{ \tiny \textsc {g} } = 3.0\)) and a harsh one (\(\epsilon _{ \tiny \textsc {g} } = 7.0\)). These versions of the approach both provide comparable performance to the state-of-the-art. It is important to emphasise that this approach uses absolutely no range and/or depth measurements. As such, comparing against depth-based systems is inherently unfair. Still, SeDAR ray-based approaches compare favourably to AMCL. In terms of convergence, the mild ghost factor \(\epsilon _{ \tiny \textsc {g} } = 3.0\) gets to within several meters accuracy even quicker than AMCL, at which point the convergence rate slows down and is overtaken by AMCL. The steady state performance is also comparable. While the performance temporarily degrades, it manages to recover and keep a steady error rate throughout the whole run. On the other hand, the harsher ghost factor \(\epsilon _{ \tiny \textsc {g} } = 7.0\) takes longer to converge, but remains steady and eventually outperforms the milder ghost factor. Table 2 shows the RMSE, error along with other statistics. In this case, the combined range and label method performs best.

As before, qualitative analysis can be provided by looking at the convergence behaviour and the estimated paths.

In order to visualise the convergence behaviour, Fig. 11a shows a series of time steps during the initialisation of the filters. On the first image, the particles have been spread over the ground floor of a \((49\,\hbox {m}\times 49\,\hbox {m})\) office area. In this dataset, the robot is looking directly at a door during the beginning of the sequence. Therefore, in Fig. 11b the filter converges with particles looking at doors that are a similar distance away. The robot then proceeds to move through the doors. Going through the door means the filter converges significantly faster as it implicitly uses the ghost factor in the motion model. It also gives the robot a more unique distribution of doors (on a corner), which makes the filter converge quickly. This is shown in Fig. 11c.

The estimated and ground truth paths can be seen in Fig. 12, where the circled part of the trajectory denotes the point of convergence. It can be seen that AMCL takes longer to converge (further away from the corner room) than the range-based approach. More importantly, it can be seen that the range-based approach suffers no noticeable degradation in the estimated trajectory over the room-level initialisation. On the other hand, the performance of the ray-based method degrades more noticeably. This is because the filter converges in a long corridor with ambiguous label distributions (doors left and right are similarly spaced). However, once the robot turns around the system recovers and performs comparably to the range-based approach.

As mentioned previously, entering or exiting rooms helps the filter converge because it can use the ghost factor in the motion model. The following experiments, evaluate how the ghost factor affects the performance of the approach.

The effect of the ghost factor can be measured in a similar way to the overall filter performance. Results show that the ghost factor provides more discriminative information when it is not defined in a binary fashion. This is shown in the label-only scenario for both the range-based and ray-based approaches, in both the global and coarse room-level initialisation. Figure 13 shows the effect of varying the ghost factor during global initialisation. It can be seen that not penalising particles going through walls, \((\epsilon _{ \tiny \textsc {g} } = 0)\), is not a good choice. This makes sense, as there is very little to be gained from allowing particles to traverse occupied cells without any consequence. It follows that the ghost factor should be set as high as possible. However, setting the ghost factor to a large value \((\epsilon _{ \tiny \textsc {g} } = 7.0)\), which corresponds to reducing the probability by \(95\%\) at \(0.43\hbox {m}\), does not provide the best results.

While it might seem intuitive to assume that a higher \(\epsilon _{ \tiny \textsc {g} }\) will always be better, this is not the case. High values of the ghost factor correspond to a binary interpretation of occupancy which makes MCL systems unstable in the presence of discrepancies between the map and the environment. This happens because otherwise correct particles can clip door edges and be completely eliminated from the system. A harsh ghost factor also exacerbates problems with a limited number of particles. In fact, \(\epsilon _{ \tiny \textsc {g} } = 3.0\), corresponding to a \(95\%\) reduction at \(1.0\,\hbox {m}\), consistently showed the best results in all of the global initialisation experiments, as can be seen in Table 3.

In terms of room-level initialisation, having an aggressive ghost factor is more in line with the initial intuition. Table 4 shows that for both of the range-based scenarios, \(\epsilon _{ \tiny \textsc {g} } = 7.0\) provides the best results. This is because coarse room-level initialisation in the presence of range-based measurements is a much easier problem to solve. As such, the problem of particles “clipping” edges of doors is less of an issue.

On the other hand, the ray-based scenario still prefers a milder ghost factor of \(\epsilon _{ \tiny \textsc {g} } = 3.0\). In this scenario, inaccuracies in both the map and the sensing modalities allow for otherwise correct particles to be heavily penalised by an aggressive ghost factor. Both of these results are reflected in Figs. 14a and 14b.

These results allow a single conclusion. The ghost factor must be tuned to the expected amount of noise in the map and sensing modality. Aggressive ghost factors can be used in cases where the pre-existing map is accurate and densely sampled, such as the case where the map was collected by the same sensor being used to localise (i.e. SLAM). On the other hand, in the case where there are expected differences between what the robot is able to observe (e.g. furniture, scale errors, etc.), it is more beneficial to provide a milder ghost factor in order to be more lenient on small pose errors.

7.2 Cross-Trajectory Performance