Abstract

Conventional compressive sensing (CS) reconstruction is slow because it requires solving an optimization problem. Convolutional neural networks can reconstruct much faster while achieving comparable results. However, high-quality CS image recovery depends not only on a good reconstruction algorithm but also on good measurements. In this paper, we propose an adaptive measurement network in which the measurement is obtained by learning. The new network consists of a fully-connected layer and ReconNet. The fully-connected layer, which has a low-dimensional output, acts as the measurement. We train the fully-connected layer and ReconNet simultaneously to obtain the adaptive measurement. Because the adaptive measurement fits the dataset better than a random Gaussian measurement matrix, at the same measurement rate it extracts scene information more efficiently and yields better reconstruction results. Experiments show that the new network outperforms the original one.

This paper was presented in part at the CCF Chinese Conference on Computer Vision, Tianjin, 2017. This paper was recommended by the program committee.

1 Introduction

Compressive sensing (CS) theory [1,2,3] makes it possible to acquire measurements of a signal at sub-Nyquist rates and to recover the signal with high probability when it is sparse in a certain domain. A random Gaussian matrix is often used as the measurement matrix because the measurement must be incoherent with the basis of the sparse domain. Reconstruction methods fall into two main categories: conventional methods [4,5,6,7,8,9] and deep learning methods [10,11,12,13].

A large number of compressive sensing reconstruction methods have been proposed, but almost all of them obtain the reconstruction by solving an optimization problem, which makes them slow. In recent years, several deep learning approaches have been proposed. Because they are trained off-line and only need a forward pass at test time, they greatly improve reconstruction speed.

The first paper [11] to apply deep learning to the CS recovery problem used stacked denoising autoencoders (SDA) to recover signals from undersampled measurements. An SDA consists of fully-connected layers, so the network grows with the signal size. This imposes a high computational cost and can lead to overfitting. DeepInverse [13], which uses convolutional neural network (CNN) layers, works with arbitrary measurements, so it can reconstruct the whole image at once. However, the cost of measurement also grows with the signal size. ReconNet [10] uses a fully-connected layer followed by convolutional layers to recover signals from compressive measurements block-wise. This reduces the network complexity and the training time while preserving good reconstruction quality. However, ReconNet uses a fixed random Gaussian measurement, which is not optimally designed for the signal.

In this paper, we use a fully-connected layer with a low-dimensional output as the measurement. The fully-connected layer and the reconstruction network ReconNet are combined into an adaptive measurement network. By comparing the trained weights, we show that the adaptive measurement network performs better than ReconNet with a fixed measurement. Experiments show that the adaptive measurement matrix captures more image information than the fixed random Gaussian measurement matrix, and that images reconstructed from adaptive measurements have higher PSNR.

The rest of the paper is organized as follows. Section 2 introduces the fixed random Gaussian measurement. Section 3 describes the adaptive measurement network. Section 4 presents the experiments, and Sect. 5 concludes the paper.

2 Fixed Random Gaussian Measurement

ReconNet is a deep learning CS reconstruction approach that can recover rich semantic content even at a measurement rate as low as 1%. It is a convolutional neural network, and its training and testing process is shown in Fig. 1.

ReconNet consists of one fully-connected layer and six convolutional layers, in which the first three and the last three convolutional layers are identical. The layers function as follows. The fully-connected layer takes the CS measurements as input and outputs a feature map of size 33\(\,\times \,\)33. The first and last three convolutional layers are inspired by SRCNN [14], a CNN-based approach to image super-resolution. Every convolutional layer except the last is followed by a ReLU. A CNN can only work when its input carries structural information. The fully-connected layer therefore recovers some structural information from the CS measurements, and the convolutional layers then enhance its output into a high-resolution image.

The training dataset consists of input data and ground truth. All images in the dataset are 33\(\,\times \,\)33 patches extracted from the original images. The training input of ReconNet is obtained by measuring each extracted patch with a random Gaussian matrix \(\varPhi \). For a given measurement rate, a random Gaussian matrix of the appropriate size is first generated, and its rows are then orthonormalized to obtain \(\varPhi \). The same random Gaussian matrix is used to produce the inputs for both training and testing. Before being measured, each 33\(\,\times \,\)33 block is reshaped into a 1089-dimensional column vector.
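The measurement step described above can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: the function names, the rounding of the number of measurements, the use of a QR factorization for the orthonormalization, and the row-major flattening of the block are all assumptions.

```python
import numpy as np

def make_measurement_matrix(rate, n=1089, seed=0):
    """Return an m x n matrix with orthonormal rows, m = round(rate * n).

    Rounding m is an assumption; the text only says "appropriate size".
    """
    rng = np.random.default_rng(seed)
    m = max(1, int(round(rate * n)))
    gaussian = rng.standard_normal((m, n))
    # QR of the transpose gives an n x m matrix with orthonormal columns;
    # transposing it back yields m orthonormal rows.
    q, _ = np.linalg.qr(gaussian.T)
    return q.T

def measure_block(phi, block):
    """Reshape a 33 x 33 block into a 1089-vector and measure it."""
    x = block.reshape(-1)  # row-major flattening (an assumption)
    return phi @ x

phi = make_measurement_matrix(0.10)
block = np.random.default_rng(1).random((33, 33))
y = measure_block(phi, block)
print(phi.shape, y.shape)
```

The same `phi` would have to be stored and reused at test time, since the network is trained for one specific matrix.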

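The loss function is given by

\(L(\{ W\}) = \frac{1}{T}\sum \limits _{i = 1}^{T} {\left\| f({y_i},\{ W\}) - {x_i} \right\| ^2} \qquad (1)\)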

Here, \(f({y_i},\{ W\})\) is the i-th reconstructed image produced by ReconNet, \({x_i}\) is the i-th original signal, which also serves as the i-th label, and W denotes all parameters of ReconNet. T is the total number of image blocks in the training dataset. The loss function is minimized by adjusting W using backpropagation. For each measurement rate, two networks are trained: one with random Gaussian initialization of the fully-connected layer and the other with a deterministic initialization. In both cases, the weights of all convolutional layers are initialized from a random Gaussian with a fixed standard deviation. The network that gives the lower loss on a validation set is chosen.
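The loss described above can be sketched in NumPy, assuming it is the average over the T training blocks of the squared Euclidean error between each reconstruction and its label, which is the form used by ReconNet [10]. The function name and array layout are hypothetical.

```python
import numpy as np

def reconstruction_loss(reconstructions, labels):
    """Average squared Euclidean error over T blocks.

    Both arguments have shape (T, 1089) or (T, 33, 33); the first axis
    indexes the training blocks.
    """
    recon = np.asarray(reconstructions, dtype=np.float64)
    truth = np.asarray(labels, dtype=np.float64)
    num_blocks = recon.shape[0]
    diff = (recon - truth).reshape(num_blocks, -1)
    return float(np.sum(diff ** 2) / num_blocks)
```

With such a function, choosing between the two initializations amounts to evaluating both trained networks on held-out blocks and keeping the one with the smaller value.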

The test process does not include the dotted-line part of Fig. 1. The high-resolution scene image is divided into non-overlapping blocks of size 33\(\,\times \,\)33, and each block is reconstructed by feeding its CS measurements to ReconNet. The reconstructed blocks are then arranged in their original positions to form the reconstructed image.
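The block-wise test procedure can be sketched as follows. The helper names are hypothetical, and `measure` and `reconstruct` are placeholders for the measurement step and the trained network; the sketch also assumes the image has already been padded so that its height and width are multiples of 33.

```python
import numpy as np

BLOCK = 33

def split_into_blocks(image, block=BLOCK):
    """Split an H x W image into non-overlapping block x block tiles."""
    h, w = image.shape
    assert h % block == 0 and w % block == 0, "pad the image first"
    rows, cols = h // block, w // block
    tiles = image.reshape(rows, block, cols, block).swapaxes(1, 2)
    return tiles.reshape(rows * cols, block, block), (rows, cols)

def assemble_blocks(blocks, grid, block=BLOCK):
    """Inverse of split_into_blocks: put tiles back in raster order."""
    rows, cols = grid
    tiled = blocks.reshape(rows, cols, block, block).swapaxes(1, 2)
    return tiled.reshape(rows * block, cols * block)

def reconstruct_image(image, measure, reconstruct):
    """Measure and reconstruct every block, then reassemble the image."""
    blocks, grid = split_into_blocks(image)
    recovered = np.stack([reconstruct(measure(b)) for b in blocks])
    return assemble_blocks(recovered, grid)
```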

The trained weights show that the fully-connected layer can recover some structural information. A block of the high-resolution scene image is first measured by the random Gaussian matrix and then multiplied by the parameters of the fully-connected layer. This process is equivalent to multiplying the block by a single square matrix, as Fig. 2 shows. The diagonal entries of this square matrix are clearly larger than the other entries, so it can be regarded as an approximate identity matrix. This means that the i-th element of the output of the fully-connected layer is mainly determined by the i-th element of the high-resolution block. Feature maps also show that the fully-connected layer recovers some information.
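The equivalent square matrix can be formed explicitly by composing the two linear maps, as in the sketch below. It ignores the bias of the fully-connected layer, and it uses the transpose of the measurement matrix as a hypothetical stand-in for the trained weights, which are not available here. The dominance score is an illustrative measure, not one used in the paper.

```python
import numpy as np

def equivalent_matrix(fc_weights, phi):
    """Compose measurement (m x n) and fully-connected layer (n x m)."""
    return fc_weights @ phi

def diagonal_dominance(square):
    """Ratio of the mean |diagonal| entry to the mean |off-diagonal| entry."""
    n = square.shape[0]
    magnitude = np.abs(square)
    diag_sum = np.trace(magnitude)
    off_mean = (magnitude.sum() - diag_sum) / (n * (n - 1))
    return (diag_sum / n) / off_mean

rng = np.random.default_rng(0)
n, m = 1089, 109
# Random Gaussian matrix with orthonormalized rows, as in Sect. 2.
phi = np.linalg.qr(rng.standard_normal((n, m)))[0].T
fc_weights = phi.T  # stand-in for trained weights (assumption)
A = equivalent_matrix(fc_weights, phi)
print(A.shape, diagonal_dominance(A))
```

A score well above 1 indicates that each output element depends mostly on the input element at the same position, which is the behavior the paragraph above describes.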

The feature map of the fully-connected layer (fc feature map in Fig. 1) is obtained by sending the parrot image (label in Fig. 1) through the trained ReconNet at a measurement rate of 10%. It shows that the fully-connected layer recovers some structural information. The feature maps of the fully-connected layer (fc feature map in Fig. 1), the third convolutional layer (conv3 feature map in Fig. 1) and the sixth convolutional layer (conv6 feature map in Fig. 1) at a measurement rate of 25% are also shown in Fig. 1. They show a progression from low resolution to high resolution.

The main drawback of random measurements is that they are not optimally designed for the signal. Adaptive measurement is therefore a promising alternative.

3 Adaptive Measurement Network

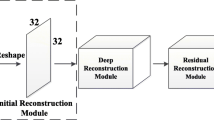

We combine a fully-connected layer with ReconNet to form the adaptive measurement network, as Fig. 3 shows. The fully-connected layer, which has a low-dimensional output, serves as the measurement. The measurement rate is determined by the dimension of the network input and the dimension of the fully-connected layer's output.

Figure 3 as a whole (including the dashed-line part) shows the training process of the adaptive network. In the training stage, the ground truth still consists of 33\(\,\times \,\)33 patches extracted from the original images. Unlike in the random Gaussian measurement network, the training input is the ground-truth patch itself rather than its Gaussian measurements.

At test time, the parameters of the fully-connected layer are used as the measurement matrix, while ReconNet still serves as the reconstruction network. Figure 3 without the dashed-line part shows the testing process.

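Accordingly, the loss function of the new network is given by

\(L(\{ W,K\}) = \frac{1}{T}\sum \limits _{i = 1}^{T} {\left\| f({x_i},\{ W,K\}) - {x_i} \right\| ^2} \qquad (2)\)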

where K denotes the parameters of the newly added fully-connected layer. The difference between (1) and (2) is that in (2) the reconstructed image is determined by \({x_i}\) and \(\{ W,K\}\), whereas in (1) it is determined by \({y_i}\) and \(\{ W\}\).
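Joint training of the measurement and the reconstruction can be illustrated with a deliberately simplified sketch. Here ReconNet is replaced by a single linear layer W, so this is a linear stand-in and not the network used in the paper; the synthetic low-rank data, the learning rate and all names are assumptions. The point is only that K and W receive gradients from the same reconstruction error.

```python
import numpy as np

def joint_train(x, m, steps=500, lr=0.02, seed=0):
    """Jointly fit a measurement K (m x n) and a linear decoder W (n x m).

    Minimizes (1/T) * sum_i ||W K x_i - x_i||^2 by plain gradient descent.
    x has shape (T, n); each row is one flattened training block.
    """
    rng = np.random.default_rng(seed)
    num_blocks, n = x.shape
    K = rng.standard_normal((m, n)) * 0.1
    W = rng.standard_normal((n, m)) * 0.1
    history = []
    for _ in range(steps):
        y = x @ K.T        # learned measurements, shape (T, m)
        recon = y @ W.T    # reconstructions, shape (T, n)
        err = recon - x
        history.append(float(np.sum(err ** 2) / num_blocks))
        grad_W = (2.0 / num_blocks) * err.T @ y        # dL/dW
        grad_K = (2.0 / num_blocks) * (err @ W).T @ x  # dL/dK
        W -= lr * grad_W
        K -= lr * grad_K
    return K, W, history

# Synthetic rank-4 "blocks" of dimension 64 (illustrative data only).
rng = np.random.default_rng(1)
basis = rng.standard_normal((4, 64)) / 8.0
x = rng.standard_normal((256, 4)) @ basis
K, W, history = joint_train(x, m=8)
print(history[0], history[-1])
```

After training, `K` plays the role of the measurement matrix: a new block `x_new` is measured as `K @ x_new`, and only the decoder is needed for reconstruction.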

Compared with the original network, the new network has more parameters to train. The network is initialized with random Gaussian values. Training is therefore likely to yield a measurement that is better adapted to the dataset.

The trained weights show that the ReconNet part of the adaptive measurement network performs better than the original one: its fully-connected layer recovers more structural information. The equivalent process is shown in Fig. 4(b). Compared with Fig. 4(a), the values of the square matrix in Fig. 4(b) are more dispersed. The i-th element of the output of the fully-connected layer is mainly determined by the i-th element of the high-resolution block and its neighboring elements, so the measurement acquires information more effectively. As shown in Fig. 5, the values in the white part of the equivalent square matrix are larger than those in the black part. A high-resolution block is first reshaped into a high-resolution vector. The output of the fully-connected layer is obtained by multiplying the equivalent square matrix by this vector. Take the first element as an example. The first element of the output is the product of the first row of the square matrix and the high-resolution vector, so the entries of the first row can be seen as weights on the elements of that vector. The red elements of the high-resolution vector clearly have larger weights, which means that the first element of the output of the fully-connected layer is mainly determined by those red elements. In the high-resolution block, the red elements correspond to the first element and its neighbors. Because the first element and its neighbors are correlated, their values are similar, so the first element of the output is determined even more strongly by the first element of the high-resolution block. The same reasoning applies to the i-th element. This shows that the fully-connected layer of the new ReconNet recovers more structural information.

The feature maps of the adaptive measurement network's fully-connected layer (fc feature map), third convolutional layer (conv3 feature map) and sixth convolutional layer (conv6 feature map) at a measurement rate of 10% are shown in Fig. 3. Compared with Fig. 1, the feature maps of the adaptive network are clearly better even at a measurement rate of 10%.

Figure 6 shows an example of reconstruction results for the two kinds of measurement at a measurement rate of 10%. Figure 6(a) is the original image, Fig. 6(b) is the reconstruction from the random Gaussian measurement network, and Fig. 6(c) is the reconstruction from the adaptive network. The adaptive reconstruction is visually more appealing.

The better performance of the adaptive measurement network can also be seen in the measurement matrix. Because the original signal is reshaped into a column vector before being measured, we reshape some row vectors of the measurement matrix to size 33\(\,\times \,\)33. Two reshaped row vectors of the random Gaussian measurement matrix at measurement rates of 1% and 10%, in the time and frequency domains, are shown in Fig. 7(a). The content of the random Gaussian measurement matrix is clearly irregular, and no useful information can be read from Fig. 7(a). Two reshaped row vectors of the adaptive measurement matrix at measurement rates of 1%, 10% and 20%, in the time and frequency domains, are shown in Fig. 7(b). Most of the energy of an image is concentrated in its low-frequency part. When the measurement rate is low, some high-frequency information must be discarded so that the contours of the image can be reconstructed as fully as possible. As the measurement rate increases, the capacity of the measurement grows and the high-frequency content of the adaptive measurement gradually increases, which is also visible in the frequency-domain images. As a result, the reconstructed image becomes clearer.
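The inspection of measurement rows can be sketched as follows: one row is reshaped to 33\(\,\times \,\)33 and its centered 2-D Fourier magnitude is computed. The low-frequency energy fraction and its radius are illustrative choices that do not come from the paper, and the function names are hypothetical.

```python
import numpy as np

def row_spectrum(measurement_matrix, row_index, block=33):
    """Reshape one row to block x block and return it with its centered |FFT|."""
    kernel = measurement_matrix[row_index].reshape(block, block)
    spectrum = np.fft.fftshift(np.abs(np.fft.fft2(kernel)))
    return kernel, spectrum

def low_frequency_fraction(spectrum, radius=4):
    """Fraction of spectral energy within `radius` bins of the DC component."""
    h, w = spectrum.shape
    cy, cx = h // 2, w // 2  # position of DC after fftshift
    yy, xx = np.ogrid[:h, :w]
    mask = (yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2
    energy = spectrum ** 2
    return float(energy[mask].sum() / energy.sum())
```

For a row of a random Gaussian matrix the fraction is small, because the energy is spread over all frequencies; a learned row that concentrates on image contours would be expected to give a larger value at low measurement rates.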

4 Results

In this section, we conduct reconstruction experiments with both the fixed random Gaussian measurement and the adaptive measurement.

We use the Caffe framework for network training on the MATLAB platform. Our computer is equipped with an Intel Core i7-6700 CPU running at 3.4 GHz, an NVIDIA GeForce GTX 980 GPU and 64 GB of RAM, and the framework runs on the Ubuntu 14.04 operating system.

The dataset consists of 21760 patches of size 33\(\,\times \,\)33 extracted from the 91 images in [14] with a stride of 14. Because ReconNet reconstructs images block-wise with a fixed block size, zero-padding is normally applied to input images of other sizes. However, we find that symmetric padding performs better than zero-padding, so all experimental results are based on symmetric padding.
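Patch extraction and the two padding options can be sketched with NumPy as follows. The function names are hypothetical, and padding only the bottom and right edges up to the next multiple of 33 is an assumption, since the text does not say where the padding is placed.

```python
import numpy as np

def extract_patches(image, size=33, stride=14):
    """Collect all size x size patches on a regular grid with the given stride."""
    h, w = image.shape
    patches = [
        image[r:r + size, c:c + size]
        for r in range(0, h - size + 1, stride)
        for c in range(0, w - size + 1, stride)
    ]
    return np.stack(patches) if patches else np.empty((0, size, size))

def pad_to_multiple(image, size=33, mode="symmetric"):
    """Pad bottom and right so both dimensions are multiples of `size`.

    mode="symmetric" mirrors the image at its edge; mode="constant"
    gives zero-padding.
    """
    h, w = image.shape
    pad_h = (-h) % size
    pad_w = (-w) % size
    return np.pad(image, ((0, pad_h), (0, pad_w)), mode=mode)
```

Symmetric padding continues the image content past the border, so padded blocks resemble the natural patches seen during training, whereas zero-padding introduces an artificial edge.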

We use the cameraman image to test both networks. The reconstruction results are as follows.

Figure 8 shows the reconstruction results for the cameraman image at different measurement rates with the two kinds of measurement. Our results are visually more appealing.

The reconstruction results for 11 test images at measurement rates of 1%, 10% and 25% with the two kinds of measurement are shown in Table 1. In all cases, the adaptive measurement outperforms the random Gaussian measurement.
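Reconstruction quality is compared in terms of PSNR (see Sect. 1). A minimal sketch of the metric is given below, assuming 8-bit images with a peak value of 255; the peak value and the function name are assumptions.

```python
import numpy as np

def psnr(reference, reconstruction, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal size."""
    ref = np.asarray(reference, dtype=np.float64)
    rec = np.asarray(reconstruction, dtype=np.float64)
    mse = np.mean((ref - rec) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))
```

A higher value means the reconstruction is closer to the original image.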

5 Conclusion

We have presented an adaptive measurement obtained by learning and shown that it provides better reconstruction results than the fixed random Gaussian measurement. The learned measurement matrix is more regular in the time domain and better adapted to the dataset than the fixed one, which is an important reason why the adaptive measurement works better. Moreover, our network is general and can be applied to all kinds of images.

References

1. Kašin, B.S.: The widths of certain finite-dimensional sets and classes of smooth functions. Izv. Akad. Nauk SSSR Ser. Mat. 41(2), 334–351 (1977)

2. Candès, E., Romberg, J.: Sparsity and incoherence in compressive sampling. Inverse Prob. 23(3), 969–985 (2007)

3. Rauhut, H.: Random sampling of sparse trigonometric polynomials. Found. Comput. Math. 22(6), 737–763 (2008)

4. Candes, E.J., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489–509 (2004)

5. Duarte, M.F., Wakin, M.B., Baraniuk, R.G.: Wavelet-domain compressive signal reconstruction using a Hidden Markov Tree model. In: IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 5137–5140. IEEE (2008)

6. Peyré, G., Bougleux, S., Cohen, L.: Non-local regularization of inverse problems. In: Forsyth, D., Torr, P., Zisserman, A. (eds.) ECCV 2008. LNCS, vol. 5304, pp. 57–68. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-88690-7_5

7. Baraniuk, R.G., Cevher, V., Duarte, M.F., et al.: Model-based compressive sensing. IEEE Trans. Inf. Theory 56(4), 1982–2001 (2010)

8. Li, C., Yin, W., Jiang, H., et al.: An efficient augmented Lagrangian method with applications to total variation minimization. Comput. Optim. Appl. 56(3), 507–530 (2013)

9. Kim, Y., Nadar, M.S., Bilgin, A.: Compressed sensing using a Gaussian Scale Mixtures model in wavelet domain. In: IEEE International Conference on Image Processing, pp. 3365–3368. IEEE (2010)

10. Kulkarni, K., Lohit, S., Turaga, P., Kerviche, R., Ashok, A.: Reconnet: non-iterative reconstruction of images from compressively sensed measurements. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 449–458 (2016)

11. Mousavi, A., Patel, A.B., Baraniuk, R.G.: A deep learning approach to structured signal recovery. In: 2015 53rd Annual Allerton Conference on Communication, Control, and Computing (Allerton), pp. 1336–1343. IEEE, September 2015

12. Yao, H., Dai, F., Zhang, D., Ma, Y., Zhang, S., Zhang, Y.: DR\(^{2}\)-Net: Deep Residual Reconstruction Network for Image Compressive Sensing. arXiv preprint arXiv:1702.05743 (2017)

13. Mousavi, A., Baraniuk, R.G.: Learning to invert: signal recovery via deep convolutional networks. arXiv preprint arXiv:1701.03891 (2017)

14. Dong, C., Loy, C.C., He, K., Tang, X.: Learning a deep convolutional network for image super-resolution. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8692, pp. 184–199. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10593-2_13

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Grant No. 61472301, 61632019) and the Foundation for Innovative Research Groups of the National Natural Science Foundation of China (No. 61621005).

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Xie, X., Wang, Y., Shi, G., Wang, C., Du, J., Han, X. (2017). Adaptive Measurement Network for CS Image Reconstruction. In: Yang, J., et al. Computer Vision. CCCV 2017. Communications in Computer and Information Science, vol 772. Springer, Singapore. https://doi.org/10.1007/978-981-10-7302-1_34

DOI: https://doi.org/10.1007/978-981-10-7302-1_34

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7301-4

Online ISBN: 978-981-10-7302-1

eBook Packages: Computer Science, Computer Science (R0)