Abstract

An organisation using personal data should document its data governance processes to maintain and demonstrate compliance with the General Data Protection Regulation (GDPR). As processes evolve, their documentation should reflect these changes with an assessment showing ongoing compliance. Through this paper, we show how semantic representations of processes are useful towards maintaining ongoing GDPR compliance by using a test-driven approach that generates and checks constraints for adherence to GDPR requirements. We first check whether all required information has been documented, and then whether it is compliant. We prototype our testing approach using a real-world website’s consent mechanism for GDPR compliance, and persist results towards generating documentation. We use previously-published ontologies to represent processes (GDPRov), consent (GConsent), and GDPR (GDPRtEXT), with SHACL used to test requirement constraints.

Paper and Resources: https://w3id.org/GDPRep/semantic-tests.

1 Introduction

Demonstrating compliance with the General Data Protection Regulation (GDPR) [17] requires documenting information regarding how its various obligations and requirements were met. GDPR explicitly requires documentation of information for records of processing activities (R82, A30), consent (R42, A7-1), and data protection impact assessments (DPIA, A35). It also requires controllers to implement and periodically review appropriate measures regarding processing (A5-1, A24). Therefore, the process of assessing, maintaining, and demonstrating compliance with the GDPR is tightly coupled with operational workflows involving personal data.

Processes change and evolve over time: the purpose may change, the same process may be used for additional purposes, or the assigned processor may change. For GDPR compliance, each such change needs to be documented as a temporally versioned record of processing to demonstrate compliance regarding processing activities at that period in time. It would be considered prudent, or good practice, to show that the specific change was assessed and verified to be compliant before proceeding with it. This is mandatory under GDPR for certain situations requiring a DPIA (A35).

Semantics, and by extension the semantic web, have been demonstrated to be of assistance in the management of GDPR compliance. Existing work addresses modelling machine-readable metadata for compliance [8, 11, 13, 14], querying for compliance-related information [16], and maintaining compliant processing logs [8]. Interoperable semantics are beneficial when information is shared between stakeholders such as controllers and processors, or controllers and certification bodies or supervisory authorities. Interoperability is also helpful towards transparency regarding processing activities to address the discrepancy between requirements of an organisation and compliance [18]. A discussion of four areas where automation can be applied [7], one of which is compliance using checklists, shows possible avenues for further incorporating semantics into the compliance process.

In this paper, we show how semantic representations of processes are useful in a test-driven approach for documenting ongoing compliance with the GDPR. We describe our approach towards generating and testing constraints based on requirements gathered from GDPR, and the use of semantics to generate documentation linked with the GDPR. The paper also presents an application of this approach by testing a website’s consent mechanism for GDPR compliance and generating compliance documentation. For this, we build on our previous work, including ontologies to represent processes (GDPRov [14]), consent (GConsent [12]), and GDPR (GDPRtEXT [13]), and an approach to turn compliance questions into semantic queries [16]. An overview of this was presented in a prior publication [15].

2 Approach

2.1 Generating Constraints from Requirements

The first step towards compliance is selecting applicable clauses from the GDPR and converting them into tangible requirements. Resources useful for this include information and guidance provided by Data Protection Authorities and professional institutes. Information pertaining to the fulfilment of these requirements is required for compliance documentation.

The next step is to identify the information required to assess whether requirements have been met, and then generate constraints that (a) check for the presence of that information, and (b) verify its correctness. For the purposes of this paper, we focus on the legal basis of given consent, with a subset of the requirements and constraints presented in Table 1. Checking for the presence of information before verifying its correctness follows a closed-world assumption, where absence of information indicates non-compliance.
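The two-stage check described above can be sketched in plain Python. This is a minimal illustration rather than the implementation used in the paper: the `Constraint` structure, the `check` function, and the `consent_is_by_silence` field name are assumptions introduced here for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Constraint:
    """A requirement check over one field of a record (hypothetical structure)."""
    field: str
    is_correct: Callable[[Any], bool]


def check(record: dict, constraint: Constraint) -> str:
    # (a) Presence: under the closed-world assumption, missing information
    # counts as non-compliance rather than as "unknown".
    if record.get(constraint.field) is None:
        return "fail: missing"
    # (b) Correctness: evaluated only once the information is known to exist.
    if not constraint.is_correct(record[constraint.field]):
        return "fail: incorrect"
    return "pass"


# Hypothetical constraint: consent must not be given by silence.
not_by_silence = Constraint("consent_is_by_silence", lambda v: v is False)

for record in ({}, {"consent_is_by_silence": True}, {"consent_is_by_silence": False}):
    print(check(record, not_by_silence))
```

Separating the two outcomes keeps "not documented" distinguishable from "documented but non-compliant", which call for different follow-up actions.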

Constraints that verify correctness, or rather conformance, to requirements are required to be implemented based on underlying information representations (e.g. ontology). Some constraint assessments can be automated whereas others require human intervention, particularly where qualitative requirements are involved. For example, informed consent requires the request to be clear and unambiguous, which needs to be evaluated manually (see footnote 1).

A test for compliance contains verification of (one or more) constraints where results indicate compliance with identified requirements. By linking the constraint with relevant points or concepts within GDPR, it is possible to generate and document ‘coverage’ of compliance. For example, for constraints generated from identified requirements, by having their results linked to the GDPR, the number of tests passed indicates compliance with the set of linked GDPR points or articles.
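As a rough illustration of such coverage, the sketch below tallies passed and total tests per linked GDPR reference. The result tuples and the article labels attached to them are invented for the example and are not taken from the paper's test suite.

```python
from collections import defaultdict

# Hypothetical test results: (constraint name, linked GDPR reference, passed?)
results = [
    ("consent is not by silence", "A7-1", True),
    ("consent request is clear", "A7-2", False),
    ("consent record exists", "A7-1", True),
]


def coverage(results):
    """Return {gdpr_reference: (passed, total)} for the given test results."""
    tally = defaultdict(lambda: [0, 0])  # reference -> [passed, total]
    for _name, gdpr_ref, passed in results:
        tally[gdpr_ref][1] += 1
        if passed:
            tally[gdpr_ref][0] += 1
    return {ref: (p, t) for ref, (p, t) in tally.items()}


for ref, (passed, total) in sorted(coverage(results).items()):
    print(f"{ref}: {passed}/{total} tests passed")
```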

Constraints can be linked to each other to formulate dependency relationships. This can make testing for compliance more efficient by identifying common dependencies. It also allows creating logical groupings of related constraints. Such groupings can be based on functionality or relation to GDPR such as association with one concept or one specific article. For example, requirements for validity of consent are grouped from individual constraints for each requirement (e.g. clear, unambiguous), with requirements for explicit consent containing only additional constraints along with the group for valid consent.
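The grouping described above can be sketched as a small dependency expansion. The group and constraint names are hypothetical (`explicit_statement` in particular is a placeholder for whatever additional constraints explicit consent requires), and the sketch assumes the groups contain no cycles.

```python
# Hypothetical groups: a group may list atomic constraints or other groups.
GROUPS = {
    "valid_consent": ["clear", "unambiguous", "not_by_silence"],
    "explicit_consent": ["valid_consent", "explicit_statement"],
}


def expand(name, groups=GROUPS, seen=None):
    """Return the atomic constraints that `name` depends on, in order, without duplicates."""
    seen = [] if seen is None else seen
    if name in groups:
        # A group: expand each member in turn (assumes no cyclic groups).
        for member in groups[name]:
            expand(member, groups, seen)
    elif name not in seen:
        # An atomic constraint: record it once.
        seen.append(name)
    return seen


print(expand("explicit_consent"))
```

Because shared constraints are listed once, a constraint common to several groups only needs to be evaluated a single time.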

2.2 Model of Processes

Representing a model or template of processes as machine-readable metadata has advantages in terms of ex-ante verification of compliance. This allows creating constraints that specifically check whether the model of processes follows the requirements gathered from GDPR. This is distinct from verification of compliance using records or logs of processing, which constitutes ex-post compliance. For example, verifying whether the consent collection mechanism follows requirements for valid consent is done by representing the mechanism as a model and checking constraints associated with validity of given consent.

The model also allows testing for existence of internal processes regarding handling of data subject rights and data breaches. The metadata representation of the model enables creating a persistent snapshot of processes for planning, conducting an impact assessment (DPIA), and inspecting past compliance. Additionally, creating and testing a model allows abstraction of information common to instances, such as the notice or dialogue for consent, which is common to all or a significant number of data subjects. By abstracting such common information into the model of the process, actual instances of given consent need to be linked only with the relevant attributes and can refer to the model for more information regarding compliance.

Using models also makes the testing process more efficient in terms of reducing the number of tests to be conducted. If a model is verified to be compliant using prior testing, then its instances can be verified to be compliant using only the constraints specific to the instance. For example, when verifying compliance for processing using given consent as a legal basis, the validity of given consent also needs to be evaluated. By abstracting the model of collecting consent and verifying it to be compliant, the given consent used in processing is assumed to be valid. The only constraint that needs to be tested is therefore whether the processing is permitted based on the interpretation of given consent.
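A minimal sketch of this model-then-instance strategy follows. The dictionaries, keys, and constraint functions are assumptions made for illustration; in the paper the model and instances are RDF graphs rather than Python dictionaries.

```python
def validate_model(model, model_constraints):
    """Run every model-level constraint once; the verdict is stored for reuse."""
    return all(constraint(model) for constraint in model_constraints)


def validate_instance(instance, model_verdicts, instance_constraints):
    """Validate one given-consent instance, relying on the stored verdict of its model."""
    if not model_verdicts.get(instance["model"], False):
        return False  # unverified or non-compliant model: the instance cannot pass
    return all(constraint(instance) for constraint in instance_constraints)


# Hypothetical consent dialogue model and its validity constraints.
model = {"id": "dialogue-v1", "by_silence": False, "clear": True}
model_constraints = [lambda m: m["by_silence"] is False, lambda m: m["clear"] is True]
model_verdicts = {model["id"]: validate_model(model, model_constraints)}

# Only the instance-specific constraint remains: is the processing covered by consent?
instance_constraints = [lambda i: i["processing_purpose"] in i["consented_purposes"]]
instance = {
    "model": "dialogue-v1",
    "consented_purposes": {"Personalisation"},
    "processing_purpose": "Personalisation",
}
print(validate_instance(instance, model_verdicts, instance_constraints))
```

The model constraints run once per model version, while each instance is checked only against the constraints that depend on its own attributes.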

2.3 Testing and Documentation

The requirements and constraints by themselves are universal in that they can be expressed without dependence on any technology or information representation. Adapting constraints into a testing framework requires basing it on the underlying models and information representations. For example, where information is defined using RDF+OWL, the testing framework is created using relevant technologies that can query and validate RDF+OWL, such as SPARQL [19] and SHACL [9] respectively. In this case, the information format (RDF) itself enables the use of semantics, which assists in linking the information, constraints, and results with points of relevance within the GDPR. Where the underlying information format does not inherently support semantics, these can be added as metadata to the test results to link them with GDPR.

Having the information or metadata format be machine-readable and interoperable allows taking advantage of querying and validation. The testing framework needs to be aware of the vocabularies and technologies used to represent the information and should persist results using machine-readable metadata. Tests should be defined at a granular level to enable actionable constraints such as “personal data (category) should have a source”. These are then combined to create larger and more complex tests, which is similar to the creation of ‘unit’ tests and combining them into modules to test complex functionality. For example, testing whether personal data collected from users and shared with a third party with legal basis of consent adheres to given consent requires verification using constraints that test (a) the source of personal data (user), (b) the third party identity, (c) the legal basis, and (d) whether the processing matches the given consent.
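The composition of granular tests into a larger test, as in the data-sharing example above, might look like the following sketch. The dictionary keys and function names are hypothetical stand-ins for queries over the underlying data graph.

```python
# Granular 'unit' tests, one per constraint (a) to (d) in the example above.
def has_user_source(process):
    return process.get("data_source") == "user"


def has_third_party(process):
    return bool(process.get("third_party"))


def has_consent_basis(process):
    return process.get("legal_basis") == "consent"


def matches_consent(process):
    return process.get("purpose") in process.get("consented_purposes", ())


def run_composite(process, unit_tests):
    """Run each granular test; return (overall verdict, names of failing tests)."""
    failures = [test.__name__ for test in unit_tests if not test(process)]
    return (not failures, failures)


SHARING_TESTS = [has_user_source, has_third_party, has_consent_basis, matches_consent]

# Hypothetical process description with the third party left undocumented.
process = {
    "data_source": "user",
    "legal_basis": "consent",
    "purpose": "Personalisation",
    "consented_purposes": ["Personalisation"],
}
print(run_composite(process, SHARING_TESTS))
```

Reporting the names of the failing granular tests keeps the composite result actionable.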

The results of tests are associated with articles or concepts within GDPR based on the requirements used to generate constraints. Depending on the extent of machine-readable information used, it is possible to also include information such as (a) representation of processes, (b) testing constraints, (c) results of internal evaluations, and (d) text of GDPR. The end result of the testing process is a report that lists compliance with GDPR in the form of requirements (un-)fulfilled.

3 Demonstration Using Use-Case

3.1 Creating the Data Graph

For the use-case, we chose the consent mechanism on the quantcast.com website, depicted in Fig. 1, and modelled the data graph based on information presented in the consent dialogue and the website. The choice of website was made based on Quantcast being a provider of a GDPR consent collection mechanism using the IAB consent framework (see footnote 2). The website was also one of the few (to the authors’ knowledge) that allows changing/withdrawing consent using the same dialogue. We chose to include information from the website about analytics services provided by Quantcast as it uses personal data. More information on the creation of the data graph is available online (see footnote 3).

Fig. 1. Consent dialogues on quantcast.com (clockwise from top-left): (a) first screen, (b) default options on selecting “I Accept”, (c) default options on selecting “Show Purposes”, (d) third parties listed for purpose “Personalisation”

We used GDPRov (see footnote 4), which extends PROV-O [10] and P-Plan [3], to model personal data and consent workflows, and GConsent (see footnote 5) to model consent attributes and given consent. GDPRov allowed representing processes and personal data mentioned in the consent dialogue as models. GConsent allowed expressing consent using attributes such as medium and status. Where there was an overlap, such as for personal data and purpose, we used both to define the instance.

We collected personal data categories from the descriptions in the consent dialogue as well as other pages on the website describing various products and services offered by Quantcast. We defined the source of personal data as ‘user’ where data collection was mentioned in the consent dialogue, and ‘third party’ where explicitly defined. We defined processes for addressing the rights provided by GDPR using descriptions provided in the privacy policy. Where a URL or email address was provided regarding rights, we defined it as the IRI of the process for handling that right. We defined the IRI for DPO using the contact point provided in the policy.

We represented the consent collection mechanism on the website as an instance of gdprov:ConsentAcquisitionStep. This was defined as a step in the process QChoice representing the product Quantcast Choice. Similar processes were defined for Marketing, Advertisement, and Measurement identified from the information on the website. Each top-level description in the consent dialogue, e.g. Personalisation, was modeled as gdprov:Purpose and gc:Purpose with processing and personal data modeled from its description. The legal basis was defined using GDPRtEXT (see footnote 6) and was associated at the process (purpose) or step (processing) level. We used given consent as the legal basis for purposes mentioned in the consent dialogue and legitimate interest otherwise.

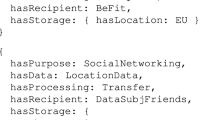

In the consent dialogue, the use of independent radio buttons was interpreted as allowing the user to consent and withdraw for each individual purpose, which was represented by creating separate instances of consent for each choice. We modelled the dialogue as an instance of gdprov:ConsentAgreementTemplateBundle consisting of several gdprov:ConsentAgreementTemplate instances to represent multiple individual consent entities. We had difficulty in interpreting the language used for third parties as it suggests the user is giving consent directly to third parties rather than to Quantcast. Pending clarification from legal experts, we chose to represent these as data recipients rather than as Controllers or Joint-Controllers for ease of testing. This allowed us to represent the data sharing processes in a concise manner with each purpose being associated with the hundreds of third parties listed in the consent dialogue rather than defining a separate consent representation for every third party. For testing, we defined an instance of given consent (see Fig. 2) which was then later withdrawn. All resources associated with the data, constraints, and queries are available online (see footnote 3).

3.2 Testing Data Graph for Compliance

We defined constraints over the data graph using SHACL and its extension SHACL-SPARQL [9]. For testing, we used the SHACL validator binary provided by TopBraid (see footnote 7). To distinguish between constraints that could be verified automatically and those that required manual consideration, we subclassed sh:NodeShape as AutomaticallyCheckedConstraint and ManuallyCheckedConstraint, where manual tests checked the value of boolean properties. For example, the value of consentIsBySilence indicates whether consent is given by silence, with the valid value being false (an xsd:boolean). The consent collection dialogue was considered as the input for manual tests regarding validity of consent. Appropriate result messages were associated with each constraint using sh:message. The property linkToGDPR was defined to link constraints with GDPR using GDPRtEXT. An example constraint is provided in Listing 1.1.
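To give a feel for what a manually checked constraint of this kind could look like, the sketch below generates Turtle text for a SHACL shape from Python. It is not the paper's Listing 1.1: the `ex:` namespace, the target class `ex:ConsentDialogue`, the placeholder `ex:GDPRReference`, and the helper `manual_boolean_shape` are assumptions, and the output has not been run through a SHACL validator. Only the `sh:` terms (`sh:targetClass`, `sh:property`, `sh:path`, `sh:minCount`, `sh:hasValue`, `sh:message`) come from the SHACL vocabulary.

```python
PREFIXES = "\n".join([
    "@prefix sh: <http://www.w3.org/ns/shacl#> .",
    "@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .",
    "@prefix ex: <http://example.org/constraints#> .",  # hypothetical namespace
    "",
    "ex:ManuallyCheckedConstraint rdfs:subClassOf sh:NodeShape .",
    "",
])


def manual_boolean_shape(name, target_class, prop, expected, message, gdpr_ref):
    """Return Turtle text for a manually checked shape over one boolean property."""
    value = "true" if expected else "false"  # bare Turtle booleans are xsd:boolean
    escaped = message.replace("\\", "\\\\").replace('"', '\\"')
    return "\n".join([
        f"ex:{name} a ex:ManuallyCheckedConstraint ;",
        f"    sh:targetClass {target_class} ;",
        f"    ex:linkToGDPR {gdpr_ref} ;",
        "    sh:property [",
        f"        sh:path ex:{prop} ;",
        "        sh:minCount 1 ;",  # presence check
        f"        sh:hasValue {value} ;",  # correctness check
        f'        sh:message "{escaped}" ;',
        "    ] .",
        "",
    ])


shape = manual_boolean_shape(
    "ConsentNotBySilence", "ex:ConsentDialogue", "consentIsBySilence",
    False, "Consent must not be given by silence.", "ex:GDPRReference",
)
print(PREFIXES + shape)
```

The `sh:minCount 1` line covers the presence check and `sh:hasValue` the correctness check, so a single shape reports both missing and incorrect information.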

For evaluation, we defined two sets of constraints following the outline provided in the approach described in Sect. 2. The first set validated instances of given consent against defined constraints, whereas the second set first validated the model of consent and then validated the instances of given consent using the validated model. For the second set, results from validating the model were persisted in the data graph in order to use them as input to validate given consent. A simple bash script was used to construct a pipeline that executed constraints and stored results as an RDF/Turtle file.

For ease of evaluation, we generated a combined data graph consisting of data from Quantcast and the ontologies used (GDPRov, GConsent, GDPRtEXT). We added this data graph along with results of SHACL validation to a triple-store (GraphDB Free Edition, see footnote 8) under separate graphs. We then executed SPARQL queries to query the data graph and generate reports.

We used three separate queries to facilitate different actions associated with compliance. The first query listed the distinct messages from failing tests as actionable items. The second query listed the compliance of applicable GDPR articles using links from constraints and their verification. The third query, shown in Listing 1.2, generated a test report, depicted in Table 2, containing the constraint description, type - automatic (A) or manual (M), link to GDPR, result - pass (P) or fail (F), node (instance in data graph), and failure message (not shown in table). The results from these queries were then used to generate a compliance report to document the state of maintaining compliance and actions required. The report contains results of queries related to compliance [16]. The documentation regarding creating the data graph, constraints, and testing, along with the SPARQL queries and generated report, is available online (see footnote 3; Fig. 3).
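The first and third queries can be approximated over in-memory records as below. This is a plain-Python stand-in for illustration, not the SPARQL of Listing 1.2; the `Result` fields mirror the report columns named above, and the sample values are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    description: str
    manual: bool
    gdpr_link: str
    passed: bool
    node: str
    message: str


def action_items(results):
    """Distinct failure messages in first-seen order (analogue of the first query)."""
    return list(dict.fromkeys(r.message for r in results if not r.passed))


def report_rows(results):
    """Rows of (description, A/M, GDPR link, P/F, node) (analogue of the third query)."""
    return [
        (r.description, "M" if r.manual else "A", r.gdpr_link,
         "P" if r.passed else "F", r.node)
        for r in results
    ]


# Hypothetical validation results for two consent instances.
results = [
    Result("consent has timestamp", False, "A7-1", True, "consent-1", ""),
    Result("consent has timestamp", False, "A7-1", False, "consent-2", "Timestamp missing"),
    Result("request is clear", True, "A7-2", False, "dialogue", "Review wording"),
    Result("consent has timestamp", False, "A7-1", False, "consent-3", "Timestamp missing"),
]

print(action_items(results))
for row in report_rows(results):
    print(row)
```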

4 Related Work

The approach presented in this paper acts on machine-readable metadata representation of processes and workflows associated with personal data and consent. An alternative to this is an approach that uses ODRL policies [5] for assessment of compliance using questions constructed from GDPR [1]. The ODRL policy consists of constraints classified as Feature, Discretional, and Dispensation with Rule used to specify them as Permission, Prohibition, or Duty. The policies are linked to the relevant text in GDPR using RDF properties similar to the use of GDPRtEXT in this paper. The questions are used in a tool that incorporates human feedback and generates an assessment report. This is useful to incorporate the manual testing requirements from our approach, as well as to present the results from validation as a feedback process.

The Scalable Policy-aware Linked Data Architecture For Privacy, Transparency and Compliance (SPECIAL) is a European H2020 project that provides a semantic-web framework for the generation of logs that enable ex-post GDPR compliance verification [8]. Their compliance engine can also be used to perform ex-ante compliance checks [2] using a model-based approach similar to the one advocated by GDPRov. The compliance assessment in SPECIAL focuses on determining whether the specified use of purposes, processes, and personal data is allowed by the specified legal basis such as consent. This can be incorporated in our approach to determine the validity of constraints related to use of given consent for data processing operations.

Other related work includes PrOnto [11], a legal ontology of concepts related to privacy agents, personal data types, processing operations, rights and obligations. Based on the examples shown in its associated publications, PrOnto can be used to define the underlying data graph and the constraints for compliance validation. The W3C Community Group for Data Protection Vocabularies and Controls (DPVCG, see footnote 9) is currently working on taxonomies for purposes, data processing, consent, personal data, technical and organisational measures, and legal basis, which will provide a vocabulary for the representation and documentation of such processes. Layered Privacy Language (LPL) [4] can be used to model privacy properties such as personal privacy, user consent, data provenance, and retention management for the GDPR, and can be used to define the constraints using its authorisation-based modeling.

5 Discussion

In this section, we provide a broad discussion of how our test-driven approach can be used as a practical tool by stakeholders and the challenges in its adoption for real-world cases. Considering that processes and activities in an organisation are traditionally documented without semantics, it could be tedious and cumbersome to adopt the semantic-web based framework described in this paper. However, as mentioned earlier, the test-based approach can also be used with existing representations by adding semantics to the test results and reports to link them with relevant information such as the articles in GDPR. This is also applicable towards persisting outputs of reports generated from tools [1] and conformity assessments (CAP) [6].

The advantages of representing processes with semantics go beyond testing for compliance, as representations of processes are also useful for planning of operations and internal documentation. Semantic representations of processes can assist in automating the generation of documentation such as privacy policies where processes are listed along with their purpose, legal basis, and use of personal data. Privacy policy generators that generate boilerplate policies exist online, but do not incorporate semantics. The use of semantics allows queryable machine-readable metadata that can be used in tools towards understanding and evaluating complex policies for users and authorities.

The modeling of third parties as data recipients in Sect. 3.1 shows the challenges in representing complexities when it comes to GDPR compliance. A report of cases regarding data protection [20] further shows instances where individual use-cases differ significantly, which could indicate that a universal ontology to represent such processes may not be feasible. A more practical approach could be to create taxonomies and use them in ontology design patterns for compliance. The DPVCG taxonomies could be used alongside existing ontologies to create compliance design patterns to address GDPR requirements. This follows open technological solutions such as the SPECIAL project that drive adoption of semantics in the regulatory compliance space.

6 Conclusion

This paper demonstrates the benefits of using a test-driven approach towards maintaining ongoing GDPR compliance by using semantic representations of processes. The approach generates and checks constraints for adherence to GDPR requirements and persists the results towards compliance documentation. The prototype demonstration provides an example of testing using a real-world website’s consent mechanism using previously-published ontologies to represent processes (GDPRov), consent (GConsent), and GDPR (GDPRtEXT), with SHACL used to test requirement constraints.

In conclusion, the generation of compliance reports by incorporating semantics into the testing process is useful to maintain and document the state of compliance at a given time as well as to demonstrate the ongoing compliance for changes to the data processes within an organisation. While the demonstration in this paper only covers a small set of requirements for GDPR, namely those associated with given consent, it is sufficient to demonstrate the value of the approach and the use of semantics for compliance.

Notes

- 1.

While it may be possible to use NLP-based approaches to evaluate the complexity of language to determine whether it is clear and unambiguous, such approaches cannot be assumed to be universally applicable, and therefore require a manual assessment.

- 2.

IAB Transparency and Consent Framework https://advertisingconsent.eu/.

- 3.

Paper and Resources https://w3id.org/GDPRep/semantic-tests.

- 4.

GDPRov Ontology https://w3id.org/GDPRov.

- 5.

GConsent Ontology https://w3id.org/GConsent.

- 6.

GDPRtEXT Ontology and Resource https://w3id.org/GDPRtEXT.

- 7.

TopBraid SHACL https://github.com/TopQuadrant/shacl/.

- 8.

GraphDB Triple-Store http://graphdb.ontotext.com/.

- 9.

References

Agarwal, S., Steyskal, S., Antunovic, F., Kirrane, S.: Legislative compliance assessment: framework, model and GDPR instantiation. In: Medina, M., Mitrakas, A., Rannenberg, K., Schweighofer, E., Tsouroulas, N. (eds.) APF 2018. LNCS, vol. 11079, pp. 131–149. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-02547-2_8

Fernández, J.D., Ekaputra, F.J., Ruswono, P., Kiesling, E., Azzam, A.: Privacy-aware linked widgets. In: 1st Workshop on Fairness, Accountability, Transparency, Ethics, and Society on the Web. In Conjunction with The Web Conference 2019, p. 8 (2019)

Garijo, D., Gil, Y.: The P-Plan Ontology, March 2014. http://vocab.linkeddata.es/p-plan/

Gerl, A., Bennani, N., Kosch, H., Brunie, L.: LPL, towards a GDPR-compliant privacy language: formal definition and usage. In: Hameurlain, A., Wagner, R. (eds.) Transactions on Large-Scale Data- and Knowledge-Centered Systems XXXVII. LNCS, vol. 10940, pp. 41–80. Springer, Heidelberg (2018). https://doi.org/10.1007/978-3-662-57932-9_2

Iannella, R., Villata, S.: ODRL Information Model 2.2, February 2018. https://www.w3.org/TR/odrl-model/

Kamara, I., Leenes, R., Lachaud, E., Stuurman, K., van Lieshout, M., Bodea, G.: Data protection certification mechanisms - study on articles 42 and 43 of the Regulation (EU) 2016/679. Technical report, Directorate -General for Justice and Consumers, Unit C.3 Data Protection and Unit C.4 International Data Flows and Protection, February 2019

Kingston, J.: Using artificial intelligence to support compliance with the general data protection regulation. Artif. Intell. Law 25(4), 429–443 (2017). https://doi.org/10/gfxvtc, https://doi.org/10.1007/s10506-017-9206-9

Kirrane, S., et al.: A scalable consent, transparency and compliance architecture. In: Gangemi, A., et al. (eds.) ESWC 2018. LNCS, vol. 11155, pp. 131–136. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-98192-5_25

Knublauch, H., Kontokostas, D.: Shapes Constraint Language (SHACL). https://www.w3.org/TR/shacl/

Lebo, T., et al.: PROV-O: The PROV Ontology (2013)

Palmirani, M., Martoni, M., Rossi, A., Bartolini, C., Robaldo, L.: PrOnto: privacy ontology for legal reasoning. In: Kő, A., Francesconi, E. (eds.) EGOVIS 2018. LNCS, vol. 11032, pp. 139–152. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-98349-3_11

Pandit, H.J., Debruyne, C., O’Sullivan, D., Lewis, D.: GConsent - a consent ontology based on the GDPR. In: Hitzler, P., et al. (eds.) ESWC 2019. LNCS, vol. 11503, pp. 270–282. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-21348-0_18

Pandit, H.J., Fatema, K., O’Sullivan, D., Lewis, D.: GDPRtEXT - GDPR as a linked data resource. In: Gangemi, A., et al. (eds.) ESWC 2018. LNCS, vol. 10843, pp. 481–495. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-93417-4_31

Pandit, H.J., Lewis, D.: Modelling provenance for GDPR compliance using linked open data vocabularies. In: Proceedings of the 5th Workshop on Society, Privacy and the Semantic Web - Policy and Technology (PrivOn2017) (PrivOn) (2017). http://ceur-ws.org/Vol-1951/PrivOn2017_paper_6.pdf

Pandit, H.J., O’Sullivan, D., Lewis, D.: Exploring GDPR compliance over provenance graphs using SHACL. In: Proceedings of the Posters and Demos Track of the 14th International Conference on Semantic Systems co-located with the 14th International Conference on Semantic Systems (SEMANTiCS 2018), Vienna, Austria (2018). http://ceur-ws.org/Vol-2198/paper_120.pdf

Pandit, H.J., O’Sullivan, D., Lewis, D.: Queryable provenance metadata For GDPR compliance. In: Procedia Computer Science. Proceedings of the 14th International Conference on Semantic Systems 10th - 13th of September 2018 Vienna, Austria, vol. 137, pp. 262–268, January 2018. http://doi.org/10/gfdc6r, http://www.sciencedirect.com/science/article/pii/S1877050918316314

Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation). Off. J. Eur. Union L119, 1–88, May 2016. http://eur-lex.europa.eu/legal-content/EN/TXT/?uri=OJ:L:2016:119:TOC

Schiffner, S., et al.: Towards a roadmap for privacy technologies and the general data protection regulation: a transatlantic initiative. In: Medina, M., Mitrakas, A., Rannenberg, K., Schweighofer, E., Tsouroulas, N. (eds.) APF 2018. LNCS, vol. 11079, pp. 24–42. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-02547-2_2

SPARQL 1.1 Query Language. https://www.w3.org/TR/sparql11-query/

Zanfir-Fortuna, G.: Processing personal data on the basis of legitimate interests under the GDPR: Practical Cases. Technical report, Nymity (2018)

Acknowledgements

This work is supported by the ADAPT Centre for Digital Content Technology which is funded under the SFI Research Centres Programme (Grant 13/RC/2106) and is co-funded under the European Regional Development Fund.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2019 The Author(s)

About this paper

Cite this paper

Pandit, H.J., O’Sullivan, D., Lewis, D. (2019). Test-Driven Approach Towards GDPR Compliance. In: Acosta, M., Cudré-Mauroux, P., Maleshkova, M., Pellegrini, T., Sack, H., Sure-Vetter, Y. (eds) Semantic Systems. The Power of AI and Knowledge Graphs. SEMANTiCS 2019. Lecture Notes in Computer Science(), vol 11702. Springer, Cham. https://doi.org/10.1007/978-3-030-33220-4_2

DOI: https://doi.org/10.1007/978-3-030-33220-4_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-33219-8

Online ISBN: 978-3-030-33220-4

eBook Packages: Computer Science, Computer Science (R0)