Abstract

Approximately 3% of live newborn children suffer from cleft lip syndrome. Technology can be used in the medical area to help treatment for patients with Congenital Facial Abnormalities in cases with oral fissures. Facial dysmorphism classification level is relevant to physicians, since there are no tools to determine its degree. In this paper, a mobile application is proposed to process and analyze images, implementing the DLIB algorithm to map different face areas in healthy pediatric children, and those presenting cleft lip syndrome. Also, two repositories are created: 1. Contains all extracted facial features, 2. Stores training patterns to classify the severity level that the case presents. Finally, the Dendrite Morphological Neural Network (DMNN) algorithm was selected according to different aspects such as: one of the highest performance methods compared with MLP, SVM, IBK, RBF, easy implementation used in mobile applications with the most efficient landmarks mapping compared with OpenCV and Face detector.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Mobile computing has been developed particularly and emphatically towards practical processes that aim to be 100% functional. In technological contexts, there has been an attempt to improve the indicators of the respective uses of mobile applications in the health sector among the clinical services offered. A concrete example is to present cases of patients with some physical irregularity in the face, known as Facial Dysmorphia (FD), caused by poor fusion in the tissues that generate the upper lip during embryonic development.

Size, shape and location of the separations vary enormously. In some cases, newborns who suffer from this anomaly, have a small nick in the upper lip, others have a separation that goes up to the base of the nose, and such separation in the middle of the lip [1] technological advances of today have given rise to a variety of algorithms that allow us to identify faces of human beings, extract relevant information for facial processing, analysis and landmarks [2]. In literature there are techniques of Artificial Intelligence (AI) [3,4,5] and [6] with technology advances support [7], it is possible to solve problems of this nature [8]; achieving interpretation of properties and comparison with databases, to recognize objects or people in controlled environments. Thus, participating in the health sector [9,10,11].

Unfortunately, in the health sector and pediatric clinical services that attend to cases of patients with some Congenital Facial Abnormality (CFA), there is no portable application accurate enough for physiognomic level severity assessment so as not to depend on traditional systems based on subjective clinical observations by physicians. Nor is there a repository with physiognomic information extracted from portraits of newborn children up to the age of 12 months, healthy or with some facial dysmorphia, specifically on the lips. To carry out investigations to address the problem above, we present a mobile application for Android that uses DLIB library, tool that contains a wide range of open source machine learning algorithms, through a C++ API, with a applications diversity (object and face recognition, face pose estimator, and so on) [12].

This algorithm is used mainly to map face dots (landmarks) and from them generate a repository with characteristic features of individuals without FD, or with some facial anomaly given the degree of asymmetry present in North and Central America, with which the tool manages to determine if a person presents facial recognition patterns with irregular measurements. In short, the objective of the proposal is that the database be used in future research, that can impact health, technology and science sectors.

This work aims to raise awareness among specialists in different areas (Systems, computing and related areas) about the importance of carrying out relevant studies in this type of diagnostics, thus involving public and private health sector institutions. The rest of this article is structured as follows: Sect. 2 gives state-of-the-art, general and relevant overview of the proposal. Section 3 presents method, phases and repository main components. Section 4 focuses on experimental results where the app with classification method used in the recognition process is tested and compared with other classifiers. Section 5 shows results, discussions and conclusions towards future works.

2 Related Work

OpenCV is a recognized algorithm to solve emotional recognition problems based on facial expressions, object detection, point mapping, and so on. The first task was to find an algorithm to map the face efficiently; it was observed that OpenCV presented irregularities in some adult’s face areas. Also, algorithms are used to extract the face features, and another to obtain its value [13,14,15]. Our research uses an algorithm that map, extract its value and presented the best result compared with other algorithms used in our propose. The AI use techniques such as Support Vector Machine (SVM), k-Nearest Neighbors (KNN), Multilayer Perceptron (MLP), and so on. To classify emotions [3,4,5,6, 13, 14, 16], Dendrite Morphological Neural Network (DMNN) to a nontrivial problem (atrophy in upper lip) were used.

Also, landmarks have been used for facial expressions recognition [13, 14, 17], facial asymmetry [17, 18] specifically with cleft lip [19] y [20]; each algorithm considers a different number of points adapted to the problem. However, in [21], it makes a comparison of approach errors to map 37, 68, 84 and 194 points; and it is seen that it will be more precise that with more points in the curves than in the complete form. Other tasks such as [5, 6, 8, 21] besides the anthropometric and geometric points [22], propose implementing morphometric measurements (distances or angles) [6, 22]. Unfortunately, our investigation on Euclidean distance and angles applied to open cleft lip problems was not functional. Most of these investigations merge different techniques (learning, landmarks, distance metrics, analysis methods, among others) to improve response accuracy. The proposed method uses: 68 landmarks, 8 of these are key points, computation of distances in radians and a method to classify facial asymmetry level of infants with open cleft lip.

3 Proposed Method

3.1 Preprocessing and Features Extraction

The requirements of the app, the repository and the descriptive features will be identified to train and classify the type of facial dystrophy of those pediatric individuals who present the diagnosis (cleft lip), described below:

Software and hardware requirements. Android 5.0 Operating System (Lollipop), image normalization with: 2-6 Mega pixels (MP) resolution, format (jpg., png., bmp.). Avoid object saturation, such as: landscapes, portraits, objects, among others. Newborns photographs: healthy ones and others suffering from the syndrome, specifically cleft lip (0 and 12 months). Areas of interest: Face limit- It refers to the facial limits, that is. Nose– Central axis (lobule) for this analysis purpose. Lips– Considering the dividing line between upper and lower lips. Landmarks map: The drawing process was made with DLIB facial detection algorithm [12], detecting 68 points on digital image (Table 1), and draws the faces (frontal, inclined or lateral).

Images analysis process. An image will be selected to graph the landmarks using different colors (blue, red, yellow, magenta and green; outer limit of cheeks, eyebrows, eyelid area, nose width and height, lower and upper lip, respectively).

Repository construction will be generated containing records with 142 properties (Table 1), obtained from images of healthy children; plus others with facial dysmorphism and infants who had the disease and who underwent surgery in more than two sessions, depending on the type of condition, which will be used for the normalization, classification and to determine the three levels of asymmetry addressed in this article. The Table 1 first column, represent the total attributes, each point labeled with the recovered landmarks in 2D P(x, y), represented in Cartesian plane (right and upper side (+), left and lower side (−)), and the two subsequent columns indicate the landmark number and its description.

3.2 Normalization and Feature Extraction

The normalization process performs a Cartesian to polar \( \left( {x,y} \right) \to \) \( \left( {r,\theta } \right) \). The coordinates \( \left( {x,y} \right) \) represent the geometric location of the extracted facial points, \( r = \rho \) also known as rho, represents the distance between the origin (landmark 31) and the interest points (upper lip landmarks) and \( \theta \) is the elevation angle between polar axis (landmark 31) and the object. Then, we get \( \rho \) by means of:

Now, we will obtain a vector of \( \theta \) associated with location of \( \left( {x,y} \right) \) corresponding to the interest area points (upper lip) in a range of \( - \pi \) to \( \pi \), thus

Where \( atan2_{i} \) obtains the distances (represented in angles as radian unit measurements) between the point 31 and the points of the upper lip (Table 1). Each set is in \( a_{ji} \). After that, pair point differences are computed by Eq. (3).

Where \( d_{ji} \) represents the differences, \( a \) has all the measures Eq. (2) with respect to the polo (31), j = n records, i = n pair groups, \( k_{1} \) y \( k_{2} \) indicate the landmark number. Finally, We compute by arithmetical mean of differences, thus

3.3 Training and Classification

The training process of a classifying method requires identifying the attribute \( C_{j} \) to classify properly. Then, we delimit the proper classification ranges of each type by Eq. (4).

Where max and min gets the upper and lower limit of the means \( M_{j} \). The ranges denoted by \( R_{j} \) (Table 2). To label each record by class name, thus obtains

Table 2 shows the asymmetry level; we consider 3 class (\( C_{j} \)): Severe \( {\text{C}}_{0} = S \): physical features determining notorious facial amyotrophy presence, Moderate \( {\text{C}}_{1} = M \): People who have been surgically treated due to facial disease presence, almost null \( {\text{C}}_{2} = N \): No physical features determining facial amyotrophy. The classification stage uses the ranges (Table 2) obtained from the Eq. (6) to perform and accurate classification.

The document (.csv) for training, will be composed of 8 attributes: \( d_{j1} = \left( {49,53} \right),d_{j2} = \left( {50,52} \right),d_{j3} = \left( {61,63} \right),d_{j4} = \left( {67,65} \right),a_{j5} = \left( {51} \right),a_{j6} = (62) \), \( M_{j} \) and \( {\text{C}}_{j} \), each with 105 cases of healthy children, another 35 with facial dysmorphism and 20 infants who had the disease or underwent surgery in more than two sessions depending on the type of condition.

4 Experimental Results and Discussions

The interface GUI is composed of four modules: picture take.– in order to capture and store file; portrait analysis.– to normalize and analyze photographs using the facial detection algorithm from an intelligent device and save information in the BD; Create file.– Retrieve relevant data to create file with the necessary attributes for training. Files compression. – The functionality of this module is evaluated by the end user by coordinating the number of documents to be compressed. Finally, modeling controls generated errors and authors involved. With the above, it is possible using the DLIB algorithm [12] to analyze the captured image. For the analysis process first, an image is selected to graph the landmarks (Fig. 1) using different colors (blue, red, yellow, magenta and green; outer limit of cheeks, eyebrows, eyelid area, nose width and height, lower and upper lip, respectively).

The estimation stage shows the user the case number examined, the value of the arithmetic mean corresponding to the current image, and the facial severity level of the selected case (Fig. 1). Finally, it generates a file in the indicated format. The drawing process was made using DLIB facial detection algorithm; the selection of this detector was due to its better performance in comparison with OpenCV and Face Detector which showed great instability when working with images with these characteristics.

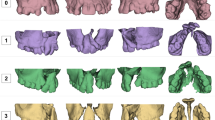

The proposed application “Queiloquisis”, was tested using point location process and facial detection algorithm (DLIB) generating 68 landmarks, on different banks of 140 images [1], from Faces + [23], New México Cleft Palate Center [24], and St. Louis Children’s Hospital [25, 26]. The rest of the images used in the investigation were captured from a mobile device. Figure 2 shows the healthy individuals images mapping and who present the disease known as open cleft lip and Fig. 3 cases presenting of the disease with several surgical interventions.

The face axes are stored by coordinates x and y. Therefore, the positions of the points (Fig. 2) cover a dimension of the face from 0 to 100 pixels (left side) and from 0 to 250 pixels (right side), resulting in a very variable proportion, which was controllable. Another aspect of determination when selecting the facial recognition algorithm was based on high performance, reflected when analyzing the total samples, reaching an error margin of 4%, 15% and 18% with the DLIB algorithm, OpenCV and Face Detector, respectively. Since the facial detection algorithm (DLIB) is not able to determine the facial atrophy level, a model is implemented for interpretation.

Experiments are carried out and conjectures of distance between two points are discarded, due to the irregularities of the face (curves impossible to be drawn in Cartesian coordinates). When analyzing a healthy person photograph, the distance between the right corner of the lip and the left side in relation to the nose lobe is \( 3.459 \ldots n \) and \( 5.686 \ldots n \) units, respectively, generating an imbalance that directly alters the result. However, when performing the above analysis (Eqs. 1–4), the result is \( 1.543 \ldots n \) and \( 1.540 \ldots n \) units. With these values it is demonstrated that the normalization process is adequate to the research needs, generating good results.

Table 3 shows the results with Weka and the used classification methods, applying a dataset normalized (Eqs. 1–6). For the MLP, a sigmoid polynomial function, five hidden layers, moment = 0.2 and learning rate = 0.3. For SVM, binary classifier with polynomial kernel of degree 2 was used. K-nearest neighbors IBK, Euclidean distance search algorithm and k = 1. For Radial Basis Function RBF, with 2 Clusters and normalized Gaussian. For DMNN, M = 0.25 and 23 dendrites; technique recently proposed by [27] and used in other experiments such as [28, 29].

The Table 3 demonstrate the performance on the DMNN improves the results obtained and shows the classification error when manipulating a dataset with these characteristic, to have an estimated error generalization, 100%, 80% and 70% of data for training and the other samples were used for testing on each of the 3 class were used.

The results of most of the algorithms used show a high performance. Therefore, out of the four, the best DMNN is implemented in the application to determine image symmetry level (Fig. 1). In addition, to know the performance of the classification technique, performance metrics are generated, considering 3 classes, see Table 4.

Table 5 is generated achieving a statistical Kappa of 92.86% and a correct data classification rate of 96% (overall accuracy). To get a better evaluation of the model, different metrics are considered, reaching a true positives rate of 96%, demonstrating a classification model good performance.

We conclude this section, emphasizing that this research focus does not imply representing or showing which side represents more asymmetry, but the level of asymmetry that a case presents.

5 Conclusions and Future Works

This work depends on the medical area, facilitating the analysis process and classification asymmetry level in images of pediatric patients within and without congenital facial diseases, improving the response time, efficiency, capacity, execution level, quality control and above all a control of information to be abundant and accurate. This allowed us to have a broader view of the latent needs in this field.

The relevance of this document lies in four elements: (a) implementation of the facial detector (DLIB), algorithm with better performance compared to OpenCV and Face detector; (b) Images normalization for a better analysis, (c) Distance calculations converted to radians to obtain a high margin of reliability, ensuring useful results, reliable and functional for the health sector and (d) The classifier method DMNN was created recently and this word demonstrated the best performance in infant facial asymmetry classification with cleft lip.

Finally, this article showed the research potential, making feedback possible in: (a) the understanding of training and the pediatric facial dysmorphic syndrome classification, (b) the scarcity of relevant information in this diagnosis; reason for which a repository with features and characteristic patterns of the condition (cleft lip) were built. It should be noted that this research does not only point towards this line of work, but it could also be directed to new investigations such as: (a) address this diagnosis in the bilateral symptom, (b) perform tests with other conjectures, (c) develop a hybrid application, and (d) create a shared information center (server, cloud, etc.) allowing for data linking among mobile devices running the application.

References

Christopher, D.: http://www.drderderian.com. Accessed 11 Feb 2018

Liu, M., et al.: Landmark-based deep multi-instance learning for brain disease diagnosis. Med. Image Anal. 43, 157–168 (2018)

Tang, X., et al.: Facial landmark detection by semi-supervised deep learning. Neurocomputing 297, 22–32 (2018). https://doi.org/10.1016/j.neucom.2018.01.080

Li, Y., et al.: Face recognition based on recurrent regression neural network. Neurocomputing 297, 50–58 (2018). https://doi.org/10.1016/j.neucom.2018.02.037

Deng, W., et al.: Facial landmark localization by enhanced convolutional neural network. Neurocomputing 273, 222–229 (2018). https://doi.org/10.1016/j.patrec.2016.07.005

Haoqiang, F., Erjin, Z.: Approaching human level facial landmark localization by deep learning. Image Vis. Comput. 47, 27–35 (2016). https://doi.org/10.1016/j.imavis.2015.11.004

Smith, M., et al.: Continuous face authentication scheme for mobile devices with tracking and liveness detection. Microprocess. Microsyst. 63, 147–157 (2018). https://doi.org/10.1016/j.micpro.2018.07.008

Barman, A., Paramartha, D.: Facial expression recognition using distance and shape signature features. Pattern Recogn. Lett. 000, 1–8 (2017). https://doi.org/10.1016/j.procs.2017.03.069

Josué Daniel, E.: Makoa: Aplicación para visualizar imágenes médicas formato DICOM en dispositivos móviles iPad. http://132.248.52.100:8080/xmlui/handle/132.248.52.100/7749. Accessed 22 Apr 2017

Jones, A.L.: The influence of shape and colour cue classes on facial health perception. Evol. Hum. Behav. 19–29 (2018). https://doi.org/10.1016/j.evolhumbehav.2017.09.005

Mirsha, et al.: Effects of hormonal treatment, maxilofacial surgery orthodontics, traumatism and malformation on fluctuating asymmetry. Revista Argentina de Antropología Biológica 1–15 (2018). https://doi.org/10.1002/ajhb.20507

DLIB: (2008). http://dlib.net/. Accessed 25 Oct 2017

Yang, D., et al.: An emotion recognition model based on facial recognition in virtual learning environment. Procedia Comput. Sci. 125, 2–10 (2018). https://doi.org/10.1016/j.procs.2017.12.003

Tarnowski, P., et al.: Emotion recognition using facial expressions. Procedia Comput. Sci. 108, 1175–1184 (2017)

Al Anezi, T., Khambay, B., Peng, M.J., O’Leary, E., Ju, X., Ayoub, A.: A new method for automatic tracking of facial landmarks in 3D motion captured images (4D). Int. J. Oral Maxillofac. Surg. 42(1), 9–18 (2013)

Fan, H., Zhou, E.: Approaching human level facial landmark localization by deep learning. Image Vis. Comput. 47, 27–35 (2016)

Liu, S., Fan, Y.Y., Guo, Z., Samal, A., Ali, A.: A landmark-based data-driven approach on 2.5D facial attractiveness computation. Neurocomputing 238, 168–178 (2017). https://doi.org/10.1016/j.neucom.2017.01.050

Economou, S., et al.: Evaluation of facial asymmetry in patients with juvenile idiopathic arthritis: Correlation between hard tissue and soft tissue landmarks. Am. J. Orthod. Dentofac. Orthop. 153(5), 662–672 (2018). https://doi.org/10.1016/j.ajodo.2017.08.022

Hallac, R.R., Feng, J., Kane, A.A., Seaward, J.R.: Dynamic facial asymmetry in patients with repaired cleft lip using 4D imaging (video stereophotogrammetry). J. Cranio-Maxillo-Fac. Surg. 45, 8–12 (2017). https://doi.org/10.1016/j.jcms.2016.11.005

Al-Rudainy, D., Ju, X., Stanton, S., Mehendale, F.V., Ayoub, A.: Assessment of regional asymmetry of the face before and after surgical correction of unilateral cleft lip. J. Cranio-Maxillofac. Surg. 46(6), 974–978 (2018)

Chen, F., et al.: 2D facial landmark model design by combining key points and inserted points. Expert Syst. Appl 7858–7868 (2015). https://doi.org/10.1016/j.eswa.2015.06.015

Vezzetti, E., Marcolin, F.: 3D human face description: landmarks measures and geometrical features. Image Vis. Comput. 30, 698–712 (2012). https://doi.org/10.1016/j.imavis.2012.02.007

Faces +, Faces + (Cirugía plástica, dermatología, piel y láser) de San Diego, California. http://www.facesplus.com/procedure/craniofacial/congenital-disorders/cleft-lip-and-palate/#results. Accessed 02 Mar 2017

New Mexico Cleft Palate Center. http://nmcleft.org/?page_id=107. Accessed 02 Mar 2017

Pediatric Plastic Surgery: Hospital de niños en St. Louis. http://www.stlouischildrens.org/our-services/plastic-surgery/photo-gallery. Accessed 10 Mar 2017

My UK Healt Care: Hospital de niños en Kentucky. http://ukhealthcare.uky.edu/kch/liau-blog/gallery/. Accessed 22 Mar 2017

Sossa, H., Guevara, E.: Efficient training for dendrite morphological neural networks. Neurocomputing 132–142 (2014). https://doi.org/10.1016/j.neucom.2013.10.031

Vega, R., et al.: Retinal vessel extraction using lattice neural networks with dendritic processing. Comput. Biol. Med. 20–30 (2015). https://doi.org/10.1016/j.compbiomed.2014.12.016

Zamora, E., Sossa, H.: Dendrite morphological neurons trained by stochastic gradient descent. Neurocomputing 420–431 (2017). https://doi.org/10.1016/j.neucom.2017.04.044

Acknowledgements

Griselda Cortés and Mercedes Flores wish to thank COMECYT and Tecnológico de Estudios Superiores de Ecatepec (TESE) for its support in development of this project. The authors also thank Juan C. Guzmán, Itzel Saldivar and Diana López for their work and dedication to this project with which they aim to obtain the degree in computer systems engineering in TESE.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Cortés, G., Villalobos, F., Flores, M. (2019). Asymmetry Level in Cleft Lip Children Using Dendrite Morphological Neural Network. In: Carrasco-Ochoa, J., Martínez-Trinidad, J., Olvera-López, J., Salas, J. (eds) Pattern Recognition. MCPR 2019. Lecture Notes in Computer Science(), vol 11524. Springer, Cham. https://doi.org/10.1007/978-3-030-21077-9_22

Download citation

DOI: https://doi.org/10.1007/978-3-030-21077-9_22

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-21076-2

Online ISBN: 978-3-030-21077-9

eBook Packages: Computer ScienceComputer Science (R0)