Abstract

Imbalance classification requires to represent the input data adequately to avoid biased results towards the class with the greater samples number. Here, we introduce an enhanced version of the famous twin support vector machine (TWSVM) classifier by incorporating an extended dual formulation of its quadratic programming optimization. Besides a centered kernel alignment (CKA)-based representation is used to avoid data overlapping. In particular, our approach, termed enhanced TWSVM (ETWSVM), allows representing the input samples in a high dimensional space (possibly infinite) after reformulation of the TWSVM dual form. Obtained results for binary classification demonstrate that our ETWSVM can reveal relevant data structures diminishing overlapping and biased classification results under imbalance scenarios. Moreover, ETWSVM notably adopts the lowest computational cost for training in comparison to state-of-the-art methods.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Imbalanced data refer to datasets in which one of the classes have a higher number of samples than the others. The class with the highest number of samples is called the majority class, while the class with the lowest number of samples is known as the minority class. Traditional classification models tend to deal with the minority class as noise, that is, the minority class inputs are deemed as rare patterns. Besides, in several applications, i.e., natural disaster prediction, cancer gene expression discrimination, fraudulent credit card transactions, the non-identification of minority class samples yields to a massive cost [1].

Some machine learning approaches have been developed in the past decade to deal with imbalanced data, most of which have been based on sampling techniques, ensemble methods, and cost-sensitive learning [2]. The sampling techniques are applied over data to balance the number of samples between classes; this is done by eliminating samples from the majority class (under-sampling) and/or creating synthetic samples of the minority one (over-sampling). However, these techniques can generate a loss of information by eliminating majority class samples, or overfitting due to the redundant information through generating synthetic minority class inputs [3]. Secondly, ensemble methods split the set of the majority class in several subsets with a size equal to the minority set. Then, this train as many classifiers as the number of majority class subsets. These approaches usually archive competitive performance; but, the computational cost is enormous since the number of classifiers to train [4]. In turn, cost-sensitive approaches modify the learning algorithm or the cost function by penalizing the misclassification of the minority class samples. Nevertheless, these techniques lead to overfitting, since it tends to bias the minority class [5]. In recent years, a twin support vector machines (TWSVM)-based algorithms take advantage of their generalization capacity and their low computational cost by constructing two non-parallel hyperplanes instead of only one hyperplane as the traditional support vector machine (SVM) [6]. Some TWSVM’s extensions include a structural data representation a favor the generalization capacity of the classifier [7]. Additionally, TWSVM-based approaches have been proposed to counter the imbalanced data effect combining resampling techniques, coupling a between-class discriminant algorithm, and weighting the primal problem to prevent the overtraining [8, 9]. Nonetheless, most of the TWSVM-based approaches do not include a suitable reproducing kernel Hilbert space (RKHS)-based representation, being difficult to incorporate an incorporate kernel approach within the optimization. Thus, they neglect the intrinsic formulation and virtues of a dual problem regarding kernel methods.

In this work, we propose a TWSVM-based cost-sensitive method that make a kernel-based enhancement of the TWSVM for imbalance data classification (ETWSVM), which allows representing the input samples in a high dimensional space. Also, we use a centered kernel alignment (CKA) method [10] with the objective of learning a kernel mapping to counteract inherent imbalanced issues, reduce the computational time and enhance the data representation. As benchmarks, we test the standard SVM classifier [11], a support vector machine with slack variables regulated [5], a SMOTE with SVM [12], and a weighted Lagrangian twin support vector machine (WLTSVM) [13] for binary classification. Results obtained from benchmarks databases of the state-of-the-art show that our approach outperforms the baseline methods concerning both the accuracy and the geometric mean-based classification assessment.

The remainder of this paper is organized as follows: Sect. 2 describes the material and methods of the approach proposed for classification. Sections 3 and 4 describe the experimental set-up and the results obtained, respectively. Finally, the concluding remarks are outlined in Sect. 5.

2 Enhanced TWSVM

Nonlinear Extension of the TWSVM Classifier. Let  be an input training set, where each sample \({\varvec{x}}_n\) can belong to the minority class matrix

be an input training set, where each sample \({\varvec{x}}_n\) can belong to the minority class matrix  (

( ), or to the majority class matrix

), or to the majority class matrix  (

( ), being P the number of features and

), being P the number of features and  the number of samples. The well-know extension of the SVM classifier, termed twin support vector machine (TWSVM), relaxes the SVM’s quadratic programming optimization (QPP) by employing two non-parallel hyperplanes, as follows:

the number of samples. The well-know extension of the SVM classifier, termed twin support vector machine (TWSVM), relaxes the SVM’s quadratic programming optimization (QPP) by employing two non-parallel hyperplanes, as follows:  , where

, where  is a normal vector concerning \(f^\ell ({\varvec{x}})\), and

is a normal vector concerning \(f^\ell ({\varvec{x}})\), and  is a bias term. Traditionally, the non-linear extension of the TWSVM includes a kernel within the discrimination function as

is a bias term. Traditionally, the non-linear extension of the TWSVM includes a kernel within the discrimination function as  , where

, where  is the n-th element of the vector

is the n-th element of the vector  , and

, and  is a kernel function. Nonetheless, such a non-linear extension does not consider directly a reproductive kernel Hilbert space (RKHS) within the empirical risk minimization-based cost function of the TWSVM, that is to say, it does not consider a mapping to a high dimensional (possible infinite) feature space.

is a kernel function. Nonetheless, such a non-linear extension does not consider directly a reproductive kernel Hilbert space (RKHS) within the empirical risk minimization-based cost function of the TWSVM, that is to say, it does not consider a mapping to a high dimensional (possible infinite) feature space.

So, we introduce a non-linear extension of the TWSVM, termed NTWSVM, optimization functional towards the mapping  , where

, where  . Thereby, the hyperplanes can be rewritten as:

. Thereby, the hyperplanes can be rewritten as:  . In particular, each hyperplane plays the role of a one-class classifier, aiming to enclose its corresponding class. Next, the NTWSVM’s primal form yields:

. In particular, each hyperplane plays the role of a one-class classifier, aiming to enclose its corresponding class. Next, the NTWSVM’s primal form yields:

where

is a slack variable vector for the l-th class;

is a slack variable vector for the l-th class;  are regularization parameters, the first one regularizes the slack variables and the second one the model parameters \(\hat{{\varvec{w}}}^\ell \) and \(\hat{b}^\ell \) (rules the margin maximization). Besides,

are regularization parameters, the first one regularizes the slack variables and the second one the model parameters \(\hat{{\varvec{w}}}^\ell \) and \(\hat{b}^\ell \) (rules the margin maximization). Besides,  is an all ones row vector,

is an all ones row vector,  being

being  an identity matrix, \(\ell '=-\ell \), and \({||\cdot ||}_2\) stands for the 2-norm. Later, the Wolfe dual form of Eq. (1) can be written as follows:

an identity matrix, \(\ell '=-\ell \), and \({||\cdot ||}_2\) stands for the 2-norm. Later, the Wolfe dual form of Eq. (1) can be written as follows:

where  is a Lagrangian multiplier,

is a Lagrangian multiplier,  holds elements

holds elements  , \(\kappa (\cdot ,\cdot )\) is a multivariate Gaussian kernel,

, \(\kappa (\cdot ,\cdot )\) is a multivariate Gaussian kernel,  is an extended column vector of the matrix

is an extended column vector of the matrix  ,

,  holds elements

holds elements

, and \({\varvec{I}}_{P+1}, {\varvec{I}}_{D^\ell +1}\) are identity matrix of proper size. Hence, the NTWSVM hyperplanes yields:

, and \({\varvec{I}}_{P+1}, {\varvec{I}}_{D^\ell +1}\) are identity matrix of proper size. Hence, the NTWSVM hyperplanes yields:  , where

, where  holds elements

holds elements  and

and  . Finally, the label of the new input is computed as the distance to the closest hyperplane as

. Finally, the label of the new input is computed as the distance to the closest hyperplane as  , where \(|\cdot |\) stands for the absolute value. It worth noting that due to the one-class foundation of the NTWSVM approach, the distance to the hyperplanes are proportional to the score magnitude.

, where \(|\cdot |\) stands for the absolute value. It worth noting that due to the one-class foundation of the NTWSVM approach, the distance to the hyperplanes are proportional to the score magnitude.

CKA-Based Enhancement of the NTWSVM. To avoid instability issues concerning the inverse of the matrix \({\varvec{\varSigma }}\) and to reveal relevant data structures for imbalance classification tasks, we use a centered kernel alignment (CKA)-based approach to infer  , where

, where  \((P'\le P+1)\). In fact, we learn \({\varvec{E}}\) as a linear projection to match the kernels

\((P'\le P+1)\). In fact, we learn \({\varvec{E}}\) as a linear projection to match the kernels  and

and  , holding elements

, holding elements  ,

,  , being \(\delta \left( \cdot \right) \) the delta function. Besides, the empirical estimate of the CKA alignment between \({\varvec{K}}^x\) and \({\varvec{K}}^{y}\) is defined as [10]:

, being \(\delta \left( \cdot \right) \) the delta function. Besides, the empirical estimate of the CKA alignment between \({\varvec{K}}^x\) and \({\varvec{K}}^{y}\) is defined as [10]:

where \({\varvec{\tilde{K}}}\) stands for the centered kernel matrix calculated as  , where

, where  ,

,  is an identity matrix, and \(\langle \!\cdot ,\cdot \!\rangle _\mathbb {F}\) denotes the matrix-based Frobenius inner product. Later, to compute the projection matrix \({\varvec{E}}\), the following learning algorithm is employed:

is an identity matrix, and \(\langle \!\cdot ,\cdot \!\rangle _\mathbb {F}\) denotes the matrix-based Frobenius inner product. Later, to compute the projection matrix \({\varvec{E}}\), the following learning algorithm is employed:

Lastly, given  , the NTSVM is trained as above explained. For the sake of simplicity, we called our proposal as ETWSVM. A MatLab code of ETWSVM is publicly availableFootnote 1

, the NTSVM is trained as above explained. For the sake of simplicity, we called our proposal as ETWSVM. A MatLab code of ETWSVM is publicly availableFootnote 1

3 Experimental Set-Up

Datasets and ETWSVM Training. A toy dataset comprising two classes belonging to Gaussian distribution with a mean vector of  and a variance matrix of \(0.3{\varvec{I}}_2\), the minority samples are the samples with a probability greater than 0.7 and rest as majority one; and twelve benchmark datasets originating from the well-known UCI machine learning repository are used to test the ETWSVM proposedFootnote 2. Table 1 displays the main properties of the studied UCI repository datasets; the minority class is marked and rest as the majority one. As quality assessment, we employ the classification performance regarding the accuracy (ACC) and the geometric mean (GM) measures. The ACC is computed as

and a variance matrix of \(0.3{\varvec{I}}_2\), the minority samples are the samples with a probability greater than 0.7 and rest as majority one; and twelve benchmark datasets originating from the well-known UCI machine learning repository are used to test the ETWSVM proposedFootnote 2. Table 1 displays the main properties of the studied UCI repository datasets; the minority class is marked and rest as the majority one. As quality assessment, we employ the classification performance regarding the accuracy (ACC) and the geometric mean (GM) measures. The ACC is computed as  , where TP is the number of samples belonging to class \(+1\) and classified as \(+1\), FN codes the number of samples belonging to class \(+1\) and classified as \(-1\), TF is the number of samples belonging to class \(-1\) and classified as \(-1\), and FP is the number of samples belonging to class \(-1\) and classified \(+1\). Traditionally, The ACC measure is used for classification, but it can be biased for imbalanced datasets. Therefore, the GM is utilized to test imbalance classification as follows:

, where TP is the number of samples belonging to class \(+1\) and classified as \(+1\), FN codes the number of samples belonging to class \(+1\) and classified as \(-1\), TF is the number of samples belonging to class \(-1\) and classified as \(-1\), and FP is the number of samples belonging to class \(-1\) and classified \(+1\). Traditionally, The ACC measure is used for classification, but it can be biased for imbalanced datasets. Therefore, the GM is utilized to test imbalance classification as follows:  , where

, where  and

and  . Besides, a nested 10-fold cross-validation scheme is carried out for ETWSVM training. Regarding the free parameter tuning, the regularization value \(c_2\) is fixed as

. Besides, a nested 10-fold cross-validation scheme is carried out for ETWSVM training. Regarding the free parameter tuning, the regularization value \(c_2\) is fixed as  , and the bandwidth is searched from the set

, and the bandwidth is searched from the set  , where \(\sigma _0\) is the median of the input data Euclidean distances. Note that our CKA-based enhancement avoids the estimation of the \(c_1\) value in Eq. (1).

, where \(\sigma _0\) is the median of the input data Euclidean distances. Note that our CKA-based enhancement avoids the estimation of the \(c_1\) value in Eq. (1).

Method Comparison. As baseline, four classification approaches are tested: SVM [11], SVM with regularized slack variables (SVM reg-slack) [5], SVM with synthetic minority oversampling technique (SMOTE) [12], and a weighted Lagrangian twin support vector machine (WLTSVM) [13]. All provided methods include a Gaussian kernel. So, we infer the kernel bandwidth as in the ETWSVM training. Also, the regularization parameter value is computed from the set \(\{0.1, 1, 10, 100, 1000\}\) (for the WLTSVM technique we assume equal regularization parameter values). On the other hand, the k value for the SMOTE algorithm is fixed from the set \(\{3, 5, 9, 10,15\}\) and the relationship between minority and majority samples is set to 1:1. All the classifiers are implemented in MATLAB R2016b environment on a PC with Intel i5 processor (2.7 GHz) with 6 GB RAM.

4 Results and Discussion

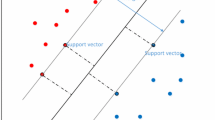

Figure 1 presents the ETWSVM results on the toy dataset. As seen in Figs. 1(a) and (b), which show the generated score by the minority and majority hyperplanes, respectively; the majority hyperplane generates a higher score for the samples associated with the minority class. Such behavior occurs reciprocally between the minority hyperplane and the examples of the majority class. Indeed, each hyperplane function is fixed depending on the slack variables of its counterpart. Therefore, the minority class’s support vectors are selected from the majority hyperplane meanwhile the majority class’s support vectors from the minority one. Also, the Fig. 1(d) exposes the support vectors obtained, where the majority hyperplane only requires three support vectors, but the minority hyperplane chooses all the majority samples as support vectors to reveal the central data structure. As expected, our ETWTSVM highlights the minority class data structure holding a higher number of support vectors than in the majority one. In turn, by combining the ETWSVM hyperplanes, Fig. 1(c) displays the classifier’s boundaries that encodes the class patterns correctly.

Next, the attained classification results on the real-world datasets are summarized in Table 2 concerning the ACC and the GM assessments. As seen, the average ACC performances for the SVM reg-slack, the SVM, the SMOTE-SVM, and our ETWSVM are closely similar. Nonetheless, the SMOTE-SVM and our proposal achieve more reliable results regarding the standard deviation values. Further, for the GM, which is preferred for evaluating imbalance data classification, demonstrates that the SMOTE-SVM and the introduced ETWSVM allow revealing discriminative patterns to avoid biased results. Again, the low standard deviation values of such approaches probe their dominance to cope with imbalance problems. Notably, the CKA-based kernel learning in Eq. (4), favors the ETWSVM hyperplane representation through a non-linear matching between the input data dependencies and the output labels. Moreover, despite that SMOTE-SVM reports the best GM performance in average, it requires the higher computational time to train the classification system (see Table 3). On the other hand, the GM results for the ETWSVM are competitive but requires the lowest computational cost for training. The later can be explained because our ETWSVM optimization takes advantage of the one-class foundation of the straightforward TWSVM, while decreasing the class overlapping based on the CKA matching. Finally, it is worth noting to mention that the ETWSVM requires to fix few free parameters and solves two smaller sized QPP in comparison to the state-of-the-art algorithms.

5 Conclusions

In this study, we propose an enhanced version of the well-known twin support vector machine classifier. In fact, our approach, called ETWSVM, includes a direct non-linear mapping within the quadratic programming optimization of the standard TWSVM, which allows representing the input samples in a high dimensional space (possibly infinite) after reformulation of the TWSVM dual form. Besides, a centered kernel alignment-based approach is proposed to learn the ETWSVM kernel mapping to counteract inherent imbalanced issues. Attained results on both synthetic and real-world datasets probe the virtues of our approach regarding classification and computational cost assessments. In particular, the real-world results related to some UCI repository datasets, show that the ETWSVM-based discrimination outperforms most of the standard SVM-based methods for imbalance classification and obtains competitive results in comparison to the SMOTE-SVM. However, ETWSVM notably adopts the lowest computational cost for training. As future work, authors plan to couple the ETWSVM approach with resampling methods to improve the classification performance. Moreover, a scalable multi-class extension could be an exciting research line. Finally, a couple the ETWSVM approach with a stochastic gradient descent for large scale problems.

References

Haixiang, G., et al.: Learning from class-imbalanced data: review of methods and applications. Expert Syst. Appl. 73, 220–239 (2017)

Loyola-González, O., et al.: Study of the impact of resampling methods for contrast pattern based classifiers in imbalanced databases. Neurocomputing 175, 935–947 (2016)

Branco, P., Torgo, L., Ribeiro, R.P.: A survey of predictive modeling on imbalanced domains. ACM Comput. Surv. 49(2), 31 (2016)

García, S., et al.: Dynamic ensemble selection for multi-class imbalanced datasets. Inf. Sci. 445, 22–37 (2018)

Xanthopoulos, P., Razzaghi, T.: A weighted support vector machine method for control chart pattern recognition. Comput. Ind. Eng. 70, 134–149 (2014)

Tang, L., Tian, Y., Yang, C.: Nonparallel support vector regression model and its SMO-type solver. Neural Netw. (2018)

Qi, Z., Tian, Y., Shi, Y.: Structural twin support vector machine for classification. Knowl. Based Syst. 43, 74–81 (2013)

Liu, L., et al.: Between-class discriminant twin support vector machine for imbalanced data classification. In: CAC 2017, pp. 7117–7122. IEEE (2017)

Xu, Y.: Maximum margin of twin spheres support vector machine for imbalanced data classification. IEEE Trans. Cybern. 47(6), 1540–1550 (2017)

Alvarez-Meza, A., Orozco-Gutierrez, A., Castellanos-Dominguez, G.: Kernel-based relevance analysis with enhanced interpretability for detection of brain activity patterns. Front. Neurosci. 11, 550 (2017)

Anandarup, R., et al.: A study on combining dynamic selection and data preprocessing for imbalance learning. Neurocomputing 286, 179–192 (2018)

Piri, S., Delen, D., Liu, T.: A synthetic informative minority over-sampling (SIMO) algorithm leveraging support vector machine to enhance learning from imbalanced datasets. Decis. Support Syst. 106, 15–29 (2018)

Shao, Y.H., et al.: An efficient weighted lagrangian twin support vector machine for imbalanced data classification. Pattern Recogn. 47(9), 3158–3167 (2014)

Acknowledgments

Under grants support by the project: “Desarrollo de un sistema de posorte clínico basado en el procesamiento estócastico para mejorar la resolusión espacial de la resonancia magnética estructural y de difusión con aplicación al procedimiento de la ablación de tumores”, code: 111074455860, funded by COLCIENCIAS. Moreover, C. Jimenez is partially financed by the project E6-18-09: “Clasificador de máquinas de vectores de soporte para problemas desbalanceados con selección automática de parámetros”, funded by Vicerrectoria de Investigación, innovación y extension and by Maestría en Ingeniería Eléctrica, both from Universidad Tecnológica de Pereira.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Jimenez, C., Alvarez, A.M., Orozco, A. (2019). A Data Representation Approach to Support Imbalanced Data Classification Based on TWSVM. In: Vera-Rodriguez, R., Fierrez, J., Morales, A. (eds) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. CIARP 2018. Lecture Notes in Computer Science(), vol 11401. Springer, Cham. https://doi.org/10.1007/978-3-030-13469-3_7

Download citation

DOI: https://doi.org/10.1007/978-3-030-13469-3_7

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-13468-6

Online ISBN: 978-3-030-13469-3

eBook Packages: Computer ScienceComputer Science (R0)