Abstract

Given a single RGB image of a complex outdoor road scene in the perspective view, we address the novel problem of estimating an occlusion-reasoned semantic scene layout in the top-view. This challenging problem not only requires an accurate understanding of both the 3D geometry and the semantics of the visible scene, but also of occluded areas. We propose a convolutional neural network that learns to predict occluded portions of the scene layout by looking around foreground objects like cars or pedestrians. But instead of hallucinating RGB values, we show that directly predicting the semantics and depths in the occluded areas enables a better transformation into the top-view. We further show that this initial top-view representation can be significantly enhanced by learning priors and rules about typical road layouts from simulated or, if available, map data. Crucially, training our model does not require costly or subjective human annotations for occluded areas or the top-view, but rather uses readily available annotations for standard semantic segmentation in the perspective view. We extensively evaluate and analyze our approach on the KITTI and Cityscapes data sets.

S. Schulter and M. Zhai—equal contribution.

1 Introduction

Visual completion is a crucial ability for an intelligent agent to navigate and interact with the three-dimensional (3D) world. Several tasks such as driving in urban scenes, or a robot grasping objects on a cluttered desk, require innate reasoning about unseen regions. A top-view or bird’s eye view (BEV) representation of the scene where occlusion relationships have been resolved is useful in such situations [11]. It is a compact description of agents and scene elements with semantically and geometrically consistent relationships, which is intuitive for human visualization and precise for autonomous decisions.

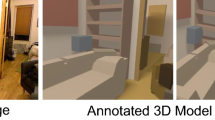

Fig. 1. Given a single RGB image of a typical street scene (left), our approach creates an occlusion-reasoned semantic map of the scene layout in the bird’s eye view. We present a CNN that can hallucinate depth and semantics in areas occluded by foreground objects (marked in red and obtained via standard semantic segmentation), which gives an initial but noisy and incomplete estimate of the scene layout (middle). To fill in unobserved areas in the top-view, we further propose a refinement-CNN that learns strong priors from simulated and OpenStreetMap data (right), which comes at no additional annotation cost.

In this work, we derive such top-view representations through a novel framework that simultaneously reasons about geometry and semantics from just a single RGB image, which we illustrate in the particularly challenging scenario of outdoor road scenes. The focus of this work lies in the estimation of the scene layout, although foreground objects can be placed on top using existing 3D localization methods [24, 38]. Our learning-based approach estimates a geometrically and semantically consistent spatial layout even in regions hidden behind foreground objects, like cars or pedestrians, without requiring human annotation for occluded pixels or the top-view itself. Note that human supervision for such occlusion-reasoned top-view maps is likely to be subjective and of course, expensive to procure. Instead, we derive supervisory signals from readily available annotations for semantic segmentation in the perspective view, a depth sensor or stereo (for visible areas) and a knowledge corpus of typical road scenes via simulations and OpenStreetMap data. Figure 1 provides an illustration.

Specifically, in Sect. 3.1, we propose a novel CNN that takes as input an image with occluded regions (corresponding to foreground objects) masked out, and estimates the segmentation labels and depth values over the entire image, essentially hallucinating distances and semantics in the occluded regions. In contrast to standard image in-painting approaches, we operate in the semantic and depth spaces rather than the RGB image space. Section 3.1 shows how to train this CNN without additional human annotations for occluded regions. The hallucinated depth map is then used to map the hallucinated semantic segmentation of each pixel into the bird’s eye view, see Sect. 3.2.

This initial prediction can be incomplete and erroneous, for instance, since BEV pixels far away from the camera can be unobserved due to limited image resolution or due to imperfect depth estimation. Thus, Sect. 3.3 proposes a refinement and completion neural network to leverage easily obtained training data from simulations that encode general priors and rules about road scene layouts. Since there is no correspondence between actual images and simulated data, we employ an adversarial loss for teaching our CNN a generative aspect about typical layouts. When GPS is available for training images, we also show how map data provides an additional training signal for our models. We demonstrate this using OpenStreetMap (OSM) [19]. Maps provide rough correspondence with RGB images through the GPS location, but this correspondence can be noisy and lacks information on scene scale, and the map itself may contain labeling errors. We handle these issues by learning a warping function that aligns OSM data with image evidence using a variant of the spatial transformer network [13]. Note that a single RGB image is used at test time, with simulations or OSM limited to training.

In Sect. 4, we evaluate our proposed semantic BEV synthesis on the KITTI [8] and Cityscapes [4] datasets. For a quantitative evaluation, we manually annotate validation images with the scene layout in both the perspective and the top-view, which is a time-consuming and error-prone process but again highlights the benefit of our method that resorts only to readily available annotations. Since, to the best of our knowledge, no prior work exists solving this problem in a similar setup to allow a fair comparison, we comprehensively evaluate with several baselines to study the role of each module. Our experiments consider roads and sidewalks for layout estimation, with cars and persons as occluding foreground objects, although extensions to other semantic classes are straightforward in future work. While not our focus, we visualize a simple application in Sect. 4.3 to include foreground objects such as cars and pedestrians in our representation. We observe qualitatively meaningful top-view estimates, which also obtain low errors on our annotated test set.

2 Related Work

General scene understanding is one of the fundamental goals of computer vision and many approaches exist that tackle this problem from different directions.

Indoor: Recent works like [2, 16, 26] have shown great progress by leveraging strong priors about indoor environments obtained from large-scale data sets. While these approaches can rely on strong assumptions like a Manhattan world layout, our work focuses on less constrained outdoor driving scenarios.

Outdoor: Scene understanding for outdoor scenes has received a lot of interest in recent years [5, 10, 27, 30, 37], especially due to applications like driver assistance systems or autonomous driving. Wang et al. [29] propose a conditional random field that infers 3D object locations, semantic segmentation as well as a depth reconstruction of the scene from a single geo-tagged image, which also enables the use of OSM data. At test time, their approach requires as input accurate GPS and map information. In contrast, we require only the RGB image at test time. Seff and Xiao [21] leverage OpenStreetMap (OSM) data to predict several road layout attributes from a single image, like the distance to an intersection, drivable directions, heading angle, etc. While we also leverage OSM for training our models and make predictions only from a single RGB image, we infer a full semantic map in the top view instead of a discrete set of attributes.

Top-View Representations: Sengupta et al. [22] derive a top-view representation by relating semantic segmentation in perspective images to a ground plane with a homography. However, this is a simplifying (flat-world) assumption where non-flat objects will produce artifacts in the ground plane, like shadows or cones. To alleviate these artifacts, they aggregate semantics over multiple frames. However, removing all artifacts would require viewing objects from many different angles. In contrast, our approach enables reasoning about occlusion from just a single image, which is enabled by automatically learned and context-dependent priors about the world. Geiger et al. [7] represent road scenes with a complex model in the bird’s eye view. However, input to the model comes from multiple sources (vehicle tracklets, vanishing points, scene flow, etc.) and inference requires MCMC, while our approach efficiently computes the BEV representation from just a single image. Moreover, their hand-crafted parametric model might not account for all possible scene layouts, whereas our approach is non-parametric and thus more flexible. Máttyus et al. [18] combine perspective and top-view images to estimate road layouts and Zhai et al. [34] predict the semantic layouts of top-view images by learning the transformation between the perspective and the top-view. Gupta et al. [11] demonstrate the suitability of a BEV representation for mapping and planning, even though it is not explicitly learned.

Occlusion Reasoning: Most recent works in this area focus on occlusions of foreground objects and use complex hand-crafted models [5, 30, 37, 38]. In contrast, we estimate the layout of a scene occluded by foreground objects. Guo and Hoiem [10] employ a scene parsing approach that retrieves existing shapes from training data based on visible pixels. Our approach learns to hallucinate occluded areas and does not rely on an existing and fixed set of polygons from training data. Liu et al. [17] also hallucinate the semantics and depth of regions occluded by foreground objects. However, (i) their approach relies on a hand-crafted graphical model while ours is learning-based and (ii) they assume sparse depth from a laser scanner as input, while we estimate depth from a single RGB image (the sparse depth maps are actually ground truth for training our models).

3 Generating Bird’s Eye View Representations

We now present our approach for transforming a single RGB image in the perspective view into an occlusion-reasoned semantic representation in the bird’s eye view, see Fig. 1. We take as input an image \(I\in \mathbb {R}^{h\times w\times 3}\) with spatial dimensions \(h\) and \(w\) and a semantic segmentation \(S^{\text {fg}}\in \mathbb {R}^{h\times w\times C}\) of the visible scene, where \(C\) is the number of categories. Note that any semantic segmentation method can be used and we rely on the recently proposed pyramid scene parsing (PSP) network [35]. \(S^{\text {fg}}\) provides the location of foreground objects that occlude the scene. In this work, we consider foreground objects like cars or pedestrians as occluders but other definitions are possible as well.

To reason about these occlusions, we define a masked image \(I^{M}\), where pixels of foreground objects have been removed. In Sect. 3.1, we propose a CNN that takes \(I^{M}\) as input and hallucinates the depth as well as the semantics of the entire image, including occluded pixels. The occlusion-reasoned depth map \(D^{\text {bg}}\) allows us to map the occlusion-reasoned semantic segmentation \(S^{\text {bg}}\) into 3D and then into the bird’s eye view (BEV), see Sect. 3.2.

While this initial BEV map \(B^{\text {init}}\) is already better than mapping the non-occlusion reasoned semantic map \(S^{\text {fg}}\) into 3D, there can still be unobserved or erroneous pixels. In Sect. 3.3, we thus propose a CNN that learns priors from simulated data to further improve our representation. If a GPS signal is available, OpenStreetMap (OSM) data can be additionally included as supervisory signal.

3.1 Learning to See Around Foreground Objects

An important step towards an occlusion-reasoned representation of the scene is to infer the semantics and the geometry behind foreground objects.

Masking: Given the semantic segmentation \(S^{\text {fg}}\), we define the mask of foreground pixels as \(M\in \mathbb {R}^{h\times w}\), where a pixel in the mask \(M_{ij}\) is 1 if and only if the segmentation at that pixel \(S^{\text {fg}}_{ij}\) belongs to any of the foreground classes. Otherwise, the pixel in the mask is 0. In order to inform the CNN about which pixels have to be in-painted, we apply the mask on the input RGB image and define each pixel in the masked input \(I^{M}\) as

\[ I^{M}_{ij} = \begin{cases} \bar{m}, & \text{if } M_{ij} = 1 \\ I_{ij}, & \text{otherwise} \end{cases} \]

where \(\bar{m}\) is the mean RGB value of the color range, such that after normalization the input to the CNN is zero for those pixels. Given \(I^{M}\), we extract a feature representation by applying ResNet-50 [12]. Similar to recent semantic segmentation literature [35], we use a smaller stride in convolutions together with dilation [32] to increase the feature map resolution from \(\frac{h}{32} \times \frac{w}{32}\) to \(\frac{h}{8} \times \frac{w}{8}\).

Fig. 2. (a) The inpainting CNN first encodes a masked image and the mask itself. The extracted features are concatenated and two decoders predict semantics and depth for visible and occluded pixels. (b) To train the inpainting CNN we ignore foreground objects as no ground truth is available (red) but we artificially add masks (green) over background regions where full annotation is already available.

In addition to masking the input image, we explicitly provide the mask as input to the CNN for two reasons: (i) While the value \(\bar{m}\) becomes 0 after centering the input of the CNN, other visible pixels might still share the same value and confuse the training of the CNN. (ii) An explicit mask input allows encoding more information like the category of the occluded pixel. We thus define another mask \(M^{\text {cls}}\in \mathbb {R}^{h\times w\times C^{\text {fg}}}\), where \(C^{\text {fg}}\) is the number of foreground classes and each channel corresponds to one of them. We encode \(M^{\text {cls}}\) with a small CNN and fuse the resulting feature with the one from the masked image, see Fig. 2a.
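As a minimal sketch of the masking step, the following pure-Python snippet builds \(M\), \(I^{M}\) and \(M^{\text {cls}}\) for a toy label map. The class ids, the mean color value and all function names are illustrative assumptions rather than values from the paper, and the sketch assumes \(S^{\text {fg}}\) has already been reduced to one label per pixel.

```python
# Assumed (hypothetical) label ids for the foreground classes.
FOREGROUND_CLASSES = (11, 13)          # e.g. "person", "car"
MEAN_RGB = (127.5, 127.5, 127.5)       # assumed mean of the color range (m-bar)


def foreground_mask(labels):
    """M[i][j] = 1 iff the label at (i, j) is one of the foreground classes."""
    return [[1 if c in FOREGROUND_CLASSES else 0 for c in row] for row in labels]


def masked_image(image, mask, mean_rgb=MEAN_RGB):
    """I^M: foreground pixels are replaced by the mean color, others are kept."""
    return [
        [mean_rgb if m else px for px, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


def class_mask(labels):
    """M^cls: one binary channel per foreground class (h x w x C_fg)."""
    return [
        [[1 if c == fg else 0 for fg in FOREGROUND_CLASSES] for c in row]
        for row in labels
    ]


# Toy 2x2 example: label 13 (car) top-right, label 11 (person) bottom-left.
labels = [[0, 13], [11, 1]]
image = [[(10, 20, 30), (200, 0, 0)], [(0, 0, 255), (40, 50, 60)]]

M = foreground_mask(labels)
I_M = masked_image(image, M)
M_cls = class_mask(labels)
```

A real implementation would perform the same operations on image tensors; the nested lists only keep the example dependency-free.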

Hallucination: We then put two decoders on the fused feature representation of \(I^{M}\) and \(M^{\text {cls}}\) for predicting semantic segmentation and the depth map of the occlusion-free scene. For semantic segmentation, we again use the PSP module [35], which is particularly useful for in-painting where contextual information is crucial. For depth prediction, we follow [15] in defining the network architecture. Both decoders are followed by a bilinear upsampling layer to provide the output at the same resolution as the input, see Fig. 2a. While traditional in-painting methods fill missing pixels with RGB values, note that we directly go from an RGB image to the in-painted semantics and the geometry of the scene, which has two benefits: (1) The computational costs are smaller as we avoid the (in our case) unnecessary detour in the RGB space. (2) The task of in-painting in the RGB space is presumably harder than in-painting semantics and depth, as the latter does not require predicting any texture or color.

Training: We train the proposed CNN in a supervised way. However, as mentioned before, it would be very costly to annotate the semantics and particularly the geometry behind foreground objects. We thus resort to an alternative that only requires standard semantic segmentation and depth ground truth. Because our desired ground truth is unknown for real foreground objects in the masked input image \(I^{M}\), we do not compute any loss at those pixels. Instead, we augment \(I^{M}\) with additional randomly sampled masks over background regions, for which we still have ground truth, see Fig. 2b. In this way, we can teach our CNN to hallucinate occluded areas of the input image without acquiring costly human annotations. Note that an alternative to masking regions in the input image is to paste real foreground objects into the scene. However, this strategy requires separate instances of foreground objects cropped at the semantic boundaries and a good understanding of the scene geometry for generating a realistic looking training image.
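The training trick can be sketched as follows, under stated assumptions: artificial masks are single random rectangles (the paper does not specify the shape distribution), and the loss is any per-pixel quantity averaged over the pixels that have ground truth. All names are hypothetical.

```python
import random


def sample_box_mask(h, w, box_h, box_w, rng):
    """Binary h x w mask with one random box_h x box_w rectangle set to 1."""
    top = rng.randrange(h - box_h + 1)
    left = rng.randrange(w - box_w + 1)
    return [
        [1 if top <= i < top + box_h and left <= j < left + box_w else 0
         for j in range(w)]
        for i in range(h)
    ]


def training_masks(fg_mask, extra_mask):
    """Return (input_mask, loss_mask).

    input_mask: pixels hidden from the network (real foreground or artificial box).
    loss_mask:  pixels that contribute to the loss, i.e. everything except real
                foreground objects, for which no ground truth exists.
    """
    input_mask = [[int(f or e) for f, e in zip(f_row, e_row)]
                  for f_row, e_row in zip(fg_mask, extra_mask)]
    loss_mask = [[1 - f for f in f_row] for f_row in fg_mask]
    return input_mask, loss_mask


def masked_mean(per_pixel_loss, loss_mask):
    """Average a per-pixel loss over the pixels where loss_mask is 1."""
    total = sum(v * m
                for v_row, m_row in zip(per_pixel_loss, loss_mask)
                for v, m in zip(v_row, m_row))
    n = sum(map(sum, loss_mask))
    return total / n if n else 0.0


rng = random.Random(0)
fg_mask = [[1, 0, 0], [0, 0, 0]]             # one real foreground pixel
extra = sample_box_mask(2, 3, 1, 2, rng)     # artificial 1x2 occluder
input_mask, loss_mask = training_masks(fg_mask, extra)
```

Pixels under the artificial box are hidden in the input but still supervised, which is what lets the network learn to hallucinate.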

3.2 Mapping into the Bird’s Eye View

Given the depth map \(D^{\text {bg}}\) and the intrinsic camera parameters \(\mathbf {K}\), we can map each coordinate of the perspective view into the 3D space. We drop the z-coordinate (height axis) for each 3D point and round the x and y coordinates to the closest integer, which gives us a mapping into the bird’s eye view representation. We use this mapping to transfer the class probability distribution of each pixel in the perspective view, i.e., \(S^{\text {bg}}\), into the bird’s eye view, which we denote \(B^{\text {init}}\in \mathbb {R}^{k\times l\times C^{\text {bg}}}\), where \(C^{\text {bg}}\) is the number of background classes and \(k\) and \(l\) are the spatial dimensions. Throughout the paper, we use \(k=128\) and \(l=64\) pixels that we relate to \(60 \times 30\) meters in the point cloud. For all points that are mapped to the same pixel in the top view, we average the corresponding class distributions. Figure 3 illustrates the geometric transformation.
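The mapping can be sketched in a few lines. This is an illustration under assumptions, not the authors' code: it uses the usual pinhole camera frame (lateral X, forward Z), so the dropped height axis is the camera's vertical axis; it places the camera at the center of the near edge of the grid; and the intrinsics `fx`, `cx` in the example are made up.

```python
def project_to_bev(depth, probs, fx, cx, k=128, l=64, depth_m=60.0, width_m=30.0):
    """Scatter per-pixel class distributions into a k x l top-view grid.

    depth: h x w nested list of metric depths (forward distance Z).
    probs: h x w nested list of class-probability lists (S^bg).
    Returns (bev, count); bev[r][c] is the averaged distribution of all image
    pixels that landed in that cell, or None if the cell is unobserved.
    """
    n_cls = len(probs[0][0])
    sums = [[[0.0] * n_cls for _ in range(l)] for _ in range(k)]
    count = [[0] * l for _ in range(k)]
    for d_row, p_row in zip(depth, probs):
        for u, (z, p) in enumerate(zip(d_row, p_row)):
            x = (u - cx) * z / fx                         # lateral offset in meters
            r = round(z / depth_m * k)                    # forward distance -> row
            c = round((x + width_m / 2) / width_m * l)    # lateral offset -> column
            if 0 <= r < k and 0 <= c < l:
                count[r][c] += 1
                for ch in range(n_cls):
                    sums[r][c][ch] += p[ch]
    bev = [
        [[s / count[r][c] for s in sums[r][c]] if count[r][c] else None
         for c in range(l)]
        for r in range(k)
    ]
    return bev, count


# Two neighboring pixels at 10 m depth with different class predictions.
depth = [[10.0, 10.0]]
probs = [[[1.0, 0.0], [0.0, 1.0]]]
bev, count = project_to_bev(depth, probs, fx=100.0, cx=0.5)
```

Because the height coordinate is discarded, the image row index never enters the computation; only depth and the horizontal pixel coordinate determine the BEV cell.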

Note that \(B^{\text {init}}\) is our first occlusion-reasoned semantic representation in the bird’s eye view. However, \(B^{\text {init}}\) also has several remaining issues. Some pixels in \(B^{\text {init}}\) will not be assigned any class probability, especially those far from the camera due to image foreshortening in the perspective view. Imperfect depth prediction is also an issue because it may assign a well classified pixel in the perspective view a wrong depth value, which puts the point into a wrong location in top-view. This can lead to unnatural arrangements of semantic classes in \(B^{\text {init}}\).

3.3 Refinement with a Knowledge Corpus

To remedy the above mentioned issues, we propose a refinement CNN that takes \(B^{\text {init}}\) and predicts the final output \(B^{\text {final}}\in \mathbb {R}^{k\times l\times C^{\text {bg}}}\), which has the same dimensions as \(B^{\text {init}}\). The refinement CNN has an encoder-decoder structure with a fully-connected bottleneck layer, see Fig. 4b. The main difficulty in training the refinement CNN is the lack of semantic ground truth data in the bird’s eye view, which is very hard and costly to annotate. In the following we present two sources of supervisory signals that are easy to acquire.

Simulation: The first source of information we leverage is a simulator that renders the semantics of typical road scenes in the bird’s eye view. The simulator models roads with different types of intersections, lanes and sidewalks, see Fig. 4a for some examples. Note that it is easy to create such a simulator as we do not need to model texture, occlusions or any perspective distortions in the scene. A simple generative model about road topology, number of lanes, radius for curved roads, etc. is enough. Since there is no correspondence with the real training data, we rely on an adversarial loss [1] between predictions of the refinement CNN \(B^{\text {final}}\) and data from the simulator \(B^{\text {sim}}\):

\[ \mathcal {L}^{\text {sim}} = \sum _{i=1}^{m} d\left( B^{\text {sim}}_{i}; \varTheta _{\text {discr}}\right) - d\left( B^{\text {final}}_{i}; \varTheta _{\text {discr}}\right) \]

where \(m\) is the batch size and \(d\left( .; \varTheta _{\text {discr}}\right) \) is the discriminator function with parameters \(\varTheta _{\text {discr}}\). Note that \(d\left( .; \varTheta _{\text {discr}}\right) \) needs to be a K-Lipschitz function [1], which is enforced by clipping the parameters \(\varTheta _{\text {discr}}\) during training. While any other variant of adversarial loss is possible, we found [1] to provide the most stable training. The adversarial loss injects prior information about typical road scene layouts and remedies errors of \(B^{\text {init}}\) like unobserved pixels or unnatural shapes of objects due to depth or semantic prediction errors.
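For illustration, a Wasserstein-style objective in the spirit of [1] can be sketched as below. The discriminator scores are taken as given numbers, the function names are hypothetical, and the clipping constant 0.01 is the default suggested in [1], not a value reported in this paper.

```python
def wasserstein_gap(scores_sim, scores_final):
    """Sum over the batch of d(B_sim_i) - d(B_final_i).

    The discriminator is trained to increase this gap, while the refinement
    CNN is trained to decrease it, pulling its outputs towards the
    distribution of simulated layouts.
    """
    return sum(s - f for s, f in zip(scores_sim, scores_final))


def clip_parameters(params, c=0.01):
    """Clamp every discriminator parameter to [-c, c] after an update step."""
    return [max(-c, min(c, p)) for p in params]


# Discriminator scores for a batch of m = 2 simulated and 2 refined maps.
gap = wasserstein_gap([1.0, 2.0], [0.5, 0.5])
clipped = clip_parameters([0.5, -0.3, 0.005])
```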

Fig. 4. (a) Simulated road shapes in the top-view. (b) The refinement-CNN is an encoder-decoder network receiving three supervisory signals: self-reconstruction with the input, adversarial loss from simulated data, and reconstruction loss with aligned OpenStreetMap (OSM) data. (c) The alignment CNN takes as input the initial BEV map and a crop of OSM data (selected via the given noisy GPS and yaw estimates). The CNN predicts a warp for the OSM map and is trained to minimize the reconstruction loss with the initial BEV map.

Since \(\mathcal {L}^{\text {sim}}\) operates without any correspondence, the refinement network needs additional regularization to not deviate too much from the actual input, i.e., \(B^{\text {init}}\). We add a reconstruction loss between \(B^{\text {init}}\) and \(B^{\text {final}}\) to define the final loss as \(\mathcal {L}= \mathcal {L}^{\text {sim}} + \lambda \cdot \mathcal {L}^{\text {reconst}}\) with

\[ \mathcal {L}^{\text {reconst}} = \frac{\Vert (B^{\text {init}}- B^{\text {final}}) \odot \mathbf {M}\Vert ^{2}}{\sum _{ij} \mathbf {M}_{ij}} \]

where \(\odot \) is an element-wise multiplication and \(\mathbf {M}\in \mathbb {R}^{k\times l}\) is a mask of 0’s for unobserved pixels in \(B^{\text {init}}\) and 1’s otherwise.
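A masked reconstruction loss of this kind can be sketched as follows. Dividing by the number of observed pixels is an assumption of this sketch, and the function name is hypothetical.

```python
def masked_reconstruction_loss(b_init, b_final, observed):
    """Squared difference between two k x l x C maps, restricted to observed cells.

    observed[r][c] is 1 where B_init received at least one projected pixel and
    0 otherwise, so unobserved cells do not constrain the refined output.
    """
    total = 0.0
    n_observed = 0
    for init_row, final_row, m_row in zip(b_init, b_final, observed):
        for p_init, p_final, m in zip(init_row, final_row, m_row):
            if m:
                n_observed += 1
                total += sum((a - b) ** 2 for a, b in zip(p_init, p_final))
    return total / n_observed if n_observed else 0.0


# 1 x 2 map with two classes; only the first cell is observed.
b_init = [[[1.0, 0.0], [0.0, 0.0]]]
b_final = [[[0.5, 0.5], [0.9, 0.1]]]
loss = masked_reconstruction_loss(b_init, b_final, observed=[[1, 0]])
```

The second cell differs strongly between the two maps but does not affect the loss, which leaves the refinement network free to complete unobserved regions.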

OpenStreetMap Data: Driving imagery often comes with a GPS signal and an estimate of the driving direction, which enables the use of OpenStreetMap (OSM) data as another source of supervisory signal for the refinement CNN. The simplest approach is to render the OSM data for the given location and angle, \(B^{\text {osm}}\), and define a reconstruction loss with \(B^{\text {final}}\) as \(\mathcal {L}^{\text {OSM}} = \Vert B^{\text {final}}- B^{\text {osm}}\Vert ^{2}\). This loss can be included into the final loss \(\mathcal {L}\) in addition to or instead of \(\mathcal {L}^{\text {reconst}}\). In any case, \(\mathcal {L}^{\text {OSM}}\) ignores noise in the GPS and the direction estimate as well as imperfect renderings due to annotation noise and missing information in OSM.

We therefore propose to align the initial OSM map \(B^{\text {osm}}\) with the semantics and geometry observed in the actual RGB image with a warping function \(\hat{B}^{\text {osm}}= w\left( B^{\text {osm}};\theta \right) \) parameterized by \(\theta \). We use a composition of a similarity transformation implemented as a parametric spatial transformer (handling translation, rotation, and scale; denoted “Box”) and a non-parametric warp implemented as bilinear sampling (handling non-linear misalignments due to OSM rendering; denoted “Flow”) [13], see Fig. 5. We minimize the masked reconstruction between \(\hat{B}^{\text {osm}}\) and the initial BEV map \(B^{\text {init}}\),

Fig. 5. (a) We use a composition of similarity transform (left, “box”) and a non-parametric warp (right, “flow”) to align noisy OSM with image evidence. (b, top) Input image and the corresponding \(B^{\text {init}}\). (b, bottom) Resulting warping grid overlaid on the OSM map and the warping result for 4 different warping functions, respectively: “box”, “flow”, “box+flow”, “box+flow (with regularization)”. Note the importance of composing the transformations and the induced regularization.

where \(w\left( .;\theta \right) \) is differentiable [13], and \(\varGamma (.)\) is a low-pass filter similar to [28, 36], and \(\Vert .\Vert ^2_2\) the squared \(\ell _2\)-norm, both acting as regularizing functions. The hyper-parameters \(\lambda _2\) and \(\lambda _3\) are manually set.
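To make the two warp types concrete, the sketch below applies a similarity transform ("Box") and a per-cell displacement field ("Flow") to a small single-channel map using bilinear sampling. It only illustrates the forward warp; the parameterization (rotation about the grid center, offsets in pixels, zero padding) and all names are assumptions, and no regularization or learning is shown.

```python
import math


def bilinear_sample(grid, y, x):
    """Bilinearly interpolate a 2D nested list at (y, x); zero outside the grid."""
    h, w = len(grid), len(grid[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy, wy in ((y0, 1 - (y - y0)), (y0 + 1, y - y0)):
        for xx, wx in ((x0, 1 - (x - x0)), (x0 + 1, x - x0)):
            if 0 <= yy < h and 0 <= xx < w:
                value += wy * wx * grid[yy][xx]
    return value


def warp_similarity(grid, scale=1.0, angle=0.0, ty=0.0, tx=0.0):
    """'Box' warp: sample the input at similarity-transformed coordinates."""
    h, w = len(grid), len(grid[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            dy, dx = i - cy, j - cx
            src_y = scale * (cos_a * dy - sin_a * dx) + cy + ty
            src_x = scale * (sin_a * dy + cos_a * dx) + cx + tx
            row.append(bilinear_sample(grid, src_y, src_x))
        out.append(row)
    return out


def warp_flow(grid, flow):
    """'Flow' warp: flow[i][j] = (dy, dx) is a per-cell sampling offset."""
    return [
        [bilinear_sample(grid, i + flow[i][j][0], j + flow[i][j][1])
         for j in range(len(grid[0]))]
        for i in range(len(grid))
    ]


osm = [[0, 1], [2, 3]]                        # toy single-channel OSM rendering
zero_flow = [[(0.0, 0.0)] * 2 for _ in range(2)]
# Composition "box + flow": similarity transform first, then the flow field.
aligned = warp_flow(warp_similarity(osm, tx=1.0), zero_flow)
```

Both warps are built from bilinear sampling, which is what makes the warped map differentiable with respect to the warp parameters in a spatial transformer [13].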

To minimize the alignment error, the first choice is non-linear optimization, e.g., L-BFGS [3]. However, we found this to produce satisfactory results only for parts of the data, while a significant portion would require hand-tuning of several hyper-parameters. This is mostly due to noise in the initial BEV map \(B^{\text {init}}\) as well as the rendering \(B^{\text {osm}}\). An alternative, which proved to be more stable and easy to realize, is to learn a function that predicts the warping parameters, which has the benefit that the predictive function can implicitly leverage other examples of \((B^{\text {init}}, B^{\text {osm}})\) pairs in the training corpus. We thus train a CNN that takes \(B^{\text {init}}\) and \(B^{\text {osm}}\) as inputs and predicts the warping parameters \(\theta \) by minimizing the alignment error. Also, we can either train this CNN separately or jointly with the refinement CNN, thus providing different training signals for the refinement module. We evaluate these options in Sect. 4.2. Figure 4c illustrates the process of aligning the OSM data.

4 Experiments

Our quantitative and qualitative evaluation focuses on occlusion reasoning via hallucination in the perspective view (Sect. 4.1) and scene completion via the refinement network in the bird’s eye view (Sect. 4.2).

Datasets: Creating the proposed BEV representation requires data for learning the parameters of the modules described above. Importantly, the only supervisory signal that we need is semantic segmentation (human annotation) and depth (LiDAR or stereo), although not both are required for the same input image. Both KITTI [8] and Cityscapes [4] fulfill our requirements. Both data sets come with a GPS signal and a yaw estimate of the driving direction, which allows us to additionally leverage OSM data during training.

The KITTI [8] data set contains many sequences of typical driving scenarios and provides accurate GPS location and driving direction as well as a 3D point cloud from a laser scanner. However, annotation for semantic segmentation is scarce. We create two versions of the data set based on segmentation annotation: KITTI-Ros consists of 31 sequences (14201 frames) for training, of which 100 frames have semantic annotation, and of 9 sequences (4368 frames) for validation, of which 46 frames are annotated for segmentation. The segmentation ground truth comes from [20]. KITTI-RAW consists of 31 sequences (16273 frames) for training and 9 sequences (2296 frames) for validation. 1074 images from the training set and 233 images from the validation set have ground truth annotations for semantic segmentation, which we collected on our own.

The Cityscapes data set [4] contains 2975 training and 500 validation images, all of which are fully annotated for semantic segmentation and are provided as stereo image pairs. For ease of implementation, we rely on a strong stereo method [33] to serve as our training signal for depth, although unsupervised methods exist for direct training from stereo images [6, 9]. GPS location and heading are also provided, although accuracy is lower compared to KITTI.

Validation Data for Occlusion-Reasoning: For a quantitative evaluation of occlusion reasoning in the perspective view as well as in the bird’s eye view, we manually annotated all validation images of the three data sets that also have semantic segmentation ground truth. We asked annotators to draw the scene layout by hand for the categories “road” and “sidewalk”. Other pixels are annotated as “background”.

Implementation Details: We train our in-painting models with a batch size of 2 for 80k iterations with ADAM [14]. The initial learning rate is 0.0002, which is decreased by a factor of 10 for the last 20k iterations. The refinement network is trained with a batch size of 64 for 80k iterations and a learning rate of 0.0001.

4.1 Occlusion Reasoning by Hallucination

Here we analyze our hallucination CNN proposed in Sect. 3.1, which targets in-painting the semantics and depth of areas occluded by foreground objects. To the best of our knowledge, there is no prior art that can serve as a fair comparison point. Although [17] addresses the same task, their approach assumes sparse depth information as input, which serves as ground truth in our approach. Nevertheless, we have created fair baselines that justify our design choices.

Evaluation Protocol: We split our evaluation protocol into two parts. First, we follow [17] by randomly masking out background regions in the input and evaluate the predictions of the hallucination CNNs (random-boxes). For this case, note that evaluation can be done for all semantic classes and depth. While this is the only possible evaluation without human annotation for occluded areas, the sampling process may not resemble objects realistically. Thus, we also evaluate with our newly acquired annotations (human-gt) for the categories “road” and “sidewalk”, which was not done in [17]. We measure mean IoU for segmentation and absolute relative distance (ARD) for depth estimation as in [15].
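The two metrics can be written down compactly. The sketch below uses the common definitions of mean IoU and absolute relative distance on flattened pixel lists; skipping pixels without depth ground truth (marked here as 0) and skipping classes absent from both prediction and ground truth are assumptions of this sketch.

```python
def mean_iou(pred, gt, classes):
    """Mean intersection-over-union over `classes` for flat label lists."""
    ious = []
    for c in classes:
        inter = sum(1 for p, g in zip(pred, gt) if p == c and g == c)
        union = sum(1 for p, g in zip(pred, gt) if p == c or g == c)
        if union:  # ignore classes absent from both prediction and ground truth
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


def abs_rel_distance(pred_depth, gt_depth):
    """Mean of |d_pred - d_gt| / d_gt over pixels with valid ground truth."""
    valid = [(p, g) for p, g in zip(pred_depth, gt_depth) if g > 0]
    if not valid:
        return 0.0
    return sum(abs(p - g) / g for p, g in valid) / len(valid)


pred = ["road", "road", "sidewalk", "background"]
gt = ["road", "sidewalk", "sidewalk", "background"]
miou = mean_iou(pred, gt, classes=["road", "sidewalk"])

# Third pixel has no depth ground truth and is skipped.
ard = abs_rel_distance([11.0, 18.0, 5.0], [10.0, 20.0, 0.0])
```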

Semantics and Depth Space Versus RGB Space: We compare our hallucination CNN with a baseline that takes the traditional approach of in-painting and operates in the RGB pixel space. This baseline consists of two CNNs, one for in-painting in the RGB space and one for semantic and depth prediction. For a fair comparison, we equip both CNNs with the same ResNet-50 feature extractor. For RGB-space in-painting, we use the same decoder structure as for depth prediction but with 3 output channels and train it with the random mask sampling strategy. The second CNN has the exact same architecture as our hallucination CNN and is trained without masking inputs but instead uses the already in-painted RGB images. From Table 1 we can see that the proposed direct hallucination network outperforms in-painting in the RGB space for depth prediction and segmentation with the human-provided ground truth while it trails for segmentation of all categories with random boxes. The reason for the inferior performance might be missing context information that is available to the baseline by the RGB-space supervision. However, note that the proposed architecture is twice as efficient, since in-painting and prediction of semantics and depth are obtained in the same forward pass. Qualitative examples of our direct hallucination CNN are given in Fig. 6.

Mask-Encoding: We also analyze different variants of how to encode the foreground mask as input to the proposed hallucination CNN. Table 1 demonstrates the beneficial impact of explicitly encoding the foreground mask (“+mask”) in addition to masking the RGB image (“RGB-only”), as well as providing the class information of the foreground objects inside the mask (“+cls-encode”).

4.2 Refining the BEV Representation

We now evaluate the refinement model described in Sect. 3.3 on all three data sets with the acquired annotations in the bird’s eye view. The evaluation metric again is mean IoU for the categories “road” and “sidewalk”. We compare four models: (1) The initial BEV map, without refinement. (2) A refinement heuristic, where missing semantic information at pixel (i, j) is filled with the semantics of the closest pixels in y-direction towards the camera. (3) The proposed refinement module with simulated data and the self-reconstruction loss. (4) The refinement module with the additional OSM-reconstruction loss. Table 2 clearly shows that the combination of simulated and aligned OSM data provides the best supervisory signal for the refinement module on all three data sets. Interestingly, the refinement heuristic is a strong competitor but this is probably because evaluation is limited to only “road” and “sidewalk”, where simple rules are often correct. This heuristic will likely fail for classes like “vegetation” and “building”. Importantly, all refinement strategies improve upon the initial BEV map. Because no fair comparison point to prior art is available to us, we further analyze two alternative baselines on the KITTI-RAW data set.
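The refinement heuristic of model (2) can be sketched as a single pass per BEV column. The sketch assumes that row 0 is the row closest to the camera and that unobserved cells are marked with `None`; both conventions and the function name are illustrative.

```python
def fill_towards_camera(bev):
    """Fill each unobserved cell with the nearest observed label in the same
    column on the camera side (smaller row index). Cells with no observed
    label between them and the camera stay None."""
    k, l = len(bev), len(bev[0])
    out = [row[:] for row in bev]
    for j in range(l):
        last_seen = None
        for i in range(k):          # walk away from the camera
            if out[i][j] is None:
                out[i][j] = last_seen
            else:
                last_seen = out[i][j]
    return out


bev = [
    ["road", "sidewalk"],   # closest to the camera
    [None, None],
    ["sidewalk", None],     # farthest from the camera
]
filled = fill_towards_camera(bev)
```

Such a rule simply extends whatever was seen last along the viewing direction, which is often right for roads and sidewalks but cannot invent structures like side roads.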

Importance of Hallucination: We train a refinement module that takes as input BEV maps that omit the hallucination step (“No halluc.”). To create this BEV map, we train a joint segmentation and depth prediction network (same architecture as for hallucination) with standard foreground annotation and map the semantics of background classes into the BEV map as described in Sect. 3.2. Table 2 shows that avoiding the hallucination step hurts the performance. Note that the proposed refinement CNN recovers most errors for roads, while the relative performance drop for sidewalks is larger. We believe this is due to long stretches of non-occluded roads in the KITTI data set. Sidewalks, on the other hand, are typically more occluded due to parked cars and pedestrians.

Importance of Depth Prediction: We train a CNN that takes as input the RGB image in the perspective view and directly predicts the BEV map, without depth prediction (“No depth pred.”). The CNN extracts basic features with ResNet-50 [12], applies strided convolutions for further down-sampling, then a fully-connected layer resembling a transformation from 2D to 3D, and finally transposed convolutions for up-sampling into the BEV dimensions. To create a training signal for this network, we map the ground truth segmentation into the bird’s eye view using the ground truth depth data (LiDAR). On top of the output of this CNN, we still apply the proposed refinement module for a fair comparison. The importance of depth prediction is evident from Table 2: in this case, not even the refinement CNN is able to recover. While there may be better architectures than our baseline for directly predicting a semantic BEV map from the perspective view, it is important to note that depth is an intermediary that clearly eases the task by enabling the use of known geometric transformations.
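The "known geometric transformation" that depth enables can be illustrated with a short sketch. It is not the mapping of Sect. 3.2 itself: it assumes a pinhole camera with focal length `fx` and principal point `cx` (in pixels), ignores height, and uses hypothetical values for the BEV extent and cell size. The function name `project_to_bev` is illustrative.

```python
import math


def project_to_bev(labels, depth, fx, cx,
                   cell=0.5, width_m=20.0, depth_m=40.0, unknown=-1):
    """Scatter per-pixel labels into a top-view grid using per-pixel depth.

    labels, depth: lists of rows with the same shape (depth in meters).
    The grid covers [-width_m/2, width_m/2) laterally and [0, depth_m)
    ahead of the camera; the last grid row is the one nearest the camera.
    """
    n_rows = int(depth_m / cell)
    n_cols = int(width_m / cell)
    bev = [[unknown] * n_cols for _ in range(n_rows)]
    for label_row, depth_row in zip(labels, depth):
        for u, (label, z) in enumerate(zip(label_row, depth_row)):
            if z is None or z <= 0.0 or z >= depth_m:
                continue  # no valid depth (e.g. no LiDAR return) or out of range
            x = (u - cx) * z / fx  # pinhole back-projection, lateral offset
            col = math.floor((x + width_m / 2.0) / cell)
            row = n_rows - 1 - math.floor(z / cell)
            if 0 <= col < n_cols:
                bev[row][col] = label  # if several pixels hit a cell, last wins
    return bev


# One image row, three pixels, all 10 m away.
bev = project_to_bev(labels=[[0, 0, 1]], depth=[[10.0, 10.0, 10.0]],
                     fx=100.0, cx=1.0)
```

Because every step here is fixed geometry, a network that predicts depth only has to learn the per-pixel quantity, whereas the "No depth pred." baseline has to learn the whole mapping implicitly.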

Warping OSM Data: In Table 3, we compare different warping functions and optimization strategies for aligning the OSM data, as described in Sect. 3.3. Our results show that the composition of “Box” (translation, scale and rotation) and “Flow” (displacement field) is superior to individual warps. We can also see that the proposed alignment CNN trained jointly with the refinement module provides the best training signal from OSM data. As already mentioned in Sect. 3.3, L-BFGS alignment failed for around 30% of the training data, which explains the superiority of the proposed CNN for predicting warping parameters.
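As an illustration of what composing the two warps means, the sketch below applies a “Box” warp (translation, scale and rotation about the map center) followed by a per-pixel “Flow” displacement when computing where each output cell samples the OSM map. The order of composition, nearest-neighbor sampling, and all names (`box_coords`, `warp`) are assumptions made for this example; a trainable version would use differentiable bilinear sampling as in spatial transformer networks.

```python
import math


def box_coords(x, y, cx, cy, scale, angle, tx, ty):
    """Source coordinates for output cell (x, y) under a similarity warp
    (rotation by `angle` radians and `scale` about (cx, cy), then shift)."""
    dx, dy = x - cx, y - cy
    c, s = math.cos(angle), math.sin(angle)
    return (cx + scale * (c * dx - s * dy) + tx,
            cy + scale * (s * dx + c * dy) + ty)


def warp(src, scale=1.0, angle=0.0, tx=0.0, ty=0.0, flow=None, fill=-1):
    """Warp a label map with Box parameters and an optional Flow field.

    flow[y][x] is a (dx, dy) displacement added to the Box coordinates.
    Cells that sample outside the source map receive `fill`.
    """
    h, w = len(src), len(src[0])
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = box_coords(x, y, cx, cy, scale, angle, tx, ty)
            if flow is not None:
                fdx, fdy = flow[y][x]
                sx, sy = sx + fdx, sy + fdy
            ix, iy = int(round(sx)), int(round(sy))  # nearest neighbor
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = src[iy][ix]
    return out


osm = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
shifted = warp(osm, tx=1.0)
undo = [[(-1.0, 0.0)] * 3 for _ in range(3)]
restored = warp(osm, tx=1.0, flow=undo)
```

The Box warp has only four parameters and captures global misalignment, while the Flow field can absorb local deviations that a global transform cannot.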

Qualitative Results: Figure 7 demonstrates the beneficial impact of both the hallucination and refinement modules with several qualitative examples. In the first three cases, we can observe the learned priors of the hallucination CNN that correctly handles largely occluded areas, which is evident from both the hallucinated semantics and the difference in the first two illustrated BEV maps (before and after hallucination). Other examples illustrate how the refinement CNN completes unobserved areas and even completes whole side roads and intersections.

4.3 Incorporating Foreground Objects into the BEV Map

Finally, we show how foreground objects like cars or pedestrians can be handled in the proposed framework. Since this is not the main focus of this paper, we use a simple baseline to lift 2D bounding boxes of cars into the BEV map. Importantly, we demonstrate that our refinement module is able to handle foreground objects as well. First, we leverage the 3D ground truth annotations of the KITTI data set to estimate the mean dimensions of a 3D bounding box. Then, for a given 2D bounding box in the perspective view, we use the estimated depth map to compute the 3D point of the bottom center of the bounding box, which is then used to translate our prior 3D bounding box in the BEV map. The refinement network takes the initial BEV map that now includes foreground objects. We extend the simulator to render objects as rectangles in the top-view and employ a self-reconstruction loss, since OSM cannot provide such information. Figure 8 gives two examples of the obtained BEV map with foreground objects for illustrative purposes. A full quantitative evaluation of localization accuracy and consistency with the background requires significant extensions, which we leave to future work.
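The lifting baseline can be sketched in a few lines. This is an illustration under stated assumptions, not the implementation behind Fig. 8: it assumes a pinhole camera (`fx`, `cx` in pixels), treats the back-projected bottom-center point as the center of the object footprint, keeps the box axis-aligned in the top-view, and uses made-up annotation values in the usage example. The names `mean_dimensions` and `lift_box_to_bev` are hypothetical.

```python
def mean_dimensions(boxes3d):
    """Average (width, length) in meters over annotated 3D boxes.

    boxes3d: iterable of (width, length) pairs taken from 3D annotations.
    """
    boxes3d = list(boxes3d)
    n = len(boxes3d)
    return (sum(b[0] for b in boxes3d) / n,
            sum(b[1] for b in boxes3d) / n)


def lift_box_to_bev(box2d, depth, fx, cx, prior_width, prior_length):
    """Place a prior-sized rectangle in the top-view for one 2D detection.

    box2d: (u_min, v_min, u_max, v_max) in pixels.
    depth: list of rows with estimated depth in meters.
    Returns (x_min, z_min, x_max, z_max) in meters, with x lateral and z
    the distance ahead of the camera.
    """
    u_min, _v_min, u_max, v_max = box2d
    u = (u_min + u_max) / 2.0
    # Clamp the bottom-center pixel to the image bounds before the lookup.
    v = min(max(int(v_max), 0), len(depth) - 1)
    ui = min(max(int(u), 0), len(depth[0]) - 1)
    z = depth[v][ui]
    x = (u - cx) * z / fx  # pinhole back-projection, lateral offset
    # Assumption: the lifted point is the footprint center, box axis-aligned.
    return (x - prior_width / 2.0, z - prior_length / 2.0,
            x + prior_width / 2.0, z + prior_length / 2.0)


# Illustrative annotation values only; not statistics from KITTI.
width, length = mean_dimensions([(1.5, 3.8), (1.7, 4.0)])
depth_map = [[10.0] * 4 for _ in range(4)]
rect = lift_box_to_bev((0, 0, 2, 3), depth_map, fx=100.0, cx=1.0,
                       prior_width=width, prior_length=length)
```

The resulting rectangle can then be rasterized into the initial BEV map in the same way the simulator renders objects as rectangles.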

5 Conclusion

Our work addresses a complex problem in 3D scene understanding, namely, occlusion-reasoned semantic representation of outdoor scenes in the top-view, using just a single RGB image in the perspective view. This requires solving the canonical challenge of hallucinating semantics and geometry in areas occluded by foreground objects, for which we propose a CNN trained using only standard annotations in the perspective image. Further, we show that adversarial and warping-based refinement make it possible to leverage simulation and map data as valuable supervisory signals for learning prior knowledge. Quantitative and qualitative evaluations on the KITTI and Cityscapes data sets show attractive results compared to several baselines. While we have shown the feasibility of solving this problem using a single image, incorporating temporal information might be a promising extension for further gains. We finally note that with the use of indoor data sets like [23, 25], along with simulators [31] and floor plans [16], a similar framework may be derived for indoor scenes, which will be the subject of our future work.

Notes

1. We use the terms “top-view” and “bird’s eye view” interchangeably.

References

1. Arjovsky, M., Chintala, S., Bottou, L.: Wasserstein generative adversarial networks. In: ICML (2017)

2. Armeni, I., et al.: 3D semantic parsing of large-scale indoor spaces. In: CVPR (2016)

3. Byrd, R.H., Lu, P., Nocedal, J., Zhu, C.: A limited memory algorithm for bound constrained optimization. SIAM J. Sci. Comput. 16(5), 1190–1208 (1995)

4. Cordts, M., et al.: The cityscapes dataset for semantic urban scene understanding. In: CVPR (2016)

5. Dhiman, V., Tran, Q.H., Corso, J.J., Chandraker, M.: A continuous occlusion model for road scene understanding. In: CVPR (2016)

6. Garg, R., BG, V.K., Carneiro, G., Reid, I.: Unsupervised CNN for single view depth estimation: geometry to the rescue. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9912, pp. 740–756. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46484-8_45

7. Geiger, A., Lauer, M., Wojek, C., Stiller, C., Urtasun, R.: 3D traffic scene understanding from movable platforms. In: PAMI (2014)

8. Geiger, A., Lenz, P., Stiller, C., Urtasun, R.: Vision meets Robotics: the KITTI Dataset. Int. J. Robot. Res. (IJRR) (2013)

9. Godard, C., Aodha, O.M., Brostow, G.J.: Unsupervised monocular depth estimation with left-right consistency. In: CVPR (2017)

10. Guo, R., Hoiem, D.: Beyond the line of sight: labeling the underlying surfaces. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7576, pp. 761–774. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33715-4_55

11. Gupta, S., Davidson, J., Levine, S., Sukthankar, R., Malik, J.: Cognitive mapping and planning for visual navigation. In: CVPR (2017)

12. He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR (2016)

13. Jaderberg, M., Simonyan, K., Zisserman, A., Kavukcuoglu, K.: Spatial transformer networks. In: NIPS (2015)

14. Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. In: ICLR (2015)

15. Laina, I., Rupprecht, C., Belagiannis, V., Tombari, F., Navab, N.: Deeper depth prediction with fully convolutional residual networks. In: 3DV (2016)

16. Liu, C., Schwing, A.G., Kundu, K., Urtasun, R., Fidler, S.: Rent3D: floor-plan priors for monocular layout estimation. In: CVPR (2015)

17. Liu, M., He, X., Salzmann, M.: Building scene models by completing and hallucinating depth and semantics. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9910, pp. 258–274. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46466-4_16

18. Máttyus, G., Wang, S., Fidler, S., Urtasun, R.: HD maps: fine-grained road segmentation by parsing ground and aerial images. In: CVPR (2016)

19. OpenStreetMap contributors: Planet dump. Retrieved from https://planet.osm.org, https://www.openstreetmap.org (2017)

20. Ros, G., Ramos, S., Granados, M., Bakhtiary, A., Vazquez, D., Lopez, A.M.: Vision-based offline-online perception paradigm for autonomous driving. In: WACV (2015)

21. Seff, A., Xiao, J.: Learning from maps: visual common sense for autonomous driving. arXiv:1611.08583 (2016)

22. Sengupta, S., Sturgess, P., Ladický, L., Torr, P.H.S.: Automatic dense visual semantic mapping from street-level imagery. In: IROS (2012)

23. Silberman, N., Hoiem, D., Kohli, P., Fergus, R.: Indoor segmentation and support inference from RGBD images. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7576, pp. 746–760. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33715-4_54

24. Song, S., Chandraker, M.: Robust scale estimation in real-time monocular SFM for autonomous driving. In: CVPR (2014)

25. Song, S., Lichtenberg, S.P., Xiao, J.: SUN RGB-D: a RGB-D scene understanding benchmark suite. In: CVPR (2015)

26. Song, S., Yu, F., Zeng, A., Chang, A.X., Savva, M., Funkhouser, T.: Semantic scene completion from a single depth image. In: CVPR (2017)

27. Sturgess, P., Alahari, K., Ladický, L., Torr, P.H.S.: Combining appearance and structure from motion features for road scene understanding. In: BMVC (2009)

28. Vijayanarasimhan, S., Ricco, S., Schmid, C., Sukthankar, R., Fragkiadaki, K.: SfM-net: learning of structure and motion from video. CoRR abs/1704.07804 (2017)

29. Wang, S., Fidler, S., Urtasun, R.: Holistic 3D scene understanding from a single geo-tagged image. In: CVPR (2015)

30. Wojek, C., Walk, S., Roth, S., Schindler, K., Schiele, B.: Monocular visual scene understanding: understanding multi-object traffic scenes. PAMI 36, 882–897 (2013)

31. Wu, Y., Wu, Y., Gkioxari, G., Tian, Y.: Building generalizable agents with a realistic and rich 3D environment. CoRR abs/1801.02209 (2018)

32. Yu, F., Koltun, V.: Multi-scale context aggregation by dilated convolutions. In: ICLR (2016)

33. Zbontar, J., LeCun, Y.: Stereo matching by training a convolutional neural network to compare image patches. JMLR 17, 1–32 (2016)

34. Zhai, M., Bessinger, Z., Workman, S., Jacobs, N.: Predicting ground-level scene layout from aerial imagery. In: CVPR (2017)

35. Zhao, H., Shi, J., Qi, X., Wang, X., Jia, J.: Pyramid scene parsing network. In: CVPR (2017)

36. Zhou, T., Brown, M., Snavely, N., Lowe, D.G.: Unsupervised learning of depth and ego-motion from video. In: CVPR (2017)

37. Zia, M.Z., Stark, M., Schindler, K.: Explicit occlusion modeling for 3D object class representations. In: CVPR (2013)

38. Zia, M.Z., Stark, M., Schindler, K.: Towards Scene Understanding with Detailed 3D Object Representations (2015)

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. IIS-1553116. The work was part of M. Zhai’s internship at NEC Labs America, in Cupertino.

© 2018 Springer Nature Switzerland AG

Cite this paper

Schulter, S., Zhai, M., Jacobs, N., Chandraker, M. (2018). Learning to Look around Objects for Top-View Representations of Outdoor Scenes. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds) Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science, vol 11219. Springer, Cham. https://doi.org/10.1007/978-3-030-01267-0_48

DOI: https://doi.org/10.1007/978-3-030-01267-0_48

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01266-3

Online ISBN: 978-3-030-01267-0

eBook Packages: Computer Science (R0)