Abstract

To address incorrect stripe center extraction caused by uneven light stripe width and large curvature variation, a fast light stripe center extraction method based on an adaptive template is proposed. First, an adaptive threshold method is used to reduce the image area to be convolved, and multi-threaded parallel computation is used to speed up the extraction of the light stripe center. Second, a multi-directional template method is used to estimate the width of the light stripe along the normal direction, so that the size of the Gaussian template can be determined automatically. Finally, the eigenvalues of the Hessian matrix are normalized to eliminate the multiple light stripe centers detected at both ends of the light stripe and to avoid extracting wrong centers at intersections or at positions of large curvature change, thus ensuring the continuity of the light stripe. The method is fast, robust, and precise, and it is suitable for vision measurement images, medical images, and remote sensing images.

1 Introduction

In vision measurement [1,2,3,4,5,6], for tasks such as geometric dimension measurement, 3D profilometry, and 3D object classification and recognition, a camera is usually used to capture the modulated light stripe, from which the contour data of the measured object are solved and reconstructed. Structured light vision sensors are widely used because of their simple structure, low cost, non-contact operation, and high efficiency. Extracting the center line of the light stripe is therefore a key step in 3D reconstruction. In medical image analysis [7,8,9,10,11], tasks such as blood vessel detection in retinal or fundus images, pulmonary nodule detection in CT images, and information enhancement of ultrasound images all require detecting edge contours or center lines for medical diagnosis. In remote sensing, the centers of curvilinear structures are extracted from aerial and satellite images to identify key features such as rivers and roads [12,13,14]. Fast and accurate line center extraction is therefore extremely important for visual measurement, and line center detection algorithms can also be applied to medical and remote sensing images.

At present, methods for extracting the center of a light stripe can be divided into two categories according to their accuracy. The first category has pixel-level accuracy and includes the extreme value method [15,16,17,18] and the skeleton thinning method [19]. The second has sub-pixel accuracy and includes the direction template method [20, 21], the gray centroid method [22, 23], the curve fitting method [24, 25], and the Hessian matrix method (Steger method) [26,27,28].

The extreme value method and the threshold method can detect and extract the light stripe center quickly, but their accuracy is low and they are vulnerable to noise. The skeleton thinning method is fast, but it has poor noise resistance and is prone to burrs. The direction template method is fast, but its robustness and accuracy are not as good as those of the curve fitting method and the Hessian matrix method. The curve fitting method has high accuracy, high stability, and good robustness, but it is slow and is not suitable for detecting light stripes in images with complex backgrounds. The gray centroid method is generally used for simple light stripe images, where it offers high accuracy, fast speed, and good stability. Steger’s method is widely used to extract the center of light stripes in visual measurement, the center line of blood vessels in medical images, and the center line of roads in remote sensing images because of its high accuracy and good noise resistance.

Steger’s method has high accuracy, good stability, and strong noise resistance, but the multiple convolutions it requires are computationally expensive, which makes it slow. In addition, if a single Gaussian convolution kernel size is used for the whole image, it is difficult to extract the center line accurately when the light stripe width is unevenly distributed and the curvature varies greatly, and the extracted stripe may even become discontinuous. At both ends of the light stripe, multiple center points are easily generated because the stripe breaks off and the gray level changes abruptly. Similarly, multiple centers appear when the light stripe lies at the edge of the image. If the light stripes intersect, the center line near the intersection often has a larger error.

Many papers have proposed improvements for these problems. For example, to reduce the time spent on repeated convolution with Gaussian templates, the processing range of the stripe image is reduced by morphology and image recognition [29, 30], which lowers the number of convolutions, or the extraction is accelerated by exploiting hardware performance [31]. To handle uneven light stripe width, an adaptive template is often used to avoid the stripe discontinuity caused by width changes. However, an adaptive template increases the number of convolutions and thus the extraction time.

To solve these problems, we propose an improved method based on the Hessian matrix. First, the original image is divided into several sub-blocks according to the number of CPU threads, and the extraction of the light stripe center is accelerated by multi-threaded parallel computing. Second, within each sub-block, morphological operations (erosion, dilation, connected-region analysis) and an adaptive threshold method are used to segment the light stripe. Third, the width of the light stripe is estimated from the binary image using multi-directional templates. This determines the size of the Gaussian convolution template, the image is convolved with the adaptive template, and candidate points of the light stripe center are determined by Steger’s method using the Hessian matrix. Finally, the eigenvalues of the Hessian matrix are normalized, and the candidate points are accepted or rejected as center points of the light stripe according to the discriminant conditions, which yields the light stripe center for the whole image.

2 Algorithms and Principles

2.1 Image Sub-block and Parallel Computing

When structured light is used for dimension measurement or three-dimensional topographic scanning, the modulated light stripe projected on the measured object changes with the shape of its surface. Where the surface curvature is large, or at corners of the object, the width of the light stripe cross section easily becomes uneven. The method based on the Hessian matrix needs an estimate of the light stripe width along the normal direction to set the size of the Gaussian convolution template. The best approach is therefore to use Gaussian convolution templates of several different sizes, which usually reduces the image processing speed. With current hardware, it is straightforward to recover this speed by exploiting the hardware itself, so we use a multi-threaded parallel computing method, as shown in Fig. 1.

We segment the image using the method shown in Fig. 2. The number of sub-images is chosen according to the number of CPU threads. Adjacent sub-images overlap so that the Gaussian convolution template can be applied near their borders; the overlapping area must be wider than the convolution template. Image segmentation not only speeds up the algorithm but also yields more accurate thresholds for binarization.

In our images, noise is more severe near the upper edge, while the other areas are more uniform. In addition, the light stripes in the image are generally distributed vertically. We therefore divide the image into four horizontal sub-images.
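The sub-block scheme can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `split_rows` and `process_in_strips`, the default overlap of 8 rows, and the representation of the image as a list of rows are all assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def split_rows(height, n_strips, overlap):
    """Return (start, stop) row ranges for n_strips horizontal strips.

    Each strip is extended by `overlap` rows on both sides and clipped
    to the image, so that a convolution template narrower than the
    overlap sees valid neighbours at the strip borders.
    """
    base = height // n_strips
    ranges = []
    for k in range(n_strips):
        core_start = k * base
        # The last strip absorbs any remainder rows.
        core_stop = height if k == n_strips - 1 else (k + 1) * base
        ranges.append((max(0, core_start - overlap),
                       min(height, core_stop + overlap)))
    return ranges


def process_in_strips(image, worker, n_strips=4, overlap=8):
    """Apply `worker` to each overlapping strip in a thread pool.

    `image` is a list of rows; `worker` is any callable taking a strip
    (hypothetical placeholder for the per-strip center extraction).
    """
    ranges = split_rows(len(image), n_strips, overlap)
    with ThreadPoolExecutor(max_workers=n_strips) as pool:
        results = list(pool.map(lambda r: worker(image[r[0]:r[1]]), ranges))
    return ranges, results
```

For a 576-row image split into four strips with an 8-row overlap, `split_rows(576, 4, 8)` gives `[(0, 152), (136, 296), (280, 440), (424, 576)]`. In CPython, a thread pool only yields a speedup when the worker releases the global interpreter lock, as native image-processing routines typically do; a process pool is an alternative otherwise.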

2.2 Feature Segmentation of Light Stripe

Before image convolution, region segmentation is used to segment the image automatically. Because the gray values of the light stripe and the background differ greatly, the segmentation threshold is determined automatically by the maximum between-class variance method (Otsu method). To improve the running speed, we simplify it as follows.

For an image, if the maximum gray value is \({L_{\max }}\) and the minimum gray value is \({L_{\min }}\), then the distribution range of gray value is \(\left[ {{L_{\min }},{L_{\max }}} \right] \). If the number of pixels with gray value of L is \({n_L}\), then the total number of pixels is \(N = \sum \limits _{i = {L_{\min }}}^{i = {L_{\max }}} {{n_i}} \).

By normalizing the gray value, the following results can be obtained:

The histogram defined by Eq. (1) is called a 2D histogram. There are \({L_{\max }} - {L_{\min }}\) straight lines that are perpendicular to the main diagonal line in a 2D histogram, as shown in Fig. 3.

Assume a gray value threshold \(\tau \). It divides the pixels in the stripe image into two classes, \(\left[ {{L_{\min }},\tau } \right] \) and \(\left[ {\tau ,{L_{\max }}} \right] \). The probabilities of the two classes are

Then, the mean value is

where \(\mu \left( \tau \right) = \sum \limits _{i = {L_{\min }}}^\tau {i{p_i}} ,{\mu _\tau } = \sum \limits _{i = {L_{\min }}}^{{L_{\max }}} {i{p_i}} \).

Then, the variance of the two groups of data is:

Between-class variance \(\sigma _B^2\) can be computed using:

The optimal threshold \({\tau ^ * }\) can be selected from

After \({\tau ^ * }\) is obtained, all pixels can be classified using

where \(f\left( {x,y} \right) \) is the segmented image and \(I\left( {x,y} \right) \) is the gray value of the image at the point \(\left( {x,y} \right) \).
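The threshold search described in this section can be sketched as below. The code follows the standard Otsu criterion restricted to the occupied gray range \(\left[ {{L_{\min }},{L_{\max }}} \right] \), using the equivalent form \(\sigma _B^2 = {\left( {{\mu _\tau }\omega - \mu \left( \tau \right) } \right) ^2}/\left( {\omega \left( {1 - \omega } \right) } \right) \), where \(\omega \) is the probability of the lower class. The function names and the convention that pixels strictly above \({\tau ^ * }\) are labeled 1 are assumptions of this sketch, not details confirmed by the paper.

```python
def otsu_threshold(pixels):
    """Return the gray value tau* that maximizes between-class variance.

    `pixels` is an iterable of integer gray values. Only the range
    [L_min, L_max] actually present in the data is scanned.
    """
    pixels = list(pixels)
    l_min, l_max = min(pixels), max(pixels)
    hist = [0] * (l_max - l_min + 1)
    for v in pixels:
        hist[v - l_min] += 1
    n = len(pixels)
    p = [h / n for h in hist]                      # normalized histogram p_i
    mu_total = sum((l_min + i) * p_i for i, p_i in enumerate(p))

    best_tau, best_var = l_min, -1.0
    w0 = 0.0       # probability of the class [L_min, tau]
    mu_cum = 0.0   # cumulative first moment mu(tau)
    for i, p_i in enumerate(p[:-1]):
        w0 += p_i
        mu_cum += (l_min + i) * p_i
        w1 = 1.0 - w0
        if w0 <= 0.0 or w1 <= 0.0:
            continue
        var_b = (mu_total * w0 - mu_cum) ** 2 / (w0 * w1)
        if var_b > best_var:
            best_var, best_tau = var_b, l_min + i
    return best_tau


def binarize(image, tau):
    """Label pixels above tau as 1 (stripe) and the rest as 0 (assumed convention)."""
    return [[1 if v > tau else 0 for v in row] for row in image]
```

Because the comparison is strict, ties across empty histogram bins resolve to the lowest gray value that attains the maximum.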

2.3 Width Estimation of Light Stripe

After we get the binary image, we need to determine the size of the convolution template for the next Gauss filtering. We propose a directional template method to determine the width of the cross section of the light stripe.

Pixels with value 1 in the binary image are regarded as candidate points of the light stripe. Four directional templates are convolved with the light stripe image at each candidate point, and the minimum of the four responses is taken as the light stripe width through that point (Fig. 4).

where s is the compensation coefficient, defined as the ratio of the light stripe width in the gray image to that in the binary image. In this paper, s is \(\sqrt{2} \).
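One way to realize the four-direction width estimate is to measure the run of stripe pixels through each candidate point along the horizontal, vertical, and two diagonal directions. The sketch below does this with explicit run lengths rather than template convolution; that substitution, the scaling of diagonal runs by \(\sqrt{2} \) to obtain a length in pixels, and the function names are assumptions of the example.

```python
import math

# Horizontal, vertical, and the two diagonal directions as (d_row, d_col).
DIRECTIONS = [(0, 1), (1, 0), (1, 1), (1, -1)]


def run_length(binary, r, c, dr, dc):
    """Length of the run of 1-pixels through (r, c) along direction (dr, dc)."""
    rows, cols = len(binary), len(binary[0])
    count = 1
    for sign in (1, -1):
        rr, cc = r + sign * dr, c + sign * dc
        while 0 <= rr < rows and 0 <= cc < cols and binary[rr][cc] == 1:
            count += 1
            rr += sign * dr
            cc += sign * dc
    # A diagonal step covers sqrt(2) pixels (assumed geometric correction).
    return count * math.hypot(dr, dc)


def stripe_width(binary, r, c, s=math.sqrt(2)):
    """Estimated stripe width at a candidate pixel: s times the shortest run."""
    if binary[r][c] != 1:
        return 0.0
    return s * min(run_length(binary, r, c, dr, dc) for dr, dc in DIRECTIONS)
```

For a vertical stripe three pixels wide, the shortest run is the horizontal one, so the estimate is \(3s\); the result would then be used to pick the Gaussian template size for that region.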

2.4 Calculation of the Hessian Matrix

In general, the intensity profile \(f\left( x \right) \) of a light stripe can be described by a Gaussian function as shown in Fig. 5. The Gauss function is as follows.

where A represents the intensity of the light stripe, and \({\sigma }\) represents the standard deviation width of light stripe.

To filter the noise in the image, the image \(I\left( {x,y} \right) \) is convolved with the Gaussian kernel \({g_\sigma }(x,y)\) and its differential operators to calculate the derivatives of \(I\left( {x,y} \right) \). The center point of the light stripe is then given by the zero crossing of the first-order derivative of the convolution result, where the second-order derivative also reaches a local extremum.

Therefore, we use the Gauss filter to eliminate the noise in the image. The Gauss filter is as follows.

The first-order partial derivatives of the Gaussian function are expressed by

The second-order partial derivatives of the Gaussian function are denoted by

According to Eqs. (11) and (12), the first-order and second-order derivatives of the image can be obtained by convolving the image with the corresponding derivatives of the Gaussian kernel.

where \({I_{x}}\left( {x,y} \right) \), \({I_y}\left( {x,y} \right) \) are the first-order partial derivatives of the image \(I\left( {x,y} \right) \) along the x and y directions, and \({I_{xx}}\left( {x,y} \right) \), \({I_{yy}}\left( {x,y} \right) \), and \({I_{xy}}\left( {x,y} \right) \) are the second-order partial derivatives of the image \(I\left( {x,y} \right) \), respectively.
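For reference, the standard closed forms of an isotropic 2D Gaussian kernel and its partial derivatives are given below, with \(*\) denoting convolution. They are stated here under the assumption that the kernel in this section is the usual normalized Gaussian; the notation of the original equations may differ.

\[
{g_\sigma }(x,y) = \frac{1}{{2\pi {\sigma ^2}}}\exp \left( { - \frac{{{x^2} + {y^2}}}{{2{\sigma ^2}}}} \right)
\]

\[
\frac{{\partial {g_\sigma }}}{{\partial x}} = - \frac{x}{{{\sigma ^2}}}{g_\sigma },\qquad \frac{{\partial {g_\sigma }}}{{\partial y}} = - \frac{y}{{{\sigma ^2}}}{g_\sigma }
\]

\[
\frac{{{\partial ^2}{g_\sigma }}}{{\partial {x^2}}} = \frac{{{x^2} - {\sigma ^2}}}{{{\sigma ^4}}}{g_\sigma },\qquad \frac{{{\partial ^2}{g_\sigma }}}{{\partial {y^2}}} = \frac{{{y^2} - {\sigma ^2}}}{{{\sigma ^4}}}{g_\sigma },\qquad \frac{{{\partial ^2}{g_\sigma }}}{{\partial x\partial y}} = \frac{{xy}}{{{\sigma ^4}}}{g_\sigma }
\]

\[
{I_x} = \frac{{\partial {g_\sigma }}}{{\partial x}} * I,\qquad {I_{xx}} = \frac{{{\partial ^2}{g_\sigma }}}{{\partial {x^2}}} * I,\qquad {I_{xy}} = \frac{{{\partial ^2}{g_\sigma }}}{{\partial x\partial y}} * I
\]

The expressions for \({I_y}\) and \({I_{yy}}\) follow by exchanging the roles of x and y.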

So, the Hessian matrix of any point in an image is given by

The eigenvalues of the Hessian matrix are the maximum and minimum of the second-order directional derivatives of the image at this point. The corresponding eigenvectors are the directional vectors of the two extremes, and the two vectors are orthogonal. For light stripe images, the normal direction is the eigenvector corresponding to the larger absolute eigenvalue of the Hessian matrix, and the eigenvector corresponding to the smaller absolute eigenvalue of the Hessian matrix is perpendicular to the normal direction.

Suppose \({\lambda _1}\) and \({\lambda _2}\) are the two eigenvalues of the Hessian matrix, and \({n_x}\) and \({n_y}\) are their corresponding eigenvectors. The eigenvalue \({\lambda _1}\) approaches zero, and the other eigenvalue \({\lambda _2}\) is far less than zero because the gray value of the edge of the light stripe is much larger than that of the background. Namely, \(\left\| {{\lambda _1}} \right\| \approx 0,{\lambda _2} \ll 0\).
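For a symmetric \(2 \times 2\) Hessian, the eigenvalues and the normal direction have a closed form, which avoids calling a general eigen-solver at every pixel. The following is a small sketch under that observation; the function name `hessian_eigen` and its return order are illustrative choices.

```python
import math


def hessian_eigen(ixx, ixy, iyy):
    """Eigen-decomposition of the symmetric matrix [[ixx, ixy], [ixy, iyy]].

    Returns (lam_axis, lam_normal, (nx, ny)), where lam_normal is the
    eigenvalue with the larger absolute value and (nx, ny) is its unit
    eigenvector, i.e. the estimated normal direction of the stripe.
    """
    half_trace = 0.5 * (ixx + iyy)
    root = math.hypot(0.5 * (ixx - iyy), ixy)
    e_plus, e_minus = half_trace + root, half_trace - root
    if abs(e_plus) >= abs(e_minus):
        lam_normal, lam_axis = e_plus, e_minus
    else:
        lam_normal, lam_axis = e_minus, e_plus

    # Both candidates satisfy (H - lam*I) v = 0; take the better conditioned one.
    cand_a = (ixy, lam_normal - ixx)
    cand_b = (lam_normal - iyy, ixy)
    vx, vy = max(cand_a, cand_b, key=lambda v: math.hypot(v[0], v[1]))
    norm = math.hypot(vx, vy)
    if norm == 0.0:
        # Isotropic case (H is a multiple of the identity): direction is arbitrary.
        return lam_axis, lam_normal, (1.0, 0.0)
    return lam_axis, lam_normal, (vx / norm, vy / norm)
```

For a bright vertical stripe, for example `hessian_eigen(-10.0, 0.0, -0.1)`, the large negative eigenvalue is paired with a normal along the x axis, while the small eigenvalue belongs to the stripe axis. The sign of the eigenvector is arbitrary.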

Satisfying the above conditions is a necessary condition for an image point to be the center of the light stripe. We therefore need two thresholds to judge the two eigenvalues. If the thresholds are set too large, valid centers of the light stripe are lost and the light stripe becomes discontinuous. If they are set too small, multiple light stripe centers are generated on the same cross-section of the light stripe.

In order to solve the above problems, we use the data normalization method commonly used in the neural network to normalize the above two eigenvalues. Therefore, we propose a normalized model based on Gauss function and an arc-tangent function.

2.5 Normalization of Hessian Matrix Eigenvalues and Discrimination of Light Stripe Centers

For the smaller eigenvalue \({\lambda _1}\), which tends to zero, we use a standard Gaussian function for normalization, so that the normalized value tends to 1 as \({\lambda _1}\) tends to zero. For the eigenvalue \({\lambda _2}\), which has the larger absolute value, we use the arc-tangent function, so that the normalized value approaches 1 as the absolute value of \({\lambda _2}\) increases. The two normalization functions are as follows.

where \({\lambda _1}\) is the eigenvalue of the Hessian matrix corresponding to the axis direction of the light stripe. The gray value at the light stripe center varies only slightly along its axis direction. a is a constant that we need to set.

where \({\lambda _2}\) is the eigenvalue of the Hessian matrix corresponding to the normal direction of the light stripe. The gray value of the light stripe center varies greatly in its normal direction. b is also a constant that we need to set.

We use the product of these two normalized functions as the final normalized function. The proposed function is illustrated in Fig. 6.

It should be noted that the values of a and b here are related to the stripe width of the actual image. We usually use the following formula to get the value.

where N is the number of pixels with gray value 1 in the segmented light stripe image. For this paper, a is set to 20 and b is set to \(-0.1\).
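The behavior of the combined score can be illustrated with the sketch below. The exact functional forms are assumptions chosen only to match the description in this section: a Gaussian \(\exp \left( { - \lambda _1^2/\left( {2{a^2}} \right) } \right) \) that tends to 1 as \({\lambda _1}\) tends to zero, and a scaled arc-tangent \(\left( {2/\pi } \right) \arctan \left( {b{\lambda _2}} \right) \) that approaches 1 as \(\left| {{\lambda _2}} \right| \) grows for negative \({\lambda _2}\) and negative b. The clamping of negative values to zero and the function names are also illustrative.

```python
import math


def normalize_axis(lam1, a=20.0):
    """Gaussian normalization (assumed form): 1 when lam1 = 0, decaying with |lam1|."""
    return math.exp(-(lam1 * lam1) / (2.0 * a * a))


def normalize_normal(lam2, b=-0.1):
    """Arc-tangent normalization (assumed form), clamped to [0, 1).

    With b < 0, a strongly negative lam2 (bright stripe) maps close to 1,
    and a positive lam2 (dark line) is clamped to 0.
    """
    return max(0.0, (2.0 / math.pi) * math.atan(b * lam2))


def center_score(lam1, lam2, a=20.0, b=-0.1):
    """Product of the two normalized eigenvalue responses."""
    return normalize_axis(lam1, a) * normalize_normal(lam2, b)
```

A candidate point would then be accepted when its score exceeds a single threshold, which replaces the two separate eigenvalue thresholds discussed earlier; the threshold value itself is not specified here.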

To obtain the sub-pixel center point of light stripe, let \(\left( {x + t{n_x},y + t{n_y}} \right) \) be the sub-pixel coordinates of the center coordinate \(\left( {x,y} \right) \) along the normal direction of the unit vector \(\left( {{n_x},{n_y}} \right) \) deduced by the Hessian matrix. The Taylor expansion of \(I\left( {x + t{n_x},y + t{n_y}} \right) \) at \(I\left( {x,y} \right) \) is

where \({I_x}\left( {x,y} \right) \), \({I_y}\left( {x,y} \right) \) are the convolution results of the first-order partial derivatives in the x and y directions, and t is unknown. Because the sub-pixel center lies along the normal vector of the light stripe, the derivative of Eq. (19) with respect to t is set to 0, which gives

If \(t{n_x} \in \left[ { - 1/2,1/2} \right] \) and \(t{n_y} \in \left[ { - 1/2,1/2} \right] \), then \(\left( {x + t{n_x},y + t{n_y}} \right) \) is the sub-pixel center point of the light stripe.
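The sub-pixel step can be sketched as follows, using the standard result from Steger's detector that setting the derivative of the second-order Taylor expansion along the normal to zero gives \(t = - \left( {{n_x}{I_x} + {n_y}{I_y}} \right) /\left( {n_x^2{I_{xx}} + 2{n_x}{n_y}{I_{xy}} + n_y^2{I_{yy}}} \right) \). The function name and the choice to return `None` for rejected points are assumptions of this example.

```python
def subpixel_center(x, y, ix, iy, ixx, ixy, iyy, nx, ny):
    """Refine a pixel-level candidate (x, y) to a sub-pixel stripe center.

    ix, iy, ixx, ixy, iyy are the Gaussian-derivative responses at (x, y);
    (nx, ny) is the unit normal from the Hessian. Returns the refined
    coordinates, or None if the extremum falls outside the current pixel.
    """
    denom = nx * nx * ixx + 2.0 * nx * ny * ixy + ny * ny * iyy
    if denom == 0.0:
        return None  # no curvature along the normal, offset undefined
    t = -(nx * ix + ny * iy) / denom
    if abs(t * nx) <= 0.5 and abs(t * ny) <= 0.5:
        return (x + t * nx, y + t * ny)
    return None
```

As a check, a parabolic profile \( - {\left( {x - 0.3} \right) ^2}\) sampled at \(x = 0\) has first derivative 0.6 and second derivative \(-2\), so with the normal along x the offset is \(t = 0.3\), which recovers the true peak position.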

3 Experiment

3.1 Extraction of Light Stripe Center in Visual Measurement of Rails

Line-structured light vision sensors are often used in rail dimension measurement. Fast measurement of rail dimensions is very important for ensuring the safety of train operation. Rails are usually installed outdoors, where the lighting is always changing, which easily affects the accuracy of light stripe center extraction. We validate the proposed method by extracting light stripes from a rail dimension measurement image, shown in Fig. 7.

From Fig. 7, we can see that the background noise of the stripe image is complex and the light stripe width is not uniform. The light stripe is visibly thinner at both ends. In the area labeled 5 in Fig. 7, the curvature of the light stripe changes greatly. The center line of the light stripe cannot be extracted directly using the traditional Hessian matrix method.

(a) (b) (c) (d) Extraction of the center line of the light stripe in the area image labeled 1, 2, 3 and 4 in Fig. 7, respectively

From Fig. 8(a), we can see that the background noise in the stripe area labeled 1 in Fig. 7 is more severe. The proposed method can still extract the center line of the light stripe correctly.

From Fig. 8(b), we can see that in the area labeled 2 in Fig. 7, the light stripe narrows markedly at its end. The proposed method can still extract the center line of the light stripe correctly.

From Fig. 9(a), we can see that when the curvature of the stripe curve changes greatly, the center line of the light stripe can still be correctly extracted.

From Fig. 9(b), we can see that when the width of the stripe curve is unevenly distributed, the center line of the light stripe can still be correctly extracted.

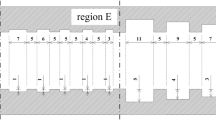

3.2 Extraction of Multiple Light Stripe Centers

We use the method based on Hessian matrix and the method proposed in this paper to extract the center lines of multiple light stripes in the image to verify the extraction accuracy.

From Fig. 10, we can see that the center points of the two light stripes extracted by the Hessian matrix method are clearly incorrect: there are many spurious center points at both ends of the light stripes, and these wrong center points need to be deleted. One option is to remove the center points at both ends of the image directly. This is simple, but it easily retains too many wrong center points or deletes too many correct ones.

(a) Extraction of the center line of the light stripe in the area image labeled 1 in Fig. 10. (b) Extraction of the center line of the light stripe in the area image labeled 2 in Fig. 10. (c) Extraction of the center line of the light stripe in the area image labeled 3 in Fig. 10. (d) Extraction of the center line of the light stripe in the area image labeled 4 in Fig. 10.

From Fig. 11, we can see that after the eigenvalues of the Hessian matrix are normalized as proposed in this paper, the excess light stripe centers at both ends are clearly removed. It should be noted that the number of center points deleted depends on the size of the Gauss filter template.

3.3 Extraction of Intersecting Light Stripe Centers

We use the method based on the Hessian matrix and the method proposed in this paper, respectively, to extract the center lines of intersecting light stripes.

From Fig. 12(a), we can see that at the intersection of the two curves, the extracted feature points are not the centers of the two quadratic curves, so the wrong feature points need to be eliminated. The direction of change of the points on each conic is continuous, whereas the direction of the wrong feature points extracted at the intersection changes abruptly, and the angle between the direction at the abrupt change and the direction vectors of the adjacent points increases.

As can be seen from Fig. 12(b), the proposed method filters out not only the multiple centers at the four endpoints of the two curved stripes but also the wrong centers at the intersection. If the center of the light stripe at the intersection is needed, the correctly extracted centers can be used to fit each curve. The intersection point is then obtained from the fitted curves, which gives the correct center line at the intersection.

3.4 Extraction of Curve Center in Medical Images

The adaptive template and Hessian matrix eigenvalue normalization methods proposed in this paper are used to extract the center lines of vessels in color retinal images, in order to verify the effectiveness of the proposed method on complex images with multiple center lines.

Figure 13(a) shows a color image of retinal vessels, and Fig. 13(b) shows the extraction result. It can be seen that for an image without lesions, the blood vessels are segmented completely.

3.5 Comparisons of Extraction Time

The experiments were run on a notebook computer with an i5-3210M CPU (dual-core, four threads) and 8 GB of memory, with code compiled in Visual Studio 2015. The test image is the 768 × 576 light stripe image in Fig. 7. The computation times are shown in Table 1.

Although the time of the proposed method is comparable to that of Steger’s method (the speed of the proposed method is a little slower), the calculation time can be reduced by increasing the number of threads in the CPU or using GPU acceleration.

4 Conclusion

A fast sub-pixel center extraction method based on adaptive template is proposed. The adaptive threshold method is used to reduce the image convolution area, and the multi-thread parallel operation is used to improve the speed of stripe center extraction. The multi-directional template is used to estimate the width of the light stripe along the normal direction. The size of the Gaussian convolution template is determined according to the actual width. The Hessian matrix is calculated and its eigenvalues are normalized to establish the criterion for the center of the light stripe. This method has fast processing speed, good robustness and high precision. It is suitable for vision measurement image, medical image, remote sensing image and other fields.

References

Liu, Z., Li, X.J., Yin, Y.: On-site calibration of line-structured light vision sensor in complex light environments. Opt. Express 23(23), 248310–248326 (2015)

O’Toole, M., Mather, J., Kutulakos, K.N.: 3D shape and indirect appearance by structured light transport. IEEE Trans. Pattern Anal. Mach. Intell. 38(7) (2016)

Lilienblum, E., Al-Hamadi, A.: A structured light approach for 3-D surface reconstruction with a stereo line-scan system. IEEE Trans. Instrum. Meas. 64(5) (2015)

Zhang, S., Yau, S.: Absolute phase-assisted three-dimensional data registration for a dual-camera structured light system. Appl. Opt. 47(17), 3134–3142 (2008)

Liu, Z., Li, F., Huang, B., Zhang, G.: Real-time and accurate rail wear measurement method and experimental analysis. J. Opt. Soc. Am. A 31(8), 1721–1729 (2014)

Gong, Z., Sun, J., Zhang, G.: Dynamic structured-light measurement for wheel diameter based on the cycloid constraint. Appl. Opt. 55(1), 198–207 (2016)

Xiong, G., Zhou, X., Degterev, A., Ji, L.: Automated neurite labeling and analysis in fluorescence microscopy images. Cytometry Part A 69, 494–505 (2006)

Faizal, K.Z., Kavitha, V.: An effective segmentation approach for lung CT images using histogram thresholding with EMD refinement. In: Sathiakumar, S., Awasthi, L., Masillamani, M., Sridhar, S. (eds.) Internet Computing and Information Communications. AISC, vol. 216, pp. 483–489. Springer, New Delhi (2014). https://doi.org/10.1007/978-81-322-1299-7_45

Bae, K.T., Kim, J.S., Na, Y.H., et al.: Pulmonary nodules: automated detection on CT images with morphologic matching algorithm-preliminary results. Radiology 236(1), 286–293 (2005)

Jonathan, L., Evan, S., Trevor, D.: Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39(4), 640–651 (2017)

Idris, S.A., Jafar, F.A.: Image enhancement filter evaluation on corrosion visual inspection. In: Sulaiman, H.A., Othman, M.A., Othman, M.F.I., Rahim, Y.A., Pee, N.C. (eds.) Advanced Computer and Communication Engineering Technology. LNEE, vol. 315, pp. 651–660. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-07674-4_61

Cai, W., Dong, A., Zhang, X.: Crack width detection of the concrete surfaced based on images. In: Wu, Y. (ed.) Advances in Computer, Communication, Control and Automation. LNEE, vol. 121, pp. 625–632. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-25541-0_79

Hinz, S., Baumgartner, A.: Automatic extraction of urban road networks from multi-view aerial imagery. ISPRS J. Photogramm. Remote Sens. 23, 83–98 (2003)

Hinz, S., Wiedemann, C.: Increasing efficiency of road extraction by self-diagnosis. Photogramm. Eng. Remote Sens. 70, 1457–1466 (2004)

Canny, J.: A computational approach to edge detection. IEEE. Trans. Pattern Anal. 6, 679–698 (1986)

Perona, P., Malik, J.: Scale-space and edge detection using anisotropic diffusion. IEEE. Trans. Pattern Anal. 12, 629–639 (1990)

Weijer, J.V.D., Gevers, T., Geusebroek, J.M.: Edge and corner detection by photometric quasi-invariants. IEEE. Trans. Pattern Anal. 27, 625–630 (2005)

Tsai, L.W., Hsieh, J.W., Fan, K.C.: Vehicle detection using normalized color and edge map. IEEE Trans. Image Process. 16, 850–864 (2007)

Suárez, J.P., Carey, G.F., Plaza, A.: Graph-based data structures for skeleton-based refinement algorithms. Commun. Numer. Methods Eng. 17(12), 903–910 (2001)

Ziou, D.: Line detection using an optimal IIR filter. Pattern Recogn. 24, 465–478 (1991)

Laligant, O., Truchetet, F.: A nonlinear derivative scheme applied to edge detection. IEEE. Trans. Pattern Anal. 32, 242–257 (2010)

Shortis, M.R., Clarke, T.A., Short, T.: A comparison of some techniques for the subpixel location of discrete target images. In: Proceedings of the SPIE, vol. 2350, pp. 239–250 (1994)

Luengo-Oroz, M.A., Faure, E., Angulo, J.: Robust iris segmentation on uncalibrated noisy images using mathematical morphology. Image Vis. Comput. 28, 278–284 (2010)

Xu, G.S.: Sub-pixel edge detection based on curve fitting. In: Proceedings of the Second International Conference on Information and Computing Science (Ulsan, Korea, September 2009), pp. 373–375 (2009)

Goshtasby, A., Shyu, H.L.: Edge detection by curve fitting. Image Vis. Comput. 13, 169–177 (1995)

Steger, C.: An unbiased detector of curvilinear structures. IEEE Trans. Pattern Anal. 20, 113–125 (1998)

Qi, L., Zhang, Y., Zhang, X., Wang, S., Xie, F.: Statistical behavior analysis and precision optimization for the laser stripe center detector based on Steger’s algorithm. Opt. Express 21, 13442–13449 (2013)

Lemaitre, C., Perdoch, M., Rahmoune, A., Matas, J., Miteran, J.: Detection and matching of curvilinear structures. Pattern Recogn. 44, 1514–1527 (2011)

Hu, K., Zhou, F.Q., Zhang, G.J.: Fast extraction method for sub-pixel center of structured light stripe. Chin. J. Sci. Instrum. 27(10), 1326–1329 (2006)

Wang, S., Ye, A., Hao, G.: Autonomous pallet localization and picking for industrial forklifts based on the line structured light. In: IEEE International Conference on Mechatronics and Automation (2016)

Xie, F., Zhang, Y., Wang, S., et al.: Robust extrication method for line structured-light stripe. Optik 124(23), 6400–6403 (2013)

Guan, X., Sun, L., Li, X., Su, J., Hao, Z., Lu, X.: Global calibration and equation reconstruction methods of a three dimensional curve generated from a laser plane in vision measurement. Optics Express 22(18), 22043 (2014)

Acknowledgement

National Science Fund for Distinguished Young Scholars of China (51625501).

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Zou, W., Wei, Z. (2019). A Fast Adaptive Subpixel Extraction Method for Light Stripe Center. In: Zhao, Y., Barnes, N., Chen, B., Westermann, R., Kong, X., Lin, C. (eds) Image and Graphics. ICIG 2019. Lecture Notes in Computer Science(), vol 11901. Springer, Cham. https://doi.org/10.1007/978-3-030-34120-6_64

DOI: https://doi.org/10.1007/978-3-030-34120-6_64

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34119-0

Online ISBN: 978-3-030-34120-6

eBook Packages: Computer Science (R0)