Abstract

This chapter explores existing data reproducibility and robustness initiatives from a cross-section of large funding organizations, granting agencies, policy makers, journals, and publishers with the goal of understanding areas of overlap and potential gaps in recommendations and requirements. Indeed, vigorous stakeholder efforts to identify and address irreproducibility have resulted in the development of a multitude of guidelines but with little harmonization. This likely confuses the scientific community, and the guidelines may become a barrier to strengthening quality standards rather than a resource that can be meaningfully implemented. Guidelines are also often framed by funding bodies and publishers as recommendations instead of requirements in order to accommodate scientific freedom, creativity, and innovation. However, without enforcement, this may contribute to uneven implementation. The chapter concludes with an analysis that provides recommendations for future guidelines and policies to enhance reproducibility and to align on a consistent strategy moving forward.

1 Introduction

The foundation of many health care innovations is preclinical biomedical research, a stage of research that precedes testing in humans to assess feasibility and safety and which relies on the reproducibility of published discoveries to translate research findings into therapeutic applications.

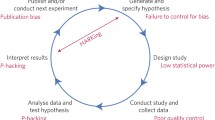

However, researchers are facing challenges while attempting to use or validate the data generated through preclinical studies. Independent attempts to reproduce studies related to drug development have identified inconsistencies between published data and the validation studies. For example, in 2011, Bayer HealthCare was unable to validate the results of 43 out of 67 studies (Prinz et al. 2011), while Amgen reported its inability to validate 47 out of 53 seminal publications that claimed a new drug discovery in oncology (Begley and Ellis 2012).
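To put the two reports on a common scale, the counts quoted above can be converted to percentages. The snippet below is a minimal sketch that uses only the counts given in the text; the function name `unvalidated_share` is an illustrative choice and does not come from the cited reports.

```python
def unvalidated_share(not_validated: int, total: int) -> float:
    """Return the percentage of studies that could not be validated."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * not_validated / total


# Counts as quoted in the text (Prinz et al. 2011; Begley and Ellis 2012).
reports = {"Bayer HealthCare": (43, 67), "Amgen": (47, 53)}

for name, (failed, total) in reports.items():
    print(f"{name}: {unvalidated_share(failed, total):.1f}% not validated")
```

By this calculation, roughly 64% of the studies in the Bayer HealthCare report and roughly 89% of the publications in the Amgen report could not be validated.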

Researchers attribute this inability to validate study results to issues of robustness and reproducibility. Although defined with some nuanced variation across research groups, reproducibility refers to achieving similar results when a study is repeated under similar conditions, while robustness means that similar results can be obtained from an experiment even when there are slight variations in test conditions or reagents (CURE Consortium 2017).

Several essential factors could account for a lack of reproducibility and robustness such as incomplete reporting of basic elements of experimental design, including blinding, randomization, replication, sample size calculation, and the effect of sex differences. Inadequate reporting may be due to poor training of the researchers to highlight and present technical details, insufficient reporting requirements, or page limitations imposed by the publications/journals. This results in the inability to replicate or further use study results since the necessary information to do so is lacking.

The limited number of opportunities and platforms to contradict previously published work is also a contributing factor. Few platforms allow researchers to publish scientific papers that point out shortcomings of previously published work or highlight a negative effect of any component identified during the study. Such data are as essential and informative as any positive data or findings from a study, and their limited availability can contribute to irreproducibility.

Difficulty in accessing unpublished data is also a contributing factor. Negative or validation data are rarely welcomed by high-impact journals, and unpublished dark data related to published results (such as health records or performance on tasks which did not result in a significant finding) may comprise essential details that may help to reproduce the results of the study or build on its results.

For the past decade, stakeholders, such as researchers, journals, funders, and industry leaders, have been aggressively involved in identifying and taking steps to address the issue of reproducibility and robustness of preclinical research findings. These efforts include maintaining and, in some cases, strengthening scientific quality standards including examining and developing policies that guide research, increasing requirements for reagent and data sharing, and issuing new guidelines for publication.

One important step that stakeholders in the scientific community have taken is to support the development and implementation of guidelines. However, the realm of influence for a given type of stakeholder has been limited. For example, journals usually issue guidelines related to reporting of methods and data, whereas funders may issue guidelines pertaining primarily to study design and, increasingly, data management and availability. In addition, the enthusiasm with which stakeholders have tried to address the “reproducibility crisis” has led to the generation of a multitude of guidelines. This has resulted in a littered landscape where there is overlap without harmonization, gaps in recommendations or requirements that may enhance reproducibility, and slow updating of guidelines to meet the needs of promising, rapidly evolving computational approaches. Worse yet, the perceived increased burden of meeting requirements and the lack of clarity around which guidelines to follow reduce compliance, as they may leave researchers, publishers, and funding organizations confused and overwhelmed. The goal of this chapter is to compile and review the current state of existing guidelines to understand the overlaps, perform a gap analysis on what may still be missing, and make recommendations for the future of guidelines to enhance reproducibility in preclinical research.

2 Guidelines and Resources Aimed at Improving Reproducibility and Robustness in Preclinical Data

2.1 Funders/Granting Agencies/Policy Makers

Many funders and policy makers have acknowledged the issue of irreproducibility and are developing new guidelines and initiatives to support the generation of data that are robust and reproducible. This section highlights guidelines, policies, and resources directly related to this issue in preclinical research by the major international granting institutions and is not intended to be an exhaustive review of all available guidelines, policies, and resources. Instead, the organizations reviewed represent a cross-section of many of the top funding organizations and publishers in granting volume and visibility. Included also is a network focused specifically on robustness, reproducibility, translatability, and reporting transparency of preclinical data with membership spanning across academia, industry, and publishing. Requirements pertaining to clinical research are included when guidance documents are also used for preclinical research. The funders, granting agencies, and policy makers surveyed included:

- National Institutes of Health (NIH) (Collins and Tabak 2014; LI-COR 2018; Krester et al. 2017; NIH 2015, 2018a, b)
- Medical Research Council (MRC) (Medical Research Council 2012a, b, 2016a, b, 2019a, b, c)
- The World Health Organization (WHO) (World Health Organization 2006, 2010a, b, 2019)
- Wellcome Trust (Wellcome Trust 2015, 2016a, b, 2018a, b, 2019a, b; The Academy of Medical Sciences 2015, 2016a, b; Universities UK 2012)
- Canadian Institutes of Health Research (CIHR) (Canadian Institutes of Health Research 2017a, b)
- Deutsche Forschungsgemeinschaft (DFG)/German Research Foundation (Deutsche Forschungsgemeinschaft 2015, 2017a, b)
- European Commission (EC) (European Commission 2018a, b; Orion Open Science 2019)
- Institut National de la Santé et de la Recherche Médicale (INSERM) (French Institute of Health and Medical Research 2017; Brizzi and Dupre 2017)
- US Department of Defense (DoD) (Department of Defense 2017a, b; National Institutes of Health Center for Information Technology 2019)
- National Health and Medical Research Council (NHMRC) (National Health and Medical Research Council 2018a, b, 2019; Boon and Leves 2015)
- Center for Open Science (COS) (Open Science Foundation 2019a, b, c, d; Aalbersberg 2017)
- Howard Hughes Medical Institute (HHMI) (ASAPbio 2018)
- Bill & Melinda Gates Foundation (Gates Open Research 2019a, b, c, d, e)
- Innovative Medicines Initiative (IMI) (Innovative Medicines Initiative 2017, 2018; Community Research and Development Information Service 2017; European Commission 2017)
- Preclinical Data Forum Network (European College of Neuropsychopharmacology 2019a, b, c, d)

2.2 Publishers/Journal Groups

Journal publishers and groups have been revising author instructions and publication policies and guidelines, with an emphasis on detailed reporting of study design, replicates, statistical analyses, reagent identification, and validation. Such revisions are expected to encourage researchers to publish robust and reproducible data (National Institutes of Health 2017). Those publishers and groups considered in the analysis were:

- NIH Publication Guidelines Endorsed by Journal Groups (Open Science Foundation 2019d)
- Transparency and Openness Promotion (TOP) Guidelines for Journals (Open Science Foundation 2019d; Nature 2013)
- PLOS ONE Journal (The Science Exchange Network 2019a, b; Fulmer 2012; Baker 2012; Powers 2019; PLOS ONE 2017a, b, 2019a, b, c; Bloom et al. 2014; Denker et al. 2017; Denker 2016)
- Journal of Cell Biology (JCB) (Yamada and Hall 2015)
- Elsevier (Cousijn and Fennell 2017; Elsevier 2018, 2019a, b, c, d, e, f, g; Scholarly Link eXchange 2019; Australian National Data Service 2018)

2.3 Summary of Overarching Themes

Guidelines implemented by funding bodies and publishers/journals to attain data reproducibility can take on many forms. Many agencies prefer to frame their guidelines as recommendations in order to accommodate scientific freedom, creativity, and innovation. Therefore, typical guidelines that support good research practices differ from principles set forth by good laboratory practices, which are based on a more formal framework and tend to be more prescriptive.

In reviewing current guidelines and initiatives around reproducibility and robustness, key areas that can lead to robust and reproducible research were revealed and are discussed below.

Research Design and Analysis

Providing a well-defined research framework and statistical plan before initiating the research reduces bias and thus helps to increase the robustness and reproducibility of the study.

Funders have undertaken various initiatives to support robust research design and analysis, including developing guidance on grant applications. This guidance requires researchers to address a set of objectives in the grant proposal, including the strengths and weaknesses of the research, details on the experimental design and methods of the study, planned statistical analyses, and sample sizes. In addition, researchers are often required to abide by existing reporting guidelines such as ARRIVE and asked to provide associated metadata.

Some funders, including NIH, DFG, NHMRC, and HHMI, have developed well-defined guidance documents focusing on robustness and reproducibility for applicants, while others, including Wellcome Trust and USDA, have started taking additional approaches to implement such guidelines. For instance, a symposium was held by Wellcome Trust, while USDA held an internal meeting to identify approaches and discuss solutions to include strong study designs and develop rigorous study plans.

As another example, a dedicated annexure, “Reproducibility and statistical design annex,” is required from the researchers in MRC-funded research projects to provide information on methodology and experimental design.

Apart from funders, journals are also working to improve study design quality and reporting, such as requiring that authors complete an editorial checklist before submitting their research in order to enhance the transparency of reporting and thus the reproducibility of published results. Nearly all journals, including Nature, Journal of Cell Biology, and PLOS ONE, and the major journal publisher Elsevier, have introduced this requirement.

Some journals are also prototyping alternate review models such as early publication to help verify study design. For instance, in Elsevier’s Registered Reports initiative, the experimental methods and proposed analyses are preregistered and reviewed before study data are collected. The article is published on the basis of its study protocol, which prevents authors from modifying their experiments or excluding essential information on null or negative results in order to get their articles published. However, this has been implemented in only a limited number of journals in the Elsevier portfolio. PLOS ONE permits researchers to post their articles before peer review is conducted. This allows authors to seek feedback on draft manuscripts before or in parallel to formal review or submission to the journal.

Training and Support

Providing adequate training to researchers on the importance of robust study design and experimental methods can help to capture relevant information crucial to attaining reproducibility.

Funders such as MRC have deployed training programs to train both researchers and new panel members on the importance of experimental design and statistics and on the importance of having robust and reproducible research results.

In addition to a detailed guidance handbook for biomedical research, WHO has produced separate, comprehensive training manuals for both trainers and trainees to learn how to implement their guidelines. Also, of note, the Preclinical Data Forum Network, sponsored by the European College of Neuropsychopharmacology (European College of Neuropsychopharmacology 2019e) in Europe and Cohen Veterans Bioscience (Cohen Veterans Bioscience 2019) in the United States, organizes yearly training workshops to enhance awareness and to help junior scientists further develop their experimental skills, with prime focus on experimental design to generate high-quality, robust, reproducible, and relevant data.

Reagents and Reference Material

Developing standards for laboratory reagents is essential to maintain reproducibility.

Funders such as HHMI require researchers to make all tangible research materials integral to a publication, including organisms, cell lines, plasmids, or similar materials, available through a repository or by sending them directly to requestors.

Laboratory Protocols

Providing detailed laboratory protocols is required to reproduce a study. Otherwise, researchers may introduce process variability when attempting to reproduce the protocol in their own laboratories. These protocols can also be used by reviewers and editors during the peer review process or by researchers to compare methodological details between laboratories pursuing similar approaches.

Funders such as INSERM took the initiative to introduce an electronic lab book. This platform provides better research services by digitizing the experimental work. This enables researchers to better trace and track the data and procedures used in experiments.

Journals such as PLOS ONE have taken an initiative wherein authors can deposit their laboratory protocols on repositories such as protocols.io. A unique digital object identifier (DOI) is assigned to each study and linked to the Methods section of the original article, allowing researchers to access the published work of these authors along with the detailed protocols used to obtain the results.

Reporting and Review

Providing open and transparent access to the research findings and study methods and publishing null or negative results associated with a study facilitate data reproducibility.

Funders require authors to report, cite, and store study data in its entirety, and have developed various initiatives to facilitate data sharing. For instance, CIHR and NHMRC have implemented open access policies that require researchers to store their data in specific repositories to improve discovery and facilitate interaction among researchers; to obtain a Creative Commons Attribution (CC BY) license for their research so that other researchers can access and use the data in part or as a whole; and to link their research activities via identifiers such as digital object identifiers (DOIs) and ORCID to allow appropriate citation of datasets and provide recognition to data generators and sharers.

Wellcome Trust and Bill & Melinda Gates Foundation have launched their own publishing platforms – Wellcome Open Research and Gates Open Research, respectively – to allow researchers to publish and share their results rapidly.

Other efforts focused on data include the European Commission, which aims to build an open research platform “European Open Science Cloud” that can act as a virtual repository of research data of publicly funded studies and allow European researchers to store, process, and access research data.

In addition, the Preclinical Data Forum Network has been working toward building a data exchange and information repository and incentivizing the publication of negative data by issuing the world’s first prize for published “negative” scientific results.

Journals have also taken various initiatives to allow open access to their publications. Some journals, such as Nature and PLOS ONE, require researchers to submit data availability statements that help readers locate the data and, for primary large-scale data, provide access details such as repository names and digital object identifiers or accession numbers.

Journals also advise authors to upload their raw data and metadata to appropriate repositories. Some journals have created their own cloud-based repositories in addition to those publicly available. For instance, Elsevier has created Mendeley Data to help researchers manage, share, and showcase their research data, and JCB has established JCB DataViewer, a cross-platform repository for storing large amounts of raw imaging and gel data for its published manuscripts. Elsevier has also partnered with platforms such as Scholix and FORCE11, which allow data citation, encourage reuse of research data, and enable reproducibility of published research.

3 Gaps and Looking to the Future

A gap analysis of existing guidelines and resources was performed, addressing such critical factors as study design, transparency, data management, availability of resources and information, linking relevant research, publication opportunities, consideration of refutations, and initiatives to grow. It should be noted that these categories were not defined de novo but based on a comprehensive review of the high-impact organizations considered.

We considered the following observed factors within each category to understand where organizations are supporting good research practices with explicit guidelines and/or initiatives and to identify potential gaps:

- Study Design
  - Scientific premise of proposed research: Guidelines to support current or proposed research that is formed on a strong foundation of prior work.
  - Robust methodology to address hypothesis: Guidelines to design robust studies that address the scientific question. This includes justification and reporting of the experimental technique, statistical analysis, and animal model.
  - Animal use guidelines and legal permissions: Guidelines regarding animal use, clinical trial reporting, or legal permissions.
  - Validation of materials: Guidelines to ensure validity of experimental protocol, reagent, or equipment.
- Transparency
  - Comprehensive description of methodology: Guidelines to ensure comprehensive reporting of method and analysis to ensure reproducibility by other researchers. For example, publishers may include additional space for researchers to detail their methodology. Similar to “robust methodology to address hypothesis” but more focused on post-collection reporting rather than initial design.
  - Appropriate acknowledgments: Guidelines for authors to appropriately acknowledge contributors, such as co-authors or references.
  - Reporting of positive and negative data: Guidelines to promote release of negative data, which reinforces unbiased reporting.
- Data Management
  - Early design of data management: Guidelines to promote early design of data management.
  - Storage and preservation of data: Guidelines to ensure safe and long-term storage and preservation of data.
  - Additional tools for data collection and management: Miscellaneous data management tools developed (e.g., electronic lab notebook).
- Availability of Resources and Information
  - Data availability statements: A statement committing researchers to sharing data (usually upon submission to a journal or funding organization).
  - Access to raw or structured data: Guidelines to share data in publicly available or institutional repositories to allow for outside researchers to reanalyze or reuse data.
  - Open or public access publications: Guidelines to encourage open or public access publications, which allows for unrestricted use of research.
  - Shared access to resources, reagents, and protocols: Guidelines to encourage shared access to resources, reagents, and protocols. This may include requirements for researchers to independently share and ship resources or nonprofit reagent repositories.
- Linking Relevant Research
  - Indexing data, reagents, and protocols: Guidelines to index research components, such as data, reagents, or protocols. Indexing using a digital object identifier (DOI) allows researchers to digitally track use of research components.
  - Two-way linking of relevant datasets and publications: Guidelines to encourage linkage between publications. This is particularly important in clinical research when multiple datasets are compiled to increase analytical power.
- Publication Opportunities
  - Effective review: Guidelines to expedite or strengthen the review process, such as a checklist for authors or reviewers to complete or additional responsibilities of the reviewer.
  - Additional peer review and public release processes: Opportunities to release research conclusions independent from the typical journal process.
  - Preregistration: Guidelines to encourage preregistration, a process where researchers commit to their study design prior to collecting data. This reduces bias and increases clarity of the results.
- Consideration of Refutations
  - Attempts to resolve failures to reproduce: Guidelines for authors and organizations to address any discrepancies in results or conclusions.
- Initiatives to Grow
  - Develop resources: Additional resources developed to increase reproducibility and rigor in research. This includes training workshops.
  - Work to develop responsible standards: Commitments and overarching goals made by organization to increase reproducibility and rigor in research.
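A gap analysis of this kind can be thought of as a coverage matrix: for each factor, count how many surveyed organizations address it, then flag the factors with little or no coverage. The following is a minimal sketch of that bookkeeping and is not the procedure actually used for this chapter. The organization names, the coverage entries, and the `min_orgs` threshold are hypothetical placeholders; only the factor labels are taken from the list above.

```python
from collections import Counter

# Factor labels taken from the list above (a subset, for brevity).
FACTORS = [
    "Validation of materials",
    "Reporting of positive and negative data",
    "Early design of data management",
    "Access to raw or structured data",
    "Preregistration",
]

# HYPOTHETICAL coverage data: which factors each organization addresses.
coverage = {
    "Org A": {"Access to raw or structured data", "Early design of data management"},
    "Org B": {"Access to raw or structured data", "Preregistration"},
    "Org C": {"Access to raw or structured data"},
}


def find_gaps(coverage, factors, min_orgs=2):
    """Return the factors addressed by fewer than `min_orgs` organizations."""
    counts = Counter(f for covered in coverage.values() for f in covered)
    return [f for f in factors if counts[f] < min_orgs]


gaps = find_gaps(coverage, FACTORS)
for factor in gaps:
    print("Potential gap:", factor)
```

With real survey data in place of the placeholders, the same structure would also make it easy to compare funders against publishers or to vary the threshold at which a factor counts as a gap.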

Within the study design category, there appears to be a dearth of guidelines to ensure the validity of experimental protocols, reagents, or equipment. Variability in, and incomplete reporting of, the reagents used are a known and oft-cited source of irreproducibility.

The most notable omission regarding transparency was guidelines to promote the release of negative data to reinforce unbiased reporting. This omission also contributes to poor study reproducibility since, overwhelmingly, only positive data are reported for preclinical studies.

Most funding agencies have seriously begun initiatives addressing data management to ensure safe and long-term storage and preservation of data and are developing, making available, or promoting data management tools (e.g., electronic lab notebook). However, these ongoing activities do not often include guidelines to promote the early design of data management, which may reduce errors and ease researcher burden by optimizing and streamlining the process from study design to data upload.

To that point, a massive shift can be seen as both funders and publishers intensely engage in guidelines around the availability of resources and information. Most of this effort is in the ongoing development of guidelines to share data in publicly available or institutional repositories so that outside researchers can reanalyze or reuse data. The aim is to create a long-term framework for new research strategies that will allow for “big data” computational modeling, deep-learning artificial intelligence, and mega-analyses across species and measures. However, few guidelines were found that encourage shared access to resources, reagents, and protocols, such as requirements for researchers to independently share and ship resources or the use of nonprofit reagent repositories.

Related are guidelines for linking relevant research. This includes guidelines to index research components, such as data, reagents, or protocols with digital object identifiers (DOIs) that allow researchers to digitally track the use of research components and guidelines to encourage two-way linking of relevant datasets and publications. This is historically a common requirement for clinical studies and is currently being developed for preclinical research, but not consistently across the organizations surveyed.

On the reporting side, the most notable omission among guidelines on publication opportunities was guidelines that encourage preregistration, a process whereby researchers commit to their study design prior to collecting data and publishers agree to publish results whether they be positive or negative. Such guidelines would serve to reduce both experimental and publication biases and increase clarity of the results.

In the category consideration of refutations, which, broadly, are attempts to resolve failures to reproduce a study, few guidelines exist. However, there is ongoing work to develop guidelines for authors and organizations to address discrepancies in results or conclusions and a commitment from publishers that they will consider publications that do not confirm previously published research in their journal.

Lastly, although many organizations cite a number of initiatives to grow, there appear to be notable gaps both in the development of additional resources and work to develop responsible standards. One initiative that aims to develop solutions to address the issue of data reproducibility in preclinical neuroscience research is the EQIPD (European Quality in Preclinical Data) project, launched in October 2017 with support from the Innovative Medicines Initiative (IMI). The project recognizes poor data quality as the main concern resulting in the non-replication of studies/experiments and aims to look for simple, sustainable solutions to improve data quality without impacting innovation. It is expected that this initiative will lead to a cultural change in data quality approaches in the medical research and drug development field with the final intent to establish guidelines that will strengthen robustness, rigor, and validity of research data to enable a smoother and safer transition from preclinical to clinical testing and drug approval in neuroscience (National Institutes of Health 2017; Nature 2013, 2017; Vollert et al. 2018).

In terms of providing additional resources, although some organizations emphasize training and workshops for researchers to enhance rigor and reproducibility, it is unclear if and how organizations themselves assess the effectiveness and actual implementation of their guidelines and policies. An exception may be WHO’s training program, which provides manuals for both trainer and trainee to support the implementation of their guidelines.

More must also be done to accelerate work to develop responsible, consensus standards. As funders, publishers, and preclinical researchers alike begin recognizing the promise of computational approaches and attempt to meet the demands for these kinds of analyses, equal resources and energy must be devoted to the required underlying standards and tools. To be able to harmonize data across labs and species, ontologies and common data elements (CDEs) must be developed, and researchers must be trained and incentivized to use them. Data that have already been generated may offer not only profound validation opportunities but also the ability to follow novel lines of research agnostically, based on an unbiased foundation of data. In acquiring new data, guidelines urging preclinical scientists to collect and upload all experimental factors, including associated dark data, in a usable format may bring the field closer to understanding whether predictive multivariate signatures exist, may embrace deviations in study design, and may be more reflective of clinical trials.

Overall, the best path forward may be for influential organizations to develop a comprehensive plan to enhance reproducibility and align on a standard set of policies. A coherent road map or strategy would ensure that all known factors related to this issue are addressed and reduce complications for investigators.

References

Aalbersberg IJ (2017) Elsevier supports TOP guidelines in ongoing efforts to ensure research quality and transparency. https://www.elsevier.com/connect/elsevier-supports-top-guidelines-in-ongoing-efforts-to-ensure-research-quality-and-transparency. Accessed 1 May 2018

ASAPbio (2018) Peer review meeting summary. https://asapbio.org/peer-review/summary. Accessed 1 May 2018

Australian National Data Service (2018) Linking data with Scholix. https://www.ands.org.au/working-with-data/publishing-and-reusing-data/linking-data-with-scholix. Accessed 1 May 2018

Baker M (2012) Independent labs to verify high-profile papers. https://www.nature.com/news/independent-labs-to-verify-high-profile-papers-1.11176. Accessed 1 May 2018

Begley CG, Ellis LM (2012) Raise standards for preclinical cancer research. Nature 483(7391):531

Bloom T, Ganley E, Winker M (2014) Data access for the open access literature: PLOS’s data policy. https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.1001797. Accessed 1 May 2018

Boon W-E, Leves F (2015) NHMRC initiatives to improve access to research outputs and findings. Med J Aust 202(11):558

Brizzi F, Dupre P-G (2017) Inserm Labguru Pilot Program digitizes experimental data across institute. https://www.labguru.com/case-study/Inserm_labguru-F.pdf. Accessed 1 May 2018

Canadian Institutes of Health Research (2017a) Reproducibility in pre-clinical health research. Connections 17(9)

Canadian Institutes of Health Research (2017b) Tri-agency open access policy on publications. http://www.cihr-irsc.gc.ca/e/32005.html. Accessed 1 May 2018

Cancer Research UK (2018a) Develop the cancer leaders of tomorrow: our research strategy. https://www.cancerresearchuk.org/funding-for-researchers/our-research-strategy/develop-the-cancer-leaders-of-tomorrow. Accessed 1 May 2018

Cancer Research UK (2018b) Data sharing guidelines. https://www.cancerresearchuk.org/funding-for-researchers/applying-for-funding/policies-that-affect-your-grant/submission-of-a-data-sharing-and-preservation-strategy/data-sharing-guidelines. Accessed 1 May 2018

Cancer Research UK (2018c) Policy on the use of animals in research. https://www.cancerresearchuk.org/funding-for-researchers/applying-for-funding/policies-that-affect-your-grant/policy-on-the-use-of-animals-in-research#detail1. Accessed 1 May 2018

Cohen Veterans Bioscience (2019) Homepage. https://www.cohenveteransbioscience.org. Accessed 15 Apr 2019

Collins FS, Tabak LA (2014) NIH plans to enhance reproducibility. Nature 505(7485):612–613

Community Research and Development Information Service (2017) European quality in preclinical data. https://cordis.europa.eu/project/rcn/211612_en.html. Accessed 1 May 2018

Cousijn H, Fennell C (2017) Supporting data openness, transparency & sharing. https://www.elsevier.com/connect/editors-update/supporting-openness,-transparency-and-sharing. Accessed 1 May 2018

CURE Consortium (2017) Curating for reproducibility: defining “reproducibility.” http://cure.web.unc.edu/defining-reproducibility/. Accessed 1 May 2018

Denker SP (2016) The best of both worlds: preprints and journals. https://blogs.plos.org/plos/2016/10/the-best-of-both-worlds-preprints-and-journals/?utm_source=plos&utm_medium=web&utm_campaign=plos-1702-annualupdate. Accessed 1 May 2018

Denker SP, Byrne M, Heber J (2017) Open access, data and methods considerations for publishing in precision medicine. https://www.plos.org/files/PLOS_Biobanking%20and%20Open%20Data%20Poster_2017.pdf. Accessed 1 May 2018

Department of Defense (2017a) Guide to FY2018 research funding at the Department of Defense (DOD). August 2017. https://research.usc.edu/files/2011/05/Guide-to-FY2018-DOD-Research-Funding.pdf. Accessed 1 May 2018

Department of Defense (2017b) Instruction for the use of animals in DoD programs. https://www.esd.whs.mil/Portals/54/Documents/DD/issuances/dodi/321601p.pdf. Accessed 1 May 2018

Deutsche Forschungsgemeinschaft (2015) DFG guidelines on the handling of research data. https://www.dfg.de/download/pdf/foerderung/antragstellung/forschungsdaten/guidelines_research_data.pdf. Accessed 1 May 2018

Deutsche Forschungsgemeinschaft (2017a) DFG statement on the replicability of research results. https://www.dfg.de/en/research_funding/announcements_proposals/2017/info_wissenschaft_17_18/index.html. Accessed 1 May 2018

Deutsche Forschungsgemeinschaft (2017b) Replicability of research results. A statement by the German Research Foundation. https://www.dfg.de/download/pdf/dfg_im_profil/reden_stellungnahmen/2017/170425_stellungnahme_replizierbarkeit_forschungsergebnisse_en.pdf. Accessed 1 May 2018

Elsevier (2018) Elsevier launches Mendeley Data to manage entire lifecycle of research data. https://www.elsevier.com/about/press-releases/science-and-technology/elsevier-launches-mendeley-data-to-manage-entire-lifecycle-of-research-data. Accessed 15 Apr 2019

Elsevier (2019a) Research data. https://www.elsevier.com/about/policies/research-data#Principles. Accessed 15 Apr 2019

Elsevier (2019b) Research data guidelines. https://www.elsevier.com/authors/author-resources/research-data/data-guidelines. Accessed 15 Apr 2019

Elsevier (2019c) STAR methods. https://www.elsevier.com/authors/author-resources/research-data/data-guidelines. Accessed 15 Apr 2019

Elsevier (2019d) Research elements. https://www.elsevier.com/authors/author-resources/research-elements. Accessed 15 Apr 2019

Elsevier (2019e) Research integrity. https://www.elsevier.com/about/open-science/science-and-society/research-integrity. Accessed 15 Apr 2019

Elsevier (2019f) Open data. https://www.elsevier.com/authors/author-resources/research-data/open-data. Accessed 15 Apr 2019

Elsevier (2019g) Mendeley data for journals. https://www.elsevier.com/authors/author-resources/research-data/mendeley-data-for-journals. Accessed 15 Apr 2019

European College of Neuropsychopharmacology (2019a) Preclinical Data Forum Network. https://www.ecnp.eu/research-innovation/ECNP-networks/List-ECNP-Networks/Preclinical-Data-Forum.aspx. Accessed 15 Apr 2019

European College of Neuropsychopharmacology (2019b) Output. https://www.ecnp.eu/research-innovation/ECNP-networks/List-ECNP-Networks/Preclinical-Data-Forum/Output.aspx. Accessed 15 Apr 2019

European College of Neuropsychopharmacology (2019c) Members. https://www.ecnp.eu/research-innovation/ECNP-networks/List-ECNP-Networks/Preclinical-Data-Forum/Members. Accessed 15 Apr 2019

European College of Neuropsychopharmacology (2019d) ECNP Preclinical Network Data Prize. https://www.ecnp.eu/research-innovation/ECNP-Preclinical-Network-Data-Prize.aspx. Accessed 15 Apr 2019

European College of Neuropsychopharmacology (2019e) Homepage. https://www.ecnp.eu. Accessed 15 Apr 2019

European Commission (2017) European quality in preclinical data. https://quality-preclinical-data.eu/wp-content/uploads/2018/01/20180306_EQIPD_Folder_Web.pdf. Accessed 1 May 2018

European Commission (2018a) The European cloud initiative. https://ec.europa.eu/digital-single-market/en/european-cloud-initiative. Accessed 1 May 2018

European Commission (2018b) European Open Science cloud. https://ec.europa.eu/digital-single-market/en/european-open-science-cloud. Accessed 1 May 2018

French Institute of Health and Medical Research (2017) INSERM 2020 strategic plan. https://www.inserm.fr/sites/default/files/2017-11/Inserm_PlanStrategique_2016-2020_EN.pdf. Accessed 1 May 2018

Fulmer T (2012) The cost of reproducibility. SciBX 5:34

Gates Open Research (2019a) Introduction. https://gatesopenresearch.org/about. Accessed 15 Apr 2019

Gates Open Research (2019b) Open access policy frequently asked questions. https://www.gatesfoundation.org/How-We-Work/General-Information/Open-Access-Policy/Page-2. Accessed 15 Apr 2019

Gates Open Research (2019c) How to publish. https://gatesopenresearch.org/for-authors/article-guidelines/research-articles. Accessed 15 Apr 2019

Gates Open Research (2019d) Data guidelines. https://gatesopenresearch.org/for-authors/data-guidelines. Accessed 15 Apr 2019

Gates Open Research (2019e) Policies. https://gatesopenresearch.org/about/policies. Accessed 15 Apr 2019

Innovative Medicines Initiative (2017) European quality in preclinical data. https://www.imi.europa.eu/projects-results/project-factsheets/eqipd. Accessed 1 May 2018

Innovative Medicines Initiative (2018) 78th edition. https://www.imi.europa.eu/news-events/newsletter/78th-edition-april-2018. Accessed 1 May 2018

Krester A, Murphy D, Dwyer J (2017) Scientific integrity resource guide: efforts by federal agencies, foundations, nonprofit organizations, professional societies, and academia in the United States. Crit Rev Food Sci Nutr 57(1):163–180

LI-COR (2018) Tracing the footsteps of the data reproducibility crisis. http://www.licor.com/bio/blog/reproducibility/tracing-the-footsteps-of-the-data-reproducibility-crisis/. Accessed 1 May 2018

Medical Research Council (2012a) MRC ethics series. Good research practice: principles and guidelines. https://mrc.ukri.org/publications/browse/good-research-practice-principles-and-guidelines/. Accessed 1 May 2018

Medical Research Council (2012b) UK Research and Innovation. Methodology and experimental design in applications: guidance for reviewers and applicants. https://mrc.ukri.org/documents/pdf/methodology-and-experimental-design-in-applications-guidance-for-reviewers-and-applicants/. Accessed 1 May 2018

Medical Research Council (2016a) Improving research reproducibility and reliability: progress update from symposium sponsors. https://mrc.ukri.org/documents/pdf/reproducibility-update-from-sponsors/. Accessed 1 May 2018

Medical Research Council (2016b) Funding reproducible, robust research. https://mrc.ukri.org/news/browse/funding-reproducible-robust-research/. Accessed 1 May 2018

Medical Research Council (2019a) Proposals involving animal use. https://mrc.ukri.org/funding/guidance-for-applicants/4-proposals-involving-animal-use/. Accessed 15 Apr 2019

Medical Research Council (2019b) Grant application. https://mrc.ukri.org/funding/guidance-for-applicants/2-the-application/#2.2.3. Accessed 15 Apr 2019

Medical Research Council (2019c) Open research data: clinical trials and public health interventions. https://mrc.ukri.org/research/policies-and-guidance-for-researchers/open-research-data-clinical-trials-and-public-health-interventions/. Accessed 15 Apr 2019

National Health and Medical Research Council (2018a) National Health and Medical Research Council open access policy. https://www.nhmrc.gov.au/about-us/resources/open-access-policy. Accessed 15 Apr 2019

National Health and Medical Research Council (2018b) 2018 NHMRC symposium on research translation. https://www.nhmrc.gov.au/event/2018-nhmrc-symposium-research-translation. Accessed 15 Apr 2019

National Health and Medical Research Council (2019) Funding. https://www.nhmrc.gov.au/funding. Accessed 15 Apr 2019

National Institutes of Health (2017) Principles and guidelines for reporting preclinical research. https://www.nih.gov/research-training/rigor-reproducibility/principles-guidelines-reporting-preclinical-research. Accessed 1 May 2018

National Institutes of Health Center for Information Technology (2019) Federal Interagency Traumatic Brain Injury Research Informatics System. https://fitbir.nih.gov/. Accessed 1 May 2018

Nature (2013) Enhancing reproducibility. Nat Methods 10:367

Nature (2017) On data availability, reproducibility and reuse. Nat Cell Biol 19:259

Nature (2019) Availability of data, material and methods. http://www.nature.com/authors/policies/availability.html. Accessed 15 Apr 2019

NIH (2015) Enhancing reproducibility through rigor and transparency. https://grants.nih.gov/grants/guide/notice-files/NOT-OD-15-103.html. Accessed 1 May 2018

NIH (2018a) Reproducibility grant guidelines. https://grants.nih.gov/reproducibility/documents/grant-guideline.pdf. Accessed 1 May 2018

NIH (2018b) Rigor and reproducibility in NIH applications: resource chart. https://grants.nih.gov/grants/RigorandReproducibilityChart508.pdf. Accessed 1 May 2018

Open Science Foundation (2019a) Transparency and openness guidelines. Table summary funders. https://osf.io/kzxby/. Accessed 15 Apr 2019

Open Science Foundation (2019b) Transparency and openness guidelines. https://osf.io/4kdbm/. Accessed 15 Apr 2019

Open Science Foundation (2019c) Transparency and openness guidelines for funders. https://osf.io/bcj53/. Accessed 15 Apr 2019

Open Science Foundation (2019d) Open Science Framework, guidelines for Transparency and Openness Promotion (TOP) in journal policies and practices. https://osf.io/9f6gx/wiki/Guidelines/?_ga=2.194585006.2089478002.1527591680-566095882.1527591680. Accessed 15 Apr 2019

Orion Open Science (2019) What is Open Science? https://www.orion-openscience.eu/resources/open-science. Accessed 15 Apr 2019

Pattinson D (2012) PLOS ONE launches reproducibility initiative. https://blogs.plos.org/everyone/2012/08/14/plos-one-launches-reproducibility-initiative/. Accessed 1 May 2018

PLOS ONE (2017a) Making progress toward open data: reflections on data sharing at PLOS ONE. https://blogs.plos.org/everyone/2017/05/08/making-progress-toward-open-data/. Accessed 1 May 2018

PLOS ONE (2017b) Protocols.io tools for PLOS authors: reproducibility and recognition. https://blogs.plos.org/plos/2017/04/protocols-io-tools-for-reproducibility/. Accessed 1 May 2018

PLOS ONE (2019a) Data availability. https://journals.plos.org/plosone/s/data-availability. Accessed 15 Apr 2019

PLOS ONE (2019b) Submission guidelines. https://journals.plos.org/plosone/s/submission-guidelines. Accessed 15 Apr 2019

PLOS ONE (2019c) Criteria for publication. https://journals.plos.org/plosone/s/criteria-for-publication. Accessed 15 Apr 2019

Powers M (2019) New initiative aims to improve translational medicine hit rate. http://www.bioworld.com/content/new-initiative-aims-improve-translational-medicine-hit-rate-0. Accessed 15 Apr 2019

Prinz F, Schlange T, Asadullah K (2011) Believe it or not: how much can we rely on published data on potential drug targets? Nat Rev Drug Discov 10(9):712

Scholarly Link eXchange (2019) Scholix: a framework for scholarly link eXchange. http://www.scholix.org/home. Accessed 15 Apr 2019

The Academy of Medical Sciences (2015) Reproducibility and reliability of biomedical research: improving research practice. In: Symposium report, United Kingdom, 1–2 April 2015

The Academy of Medical Sciences (2016a) Improving research reproducibility and reliability: progress update from symposium sponsors. https://acmedsci.ac.uk/reproducibility-update/. Accessed 1 May 2018

The Academy of Medical Sciences (2016b) Improving research reproducibility and reliability: progress update from symposium sponsors. https://acmedsci.ac.uk/file-download/38208-5631f0052511d.pdf. Accessed 1 May 2018

The Science Exchange Network (2019a) Validating key experimental results via independent replication. http://validation.scienceexchange.com/. Accessed 15 Apr 2019

The Science Exchange Network (2019b) Reproducibility initiative. http://validation.scienceexchange.com/#/reproducibility-initiative. Accessed 15 Apr 2019

Universities UK (2012) The concordat to support research integrity. https://www.universitiesuk.ac.uk/policy-and-analysis/reports/Pages/research-concordat.aspx. Accessed 1 May 2018

Vollert J, Schenker E, Macleod M et al (2018) A systematic review of guidelines for rigour in the design, conduct and analysis of biomedical experiments involving laboratory animals. Br Med J Open Sci 2:e000004

Wellcome Trust (2015) Research practice. https://wellcome.ac.uk/what-we-do/our-work/research-practice. Accessed 1 May 2018

Wellcome Trust (2016a) Why publish on Wellcome Open Research? https://wellcome.ac.uk/news/why-publish-wellcome-open-research. Accessed 1 May 2018

Wellcome Trust (2016b) Wellcome to launch bold publishing initiative. https://wellcome.ac.uk/press-release/wellcome-launch-bold-publishing-initiative. Accessed 1 May 2018

Wellcome Trust (2018a) Grant conditions. https://wellcome.ac.uk/sites/default/files/grant-conditions-2018-may.pdf. Accessed 1 May 2018

Wellcome Trust (2018b) Guidelines on good research practice. https://wellcome.ac.uk/funding/guidance/guidelines-good-research-practice. Accessed 1 May 2018

Wellcome Trust (2019a) Wellcome Open Research. https://wellcomeopenresearch.org/. Accessed 15 Apr 2019

Wellcome Trust (2019b) Wellcome Open Research. How it works. https://wellcomeopenresearch.org/about. Accessed 15 Apr 2019

World Health Organization (2006) Handbook: quality practices in basic biomedical research. https://www.who.int/tdr/publications/training-guideline-publications/handbook-quality-practices-biomedical-research/en/. Accessed 1 May 2018

World Health Organization (2010a) Quality Practices in Basic Biomedical Research (QPBR) training manual: trainee. https://www.who.int/tdr/publications/training-guideline-publications/qpbr-trainee-manual-2010/en/. Accessed 1 May 2018

World Health Organization (2010b) Quality Practices in Basic Biomedical Research (QPBR) training manual: trainer. https://www.who.int/tdr/publications/training-guideline-publications/qpbr-trainer-manual-2010/en/. Accessed 1 May 2018

World Health Organization (2019) Quality practices in basic biomedical research. https://www.who.int/tdr/publications/quality_practice/en/. Accessed 15 Apr 2019

Yamada KM, Hall A (2015) Reproducibility and cell biology. J Cell Biol 209(2):191

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2019 The Author(s)

About this chapter

Cite this chapter

Kabitzke, P., Cheng, K.M., Altevogt, B. (2019). Guidelines and Initiatives for Good Research Practice. In: Bespalov, A., Michel, M., Steckler, T. (eds) Good Research Practice in Non-Clinical Pharmacology and Biomedicine. Handbook of Experimental Pharmacology, vol 257. Springer, Cham. https://doi.org/10.1007/164_2019_275

DOI: https://doi.org/10.1007/164_2019_275

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-33655-4

Online ISBN: 978-3-030-33656-1

eBook Packages: Biomedical and Life Sciences (R0)