Abstract

Errors in simple choice tasks result in systematic changes in the response time and accuracy of subsequent trials. We propose that there are at least two different causes of choice errors – response speed and evidence quality, which result in different types of post-error changes. We explore these differences in types of errors and post-error changes in two recognition memory experiments with speed versus accuracy emphasis conditions that differentially produce response-speed and evidence-quality errors. Under conditions that give rise to more response-speed errors, we find evidence of traditional post-error slowing. Under conditions that give rise to evidence-quality errors, we find evidence of post-error speeding. We propose a broadening of theories of cognitive control to encompass maladaptive as well as adaptive strategies, and discuss implications for the use of post-error changes to measure cognitive control.

Introduction

Identifying, responding to and learning from errors are vital to adaptive functioning. As such, errors and their consequences are widely studied as markers of cognitive control. Error control is typically studied in simple and rapid choice tasks through the occurrence of systematic changes in response time (RT) and accuracy on subsequent trials. Within this literature, a lack of consensus has developed regarding post-error consequences and their causes, measurement, and interpretation (for review, see Williams et al., 2016).

Here we examine a perspective on post-error effects provided by dynamic theories of rapid choice (for a review, see Brown & Heathcote, 2008) that assume decisions are based on accumulating evidence over time. A response is triggered when the summed evidence for one option reaches its threshold. Errors that occur when the wrong evidence threshold is reached first can be both systematically faster and slower than correct responses (Ratcliff & Rouder, 1998). These two categories of errors are assumed to have distinct causes, and to occur in all experimental conditions with their relative proportions varying systematically as a function of whether the speed or accuracy of responses is emphasized.

Faster errors occur when responding is rushed. Random variation in evidence accumulation from moment to moment (Stone, 1960) and random bias in evidence accumulation start points from trial to trial (Laming, 1968) have been proposed as two causes of such speed errors. These random variations can lead to evidence favoring an incorrect response. Increasing the decision threshold reduces their effects, but at the cost of slowed responding. This speed-accuracy tradeoff mechanism is thought to explain post-error slowing by a threshold increase that reduces the probability of response-speed errors.
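The speed-accuracy tradeoff described above can be illustrated with a minimal sketch, assuming a discrete-time random walk with within-trial noise only. The function name, parameter values, and seed are illustrative assumptions and are not taken from any of the cited models or data sets.

```python
import random

def simulate_random_walk(threshold, drift=0.1, noise_sd=1.0, n_trials=2000, seed=1):
    """Return (accuracy, mean number of steps) for a two-boundary random walk.

    Evidence starts at 0 and drifts toward the correct (upper) boundary,
    perturbed by moment-to-moment Gaussian noise (hypothetical settings).
    """
    rng = random.Random(seed)
    n_correct = 0
    total_steps = 0
    for _ in range(n_trials):
        evidence = 0.0
        steps = 0
        # Accumulate until either the upper or the lower boundary is crossed.
        while abs(evidence) < threshold:
            evidence += drift + rng.gauss(0.0, noise_sd)
            steps += 1
        if evidence >= threshold:
            n_correct += 1
        total_steps += steps
    return n_correct / n_trials, total_steps / n_trials

low_acc, low_steps = simulate_random_walk(threshold=2.0)
high_acc, high_steps = simulate_random_walk(threshold=8.0)
print(f"low threshold:  accuracy={low_acc:.2f}, mean steps={low_steps:.1f}")
print(f"high threshold: accuracy={high_acc:.2f}, mean steps={high_steps:.1f}")
```

In this sketch the higher threshold averages out more of the within-trial noise, so responses are slower but more often correct, which is the pattern the threshold-increase account of post-error slowing relies on.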

Slower errors occur when accuracy is emphasized over speed. Evidence-accumulation models assume slower errors are due to poor evidence quality. If average evidence accumulation rates vary randomly from trial to trial and decisions are difficult, such evidence-quality errors occur on trials where the average favors the wrong response. In this case, further evidence accumulation due to an increased threshold amplifies, rather than reduces, the tendency to make an error (Laming, 1968). In describing this phenomenon in the context of post-error slowing, Laming (1979) argued that participants “run out of data” (p. 219) on tasks of sufficiently high difficulty, and Rabbitt and Vyas (1970) made reference to errors of perceptual analysis.
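A minimal sketch of an evidence-quality error, assuming a deliberately simplified ballistic accumulator in which the only source of variability is the trial-to-trial rate. All names and parameter values are hypothetical. Because this sketch has no within-trial noise, raising the threshold leaves accuracy unchanged rather than amplifying errors; it is meant only to show that extra accumulation time cannot rescue a trial whose average evidence points the wrong way.

```python
import random

def ballistic_trials(threshold, mean_rate=0.5, rate_sd=1.0, n_trials=1000, seed=2):
    """Return a list of (is_correct, decision_time) pairs.

    Each trial draws one accumulation rate. A positive rate reaches the
    correct boundary, a negative rate reaches the incorrect boundary.
    """
    rng = random.Random(seed)
    outcomes = []
    for _ in range(n_trials):
        rate = rng.gauss(mean_rate, rate_sd)      # trial-level evidence quality
        speed = max(abs(rate), 1e-9)              # guard against a zero rate
        outcomes.append((rate > 0, threshold / speed))
    return outcomes

def summarize(outcomes):
    """Return (accuracy, mean decision time) for a list of outcomes."""
    accuracy = sum(ok for ok, _ in outcomes) / len(outcomes)
    mean_time = sum(t for _, t in outcomes) / len(outcomes)
    return accuracy, mean_time

low = summarize(ballistic_trials(threshold=1.0))
high = summarize(ballistic_trials(threshold=2.0))
print("low threshold  (accuracy, mean time):", low)
print("high threshold (accuracy, mean time):", high)
```

With the same seed, both thresholds produce identical accuracy, while decision times grow with the threshold: slowing down costs time without reducing evidence-quality errors.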

Although evidence-accumulation models acknowledge these very different categories of response-speed and data-quality errors, broader cognitive control research has focused almost exclusively on response-speed errors and post-error slowing (Williams et al., 2016). Cognitive control theories commonly assume that the occurrence of errors triggers post-error slowing to increase accuracy. However, post-error slowing is only an effective remedy for response-speed errors. When poor evidence quality is the dominant cause of errors, slowing costs time for what might be little to no benefit.

When accuracy is emphasized, and/or tasks are difficult, RT is typically longer. When decision-making evidence cannot be improved due to data, cognitive, and/or perceptual limitations, some errors are unavoidable and spending longer making choices wastes time. If decision makers value both speed and accuracy (i.e., they are optimizing reward rate; Bogacz et al., 2006), and additional time is not improving accuracy, it may be more adaptive to lower the decision threshold and choose more quickly, leading to post-error speeding.
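The reward-rate argument can be made concrete with a small sketch. It assumes a simplified definition of reward rate (expected correct responses per second of trial time), which is not the full expression analyzed by Bogacz et al. (2006); the function name and the numbers are hypothetical.

```python
def reward_rate(accuracy, mean_rt, inter_trial_interval):
    """Expected correct responses per second (simplified, illustrative definition)."""
    return accuracy / (mean_rt + inter_trial_interval)

# Same accuracy, different response times (seconds): extra caution buys nothing.
cautious = reward_rate(accuracy=0.70, mean_rt=1.2, inter_trial_interval=1.0)
hasty = reward_rate(accuracy=0.70, mean_rt=0.8, inter_trial_interval=1.0)
print(f"cautious: {cautious:.3f} correct/s, hasty: {hasty:.3f} correct/s")
```

When accuracy is held fixed, as it would be if errors were limited by evidence quality, the faster setting yields the higher reward rate, which is the sense in which lowering the threshold could be adaptive.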

We propose that these two causes of error, (1) response speed and (2) evidence quality, may be systematically associated with two distinct post-error changes: (1) post-error slowing and (2) post-error speeding, respectively. This proposal is exploratory in that it assumes decision makers use post-error processing to prioritize both speed and accuracy. As far as we are aware, it is also the first exploration of whether different types of errors might be followed by different types of post-error adjustment. Our proposal leads to three predictions. Firstly, when experimental manipulations give rise to more response-speed errors, we should find post-error slowing. Secondly, when experimental manipulations give rise to more evidence-quality errors, we should find no post-error slowing, and perhaps post-error speeding. Finally, because dynamic choice theories assume response-speed and evidence-quality errors are present in all manipulations to some extent, and we expect response-speed errors to be faster than evidence-quality errors, post-error changes may vary systematically within a condition. Specifically, errors made in the faster half of the RT distribution may show more post-error slowing, while errors made in the slower half of the RT distribution may show less post-error slowing (or post-error speeding).

To test our proposition, we reanalyzed two published data sets (Osth et al., 2017; Rae et al., 2014). Both were recognition memory experiments where participants were instructed to respond either quickly or accurately across blocks. Emphasizing speed should lead to a greater proportion of response-speed errors and post-error slowing. Emphasizing accuracy should lead to a greater proportion of evidence-quality errors and post-error speeding.

Methods

The full method details are reported in Rae et al. (2014) and Osth et al. (2017). To summarize, participants completed a recognition memory experiment consisting of two 1-h sessions on different days. Participants indicated whether a word was “old” or “new” via key-press. Instructions emphasized speed or accuracy of responses across alternating blocks. Note that Osth et al. (2017) is an extension of Rae et al. (2014), where participants additionally indicated their confidence in the correctness of the “old” or “new” response they had just made.

Measuring post-error change

The standard method of quantifying post-error slowing involves subtracting the mean RT of each participant’s post-correct trials from the mean RT of their post-error trials, so that positive values indicate slowing. This type of global averaging can be confounded by slow fluctuations in speed and accuracy (e.g., more errors occurring during periods of slower responding), and differences in the speed of error and correct responses (e.g., slowing after faster responses when errors are faster). Two alternative calculations have been proposed to address these confounds: the robust method (Dutilh et al., 2012) and the matched method (Hajcak & Simons, 2002). We employ all three methods; the details on how we did so are provided in the Supplementary Material. In order to examine the prediction of cognitive control theories that post-error slowing is associated with increased accuracy, we report the results of applying all three methods to response accuracy.
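As a rough illustration of two of these calculations, the sketch below computes a standard and a robust post-error change for a single participant. It assumes the robust method compares the trial after each error with the trial before it, using only errors flanked by correct responses; the exact trial-selection rules used in the reported analyses are in the Supplementary Material and may differ. Function names and the toy data are hypothetical.

```python
from statistics import mean

def standard_pes(rts, correct):
    """Mean post-error RT minus mean post-correct RT (positive = slowing)."""
    post_error = [rts[i] for i in range(1, len(rts)) if not correct[i - 1]]
    post_correct = [rts[i] for i in range(1, len(rts)) if correct[i - 1]]
    return mean(post_error) - mean(post_correct)

def robust_pes(rts, correct):
    """Mean of RT(E+1) - RT(E-1) over errors flanked by correct trials."""
    diffs = [
        rts[i + 1] - rts[i - 1]
        for i in range(1, len(rts) - 1)
        if not correct[i] and correct[i - 1] and correct[i + 1]
    ]
    return mean(diffs)

# Toy trial sequence: RTs in ms and accuracy flags (made-up values).
rts = [500, 520, 480, 600, 510, 530, 470, 580, 500]
correct = [True, True, False, True, True, True, False, True, True]

print(round(standard_pes(rts, correct), 2))  # 88.33
print(robust_pes(rts, correct))              # 65
```

The robust calculation only compares trials adjacent to each error, so slow drifts in overall speed across a session affect both terms of each difference roughly equally.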

Results

For Rae et al. (2014), one participant was excluded due to recording error, leaving 47 data sets for analysis. For Osth et al. (2017), data from an additional ten participants were collected after publication to increase the sample size and support further analysis, making 46 participants in total. For both experiments, sequential pairs or triplets including responses faster than 150 ms or slower than 2,500 ms were removed from analyses. All results were calculated for each participant before assessing averages over participants in each experiment and condition.
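The exclusion rule for sequential pairs and triplets can be sketched as follows. This is an assumed implementation, with a hypothetical helper name, that keeps a window of consecutive trials only when every RT in it lies between 150 ms and 2,500 ms.

```python
def valid_windows(rts, size, fastest=150, slowest=2500):
    """Return start indices of runs of `size` consecutive trials with all RTs in range."""
    return [
        start
        for start in range(len(rts) - size + 1)
        if all(fastest <= rt <= slowest for rt in rts[start:start + size])
    ]

rts = [400, 120, 600, 700, 650, 2600, 800]   # made-up RTs in ms
print(valid_windows(rts, size=2))  # [2, 3]
print(valid_windows(rts, size=3))  # [2]
```

Pairs would feed calculations that need only the error and the following trial, and triplets those that also need the trial before the error.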

The Supplementary Material contains analyses confirming that, in line with expectations and in both experiments, errors were faster and more frequent in the speed-emphasis conditions, and less frequent and slower in the accuracy-emphasis conditions.

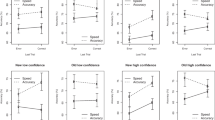

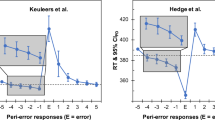

The plots in Fig. 1 establish that, for both experiments, post-error slowing occurred under speed emphasis and post-error speeding occurred under accuracy emphasis. For Rae et al. (2014), paired-sample t-tests confirm the consistency of these differences in post-error slowing between speed and accuracy conditions across the robust (speed mean, Ms = 19.15, accuracy mean, Ma = -19.05, t[46] = 3.26, p = .002), matched (Ms = 19.29, Ma = -38.64, t[46] = 5.21, p < .001), and standard (Ms = 17.28, Ma = -15.05, t[46] = 3.15, p = .003) methods. For Osth et al. (2017), similar t-tests found reliable changes between conditions for the matched (Ms = 13.86, Ma = -34.85, t[45] = 3.34, p = .002) and standard methods (Ms = 6.34, Ma = -37.59, t[45] = 2.98, p = .005), but not the robust method, although the latter is in the expected direction. These results support our hypothesis that the two types of error are associated with differing post-error adjustments.
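For readers less familiar with the test reported above, the paired-sample t statistic can be computed from per-participant post-error changes as in the following sketch. The values are invented for illustration and are not the experimental data; the function name is hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(speed_scores, accuracy_scores):
    """Return (t, degrees of freedom) for paired per-participant scores."""
    diffs = [s - a for s, a in zip(speed_scores, accuracy_scores)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)   # sample SD of the differences
    return mean(diffs) / standard_error, n - 1

# Hypothetical post-error changes (ms) for four participants.
speed = [20, 10, 30, 15]
accuracy = [-10, -20, 5, -15]
t_value, df = paired_t(speed, accuracy)
print(f"t[{df}] = {t_value:.2f}")  # t[3] = 23.00
```

The degrees of freedom equal the number of participants minus one, which matches the t[46] and t[45] values reported for samples of 47 and 46 participants.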

Fig. 1 Post-error response time changes under speed and accuracy emphasis for the robust, matched, and standard calculation methods. The top row of plots represents data from Rae et al. (2014). The bottom row of plots represents data from Osth et al. (2017). Positive results indicate post-error slowing. ‘s’ and ‘a’ indicate speed and accuracy; ‘*’ indicates a two-tailed t-test of the difference from zero was significant at p = .05. Error bars indicate the standard error of the mean.

We hypothesized that there would be more response-speed errors in faster RT bins and more evidence-quality errors in slower RT bins, and so predicted more post-error slowing for errors made in the fastest 50% of responses (hereafter, earlier errors), and more post-error speeding for errors made in the slowest 50% of responses (hereafter, later errors). Figure 2 confirms these predictions for both conditions and experiments and for all calculation methods, although to a lesser degree for the standard method. Inferential analyses support these visual interpretations. We performed two-way within-subjects ANOVAs for each calculation method, using condition (speed/accuracy) and error placement (earlier/later) as factors. The main effect of error placement was significant for Rae et al. (2014) for the robust (p = .032) and matched (p = .035) methods, but not the standard (p = .199) method. This pattern was replicated for Osth et al. (2017), where the robust (p = .007) and matched (p = .029) methods again reached significance, but not the standard (p = .096) method. Full ANOVA results are reported in the Supplementary Material.
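The earlier/later split can be sketched as below. This sketch assumes the cut point is the median RT of all responses in a condition and uses the robust difference (trial after minus trial before each error); the precise binning used in the reported analyses may differ. The function name is hypothetical, and `min_errors` mirrors the five-error exclusion criterion given in the Fig. 2 caption.

```python
from statistics import mean, median

def pes_by_error_speed(rts, correct, min_errors=5):
    """Robust post-error change for errors faster vs. slower than the median RT.

    Returns None for a bin with fewer than `min_errors` usable errors.
    """
    cut = median(rts)
    bins = {"earlier": [], "later": []}
    for i in range(1, len(rts) - 1):
        # Use only errors preceded and followed by a correct response.
        if not correct[i] and correct[i - 1] and correct[i + 1]:
            key = "earlier" if rts[i] <= cut else "later"
            bins[key].append(rts[i + 1] - rts[i - 1])
    return {k: (mean(v) if len(v) >= min_errors else None) for k, v in bins.items()}

# Toy sequence (ms); min_errors is lowered only so the tiny example produces output.
rts = [500, 300, 560, 520, 900, 480, 510]
correct = [True, False, True, True, False, True, True]
print(pes_by_error_speed(rts, correct, min_errors=1))
# {'earlier': 60, 'later': -40}
```

In the toy data the fast error is followed by slowing and the slow error by speeding, which is the direction of the pattern predicted for earlier and later errors.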

Fig. 2 Post-error response time changes using the robust, matched, and standard calculation methods for speed and accuracy emphasis and error position (earlier/later). Participants who had fewer than five suitable errors in a bin were removed entirely from analyses. Error bars represent the within-subjects standard error of the mean (Morey, 2008).

Discussion

In line with our predictions, in the two recognition memory experiments we analyzed, Rae et al. (2014) and Osth et al. (2017), we found post-error slowing when response-speed errors dominated, and post-error speeding when evidence-quality errors dominated. In order to control for potential confounds in the standard measurement method, we also used the robust (Dutilh et al., 2012) and matched (Hajcak & Simons, 2002) methods of calculating post-error changes. We examined two factors that we supposed affected the preponderance of each type of error: speed versus accuracy emphasis instructions and overall response speed. Analyses reported in the Supplementary Material comparing the speed of correct and error responses supported a shift from evidence-quality errors when accuracy was emphasized, to response-speed errors when speed was emphasized. We observed a corresponding shift from post-error speeding under accuracy emphasis to post-error slowing under speed emphasis. Because evidence-quality errors are slower than response-speed errors, they should become more common in the slower half of responses than in the faster half of responses. In line with this expectation and the hypothesized relationship with post-error changes, in both experiments errors sped up relative to correct responses as overall response speed decreased within both the speed and accuracy conditions.

Post-error slowing has been widely reported and typically associated with easy decisions. It is usually explained by an adaptive reaction that controls error rates by increasing response caution. Easy decisions are likely associated with a preponderance of response-speed errors, so these findings are consistent with the association we propose, and the explanation is consistent with increased response caution being an effective means of reducing response-speed errors. Reports of post-error speeding are less common, typically occurring in lower accuracy conditions, consistent with the association we propose. It has been reported in two different types of paradigms associated with two different explanations.

The orienting account predicts post-error speeding when errors are more common than correct responses and post-error slowing when errors are less common. This occurs because rarer responses evoke an orienting response that slows the subsequent trial. Notebaert et al. (2009a; see also Núñez Castellar et al., 2010) tested this account in a multiple-choice paradigm where correct responses were rarer and errors predominated, and found post-error speeding (see Notes). Although the orienting account might accommodate the post-error slowing we observed, it does not account for our post-error speeding findings because correct responses were usually twice as common as errors. Also, speeding only occurred in the higher accuracy condition, whereas the orienting account predicts it should be more common when accuracy is lower.

Williams et al. (2016) found post-error speeding in a task requiring very difficult choices. Their “post-error recklessness” account suggested that participants became impatient when they realized accuracy could not be controlled by being more cautious. Subsequently they responded less cautiously, and hence faster and less accurately, on the following trial. Consistent with a voluntary cause, they found speeding in a difficult choice was reduced when accurate responding was financially rewarded. Similar explanations have been supported in gambling (Verbruggen, Chambers, Lawrence & McLaren, 2017) and game-like tasks (Dyson, Sundvall, Forder, & Douglas, 2018) where increased response caution is ineffective in improving accuracy. In the current experiments, participant errors in the accuracy condition were signalled by feedback that was likely aversive, particularly as it signalled that they had failed in the goal emphasized in task instructions. Hence, they may have experienced greater disappointment following errors under accuracy than speed emphasis, and so more reckless responding followed.

These proposed emotional reactions might be seen as maladaptive; however, when viewed from the standpoint of reward-rate optimization (Bogacz et al., 2006) they could also be viewed as adaptive – increasing the number of opportunities to make a correct response by increasing the number of trials one could complete. In our paradigm only a fixed number of trials were available, so this was not the case. However, it is possible that the habit of taking reward rate into account for situations where that is adaptive may have been inappropriately generalized to the experimental setting.

In the Supplementary Material we also examined post-error changes in accuracy using the standard, robust, and matched calculations. For Rae et al. (2014) no effects were significant, but for Osth et al. (2017) there was a clear decrease in accuracy following an error, which tended to be larger for the accuracy condition. Although greater post-error accuracy under speed rather than accuracy emphasis is consistent with the standard cognitive control account, at the very least our findings suggest the account is incomplete. One possible explanation is that the negative affect experienced following an error reduces accuracy because it takes away attentional resources from processing on the subsequent trial (Ben-Haim et al., 2016). To the degree that the disappointment caused by an error is greater under accuracy emphasis, this could also explain the associated decrease in accuracy relative to the speed emphasis condition.

Alternatively, Rabbitt and Rodgers (1977) suggested a decrease in accuracy could index an error correction reflex that interferes with responding on the subsequent trial (also see Crump & Logan, 2012). Consistent with this explanation, incorrect post-error responses are often the correct response to the previous trial, and when the stimulus from the error trial is repeated, responses are typically faster and more accurate (Rabbitt, 1969; Rabbitt & Rodgers, 1977). Error corrections of this type are particularly apparent when the inter-response interval is quite short, and they are associated with amplified post-error slowing (Jentzsch & Dudschig, 2009). However, effects of a short inter-response interval would not have been apparent in Osth et al.’s (2017) data because of the requirement for a post-choice confidence rating. Further, in these data we observed that a greater decrease in accuracy was associated with post-error speeding, rather than post-error slowing.

Overall, our results suggest that although error-type has a key role in post-error adjustments, this factor interacts with a number of other processes. One is adaptive management of speed-accuracy tradeoffs – although unlike many current conceptions, we propose that this could be slowing or speeding, depending on error type and perhaps other contextual factors. Another component could be an error correction reflex that is important at short inter-response intervals. A third is an emotional component that causes post-error recklessness, which may be maladaptive in terms of reducing attentional resources required on the subsequent trial.

One potential approach to the challenge of separating these effects is through applying the evidence-accumulation models that inspired our predictions about different error types. We did not pursue this approach here because of the challenges of fitting such models while taking into account the confounding factors addressed by the robust and matched methods of calculating post-error effects. Although these same methods could be used to extract a subset of data that could then be fit with such models, the reduced sample size and corresponding increase in measurement error are potentially problematic. Dutilh et al. (2013) addressed this issue using a simplified evidence-accumulation model that can be estimated based on fewer trials because it removes between-trial variability in rate and starting-point parameters. Unfortunately, according to our proposal these are exactly the features necessary to explain the relative speed of error and correct responses, and to accommodate response-speed and data-quality errors. In future work we plan to explore whether it is possible to gain further insight into the mechanisms underlying post-error slowing by using hierarchical Bayesian estimation (Heathcote et al., 2019). In doing so we will attempt to ameliorate measurement noise issues when fitting evidence-accumulation models with the full suite of trial-to-trial variability parameters required to provide a comprehensive model of error phenomena.

Finally, we believe our results have marked implications for the burgeoning literature using post-error effects in a range of applied research areas. We advise caution in interpreting different degrees of post-error slowing as indicative of differences in cognitive control, at least unless there is reason to believe that the groups or tasks being compared do not differ in the proportions of response-speed versus data-quality errors. To the degree that such differences are controlled, our results offer a new perspective when post-error speeding is observed, suggesting that more reckless responses may arise as part of a more general control system that optimizes speed, accuracy, and reward-rate trade-offs.

Notes

Oddball tasks have been used to provide further support for an orientation component to post-error slowing: when irrelevant auditory cues are provided, responses following novel cues are slower and less accurate than responses following non-novel cues (but not slower and less accurate than uncued responses, suggesting the benefit of a cue may be diminished if the cue is novel; Parmentier & Andrés, 2010). Parmentier, Vasilev, and Andrés (2019) also found an interaction effect for post-error slowing and auditory cue type (novel vs. non-novel), further suggesting an orientation effect may contribute to post-error slowing for tasks with auditory cues.

References

Ben-Haim, M. S., Williams, P., Howard, Z., Mama, Y., Eidels, A., & Algom, D. (2016). The emotional Stroop task: Assessing cognitive performance under exposure to emotional content. Journal of Visualized Experiments, 112, e53720. https://doi.org/10.3791/53720

Bogacz, R., Brown, E., Moehlis, J., Holmes, P., & Cohen, J. D. (2006). The physics of optimal decision making: A formal analysis of models of performance in two-alternative forced-choice tasks. Psychological Review, 113, 700–765. doi: https://doi.org/10.1037/0033-295X.113.4.700

Brown, S. D., & Heathcote, A. (2008). The simplest complete model of choice reaction time: Linear ballistic accumulation. Cognitive Psychology, 57, 153-178. doi: https://doi.org/10.1016/j.cogpsych.2007.12.002

Crump, M. J., & Logan, G. D. (2012). Prevention and Correction in Post-Error Performance: An Ounce of Prevention, a Pound of Cure. Journal of Experimental Psychology: General, 142, 692. doi: https://doi.org/10.1037/a0030014

Dutilh, G., Forstmann, B. U., Vandekerckhove, J., & Wagenmakers, E. J. (2013). A diffusion model account of age differences in posterror slowing. Psychology and Aging, 28, 64-76. doi: https://doi.org/10.1037/a0029875

Dutilh, G., van Ravenzwaaij, D., Nieuwenhuis, S., van der Maas, H. L. J., Forstmann, B. U., & Wagenmakers, E.-J. (2012). How to measure post-error slowing: A confound and a simple solution. Journal of Mathematical Psychology, 56, 208-216. doi: https://doi.org/10.1016/j.jmp.2012.04.001

Dyson, B. J., Sundvall, J., Forder, L., & Douglas, S. (2018). Failure generates impulsivity only when outcomes cannot be controlled. Journal of Experimental Psychology: Human Perception and Performance, 44, 1483-1487. https://doi.org/10.1037/xhp0000557

Hajcak, G., & Simons, R. F. (2002). Error-related brain activity in obsessive–compulsive undergraduates. Psychiatry Research, 110, 63-72. https://doi.org/10.1016/S0165-1781(02)00034-3

Heathcote, A., Lin, Y. S., Reynolds, A., Strickland, L., Gretton, M., & Matzke, D. (2019). Dynamic models of choice. Behavior Research Methods, 51, 961-985. https://doi.org/10.3758/s13428-018-1067-y

Jentzsch, I., & Dudschig, C. (2009). Why do we slow down after an error? Mechanisms underlying the effects of posterror slowing. Quarterly Journal of Experimental Psychology, 62, 209-218. https://doi.org/10.1080/1747021080224065

Laming, D. (1968). Information theory of choice-reaction times. New York: Academic Press.

Laming, D. (1979). Autocorrelation of choice-reaction times. Acta Psychologica, 43(5), 381-412.

Morey, R. D. (2008). Confidence intervals from normalized data: A correction to Cousineau (2005). Quantitative Methods For Psychology 2, 61-64.

Notebaert, W., Houtman, F., Opstal, F. V., Gevers, W., Fias, W., & Verguts, T. (2009a). Post-error slowing: An orienting account. Cognition, 111(2), 275-279. doi: https://doi.org/10.1016/j.cognition.2009.02.002

Núñez Castellar, E., Kühn, S., Fias, W., & Notebaert, W. (2010). Outcome expectancy and not accuracy determines posterror slowing: ERP support. Cognitive, Affective, & Behavioral Neuroscience, 10, 270-278. https://doi.org/10.3758/CABN.10.2.270

Osth, A., Bora, B., Dennis, S. & Heathcote, A. (2017). Diffusion vs. linear ballistic accumulation: Different models, different conclusions about the slope of the zROC in recognition memory. Journal of Memory and Language, 96, 36-61. doi:https://doi.org/10.1016/j.jml.2017.04.003

Parmentier, F. B., & Andrés, P. (2010). The involuntary capture of attention by sound. Experimental Psychology, 57, 68-76. https://doi.org/10.1027/1618-3169/a000009

Parmentier, F. B., Vasilev, M. R., & Andrés, P. (2019). Surprise as an explanation to auditory novelty distraction and post-error slowing. Journal of Experimental Psychology: General, 148, 192-200. https://doi.org/10.1037/xge0000497

Rabbitt, P. (1969). Psychological refractory delay and response-stimulus interval duration in serial, choice-response tasks. Acta Psychologica, 30, 195-219. doi: https://doi.org/10.1016/0001-6918(69)90051-1

Rabbitt, P., & Rodgers, B. (1977). What does a man do after he makes an error? an analysis of response programming. The Quarterly Journal of Experimental Psychology, 29, 727-743. doi: https://doi.org/10.1080/14640747708400645

Rabbitt, P. M. A., & Vyas, S. M. (1970). An elementary preliminary taxonomy for some errors in laboratory choice RT tasks. Acta Psychologica, 33, 56-76. doi: https://doi.org/10.1016/0001-6918(70)90122-8

Rae, B., Heathcote, A., Donkin, C., Averell, L. & Brown, S. (2014). The Hare and the Tortoise: Emphasizing speed can change the evidence used to make decisions. Journal of Experimental Psychology: Learning, Memory & Cognition, 40, 1226-1243. doi: https://doi.org/10.1037/a0036801

Ratcliff, R., & Rouder, J. (1998). Modelling response times for two-choice decisions. Psychological Science, 9, 347-356. doi: https://doi.org/10.1111/1467-9280.00067

Stone, M. (1960). Models for choice-reaction time. Psychometrika, 25, 251-260.

Verbruggen, F., Chambers, C. D., Lawrence, N. S., & McLaren, I. P. (2017). Winning and losing: Effects on impulsive action. Journal of Experimental Psychology: Human Perception and Performance, 43, 147. doi: https://doi.org/10.1037/xhp0000284

Williams, P., Heathcote, A., Nesbitt, K., & Eidels, A. (2016). Post-error recklessness and the hot hand. Judgment and Decision Making, 11, 174-184.

Acknowledgements

Karlye Damaso and Paul Williams would like to acknowledge the Department of Education and Training for provisions of Australian Postgraduate Award Scholarships during periods of manuscript preparation. Andrew Heathcote would like to acknowledge Australian Research Council grant DP160101891 for supporting his work on this project.

The data used in this manuscript have been made available on OSF. The data were not from preregistered experiments.

Karlye Damaso and Paul Williams share first author position.

Cite this article

Damaso, K., Williams, P. & Heathcote, A. Evidence for different types of errors being associated with different types of post-error changes. Psychon Bull Rev 27, 435–440 (2020). https://doi.org/10.3758/s13423-019-01675-w

DOI: https://doi.org/10.3758/s13423-019-01675-w