Abstract

We are surrounded by an endless variation of objects. The ability to categorize these objects represents a core cognitive competence of humans and possibly all vertebrates. Research on category learning in nonhuman animals started with the seminal studies of Richard Herrnstein on the category “human” in pigeons. Since then, we have learned that pigeons are able to categorize a large number of stimulus sets, ranging from Cubist paintings to English orthography. Strangely, this prolific field has largely neglected to also study the avian neurobiology of categorization. Here, we present a hypothesis that combines experimental results and theories from categorization research in pigeons with neurobiological insights on visual processing and dopamine-mediated learning in primates. We conclude that in both fields, similar conclusions on the mechanisms of perceptual categorization have been drawn, despite very little cross-reference or communication between these two areas to date. We hypothesize that perceptual categorization is a two-component process in which stimulus features are first rapidly extracted in a feed-forward process, thereby enabling a fast subdivision along multiple category borders. In primates this seems to happen in the inferotemporal cortex, while pigeons may primarily use a cluster of associative visual forebrain areas. The second process rests on dopaminergic error-prediction learning that enables prefrontal areas to connect top down the relevant visual category dimension to the appropriate action dimension.


The world surrounding us offers an endless variety of scenes and objects. Just look around. You might see a desk, chairs, books, computers; you instantly recognize them as belonging to a certain category (furniture, electronics, etc.), although your specific desk may be unique to you. The apparent ease of your categorical recognition belies the complexity of this feat: We effortlessly detect and categorize tens of thousands of objects from countless possible angles and distances. And we do so in the blink of an eye (Grill-Spector & Kanwisher, 2005). How is this possible? This article reviews psychological and neurobiological studies that try to answer this question.

Our review will focus on perceptual categorization in nonhuman animals. This research field started with pigeon experiments, and perceptual category learning in birds is still a highly prolific area of investigation. As successful as this research area is, it suffers from a curious neglect of the neurobiological fundaments of categorization learning in birds. This is a real deficit, as incorporating neurobiology into research on bird learning and cognition could provide two unprecedented opportunities: First, it could deepen our understanding of the mechanisms of category learning in birds from a different angle. Second, it would establish an avian alternative to the successful neuroscientific inquiries on categorization that use rodent and primate models. Therefore, we will also review some of the new developments in neuroscience outside the avian realm and outline their possible impact on perceptual categorization learning research in pigeons. By restricting ourselves in this way, we unfortunately have to ignore a vast and highly interesting comparative literature on abstract concept learning. We apologize to all the investigators whose research we do not appropriately cite owing to this restriction.

Categories and concepts

Modified from Keller and Schoenfeld (1950), Cohen and Lefebvre (2005) defined categorization as an organism’s ability to respond equivalently to members of the same class, to respond differently to members of a different class, and to transfer their reports to novel and different members of these classes. Categorization is a key component of our cognitive system since it drastically reduces information load (Wasserman, Kiedinger, & Bhatt, 1988). Due to the evolutionary relevance of categorization learning, humans (Rosch, Mervis, Gray, Johnson, & Boyes-Braem, 1976; Hegdé, Bart, & Daniel, 2008) and many more animals (e.g., pigeons: Herrnstein & Loveland, 1964; Yamazaki, Aust, Huber, Hausmann, & Güntürkün, 2007; dogs: Range, Viranyi, & Huber, 2007; monkeys: Kromrey, Maestri, Hauffen, Bart, & Hegdé, 2010; Sigala & Logothetis, 2002; honey bees: Benard, Stach, & Giurfa, 2006) learn to react in a similar manner to different objects from the same category.

Usually, categories are distinguished from concepts, although, possibly, this distinction is not a binary difference but a continuous transition. A category refers to a set of entities that are grouped by overlapping perceptual features. Take, for example, the perceptual category “book.” Books can come in different sizes, in different boards and scripts, with hard or soft cover. But despite these variations, their overlapping features make it easy to retrieve the category book when seeing one. Importantly, different kinds of books can be treated equivalently as if they were the same, as soon as they are grouped in the same category (Sidman, 1994). Indeed, once objects are classified as belonging to a joint category, memory about individuating features of the diverse items starts to suffer (Lupyan, 2008).

But what about e-books? Are they part of the same category as printed books? And what about the books that were read in the ancient civilizations of Egypt or Rome? These were handwritten on long strips of papyrus and were read by rolling a stretch of papyrus from left to right. After reading, this scroll was placed in a jar, together with further scrolls. What looked like a modern book in ancient Rome was in fact not a book but a codex, and it consisted of official documents bound together. Is it still possible to come up with the category book when taking e-books and ancient scrolls into consideration? This problem grows even larger when we reflect about sayings such as “I can read him like a book.” All of these examples cannot be jointly bound in the perceptual category “book.” They are, however, part of the concept of books (Goldstone, Kersten, & Carvalho, 2017). Concepts are mental elements of knowledge about objects that have joint features or functions that do not need to be perceptual. In a famous saying of Barsalou (1983), the concept of “things to remove from a burning house” means that even children and jewelry become similar and conceptually bound. It is important to distinguish between perceptual categories and concepts. As we will see below, pigeons can learn an astonishing variety of perceptual categories. But they also seem to master some abstract concepts, such as “number,” identity, and higher order relations (for review, see Lazareva & Wasserman, 2017). As outlined above, however, we will solely focus on perceptual categories in the present review.

Behavioral experiments on perceptual categorization in pigeons

Scientific inquiry into categorization took a major shift in the mid-1960s. Until that time, categorization was seen as a language-based cognitive ability that was the exclusive realm of human cognition. Then, Herrnstein and Loveland (1964) published their now-classic study in which they demonstrated that pigeons can quickly acquire the category “human” after being conditioned to discriminate between hundreds of photographs of which some depicted humans (Herrnstein and Loveland used the word “concept” in their title). The birds not only easily learned to properly discriminate the training stimuli but also transferred their knowledge to novel photographs. Numerous subsequent experiments further explored pigeons’ categorization abilities using natural stimuli, such as birds versus mammals (Kendrick, Wright, & Cook, 1990), individual pigeons (Nakamura, Croft, & Westbrook, 2003), cartoons (Matsukawa, Inoue, & Jitsumori, 2004), human action poses (Qadri & Cook, 2017), different painting styles (Watanabe, 2011; Watanabe, Sakamoto, & Wakita, 1995), human facial expressions (Jitsumori & Yoshihara, 1997), or human face identity (Soto & Wasserman, 2011). Pigeons even successfully discriminate malignant from benign human breast histopathology (Levenson, Krupinski, Navarro, & Wasserman, 2015) and can distinguish English words from nonwords (Scarf, Boy, et al., 2016). Taken together, pigeons seem to have unexpectedly large resources to learn various perceptual categories.

All of these studies adopted the basic procedure employed by Herrnstein and Loveland (1964): They used an initially large number of stimuli during discrimination acquisition, followed by testing transfer ability with novel stimuli. This procedure should ensure that discrimination has been established and that subsequent categorization testing is not based on rote memory. Successful transfer to previously unknown exemplars of the learned category is then usually taken as evidence of open-ended categorization ability (Herrnstein, 1990). However, this approach does not necessarily guarantee that pigeons indeed had only used the presence or absence of humans in photographs to decide between S+ and S−, respectively. The reason is simple: Humans are often depicted alongside furniture, streets, houses, or cars. These items could then be used as an extended feature collection of the category “human.” Indeed, pigeons can learn to categorize “man-made objects” (Lubow, 1974). So the animals in the study of Herrnstein and Loveland (1964) may simply have used perceptual background features that co-occur with humans to master the task. Such a strategy would in principle still be based on perceptual categorization, but using different features than what we had expected. Thus, in order to identify the nature of the formed category, we have to find means to reliably identify the utilized visual cues. The relevance of this quest is depicted by results of, for example, Greene (1983), who discovered that her pigeons exploited spurious systematic differences of the background in a “human” categorization task, rather than using the actual presence or absence of people.

Different approaches to this problem discovered that pigeons can rely on a mixture of background and relevant stimulus category information to base their decision on. Wasserman, Brooks, and McMurray (2015) demonstrated that pigeons can successfully learn to categorize in parallel 128 photographs into 16 different categories. Since no background cues were available, the animals had to use pictorial aspects of the stimuli. However, the spatial location of the photographs turned out to affect choices in the beginning of the experiment. This vanished during the progress of learning. Thus, all kinds of cues can affect categorization learning. To demonstrate that pigeons can indeed also learn about human body-unique information, Aust and Huber (2001) trained their pigeons in a people/no-people experiment. During transfer trials, pictures of novel human figures were cut out and pasted on previously seen “no people” stimuli. Thus, the pigeons were now confronted with a stimulus that contained a previously learned negative background that was combined with a novel positive foreground that depicted a person. The animals pecked on such manipulated stimuli, thereby making it likely that they indeed had used features related to the human body (see also Aust & Huber, 2002). Further studies tried to reconstruct the stimulus properties that control categorization behavior by randomly covering parts of the stimuli with “bubbles.” In this experiment, pigeons and humans had to decide if the depicted person had a happy or a neutral expression. By analyzing the success of the subjects relative to the visible components of the stimuli, Gibson, Wasserman, Gosselin, and Schyns (2005) discovered that both humans and pigeons mainly relied on the mouth part of the photographs. 
To directly reveal the focus of attention of pigeons during perceptual categorization tasks, Dittrich, Rose, Buschmann, Bourdonnais, and Güntürkün (2010) introduced peck tracking to examine the pecking locations of pigeons in a people-present/people-absent task. Their results revealed that pecking location was mostly focused on the head of the human figures. Removal of the heads of the depicted persons impaired performance, while removal of other parts of the human figures did not. Using this technique, Castro and Wasserman (2014, 2017) also demonstrated that pigeons track features that are category relevant and respond less to details that only weakly coincide with the presence of the relevant stimulus. The results of these studies make it likely that pigeons selectively attend to and choose features that are diagnostic for the presence of the learned perceptual category.

Taken together, pigeons can learn an astonishing variety of perceptual categories. In doing so, they seem to focus their attention on specific features that predict the presence of a stimulus that belongs to the rewarded category. If background patterns systematically correlate with the presence of an S+, the behavior of the animals can also come under the control of such patterns. We will dwell on the implications of these observations in the next section.

Learning perceptual categories—Excursion 1: The reward prediction error and dopamine

Several theoretical accounts have been offered to explain category learning. As outlined in the very beginning, we will not review all of these diverse attempts but will only focus on those theories that might inform a mechanistic hypothesis on the neurobiological fundaments of perceptual categories in birds. To this end, we obviously have to start with the mechanisms of learning.

Every stimulus offers a diversity of features, of which only some are category relevant. In the beginning, the animal can do nothing more than proceed by using trial and error to identify the features that are correlated with reward. Consequently, several categorization theories have incorporated error-driven learning rules that allow emergence of a selective attention to relevant stimulus dimensions (Gluck & Bower, 1988; Kruschke, 1992; Rumelhart, Hinton, & Williams, 1986). Assuming that the increase in associative strength between a stimulus and an outcome is proportional to the error magnitude between prediction and outcome, a gradient descent of error is to be expected. This obviously is also the core assumption of the error-driven learning rule by Rescorla and Wagner (1972). Recently, Soto and Wasserman (2010) put forward a common elements model of visual categorization that incorporates this rule as a driving force to learn common features within individual stimuli that belong to a category. None of these features has to be present in all exemplars of a category, and thus none of them can fully explain categorization behavior. Similarly, the common elements model assumes that each image is represented by an overlapping collection of elements. Elements that are only activated by a single image are stimulus-specific properties that drive the identification of this individual stimulus. Elements that are activated by several images from the same category are category specific and drive categorization learning. Cook, Wright, and Drachman (2013), for example, trained pigeons on line drawings of birds and mammals. When analyzing transfer to novel instances, the authors discovered that the birds had mostly learned some key visual features that helped to disambiguate between classes. Thus, the pigeons were able to select the diagnostic parts and were able to tolerate some image alterations as long as the core features were preserved. 
The common elements model would predict such findings but also those of low-level features that come to predict categorization learning (Greene, 1983; Huber, Troje, Loidolt, Aust, & Grass, 2000; Troje, Huber, Loidolt, Aust, & Fieder, 1999).
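The error-driven learning of common elements described above can be illustrated with a small simulation. The following is a minimal sketch and not the implementation of Soto and Wasserman (2010): it assumes that each image activates one element shared by all members of its category plus one element unique to that image. The element indices, the learning rate `alpha`, and the number of epochs are illustrative assumptions.

```python
def rescorla_wagner(trials, n_elements, alpha=0.1, epochs=50):
    """Learn one associative weight per element with the Rescorla-Wagner rule.

    trials: list of (active_elements, reward) pairs; reward is 1.0 for S+
    and 0.0 for S-. All names and parameter values are illustrative.
    """
    weights = [0.0] * n_elements
    for _ in range(epochs):
        for active, reward in trials:
            prediction = sum(weights[i] for i in active)
            error = reward - prediction          # prediction error
            for i in active:
                weights[i] += alpha * error      # same error updates every active element
    return weights


def predict(weights, active):
    """Summed associative strength of the elements activated by an image."""
    return sum(weights[i] for i in active)


# Hypothetical element coding (an assumption made for this example):
#   0 = element shared by all S+ images, 1 = element shared by all S- images,
#   2-5 = elements unique to the four training images,
#   6-7 = elements unique to two novel transfer images.
training = [
    ((0, 2), 1.0),  # S+ image 1
    ((0, 3), 1.0),  # S+ image 2
    ((1, 4), 0.0),  # S- image 1
    ((1, 5), 0.0),  # S- image 2
]
weights = rescorla_wagner(training, n_elements=8)

novel_s_plus = predict(weights, (0, 6))   # never-seen member of the rewarded category
novel_s_minus = predict(weights, (1, 7))  # never-seen member of the unrewarded category
print(f"shared S+ element: {weights[0]:.2f}, unique elements: {weights[2]:.2f}, {weights[3]:.2f}")
print(f"novel S+: {novel_s_plus:.2f}, novel S-: {novel_s_minus:.2f}")
```

Because the shared element is active on every rewarded trial, it accumulates more associative strength than any image-specific element. In this toy setting, that is why responding transfers to novel exemplars of the rewarded category.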

Studies of reward processing by dopaminergic midbrain neurons and their target regions in mammalian frontostriatal networks have shed light on the mechanisms by which nervous systems update predictions, associate subjective values to events, and select responses based on feedback (Schultz, 2016). These insights provide a post hoc neurophysiological correlate of the Rescorla and Wagner (1972) theory. Let us therefore make an excursion into the dopaminergic system before later coming back to categorization learning in birds.

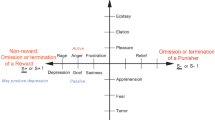

Midbrain dopaminergic cell groups in mammals project to frontostriatal targets and beyond. Dopamine neurons show increased activation when a reward is received (Waelti, Dickinson, & Schultz, 2001). This activation has different components, but the most important one for the present account is constituted by a fast activation or depression of the dopaminergic signal that codes a positive or a negative reward prediction error, respectively (Nomoto, Schultz, Watanabe & Sakagami, 2010; Schultz, 2016). When reward is contingently preceded by a cue, dopaminergic activity shifts backward in time to the reward predicting cue such that the burst is now correlated with the CS+, but not with the reward itself anymore (Schultz, 1998). When a predicted reward is not delivered, or is smaller than predicted, dopamine neurons drop their activity at the time point of expected reward delivery. The same neurons show an increase of activation when the reward was larger than predicted (Bayer & Glimcher, 2005). Schultz (2016) argues that these responses function as feature detectors for the goodness of a reward relative to its prediction: If the reward is fully predicted, then no signal change occurs. If the reward is better than predicted, then dopamine neurons emit a positive signal. If the reward is smaller than predicted, then the same neurons emit a negative signal (see Fig. 1a). Dopamine neurons also comply with further assumptions of Rescorla and Wagner (1972), such as, for example, the blocking effect (Waelti et al., 2001). Based on these findings, Montague, Hyman, and Cohen (2004) have argued that dopamine neurons essentially perform the computation proposed by the Rescorla–Wagner theory. Thus, when predictions turn out to be wrong, dopamine retunes the system to improve its predictions. According to this view, dopamine activity carries a teaching signal that modifies the response selection (see Fig. 1b).

Dopamine-mediated reward prediction error hypothesis (Schultz, 1998). a Activity pattern of a dopamine neuron during the process of classical conditioning. In Trial 1 the animal did not expect a reward. Therefore, the dopamine cell responds to reward delivery with a sharp increase of activity. In Trial n, when learning has become asymptotic, the dopamine neuron responds to the conditioned stimulus (CS; the less predictable cue) but no longer to the unconditioned stimulus (UCS; which is predicted by the CS). When the reward is omitted in Trial n + 1, the dopamine neuron ceases to fire at the time point when reward was expected. Thus, dopamine neurons can deliver a message of “better than expected” or “worse than predicted” to forebrain structures. b Dopamine-mediated signals could continuously adjust synapses in target areas, such that every action that produced an unexpected result triggers a new surge of synaptic changes that adjusts future actions.
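The backward shift of the prediction error from the reward to the CS can be reproduced with a temporal-difference (TD) learning rule, a real-time extension of the Rescorla–Wagner idea. The sketch below is a simplification under stated assumptions and not a model taken from the studies cited above: time between CS and reward is split into five discrete steps, the CS itself arrives unpredictably (so the state before it has a value of zero), and `alpha`, `gamma`, and the number of training trials are arbitrary illustrative values.

```python
def run_trial(values, reward, alpha=0.2, gamma=1.0, learn=True):
    """Run one conditioning trial and return the prediction error at each step.

    values[k] is the predicted future reward k steps after CS onset.
    The reward (if any) arrives on leaving the last state. The returned
    list starts with the error at CS onset and ends with the error at the
    time of reward.
    """
    n = len(values)
    # The CS appears unpredictably, so the state before it has value 0.
    deltas = [gamma * values[0] - 0.0]
    for k in range(n):
        r = reward if k == n - 1 else 0.0
        next_value = values[k + 1] if k + 1 < n else 0.0
        delta = r + gamma * next_value - values[k]   # TD prediction error
        if learn:
            values[k] += alpha * delta
        deltas.append(delta)
    return deltas


values = [0.0] * 5                                     # five time steps between CS and reward
first = run_trial(values, reward=1.0)                  # Trial 1: reward is unexpected
for _ in range(500):                                   # further training trials
    run_trial(values, reward=1.0)
trained = run_trial(values, reward=1.0, learn=False)   # Trial n: asymptotic learning
omitted = run_trial(values, reward=0.0, learn=False)   # Trial n + 1: reward omitted

for name, d in [("trial 1", first), ("trial n", trained), ("omission", omitted)]:
    print(f"{name:9s} error at CS: {d[0]:+.2f}   error at reward: {d[-1]:+.2f}")
```

In this toy model the error is positive at reward delivery on the first trial, moves to CS onset after training, and turns negative at the expected reward time when the reward is omitted. These are the three cases described for dopamine neurons.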

Learning perceptual categories—Excursion 2: The neuronal basis of categorization in primates

Over the past decade, neurobiological accounts of categorization in primates have taken a new route. About 10 years ago, the inferior temporal cortex (ITC) of monkeys was mostly seen as a mere storehouse of visual images that were selectively retrieved by the prefrontal cortex to enable category-based decisions. Recordings from the monkey ITC had revealed diverse cell populations, each responsive to a distinct but overlapping set of stimuli (Vogels, 1999). ITC neurons are known to respond to diagnostic features of stimuli that enable a successful categorization of these patterns (Sigala & Logothetis, 2002). During the process of learning a categorization task, the population response of ITC neurons shifts moderately to enable higher stimulus selectivity for the critical features (Baker, Behrmann, & Olson, 2002). Due to massive parallel processing of large numbers of neurons, selectivity for a combination of features is markedly enhanced during categorization learning, such that both feature-based and configuration-based coding is enabled within ITC at a single-cell level (Baker et al., 2002).

But is this category-coding ability of ITC-cells a result of top-down instructions from prefrontal areas, or does it emerge from bottom-up input? Already in the mid-1990s, Simon Thorpe demonstrated that when humans watched a stream of images where each image was flashed for just 20 ms, event-related potentials signaled categorical decisions within about 150 ms after stimulus appearance (Thorpe, Fize, & Marlot, 1996). This feat made a purely feed-forward-driven visual categorization mechanism likely. Subsequent studies demonstrated that human fast categorization is based on visual areas comparable to the monkey ITC (Curran, Tanaka, & Weiskopf, 2002). How is such a fast, purely feed-forward categorization at ITC level possible?

The primate ITC can solve categorization problems by rapidly computing a vector that distinguishes between various object categories, despite individual differences between objects within a class (DiCarlo, Zoccolan, & Rust, 2012). Just 50 ms after picture exposure, a population of monkey ITC neurons can be selective for a certain image category (DiCarlo & Maunsell, 2000). This is nicely shown in a study by Hung, Kreiman, Poggio, and DiCarlo (2005): Here, monkeys were shown 77 stimuli that could be categorized into eight classes, such as toys and faces, while ITC neurons were recorded. The activity of these neurons was then analyzed with a linear classifier—a machine learning algorithm that categorizes items based on the value of the linear combination of features of these items. A linear classifier that was trained with the input from the recorded neurons could categorize these stimuli with an accuracy of above 90%. The categorization accuracy of the classifier steeply increased with time, such that 70% accuracy was reached just 12.5 ms after the onset of activity of ITC neurons. Classifier performance also increased linearly with the number of recorded cells, such that only about 100 neurons were needed over a period of less than 20 ms to categorize stimuli with very high accuracy (Hung et al., 2005). These fast responses support bottom-up visual object categorization at ITC level despite changes in object position or background (Li, Cox, Zoccolan, & DiCarlo, 2009). So, is the primate ITC sufficient to run all processes that are needed for perceptual categorization from stimulus onset up to the final decision of the animal? Certainly not.
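To make the idea of a linear readout from a neuronal population concrete, the sketch below decodes category from simulated firing rates. It is a toy example under loud assumptions: the tuning of each hypothetical neuron is drawn at random, the noise level and trial counts are arbitrary, and the readout is a nearest-class-mean rule, which is one simple linear classifier and not necessarily the one used by Hung et al. (2005).

```python
import random


def make_tuning(n_neurons, n_categories, rng):
    """Hypothetical mean response of every neuron to every category."""
    return [[rng.gauss(0.0, 1.0) for _ in range(n_neurons)]
            for _ in range(n_categories)]


def sample_trials(tuning, n_trials, noise_sd, rng):
    """Noisy single-trial population responses, labelled by category."""
    return [([m + rng.gauss(0.0, noise_sd) for m in means], label)
            for label, means in enumerate(tuning)
            for _ in range(n_trials)]


def fit_centroids(trials, n_categories):
    """Average the training responses of each category."""
    n_neurons = len(trials[0][0])
    sums = [[0.0] * n_neurons for _ in range(n_categories)]
    counts = [0] * n_categories
    for response, label in trials:
        counts[label] += 1
        for i, rate in enumerate(response):
            sums[label][i] += rate
    return [[s / counts[c] for s in sums[c]] for c in range(n_categories)]


def classify(response, centroids):
    """Linear readout: pick the category with the largest w.x + b,
    where w is the class centroid and b = -0.5 * |w|^2."""
    def score(w):
        dot = sum(wi * xi for wi, xi in zip(w, response))
        return dot - 0.5 * sum(wi * wi for wi in w)
    return max(range(len(centroids)), key=lambda c: score(centroids[c]))


def decoding_accuracy(n_neurons, n_categories=8, noise_sd=2.0, seed=0):
    """Train on 20 trials per category, test on 20 fresh trials per category."""
    rng = random.Random(seed)
    tuning = make_tuning(n_neurons, n_categories, rng)
    train = sample_trials(tuning, 20, noise_sd, rng)
    test = sample_trials(tuning, 20, noise_sd, rng)
    centroids = fit_centroids(train, n_categories)
    hits = sum(classify(x, centroids) == label for x, label in test)
    return hits / len(test)


for n in (2, 10, 100):
    print(n, "neurons:", decoding_accuracy(n))
```

With these settings, decoding from a handful of simulated neurons is unreliable, whereas pooling over about 100 noisy, broadly tuned units makes the categories linearly separable. This mirrors the qualitative point of the recording study, without reproducing its numbers.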

The prefrontal cortex (PFC) of primates is a second critical area for categorization learning. When monkeys are trained to categorize different objects into two groups, PFC cells show a sharp differentiation between categories (Freedman, Riesenhuber, Poggio, & Miller, 2001). PFC cells also stay active during a delay period when the stimulus is no longer shown, and they display a high level of task-relevant activity during the subsequent decision phase (Lundqvist et al., 2016). In addition, category coding of PFC neurons quickly changes when new category boundaries are created: The neurons switch to the new boundaries and cease to respond to the old ones (Freedman et al., 2001). Thus, PFC neurons flexibly code the current category boundaries, based on the reward feedback that follows the animal’s own behavior (Freedman & Miller, 2008).

ITC and PFC interact closely during categorization. Existing category borders of ITC seem to be sharpened by PFC top-down input in a task-dependent manner (Kauffmann, Bourgin, Guyader, & Peyrin, 2015; Pannunzi et al., 2012). This top-down input becomes especially relevant during rule changes when new category borders have to be selected based on PFC circuits (Roy, Riesenhuber, Poggio, & Miller, 2010). Most importantly, even if multiple category borders are already represented at ITC level, it requires the PFC to learn the rules according to which certain categories are selected for action, while other category borders are neglected (Seger & Miller, 2010). Accordingly, monkeys with PFC lesions are, in principle, able to categorize objects but fail to translate this ability into rule-based decisions (Minamimoto, Saunders, & Richmond, 2010).

Taken together, a minimalistic account on the neural fundaments of perceptual categorization in primates involves two structures, ITC and PFC, which have complementary functions. The ITC encodes detailed visual information by a parallel activation of a large neuronal population. These differently tuned cells encode information about all kinds of category borders that can be extracted by simple computational means such as, for example, linear classifiers. In addition, the coding properties of these neurons can be modestly modified by dopaminergic input and by top-down influences from the PFC. Prefrontal areas, on the other hand, receive massive dopaminergic input and are importantly tuned by this prediction error coding feedback. The PFC interacts with the ITC to retrieve existing category-based information and to transform this into decision and action processes. The PFC thereby swiftly switches between different ITC-based category borders, depending on changing task contingencies. It should again be emphasized that this concentration on just the ITC and PFC is a minimalist view on the system—a view that ignores that structures like hippocampus and striatum also have their own unique contributions to specific other aspects of categorization behavior.

But now it is time to return to birds.

The avian visual pathways and the bird “prefrontal” system in a whirlwind

Both birds and mammals have two ascending visual pathways to the forebrain; the tectofugal (comparable to the mammalian extrageniculocortical system), and the thalamofugal pathway (comparable to the geniculocortical system).

The tectofugal pathway (see Fig. 2, depicted in dark yellow) ascends from the retina to the optic tectum, to the thalamic nucleus rotundus, and then finally terminates in the forebrain entopallium (Mouritsen, Heyers, & Güntürkün, 2016). In pigeons, the tectofugal system controls visual tasks in which the bird has to look at visual stimuli in its frontal visual field and responds to them (Remy & Güntürkün, 1991; Güntürkün & Hahmann, 1998). Because practically all perceptual categorization tasks in pigeons use setups in which the birds scrutinize the visual stimuli with their frontal visual field and then peck them, it is likely that the tectofugal system is the most important neural component for our understanding of perceptual categorization in pigeons. Entopallial neurons have reciprocal interactions with a cluster of associative areas (jointly depicted in green) that surround the entopallium and that alter their activity patterns during different visual object distinction tasks (Stacho, Ströckens, Xiao, & Güntürkün, 2016).

a Skull and brain of pigeon depicted as combined CT/MRI-based image (Güntürkün, Verhoye, De Groof, & Van der Linden, 2013). b Organization of the visual pathways in pigeons and their projections to the “prefrontal” NCL. Tectofugal system (shown in yellow) starts with the retinal projections to the optic tectum. From there, tectofugal projections reach, via the thalamic n. rotundus (Rt), the entopallium (E), which then projects to surrounding associative visual areas (shown in green). Thalamofugal visual system is shown in blue and begins with the retinal projections to the thalamic nucleus geniculate lateralis, pars dorsalis (GLd), and from there to the visual Wulst. Since the Wulst also projects to the associative visual areas, these structures receive input from both pathways and in addition interact with the nidopallium caudolaterale (NCL), which is a functional equivalent to the mammalian prefrontal cortex. NCL projects to the (pre)motor arcopallium and the striatum. From there, down-sweeping projections realize the motor output of the animal. Glass brain of the pigeon is based on Güntürkün et al. (2013). (Color figure online)

Recordings from entopallial neurons reveal that they respond to a large number of overlapping features. For example, Scarf, Stuart, Johnston, and Colombo (2016) recorded from the entopallium while the animals were required simply to peck on one of 12 different visual stimuli. The authors discovered that many cells in the entopallium were vigorously responding to several stimuli, while showing only modest modifications of their spike trains when processing distinct stimuli. Verhaal, Kirsch, Vlachos, Manns, and Güntürkün (2012) trained pigeons in a go/no-go task while recording from the entopallium. They discovered that entopallium neurons rapidly learn to respond to rewarded stimuli, while quickly ceasing to respond to no-go cues. Colombo, Frost, and Steedman (2001) and Johnston, Anderson, and Colombo (2017a, 2017b) demonstrated, in addition, that entopallium and associative visual area neurons keep firing for a specific cue during the delay period of a delayed-matching-to-sample task, and so possibly support the retention of critical visual information. Thus, entopallium and associative visual area neurons seem to prefer broad, overlapping stimulus classes and can modify their activity patterns according to reward-associated task contingencies.

The thalamofugal visual pathway (see Fig. 2; shown in blue) ascends from the retina to the thalamic nucleus geniculate lateralis, pars dorsalis (GLd) and from there to the visual Wulst in the telencephalon. The thalamofugal system seems to mainly receive visual input from the lateral visual field (Güntürkün & Hahmann, 1998; Remy & Güntürkün, 1991). The Wulst also has reciprocal connections with the visual associative areas. Thus, both major ascending visual pathways merge in this area (Shanahan, Bingman, Shimizu, Wild, & Güntürkün, 2013).

The associative visual areas have reciprocal connections with a structure in the most posterior part of the pigeon forebrain: the nidopallium caudolaterale (NCL; see Fig. 2). A large number of studies make it likely that the NCL is a functional equivalent to the PFC of mammals (Güntürkün, 2012). Similar to the PFC, the associative NCL integrates multimodal information and connects this higher order sensory input to limbic and motor structures, including the striatum (Kröner & Güntürkün, 1999). Thus, like the PFC, the avian NCL is also a convergence zone between the ascending sensory pathways and the descending motor systems (Güntürkün & Bugnyar, 2016). Accordingly, NCL neurons code for different modalities in a task-dependent manner (Moll & Nieder, 2015, 2017) and prospectively encode future behavior based on learned stimulus associations (Veit, Pidpruzhnykova, & Nieder, 2015). Importantly, they control what should be remembered and what should be forgotten (Rose & Colombo, 2005), encode future events (Scarf et al., 2011), decision-making (Lengersdorf, Güntürkün, Pusch, & Stüttgen, 2014), action-related subjective values (Kalenscher et al., 2005; Koenen, Millar, & Colombo, 2013), rule tracking (Nieder, 2017; Veit & Nieder, 2013), numerosity (Ditz & Nieder, 2015), visual category (Kirsch et al., 2009), and the association of outcomes to actions (Starosta, Güntürkün, & Stüttgen, 2013; Johnston et al., 2017a; Liu, Wan, Shang, & Shi, 2017).

NCL lesions also interfere with all cognitive tasks that are known to depend on the mammalian PFC (Güntürkün, 1997, 2005, 2012; Kalenscher, Ohmann, & Güntürkün, 2006). The learning-related plasticity of NCL very likely depends on its dense innervation by dopaminergic fibers that release dopamine during learning and executive tasks (Karakuyu, Herold, Güntürkün, & Diekamp, 2007; Herold, Joshi, Hollmann, & Güntürkün, 2012). NCL and PFC therefore represent a case of parallel evolution of mammals and birds that resulted in the convergent emergence of brain areas that subserve executive cognitive functions (Güntürkün, 2012).

In summary, the functional organization of the visual and “prefrontal” avian forebrain is highly similar to the mammalian pattern. Together with their input and output structures, they form the visuomotor system of the bird brain. Now we can combine insights from primate research with cognitive and neuroscientific inquiries to develop a mechanistic hypothesis on perceptual categorization in birds.

A mechanistic neuroscientific hypothesis on perceptual categorization in pigeons

Let’s combine the collective evidence discussed so far in a framework that links population-level category coding in the bird visual forebrain with the prediction error-driven neural learning dynamics in the NCL. Let’s first look for evidence of population-level category-specific coding in the bird visual system.

Koenen, Pusch, Bröker, Thiele, and Güntürkün (2016) tested the idea of a population-level category coding of visual associative neurons. Their pigeons were not required to discriminate between stimulus categories but just pecked on any upcoming stimulus to obtain food. The abstract stimuli differed in color, shape, spatial frequency, and amplitude. The spike trains of the simultaneously recorded visual associative neurons were used to post hoc identify the stimulus classes the animals saw. To this end, a representational dissimilarity matrix (RDM) was calculated from the spikes such that the neural output of the recorded neurons to each stimulus was correlated with their responses to every other stimulus. Thus, each stimulus pair could be depicted with a gray value code that corresponds to the degree of dissimilarity (calculated using Spearman’s rank correlation coefficient) of the neuronal response patterns. The gray values help to quickly visualize the degree of (dis)similarity of the responses of cellular populations to specific stimuli (see Fig. 3).

Cellular coding of variously colored objects and grating patterns in the pigeon’s associative visual areas. Analysis of the population of cells using a representational dissimilarity matrix (RDM) shows that neuronal responses to colored stimuli are discernible in the left upper corner due to highly correlated spike patterns of visual-associative units (dark gray: low dissimilarity; light gray: high dissimilarity). Similarly, responses to grating patterns can be discerned in the right lower corner. These are further subdivided by spatial frequency. The diagonal is black because the neural activity induced by each stimulus is compared to itself, resulting in the highest possible similarity. Note. From Koenen et al. (2016), with permission of corresponding author and publisher.
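To make the RDM computation concrete, the following minimal Python sketch builds such a matrix as 1 minus Spearman's rank correlation between population response vectors. The firing rates, the stimulus names, and the choice of mean rates as input are hypothetical illustrations and are not data or code from Koenen et al. (2016).

```python
from statistics import mean


def ranks(values):
    """Return 1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation; assumes neither vector is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's rho is the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def rdm(population_responses):
    """population_responses[s][n]: response of neuron n to stimulus s.
    Returns a square matrix of dissimilarities (1 - rho)."""
    n = len(population_responses)
    return [
        [1.0 - spearman(population_responses[i], population_responses[j])
         for j in range(n)]
        for i in range(n)
    ]


# Hypothetical mean firing rates (spikes/s) of five neurons to four stimuli.
responses = {
    "red": [12, 30, 5, 8, 20],
    "blue": [14, 28, 6, 9, 22],
    "grating_low": [25, 7, 18, 30, 4],
    "grating_high": [27, 6, 20, 26, 5],
}
names = list(responses)
matrix = rdm([responses[s] for s in names])

for name, row in zip(names, matrix):
    print(f"{name:>12}", " ".join(f"{d:5.2f}" for d in row))
```

In this toy example, the two colored stimuli and the two gratings each form a block of low dissimilarity, whereas color-grating pairs are highly dissimilar, which corresponds to the block structure described for Fig. 3.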

Koenen et al. (2016) revealed that basic stimulus categories such as pattern, color, amplitude, and spatial frequency were discernible from the neuronal population responses. It is important to remember that the animals were not conditioned to discriminate between stimuli but were merely pecking at each of them to obtain food. Accordingly, the actual behavior of the animals did not differ between stimulus categories, although their neural responses did. Thus, associative visual neurons of the avian forebrain reveal category-specific population coding, even without any categorization training. This is exactly what would be required to perceive coherence among different images without prior categorization training. Indeed, Herrnstein and de Villiers (1980) had proposed that differential reinforcement may not produce but merely disclose perceptual groupings that are bottom-up driven at the pictorial level and produce stimulus generalizations that are then picked up and further shaped by reinforcement contingencies. Consistent with this view, Astley and Wasserman (1992) reported that pigeons perceive similarity among members of basic-level categories, making it likely that the birds had perceived basic-level categories to be perceptually cohesive.

In a follow-up to Koenen et al. (2016), Azizi et al. (2018) confronted pigeons with a large set of photographs that depicted animate (humans; nonhumans) and inanimate items (natural objects; artificial objects). The subcategories were partly further subdivided (humans = bodies vs. faces; nonhumans = animal bodies vs. animal faces; natural objects = vegetables vs. fruit; artificial objects = tools vs. traffic signs). This stimulus set had been previously used to reveal category-specific population coding in monkey ITC (Kriegeskorte et al., 2008). As in Koenen et al. (2016), the pigeons in this new study merely had to peck on each stimulus to obtain food. Cellular responses from the entopallium and surrounding associative areas were this time analyzed with a linear discriminant analysis (LDA) to identify a linear combination of features with which categories can be separated based on their spike trains. It turned out that individual neurons did not significantly distinguish between categories, while the whole neuronal population did. More specifically, the recorded cells evinced a highly significant categorization along the animate/inanimate border. A more specific analysis revealed that this categorization was mainly driven by the category “human.” Further scrutiny of the data set finally demonstrated that the computation of this category did not emerge in the entopallium but in the associative visual areas. The accuracy with which photographs of human bodies or faces were classified increased linearly with the number of associative visual neurons analyzed and reached practically 100% with just 35 cells. As such, only a small number of neurons in the visual associative forebrain of pigeons is sufficient to recognize the presence or absence of a person in a photograph.
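The contrast between uninformative single neurons and an informative population can be illustrated with a small simulation. The Python sketch below is not the analysis of Azizi et al. (2018): it uses synthetic Gaussian "firing rates", assumes that every neuron carries the same weak category signal, and fits a two-class linear discriminant with a shared diagonal covariance, which is a simplification of full LDA. All parameter values (effect size, trial counts, seed) are hypothetical.

```python
import random


def simulate_trials(n_trials, n_neurons, shift, rng):
    """Synthetic responses: unit-variance Gaussian noise around `shift`."""
    return [[rng.gauss(shift, 1.0) for _ in range(n_neurons)]
            for _ in range(n_trials)]


def column_means(trials):
    """Mean response of each neuron across trials."""
    return [sum(col) / len(trials) for col in zip(*trials)]


def fit_discriminant(class_a, class_b):
    """Linear discriminant with pooled per-neuron variance
    (diagonal covariance, a simplification of full LDA)."""
    mean_a, mean_b = column_means(class_a), column_means(class_b)
    dof = len(class_a) + len(class_b) - 2
    weights = []
    for k, (ma, mb) in enumerate(zip(mean_a, mean_b)):
        ss = sum((t[k] - ma) ** 2 for t in class_a)
        ss += sum((t[k] - mb) ** 2 for t in class_b)
        weights.append((ma - mb) / (ss / dof))
    # Place the decision boundary midway between the two class means.
    bias = -sum(w * (ma + mb) / 2
                for w, ma, mb in zip(weights, mean_a, mean_b))
    return weights, bias


def accuracy(weights, bias, class_a, class_b):
    """Fraction of held-out trials assigned to the correct class."""
    def score(trial):
        return sum(w * x for w, x in zip(weights, trial)) + bias
    correct = sum(score(t) > 0 for t in class_a)
    correct += sum(score(t) <= 0 for t in class_b)
    return correct / (len(class_a) + len(class_b))


def decode(n_neurons, seed=0, n_train=200, n_test=200, effect=0.6):
    """Train on one set of trials and test on an independent set."""
    rng = random.Random(seed)
    train_a = simulate_trials(n_train, n_neurons, effect, rng)  # e.g. "human"
    train_b = simulate_trials(n_train, n_neurons, 0.0, rng)     # e.g. "other"
    test_a = simulate_trials(n_test, n_neurons, effect, rng)
    test_b = simulate_trials(n_test, n_neurons, 0.0, rng)
    weights, bias = fit_discriminant(train_a, train_b)
    return accuracy(weights, bias, test_a, test_b)


results = {n: decode(n) for n in (1, 5, 15, 35)}
for n, acc in results.items():
    print(f"{n:2d} neurons: {acc:.2f} correct")
```

Under these assumptions, a single neuron decodes only slightly above chance, whereas pooling a few dozen neurons yields high accuracy. The simulated accuracy curve saturates rather than rising linearly, so the sketch conveys the pooling principle and not the shape of the empirical curve.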

Taken together, even at the level of visual association areas of pigeons, visual categories like “human” can be revealed at the level of small neuronal populations. Since neither categorization training nor differential rewarding paradigms were involved, it is likely that these category-specific coding properties are driven by mere feed-forward stimulus input statistics. These results are consistent with the findings of theoretical analyses (Gale & Laws, 2006) as well as imaging and cell recording data in monkey and human visual associative cortex (Kriegeskorte et al., 2008; Stansbury, Naselaris, & Gallant, 2013). Behavioral studies in monkeys and pigeons reveal similar findings. Monkeys that perform a same–different task are more likely to confuse different faces or different fruits as “same,” based on simple overlapping perceptual features (Sands et al., 1982). Very similar results occur when pigeons are tested with leaf shapes of different tree species (Cerella, 1979).

As outlined above, population-level category coding in the primate ITC is just one pillar of primate categorization. An additional pillar is the PFC, which learns to select response patterns based on a dopamine-mediated reduction of the prediction error. As a result of this learning process, the PFC starts to select the relevant visual category dimension from the ITC and to choose the appropriate action dimension from premotor cortex and striatum. This is reminiscent of Sutherland and Mackintosh (1971) who proposed that discrimination learning “involves two processes: learning to which aspects of the stimulus to attend and learning what responses to attach to the relevant aspects of the stimulus situation” (p. 21).

There is good evidence that the neural dynamics of the avian NCL are characterized by dopamine-mediated reductions of prediction errors, just like in primate PFC (Puig, Rose, Schmidt, & Freund, 2014). The organization of the avian dopaminergic system and its terminal areas in the NCL is highly comparable to that of the mammalian PFC (Durstewitz, Kröner, & Güntürkün, 1999; Durstewitz, Kröner, Hemmings, & Güntürkün, 1998; Herold et al., 2011; Waldmann & Güntürkün, 1993; Wynne & Güntürkün, 1995). As in mammals, dopamine release and local dopamine receptor adjustments in NCL change during the course of learning of associative tasks (Herold et al., 2012; Karakuyu et al., 2007). As predicted by reward prediction error accounts, blocking of forebrain D1 receptors in pigeons abolishes different learning speeds relative to different reward magnitudes (Diekamp, Kalt, Ruhm, Koch, & Güntürkün, 2000; Rose, Schiffer, & Güntürkün, 2013; Rose, Schmidt, Grabemann, & Güntürkün, 2009). In addition, and again in line with the mammalian dopamine literature, blocking D1 receptors in the associative pigeon forebrain disrupts local visual attention processes (Rose, Schiffer, Dittrich, & Güntürkün, 2010). A recent study demonstrated that avian learning unfolds in the same way as in monkeys, by increases and decreases of phasic dopamine release depending on better-than-expected or worse-than-predicted outcomes (Gadagkar et al., 2016).

Taken together, our hypothesis assumes that population-level category coding in visual associative areas and a dopamine-mediated reward-prediction error reduction in NCL constitute the neural core of visual category learning in pigeons. Our explanation does not necessarily require representations at exemplar or prototype level but assumes that the animals simply optimize their reward chances by exploiting the output of their massively parallel visual system that processes low-level visual features. This does not exclude the possibility that pigeons can learn rules or acquire abstract concepts when low-level input is insufficient for appropriate decisions. However, we are convinced that simple parallel analyses are able to explain even quite complex examples of avian categorization behavior. For example, Grainger, Dufau, Montant, Ziegler, and Fagot (2012) and Scarf, Boy, et al. (2016) had shown that both baboons and pigeons learn orthography, seemingly guided by letter-string-based algorithms. However, Linke, Bröker, Ramscar, and Baayen (2017) recently demonstrated that a deep learning network that received mere gradient orientation features as input units and operated according to Rescorla–Wagner learning rules could perfectly predict baboon lexical decision behavior. Thus, a combination of population-level feature analysis and dopamine-mediated reward-prediction error reduction can represent quite a powerful mechanistic explanans for at least a good part of animal categorization behavior. In fact, our hypothesis operates along the same steps of reasoning as the previously discussed common elements model of Soto and Wasserman (2010). What we add is a detailed neurobiological view of the processes that Soto and Wasserman had outlined in computational terms. By this, such an overlapping theoretical view of visual categorization in pigeons now becomes testable from neuron to behavior.

In the following, we both visualize and outline the proposed processes that might unfold during categorization (see Fig. 4): When a pigeon starts to perceive and to respond to various visual patterns, possibly different neural processes emerge within the visual associative forebrain areas and the NCL. In the visual associative structures of the avian forebrain, several million neurons will respond to the stimuli with individually slightly distinct, but largely overlapping, spike patterns. The coding property of each cell will mostly be too broad to enable the discrimination of a certain category. However, at the population level, different kinds of categorical distinctions will be discernible in a feed-forward manner.

Proposed neuroscientific hypothesis on perceptual categorization learning in pigeons. A pigeon perceives stimuli showing humans or cars and then processes them in visual pathways. Stimuli seen in the pigeon’s frontal visual field are primarily processed in the entopallium and then projected to the visual associative areas. Population coding properties of visual associative neurons can result in categorical distinctions between objects (humans; cars) or their canonical parts (faces; tires). Neurons of the visual associative areas project to the “prefrontal” NCL in a feed-forward manner and from there receive feedback projections. If the animal is performing a human/no-human categorization task and has pecked on a photograph depicting a human or a car, dopaminergic neurons (shown in brown) will adjust their firing frequency relative to the subsequent occurrence of reward or nonreward. Thereby, local NCL networks that receive input from a population of human-coding visual neurons that initiated a key peck will be synaptically strengthened. Back projections from the NCL to visual structures are able to further sharpen category boundaries, depending on experimental conditions. NCL neurons take part in a decision process with which downstream premotor areas are activated to execute the peck on a key that shows a human or a car. (Color figure online)

While all of this unfolds, the pigeon will sometimes be rewarded after pecking on a certain stimulus, and sometimes not. Each reward will trigger a dopamine release in different projection areas of the dopaminergic system, including the NCL. Trial by trial, this dopamine-mediated prediction error adjustment will strengthen those synaptic connections that were active shortly before dopamine release, while synapses whose activity was not followed by reward will be downregulated. NCL neurons constitute the interface between ascending sensory and descending motor pathways. As a result, specific sensory-prefrontal-motor circuits will be strengthened. Those circuits that code for a rewarded combination of category boundaries are then associated with the actions that produce the rewarded outcome.
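The trial-by-trial adjustment described above can be sketched with a Rescorla–Wagner-style delta rule, a standard formalization of prediction error learning. The Python example below is only an illustration under stated assumptions and is not an implementation of our hypothesis or of any published model: the three binary input features, the reward values, and the learning rate are hypothetical, and a single weight per feature stands in for the strength of a sensory-prefrontal-motor connection.

```python
def train(trials, n_features, learning_rate=0.2):
    """Delta-rule learning: each weight changes in proportion to the
    reward prediction error and to the activity of its input feature."""
    weights = [0.0] * n_features
    errors = []
    for features, reward in trials:
        prediction = sum(w * x for w, x in zip(weights, features))
        delta = reward - prediction  # reward prediction error
        for i, x in enumerate(features):
            # Only inputs that were active (x > 0) on this trial are adjusted.
            weights[i] += learning_rate * delta * x
        errors.append(delta)
    return weights, errors


# Hypothetical population-level features: [human-like, car-like, background].
# The background feature is present in every picture (a common element).
human_trial = ([1, 0, 1], 1.0)  # peck at a human picture: rewarded
car_trial = ([0, 1, 1], 0.0)    # peck at a car picture: not rewarded

trials = [human_trial, car_trial] * 100
weights, errors = train(trials, n_features=3)

print("weights [human, car, background]:", [round(w, 3) for w in weights])
print("first prediction error:", errors[0])
print("last prediction error:", round(errors[-1], 6))
```

With these settings, the weight of the human-like feature grows, the weight of the car-like feature becomes negative, and the prediction error shrinks toward zero as the reward becomes predictable. The shared background feature acquires only a small weight because it does not discriminate between rewarded and nonrewarded pictures.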

The visual associative areas of birds are innervated by only a small number of dopaminergic fibers and have a modest density of D1 receptors (Durstewitz et al., 1999; Wynne & Güntürkün, 1995). Thus, similar reward-dependent synaptic adjustments might also go on, albeit at a lower level, in the avian associative visual structures. In addition, it is conceivable that top-down input from the NCL sharpens category borders of visual neurons according to reward contingencies. These two synergistic mechanisms could explain why visual neurons in pigeons quickly retune their activity patterns according to the reward properties of learned stimuli (Colombo et al., 2001; Johnston et al., 2017a, 2017b; Scarf, Stuart, et al., 2016; Verhaal et al., 2012).

In summary, our hypothesis combines the rich experimental and theoretical tradition of categorization learning in pigeons with neurobiological insights on visual processing and dopamine-mediated plasticity in primates. Our approach associates these until now mostly unconnected areas of research and combines them with a hypothesis that can be tested at different levels of analysis. We hope that our neuroscientific bird’s eye view on category learning will provide new angles of inquiry, thereby enabling fresh insights on this fascinating field of science.

References

Astley, S. L., & Wasserman, E. A. (1992). Categorical discrimination and generalization in pigeons: All negative stimuli are not created equal. Journal of Experimental Psychology: Animal Behavior Processes, 18, 193–207.

Aust, U., & Huber, L. (2001). The role of item- and category-specific information in the discrimination of people versus nonpeople images by pigeons. Animal Learning & Behavior, 29, 107–119.

Aust, U., & Huber, L. (2002). Target-defining features in a “people-present/people-absent” discrimination task by pigeons. Animal Learning & Behavior, 30, 165–176.

Azizi, A. H., Pusch, R., Koenen, C., Klatt, S., Kellermann, J., Güntürkün, O., & Cheng, S. (2018). Revealing category representation in visual forebrain areas of pigeons using a multi-variate approach. Manuscript submitted for publication.

Baker, C. I., Behrmann, M., & Olson, C. R. (2002). Impact of learning on representation of parts and wholes in monkey inferotemporal cortex. Nature Neuroscience, 5, 1210–1216.

Barsalou, L. W. (1983). Ad hoc categories. Memory & Cognition, 11, 211–227.

Bayer, H. M., & Glimcher, P. W. (2005). Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron, 47, 129–141.

Benard, B., Stach, S., & Giurfa, M. (2006). Categorization of visual stimuli in the honeybee Apis mellifera. Animal Cognition, 9, 257–270.

Castro, L., & Wasserman, E. A. (2014). Pigeons’ tracking of relevant attributes in categorization learning. Journal of Experimental Psychology: Animal Learning and Cognition, 40, 195–211.

Castro, L., & Wasserman, E. A. (2017). Feature predictiveness and selective attention in pigeons’ categorization learning. Journal of Experimental Psychology: Animal Learning and Cognition, 43, 231–242.

Cerella, J. (1979). Visual classes and natural categories in the pigeon. Journal of Experimental Psychology: Human Perception and Performance, 5, 68–77.

Cohen, H., & Lefebvre, C. (2005). Handbook of categorization in cognitive science. Amsterdam, Netherlands: Elsevier.

Colombo, M., Frost, N., & Steedman, W. (2001). Responses of ectostriatal neurons during delayed matching-to-sample behavior in pigeons (Columba livia). Brain Research, 917, 55–66.

Cook, R. G., Wright, A. A., & Drachman, E. E. (2013). Categorization of birds, mammals, and chimeras by pigeons. Behavioural Processes, 93, 98–110.

Curran, T., Tanaka, J. W., & Weiskopf, D. M. (2002). An electrophysiological comparison of visual categorization and recognition memory. Cognitive, Affective, & Behavioral Neuroscience, 2, 1–18.

DiCarlo, J. J., & Maunsell, J. H. R. (2000). Form representation in monkey inferotemporal cortex is virtually unaltered by free viewing. Nature Neuroscience, 3, 814–821.

DiCarlo, J. J., Zoccolan, D., & Rust, N. C. (2012). How does the brain solve visual object recognition? Neuron, 73, 415–434.

Diekamp, B., Kalt, T., Ruhm, A., Koch, M., & Güntürkün, O. (2000). Impairment in a discrimination reversal task after D1-receptor blockade in the pigeon ‘prefrontal cortex’. Behavioral Neuroscience, 114, 1145–1155.

Dittrich, L., Rose, J., Buschmann, J. U. F., Bourdonnais, M., & Güntürkün, O. (2010). Peck tracking: A method for localizing critical features within complex pictures for pigeons. Animal Cognition, 13, 133–143.

Ditz, H. M., & Nieder, A. (2015). Neurons selective to the number of visual items in the corvid songbird endbrain. Proceedings of the National Academy of Sciences of the United States of America, 112, 7827–7832.

Durstewitz, D., Kröner, S., & Güntürkün, O. (1999). The dopaminergic innervation of the avian telencephalon. Progress in Neurobiology, 59, 161–195.

Durstewitz, D., Kröner, S., Hemmings, H. C., Jr., & Güntürkün, O. (1998). The dopaminergic innervation of the pigeon telencephalon: Distribution of DARPP-32 and co-occurrence with glutamate decarboxylase and tyrosine hydroxylase. Neuroscience, 83, 763–779.

Freedman, D. J., & Miller, E. K. (2008). Neural mechanisms of visual categorization: Insights from neurophysiology. Neuroscience and Biobehavioral Reviews, 32, 311–329.

Freedman, D. J., Riesenhuber, M., Poggio, T., & Miller, E. K. (2001). Categorical representation of visual stimuli in the primate prefrontal cortex. Science, 291, 312–316.

Gadagkar, V., Puzerey, P. A., Chen, R., Baird-Daniel, E., Farhang, A. R., & Goldberg, J. H. (2016). Dopamine neurons encode performance error in singing birds. Science, 354, 1278–1282.

Gale, T. M., & Laws, K. R. (2006). Category-specificity can emerge from bottom-up visual characteristics: Evidence from a modular neural network. Brain and Cognition, 61, 269–279.

Gibson, B., Wasserman, E. A., Gosselin, F., & Schyns, P. G. (2005). Applying bubbles to localize features that control pigeons’ visual discrimination behavior. Journal of Experimental Psychology: Animal Behavior Processes, 31, 376–382.

Gluck, M. A., & Bower, G. H. (1988). From conditioning to category learning: An adaptive network model. Journal of Experimental Psychology: General, 117, 227–247.

Goldstone, R. L., Kersten, A., & Carvalho, P. F. (2017). Categorization and concepts. In J. Wixted (Ed.), Stevens’ handbook of experimental psychology and cognitive neuroscience, Volume 3: Language & thought (4th ed., pp. 275–317). Hoboken, NJ: Wiley.

Grainger, J., Dufau, S., Montant, M., Ziegler, J. C., & Fagot, J. (2012). Orthographic processing in baboons (Papio papio). Science, 336, 245–248.

Greene, S. L. (1983). Feature memorization in pigeon concept formation. In M. L. Commons, R. J. Herrnstein, & A. R. Wagner (Eds.), Quantitative analyses of behavior: Discrimination processes (Vol. 4, pp. 209–229). Cambridge, MA: Ballinger.

Grill-Spector, K., & Kanwisher, N. (2005). Visual recognition: As soon as you know it is there, you know what it is. Psychological Science, 16, 152–160.

Güntürkün, O. (1997). Cognitive impairments after lesions of the neostriatum caudolaterale and its thalamic afferent: Functional similarities to the mammalian prefrontal system? Journal of Brain Research, 38, 133–143.

Güntürkün, O. (2005). The avian ‘prefrontal cortex’ and cognition. Current Opinion in Neurobiology, 15, 686–693.

Güntürkün, O. (2012). Evolution of cognitive neural structures. Psychological Research, 76, 212–219.

Güntürkün, O., & Bugnyar, T. (2016). Cognition without cortex. Trends in Cognitive Sciences, 20, 291–303.

Güntürkün, O., & Hahmann, U. (1998). Functional subdivisions of the ascending visual pathways in the pigeon. Behavioural Brain Research, 98, 193–201.

Güntürkün, O., Verhoye, M., De Groof, G., & Van der Linden, A. (2013). A 3-dimensional digital atlas of the ascending sensory and the descending motor systems in the pigeon brain. Brain Structure & Function, 218, 269–281.

Hegdé, J., Bart, E., & Daniel, K. (2008). Fragment-based learning of visual object categories. Current Biology, 18, 597–601.

Herold, C., Joshi, I., Hollmann, M., & Güntürkün, O. (2012). Prolonged cognitive training increases D5 receptor expression in the avian prefrontal cortex. PLoS ONE, 7, e36484.

Herold, C., Palomero-Gallagher, N., Hellmann, B., Kröner, S., Theiss, C., Güntürkün, O., & Zilles, K. (2011). The receptorarchitecture of the pigeons’ nidopallium caudolaterale—An avian analogue to the prefrontal cortex. Brain Structure & Function, 216, 239–254.

Herrnstein, R. J. (1990). Levels of stimulus control: A functional approach. Cognition, 37, 133–166.

Herrnstein, R. J., & De Villiers, P. A. (1980). Fish as a natural category for people and pigeons. In G. H. Bower (Ed.), The psychology of learning and motivation (Vol. 14, pp. 59–95). New York, NY: Academic Press.

Herrnstein, R. J., & Loveland, D. H. (1964). Complex visual concept in the pigeon. Science, 146, 549–551.

Huber, L., Troje, N. F., Loidolt, M., Aust, U., & Grass, D. (2000). Natural categorization through multiple feature learning in pigeons. The Quarterly Journal of Experimental Psychology Section B, 53, 341–357.

Hung, C. P., Kreiman, G., Poggio, T., & DiCarlo, J. J. (2005). Fast readout of object identity from macaque inferior temporal cortex. Science, 310, 863–866.

Jitsumori, M., & Yoshihara, M. (1997). Categorical discrimination of human facial expressions by pigeons: A test of the linear feature model. The Quarterly Journal of Experimental Psychology Section B, 50, 253–268.

Johnston, M., Anderson, C., & Colombo, M. (2017a). Neural correlates of sample-coding and reward-coding in the delay activity of neurons in the entopallium and nidopallium caudolaterale of pigeons (Columba livia). Behavioural Brain Research, 317, 382–392.

Johnston, M., Anderson, C., & Colombo, M. (2017b). Pigeon NCL and NFL neuronal activity represents neural correlates of the sample. Behavioral Neuroscience, 131, 213–219.

Kalenscher, T., Ohmann, T., & Güntürkün, O. (2006). The neuroscience of impulsive and self-controlled decisions. International Journal of Psychophysiology, 62, 203–211.

Kalenscher, T., Windmann, S., Rose, J., Diekamp, B., Güntürkün, O., & Colombo, M. (2005). Single units in the pigeon brain integrate reward amount and time-to-reward in an impulsive choice task. Current Biology, 15, 594–602.

Karakuyu, D., Herold, C., Güntürkün, O., & Diekamp, B. (2007). Differential increase of extracellular dopamine and serotonin in the “prefrontal cortex” and striatum of pigeons during working memory. European Journal of Neuroscience, 26, 2293–2302.

Kauffmann, L., Bourgin, J., Guyader, N., & Peyrin, C. (2015). The neural bases of the semantic interference of spatial frequency-based information in scenes. Journal of Cognitive Neuroscience, 27, 2394–2405.

Keller, F. S., & Schoenfeld, W. N. (1950). Principles of psychology. New York, NY: Appleton-Century-Crofts.

Kendrick, D. F., Wright, A. A., & Cook, R. G. (1990). On the role of memory in concept-learning by pigeons. The Psychological Record, 40, 359–371.

Kirsch, J. A., Hausmann, M., Vlachos, J., Rose, J., Yim, M. Y., Aertsen, A., & Güntürkün, O. (2009). Neuronal encoding of meaning: Establishing category-selective response patterns in the avian “prefrontal cortex”. Behavioural Brain Research, 198, 214–223.

Koenen, C., Millar, J., & Colombo, M. (2013). How bad do you want it? Reward modulation in the avian nidopallium caudolaterale. Behavioral Neuroscience, 127, 544–554.

Koenen, C., Pusch, R., Bröker, F., Thiele, S., & Güntürkün, O. (2016). Categories in the pigeon brain: A reverse engineering approach. Journal of the Experimental Analysis of Behavior, 105, 111–122.

Kriegeskorte, N., Mur, M., Ruff, D. A., Kiani, R., Bodurka, J., Esteky, H., … Bandettini, P. A. (2008). Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron, 60, 1126–1141.

Kröner, S., & Güntürkün, O. (1999). Afferent and efferent connections of the caudolateral neostriatum in the pigeon Columba livia: A retro- and anterograde pathway tracing study. Journal of Comparative Neurology, 407, 228–260.

Kromrey, S., Maestri, M., Hauffen, K., Bart, E., & Hegdé, J. (2010). Fragment-based learning of visual object categories in non-human primates. PLoS ONE, 5, e15444.

Kruschke, J. K. (1992). ALCOVE: An exemplar-based connectionist model of category learning. Psychological Review, 99, 22–44.

Lazareva, O. F., & Wasserman, E. A. (2017). Categories and concepts in animals. In J. Stein (Ed.), Reference module in neuroscience and biobehavioral psychology (pp. 1–29). New York, NY: Elsevier.

Lengersdorf, D., Güntürkün, O., Pusch, R., & Stüttgen, M. C. (2014). Neurons in the pigeon nidopallium caudolaterale signal the selection and execution of perceptual decisions. European Journal of Neuroscience, 40, 3316–3327.

Levenson, R. M., Krupinski, E. A., Navarro, V. M., & Wasserman, E. A. (2015). Pigeons (Columba livia) as trainable observers of pathology and radiology breast cancer images. PLoS ONE, 10, e0141357.

Li, N., Cox, D. D., Zoccolan, D., & DiCarlo, J. J. (2009). What response properties do individual neurons need to underlie position and clutter “invariant” object recognition? Journal of Neurophysiology, 102, 360–376.

Linke, M., Bröker, F., Ramscar, M., & Baayen, H. (2017). Are baboons learning “orthographic” representations? Probably not. PLoS ONE, 12, e0183876.

Liu, X., Wan, H., Li, S., Shang, Z., & Shi, L. (2017). The role of nidopallium caudolaterale in the goal-directed behavior of pigeons. Behavioural Brain Research, 326, 112–120.

Lubow, R. E. (1974). High-order concept formation in the pigeon. Journal of the Experimental Analysis of Behavior, 21, 475–483.

Lundqvist, M., Rose, J., Herman, P., Brincat, S. L., Buschman, T. L., & Miller, E. K. (2016). Gamma and beta bursts underlie working memory. Neuron, 90, 152–164.

Lupyan, G. (2008). From chair to “chair”: A representational shift account of object labeling effects on memory. Journal of Experimental Psychology: General, 137, 348–369.

Matsukawa, A., Inoue, S., & Jitsumori, M. (2004). Pigeon’s recognition of cartoons: Effects of fragmentation, scrambling, and deletion of elements. Behavioural Processes, 65, 25–34.

Minamimoto, T., Saunders, R. C., & Richmond, B. J. (2010). Monkeys quickly learn and generalize visual categories without lateral prefrontal cortex. Neuron, 66, 501–507.

Moll, F. W., & Nieder, A. (2015). Cross-modal associative mnemonic signals in crow endbrain neurons. Current Biology, 25, 2196–2201.

Moll, F. W., & Nieder, A. (2017). Modality-invariant audio-visual association coding in crow endbrain neurons. Neurobiology of Learning and Memory, 137, 65–76.

Montague, P. R., Hyman, S. E., & Cohen, J. D. (2004). Computational roles for dopamine in behavioural control. Nature, 431, 760–767.

Mouritsen, H., Heyers, D., & Güntürkün, O. (2016). The neural basis of long-distance navigation in birds. Annual Review of Physiology, 78, 133–154.

Nakamura, T., Croft, D., & Westbrook, R. F. (2003). Domestic pigeons (Columba livia) discriminate between photographs of individual pigeons. Animal Learning & Behavior, 31, 307–317.

Nieder, A. (2017). Inside the corvid brain—Probing the physiology of cognition in crows. Current Opinion in Behavioral Sciences, 16, 8–14.

Nomoto, K., Schultz, W., Watanabe, T., & Sakagami, M. (2010). Temporally extended dopamine responses to perceptually demanding reward-predictive stimuli. Journal of Neuroscience, 30, 10692–10702.

Pannunzi, M., Gigante, G., Mattia, M., Deco, G., Fusi, S., & Del Giudice, P. (2012). Learning selective top-down control enhances performance in a visual categorization task. Journal of Neurophysiology, 108, 3124–3137.

Puig, M. V., Rose, J., Schmidt, R., & Freund, N. (2014). Dopamine modulation of learning and memory in the prefrontal cortex: Insights from studies in primates, rodents, and birds. Frontiers in Neural Circuits, 8, 93.

Qadri, M. A. J., & Cook, R. G. (2017). Pigeons and humans use action and pose information to categorize complex human behaviors. Vision Research, 131, 16–25.

Range, F., Viranyi, Z., & Huber, L. (2007). Selective imitation in domestic dogs. Current Biology, 17, 868–872.

Remy, M., & Güntürkün, O. (1991). Retinal afferents of the tectum opticum and the nucleus opticus principalis thalami in the pigeon. Journal of Comparative Neurology, 305, 57–70.

Rescorla, R. A., & Wagner, A. R. (1972). A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In A. H. Black & W. F. Prokasy (Eds.), Classical conditioning II: Current research and theory (pp. 64–99). New York, NY: Appleton-Century-Crofts.

Rosch, E., Mervis, C. B., Gray, W. D., Johnson, D. M., & Boyes-Braem, P. (1976). Basic objects in natural categories. Cognitive Psychology, 8, 382–439.

Rose, J., & Colombo, M. (2005). Neural correlates of executive control in the avian brain. PLoS Biology, 3, e190.

Rose, J., Schiffer, A.-M., Dittrich, L., & Güntürkün, O. (2010). The role of dopamine in maintenance and distractability of attention in the ‘prefrontal cortex’ of pigeons. Neuroscience, 167, 232–237.

Rose, J., Schiffer, A.-M., & Güntürkün, O. (2013). Striatal dopamine D1 receptors are involved in the dissociation of learning based on reward-magnitude. Neuroscience, 230, 132–138.

Rose, J., Schmidt, J., Grabemann, M., & Güntürkün, O. (2009). Theory meets pigeons: The influence of reward magnitude on discrimination learning. Behavioural Brain Research, 198, 125–129.

Roy, J. E., Riesenhuber, M., Poggio, T., & Miller, E. K. (2010). Prefrontal cortex activity during flexible categorization. Journal of Neuroscience, 30, 8519–8528.

Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning internal representations by back-propagating errors. In D. E. Rumelhart & J. L. McClelland (Eds.), Parallel distributed processing (Vol. 1, pp. 1–34). Cambridge, MA: MIT Press.

Sands, S. F., Lincoln, C. E., & Wright, A. A. (1982). Pictorial similarity judgments and the organization of visual memory in the rhesus monkey. Journal of Experimental Psychology: General, 111, 369–389.

Scarf, D., Boy, K., Uber Reinert, A., Devine, J., Güntürkün, O., & Colombo, M. (2016). Orthographic processing in pigeons (Columba livia). Proceedings of the National Academy of Sciences of the United States of America, 113, 11272–11276.

Scarf, D., Miles, K., Sloan, A., Goulter, N., Hegan, M., Seid-Fatemi, A., … Colombo, M. (2011). Brain cells in the avian ‘prefrontal cortex’ code for features of slot-machine-like gambling. PLoS ONE, 6, e14589.

Scarf, D., Stuart, M., Johnston, M., & Colombo, M. (2016). Visual response properties of neurons in four areas of the avian pallium. Journal of Comparative Physiology A, 202, 235–245.

Schultz, W. (1998). Predictive reward signal of dopamine neurons. Journal of Neurophysiology, 80, 1–27.

Schultz, W. (2016). Dopamine reward prediction error signaling: A two component response. Nature Reviews Neuroscience, 17, 183–195.

Seger, C. A., & Miller, E. K. (2010). Category learning in the brain. Annual Review of Neuroscience, 33, 203–219.

Shanahan, M., Bingman, V., Shimizu, T., Wild, M., & Güntürkün, O. (2013). The large-scale network organization of the avian forebrain: A connectivity matrix and theoretical analysis. Frontiers in Computational Neuroscience, 7, 89.

Sidman, M. (1994). Equivalence relations and behavior: A research story. Boston, MA: Authors Cooperative.

Sigala, N., & Logothetis, N. (2002). Visual categorization shapes feature selectivity in the primate temporal cortex. Nature, 415, 318–320.

Soto, F. A., & Wasserman, E. A. (2010). Error-driven learning in visual categorization and object recognition: A common-elements model. Psychological Review, 117, 349–381.

Soto, F. A., & Wasserman, E. A. (2011). Asymmetrical interactions in the perception of face identity and emotional expression are not unique to the primate visual system. Journal of Vision, 11(3). https://doi.org/10.1167/11.3.24

Stacho, M., Ströckens, F., Xiao, Q., & Güntürkün, O. (2016). Functional organization of telencephalic visual association fields in pigeons. Behavioural Brain Research, 303, 93–102.

Stansbury, D. E., Naselaris, T., & Gallant, J. L. (2013). Natural scene statistics account for the representation of scene categories in human visual cortex. Neuron, 79, 1025–1034.

Starosta, S., Güntürkün, O., & Stüttgen, M. (2013). Stimulus-response-outcome coding in the pigeon nidopallium caudolaterale. PLoS ONE, 8, e57407.

Sutherland, N. S., & Mackintosh, N. J. (1971). Mechanisms of animal discrimination learning. London, UK: Academic Press.

Thorpe, S., Fize, D., & Marlot, C. (1996). Speed of processing in the human visual system. Nature, 381, 520–522.

Troje, N. F., Huber, L., Loidolt, M., Aust, U., & Fieder, M. (1999). Categorical learning in pigeons: The role of texture and shape in complex static stimuli. Vision Research, 39, 353–366.

Veit, L., & Nieder, A. (2013). Abstract rule neurons in the endbrain support intelligent behaviour in corvid songbirds. Nature Communications, 4(2878). https://doi.org/10.1038/ncomms3878

Veit, L., Pidpruzhnykova, G., & Nieder, A. (2015). Associative learning rapidly establishes neuronal representations of upcoming behavioral choices in crows. Proceedings of the National Academy of Sciences of the United States of America, 112, 15208–15213.

Verhaal, J., Kirsch, J. A., Vlachos, I., Manns, M., & Güntürkün, O. (2012). Lateralized reward-associated visual discrimination in the avian entopallium. European Journal of Neuroscience, 35, 1337–1343.

Vogels, R. (1999). Categorization of complex visual images by rhesus monkeys. Part 2: Single cell study. European Journal of Neuroscience, 11, 1239–1255.

Waelti, P., Dickinson, A., & Schultz, W. (2001). Dopamine responses comply with basic assumptions of formal learning theory. Nature, 412, 38–43.

Waldmann, C. M., & Güntürkün, O. (1993). The dopaminergic innervation of the pigeon caudolateral forebrain: Immunocytochemical evidence for a “prefrontal cortex” in birds? Brain Research, 600, 225–234.

Wasserman, E. A., Brooks, D. I., & McMurray, B. (2015). Pigeons acquire multiple categories in parallel via associative learning: A parallel to human word learning? Cognition, 136, 99–122.

Wasserman, E. A., Kiedinger, R. E., & Bhatt, R. S. (1988). Conceptual behavior in pigeons: Categories, subcategories, and pseudocategories. Journal of Experimental Psychology: Animal Behavior Processes, 14, 235–246.

Watanabe, S. (2011). Discrimination of painting style and quality: Pigeons use different strategies for different tasks. Animal Cognition, 14, 797–808.

Watanabe, S., Sakamoto, J., & Wakita, M. (1995). Pigeons’ discrimination of paintings by Monet and Picasso. Journal of the Experimental Analysis of Behavior, 63, 165–174.

Wynne, B., & Güntürkün, O. (1995). The dopaminergic innervation of the forebrain of the pigeon (Columba livia): A study with antibodies against tyrosine hydroxylase and dopamine. Journal of Comparative Neurology, 357, 446–464.

Yamazaki, Y., Aust, U., Huber, L., Hausmann, M., & Güntürkün, O. (2007). Lateralized cognition: Asymmetrical and complementary strategies of pigeons during discrimination of the “human concept”. Cognition, 104, 315–344.

Acknowledgements

Supported by the Deutsche Forschungsgemeinschaft through SFB 874. We are grateful to Olga F. Lazareva for critically reading a previous version of this manuscript.

Cite this article

Güntürkün, O., Koenen, C., Iovine, F. et al. The neuroscience of perceptual categorization in pigeons: A mechanistic hypothesis. Learn Behav 46, 229–241 (2018). https://doi.org/10.3758/s13420-018-0321-6

DOI: https://doi.org/10.3758/s13420-018-0321-6