Abstract

In a novel computer mouse tracking paradigm, participants read a spatial phrase such as “The blue item to the left of the red one” and then see a scene composed of 12 visual items. The task is to move the mouse cursor to the target item (here, blue), which requires perceptually grounding the spatial phrase. This entails visually identifying the reference item (here, red) and other relevant items through attentional selection. Response trajectories are attracted toward distractors that share the target color but match the spatial relation less well. Trajectories are also attracted toward items that share the reference color. A competing pair of items that match the specified colors but are in the inverse spatial relation increases attraction over-additively compared to individual items. Trajectories are also influenced by the spatial term itself. While the distractor effect resembles deviation toward potential targets in previous studies, the reference effect suggests that the relevance of the reference item for the relational task, not its role as a potential target, was critical. This account is supported by the strengthened effect of a competing pair. We conclude, therefore, that the attraction effects in the mouse trajectories reflect the neural processes that operate on sensorimotor representations to solve the relational task. The paradigm thus provides an experimental window through motor behavior into higher cognitive function and the evolution of activation in modal substrates, a longstanding topic in the area of embodied cognition.


Introduction

Most everyday tasks are a seamless combination of perception, cognition, and action. To pick a snack at a self-service bakery, I have to recognize the different varieties of pastry on the counter, decide which one I like best, and reach for it. Classical theories of the human mind hold that these different processes occur in sequence: perceiving, deciding, acting (Newell, 1990). Intuitively, however, it feels that these things may overlap in time. When I am rushed, I might start reaching before I know which pastry exactly I will pick, deciding as I go, and in effect my hand may follow a less-than-straight path as donuts are weighed against nearby croissants (Truong et al., 2013).

In line with this intuition, psychological researchers increasingly agree that the neural processes underlying perception, cognition, and action are closely interlinked (e.g., Pezzulo & Cisek 2016) and evolve in a graded and temporally continuous manner, rather than being strictly separable into sequential stages. An important source of support for this view comes from behavioral experiments in which motoric responses are influenced in a graded way by properties of perceptual or cognitive components of the task (Spivey, 2007).

Trajectory attraction to non-target objects in visual space

Motor plans evolve continuously over time. Ghez et al. (1997) provided evidence for this view in their timed-movement-initiation paradigm, in which the time between a cue and movement initiation is systematically varied. For short stimulus-response intervals, movements fell close to a “default” direction that reflected the average target location. Response distributions gradually migrated toward the cued target with increasing stimulus-response interval. Subsequent research has substantiated this evidence both at the behavioral and the neural level. For instance, when the final target in an array of potential reaching goals is cued only upon movement onset, pointing trajectories curve toward the other items. The strength of attraction depends on the items’ spatial distribution, with multiple non-targets on one side of the display exerting stronger attraction than single ones (Gallivan and Chapman, 2014; Chapman et al., 2010). The visual saliency of potential targets strongly modulates trajectory bias, with highly salient potential targets attracting trajectories even when the opposite direction of curvature is predicted from the spatial distribution of potential targets (Wood et al., 2011). This suggests that there is a link between attentional deployment and motor planning. Song & Nakayama (2006; see also, 2007) used movement trajectories to capture attentional deployment when an odd-colored target had to be found among uniformly colored distractors. Attraction toward distractors was strong for one target with two distractors, but disappeared when attentional deployment to the target was made easier by increasing the number of uniformly colored distractors, enabling perceptual grouping, or by keeping target color fixed across trials. Similarly, attention-capturing motion at the location of a distractor increases attraction (Moher et al., 2015).

The dynamic neural field model of Erlhagen and Schöner (2002) postulates that values of motor parameters such as movement direction are represented as hills of localized activation within populations of neurons that are tuned to the parameters. Task demands or potential targets preactivate neurons in the distribution and interact with the input from the cued target. The model predicts the time course of activation in the population distribution from the early preshape (or default distribution) to the late form in which activation is centered on the cued target, accounting for the behavioral patterns observed by Ghez et al. (1997). The model predicts that the metrics of potential targets matter. Large differences between movement directions for the different targets lead to bimodal distributions of reaching directions at short stimulus-response intervals, which become monomodal over time. Small differences between the movement directions for different targets lead to monomodal distributions at all stimulus-response intervals that merely shift toward the cued target. This dependence of response distributions on the metrics of the target set was also observed by Ghez et al. (1997).

The graded and time-continuous evolution of motor plans can be directly observed at the neural level by recording from populations of neurons in motor and premotor cortex. Monkeys reaching from a central button to one of six peripherally arranged target buttons were given varying amounts of prior information about the upcoming movement (Bastian et al. 1998, 2003). Distributions of population activation that represented the planned movement direction were observed during the delay between this prior information and the cue. Over time, the population shifted from an early, broad peak centered on the range of precued movement directions to a narrower peak centered on the movement direction to the specified target. Cisek and Kalaska (2005) performed the same experiment in which two precued targets implied movement directions that were 180 degrees apart. Now the early distribution of population activation was bimodal, switching to monomodal after the cue.

Trajectory attraction based on abstract cognitive tasks

The studies reviewed above involve specification of movement targets directly through visual cues of varied timing, salience, and validity. Their influence on movement is accounted for by inputs to neural representations over a space of movement parameters that map one-to-one onto movement targets.

A related type of study involving computer mouse tracking (Spivey et al., 2005; Freeman et al., 2011) uses biases in response trajectories to gain insight into the evolution of decisions in more abstract spaces whose neural representations do not necessarily map directly onto the sensorimotor surfaces. In a typical mouse tracking experiment (e.g., Coco & Duran, 2005; Dale, Kehoe, & Spivey, 2007; Dale, Kehoe, & Spivey, 2008; Freeman, Ambady, Rule, & Johnson, 2009; Spivey et al., 2016), participants solve some abstract cognitive task, such as categorizing an animal name as referring to a mammal or non-mammal (Dale et al., 2007). They respond by moving a mouse-controlled cursor from a start location on the computer screen (typically a center-bottom location) to an appropriate response button (typically two buttons in the upper left and upper right of the screen). The trajectory of the mouse cursor is recorded and analyzed metrically.

The possible responses to the cognitive task are mapped onto the response buttons in an arbitrary manner (e.g., through verbal instruction or written labels). Deviations of the mouse trajectories from a straight path to the correct response button in the direction of the alternative response button are used to infer how the certainty about the cognitive decision evolves in time. More difficult decisions are commonly associated with stronger curvature than easier ones, so that the cursor bends toward the correct button later in its path. The trajectories are taken to reveal the moment-to-moment decision state of the cognitive system, reflecting the ongoing competition between response alternatives (Freeman et al., 2011).
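One simple way to quantify such deviations is the signed perpendicular distance of the cursor path from the straight line joining its start and end point, taking the sample with the largest magnitude. The following is a minimal sketch of that idea and not the analysis of any particular study cited here; the function name, the sample data, and the sign convention (positive values lie to the left of the movement direction, with the y axis pointing up) are assumptions made for illustration.

```python
import math

def max_deviation(trajectory):
    """Return the signed perpendicular deviation of largest magnitude
    from the straight line joining the first and last sample.
    Positive values lie to the left of the movement direction (y axis up)."""
    (x0, y0), (x1, y1) = trajectory[0], trajectory[-1]
    dx, dy = x1 - x0, y1 - y0
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("start and end point coincide")
    best = 0.0
    for x, y in trajectory:
        # 2D cross product of the path direction with the sample offset,
        # normalized by path length, gives the signed distance to the line
        dev = (dx * (y - y0) - dy * (x - x0)) / length
        if abs(dev) > abs(best):
            best = dev
    return best

# Hypothetical cursor samples: start at the bottom center, end at the upper right
curved = [(0.0, 0.0), (-1.0, 2.0), (0.0, 4.0), (3.0, 5.0)]
print(round(max_deviation(curved), 3))
```

A path that bends toward the opposite response button before turning to the correct one yields a large value, while a straight path yields zero.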

This paradigm has been used for a range of high-level cognitive tasks, such as social categorization (Freeman et al., 2008, 2013; Freeman & Ambady 2011; Cloutier et al., 2014), processing of grammatical aspect (Anderson et al., 2013), vowel discrimination (Farmer et al., 2009), cognitive flexibility (Dshemuchadse et al., 2015), intertemporal decision-making and delay discounting (Dshemuchadse et al., 2013; Scherbaum et al., 2013, 2016), multitasking (Scherbaum et al., 2015), stimulus-response compatibility (Flumini et al., 2014), lexical decision (Barca & Pezzulo, 2012), and response selection (Wifall et al., 2017). The vast majority of mouse-tracking studies employed the standard two-choice paradigm (Hehman et al., 2015), although some variants have been explored, mostly in a similar methodological frame (e.g., Anderson et al., 2013; Cloutier et al., 2014; Farmer, Anderson, & Spivey, 2007; Scherbaum et al., 2013, 2017; Koop & Johnson, 2011).

The current study

There are thus two broad categories of factors beyond the spatial attributes of an ultimate movement target that have been shown to influence the shape of motor responses. One category includes processes at the sensorimotor surfaces, evoked, for instance, by competing targets or salient distractors. The other one includes abstract cognitive tasks that evolve within neural domains remote from the sensorimotor level and that modulate motor decisions through learned links between actions and candidate solutions.

We aim to complement these previously studied factors with one that lies at the interface of high-level cognition and immediate perception. The experiments described here show that motor action is also influenced by attentional processes on a perceptual level that are integral components of a more abstract and complex cognitive task. We thus aim to observe signatures of the cognitive task directly, in an embodied and ecologically valid experimental setup where the cognitive task serves its proper role: using spatial language to identify objects in the world and thereby select movement targets.

Although we hope to reach situated cognition in general, our entry point is thus the “perceptual grounding” of spatial language in visual scenes. This task is sufficiently simple to be open to direct experimental assessment through movement, while also tapping into relational thinking, which implies a certain level of cognitive abstraction. Specifically, we ask participants to perceptually ground phrases about spatial relations such as “The green item to the left of the red one” by moving a cursor to the target that matches this description.

We have recently presented a neural process model that implements a neural mechanism for perceptual grounding of spatial and movement relations (Richter et al., 2014a, b, 2017). The model captures the processing steps that unfold in time when a relational phrase is linked to a visual scene. The structure of that model provided the heuristics for both the design and the expected outcome of the experiments we report. Before describing the experiments, we will therefore briefly summarize previous research into spatial language processing and sketch the neural model of relational grounding.

Spatial language

Spatial language helps disambiguate referent objects when feature-based language is insufficient. “The blue object”, for instance, may refer to either of two objects in Fig. 1 while “the blue object to the right of the green object” uniquely specifies a single object. Relational phrases like this consist of three components: a target object, corresponding to the blue object in the example; the relation itself, denoted by the spatial term (“right” in the example); and a reference object, which corresponds to the green object in the example. We focus on the deictic relations left, right, above, and below.

Linking a spatial phrase that describes a deictic relation to a configuration of objects in the visual environment requires multiple computational steps, as analyzed by Logan and Sadler (1996). First, the two arguments of a relation must be linked to the locations of the corresponding objects in a perceptual representation. Logan and Sadler (1996) call this spatial indexing. Second, the parameters of the reference frame must be set. For deictic relations, the origin of the reference frame is centered on the reference object, while its other parameters, including scale, direction, and orientation, remain congruent with the viewer’s reference frame. Third, a spatial template must be imposed on the reference object within the adjusted reference frame. This template is specific to the relation in question and indicates the goodness of fit for different locations in space relative to the reference object. Finally, the goodness of fit must be assessed for the target object by matching its position to the spatial template.
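The last three steps can be illustrated with a small sketch. It assumes a cosine-shaped template, which is a hypothetical placeholder rather than the empirically derived templates of Logan and Sadler (1996), and it assumes screen coordinates with the y axis pointing up. Spatial indexing (the first step) is taken as given, so the function receives the two positions directly.

```python
import math

# Unit vectors for the four deictic terms (assumption: y axis points up)
DIRECTIONS = {"left": (-1, 0), "right": (1, 0), "above": (0, 1), "below": (0, -1)}

def goodness_of_fit(term, reference, target):
    """Cosine template: 1 on the term's axis, falling to 0 at 90 degrees."""
    # Step 2: center the reference frame on the reference object
    rx, ry = target[0] - reference[0], target[1] - reference[1]
    dist = math.hypot(rx, ry)
    if dist == 0:
        return 0.0
    # Step 3: impose the template that belongs to the spatial term
    ux, uy = DIRECTIONS[term]
    # Step 4: assess the fit of the target position against the template
    return max(0.0, (rx * ux + ry * uy) / dist)

reference = (0.0, 0.0)
for candidate in [(-3.0, 0.0), (-3.0, 3.0), (2.0, 0.0)]:
    print(candidate, round(goodness_of_fit("left", reference, candidate), 3))
```

In this sketch an item directly to the left of the reference fits "left" perfectly, a diagonally placed item fits less well, and an item on the right does not fit at all.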

It is evident from this framework that spatial relations are not instantly available throughout the visual field, which is likewise suggested by the combinatorial explosion of possible relations when many objects are present (Franconeri et al., 2012). In line with this, empirical evidence suggests that evaluating visual relations involves the sequential processing of objects and relational pairs. Most importantly, the classical notion that localizing features in the visual environment requires focused attention (Treisman & Gelade, 1980) entails that spatial indexing does so as well. This is backed up by a stronger engagement of selective attention when locations of visual targets are to be reported rather than merely detected (Hyun et al., 2009). EEG data likewise support this conclusion. When participants saw two visual stimuli and judged their spatial relation, EEG showed attention shifts between them, despite the instruction to focus on both items at the same time, indicating that the stimuli had to be selected one after the other to evaluate their relation (Franconeri et al., 2012).

Eye-tracking data further highlight the role of attentional selection in establishing reference between linguistic input and visual scenes, particularly for spatial language (Eberhard et al., 1995; Tanenhaus et al., 1995). Yuan et al. (2016) briefly presented participants with visual displays of two stimuli that were vertically aligned and could thus be viewed as instantiating an ‘above’ or ‘below’ relation. Participants had to indicate for a queried item whether it had been in the upper or lower position. If a saccade from the non-queried to the queried item had occurred, responses were faster than when the other item was queried, suggesting that sequential order may play a role in judging relations. Another eye-tracking study using relations between object pictures showed similar gaze shifts (Burigo and Knoeferle, 2015), albeit without fully settling the role of shift order (and modeling efforts are similarly inconclusive in this respect; Kluth, Burigo, & Knoeferle, 2016; Regier & Carlson, 2001).

Sequential processing is furthermore induced by the presence of multiple candidate pairs. In visual search experiments by Logan (1994; see also, Moore, Elsinger, & Lleras, 2001; for review, see Carlson & Logan, 2005) participants saw visual displays with multiple item pairs and reported the presence or absence of a target pair that was defined by a relational phrase (e.g., by “dash above plus”) and placed among distractor pairs which instantiated the opposite relation (e.g., dashes below pluses). Search time rose steeply with the number of distractor pairs. Search time slopes were flat, in contrast, when distractor pairs consisted of all dashes or all pluses, attributed to pop-out of the discrepant item in the target pair (Logan, 1994). The pop-out did not appear to help processing the relation of the pair, however: deciding whether the sought relation was present still took more time than only deciding whether a discrepant item was present (probed in another condition). Thus, attentional allocation is required but not sufficient to process relations, which instead seems to involve additional steps (Logan, 1994).

Together, the evidence suggests that sequential selection of visual items plays an important role in multiple stages of relational processing, although leaving some open questions with respect to the underlying mechanisms.

A dynamic neural field model of spatial language grounding

We provide a rough outline of the model, which is presented in detail elsewhere (Richter, Lins, Schneegans, Sandamirskaya, & Schöner, 2014a; see also Richter et al., 2014b, 2017). The model is framed in dynamic field theory (DFT; Lins & Schöner, 2014; Schöner, 2008; Schöner, Spencer, & the DFT Research Group, 2015), a set of concepts that neurally ground perceptual, motor, and cognitive processes. In DFT, distributions of activation over populations of neurons are modeled and simulated as dynamic neural fields, which are defined over the continuous metric dimensions that the modeled populations are sensitive to, such as retinal space, color, or movement space. This reflects the tuning of neural activity to input or output dimensions (see Bastian et al., 2003; Erlhagen, Bastian, Jancke, Riehle, & Schöner, 1999; Jancke et al., 1999, for the neurophysiological foundation of DFT).

Dynamic neural fields evolve continuously in time. They receive input from the sensory surfaces or through synaptic connections from other dynamic fields. An object in the visual array, for instance, may induce a localized bump of activation in a field defined over retinal space. If such input is strong enough to push activation across a threshold, output is generated, and a localized peak of activation may arise. The output may impact other fields or motor systems via synaptic connections. On the other hand, output also drives lateral interaction between different sites within the same field: Neighboring sites excite each other (local excitation) while remote sites inhibit each other (surround inhibition). When this recurrent regime is entered, the emerging peak is to a degree decoupled from the input and thus stabilized against input fluctuations or other perturbations (which ultimately enables stable cognition in situated agents; Lins & Schöner, 2014; Schöner, 2008; Schöner et al. 2015). Peak formation in DFT thus represents an elementary decision about the presence or computational relevance of what brought it about.
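The qualitative behavior described here can be illustrated with a minimal one-dimensional field that is integrated with the Euler method. The sketch assumes the generic form tau * du/dt = -u + h + input + lateral interaction, a sigmoid output function, and an interaction kernel made of a Gaussian excitatory part minus a constant inhibitory part. All parameter values and the function name are illustrative assumptions and are not taken from the model publications.

```python
import math

def simulate_field(amplitude, n=51, steps=300, dt_over_tau=0.1, h=-5.0):
    """Euler-integrate tau*du/dt = -u + h + input + lateral interaction."""
    center = n // 2
    # Localized (Gaussian) input, e.g. from one visual item
    stimulus = [amplitude * math.exp(-((i - center) ** 2) / (2 * 3.0 ** 2))
                for i in range(n)]
    # Interaction kernel: local excitation minus constant inhibition
    kernel = [math.exp(-(d ** 2) / (2 * 2.0 ** 2)) - 0.3 for d in range(n)]
    u = [h] * n  # start at the resting level
    for _ in range(steps):
        # Sigmoid output: close to 0 below threshold, close to 1 above
        out = [1.0 / (1.0 + math.exp(-4.0 * v)) for v in u]
        lateral = [sum(kernel[abs(i - j)] * out[j] for j in range(n))
                   for i in range(n)]
        u = [v + dt_over_tau * (-v + h + s + l)
             for v, s, l in zip(u, stimulus, lateral)]
    return u

weak = simulate_field(amplitude=3.0)    # input alone stays below threshold
strong = simulate_field(amplitude=7.0)  # input pushes activation above threshold
print(max(weak) < 0, max(strong) > 0)
```

With these assumed values, the weak input should leave the field below threshold, whereas the strong input should produce a peak whose height exceeds what the input alone would yield, because local excitation amplifies it.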

The neural process model of the perceptual grounding of spatial language is a seamless dynamical system composed of multiple interconnected fields that implement mechanisms of scene representation, visual search, spatial phrase representation, neural process control, and relational processing (Fig. 2). The spatial phrase representation (left in Fig. 2) guides processes in the sensory parts of the architecture that receive input from a visual image. The visual image is supplied to the perceptual field, shown at the top right of Fig. 2. This field is defined over two dimensions of image space and one color dimension. Visual items initially lead to hills of sub-threshold activation in the perceptual field. The locations of these hills along the field’s dimensions indicate colors and spatial positions of the items. The perceptual field is the visual scene representation to which other parts connect in order to drive attention for visual search or to receive location or color input for further processing.

To ground a spatial phrase such as “The green item to the left of the red item” in a scene like Fig. 1, the phrase is stored in the spatial phrase representation. In a first step, then, the component for feature-based visual search drives feature attention in the perceptual field, bringing all items in the reference color (here, red) above the output threshold. The output is projected to the relational component (bottom right in Fig. 2), where one possible reference position is selected and retained in a dedicated spatial working memory. The successful completion of selecting and storing a reference position is detected by mechanisms of neural process control, which are shown in the top left of Fig. 2. Upon the detection, these mechanisms initiate the next processing step, which consists of a visual search process similar to the previous one, but focused on items in target color (here, green). This step involves bringing all items in target color above threshold in the perceptual field through feature attention (the model state in Fig. 2 shows this point of the grounding process). Note that the neurally enforced sequentiality of reference and target selection is mandatory to ensure that reference and target positions are relayed to the correct downstream substrates in the component for relational processing (for details, see Richter, Lins, Schneegans, Sandamirskaya, & Schöner, 2014a, b; Richter et al., 2017). The potential target item positions are then spatially transformed within the relational component, bringing them into a space that is centered on the stored reference position. An activation template, driven by the spatial phrase representation, instantiates the semantics of the phrase’s spatial term within that space and all potential target positions are matched against the template. By this, the best-fitting item position is selected and ultimately projected back into the perceptual field, so that a peak at the target location forms there. At this point, all items in the spatial phrase have been found and neurally instantiated; the phrase has been successfully grounded.

When grounding the same spatial phrase in a scene such as Fig. 3, which contains two items that share the reference color (red), the model may initially select the incorrect reference item, as it is not known at the time of the selection which of the items in reference color the phrase refers to. In this case, the lack of appropriate target items is detected by mechanisms of neural process control (Richter et al., 2012; Sandamirskaya & Schöner, 2010) and additional grounding attempts occur in sequence until the correct item pair is found.
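The sequence of grounding attempts can be summarized procedurally. The sketch below is a symbolic abstraction of the processing order only, not the neural dynamic model itself. The cosine template, the acceptance threshold `min_fit`, the scene encoding, and all function names are assumptions introduced for illustration.

```python
import math

DIRECTIONS = {"left": (-1, 0), "right": (1, 0), "above": (0, 1), "below": (0, -1)}

def fit(term, ref_pos, pos):
    """Hypothetical cosine template centered on the reference position."""
    dx, dy = pos[0] - ref_pos[0], pos[1] - ref_pos[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        return 0.0
    ux, uy = DIRECTIONS[term]
    return max(0.0, (dx * ux + dy * uy) / dist)

def ground(target_color, term, reference_color, scene, min_fit=0.5):
    """Try reference candidates one at a time until a fitting target is found.

    Returns (reference_position, target_position, attempts) or None."""
    references = [pos for color, pos in scene if color == reference_color]
    targets = [pos for color, pos in scene if color == target_color]
    for attempts, ref_pos in enumerate(references, start=1):
        # Re-center the target candidates on the stored reference and match them
        scored = [(fit(term, ref_pos, pos), pos) for pos in targets]
        if scored:
            best_fit, best_pos = max(scored)
            if best_fit >= min_fit:
                return ref_pos, best_pos, attempts
        # No appropriate target for this reference: try the next candidate
    return None

# Hypothetical scene with two red items; only the second one has a green
# item to its left (y axis pointing up)
scene = [("red", (0, 0)), ("red", (10, 0)),
         ("green", (5, 0.5)), ("green", (6, 4)), ("blue", (-3, 2))]
print(ground("green", "left", "red", scene))
```

In this example the first red item is rejected because no green item lies to its left, and the second attempt succeeds.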

In summary, the model autonomously realizes the essential steps postulated by Logan and Sadler (1996), including the capability to sequentially test different hypotheses about possible referents of a spatial phrase (similar to Logan, 1994).

The model is constrained by neural principles articulated in DFT (Schneegans et al., 2015a), by evidence for sequentiality in relational processing, and by capacity limitations in attentional function (both discussed in the previous section; e.g., Franconeri et al., 2012; Franconeri et al., 2009; Logan, 1994; Treisman & Gelade, 1980), all broadly consistent with a theoretical account in DFT of visual feature representation and feature binding (Schneegans, 2016; Schneegans et al., 2015b). These constraints lead to the hypothesis that grounding always entails the attentional selection of, first, all potential reference items and, subsequently, all potential target items. Importantly, the model postulates that this involves activating all items of the matching color at a point during the selection process. This becomes visible in the evolution of activation in the perceptual field during grounding, which is shown for the two example scenes in Fig. 4: Peaks of activation arise at all locations where items in reference color are located, and the same is true for items in target color.

Fig. 4. Evolution of activation in the perceptual field during grounding the spatial phrase “The green item to the left of the red item” in the depicted visual scenes. Activation snapshots are numbered in temporal order and show maximum activation along the perceptual field’s color dimension. Labeled black bars indicate periods where output is produced at the indicated items’ locations.

Due to its unique role of linking space to item features (here, color), the perceptual field serves as a hub that connects multiple neural systems. Conceptually similar fields are implicated in various functions such as visual working memory and change detection (Schneegans et al., 2015b; Schneegans 2016; Zibner et al., 2011, 2017) as well as driving motor systems (Tekülve et al., 2016; Zibner et al., 2015). We postulate that activation peaks in the perceptual field (or a related neural representation) may impact motor planning (Cisek & Pastor-Bernier, 2014; Cisek, 2007; Cisek & Kalaska, 2005; Bastian et al., 1998).

We thus expect that the attentional selection of all potential referents of the spatial phrase may lead to motor signatures during a grounding task. This includes all items sharing the target or the reference color. If motor signatures arise from the activation of potential reference items, rather than only from the activation of potential target items, this would indicate that motor planning is susceptible to influences from higher cognitive processes during the grounding of relations.

Experiments

We conducted four experiments that probe how spatial language grounding is tied to the neural representations of visual space and associated motor responses. The experimental paradigm closely resembled the grounding scenario solved by the model described above. Participants read a spatial phrase which described a relation between two colored items, such as “The green item to the left of the red one.”, and then saw a visual scene composed of 12 colored items, including the described pair. A cursor controlled via the computer mouse had to be moved from a starting point to the target item of the phrase (green in the example) while the cursor trajectory was recorded. By definition, the target item had the target-defining color mentioned in the phrase and at the same time matched the spatial term better than any other item in that color. Other items in the scene included a reference item (red in the example), relative to which the spatial term must be applied in order to find the target; one or more distractor items, defined by sharing the target color but providing a quantitatively worse match to the spatial term; and, in some experiments, items that shared the color of the reference item but were not combined with a target item to form the relational pair described in the phrase. The remaining items were differently colored fillers.

The mouse trajectories were examined for biases toward items that according to the model must be brought into the attentional foreground in the grounding process. This included distractors and items in reference color, as these could potentially take on the roles contained in the spatial phrase. Only six clearly distinguishable colors were used in each display, making visual search among the items highly efficient (Wolfe & Horowitz, 2004; Wolfe et al., 1990). It was therefore assumed that items would be identified rapidly as candidates or non-candidates for the different roles, which entails ruling out reference and filler items as potential movement goals at an early stage of the grounding process. Attraction to items in reference color was therefore of particular interest, since these items gained relevance only from their computational role in the grounding process whereas it could be determined rapidly through visual search that they did not pose potential movement goals. Filler items were not expected to impact the grounding process systematically, again due to the ease with which relevant items can be singled out through visual search based on color. This expectation is also supported by a study in which the impeding effect of distracting items irrelevant to a sought relation disappeared when target and reference were colored differently from the other items (Logan & Compton, 1996).

Experiment 1 looked for attraction toward a uniquely colored reference item and for attraction toward a distractor item. Experiment 2 served to disambiguate the nature of two effects observed in Experiment 1, namely those of the reference item and of the spatial term used in the phrase. This involved changing the directionality of response movements from vertical to horizontal. Experiment 2 thus also generalized the findings of Experiment 1 to this different response metric. Experiment 3 further tested the hypothesis that effects observed in Experiments 1 and 2 were signatures of grounding processes rather than based merely on the fact that the colors of distractor and reference item were mentioned in the phrase. For this, it was probed whether attraction caused by a competing relational pair, composed of a distractor and an additional item in reference color, transcended the sum of biases evoked by individual items in reference or target color that were not part of such a pair. Experiment 4 sought to provide further support for the interpretation that in Experiment 3 additional attraction had been caused by the combination of items into a relational pair rather than by a generic interaction between closely spaced items in task-relevant colors. This was done by comparing attraction toward a competing relational pair to attraction caused by an analogous pair in which both items shared a single task-relevant color.

Key aspects of the cognitive task used in these experiments differed from previous mouse tracking work, which required some adjustments in the employed methods. Most importantly, the space in which cognitive processes operated to solve the task was congruent with the response space, and this space was structured in a complex and variable manner. As described, mouse tracking research has instead focused on abstract cognitive tasks, and typically considered only a single, spatially fixed source of potential attraction, usually the sole alternative response option, so that any deviation could be interpreted in relation to that source (but see Scherbaum et al. 2015). Here, each visual display contained multiple effect sources, whose locations varied from trial to trial, and which could be situated on either side of the straight path to the ultimate movement target (relative to which trajectory deviation was measured). Biases induced by these sources were expected to superimpose in each trajectory and, due to the variable placement, to do so in a different manner in each trial. In effect, net trajectory biases could potentially go in either direction and even change directionality over movement time. Measuring the effects of individual sources thus required a systematic yet flexible manner to generate the complex visual displays, combined with specific measures to counterbalance the impact of confounding influences for analysis.

General methods

Aspects common to all experiments are described here. Specific aspects will be covered in the experimental sections.

Participants

Participants were recruited separately for each of the four experiments, by notices around the local campus. They signed informed consent and received monetary compensation for participation. The participants were naïve to the experimental hypotheses, native German speakers, had self-reported normal or corrected-to-normal vision, and no color vision deficiencies.

Apparatus and stimuli

The experiments were implemented and run using MATLAB R2017a and the Psychophysics Toolbox 3 (Brainard, 1997; Pelli, 1997; Kleiner et al., 2007), and presented on a 22” LCD screen (Samsung, 226BW at 1920 × 1080 resolution; size of visible image 475 mm × 297 mm) at a viewing distance of approximately 70 cm (thus subtending approximately 40.4° × 22.99° visual angle, v.a.). Trajectories were collected using a standard computer mouse (Logitech, M-UAE96, approximate sampling rate 92 Hz; Experiments 2 to 4 instead used a Roccat Kone Pure mouse, effectively sampling at approximately 400 Hz). Mouse cursor speed was set such that mouse movement on the tabletop translated to cursor movement over the same physical distance on the screen, to make motions more similar to natural arm movements and simplify cognitive transformation from hand coordinates to screen space (see, e.g., Krakauer, Pine, Ghilardi, & Ghez, 2000).

Spatial phrases

Spatial phrases were in German and followed the scheme article – target – spatial term – reference, as in the example “Das Grüne rechts vom Roten.”, which translates to “The green [one] to the right of [the] red [one]”. The article was always “Das”, the target was taken from the set {Rote, Grüne, Blaue, Gelbe, Weiße, Schwarze}, the reference from the set {Roten, Grünen, Blauen, Gelben, Weißen, Schwarzen}, translating to “the {red, green, blue, yellow, white, black} one”, and the spatial term was taken from the set {links vom, rechts vom, über dem, unter dem}, translating to {left of, right of, above, below}.

The spatial phrases thus denoted a target item by a combination of a color (“green” in the above example) and a position given relative to a reference item (“right of”), which was specified only by its color (“red”). Which of the six colors took the role of target and reference was determined randomly for each trial. The relational description provided by the spatial phrase was qualitatively valid for at least one visual item in the associated visual scene.
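The phrase scheme can be illustrated with a minimal Python sketch (the experiments themselves were run in MATLAB, so this is not the original code). The function name `make_phrase` is hypothetical, and the sketch assumes that target and reference colors always differ, which is suggested by the filler colors being drawn from the four colors not mentioned in the phrase.

```python
import random

# Color stems; target forms end in "-e", reference forms in "-en".
COLORS = ["Rot", "Grün", "Blau", "Gelb", "Weiß", "Schwarz"]
SPATIAL_TERMS = ["links vom", "rechts vom", "über dem", "unter dem"]


def make_phrase(rng):
    """Return (phrase, target_stem, spatial_term, reference_stem) for one trial."""
    # Assumption: target and reference colors are distinct.
    target, reference = rng.sample(COLORS, 2)
    term = rng.choice(SPATIAL_TERMS)
    # Scheme: article, target, spatial term, reference.
    phrase = f"Das {target}e {term} {reference}en."
    return phrase, target, term, reference
```

Calling `make_phrase(random.Random())` yields phrases of the same form as the example above, such as "Das Grüne rechts vom Roten."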

Visual scenes

Figure 6 shows an annotated example display from Experiment 1, illustrating the general structure of the visual scenes (only the start marker and the visual items were visible to the participants). The visual items were irregular polygons, generated randomly for each trial and having a diameter between 8.2 and 16.4 mm (0.67° and 1.34° v.a.; circles in Fig. 6). They could be colored green, red, blue, yellow, black, or white, were constrained to a rectangular stimulus region (see Fig. 6), and retained a minimum border-to-border distance of 0.5 mm.
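The item sizes in degrees of visual angle are consistent with the standard formula for an extent centered on the line of sight, evaluated at the 70 cm viewing distance. The following Python sketch shows the conversion; that this exact formula was used is an assumption, and the function name is hypothetical.

```python
import math


def visual_angle_deg(size_mm, distance_mm=700.0):
    """Visual angle in degrees subtended by an extent of size_mm
    viewed from distance_mm (extent centered on the line of sight)."""
    return math.degrees(2.0 * math.atan(size_mm / (2.0 * distance_mm)))


min_item = round(visual_angle_deg(8.2), 2)    # 0.67
max_item = round(visual_angle_deg(16.4), 2)   # 1.34
```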

A subset of items in each scene matched one of the two colors named in the spatial phrase and were thus expected to give rise to behavioral effects. These items’ spatial arrangement was determined in a controlled manner. Most importantly, every scene contained a target item and a reference item. The spatial arrangement of these items relative to each other was determined with the help of two-dimensional fit functions, which described for each spatial term how well different spatial coordinates, defined in relation to the reference location at the origin of the coordinate space, matched the term’s semantics (Fig. 5; see Appendix A for the underlying equation). To ensure that targets matched the spatial term well, they were placed in a region of the fit function where fit exceeded a threshold value (given in the experimental sections; see Fig. 9a for an example). To sample the space in this region approximately uniformly, possible target positions were located on the junctions of an equally spaced square grid superimposed on the region (see Fig. 9b for an example). One of the resulting positions was selected for each trial, thereby fixing the relative positioning of reference and target. The placement of the resulting two-item configuration within the final display was then determined such that the target item was positioned in one of four possible target locations (gray X’s in Fig. 6). Each of the four possible target locations was used with each possible target-reference configuration, meaning that each target-reference configuration was used in four visual scenes.

Fig. 6. Display configuration in Experiment 1. The item array corresponds to the spatial phrase “The green item to the left of the red item”. T denotes the target, D the distractor, and R the reference item. See text for details.

Other items sharing colors from the spatial phrase were present only in some experiments and conditions. This included distractor items and, for some scenes in Experiments 3 and 4, a pair of items that instantiated the inverse of the relation described in the phrase, or items sharing the reference color without being part of such a pair. How these items were placed is described in the experimental sections.

Finally, after fixing the positions of the above items, filler items (encircled gray in Fig. 6) were added to arrive at a total of 12 visual items in each scene. This made the arrays more similar to real-world scenes that naturally afford the use of spatial language to point out a specific item, rather than denoting a target based on simple features or based on the overall gestalt of the array. The fillers were randomly placed in the stimulus region and their colors were randomly taken from the colors not mentioned in the spatial phrase (i.e., from a pool of four colors). A constraint on filler placement was that the center of mass across all items in a scene (black diamond in Fig. 6) had to be congruent with the center of the stimulus region (with a tolerance of ± 0.8 mm in either direction for technical reasons). This means that the center of mass was always at the horizontal screen center (or the vertical screen center, in Experiment 2), which simplified counterbalancing potential biases toward the center of mass and toward the screen center, as described later. This constraint also made the average position of the item array independent of the target location, which prevented participants from inferring the approximate location of the target item on that basis.

Procedure

The procedure is illustrated in Fig. 7. To start a trial, participants moved the mouse cursor, a white dot, onto a black start marker centered at the bottom of an otherwise gray screen. After the cursor had rested there for 300 ms, the spatial phrase appeared at a randomly jittered position around the center of the stimulus region (within ± 48 mm horizontally and ± 20 mm vertically; text was in Arial and 8.8 mm high). The phrase was visible for a random duration between 1 and 2 s to counteract anticipatory responses. Phrase offset was marked by an auditory beep. Participants were instructed to start movement in upward direction (or rightward, in Experiment 2) within 1 s after the phrase had disappeared. Movement onset was defined as cursor movement faster than 20 mm/s, which was assessed by continuously monitoring traveled pixels within 20 ms sampling intervals (as described above, physical mouse movement distance was equivalent to physical cursor movement distance, so that the threshold applied to both alike).
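The onset criterion can be sketched as follows in Python. This is an illustration under stated assumptions, not the original MATLAB implementation: positions are taken to be already converted from pixels to millimeters, times are integer milliseconds, and the function name `detect_onset` is hypothetical.

```python
import math


def detect_onset(samples, speed_mm_s=20.0, interval_ms=20):
    """Return the time (ms) at which mean speed over one monitoring
    interval first exceeds the threshold, or None if it never does.

    samples: list of (t_ms, x_mm, y_mm), sorted by time.
    """
    if not samples:
        return None
    t0, x0, y0 = samples[0]
    for t, x, y in samples[1:]:
        dt = t - t0
        if dt < interval_ms:
            continue  # wait until a full monitoring interval has elapsed
        speed = math.hypot(x - x0, y - y0) / (dt / 1000.0)
        if speed > speed_mm_s:
            return t
        t0, x0, y0 = t, x, y  # start the next interval at this sample
    return None
```

With a 20 ms interval, the 20 mm/s threshold corresponds to a displacement of more than 0.4 mm per interval.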

If mouse movement occurred too early or too late, the trial was aborted with appropriate feedback and presented again at a later point. Importantly, the array of visual items appeared only upon movement onset, in order to force selection of the motor goal into the same time window as attentional selection processes associated with the grounding task. Also, it has been shown that presenting stimuli only after movement onset produces more consistent deviation than showing stimuli first (Scherbaum & Kieslich, 2017).

The participant’s task was to select the item which in his or her opinion best matched the preceding phrase (participants could select any item). Starting from movement onset, participants had 2 s to select an item by clicking it (any mouse click closer to an item’s center than the maximum item radius of 8.2 mm was registered as selection of that item). If no selection occurred in that time window, the trial was aborted with appropriate feedback and presented again at a later point. The time limit served to prevent participants from stopping mouse movement while grounding the relation, so as to time-lock movement onset and the start of relation grounding. The allowed duration was based on pilot work and adjusted to impose a sense of time pressure without requiring hasty responses. Trials exceeding the time limit mainly occurred during the first few trials, before participants fully adapted to the paradigm. After item selection, the next trial followed.
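The click registration rule can be sketched in Python as below. Resolving a click that lies within 8.2 mm of more than one item center in favor of the nearest center is an assumption made for this illustration, and `selected_item` is a hypothetical name.

```python
import math

MAX_ITEM_RADIUS_MM = 8.2  # maximum item radius reported in the text


def selected_item(click_xy, item_centers, radius_mm=MAX_ITEM_RADIUS_MM):
    """Return the index of the item selected by a click, or None.

    A click counts as a selection if it is closer to an item's center
    than radius_mm; ties between items go to the nearest center
    (an assumption of this sketch).
    """
    best, best_dist = None, radius_mm
    for i, (cx, cy) in enumerate(item_centers):
        d = math.hypot(click_xy[0] - cx, click_xy[1] - cy)
        if d < best_dist:
            best, best_dist = i, d
    return best
```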

Apart from the instruction to select the best-matching item, participants were told that there were no correct or incorrect responses (in particular, they were not made aware of the technical distinction between targets and distractors) and that the items did not pose obstacles for mouse movement. Prior to the experimental trials, the experimenter demonstrated the procedure by completing two trials (once choosing the distractor and once choosing the target) and each participant completed 13 practice trials with no time constraints.

Analysis

Only data from trials with correct responses entered analysis. Responses counted as correct if participants had selected the item that fitted the spatial phrase best according to the fit function (i.e., the target item). Furthermore, trajectories with sharp turns were excluded from analysis. This derives from previous mouse tracking research in which distributions of curvature have been used to determine whether responses might stem from two distinct populations of trials, one where an initial response decision is corrected mid-flight (leading to high deviation) and another where the initial decision remains unchanged so that trajectories are affected only by graded influences from other sources (leading to low deviation; Farmer, Anderson, & Spivey, 2007; Freeman & Dale, 2013; Hehman et al., 2015).

Due to the specifics of the current paradigm, we had to assess curvature in a different manner than previous mouse tracking studies, which have typically used area under the curve or maximum deviation (Freeman & Dale, 2013; Hehman et al., 2015). These latter methods measure curvature as deviation from the direct path aggregated over movement time and as the largest observed distance from the direct path, respectively. They thus express the global degree of curvature in a trajectory, which is useful when deviation is expected to occur only in one particular direction, for instance, toward the nonselected alternative out of two response buttons. In the current experimental setup, it was expected that multiple potentially opposing biases would jointly affect individual trajectories. For instance, a trajectory’s shape may be codetermined by attraction toward a distractor item located to the left of the direct path and by attraction toward a reference item located to the right of the direct path. With superimposed opposing biases, global measures of curvature can yield misleading values. In the above case, for instance, maximum deviation would capture only the larger of the two opposing biases and any global measure of curvature may be erroneously reduced by the influence of the counteracting bias. Thus, global measures are difficult to interpret with mixed-direction biases.

To circumvent these problems, we sought to identify possible redecisions mid-flight using a measure of local curvature that yields high values at abrupt turns but is unaffected by the trajectory distance from the direct path. It was computed by an algorithm (described in detail in Appendix B) which for regularly spaced points along the length of trajectories yields curvature values between zero (straight line) and π radians (antiparallel trajectory segments). To identify trials where an abrupt redecision or a similar local event may have taken place, the maximum curvature value within each trajectory was determined and compared to a fixed threshold value of 0.933 radians. Trajectories exceeding this threshold were excluded from analyses. Apart from cleaning the data set of possible redecisions, this also served to exclude outlier trials with extreme deviations that hinted at momentary failures to coordinate mouse movement and subsequent corrections of movement direction.
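To convey the idea of a local curvature measure, the following Python sketch uses a simple stand-in: the turning angle between successive segments of a trajectory resampled at regular path-length intervals. This is explicitly not the algorithm of Appendix B; the resampling spacing of 5 mm and all function names are hypothetical, and only the threshold of 0.933 radians is taken from the text.

```python
import math

CURVATURE_THRESHOLD_RAD = 0.933  # threshold reported in the text


def resample_by_length(points, spacing):
    """Return points spaced `spacing` apart along the polyline `points`."""
    out = [points[0]]
    carried = 0.0  # path length travelled since the last emitted point
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        pos = spacing - carried  # distance into this segment of the next point
        while pos <= seg:
            f = pos / seg
            out.append((x0 + f * (x1 - x0), y0 + f * (y1 - y0)))
            pos += spacing
        carried = seg - (pos - spacing)
    return out


def max_turning_angle(points, spacing=5.0):
    """Largest absolute turning angle (0 = straight, pi = reversal)."""
    pts = resample_by_length(points, spacing)
    worst = 0.0
    for a, b, c in zip(pts, pts[1:], pts[2:]):
        ux, uy = b[0] - a[0], b[1] - a[1]
        vx, vy = c[0] - b[0], c[1] - b[1]
        angle = abs(math.atan2(ux * vy - uy * vx, ux * vx + uy * vy))
        worst = max(worst, angle)
    return worst


def is_excluded(points, spacing=5.0):
    """True if the trajectory's maximum local turning angle exceeds the threshold."""
    return max_turning_angle(points, spacing) > CURVATURE_THRESHOLD_RAD
```

Like the measure described above, this stand-in responds to abrupt turns but not to the distance of the trajectory from the direct path: a smooth, wide arc yields small values everywhere, whereas a right-angle turn yields a value near π/2.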

The outcome of the exclusion procedure was governed by three parameters: the threshold value and two parameters of the curvature computation algorithm itself (the latter two are explained in Appendix B). The three parameters were tuned based on the trajectory data set of Experiment 1 and the obtained values were used for all trajectories and experiments. The tuning procedure involved plotting excluded and included trajectories for different parameter sets and manipulating parameters until a balance was found of reliably excluding apparent redecisions and outliers without discarding overly large portions of the data. For instance, the algorithm was tuned to retain trajectories with very brief deviations that appeared to result from slightly overshooting the target or from minor imprecisions in mouse handling. Since, regardless of the measure of curvature used, no objective criterion is known that would perfectly distinguish trials with redecisions from those where only graded attraction is present, a certain degree of subjective judgment was necessarily involved in the choice of parameters. To provide an impression of retained and excluded trajectories obtained with our parameters, Appendix B shows some examples from these two sets. We further sought to alleviate this issue by statistically examining the distributions of maximum curvature across all trials for signs of distinct response populations (i.e., bimodality; described in detail under statistical methods). This analysis was conducted once including and once excluding those trajectories that exceeded the curvature threshold, in order to test for the presence of different response populations in general as well as in the cleaned data set used in the main analyses.

Trajectory preparation

Trajectories were trimmed to start with the first data point after movement onset and to end with the last data point before crossing an 8.2 mm radius around the selected item’s center (equaling the maximum possible item radius; Fig. 8a). Trimmed trajectories were translated to place the first data point at [0,0] and then rotated around that point such that the center of the selected item would be placed on the positive y-axis (i.e., at x = 0; Fig. 8b). This entailed that final trajectory points tended to lie not at x = 0, but somewhat lateral to the y-axis, depending on where the radial border of the selected item had been crossed, so that any deviations affecting trajectories until the end of the movement were retained in the rotated versions.

Through these transformations, the direct path (see Fig. 8a), defined as the straight line from the point of movement onset to the center of the selected item, was made congruent with the y-axis. Thus, as shown in Fig. 8b, x-coordinates in the transformed trajectories are equivalent to deviation from the direct path, with negative values corresponding to biases to the left of the direct path and positive values representing biases to the right (sides are given relative to the “direction of travel” toward the target). To enable averaging, deviation data was time-normalized (Fig. 8c) by linearly interpolating x-coordinates over 151 equally spaced steps of movement time. The data points of averaged trajectories thus combine deviation data from the same proportion of time elapsed since movement onset.
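The preparation steps (trimming, translation, rotation, and time normalization) can be sketched in Python as follows. This is an illustration rather than the original MATLAB code. It assumes a coordinate system with the y-axis pointing upward, so that negative x-values after rotation lie to the left of the direct path, and it ends the trajectory at the first entry into the 8.2 mm radius; all function names are hypothetical.

```python
import math

N_STEPS = 151  # number of time-normalized steps reported in the text


def trim(samples, onset_time, target_center, radius=8.2):
    """Keep samples after movement onset and before entering the item radius.

    samples: list of (t, x, y); assumption: the first entry into the
    radius ends the trajectory.
    """
    kept = []
    for t, x, y in samples:
        if t <= onset_time:
            continue
        if math.hypot(x - target_center[0], y - target_center[1]) <= radius:
            break
        kept.append((t, x, y))
    return kept


def rotate_to_direct_path(points, target_center):
    """Translate the first point to the origin and rotate so that the
    selected item's center lies on the positive y-axis."""
    x0, y0 = points[0]
    tx, ty = target_center[0] - x0, target_center[1] - y0
    theta = math.pi / 2 - math.atan2(ty, tx)  # counterclockwise rotation angle
    c, s = math.cos(theta), math.sin(theta)
    return [((x - x0) * c - (y - y0) * s, (x - x0) * s + (y - y0) * c)
            for x, y in points]


def time_normalize(times, values, n_steps=N_STEPS):
    """Linearly interpolate `values` at n_steps equally spaced times.

    Requires at least two samples with increasing times.
    """
    t_start, t_end = times[0], times[-1]
    out, j = [], 0
    for k in range(n_steps):
        t = t_start + (t_end - t_start) * k / (n_steps - 1)
        while j < len(times) - 2 and times[j + 1] < t:
            j += 1
        span = times[j + 1] - times[j]
        f = (t - times[j]) / span if span > 0 else 0.0
        out.append(values[j] + f * (values[j + 1] - values[j]))
    return out
```

After `rotate_to_direct_path`, the x-coordinates of the returned points are the deviations from the direct path, which can then be passed to `time_normalize` together with the sample times.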

Examining trajectory biases

We were interested in whether movement trajectories were attracted by visual items whose colors were mentioned in the spatial phrase. To examine this, mean deviation over time was compared between conditions in which an item of interest was located to the left or to the right of the direct path, respectively. Depending on the effect under scrutiny, the item of interest was either a distractor item or the reference item. In Experiments 3 and 4, it could also be a pair of closely spaced items (which was treated as a single “item” of interest for matters of analysis) or an item sharing the reference color.

To fully isolate the effect of the item of interest in a given comparison, the impact of several interfering influences needed to be counterbalanced. First, we expected all items that shared a color from the spatial phrase to codetermine trajectory shape in every trial. Second, a trajectory bias toward the screen center was expected, since participants were instructed to start movement into an upward direction (or rightward, in Experiment 2) before the visual items appeared. Third, a bias toward the center of mass across items was expected in early trajectory portions, as participants may have deemed all items potential targets during the short time window preceding color-based visual search. Fourth, we conjectured that spatial terms might impact trajectory shape in a systematic manner, as suggested by priming evidence (Tower-Richardi et al., 2012).

To balance out these influences, mean trajectories for each participant were composed as averages over several experimental sub-conditions. Each sub-condition included all trials exhibiting a specific combination of spatial term (left, right, above, below), target position (top left, top right, bottom left, bottom right), reference side (left or right), and distractor side (left or right; distractor side was replaced by pair side in Experiments 3 and 4). Trajectories within each sub-condition were averaged, yielding one mean trajectory per sub-condition. The obtained means were then grouped into two sets based on the side of the item of interest for the comparison at hand, and overall means were computed within each of the two sets. The two overall means thus differed only with respect to the side of the item of interest, while each combination of biasing influences was weighted to an equal degree in the final means, regardless of the number of trials in the different sub-conditions. Note that using target position as a factor in defining the sub-conditions took care of balancing out the bias toward the screen center, since the screen center was to the left of the direct path for two of the four possible target positions and to the right of the direct path for the other two. It moreover balanced out a possible bias to the center of mass across items, since the center of mass was made congruent with the screen center during scene creation (see above).
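The two-stage averaging can be sketched in Python as follows. The data layout (one dictionary per trial) and the function names are hypothetical, and the sketch covers a single participant and the sub-condition factors used in Experiments 1 and 2.

```python
from collections import defaultdict


def mean_curve(curves):
    """Pointwise mean of equally long lists of deviation values."""
    n = len(curves)
    return [sum(vals) / n for vals in zip(*curves)]


def balanced_means(trials, side_key):
    """Average within sub-conditions first, then across sub-conditions.

    trials: list of dicts with keys 'term', 'target_pos',
        'reference_side', 'distractor_side', and 'deviation'
        (time-normalized x-values of one trajectory).
    side_key: 'reference_side' or 'distractor_side' (item of interest).
    Returns a dict mapping each side to its overall mean curve.
    """
    cells = defaultdict(list)
    for tr in trials:
        key = (tr["term"], tr["target_pos"],
               tr["reference_side"], tr["distractor_side"])
        cells[key].append(tr["deviation"])
    # One mean per sub-condition, so trial counts do not act as weights.
    side_index = 2 if side_key == "reference_side" else 3
    by_side = defaultdict(list)
    for key, curves in cells.items():
        by_side[key[side_index]].append(mean_curve(curves))
    return {side: mean_curve(means) for side, means in by_side.items()}
```

For example, if one sub-condition contributes three trials with a deviation of 1 mm and another contributes a single trial with 5 mm, the balanced mean is 3 mm, whereas averaging directly over the four trials would give 2 mm.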

Computing overall mean trajectories from sub-condition means instead of averaging directly over all cases was necessary since case numbers in each sub-condition were partly different. One reason was loss of cases due to incorrect responses (i.e., not selecting the target item) and exclusion of sharply curved trajectories. Furthermore, the algorithm for scene creation produced certain trial types somewhat more frequently than others. For instance, in “left of” trials with target positions on the right side of the display, distractors were slightly more often located to the left of the right-leaning direct paths, since for most target-reference configurations the distractor region covered more space to the left of the target item. The approach we took prioritizes the approximately uniform sampling of possible target and distractor positions over equal trial numbers in the sub-conditions.

A limitation on balancing was that spatial terms could not be fully counterbalanced in those comparisons by reference side where the spatial term axis was orthogonal to the direct paths. We refer to “above” and “below” as having a vertical axis, insofar as these terms’ semantics presuppose a vertical displacement between the reference and the target item. Analogously, we refer to “left” and “right” as having a horizontal axis. The direct paths, on the other hand, were roughly vertical when the start marker was below the item arrays (Experiments 1, 3, and 4) and roughly horizontal when the start marker was to the left of the item arrays (Experiment 2). In trials where the direct path and the spatial term axis were roughly orthogonal to each other, the spatial term prescribed on which side of the direct path the reference item had to be located, because the target item had to match the spatial term. For instance, given a vertical direct path and the spatial term “left”, the reference item must be placed to the right of the direct path in order for the spatial term to hold. This coupling of spatial terms and reference sides entails that any effects of spatial terms and reference item placement will be confounded in the respective comparisons. This will be highlighted when discussing the affected results.

Statistical methods

Trajectory data were subjected to repeated-measures analyses in the form of paired-samples t tests (all experiments) and repeated measures analyses of variance (ANOVAs; Experiment 3). In both cases, separate tests were performed for the data at each of the 151 interpolated points.

The large number of tests gives rise to the question of how many significant results in direct succession correspond to overall significance of the difference between the compared series of data points. Due to the strong interdependence of successive data points in natural movement trajectories (Dale et al., 2007), traditional methods such as Bonferroni correction are not applicable. One view on this matter holds that sequences of statistical tests over movement trajectories should be considered as units that stand for a single comparison of whole trajectories, rejecting the need for alpha correction as long as the outcome of the comparison is presented and interpreted in its entirety (Gallivan & Chapman, 2014; Chapman, 2011). Many researchers in mouse tracking (e.g., Anderson et al., 2013; Bartolotti & Marian, 2012; Duran, Dale, & McNamara, 2010; Freeman et al., 2008; Scherbaum et al., 2015) have instead adopted a bootstrap approach (Efron & Tibshirani, 1993) first introduced by Dale et al. (2007). The method preserves the dependency between time steps and yields an empirical distribution of bootstrap replications over the maximum length of significant sequences. Based on a prespecified p value, a criterion for sequence length in the real data is derived beyond which the presence of an overall effect is assumed. In keeping with much of the mouse tracking literature, we adopted this approach as an additional indicator for the overall significance of sustained trajectory deviations. The method was implemented according to the description provided by Dale et al. (2007; see also Scherbaum et al., 2015). For each comparison we report, a separate criterion was computed based on 10,000 bootstrap replications of maximum sequence length, using the compared data as input to the bootstrap. The derived length criteria required for overall significance were based on p < 0.01. In the case of ANOVAs, a separate criterion was obtained for each main effect and interaction.
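The quantity that is compared against the bootstrap criterion, the longest sequence of successive significant time steps, can be extracted as in the following Python sketch. The bootstrap itself is not reproduced here, since its details are given in Dale et al. (2007). The function names are hypothetical, and expressing a step as the fraction step/151 of movement time is an assumption that appears consistent with the percentages reported in the Results.

```python
def longest_significant_run(p_values, alpha=0.01):
    """Return (start, end) as 1-based inclusive step indices of the
    longest run of p < alpha, or None if no step is significant."""
    best, start = None, None
    # A sentinel value of 1.0 closes a run that extends to the last step.
    for i, p in enumerate(list(p_values) + [1.0], start=1):
        if p < alpha and start is None:
            start = i
        elif p >= alpha and start is not None:
            if best is None or (i - start) > (best[1] - best[0] + 1):
                best = (start, i - 1)
            start = None
    return best


def percent_of_movement_time(step, n_steps=151):
    """Express a 1-based step index as a percentage of movement time
    (assumption: step / n_steps)."""
    return round(100.0 * step / n_steps, 2)
```

A run spanning steps 46 to 151, for instance, comprises 106 time steps and extends from 30.46 to 100% of movement time under this convention.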

Distributions of maximum curvature values were examined for signs of bimodality. This was done over all correct trials, including those excluded from the other analyses due to exceeding the curvature threshold and, if bimodality was observed in this full sample, also for the smaller set of trajectories with sharply curved ones excluded. We thereby sought to determine, first, whether two distinct populations of trials were at all discernible and, second, whether trials from both of these populations may still have affected the ultimately analyzed set of trajectories. Bimodality was assessed using Hartigan’s dip test (Hartigan & Hartigan, 1985; Hartigan, 1985) in the MATLAB implementation by Mechler (2002), testing the null hypothesis of unimodality against the alternative hypothesis of multimodality, with p values below 0.05 indicating bimodality. We used the dip test instead of the more widely used bimodality coefficient (SAS Institute, 2012) since the distributions of maximum curvature were skewed, which may lead to erroneous detection of bimodality by the bimodality coefficient (Pfister et al., 2013).

Finally, movement times were analyzed in an exploratory manner by comparing them between conditions in a way similar to the trajectories; details are provided in the experimental sections.

Experiment 1

The first experiment (see Footnote 1) tested whether attentional selection of a uniquely colored reference item and a distractor item during spatial language grounding affected the shape of mouse trajectories to the target. The target and the distractor were viewed as potential movement goals that must be disambiguated through grounding the spatial phrase. The distractor was therefore hypothesized to metrically attract the trajectories. The unique reference item was expected to be ruled out as a potential movement goal by the participants early on but was still expected to be attentionally selected in the grounding process due to its computational relevance. The reference item was therefore also hypothesized to attract mouse trajectories.

Methods

Participants

The 12 participants (five female, seven male) were 27.4 years (SD = 3.8 years) old on average and received €10 for participation.

Visual scenes

An annotated example display for Experiment 1 is shown in Fig. 6. Possible target positions in the visual scenes were located in a region of the fit function where fit was higher than 0.6, illustrated by the dotted red outline in Fig. 9a (only the fit function for the spatial term “left” is shown; scene creation and the resulting item positions were analogous for the other spatial terms). The spacing of the grid for target placement within that region was adjusted to obtain 16 possible target positions (red dots in Fig. 9b).

Fig. 9. (a) Regions eligible for target and distractor placement (red and green dotted lines, respectively) and one possible placement of target and distractor. (b) All possible target positions (red) and all possible distractor positions (green) for the target position that is marked with a cross. Circles illustrate the approximate item extent (maximum radius) and dots mark item centers.

A set of possible distractor positions was created separately for each of the 16 target positions. In each case, distractors could be placed in a region of the fit function where fit was higher than 0.4 and at least 0.03 lower than the fit value of the target position at hand (e.g., the green outline in Fig. 9a shows the distractor region for the annotated target and one possible distractor placement; in Fig. 9b green dots indicate all possible distractor positions for the target position marked with a white cross). Out of the resulting distractor positions one was used per trial, paired with the respective target position. Due to the dependency of the distractor region on target fit and position, its shape and size was different for each target position. In consequence, the number of distractor positions varied from 16 to 25 (mean 20.9) between target positions.
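The eligibility rule for distractor positions can be sketched in Python as below. The fit function itself is defined in Appendix A and is therefore passed in as a callable; `fit_fn`, the function names, and the list of candidate grid positions are hypothetical placeholders.

```python
def eligible_as_distractor(fit, target_fit, min_fit=0.4, min_gap=0.03):
    """True if a position with spatial-term fit `fit` may hold a distractor:
    fit above min_fit and at least min_gap below the target's fit."""
    return fit > min_fit and fit <= target_fit - min_gap


def distractor_positions(candidates, fit_fn, target_xy):
    """Filter candidate (x, y) positions, given relative to the reference
    item, down to those eligible for the given target position."""
    target_fit = fit_fn(*target_xy)
    return [xy for xy in candidates
            if xy != target_xy
            and eligible_as_distractor(fit_fn(*xy), target_fit)]
```

Because the upper bound depends on the fit value of the target position at hand, the set returned by `distractor_positions` differs between target positions, in line with the varying number of distractor positions described above.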

In a random subset of scenes (27%) one filler was given the same color as the target and the distractor, as an additional incentive to evaluate the spatial relation. The respective filler had to be located on the side of the reference item opposite to that denoted by the spatial term, so that it did not pose a qualitative match to the term, and it had to be separated from the reference item along the term’s axis (e.g., horizontally for “right”) by at least 28.3 mm (2.32° v.a.). The effect of this item was not specifically analyzed, but cursory analysis of the data without the trials including such an item showed that results were not markedly changed.

Together, there were 335 different configurations of target, reference, and distractor items for each of the four spatial terms. Each of these was used with each of the four possible target locations, leading to a total of 5360 trials. The trials were randomly assigned to the participants, so that each participant completed 446 trials and one completed eight more to use the entire trial set.

Analysis

Trajectory deviation from the direct path as a function of the elapsed proportion of movement time was used as the main dependent measure. Analyses focused on the factors reference side and distractor side, each with the two levels left and right (of the direct path). To assess the effect of reference side, three planned contrasts compared mean deviation between left and right conditions. One compared trajectories across spatial terms, one included only horizontal-axis spatial terms (“left” and “right”), and one included only vertical-axis spatial terms (“above” and “below”). The effect of distractor side was assessed with three analogous contrasts. Inflated type I error risk over these comparisons was addressed by choosing p < 0.01 for each t test (see Footnote 2).

Paired-samples t tests were used to compare movement times between distractor sides, between reference sides, and between spatial term axes. Also, movement time difference scores between distractor sides and between reference sides were compared between spatial term axes (i.e., between horizontal axis trials with the terms “left” or “right” and the vertical axis trials with the terms “above” or “below”). Due to the exploratory nature of these comparisons, each test used p < 0.05.

Results

When asked, participants reported not having noticed that possible target positions were restricted to four screen locations (some noted that targets tended to be located around the center area of the item arrays rather than in the outer regions). Movement onset was generally registered close to the center of the start marker (M = 2.14 mm, SD = 1.97 mm).

A total of 5245 trajectories was obtained (115 were lost due to technical issues). Of these, 5003 (95.39%) were below curvature threshold (per participant: M = 416.92, SD = 35.91, corresponding to M = 95.3%, SD = 2.76%). Of the non-curved trajectories, 90.17% (4511) were correct responses and thus entered further analysis (86.01% of all obtained trajectories). Participants achieved a mean accuracy of 90.18% (SD = 3.34%) and their mean movement time was 1073 ms (SD = 112 ms). The above numbers are based on simple averaging over the respective trial ensembles; mean data reported from here on are based on balanced means as described. Figure 10 shows the empirical distribution over maximum curvature values for all correct responses, with red bars indicating curvature above threshold (i.e., trajectories excluded from other analyses). For the distribution, Hartigan’s dip test indicated no bimodality (p > 0.05).

Fig. 10. Distribution of trajectories over maximum curvature values in Experiment 1. Red bars correspond to trajectories that were discarded due to high curvature. Only correct responses are shown.

The left side of Fig. 11 visualizes the results of comparisons by distractor side, where red and blue circles labeled ‘D’ in the top of each panel indicate distractor side for the correspondingly colored mean trajectory.

Fig. 11. Comparisons of mean deviation for Experiment 1. Solid red and blue lines show mean trajectory data, with red and blue circles labeled ‘D’ or ‘R’ in the top of the panels indicating distractor or reference side for the correspondingly colored trajectory. Transparent regions delimited by dashed lines indicate between-subjects standard deviation. Left color maps indicate p values at that time step, right ones indicate effect sizes. Black dotted lines on the left span time steps where differences were significant (p < 0.01).

Across spatial terms, trajectories diverged in a way consistent with a bias toward the distractor (Fig. 11a), with 106 successive time steps showing significant differences at p < 0.01, thus exceeding the bootstrap criterion (p < 0.01) of 18 time steps. The sequence of significant differences extended from 30.46 to 100% of movement time. For horizontal axis spatial terms, the bias toward the distractor was present as well (Fig. 11b), with 85 successive time steps showing significant differences at p < 0.01, exceeding the bootstrap criterion (p < 0.01) of eight time steps. The sequence of significant differences extended from 44.37 to 100% of movement time. Similarly, for vertical axis spatial terms, the bias toward the distractor was present (Fig. 11c), with 92 successive time steps showing significant differences at p < 0.01, exceeding the bootstrap criterion (p < 0.01) of 14 time steps. The sequence of significant differences extended from 39.74 to 100% of movement time.

The right side of Fig. 11 visualizes the results of the comparisons by reference side, where red and blue circles labeled ‘R’ in the top of each panel indicate reference side for the correspondingly colored mean trajectory. Across spatial terms, a mixture of two biases was visible (Fig. 11d). In the first half of movement time, trajectories diverged in a way consistent with a bias away from the reference item. This effect spanned 56 successive time steps with significant differences at p < 0.01, exceeding the bootstrap criterion (p < 0.01) of six time steps. For this effect, the sequence of significant differences extended from 1.32 to 37.75% of movement time. In the second half, trajectories diverged in a way consistent with a bias toward the reference. This effect spanned 54 successive time steps with significant differences at p < 0.01, likewise exceeding the bootstrap criterion (p < 0.01) of six time steps. For this effect, the sequence of significant differences extended from 64.9 to 100% of movement time.

For horizontal axis spatial terms (Fig. 11e), only the early divergence consistent with a bias away from the reference remained. Note that, due to the coupling of reference side and spatial term in trials with horizontal axis spatial terms, this bias is also congruent with movement in the direction described by the spatial term. The divergence was present over 64 successive time steps showing significant differences at p < 0.01, exceeding the bootstrap criterion (p < 0.01) of 34 time steps. The sequence of significant differences extended from 1.32 to 43.05% of movement time. For vertical axis spatial terms (Fig. 11f), in contrast, only the late divergence consistent with a bias toward the reference remained. The divergence was present over 103 successive time steps showing significant differences at p < 0.01, exceeding the bootstrap criterion (p < 0.01) of 21 time steps. The sequence of significant differences extended from 32.45 to 100% of movement time.
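The reported run lengths and percentages of movement time are mutually consistent if each trajectory comprises 151 normalized time steps and the position of a step is expressed as its 1-based index divided by 151. This step count is an inference from the reported numbers, not a value stated in this section. The following Python check, with illustrative names, makes the correspondence explicit under that assumption.

```python
N_STEPS = 151  # ASSUMPTION: inferred from the reported numbers, not stated in the text


def step_to_percent(step, n_steps=N_STEPS):
    """Position of a 1-based time step as a percentage of movement time."""
    return round(100.0 * step / n_steps, 2)


def run_length(first_step, last_step):
    """Number of time steps in an inclusive run."""
    return last_step - first_step + 1


# Each entry: (first step, last step, reported run length,
#              reported onset %, reported offset %).
# Step indices are back-computed under the assumption above.
REPORTED = {
    "Fig. 11a": (46, 151, 106, 30.46, 100.0),
    "Fig. 11b": (67, 151, 85, 44.37, 100.0),
    "Fig. 11c": (60, 151, 92, 39.74, 100.0),
    "Fig. 11d (early)": (2, 57, 56, 1.32, 37.75),
    "Fig. 11d (late)": (98, 151, 54, 64.9, 100.0),
    "Fig. 11e": (2, 65, 64, 1.32, 43.05),
    "Fig. 11f": (49, 151, 103, 32.45, 100.0),
}


def is_consistent(first, last, length, onset_pct, offset_pct):
    """Check one reported run against the assumed step grid."""
    return (run_length(first, last) == length
            and step_to_percent(first) == onset_pct
            and step_to_percent(last) == offset_pct)


results = {label: is_consistent(*row) for label, row in REPORTED.items()}
print(results)
```

Under this assumption, every reported run length matches its reported onset and offset percentages.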

Condition-specific movement times are listed in Table 1. The t tests on movement time data showed no significant impact of distractor side, reference side, or spatial term axis (ps > 0.05). Similarly, there was no significant impact of spatial term axis on movement time difference scores between distractor sides or reference sides (ps > 0.05).

Discussion

In the vast majority of trials, participants selected the target item, suggesting that the employed fit functions appropriately captured the spatial terms’ semantics. The majority of trajectories were smoothly curved, showing that motor responses were mostly subject to graded attraction, whereas decisions about motor targets may have been abruptly revised in only very few trials. Together with the absence of bimodality in the curvature distribution, this suggests that the motor responses were not governed by fundamentally different processes from trial to trial.

The mouse paths to the target item displayed biases in different directions. Three effects were observed. First, there was a distractor effect, which biased trajectories to the side of the direct path on which the distractor item was located. The effect was observed to comparable degrees when the target position relative to the reference item was specified by horizontal axis spatial terms (“left of” or “right of”) and when it was specified by vertical axis spatial terms (“above” or “below”). Its onset occurred after approximately a third of the total movement time. The distractor effect is in line with the notion that target and distractor were viewed as potential movement goals that must be disambiguated through grounding the spatial phrase, paralleling earlier studies where initial uncertainty over the ultimate movement goal was induced through other means such as delayed cuing (e.g., Chapman et al., 2014; Gallivan & Chapman, 2010).

Second, there was a reference effect, which consisted of trajectory attraction toward the side of the direct path where the reference item of the spatial phrase was located. In the mean data across spatial term axes, this effect was visible within the last third of movement time. In trials using the spatial terms “above” and “below”, its onset occurred after approximately a third of the total movement time, and the effect was considerably more pronounced, likely due to not being superimposed with an effect of the spatial term, as discussed below. An attraction to the reference item was not observed for horizontal axis spatial terms, which again was probably due to superimposition with an effect of the spatial term in these trials. That the reference effect was weaker in the across-spatial-term comparison is most likely attributable to the mixture of trials from each spatial term axis in that comparison, so that an average of the effect’s presence in vertical axis spatial terms and its absence in horizontal axis spatial terms was observed. Since the reference item could likely be ruled out as a potential movement goal through quick visual search, the reference effect suggests that its impact on trajectories was due to its involvement in the cognitive process of spatial language grounding. Note that if this was the case for the reference item, the same mechanism may have contributed to the distractor effect as well, beyond the distractor’s role as a potential action target.

Third, the spatial term effect was a bias with a very early onset, pointing in the direction described by the spatial term. It was visible only in the comparisons by reference side, and there only in the mean data across spatial terms and, more strongly and over a somewhat longer interval, in trials with horizontal axis spatial terms. In both cases, the spatial term effect occurred immediately after movement onset and remained observable over approximately 40% of the total movement time. The early onset suggests that participants were already moving in a direction congruent with the spatial term before the item array appeared. The effect thus cannot have resulted from the arrangement of visual items and cannot, for instance, reflect a repulsion from the reference item. Also, if the latter were the case, the effect should have been observable independent of the spatial term. Moreover, recall that the spatial term did not predict the absolute location of the target or its side in the display, since across trials each of the four target locations was paired an equal number of times with each spatial term. Thus, starting movement in the direction described by the spatial term would have been a less viable strategy to decrease target distance than simply starting movement in an upward direction as instructed. These considerations suggest that the spatial term effect can instead be attributed to the semantics of the spatial term, independent of cognitive strategies or visual stimulation. This interpretation is consistent with prior evidence about a biasing impact of cardinal direction prime words (e.g., “north”) on mouse trajectories (Tower-Richardi et al., 2012). The effect also bears similarities to a motor bias evoked by the directionality implied in sentences that were judged for sensibility (Zwaan et al., 2012) and similar embodiment effects (e.g., Glenberg & Kaschak, 2002).

It is unsurprising that the spatial term effect was observed only in the comparisons between reference sides and only for horizontal axis spatial terms (and less strongly in the across-spatial-term comparison). In trials with horizontal axis spatial terms, the side of the reference item relative to the direct path was coupled to the spatial term: When the reference item was on the left side, the spatial term was “right” and vice versa. Thus, in the comparisons by reference side for horizontal axis spatial terms each set of data included only one spatial term, so that its effect could systematically impact the mean trajectories. By contrast, in trials with vertical axis spatial terms reference sides and spatial terms were not coupled, so that possible biases in spatial term direction could not become visible in the balanced means. Such biases would furthermore have acted approximately parallel to the direct paths, making them unlikely to be observable in the deviation measures. In comparisons by distractor side, on the other hand, reference side was generally balanced in the compared means and thereby also any impact of the spatial terms. Regarding the across-spatial-term comparison by reference side, the lower strength and earlier offset of the spatial term effect likely stemmed from combining trials in which the effect was present with trials where it was absent. Finally, although the spatial term effect was generally observed only in the first half of the movements, we surmise that it in fact influenced trajectories over much of the movement time. This is based on the absence of a reference effect in the comparison by reference side for horizontal axis spatial terms, which at first seems difficult to reconcile with the notion that the reference effect was based on the involvement of the reference item in the grounding process. 
This notion can be retained, however, by assuming that the spatial term effect and the reference effect cancelled each other out in the second half of that comparison, so that neither effect became visible there.

Concerning the approximate latency of the observed effects, an unbiased picture can be gathered from those conditions where observed deviations were probably not mixtures of multiple effects; this includes all comparisons by distractor side and the comparison by reference side for vertical axis spatial terms. Apart from the spatial term effect, biases’ onsets occurred at approximately 30 to 40% of total movement time. Given an overall mean movement time of 1073 ms, this corresponds to an absolute temporal separation between scene onset and effect onset of approximately 400 ms (these numbers are deliberately kept vague and must be considered with care, as a possible covariation of absolute movement time and effect magnitude is not taken into account; for instance, if the effect estimate is dominated by trials with long movement times, then the absolute time between display and effect onset may be underestimated). These effect onset times are broadly consistent with the time required for visual search with color targets. For instance, Wolfe et al. (1990) found reaction times of approximately 500–600 ms for detecting a color target among up to 32 items in ten different colors (note that these reaction times include the motor response); search was highly efficient with minimal reaction time slopes over increasing item number. Together, this suggests that items in the scenes used in the experiments here could be distinguished very quickly via efficient visual search. It is thus likely that the observed effects were not affected by difficulties in finding the relevant items among fillers or distinguishing their different roles.
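The conversion from relative to absolute onset time is simple proportional arithmetic. The minimal Python sketch below assumes that the overall mean movement time applies uniformly to all trials, which is exactly the simplification that the caveat above warns about; the function name is illustrative.

```python
MEAN_MOVEMENT_TIME_MS = 1073  # overall mean movement time reported for Experiment 1


def onset_ms(fraction, movement_time_ms=MEAN_MOVEMENT_TIME_MS):
    """Absolute onset time for an effect starting at `fraction` of movement time."""
    return fraction * movement_time_ms


earliest = onset_ms(0.30)  # onset at 30% of movement time
latest = onset_ms(0.40)    # onset at 40% of movement time

print(round(earliest), round(latest))
```

The resulting range of roughly 320 to 430 ms is the basis for the rounded figure of approximately 400 ms.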

The claim that the mere involvement of visual items in the grounding process causes motor biases hinges on the reference effect. Consolidating the findings of Experiment 1 thus requires showing that the reference effect is indeed universal across spatial terms and not a peculiarity of “above” and “below” or of the specific response metrics afforded by the task. This is further examined in Experiment 2.

Experiment 2

The main goal of Experiment 2 (Footnote 3) was to confirm that the reference effect is universal across spatial terms and response metrics. We conjectured that its absence for horizontal axis spatial terms in Experiment 1 was due to its overlap with the spatial term effect, not due to a complete absence of an impact of the reference item. In addition, Experiment 2 aimed to replicate the distractor effect seen in Experiment 1 using different response metrics.

The paradigm was largely analogous to Experiment 1. The main difference was that responses were made along a roughly horizontal rather than vertical axis, from the start marker on the left side of the screen to targets on the right side of the screen. Compared to Experiment 1, this resulted in a switched relationship between the principal movement direction and the spatial term axes: The axis of the terms “left” and “right” was now approximately parallel to the direct paths and the axis of the spatial terms “above” and “below” was approximately orthogonal to the direct paths. In consequence, the side of the reference item relative to the direct path was now coupled to the vertical axis spatial terms (left side for “below”, right side for “above”) while this coupling was removed for the horizontal axis spatial terms.

It was hypothesized that all effects from Experiment 1 would occur in an analogous manner in Experiment 2, with partly reversed couplings of spatial term axes and effects. The distractor effect was hypothesized to occur equally for both spatial term axes. The spatial term effect was hypothesized to be observable for vertical axis spatial terms but not for horizontal axis spatial terms. Conversely, the reference effect was hypothesized to be observable for horizontal axis spatial terms, but not for vertical axis spatial terms. In other words, the observable signatures of the reference effect and the spatial term effect were expected to be switched between spatial term axes compared to Experiment 1. This rested on the assumption that the two biases would cancel each other out in trajectory portions where both were present, as conjectured based on the results of Experiment 1. This hypothesized pattern of results would argue for the generality of both the reference effect and the spatial term effect over spatial term axes and response axes as well as confirm the robustness of the distractor effect.

Methods

Participants

The 24 participants (15 female, nine male) were 25 years (SD = 4.1 years) old on average and received €10 for participation.

Visual scenes

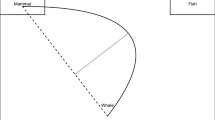

An annotated example display for Experiment 2 is shown in Fig. 12. The arrangement differed from that in Experiment 1 only by clockwise rotation around the screen center by 90 degrees. The extent of the different components and the distances between them were unchanged.
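The relation between the two display layouts can be illustrated with a short coordinate transform. The Python sketch below assumes pixel coordinates with the y axis pointing downward and uses a hypothetical screen center and hypothetical item positions; none of these values are taken from the experiment.

```python
import math


def rotate_cw_90(point, center):
    """Rotate `point` by 90 degrees clockwise (as seen on screen) around `center`.

    ASSUMPTION: screen coordinates with x to the right and y downward.
    """
    x, y = point
    cx, cy = center
    dx, dy = x - cx, y - cy
    return (cx - dy, cy + dx)


center = (960, 540)         # hypothetical screen center in pixels
start_marker = (960, 940)   # hypothetical start marker below the center
target = (960, 140)         # hypothetical target above the center

rotated_start = rotate_cw_90(start_marker, center)
rotated_target = rotate_cw_90(target, center)

print(rotated_start, rotated_target)
print(math.dist(start_marker, target), math.dist(rotated_start, rotated_target))
```

A start marker below the center ends up to its left and a target above the center ends up to its right, while the distance between them is unchanged, matching the left-to-right response axis and the unchanged extents described for Experiment 2.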

Fig. 12. Display configuration in Experiment 2. The item array corresponds to the spatial phrase “The green item above the red item”. T denotes the target, D the distractor, and R the reference item. See text for details.