Abstract

Background

We aimed to develop a set of quality indicators for patients with traumatic brain injury (TBI) in intensive care units (ICUs) across Europe and to explore barriers and facilitators for implementation of these quality indicators.

Methods

A preliminary list of 66 quality indicators was developed, based on current guidelines, existing practice variation, and clinical expertise in TBI management at the ICU. Eight TBI experts of the Advisory Committee preselected the quality indicators during a first Delphi round. A larger Europe-wide expert panel was recruited for the next two Delphi rounds. Quality indicator definitions were evaluated on four criteria: validity (better performance on the indicator reflects better processes of care and leads to better patient outcome), feasibility (data are available or easy to obtain), discriminability (variability in clinical practice), and actionability (professionals can act based on the indicator). Experts scored indicators on a 5-point Likert scale delivered by an electronic survey tool.

Results

The expert panel consisted of 50 experts from 18 countries across Europe, mostly intensivists (N = 24, 48%) and neurosurgeons (N = 7, 14%). Experts agreed on a final set of 42 indicators to assess quality of ICU care: 17 structure indicators, 16 process indicators, and 9 outcome indicators. Experts were motivated to implement the final proposed set (N = 49, 98%) and indicated routine measurement in registries (N = 41, 82%), benchmarking (N = 42, 84%), and quality improvement programs (N = 41, 82%) as future steps. Administrative burden was indicated as the most important barrier for implementation of the indicator set (N = 48, 98%).

Conclusions

This Delphi consensus study gives insight into which quality indicators have the potential to improve quality of TBI care at European ICUs. The proposed quality indicator set is recommended for use across Europe for registry purposes to gain insight into current ICU practices and outcomes of patients with TBI. This indicator set may become an important tool to support benchmarking and quality improvement programs for patients with TBI in the future.

Background

Traumatic brain injury (TBI) causes an enormous health and economic burden around the world [1]. Patients with moderate and severe TBI are at high risk for poor outcomes and often require intensive care unit (ICU) admission. In these patients, evidence-based treatment options are scarce and large differences in outcome and daily ICU practice exist [2,3,4,5].

Research to establish more evidence-based and thereby uniform treatment policies for patients with TBI has high priority. Still, breakthrough intervention strategies are scarce [6] and guideline recommendations remain limited. Therefore, new strategies, such as precision medicine and routine quality measurement, are being explored to drive research and clinical practice forward [1]. Routine quality measurement using appropriate indicators can guide quality improvement, for example, through identifying best practices and internal quality improvement initiatives. The potential of quality indicators to improve care has already been demonstrated in other clinical areas [7], in other ICU populations like sepsis [8] or stroke patients [9], and in children with TBI [10, 11].

However, there are also examples of quality indicators that do not positively affect the quality of care. This may be for various reasons, such as lack of validity and reliability, poor data quality, or lack of support by clinicians [12,13,14]. Deploying poor indicators has opportunity costs due to administrative burden while distorting healthcare priorities. An evaluation of a putative quality indicator is inherently multidimensional, and when used to identify best practice or benchmark hospitals, validity and reliability and uniform definitions are all equally important [15, 16].

Although some quality indicator sets for the general ICU exist [17, 18], there are no consensus-based quality indicators specific for the treatment of adult patients with TBI. Delphi studies have been proposed as a first step in the development of quality indicators [19]. The systematic Delphi approach gathers information from experts in different locations and fields of expertise to reach group consensus without groupthink [19], an approach which aims to ensure a breadth of unbiased participation.

The aim of this study was to develop a consensus-based European quality indicator set for patients with TBI at the ICU and to explore barriers and facilitators for implementation of these quality indicators.

Methods

This study was part of the Collaborative European NeuroTrauma Effectiveness Research in Traumatic Brain Injury (CENTER-TBI) project [20].

An Advisory Committee (AC) was convened, consisting of 1 neurosurgeon (AM), 3 intensivists (MJ, DM, GC), 1 emergency department physician (FL), and 3 TBI researchers (HL, ES, LW) from 5 European countries. The AC’s primary goals were to provide advice on the recruitment of the Delphi panel, to monitor the Delphi process, and to interpret the final Delphi results. During a face-to-face meeting (September 2017), the AC agreed that the Delphi study would initially be restricted to Europe, recruit senior professionals as members of the Delphi panel, and focus on the ICU. The restriction to a European rather than a global set was motivated by substantial continental differences in health funding systems, health care costs, and health care facilities. The set was targeted to be generalizable for the whole of Europe and therefore included European Delphi panelists. The AC agreed to target senior professionals as Delphi panelists as they were expected to have more specialized and extensive clinical experience with TBI patients at the ICU. The AC decided to focus the indicator set on ICU practice, since ICU mortality rates are high (around 40% in patients with severe TBI [21]), large variation in daily practice exists [2,3,4,5, 22], and detailed data collection is generally more feasible in the ICU setting due to available patient data management systems or electronic health records (EHRs). We focused on adult patients with TBI.

Delphi panel

The AC identified 3 stakeholder groups involved in ICU quality improvement: (1) clinicians (physicians and nurses) primarily responsible for ICU care, (2) physicians from specialties other than intensive care medicine who are regularly involved in the care of patients with TBI at the ICU, and (3) researchers/methodologists in TBI research. It was decided to exclude managers, auditors, and patients as stakeholders, since the completion of the questionnaires required specific clinical knowledge. Prerequisites to participate were a minimum professional experience of 3 years at the ICU or in TBI research. Stakeholders were recruited from the personal network of the AC (also through social media), among the principal investigators of the CENTER-TBI study (contacts from more than 60 NeuroTrauma centers across 22 countries in Europe) [20], and from a European publication on quality indicators at the ICU [18]. These experts were asked to provide additional contacts with sufficient professional experience.

Preliminary indicator set

Before the start of the Delphi process, a preliminary set of quality indicators was developed by the authors and the members of the AC, based on international guidelines (Brain Trauma Foundation [23] and Trauma Quality Improvement Program guidelines [24]), ICU practice variation [3,4,5], and clinical expertise (Additional file 1: Questionnaire round 1). Quality indicators were categorized into structure, process, and outcome indicators [25]. Overall, due to the absence of high-quality evidence on which thresholds to use in TBI management, we refrained from formulating quality indicators in terms of thresholds. For example, we did not use specific carbon dioxide (CO2) or intracranial pressure (ICP) thresholds to define quality indicators for ICP-lowering treatments.

Indicator selection

The Delphi was conducted using online questionnaires (Additional files 1, 2, and 3). In the first round, the AC rated the preliminary quality indicators on four criteria: validity, discriminability (to distinguish differences in center performance), feasibility (regarding data collection required), and actionability (to provide clear directions on how to change TBI care or otherwise improve scores on the indicator) [26,27,28,29,30] (Table 1). We used a 5-point Likert scale varying from strongly disagree (1) to strongly agree (5). Additionally, an “I don’t know” option was provided to capture uncertainty. Agreement was defined as a median score of 4 (agreement) or 5 (strong agreement) on all criteria. Disagreement was defined as a median score below 4 on at least one of the four criteria [31, 32]. Consensus was defined as an interquartile range (IQR) ≤ 1 (strong consensus) on validity—since validity is considered the key characteristic for a useful indicator [19]—and IQR ≤ 2 (consensus) on the other criteria [31, 32]. Criteria for rating the indicators and definitions of consensus remained the same during all rounds. The AC was able to give recommendations for indicator definitions at the end of the questionnaire. Indicators were excluded for the second Delphi round when there was consensus on disagreement on at least one criterion, unless important comments for improvement of the indicator definition were made. Such indicators with improved definitions were rerated in the next Delphi round.
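The agreement and consensus definitions above can be expressed as a short computation. The following Python sketch is illustrative only and is not the analysis code used in the study; the function names, the data layout, and the quantile method (linear interpolation between order statistics) are assumptions, since the text does not state how quartiles were computed. "I don't know" answers are represented as `None` and dropped before scoring.

```python
from statistics import median, quantiles

CRITERIA = ("validity", "feasibility", "discriminability", "actionability")

# IQR limits taken from the text: <= 1 on validity, <= 2 on the other criteria.
IQR_LIMITS = {
    "validity": 1,
    "feasibility": 2,
    "discriminability": 2,
    "actionability": 2,
}


def interquartile_range(scores):
    """IQR with linear interpolation (an assumed quantile method)."""
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    return q3 - q1


def rate_indicator(ratings):
    """Return (agreement, consensus) for one indicator.

    `ratings` maps each criterion to a list of Likert scores (1-5).
    None stands for an "I don't know" answer and is treated as missing.
    Each criterion needs at least two valid scores.
    """
    agreement = True
    consensus = True
    for criterion in CRITERIA:
        scores = [s for s in ratings[criterion] if s is not None]
        # Agreement requires a median of 4 or higher on every criterion.
        if median(scores) < 4:
            agreement = False
        # Consensus requires the IQR to stay within the criterion's limit.
        if interquartile_range(scores) > IQR_LIMITS[criterion]:
            consensus = False
    return agreement, consensus


# Hypothetical ratings from five or six panelists, for illustration only.
example = {
    "validity": [4, 4, 5, 5, 4, None],
    "feasibility": [4, 5, 4, 4, 5],
    "discriminability": [4, 4, 4, 5, 3],
    "actionability": [5, 4, 4, 4, 4],
}
print(rate_indicator(example))
```

In this reading, "disagreement" is simply the complement of agreement (a median below 4 on at least one criterion), and consensus is evaluated across all four criteria together.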

In the second round, the remaining indicators were sent to a larger group of experts. The questionnaire started with a description of the goals of the study, and some characteristics of experts were asked. Experts had the possibility to adapt definitions of indicators at the end of a group of indicators on a certain topic (domain). Indicators were included in the final set when there was agreement and consensus, excluded when there was disagreement and consensus, and included in the next round when no consensus was reached or important comments to improve the indicator definitions were given. As many outcome scales exist for TBI, like the Glasgow Outcome Scale - Extended (GOSE), Coma Recovery Scale - Revised (CRS-R), and Rivermead Post-Concussion Symptoms Questionnaire (RPQ), a separate ranking question was used to determine which outcome scales were preferred (or most important) to use as outcome indicators, in order to avoid an extensive outcome indicator set (Additional file 2, question outcome scales). Outcome scales that received the highest ratings (top 3) were selected for round 3 and rated as described above. Finally, exploratory questions were asked for which goals or reasons experts would implement the quality indicators. We only selected experts for the final round that completed the full questionnaire.
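The round 2 routing rule described above can be sketched as a small decision function. This is an illustration under stated assumptions rather than the study's procedure: the function name and return labels are hypothetical, and the precedence given to "important comments" over direct inclusion or exclusion is an assumption, because the text does not say which rule wins when both apply.

```python
def round2_decision(agreement, consensus, important_comments=False):
    """Route one indicator after round 2.

    agreement: median >= 4 on all four criteria.
    consensus: IQR within the limits on all four criteria.
    important_comments: panelists suggested an improved definition.
    """
    # Assumption: no consensus, or a suggested redefinition, sends the
    # indicator to round 3 regardless of the agreement result.
    if not consensus or important_comments:
        return "re-rate in round 3"
    if agreement:
        return "include in final set"
    return "exclude"


for case in [(True, True, False), (False, True, False), (True, False, False)]:
    print(case, "->", round2_decision(*case))
```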

In the last round, the expert panel was permitted only to rate the indicators, but could not add new indicators or suggest further changes to definitions. Experts received both qualitative and quantitative information on the rating of indicators (medians and IQRs) from round 2 for each individual indicator. Indicators were included in the final set if there was both agreement and consensus. Final exploratory questions were asked regarding the barriers and facilitators for implementation of the indicator set. For each Delphi round, three automated reminder emails and two personal reminders were sent to the Delphi participants to ensure a high response rate.

Statistical analysis

Descriptive statistics (median and interquartile range) were calculated to determine which indicators were selected for the next round and to present quantitative feedback (median and min-max rates) in the third Delphi round. “I don’t know” was coded as missing. A sensitivity analysis after round 3 was performed to determine the influence of experts from Western Europe compared with other European regions on indicator selection (in- or exclusion in the final set). Statistical analyses were performed using the R statistical language [33]. Questionnaires were developed using open-source LimeSurvey software [34]. In LimeSurvey, multiple online questionnaires can be developed (and sent by email), the response rates can be tracked, and questionnaire scores or responses can easily be exported to a statistical program.
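As an illustration of the descriptive statistics and the regional sensitivity analysis, the sketch below summarizes one criterion's scores and checks whether the selection rule gives a different result for Western European experts than for experts from other regions. The study's analyses were run in R and the exact comparison method is not reported, so everything here (function names, the `(region, score)` data layout, the quantile method, and the idea of flagging an indicator when the two subgroups diverge) is an assumption made for illustration.

```python
from statistics import median, quantiles


def describe(scores):
    """Median, IQR, and range of Likert scores; None ("I don't know") is missing."""
    valid = sorted(s for s in scores if s is not None)
    q1, _, q3 = quantiles(valid, n=4, method="inclusive")
    return {
        "n": len(valid),
        "median": median(valid),
        "iqr": q3 - q1,
        "min": valid[0],
        "max": valid[-1],
    }


def passes(scores, iqr_limit):
    """Agreement (median >= 4) and consensus (IQR <= limit) on one criterion."""
    summary = describe(scores)
    return summary["median"] >= 4 and summary["iqr"] <= iqr_limit


def selection_differs(responses, iqr_limit):
    """True when Western Europe and the other regions reach different results.

    `responses` is a list of (region, score) pairs for one criterion.
    """
    west = [score for region, score in responses if region == "Western Europe"]
    other = [score for region, score in responses if region != "Western Europe"]
    return passes(west, iqr_limit) != passes(other, iqr_limit)


# Hypothetical validity ratings (IQR limit of 1), for illustration only.
validity = [
    ("Western Europe", 5), ("Western Europe", 4),
    ("Western Europe", 4), ("Western Europe", 5),
    ("Western Europe", None),
    ("Northern Europe", 3), ("Southern Europe", 2),
    ("Eastern Europe", 4), ("Southern Europe", 3),
]
print(describe([score for _, score in validity]))
print(selection_differs(validity, iqr_limit=1))
```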

Results

Delphi panel

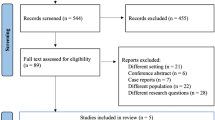

The Delphi rounds were conducted between March 2018 and August 2018 (Fig. 1). Approximately 150 experts were invited for round 2, and 50 experts from 18 countries across Europe responded (≈33%). Most were intensivists (N = 24, 48%), followed by neurosurgeons (N = 7, 14%), neurologists (N = 5, 10%), and anesthesiologists (N = 5, 10%) (Table 2). Most of the experts reported 15 or more years of experience with patients with TBI at the ICU or another department (N = 25, 57%). Around half of the experts indicated that they had primary responsibility for the daily practical care of patients with TBI at the ICU (N = 21, 47%). Experts were employed in 37 centers across 18 European countries, mostly in Western Europe (N = 26, 55%). Most experts were from academic (N = 37, 84%) trauma centers in an urban location (N = 44, 98%). Almost all experts indicated the availability of EHRs in their ICU (N = 43, 96%). Thirty-one experts (63%) participated in the CENTER-TBI study. The response rate in round 3 was 98% (N = 49).

Fig. 1 Overview of the Delphi process: time frame, experts’ involvement, and indicator selection; *8 indicators were removed based on the sensitivity analyses. The left side of the figure shows the number of indicators that were removed after disagreement and consensus with no comments to improve definitions. In addition, the number of changed indicator definitions is shown. The right side of the figure shows the number of newly proposed indicators (that were rerated in the next Delphi round) and the number of indicators that were included in the final indicator set. After round 2, 17 indicators were included in the final set (and removed from the Delphi process), and after round 3, 25 indicators were included in the final set, for a total of 42 indicators. Agreement was defined as a median score of 4 (agreement) or 5 (strong agreement) on all four criteria (validity, feasibility, discriminability, and actionability) to select indicators. Disagreement was defined as a median score below 4 on at least one of the four criteria. Consensus was defined as an interquartile range (IQR) ≤ 1 (strong consensus) on validity, since validity is considered the key characteristic for a useful indicator [19], and IQR ≤ 2 (consensus) on the other criteria.

Indicator selection

The first Delphi round started with 66 indicators (Fig. 1). In round 1, 22 indicators were excluded. The main reason for exclusion was poor agreement (median < 4) on all criteria except discriminability (Additional file 4). Round 2 started with 46 indicators; 17 were directly included in the final set and 7 were excluded, mainly due to poor agreement (median < 4) on actionability and poor consensus (IQR > 1) on validity. Round 3 started with 40 indicators; 25 indicators were included in the final set. Exclusion of 8 indicators was based on the sensitivity analysis (no consensus in Western Europe versus other European regions), and 7 indicators were excluded because of low agreement on actionability or no consensus on validity or actionability. During the full Delphi process, 20 new indicators were proposed, and 30 definitions were discussed and/or modified.

The final quality indicator set consisted of 42 indicators on 13 clinical domains (Table 3), including 17 structure indicators, 16 process indicators, and 9 outcome indicators. For the domains “precautions ICP monitoring,” “sedatives,” “osmotic therapies,” “seizures,” “fever,” “coagulopathy,” “respiration and ventilation,” and “red blood cell policy,” no indicators were included in the final set.

Experts proposed changing the names of the “short-term outcomes” and “long-term outcomes” domains to “in-hospital outcomes” and “after discharge or follow-up outcomes.” In round 2, the Glasgow Outcome Scale - Extended (GOSE), quality of life after brain injury (Qolibri), and short form health survey (SF-36) were rated the best outcome scales. However, the Qolibri was excluded in round 3 as an outcome indicator, since there was no consensus in the panel on its validity to reflect the quality of ICU care. The largest group of experts (N = 14, 28%) indicated that the outcome scales should be measured at 6 months, closely followed by experts who indicated both 6 and 12 months (N = 13, 26%).

Barriers and facilitators for implementation

Almost all experts indicated that the indicator set should be used in the future (N = 49, 98%). One expert did not believe an indicator set should be used at all, because it would poorly reflect the quality of care (N = 1, 2%).

The majority of experts indicated that the set could be used for registry purposes (N = 41, 82%), assessment of adherence to guidelines (N = 35, 70%), and quality improvement programs (N = 41, 82%). Likewise, the majority of experts indicated that the indicator set could be used for benchmarking purposes (N = 42, 84%), both within and between centers. Pay for performance was rarely chosen as a future goal (N = 3, 6%). Almost all experts indicated administrative burden as a barrier (N = 48, 98%). Overall, experts endorsed facilitators more than barriers for implementation (Fig. 2).

Fig. 2 Facilitators or barriers for implementation of the quality indicator set. Percentage of experts that indicated a certain facilitator or barrier for implementation of the quality indicator set. The other indicated facilitator was “create meaningful uniform indicators.” Other indicated barriers were “gaming” (N = 1, 2%) and “processes outside of ICU (e.g., rehabilitation) are hard to query.” *Participation in trauma quality improvement program

Discussion

Main findings

This three-round European Delphi study including 50 experts resulted in a quality indicator set of 42 indicators with a high level of consensus on validity, feasibility, discriminability, and actionability, representing 13 clinical domains for patients with TBI at the ICU. Experts indicated multiple facilitators for implementation of the total set, while the main barrier was the anticipated administrative burden. The selection of indicators during the Delphi process gave insight into which quality indicators were perceived as important to improve the quality of TBI care. In addition, the indicator definitions evolved during the Delphi process, leading to a final set of indicators that are understandable and easy to interpret for (clinical) experts. This set serves as a starting point to gain insight into current ICU care for TBI patients, and after empirical validation, it may be used for quality measurement and improvement.

Our Delphi resulted in 17 structure indicators, 16 process indicators, and 9 outcome indicators. A large number of structure indicators already reached consensus after round 2; this might reflect that these were more concise indicators. However, during the rounds, definitions for process indicators became more precise and specific. Process indicators must be evidence-based before best practices can be determined: this might also explain why important domains with indicators on daily care in TBI (such as decompressive craniectomy, osmotic therapies, and respiration and ventilation management) did not reach consensus in our Delphi study. Structure, process, and outcome indicators have their own advantages and disadvantages. For example, process indicators tend to be inherently actionable as compared to structure and outcome indicators, yet outcome indicators are more relevant to patients [35]. Most indicators were excluded from the set due to low agreement and lack of consensus on actionability and validity: this indicates that experts highly valued the practicality and usability of the set and were strict on selecting only those indicators that might improve patient outcome and processes of care. Overall, the complete set comprises all different types of indicators.

Existing indicators

Some national ICU registries already exist [17], and in 2012, a European ICU quality indicator set for general ICU quality was developed [18]. In addition, several trauma databanks already exist [36, 37]. The motivations for selection (or rejection) of indicators in our study can contribute to the ongoing debate on which indicators to collect in these registries. For example, length of stay is often used as an outcome measure in current registries, but the Delphi panel commented that determination of the length of stay is debatable as an indicator, since hospital structures differ (e.g., step-down units are not standard), and admission length can be confounded by (ICU) bed availability. Although general ICU care is essential for TBI, not all general ICU or trauma indicators are applicable in exactly the same way for TBI. For example, individualized deep venous thrombosis prophylaxis management in TBI is a priority in view of the risk of progressive brain hemorrhage in contrast to other ICU conditions (e.g., sepsis). Therefore, our TBI-specific indicator set might form a useful addition to current registries.

Strength and limitations

This study has several strengths and limitations. No firm rules exist on how to perform a Delphi study in order to develop quality indicators [19]. Therefore, we extensively discussed the methodology and determined strategies with the Advisory Committee. Although the RAND/UCLA Appropriateness Method recommends a panel meeting [38], no group discussion took place in our study to avoid overrepresentation of strong voices and for reasons of feasibility. However, experts received both qualitative and quantitative information on the rating of indicators to gain insight into the thinking process of the other panel members. Considering the preliminary indicator set, we used the guidelines [23, 24] as a guide to which topics should be included and not as an evidence base. Considering the Delphi panel, the success of indicator selection depends on the expertise of invited members: we assembled a large network of 50 experts from 18 countries across Europe with various professional backgrounds. All participants can be considered as established experts in the field of TBI research and/or daily clinical practice (around 70% of experts had more than 10 years of ICU experience). However, more input from some key stakeholders in the quality of ICU care, such as rehabilitation physicians, nurses and allied health practitioners, health care auditors, and TBI patients, would have been preferable. We had only three rehabilitation experts on our panel, and increased input from this group of professionals would have been valuable, since they are increasingly involved in the care of patients even at the ICU stage. A number of nurses were invited, but none responded, possibly due to a low invitation rate. This is a severe limitation since nurses play a key role in ICU quality improvement and quality indicator implementation [39, 40]. Therefore, future studies should put more effort into involving nurses in quality indicator development. Experts were predominantly from Western Europe. Therefore, we performed sensitivity analyses for Western Europe and removed indicators with significant differences compared with other regions to obtain a set generalizable for Europe. The restriction to a European rather than a global set was motivated by substantial continental differences in health funding systems, health care costs, and health care facilities. Finally, some of the responses may have been strongly influenced by familiarity with measures (e.g., SF-36 was selected instead of Qolibri) rather than solely reflecting the value of the measure per se.

Use and implementation

Quality indicators may be used for the improvement of care in several ways. First, registration of indicator data itself will make clinicians and other stakeholders aware of their center or ICU performance, as indicators will provide objective data on care instead of perceived care. Second, as the evidence base for guidelines is often limited, this indicator set could support refinement of guideline recommendations. This was shown in a study by Vavilala et al., where guideline-derived indicators for the acute care of children with TBI were collected from medical records and were associated with improved outcome [10]. Third, quality indicators can be used to guide and to inform quality improvement programs. One study showed that a TBI-specific quality improvement program was effective, demonstrating lower mortality rates after implementation [41]. Fourth, (international) benchmarking of quality indicators will facilitate discussion between (health care) professionals and direct attention towards suboptimal care processes [17]. Future benchmarking across different hospitals or countries requires advanced statistical analyses, such as random effect regression models, to account for random variation and to correct for case-mix. To perform such benchmarking, case-mix variables must be collected, like in general ICU prognostic models or the TBI-specific prognostic models, such as IMPACT and CRASH [42, 43].
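To illustrate why random effects help when benchmarking, the sketch below applies the shrinkage that a random-intercept model implies for a center-level estimate: estimates from centers with large standard errors (typically small centers) are pulled more strongly toward the overall average, which dampens differences that are due to chance. This is a simplified illustration and not an analysis from this study. It assumes that a case-mix-adjusted estimate per center (for example, a log odds difference from the average), its standard error, and the between-center variance `tau2` are already available; all names and numbers are hypothetical.

```python
def shrink(estimate, standard_error, tau2, overall=0.0):
    """Shrink a center's estimate toward the overall mean.

    weight = tau2 / (tau2 + standard_error**2) is the share of the
    observed deviation attributed to true between-center variation.
    """
    if tau2 < 0 or standard_error < 0:
        raise ValueError("variance and standard error must be non-negative")
    total = tau2 + standard_error ** 2
    if total == 0:
        # No between-center variance and no sampling error: nothing to shrink.
        return estimate
    weight = tau2 / total
    return overall + weight * (estimate - overall)


# Hypothetical centers: (case-mix-adjusted estimate, standard error).
centers = {"large center": (0.40, 0.10), "small center": (0.40, 0.60)}
for name, (estimate, standard_error) in centers.items():
    print(name, round(shrink(estimate, standard_error, tau2=0.09), 3))
```

In practice, the estimates, standard errors, and between-center variance would come from a fitted mixed-effects model that includes the case-mix variables, rather than being supplied by hand.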

A quality indicator set is expected to be dynamic: ongoing large international studies will further shape the quality indicator set. This is also reflected in the “retirement” of indicators over time (when 90–100% adherence is reached). Registration and use of the quality indicators will provide increasing insight into their feasibility and discriminability and provide the opportunity to study their validity and actionability. Such empirical testing of the set will probably reveal that not all indicators meet the required criteria and thus will reduce the number of indicators in the set, which is desirable, as the set is still quite extensive. For now, based on the dynamic nature of the set and ongoing TBI studies, we recommend using this consensus-based quality indicator set for registry purposes, to gain insight (over time) into current care, and not for changing treatment policies. Therefore, we recommend regarding this consensus-based quality indicator set as a starting point in need of further validation, before broad implementation can be recommended. Such validation should seek to establish whether adherence to the quality indicators is associated with better patient outcomes.

To provide feedback on clinical performance, new interventions are being explored to further increase the effectiveness of indicator-based performance feedback, e.g., direct electronic audit and feedback with suggested action plans [44]. A single (external) organization for data collection could enhance participation of multiple centers. International collaborations must be encouraged and further endorsement by scientific societies seems necessary before large-scale implementation is feasible. When large-scale implementation becomes global, there is an urgent need to develop quality indicators for low-income countries [36, 45]. An external organization for data collection could also reduce the administrative burden for clinicians. This is a critical issue, since administrative burden was indicated as the main barrier for implementation of the whole indicator set, although experts agreed on the feasibility of individual indicators. In the future, automatic data extraction might be the solution to overcome the administrative burden.

Conclusion

This Delphi consensus study gives insight into which quality indicators have the potential to improve quality of TBI care at European ICUs. The proposed quality indicator set is recommended for use across Europe for registry purposes to gain insight into current ICU practices and outcomes of patients with TBI. This indicator set may become an important tool to support benchmarking and quality improvement programs for patients with TBI in the future.

Abbreviations

- AC: Advisory Committee
- CENTER-TBI study: Collaborative European NeuroTrauma Effectiveness Research in Traumatic Brain Injury study
- CRS-R: Coma Recovery Scale - Revised
- EHR: Electronic health records
- GOSE: Glasgow Outcome Scale - Extended
- ICP: Intracranial pressure
- ICU: Intensive care unit
- IQR: Interquartile range
- Qolibri: Quality of life after brain injury
- RPQ: Rivermead Post-Concussion Symptoms Questionnaire
- SF-36: Short form health survey
- TBI: Traumatic brain injury

References

Maas AIR, Menon DK, Adelson PD, Andelic N, Bell MJ, Belli A, Bragge P, Brazinova A, Buki A, Chesnut RM, et al. Traumatic brain injury: integrated approaches to improve prevention, clinical care, and research. Lancet Neurol. 2017;16(12):987–1048.

Lingsma HF, Roozenbeek B, Li B, Lu J, Weir J, Butcher I, Marmarou A, Murray GD, Maas AI, Steyerberg EW. Large between-center differences in outcome after moderate and severe traumatic brain injury in the international mission on prognosis and clinical trial design in traumatic brain injury (IMPACT) study. Neurosurgery. 2011;68(3):601–7 discussion 607–608.

Huijben JA, Volovici V, Cnossen MC, Haitsma IK, Stocchetti N, Maas AIR, Menon DK, Ercole A, Citerio G, Nelson D, et al. Variation in general supportive and preventive intensive care management of traumatic brain injury: a survey in 66 neurotrauma centers participating in the Collaborative European NeuroTrauma Effectiveness Research in Traumatic Brain Injury (CENTER-TBI) study. Crit Care. 2018;22(1):90.

Cnossen MC, Huijben JA, van der Jagt M, Volovici V, van Essen T, Polinder S, Nelson D, Ercole A, Stocchetti N, Citerio G, et al. Variation in monitoring and treatment policies for intracranial hypertension in traumatic brain injury: a survey in 66 neurotrauma centers participating in the CENTER-TBI study. Crit Care. 2017;21(1):233.

Huijben JA, van der Jagt M, Cnossen MC, Kruip M, Haitsma IK, Stocchetti N, Maas AIR, Menon DK, Ercole A, Maegele M, et al. Variation in blood transfusion and coagulation management in traumatic brain injury at the intensive care unit: a survey in 66 neurotrauma centers participating in the Collaborative European NeuroTrauma Effectiveness Research in Traumatic Brain Injury Study. J Neurotrauma. 2017;35(2).

Stocchetti N, Taccone FS, Citerio G, Pepe PE, Le Roux PD, Oddo M, Polderman KH, Stevens RD, Barsan W, Maas AI, et al. Neuroprotection in acute brain injury: an up-to-date review. Crit Care. 2015;19:186.

Williams SC, Schmaltz SP, Morton DJ, Koss RG, Loeb JM. Quality of care in U.S. hospitals as reflected by standardized measures, 2002–2004. N Engl J Med. 2005;353(3):255–64.

van Zanten AR, Brinkman S, Arbous MS, Abu-Hanna A, Levy MM, de Keizer NF, Netherlands Patient Safety Agency Sepsis Expert G. Guideline bundles adherence and mortality in severe sepsis and septic shock. Crit Care Med. 2014;42(8):1890–8.

Howard G, Schwamm LH, Donnelly JP, Howard VJ, Jasne A, Smith EE, Rhodes JD, Kissela BM, Fonarow GC, Kleindorfer DO, et al. Participation in Get With The Guidelines-Stroke and its association with quality of care for stroke. JAMA Neurol. 2018;75(11):1331–7.

Vavilala MS, Kernic MA, Wang J, Kannan N, Mink RB, Wainwright MS, Groner JI, Bell MJ, Giza CC, Zatzick DF, et al. Acute care clinical indicators associated with discharge outcomes in children with severe traumatic brain injury. Crit Care Med. 2014;42(10):2258–66.

Rivara FP, Ennis SK, Mangione-Smith R, MacKenzie EJ, Jaffe KM. Variation in adherence to new quality-of-care indicators for the acute rehabilitation of children with traumatic brain injury. Arch Phys Med Rehabil. 2012;93(8):1371–6.

Fischer C, Lingsma HF, Marang-van de Mheen PJ, Kringos DS, Klazinga NS, Steyerberg EW. Is the readmission rate a valid quality indicator? A review of the evidence. PLoS One. 2014;9(11):e112282.

Anema HA, Kievit J, Fischer C, Steyerberg EW, Klazinga NS. Influences of hospital information systems, indicator data collection and computation on reported Dutch hospital performance indicator scores. BMC Health Serv Res. 2013;13:212.

Fischer C, Lingsma HF, Anema HA, Kievit J, Steyerberg EW, Klazinga N. Testing the construct validity of hospital care quality indicators: a case study on hip replacement. BMC Health Serv Res. 2016;16(1):551.

Kallen MC, Roos-Blom MJ, Dongelmans DA, Schouten JA, Gude WT, de Jonge E, Prins JM, de Keizer NF. Development of actionable quality indicators and an action implementation toolbox for appropriate antibiotic use at intensive care units: a modified-RAND Delphi study. PLoS One. 2018;13(11):e0207991.

Roos-Blom MJ, Gude WT, Spijkstra JJ, de Jonge E, Dongelmans D, de Keizer NF. Measuring quality indicators to improve pain management in critically ill patients. J Crit Care. 2019;49:136–42.

Salluh JIF, Soares M, Keegan MT. Understanding intensive care unit benchmarking. Intensive Care Med. 2017;43(11):1703–7.

Rhodes A, Moreno RP, Azoulay E, Capuzzo M, Chiche JD, Eddleston J, Endacott R, Ferdinande P, Flaatten H, Guidet B, et al. Prospectively defined indicators to improve the safety and quality of care for critically ill patients: a report from the Task Force on Safety and Quality of the European Society of Intensive Care Medicine (ESICM). Intensive Care Med. 2012;38(4):598–605.

Boulkedid R, Abdoul H, Loustau M, Sibony O, Alberti C. Using and reporting the Delphi method for selecting healthcare quality indicators: a systematic review. PLoS One. 2011;6(6):e20476.

Maas AI, Menon DK, Steyerberg EW, Citerio G, Lecky F, Manley GT, Hill S, Legrand V, Sorgner A, CENTER-TBI Participants, et al. Collaborative European NeuroTrauma Effectiveness Research in Traumatic Brain Injury (CENTER-TBI): a prospective longitudinal observational study. Neurosurgery. 2015;76(1):67–80.

Rosenfeld JV, Maas AI, Bragge P, Morganti-Kossmann MC, Manley GT, Gruen RL. Early management of severe traumatic brain injury. Lancet. 2012;380(9847):1088–98.

Bulger EM, Nathens AB, Rivara FP, Moore M, MacKenzie EJ, Jurkovich GJ, Brain Trauma Foundation. Management of severe head injury: institutional variations in care and effect on outcome. Crit Care Med. 2002;30(8):1870–6.

Carney N, Totten AM, O’Reilly C, Ullman JS, Hawryluk GW, Bell MJ, Bratton SL, Chesnut R, Harris OA, Kissoon N, et al. Guidelines for the management of severe traumatic brain injury, fourth edition. Neurosurgery. 2017;80(1):6–15.

American College of Surgeons, Trauma quality improvement program: best practices in the management of traumatic brain injury. https://www.facs.org/~/media/files/quality%20programs/trauma/tqip/traumatic%20brain%20injury%20guidelines.ashx.

Donabedian A. The quality of care. How can it be assessed? JAMA. 1988;260(12):1743–8.

Barber CE, Patel JN, Woodhouse L, Smith C, Weiss S, Homik J, LeClercq S, Mosher D, Christiansen T, Howden JS, et al. Development of key performance indicators to evaluate centralized intake for patients with osteoarthritis and rheumatoid arthritis. Arthritis Res Ther. 2015;17:322.

Darling G, Malthaner R, Dickie J, McKnight L, Nhan C, Hunter A, McLeod RS, Lung Cancer Surgery Expert Panel. Quality indicators for non-small cell lung cancer operations with use of a modified Delphi consensus process. Ann Thorac Surg. 2014;98(1):183–90.

Patwardhan M, Fisher DA, Mantyh CR, McCrory DC, Morse MA, Prosnitz RG, Cline K, Samsa GP. Assessing the quality of colorectal cancer care: do we have appropriate quality measures? (A systematic review of literature). J Eval Clin Pract. 2007;13(6):831–45.

Gooiker GA, Kolfschoten NE, Bastiaannet E, van de Velde CJ, Eddes EH, van der Harst E, Wiggers T, Rosendaal FR, Tollenaar RA, Wouters MW, et al. Evaluating the validity of quality indicators for colorectal cancer care. J Surg Oncol. 2013;108(7):465–71.

Kimberlin CL, Winterstein AG. Validity and reliability of measurement instruments used in research. Am J Health Syst Pharm. 2008;65(23):2276–84.

Olij BF, Erasmus V, Kuiper JI, van Zoest F, van Beeck EF, Polinder S. Falls prevention activities among community-dwelling elderly in the Netherlands: a Delphi study. Injury. 2017;48(9):2017–21.

Rietjens JAC, Sudore RL, Connolly M, van Delden JJ, Drickamer MA, Droger M, van der Heide A, Heyland DK, Houttekier D, Janssen DJA, et al. Definition and recommendations for advance care planning: an international consensus supported by the European Association for Palliative Care. Lancet Oncol. 2017;18(9):e543–51.

R Core Team. R: a language and environment for statistical computing. Vienna: R Foundation for Statistical Computing; 2018. https://www.R-project.org/.

Corporation L. LimeSurvey user manual. 2016.

Mant J. Process versus outcome indicators in the assessment of quality of health care. Int J Qual Health Care. 2001;13(6):475–80.

O’Reilly GM, Cameron PA, Joshipura M. Global trauma registry mapping: a scoping review. Injury. 2012;43(7):1148–53.

Haider AH, Hashmi ZG, Gupta S, Zafar SN, David JS, Efron DT, Stevens KA, Zafar H, Schneider EB, Voiglio E, et al. Benchmarking of trauma care worldwide: the potential value of an International Trauma Data Bank (ITDB). World J Surg. 2014;38(8):1882–91.

Fitch K, Bernstein SJ, Aguilar MD, Burnand B, LaCalle JR, Lázaro P, et al. The RAND/UCLA appropriateness method user’s manual. Santa Monica: RAND; 2001.

Saherwala AA, Bader MK, Stutzman SE, Figueroa SA, Ghajar J, Gorman AR, Minhajuddin A, Olson DM. Increasing adherence to brain trauma foundation guidelines for hospital care of patients with traumatic brain injury. Crit Care Nurse. 2018;38(1):e11–20.

Odgaard L, Aadal L, Eskildsen M, Poulsen I. Nursing sensitive outcomes after severe traumatic brain injury: a nationwide study. J Neurosci Nurs. 2018;50(3):149–54.

Tarapore PE, Vassar MJ, Cooper S, Lay T, Galletly J, Manley GT, Huang MC. Establishing a traumatic brain injury program of care: benchmarking outcomes after institutional adoption of evidence-based guidelines. J Neurotrauma. 2016;33(22):2026–33.

MRC CRASH Trial Collaborators, Perel P, Arango M, Clayton T, Edwards P, Komolafe E, Poccock S, Roberts I, Shakur H, Steyerberg E, et al. Predicting outcome after traumatic brain injury: practical prognostic models based on large cohort of international patients. BMJ. 2008;336(7641):425–9.

Steyerberg EW, Mushkudiani N, Perel P, Butcher I, Lu J, McHugh GS, Murray GD, Marmarou A, Roberts I, Habbema JD, et al. Predicting outcome after traumatic brain injury: development and international validation of prognostic scores based on admission characteristics. PLoS Med. 2008;5(8):e165; discussion e165.

Gude WT, Roos-Blom MJ, van der Veer SN, de Jonge E, Peek N, Dongelmans DA, de Keizer NF. Electronic audit and feedback intervention with action implementation toolbox to improve pain management in intensive care: protocol for a laboratory experiment and cluster randomized trial. Implement Sci. 2017;12(1):68.

Peabody JW, Taguiwalo MM, Robalino DA, Frenk J. Improving the quality of care in developing countries. In: Jamison DT, Breman JG, Measham AR, Alleyne G, Claeson M, Evans DB, Jha P, Mills A, Musgrove P, editors. Disease control priorities in developing countries. 2nd ed. Washington (DC): World Bank; 2006. Chapter 70.

American Thoracic Society; Infectious Diseases Society of America. Guidelines for the management of adults with hospital acquired, ventilator-associated, and healthcare-associated pneumonia. Am J Respir Crit Care Med. 2005.

Singer M, Deutschman CS, Seymour CW, Shankar-Hari M, Annane D, Bauer M, Bellomo R, Bernard GR, Chiche JD, Coopersmith CM, Hotchkiss RS, Levy MM, Marshall JC, Martin GS, Opal SM, Rubenfeld GD, van der Poll T, Vincent JL, Angus DC. The Third International Consensus Definitions for Sepsis and Septic Shock (Sepsis-3). JAMA. 2016.

Acknowledgements

The authors would like to thank Lydia van Overveld (Radboud University Nijmegen), Claudia Fischer (Medical University Wien, Austria), and Ida Korfage (Erasmus MC, Rotterdam) for their helpful advice on the use of quality indicators and the Delphi methodology. The authors would like to thank Frank Santegoets (Erasmus MC, Rotterdam) for his help with the LimeSurvey software. Data used in preparation of this manuscript were obtained in the context of CENTER-TBI, a large collaborative project with the support of the European Commission 7th Framework program (602150). The authors would like to thank all Delphi panel members for their participation and for sharing their valuable expertise.

Members of the Delphi panel:

Marcel Aries (Department of Intensive Care, University Maastricht, Maastricht University Medical Centre, Maastricht, The Netherlands); Rafael Badenes (Department of Anesthesiology and Surgical-Trauma Intensive Care, Hospital Clinic Universitari de Valencia, Valencia, Spain); Albertus Beishuizen (Intensive Care Center, Medisch Spectrum Twente, Enschede, The Netherlands); Federico Bilotta (Department of Anesthesiology, Critical Care and Pain Medicine, Policlinico Umberto I Hospital, University of Rome "La Sapienza", Rome, Italy); Arturo Chieregato (Neurorianimazione, Ospedale Niguarda, Milan, Italy); Emiliano Cingolani (UOSD Shock e Trauma, Azienda Ospedaliera San Camillo Forlanini, Circonvallazione Gianicolense 87, 00152 Roma, Italy); Giuseppe Citerio (School of Medicine and Surgery, University of Milan-Bicocca, Milan, Italy; Neurointensive care, San Gerardo Hospital, ASST-Monza, Monza, Italy); Maryse Cnossen (Center for Medical Decision Sciences, Department of Public Health, Erasmus MC – University Medical Center Rotterdam, Rotterdam, the Netherlands);

Mark Coburn (Department of Anesthesiology, University Hospital RWTH Aachen, Pauwelsstrasse 30, 52074 Aachen, Germany); Jonathan P Coles (Division of Anaesthesia, University of Cambridge, Addenbrooke's Hospital, Cambridge, CB2 0QQ, United Kingdom); Mark Delargy (Royal College of Surgeons in Ireland, Dublin, Republic of Ireland); Bart Depreitere (Neurosurgery, University Hospitals Leuven, Belgium); Ari Ercole (University of Cambridge, Division of Anaesthesia, Addenbrooke's Hospital, Hills Road, Cambridge, CB2 0QQ, United Kingdom); Hans Flaatten (Department of Clinical Medicine, University of Bergen, Norway); Volodymyr Golyk (Neurology, National Academy for Postgraduate Medical Education named after Shupik, Kyiv, Ukraine);

Erik Grauwmeijer (Rijndam Rehabilitation Centre and Erasmus MC, Department of Rehabilitation, Rotterdam, The Netherlands); Iain Haitsma (Department of Neurosurgery, Erasmus MC – University Medical Center Rotterdam, 's Gravendijkwal 230, Kamer H-703, 3015 CE Rotterdam, the Netherlands); Raimund Helbok (Medical University of Innsbruck, Department of Neurology, Neurocritical Care Unit, Anichstr. 35, 6020 Innsbruck, Austria); Cornelia Hoedemaekers (Department of Intensive Care, Radboud University Medical Center, P.O. Box 9101, 6500 HB Nijmegen (707), The Netherlands); Bram Jacobs (Department of Neurology, University of Groningen, University Medical Center Groningen, The Netherlands); Korné Jellema (Department of Neurology, Haaglanden Medical Center, The Hague, The Netherlands); Lars-Owe D. Koskinen (Department of Pharmacology and Clinical Neuroscience, Neurosurgery, Umeå University, Umeå, Sweden); Andrew I.R. Maas (Department of Neurosurgery, Antwerp University Hospital and University of Antwerp, Edegem, Belgium); Marc Maegele (Department of Traumatology and Orthopedic Surgery, Cologne-Merheim Medical Center (CMMC), Institute for Research in Operative Medicine (IFOM), University Witten-Herdecke (UWH), Köln, Germany); Maria Cruz Martin Delgado (Intensive Care Unit, Hospital Universitario Torrejon, Madrid, Spain); David Menon (Division of Anaesthesia, University of Cambridge, Addenbrooke’s Hospital, Cambridge, United Kingdom);

Kirsten Møller (Department of Neuroanaesthesiology, The Neuroscience Centre, Rigshospitalet, University of Copenhagen, Denmark); Rui Moreno (UCIPNC, Hospital de São José, Centro Hospitalar de Lisboa Central, Nova Medical School, Lisboa, Portugal); David Nelson (Department of Physiology and Pharmacology, Section of Anaesthesia and Intensive Care, Karolinska Institutet, 17173 Stockholm, Sweden); Annemarie W. Oldenbeuving (Department of Intensive Care, Elisabeth-TweeSteden Hospital (ETZ), Tilburg, The Netherlands); Jean-Francois Payen (Grenoble Alpes University Hospital, 38000 Grenoble, France); Suzanne Polinder (Center for Medical Decision Sciences, Department of Public Health, Erasmus MC – University Medical Center Rotterdam, Rotterdam, the Netherlands); Jasmina Pejakovic (Clinical Center of Vojvodina, Clinic for Anesthesiology and Intensive Care Medicine, Novi Sad, Serbia); Gerard M Ribbers (Erasmus MC University Medical Center Rotterdam, Department of Rehabilitation Medicine, Rijndam Rehabilitation, Rotterdam, The Netherlands); Rolf Rossaint (Department of Anaesthesiology, Medical Faculty RWTH Aachen University, Aachen, Germany);

Guus Geurt Schoonman (Elisabeth-TweeSteden Hospital, Tilburg, the Netherlands); Luzius A. Steiner (Anesthesiology, University Hospital Basel, Switzerland; Department of Clinical Research, University of Basel, Switzerland); Nino Stocchetti (Department of Physiopathology and Transplantation, Milan University; Neuro ICU, Fondazione IRCCS Cà Granda Ospedale Maggiore Policlinico, Via F Sforza 35, 20122 Milan, Italy); Fabio Silvio Taccone (Hôpital Erasme, Dept of Intensive Care, Route de Lennik 808, 1070 Brussels, Belgium);

Riikka Takala (Perioperative Services, Intensive Care Medicine and Pain Management, Turku University Hospital, Finland); Olli Tenovuo (Turku University Hospital and University of Turku, Turku, Finland); Eglis Valeinis (Neurosurgery Clinic, Pauls Stradins Clinical University Hospital, Riga, Latvia); Walter M. van den Bergh (Department of Critical Care, University Medical Center Groningen, University of Groningen, Groningen, The Netherlands); Mathieu van der Jagt (Department of Intensive Care and Erasmus MC Stroke Center, Erasmus Medical Center Rotterdam, University Medical Center Rotterdam, Rotterdam, the Netherlands); Thomas van Essen (Neurosurgical Cooperative Holland, Department of Neurosurgery, Leiden University Medical Center and Medical Center Haaglanden, Leiden and The Hague, The Netherlands); Nikki van Leeuwen (Centre for Medical Decision Making, Department of Public Health, Erasmus Medical Center, PO Box 2040, 3000 CA, Rotterdam, The Netherlands); Michael H.J. Verhofstad (Trauma Research Unit Erasmus (TRUE), Department of Surgery, Erasmus MC, University Medical Center Rotterdam, Rotterdam, The Netherlands); Pieter E. Vos (Department of Neurology, Slingeland Hospital, Doetinchem, The Netherlands); Lindsay Wilson (Division of Psychology, University of Stirling, Stirling, Scotland, United Kingdom).

Funding

European Commission FP7 Framework Program 602150. The study was part of the CENTER-TBI project, a large collaborative project with the support of the European Commission 7th Framework program (602150). The funder had no role in the design of the study and collection, analysis, and interpretation of data and in writing the manuscript. DKM was supported by the National Institute for Health Research (NIHR), UK, through an NIHR Senior Investigator Award and the Cambridge NIHR Biomedical Research Centre.

Availability of data and materials

Legal constraints prohibit us from making the data publicly available. Because only a limited number of experts per country were included in this study, the data would be identifiable. Readers may contact Dr. Hester Lingsma (h.lingsma@erasmusmc.nl) with reasonable requests for the data.

Author information

Contributions

JH, EW, MJ, and HL made substantial contributions to the design of the study protocol. JH and EW collected the data, and JH analyzed the data and drafted the manuscript, the tables, and the figures. HL also supervised the project and gave feedback on the manuscript. All authors were involved in the design of the study and gave feedback on (and approved) the final version of the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable. No patients participated, and the Delphi panelists (experts) gave consent by completing the agreement form in the questionnaire. Compliance with ethical standards was confirmed by the medical ethical committee of the Erasmus Medical Center Rotterdam (MEC-2018-1371).

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

Questionnaire round 1. (DOC 270 kb)

Additional file 2:

Questionnaire round 2. (DOCX 79 kb)

Additional file 3:

Questionnaire round 3. (DOCX 89 kb)

Additional file 4:

Indicator selection and scores during the Delphi process. (DOCX 66 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Huijben, J.A., Wiegers, E.J.A., de Keizer, N.F. et al. Development of a quality indicator set to measure and improve quality of ICU care for patients with traumatic brain injury. Crit Care 23, 95 (2019). https://doi.org/10.1186/s13054-019-2377-x

DOI: https://doi.org/10.1186/s13054-019-2377-x