Abstract

Background

National audit is a key strategy used to improve care for patients with dementia. Audit and feedback has been shown to be effective, but with variation in how much it improves care. Both evidence and theory identify active ingredients associated with effectiveness of audit and feedback. It is unclear to what extent national audit is consistent with evidence- and theory-based audit and feedback best practice.

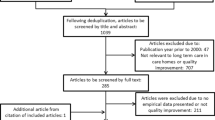

Methods

We explored how the national audit of dementia is undertaken in order to identify opportunities to enhance its impact upon the improvement of care for people with dementia. We undertook a multi-method qualitative exploration of the national audit of dementia at six hospitals within four diverse English National Health Service organisations. Inductive framework analysis of 32 semi-structured interviews, documentary analysis (n = 39) and 44 h of observations (n = 36) was undertaken. Findings were presented iteratively to a stakeholder group until a stable description of the audit and feedback process was produced.

Results

Each organisation invested considerable resources in the audit. The audit results were dependent upon the interpretation by case note reviewers who extracted the data. The national report was read by a small number of people in each organisation, who translated it into an internal report and action plan. The internal report was presented at specialty- and organisation-level committees. The internal report did not include information that was important to how committee members collectively decided whether and how to improve performance. Participants reported that the national audit findings may not reach clinicians who were not part of the specialty or organisation-level committees.

Conclusions

There is considerable organisational commitment to the national audit of dementia. We describe potential evidence- and theory-informed enhancements to the enactment of the audit to improve the local response to performance feedback in the national audit. The enhancements relate to the content and delivery of the feedback from the national audit provider, support for the clinicians leading the organisational response to the feedback, and the feedback provided within the organisation.

Background

More than one in four people in acute hospitals have dementia [1]. People with dementia do not always receive identified best care; for example their pain may not be assessed, and carers may not be asked about causes of distress and what might calm the patient if they become agitated [1]. The national audit of dementia is a mandated [2] intervention to improve the care for people affected by dementia. The national audit of dementia provides feedback to National Health Service (NHS) organisations about the quality of care received by people with dementia in general hospitals. The audit is commissioned on behalf of NHS England and has taken place approximately every 2 years since 2010.

Audit and feedback is effective, but there is variation in the extent to which it improves care. A Cochrane review of audit and feedback [3] found that a quarter of audits stimulated less than 0.5% improvement, but a quarter exceeded 16% improvement. A low baseline was associated with increased improvement, as was the use of feedback that was repeated, given by a supervisor or peer, given in writing and verbally, and that included specific targets and an action plan [3]. Further factors that may be associated with increased effectiveness of audit and feedback draw upon theory: Colquhoun et al. [4] identified hypotheses to enhance audit, describing the potential roles related to the feedback recipient (e.g. the trust they have in the data), the target behaviour (e.g. the extent to which barriers to improvement can be addressed), the content of the audit and feedback (e.g. whether benchmark comparisons are included) and the delivery of the audit and feedback (e.g. if it is presented at the time of decision-making about care). More recently, Brown et al. [5] synthesised evidence and theory about audit and feedback to develop clinical performance feedback intervention theory (CP-FIT). Briefly, CP-FIT describes factors associated with the feedback, the recipient and the context which act through diverse mechanisms (e.g. social influence, actionability) upon how the feedback is perceived, and whether intention and behaviour to improve clinical performance are produced. There have been calls to test the impact on care of the implementation of potential evidence- and theory-based enhancements to audit and feedback [6].

In order to identify potential enhancements to an existing audit, it is necessary to understand current audit and feedback practice. Specifically, we aimed to describe the content and delivery of the national audit of dementia and identify potential enhancements.

Methods

This multi-method study used interviews, observations and documentary analysis, supported by stakeholder involvement through two groups: a co-production and an advisory group. Multi-methods enable the identification of reported practices and influences, as well as of tacit knowledge and practices taken for granted [7, 8].

Setting

We studied six hospitals (approximately 4750 beds) within four English NHS organisations, purposively sampled to maximise diversity. We identified organisations with diverse regulator (Care Quality Commission) ratings for clinical effectiveness, sought hospitals within each rating that were of different sizes (full-time equivalent staff ranged across organisations from 4000 to 15000) and reviewed their previous performance on the national audit (Table 1). Consideration of both hospital and organisation level was important as national audit of dementia feedback is provided at the hospital level, but staff are employed at the organisation level. Some hospitals at some sites did not meet the inclusion criteria of the national audit. All hospitals receiving national audit of dementia feedback at each site were included in the study. The sample of interviews, observations and documents (Appendix 1) was informed by co-production group input (described below) and emerging findings of the study. The sample sought people, events and documents able to provide diverse perspectives upon the content and delivery of the national audit.

Interviews

MS interviewed 32 purposively sampled staff, undertook 36 observations (44 h) and analysed 39 documents across the sites. Interview participants (Appendix 1) were accessed through the hospital research department, approached by email and gave written informed consent, as described below. Interviews were semi-structured, conducted face-to-face and audio-recorded. The topic guide (Appendix 3) explored participants’ involvement with audit, their reported perception of why it was undertaken, what happens during audit and feedback, what affects its effectiveness and what could be changed to increase effectiveness. There were also questions targeted based upon earlier findings and the participants’ role. Mean interview length was 59 min (range 36 to 98 min). Whilst the period of interviews overlapped with the period of observations and documentary analysis, they happened during separate site visits. Concurrent data collection enabled findings from different sources to inform sampling and data collection (e.g. interview responses and documentary analysis targeting later observations, observations informing the interview topic guide). During the first three interviews, MS drew a diagram representing that participant’s description of what happens during audit (See example in Appendix 2). The diagram was shared with the respective participant for amendment during the interview. The diagrams were discussed with the co-production group and collated into a single diagram. This collated diagram (Appendix 2) was used (and further developed) in later interviews as part of an amended interview topic guide.

Observations

Observations involved 204 staff participants. They covered ward-, specialty- (e.g. dementia steering group, care of the older person governance group) and organisation-level (e.g. organisational quality committee) meetings held to plan, prepare for and respond to the national audit, as well as the gathering and recording of national audit data. Participants were accessed through the hospital research department, approached by email and gave informed consent, as described below.

Observations took place at three of the four organisations, with the researcher taking field notes and asking exploratory questions [9]. Mean observation length was 74 min (range 14 to 226 min). Reflective notes were taken after both interviews and observations. The interview recordings, field notes and reflective notes were transcribed and checked for accuracy.

Documentary analysis

We sampled documents about the organisations under study produced by external sources (reports by the regulator and national audit provider organisation), and internally produced documents (e.g. organisational quality strategy, clinical audit policy, audit training materials, reports to and minutes from governance committees). Documents were accessed through the hospital research department and/or participants.

Stakeholder involvement

The co-production group included three carers, three clinical leads for dementia and three organisation clinical audit leads. The advisory group included representatives from the audit co-ordinator (Royal College of Psychiatrists) and the audit commissioner (Healthcare Quality Improvement Partnership) of the national audit of dementia, professional bodies, patients and researchers studying audit and feedback. The advisory group member perspectives were conveyed to the co-production group by MS. MS is a nurse with qualitative research practice and training, and experience of leading quality assurance and improvement in hospital settings. Prior to data collection, MS facilitated four meetings (8 h) with the co-production group to develop a description of their baseline reported understanding of what happens during audit and feedback, what influences its effectiveness and of potential enhancements. The meetings involved facilitated mixed small group work, presentation and whole-group discussion.

Data analysis

The interviews and data analysis were undertaken by MS, co-coded by TF. Co-coding involved co-indexing and sorting a sample of data (approximately 100 pages) collated as example quotes across the initial data categories and across all methods, independently reviewing the sample dataset for coding and subsequently challenging the description and categories through joint discussion, until consensus was reached. Extensive exemplar quotes for each category and code were further challenged by all authors at a higher level of abstraction, with credibility enriched through further challenge by the co-production group. Transcript data were entered into NVivo v12 (QSR International) for data management.

Inductive framework analysis [10] involved becoming familiar with the data through transcribing and reading the observation notes, checking and reading the transcribed interview data and reading the documents. MS identified initial codes and categories from each of the first two observations and interviews. MS compared these across data sources and against the diagrams from the first two interviews in order to create an initial analytical framework. The framework contained both higher-level descriptive headings and more thematic, conceptual issues within those categories. The framework category heading, narrative description and exemplar data extracts were presented to the co-production group alongside their initial views. The extent to which a finding was repeated across sites was included in the presentation. The group considered differences and similarities between the findings, their initial views and their emergent understanding, identified challenges to the analysis and proposed further avenues to explore. This process was repeated twice with additional data and updated categories, before adding explicit group consideration of elements of an existing intervention framework [11], a previous systematic review [2] and theory-informed hypotheses [3]. Two further iterations of presentation and review resulted in a later description which was then used to inform the topic guide at the fourth site. Analysis and presentation of the fourth site findings resulted in only minor amendment to the description; this was identified as an indicator of theoretical saturation of the data.

Ethics

This study was approved by Newcastle University Faculty of Medical Sciences Ethics Committee (application 01266/12984/2017) and was part of a wider project to describe and enhance audit and feedback in dementia care in hospitals, including both the national audit and local ward audits/monitoring. Only data related to the National Audit of Dementia are included here. Data were collected from January 2018 to April 2019.

Results

We identified ten different stages to the national audit. These were classified as follows: impetus, agreement to take part, preparation of staff, assessment of care, analysis of data, identification of actions, internal feedback, sense-making, wider organisation feedback and making changes. The stages were common across sites; differences between sites in the content of each stage are described below. Whilst described as stages, there was overlap and interaction between them (e.g. the impetus for participation impacted upon different stages of the audit, notably how data were collected and improvement actions agreed; preparation affected the assessment of practice and the selection of actions; internal feedback affected sense-making and making changes). We found that whilst the collection and dissemination of data were organised at a hospital level, sites went through the above stages (e.g. agreement to take part, making changes) at the wider organisation level. As a result, we describe both hospital and organisation-level findings.

Impetus, agreement to take part and preparation of staff

There were different drivers to take part in the national audit. These included it being perceived as mandatory, to enable comparison, to report on participation externally and to gain internal resources for improvement:

It (national audit) justifies our existence (as a specialist team), I suppose. And it shows that we’re doing the right thing… [then later] I think our consultant is very proactive in terms of dementia care. She uses the audit as a stick – with the chief execs – to try to improve care (interview 14, dementia nurse specialist).

The role of the national audit as a lever for gaining internal improvement resources will be described in more detail as part of the “Internal feedback and sense-making” section.

Nationally, almost all hospitals that meet the eligibility criteria chose to take part in the national audit (documents 15–18, national audit report). We found that the decision-making process about participation involved a member of the organisation clinical governance team identifying a lead clinician who advised on whether the data could be collected (interview 13, clinical audit facilitator). Their recommendation was reported to an organisation-level governance committee which took responsibility for the decision.

Within three organisations, data collection staff described reluctance to collect data (e.g. observation 9, junior doctor training/audit recruitment):

The junior doctor said they did not want to audit the notes but was asked repeatedly by a consultant, stating that the consultant “just kept pushing me to do it” (observation 23, record review).

There was evidence that the reviewers found data extraction of low value (see the “Assessment of care” section) and uninteresting:

You can see why we only do it for a couple of hours, because it’s so soul-destroying (observation 22, record review).

However, we also found two dementia specialists who attended work to collect data on a non-work day. One of them had earlier described the audit results as being linked to retaining the dementia role they enjoyed (Table 2).

To prepare actors to gather data, the Royal College of Psychiatrists provided a guidance document, although this may not be used (observations 11–15, 23, 25). At two organisations, we found the people collecting case note data had impromptu discussions about the interpretation of the standards.

Assessment of care

The national audit requires collation of data from different sources: an organisational checklist, a staff survey, a carer survey and a review of case notes (documents 15–18, national audit report). Case note review data were largely collected by senior nurses (deputy ward manager level to specialist nurse level) although at one organisation it was predominantly doctors (junior doctor to medical consultant) (observations 16–18, 20–24).

During observation of case note reviews (three organisations, n = 18), the mean time to review a patient’s notes was 25.7 min (range 9 to 52 min). Most case note reviewers recorded their findings on paper forms. The paper forms were subsequently entered into the national audit web portal by deputy ward manager level staff (mean = 11.3 min per record; range 6 to 20 min) (observations 25, 28). There was evidence from one site that time assessing practice was prioritised over clinical care. At this site, those assessing practice were told by the clinical lead to wear normal clothes to prevent re-assignment to the wards (observation 8) and subsequently a request to undertake an assessment on a patient considered ready for discharge was declined in order to gather data (observation 20).

During data entry, approximately half of the data forms needed to be checked with the data collector (observations 25 and 28). This clarification was not observed, which prevents description of any further interpretation of the standards or case notes, but also implies an underestimation of the total time taken to collect and finalise data.

The observations and interviews showed that the case note reviews were influenced by the quality of record keeping, the case note reviewer’s expectations, interpretations and goals (Table 2). Goals included to complete the data collection task quickly (observation 23), to show the need for investment and to present the team or organisation positively (interview 14, dementia nurse specialist).

Case note reviews were also affected by interpretation of the standards and/or the case notes. There were examples where reviewers had developed their own complex set of unwritten criteria that needed to be met to reach the audit standard; others required a much lower level of evidence. It appeared that less complex decision-making was used by those reviewers who had been reluctant to undertake the case note review and/or wanted to complete the task quickly.

Analysis of data, identification of actions and wider organisation feedback

Analysis of data is undertaken nationally (documents 15–18, national audit reports), approximately 5 months after the delivery of the care assessed. There is a further 9-month gap between national data entry closing and the release of reports to organisations (documents 15–18).

At two organisations, local emergent findings were discussed by those assessing practice with the deputy director of nursing prior to the national reports being received (interviews 18, 27). At all four organisations, the national reports were awaited prior to agreeing actions (e.g. interview 31, deputy director of nursing). Study participants reported that the analysis within the report was robust (interview 3, directorate audit lead) but took a long time (interview 13, clinical audit facilitator; interview 27, dementia nurse specialist).

At each organisation, the national report was shared with a small group (approximately two to six) of positional leaders such as the deputy director of nursing or directorate manager, although they may not read it (interview 6, dementia nurse specialist).

The national report contained 66 pages and had a common structure for every hospital (documents 15–18). It included a description of the audit steering group members and the audit method, the mean scores for England and Wales, a summary of local performance including national ranked position, key national findings and a summary of the local hospital performance on these nationally identified priorities. The report then provided detailed performance information, including data and narrative information summarised from carer and staff surveys. Recommendations for commissioners, board members, clinical positional leaders and ward managers, based upon national performance, were included. In addition, hospital-level data are available in an online spreadsheet.

The clinical leads who develop the organisation’s response described difficulty understanding the national report (interview 6, dementia nurse specialist) and being unclear about how to implement improvement (Table 3). Some clinical leads attended a quality improvement workshop run by the Royal College of Psychiatrists approximately 3 months after the report had been received. The workshop included content about what other organisations had done to achieve their results.

The organisation-identified clinical lead (medical consultant or dementia nurse specialist) translated their organisation’s report(s) into a local standardised template, including proposed actions. The deputy director of nursing may also be involved in writing the internal report (interview 27, dementia nurse specialist).

Whilst the national feedback was at hospital level, information and actions in the internal reports were at the organisation level (documents 7–10, internal reports). The internal reports appeared to focus more on national than local performance, and actions rarely addressed relative or absolute low hospital or organisation performance. For example, one organisation with two sites had ten lower quartile results, but eight of these results did not have actions to address performance (document 7, internal reports). Four hospitals (in three organisations) assessed functioning in fewer than 50% of patients but did not have actions to address performance.

At three organisations, the internal reports describe actions targeting all five of the key national priorities, with three of the five addressed by the fourth organisation (document 9, internal report).

Some participants who wrote the internal report described undertaking analysis to compare with other organisations; this was undertaken to identify high-performers to learn from (interview 27, dementia nurse specialist) and to compare performance against other similar organisations (interview 12, deputy director of nursing). Participants described considering organisational context as part of this comparison (interviews 8, 18, deputy directors of nursing).

Despite this, comparative data were included in only two organisations’ reports (documents 9, 10), both comparing themselves against the national average. There were no examples of alternative external comparisons (e.g. comparison against top performers or a peer group) in any internal reports (documents 7–10, internal reports).

Potential reasons for current performance were not reported in three of the four internal reports (documents 7, 8, 10, internal reports). In the site where they were reported (document 9, internal reports), it was not clear how the barriers to performance were identified or how proposed actions were linked to the identified barriers. Who should be doing the target care behaviour (e.g. assess pain) was considered in the development of the action plan, but this information was not included in the internal report or action plan (observation 10).

Proposed actions in the internal reports often reiterated or amended existing actions (documents 11, 12, organisation dementia strategy) and frequently involved changing the health record, training or further audit (documents 7–10, internal reports). The selection of actions was constrained by the perceived sphere of influence of those writing the action plan. Action plans described what would be done and by when (documents 7–10). Most described who would be responsible, some described the outcome sought and one described the possible obstacles to completing the action.

Internal feedback and sense-making

At each site, possible actions to improve care were discussed with a small group of stakeholders during the development of the internal report, typically the clinical lead, dementia nurse specialist, and deputy director of nursing. The actions were then amended at specialty (e.g. care of older people) level committees (observation 3, dementia steering group) and further refined and agreed at organisation-level governance committees (observation 1, 5, 31, 35). At one organisation (observation 7, clinical governance committee), presentation at the organisation-level committee led to further discussion prior to agreement.

During committee meetings, positional clinical leaders and senior managers considered whether and how to change. They discussed the motivation of the audit provider (Royal College of Psychiatrists) and the validity of the data (interviews 19, 30; observation 35). Verbal feedback supplemented the written report by including information about relative performance, typically describing where the hospital was ranked in the top or bottom six of all participating hospitals. As noted in relation to the impetus to participate in the audit, low relative performance increased commitment to improve:

I don’t know how valuable the benchmarking is, apart from that it brings it to the attention of the board. If you’re somewhere near the bottom then they want something done about it, it’s a useful lever sometimes in that way (interview 18, deputy director of nursing).

However, comparison can also lead to complacency:

So, gather data and then see that a lot of organisations are in a similar position, so it’s accepted that that’s the position that it is… I think sometimes there’s a degree of a) complacency or b) it’s not possible to improve (interview 19, deputy director of nursing).

One participant described absolute performance as more important as a lever for improvement than relative performance:

I’m not so bothered about the difference (between two hospitals), I’m bothered that only 60% of them got one (a discussion about discharge with the carer). What would bother me more, would be why they weren’t being done, because that’s just over half. Two thirds isn’t good (interview 11, directorate manager).

Participants and observations revealed how data may be triangulated with other organisation data. This was a narrative process during meetings (observations 1, 5, 7, 30, 31, 35). Patient experience data, complaints (e.g. observation 8) in particular, were often used as a measure of “true performance”; that is, that national audit data appeared to be viewed as credible if it agreed with issues raised in complaints. Informing this discussion with narrative case studies may support the engagement of influential positional leaders (observation 5, clinical governance committee).

Through this sense-making work, concerns may be added to the hospital risk register and scored. The risk score allocated is affected by external pressure, including from regulatory, reputational and financial risk (interview 20, clinical governance facilitator; document 36). Some seek to game the risk level by changing it to affect who becomes aware:

The clinical audit facilitator said, “all audits on forward plan get risk rated…if less than 12 they are not escalated”. We “try to keep it at the lower end, nine times out of ten they are…if higher they get discussed at Board.” The dementia nurse specialist said that you do not want the Board’s input as it was, “a hindrance not a help” (observation 2, clinical audit facilitation meeting).

Wider organisation feedback

Ward-level staff in all participating organisations may not get feedback on the national audit results. However, there was evidence that ward staff may get information about actions being taken to improve (Table 3).

Across the sites, dementia specialists described having a good understanding of the anticipated results. As such, feedback may not alter participants’ understanding of performance, and this may affect whether it leads to action (Table 3).

Making changes

At each organisation, actions were monitored (interviews 1, 13, 20). Monitoring demonstrated that actions were sometimes delayed (documents 24–27, observations 5, 31). Some action owners were unaware of the actions for which they had been assigned responsibility (interview 11, directorate manager), and some action owners left the organisation, leaving their actions uncompleted (interview 27, dementia audit lead). Across each organisation, many participants (e.g. interviews 14, 24, 27; observation 8, dementia steering group) reported that the next report of the national audit comes too soon (from July 2017 to July 2019) to see improvements in the results.

Discussion

There is scope to improve returns on investment by enhancing the content and delivery of national audits [6]. In order to identify potential enhancements to the national audit of dementia, we studied how six hospitals completed the audit. Our analysis of documents, interviews and observations, and the co-produced description revealed similar stages to the national audit of dementia across the sites. We found there was an organisation-level decision-making process about participation, where the default position was to take part in national audit, and identification of a lead. The identified lead, typically a dementia nurse specialist or medical consultant, took steps to prepare those involved in data collection. The collection of audit data was affected by record keeping, and case note reviewers’ expectations, interpretations and goals. Nationally, the audit included 10047 case notes; our findings suggest that this equates to almost 6200 h of senior (e.g. deputy ward manager or above) clinical time, with only one identified example of data entry being undertaken by a non-clinician. Importantly, this estimate excludes work to agree to take part, preparation and clarification work, analysis of data or the identification and completion of actions to improve.
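The time estimate above can be checked with simple arithmetic. The sketch below assumes, since the text does not state the derivation, that the figure combines the observed mean case note review time (25.7 min) and the observed mean data entry time (11.3 min) for each of the 10047 case notes; the function and variable names are illustrative only.

```python
# Figures reported in the text; how they are combined is an assumption.
REVIEW_MIN_PER_RECORD = 25.7  # mean observed case note review time (min)
ENTRY_MIN_PER_RECORD = 11.3   # mean observed web portal data entry time (min)
NATIONAL_CASE_NOTES = 10047   # case notes included in the national audit


def estimated_clinical_hours(records, review_min, entry_min):
    """Total hours if every record took the observed mean review and entry times."""
    return records * (review_min + entry_min) / 60


hours = estimated_clinical_hours(
    NATIONAL_CASE_NOTES, REVIEW_MIN_PER_RECORD, ENTRY_MIN_PER_RECORD
)
print(f"Estimated clinical time: {hours:.0f} h")
```

Under these assumptions the total is a little under 6200 h, which is consistent with the estimate reported above. Because the observed means come from a small number of observations at three organisations, the result is best read as an order-of-magnitude indication.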

The data were analysed nationally; this was reported as being robust but slow. Hospital performance and national performance (mean and hospital ranking) were described in a report sent to the organisation clinical leads. The report was read by few people. The organisation clinical lead for the audit translated the report into an internal report. The leads often found the national report confusing and were uncertain how to create organisational change. The internal report acted as a filter, with subsequent conversations about actions being based only upon the internal report. There was little evidence that actions within the internal report were informed by a robust analysis of current performance involving the identification of low performance, specification of the target care behaviours, analysis of the causes of performance, and the selection of actions to address the causes of performance.

Committee discussion considered relative performance, data quality, narrative triangulation with other data, an assessment of risk and discussion about existing actions. This led to discussion of, and approval for, a plan to improve. The improvement actions may be delayed or not completed.

The study explored what happens within six hospitals as part of four NHS organisations. These were selected for diversity; however, we cannot assume transferability to other audits, institutions or countries. Data from the fourth site sought to test earlier findings. A limitation of the study is that the timing of data collection did not allow for observation of the national audit; however, we were able to explore the content and delivery of the audit through interview and documentary analysis. A further limitation was that the focus was on what happens within the hospital; wider stakeholders (e.g. regulators, commissioners) were not included as contributing participants. Future studies may seek to understand how these wider stakeholders interpret and use the data.

The healthcare workers in the co-production group were from three of the participating study sites. To aid reflexivity, we facilitated the co-production group to describe their pre-study views. MS facilitated the group to challenge emergent findings through explicit consideration of the group’s pre-study views, evidence, theory and an intervention framework. We sought diverse perspectives by involving carers and people from diverse organisations, and through feedback loops to the advisory group. Sampling was informed by the co-production group members proposing the job titles of key actors in the process, to enable appropriate targeting of participants; sampling was further informed by emergent findings and the advisory group. As a result, whilst it is possible that the involvement of people from the study sites adversely affected the gathering or interpretation of the data, we anticipate that, by drawing upon their knowledge, their involvement strengthened the description of the national audit. Concurrent data collection using different methods enabled exploration of themes between data sources and is a further strength.

Comparing the content and delivery of the national audit against evidence [3] and theory [4, 5, 12] identifies potential enhancements to the national audit of dementia. We found that feedback was provided approximately 14 months after care had been delivered, was discussed at senior level and did not reach the clinical staff delivering the care, with the exception of selected positional leaders. The lack of feedback to clinicians mirrors previous findings on the English national blood transfusion audit [7]. Providing more specific, timely feedback may enhance effectiveness [4, 5]. Within the hospitals, this could involve individuals, teams or the organisation receiving feedback from case note reviewers, at the point of data collection or data entry, on the extent to which their care meets the standards measured in the audit. The feedback could be delivered through existing mechanisms (e.g. supervision, ward huddle). Feedback reach is a key implementation and evaluation challenge [13], where actors may receive the feedback but not engage with it [14], or not internalise it or initiate a response to it [15]. Future research should assess co-interventions to support both delivery of and participation with feedback. Changes to the national reporting process would involve the audit co-ordinator (Royal College of Psychiatrists), the audit commissioner (Healthcare Quality Improvement Partnership) and the audit funder (NHS England) agreeing and delivering more timely and specific feedback reports (e.g. National Hip Fracture Database [16]).

CP-FIT [5] proposes that organisation-level response and senior management support may make feedback interventions more effective. We found that the response to the national audit was at an organisational level. Like Gould et al. [7], we found that the hospital report is received by the organisation clinical lead, who leads the organisational response. We found that the clinical leads may be confused by the report. The hospital report from the Royal College of Psychiatrists compares performance against the national mean. Simplifying the report [4, 5] and guiding the tailored selection of comparators [4, 5, 12] may enhance the organisation-level response. The report may be simplified by reducing its length from 66 pages [9] through the use of interactive reports that enable recipients to get more information if they want it [4, 16]; simplifying and standardising graphical presentation [4, 5]; highlighting recipient hospital priorities [4, 5]; and removing national priorities from the hospital report. These enhancements may require contractual changes from the audit commissioner, such that interactive rather than static (e.g. PDF) reports are produced. Interactive reports could allow recipients to select comparators to highlight room for improvement [4, 5, 12] (e.g. to compare their hospital with the top-performing 10% of hospitals, or with a peer group). Selecting a comparator based upon peer group may increase competition, credibility [12] or fairness (e.g. interview 18).

The organisation clinical leads were unsure how to deliver improvement; many actions described in the internal reports written by the clinical leads did not address local poor performance and were not informed by a robust analysis of current performance. Supporting clinical leads to identify local relative and absolute poor performance [3], to explore influences upon performance and to select strategies that address barriers and facilitators [4, 17, 18, 19] may enhance improvement as a result of the national audit. This could be done through the provision of interactive data enabling recipients to select absolute and relative performance parameters (e.g. to highlight local quartile results). Facilitation and education [5] could further support recipients to undertake this selection, explore influences upon performance, and select improvement strategies. Providing selectable examples of potential improvement actions for each result, rather than a report-level list of recommendations, may further enhance audit effectiveness [20] by prompting clinical leads to tailor actions to local performance and context.
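To make the idea of selectable comparators and quartile highlighting more concrete, the following is a minimal sketch of the calculations an interactive report might run behind the scenes. It is not drawn from the audit provider's tooling: the hospital labels, peer groups, scores and function names are all hypothetical, and the rule used for the "top 10%" benchmark (mean of the best-scoring tenth of hospitals) is one assumption among several possible definitions.

```python
from statistics import mean, quantiles

# Hypothetical data, invented for illustration: percentage of case notes
# meeting one audit standard at ten hospitals, and each hospital's peer group.
SCORES = {"A": 40.0, "B": 45.0, "C": 50.0, "D": 55.0, "E": 60.0,
          "F": 65.0, "G": 70.0, "H": 75.0, "I": 80.0, "J": 90.0}
PEER_GROUP = {"A": "district", "B": "district", "C": "district",
              "D": "teaching", "E": "district", "F": "teaching",
              "G": "district", "H": "teaching", "I": "teaching",
              "J": "teaching"}


def top_decile_benchmark(scores):
    """Mean score of the best-performing 10% of hospitals (at least one)."""
    ranked = sorted(scores.values(), reverse=True)
    n_top = max(1, round(len(ranked) * 0.10))
    return mean(ranked[:n_top])


def peer_group_mean(scores, peer_group, hospital):
    """Mean score of the hospital's peer group (the hospital itself included)."""
    group = peer_group[hospital]
    return mean([s for h, s in scores.items() if peer_group[h] == group])


def quartile_of(scores, hospital):
    """Quartile of `hospital`: 1 is the lowest-scoring quarter, 4 the highest."""
    cut_points = quantiles(list(scores.values()), n=4)
    return 1 + sum(scores[hospital] > cut for cut in cut_points)


def comparator_gaps(scores, peer_group, hospital):
    """Room for improvement (comparator minus own score) per comparator."""
    own = scores[hospital]
    return {
        "national_mean": mean(list(scores.values())) - own,
        "top_10_percent": top_decile_benchmark(scores) - own,
        "peer_group": peer_group_mean(scores, peer_group, hospital) - own,
    }


if __name__ == "__main__":
    print(comparator_gaps(SCORES, PEER_GROUP, "C"))
    print("Quartile:", quartile_of(SCORES, "C"))
```

In this invented example, the same hospital looks very different depending on the comparator chosen: the gap to its peer group is small, while the gap to the top-performing hospitals is large. This is the kind of contrast that a recipient-selected comparator is intended to surface.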

We found that action plan discussions at specialty- and organisation-level committees included consideration of whether and how to change. This reflects Weiner’s [21] description that commitment to change results from change valence, which may stem from consideration of whether the change is needed, important, beneficial or worthwhile. We found that commitment was related to a discursive assessment of trust in the data, based upon assessment of the source and method, triangulation with recalled data about patient experience, and actors’ assessment of risk, in particular regulatory and reputational risk. This sense-making discussion may generate “legitimacy” [16] and echoes findings from other sources of feedback [22]. Valid and reliable collection of data, and internal organisational feedback that describes the method and triangulated data, may support perceptions of trust and credibility by feedback recipients. Justifying the need for change by linking it to recipient priorities [4, 5] (such as patient outcomes or reputational risks) may further enhance the effectiveness of the national audit.

Conclusion

We used multiple methods to co-produce a description of the content and delivery of the national audit of dementia at six diverse English hospitals. We found considerable organisational commitment to the audit. We identified potential enhancements to the national audit of dementia that could improve its effectiveness. The enhancements relate to the content of the feedback from the national audit provider, support for the clinical leads leading the organisational response to the feedback, and the content of the feedback provided within the organisation. We recommend further work to consider whether and how these potential enhancements could be operationalised and the steps needed to implement and test them.

Availability of data and materials

The datasets generated during and/or analysed during the current study are not publicly available in order to maintain the anonymity of participants.

Notes

1. The interviewer has a personal version of the collated diagram with stage- and participant-specific questions based upon emergent findings and stakeholder feedback.

Abbreviations

- CP-FIT: Clinical Performance Feedback Intervention Theory
- e.g.: For example
- NHS: National Health Service

References

1. Royal College of Psychiatrists. National Audit of Dementia care in general hospitals 2018-2019: round four audit report. London: Royal College of Psychiatrists; 2019.

2. Healthcare Quality Improvement Partnership. NHS England Quality Accounts List 2020/21. Healthcare Quality Improvement Partnership; January 2020. https://www.hqip.org.uk/wp-content/uploads/2020/01/nhs-england-quality-accounts-list-2020-21-vjan2020.pdf. Accessed 14 Feb 2020.

3. Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;6:CD000259.

4. Colquhoun HL, Carroll K, Eva KW, Grimshaw JM, Ivers N, Michie S, et al. Advancing the literature on designing audit and feedback interventions: identifying theory-informed hypotheses. Implement Sci. 2017;12(1):117.

5. Brown B, Gude WT, Blakeman T, van der Veer SN, Ivers N, Francis JJ, et al. Clinical performance feedback intervention theory (CP-FIT): a new theory for designing, implementing, and evaluating feedback in health care based on a systematic review and meta-synthesis of qualitative research. Implement Sci. 2019;14(1):40.

6. Grimshaw JM, Ivers N, Linklater S, Foy R, Francis JJ, Gude WT, et al. Reinvigorating stagnant science: implementation laboratories and a meta-laboratory to efficiently advance the science of audit and feedback. BMJ Qual Saf. 2019;28(5):416–23.

7. Gould NJ, Lorencatto F, During C, Rowley M, Glidewell L, Walwyn R, Michie S, Foy R, Stanworth SJ, Grimshaw JM, Francis JJ. How do hospitals respond to feedback about blood transfusion practice? A multiple case study investigation. PLoS One. 2018;13(11).

8. Taylor SJ, Bogdan R, DeVault M. Introduction to qualitative research methods: a guidebook and resource. John Wiley & Sons; 2015.

9. Royal College of Psychiatrists. National Audit of Dementia care in general hospitals 2016-2017: third round of audit report. London: Royal College of Psychiatrists; 2017.

10. Ritchie J, Spencer L. Qualitative data analysis for applied policy research. In: Bryman A, Burgess B, editors. Analyzing qualitative data. London: Routledge; 1994.

11. Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, Altman DG, Barbour V, Macdonald H, Johnston M, Lamb SE. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687.

12. Gude WT, Brown B, van der Veer SN, Colquhoun HL, Ivers NM, Brehaut JC, et al. Clinical performance comparators in audit and feedback: a review of theory and evidence. Implement Sci. 2019;14(1):39.

13. Glasgow RE, Harden SM, Gaglio B, Rabin BA, Smith ML, Porter GC, Ory MG, Estabrooks PA. RE-AIM planning and evaluation framework: adapting to new science and practice with a twenty-year review. Front Public Health. 2019;7:64.

14. Vaisson G, Witteman HO, Chipenda-Dansokho S, Saragosa M, Bouck Z, Bravo CA, Desveaux L, Llovet D, Presseau J, Taljaard M, Umar S. Testing e-mail content to encourage physicians to access an audit and feedback tool: a factorial randomized experiment. Curr Oncol. 2019;26(3):205.

15. May CR, Mair F, Finch T, MacFarlane A, Dowrick C, Treweek S, Rapley T, Ballini L, Ong BN, Rogers A, Murray E. Development of a theory of implementation and integration: normalization process theory. Implement Sci. 2009;4(1):29.

16. McVey L, Alvarado N, Keen J, Greenhalgh J, Mamas M, Gale C, Doherty P, Feltbower R, Elshehaly M, Dowding D, Randell R. Institutional use of National Clinical Audits by healthcare providers. J Eval Clin Pract. 2020:1–8.

17. Eccles M, Grimshaw J, Walker A, Johnston M, Pitts N. Changing the behavior of healthcare professionals: the use of theory in promoting the uptake of research findings. J Clin Epidemiol. 2005;58(2):107–12.

18. Ivers N, Barnsley J, Upshur R, Tu K, Shah B, Grimshaw J, Zwarenstein M. “My approach to this job is... one person at a time”: perceived discordance between population-level quality targets and patient-centred care. Can Fam Physician. 2014;60(3):258–66.

19. Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10(1):21.

20. Roos-Blom MJ, Gude WT, De Jonge E, Spijkstra JJ, Van Der Veer SN, Peek N, Dongelmans DA, De Keizer NF. Impact of audit and feedback with action implementation toolbox on improving ICU pain management: cluster-randomised controlled trial. BMJ Qual Saf. 2019;28(12):1007–15.

21. Weiner BJ. A theory of organizational readiness for change. Implement Sci. 2009;4(1):67.

22. Burt J, Campbell J, Abel G, Aboulghate A, Ahmed F, Asprey A, Barry H, Beckwith J, Benson J, Boiko O, Bower P, et al. Improving patient experience in primary care: a multimethod programme of research on the measurement and improvement of patient experience. Programme Grants for Applied Research. 2017;5(9).

Acknowledgements

We would like to acknowledge the time, expertise and dedication of the co-production and advisory group members. We would also like to thank Professor Robbie Foy for commenting on an earlier draft of this manuscript, and the reviewers for their comments.

Funding

This report is independent research arising from a Doctoral Research Fellowship (DRF-2016-09-028) supported by the National Institute for Health Research (NIHR). The views expressed in this presentation are those of the authors and not necessarily those of the NHS, the National Institute for Health Research or the Department of Health and Social Care.

Author information

Contributions

All authors were involved in designing the study and drafting the manuscript. MS facilitated the co-production group and collected the data, under supervision from RT, NK, LA and TF. MS and TF coded the data. MS and the co-production group synthesised the data, under supervision from RT, NK, LA and TF. All authors read and approved the final manuscript.

Authors’ information

Michael Sykes, NIHR Doctoral Research Fellow, Population Health Sciences Institute, Newcastle University, Newcastle Upon Tyne, NE2 4AX, UK

Richard Thomson, Professor of Epidemiology and Public Health, Population Health Sciences Institute, Newcastle University, Newcastle Upon Tyne, NE2 4AX, UK

Niina Kolehmainen, Senior Clinical Lecturer and Honorary Consultant AHP, Population Health Sciences Institute, Newcastle University, Newcastle Upon Tyne, NE2 4AX, UK

Louise Allan, Professor of Geriatric Medicine, College of Medicine and Health, University of Exeter, St Luke’s Campus, Heavitree Road, Exeter, EX1 2LU, UK

Tracy Finch, Professor of Healthcare and Implementation Science, Department of Nursing, Midwifery and Health, Northumbria University, Newcastle upon Tyne, NE7 7XA, UK

Ethics declarations

Ethics approval and consent to participate

This study was approved by Newcastle University Faculty of Medical Sciences Ethics Committee (application 01266/12984/2017). All participants gave consent to participate. Written informed consent was sought from potential participants for interviews and one-to-one observations. For observations of groups, information was given in advance to the Chair of the meeting or senior member of the group, with a request to distribute it to all members. This information included details about the study aims, methods, risks and benefits and how participants were able to have their data excluded from the study.

Consent for publication

Consent for publication was obtained from all participants.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1

Appendix 2

Appendix 3

Interview topic guide v3

1. Could you describe your role?
2. What do you understand by the term audit and feedback? (Can prompt that often called “clinical audit”)
3. Different types of audits have been described.
   - (a) Do you recognise these types? (Show list)
   - (b) Which of these types are you involved with?
   - (c) How do they differ?
4. Do you come into contact with the national audit of dementia? What is your involvement with that audit?
5. The national audit has been described like this (Show collated diagram). Do these match your experience? With which parts of this do you get involved?
6. Explore each part (see Notes):
   - (a) When does it happen?
   - (b) Where does it happen?
   - (c) Who is involved?
   - (d) How is it done? Is it always done like that? (If appropriate, prompt about materials involved)
   - (e) How does that feel? How do other people feel about that?
   - (f) Which documents and/or potential observations/interview participants could provide more information about this part?
7. Audit is used for different reasons, why do you think it is used here? Any other reasons?
8. Some people use audit to improve care. What do you think about that?
   - (a) How much do you think it improves care?
   - (b) How does it improve care?
   - (c) What affects whether it improves care? (Use collated diagram as prompt)
9. If you could change anything about the national audit, what would you change?
10. What would happen if the hospital didn’t do the national audit? (Can prompt: Would things be different, and if so, how?)
11. Is there anything else you would like to add?

Thank you

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Sykes, M., Thomson, R., Kolehmainen, N. et al. Impetus to change: a multi-site qualitative exploration of the national audit of dementia. Implementation Sci 15, 45 (2020). https://doi.org/10.1186/s13012-020-01004-z

DOI: https://doi.org/10.1186/s13012-020-01004-z