Abstract

Guidelines from different organisations on the use of aspirin for primary prevention vary despite being based on similar evidence. Translating them into practice presents a further major challenge. The benefit–harm balance tool for aspirin developed by Puhan et al. (BMC Med 13:250, 2015) can overcome some of these difficulties and is therefore an important step towards personalised medicine. Although a good proof-of-concept, this tool has some important limitations that currently preclude its use in practice or in further research. One of the major benefits of aspirin to have become apparent in the last decade or so is its effect in preventing cancer and cancer-related deaths. However, this benefit is clear and consistent in both randomised and observational evidence only for specific cancers. Additionally, it has long lag and carry-over periods. These nuances of aspirin’s effects demand a more specific and sophisticated model, such as a time-varying model. Further refinement of this tool with respect to these aspects is merited to make it ready for evaluation in qualitative and quantitative studies with the goal of establishing clinical utility.

Please see related article: http://bmcmedicine.biomedcentral.com/articles/10.1186/s12916-015-0493-2

Background

Public health or patient management guideline recommendations are based on broad risk groups and very often take only a few dimensions into account, i.e. one or two major benefits and one or two major harms. It is therefore no surprise that the recommendations of different organisations vary despite being based on similar evidence, as they may consider different dimensions or categorise risk groups differently. Guidelines for the use of aspirin in primary prevention are an excellent example of such divergent recommendations [1–5]. Further, even if there were one universal set of recommendations, translating these recommendations into practice would still present a major challenge, not only because categorisation into broad risk groups is often too crude to apply to an individual, but also because an individual’s clinical profile often has several more dimensions to consider in addition to those that formed the basis of the applicable recommendations. Furthermore, individual preferences and perceptions often fall outside the scope of guidelines, yet these are a very important component of the ultimate informed decision, which must occur at an individual level. This underscores the need to develop tools or methods that aid in-depth analysis of the benefit–harm balance at the individual level in a way that also incorporates personal preferences. The benefit–harm balance tool for aspirin developed by Puhan et al. [6] is therefore a valuable step in our quest for personalised medicine.

Aspirin’s effects are site-specific and time-varying

This benefit–harm balance tool [6] is a good proof-of-concept but has some important limitations that need to be highlighted. An important caveat about any model is that it can only be as good as its underlying assumptions, and this is where the nuances of aspirin’s effects matter. A large body of evidence [7–12] now shows that it takes approximately 3 and 5 years for aspirin’s effects on cancer incidence and cancer-related deaths, respectively, to become apparent. There is also a carry-over benefit following cessation of aspirin use, lasting 5 years for cancer incidence and at least 10 years for cancer deaths. Puhan et al. [6] used the Gail/National Cancer Institute approach [13] for their modelling; however, given such long lag and carry-over periods, time-varying modelling would have been more appropriate.
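A time-varying model lets the relative risk attached to aspirin change with time since starting and stopping it. The minimal Python sketch below illustrates the idea with a simple step function and the lag and carry-over periods quoted above. The function names, the step-function shape, and treating the "at least 10 years" carry-over for cancer deaths as exactly 10 years are assumptions made for illustration; this is not the model of Puhan et al. [6] or a validated implementation.

```python
# Hypothetical sketch: a step-function, time-varying aspirin effect with a lag
# after starting and a carry-over after stopping. Not Puhan et al.'s model.


def effect_active(year, start, stop, lag, carry_over):
    """True if aspirin's effect applies in `year` (years since baseline).

    Assumption: the effect switches on `lag` years after aspirin is started
    and switches off `carry_over` years after it is stopped.
    """
    return start + lag <= year < stop + carry_over


def yearly_relative_risk(years, start, stop, lag, carry_over, rr):
    """Relative risk for each year: `rr` while the effect is active, 1.0 otherwise."""
    return [rr if effect_active(y, start, stop, lag, carry_over) else 1.0
            for y in years]


# Lag and carry-over periods (years) as summarised in the text; 10 years is
# used here for deaths although the text gives it as a lower bound.
INCIDENCE = {"lag": 3, "carry_over": 5}
DEATHS = {"lag": 5, "carry_over": 10}

if __name__ == "__main__":
    years = range(25)
    # Hypothetical scenario: aspirin taken from year 0 and stopped at year 10.
    incidence_years = [y for y in years if effect_active(y, 0, 10, **INCIDENCE)]
    death_years = [y for y in years if effect_active(y, 0, 10, **DEATHS)]
    print("Effect on incidence active in years:", incidence_years)
    print("Effect on deaths active in years:", death_years)
```

For aspirin taken from year 0 to year 10, the sketch applies the effect on incidence in years 3 to 14 and the effect on deaths in years 5 to 19, whereas a time-invariant model would apply a constant relative risk throughout.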

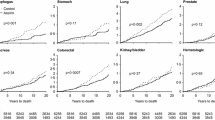

Puhan et al. [6] also modelled aspirin’s effects on 12 cancers based on data from randomised controlled trials (RCTs) reported by Algra and Rothwell [14]. Data from RCTs are most robust for pre-specified primary endpoints. Apart from the Women’s Health Study [10], none of the RCTs reported so far had cancer as their primary endpoint, and Women’s Health Study data were not included in the analyses by Algra and Rothwell [14]. Although these analyses, as well as others by Rothwell et al. [11, 12, 15–17], are robust, they should be considered in the context of data from observational studies [18]. After a thorough review of the evidence [8], we concluded that a clear and large benefit exists for colorectal, oesophageal and stomach cancer, whereas the benefit for lung, breast and prostate cancer is smaller and less clear. Many aspirin experts accept a beneficial effect on only the three gastrointestinal (GI) tract cancers, because of the biological and pharmacological plausibility of such an effect [19] as well as some uncertainty regarding aspirin’s effects on lung, breast and prostate cancer. We therefore provided sensitivity analyses with aspirin’s beneficial effect limited to the three GI cancers, as well as to colorectal cancer alone [8]. Recent analyses by the U.S. Preventive Services Task Force also take into account only the beneficial effect on colorectal cancer [20]. Therefore, it is not correct to assume an effect of aspirin on cancers other than colorectal, oesophageal, stomach, lung, breast and prostate cancer, even when the simulations and repetitions account for the statistical uncertainty of the effect on these other cancers.
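The sensitivity scenarios described above amount to restricting which cancer sites carry an effect of aspirin. The hypothetical Python sketch below expresses this: sites outside the chosen scenario are given a relative risk of 1.0 (no effect). The scenario names, data layout, function names and placeholder inputs are assumptions for illustration and do not reproduce the analyses in [6] or [8].

```python
# Hypothetical sketch: restrict aspirin's cancer benefit to selected sites.
# Any site outside the chosen scenario is assigned no effect (RR = 1.0).

SCENARIOS = {
    "six_sites": {"colorectal", "oesophageal", "stomach",
                  "lung", "breast", "prostate"},
    "three_gi": {"colorectal", "oesophageal", "stomach"},
    "colorectal_only": {"colorectal"},
}


def scenario_relative_risks(site_rr, scenario):
    """Keep the supplied relative risk only for sites included in `scenario`."""
    included = SCENARIOS[scenario]
    return {site: (rr if site in included else 1.0)
            for site, rr in site_rr.items()}


def cases_prevented(baseline_risk, site_rr, scenario):
    """Sum over sites of baseline risk x (1 - relative risk) under `scenario`."""
    rrs = scenario_relative_risks(site_rr, scenario)
    return sum(baseline_risk[site] * (1.0 - rrs[site]) for site in baseline_risk)


if __name__ == "__main__":
    # Placeholder inputs for illustration only; they are not estimates.
    baseline = {"colorectal": 0.02, "lung": 0.01, "ovary": 0.005}
    rr = {"colorectal": 0.5, "lung": 0.5, "ovary": 0.5}
    for name in SCENARIOS:
        print(name, cases_prevented(baseline, rr, name))
```

Because the effect on any site outside the scenario is set to none rather than sampled with uncertainty, sites such as the placeholder "ovary" above contribute nothing to the benefit in every scenario.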

The flexibility to adjust weights according to individual preference is a major strength of Puhan et al.’s study [6]. However, they assigned a default weight of 1.0 to GI bleeding, which is very misleading. Many end-users will simply rely on the default weights, so it is important to get these right. Their default weight was based on a 3-year survival of 45.5 % in one study [21]. GI bleeding is often an accompanying sign or sequela of a major illness, and long-term survival is largely driven by the original disease. Unlike myocardial infarction, stroke or cancer, GI bleeding rarely results in long-term morbidity on its own. Therefore, using the same long-term (5-year) survival approach to derive the weight for GI bleeding is not appropriate; 30-day mortality would have been a more appropriate basis for the default weight. We have extensively reviewed 30-day mortality in GI bleeding (any bleeding), and despite the increasing mortality risk with age, it does not exceed 10 % even in older individuals [22]. Furthermore, these mortality rates continue to fall with improving standards of care [22]. Finally, although aspirin undoubtedly increases the risk of GI bleeding, it has not been shown to significantly increase the risk of fatal GI bleeding [23, 24]. In short, a default weight of 0.1 would have been more appropriate than one of 1.0.
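To see why the default weight matters, the sketch below computes a simple weighted index in which each prevented event adds its weight and each caused event subtracts it. This is an assumed, simplified form loosely following a Gail/NCI-style weighted sum [13], not a reproduction of the index in Puhan et al. [6]; the event counts, the weight of 1.0 for myocardial infarction and colorectal cancer, and the function name are hypothetical.

```python
# Hypothetical sketch of a weighted benefit-harm index: weighted events
# prevented minus weighted events caused. Counts and all weights other than
# the two GI bleeding weights discussed in the text are illustrative only.


def net_benefit(prevented, caused, weights):
    """Weighted sum of events prevented minus weighted sum of events caused."""
    benefit = sum(weights[event] * n for event, n in prevented.items())
    harm = sum(weights[event] * n for event, n in caused.items())
    return benefit - harm


if __name__ == "__main__":
    # Placeholder counts per 10,000 people over a fixed horizon (not estimates).
    prevented = {"myocardial_infarction": 40, "colorectal_cancer": 30}
    caused = {"gi_bleeding": 60}
    base_weights = {"myocardial_infarction": 1.0, "colorectal_cancer": 1.0}

    # GI bleeding weight of 1.0 (the default criticised in the text) versus
    # 0.1 (reflecting 30-day mortality, which does not exceed 10 %).
    for gi_weight in (1.0, 0.1):
        weights = {**base_weights, "gi_bleeding": gi_weight}
        print(gi_weight, net_benefit(prevented, caused, weights))
```

With these placeholder counts, lowering the GI bleeding weight from 1.0 to 0.1 moves the index from 10 to 64 per 10,000, illustrating how strongly a default weight can shift the apparent balance.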

The authors acknowledge some of the limitations outlined above, although these should not have existed in the first place, even in a proof-of-concept study. A more thorough review of the current evidence, together with an in-depth understanding of the nuances of aspirin’s benefits and harms, would have eliminated most of these limitations, making this conceptually excellent tool ready for the next steps: qualitative and quantitative research studies to assess the clinical utility of such an approach.

Future directions and conclusions

Puhan et al. [6] have demonstrated a good proof-of-concept and the computational feasibility of such a benefit–harm balance tool. Once refined as discussed above, this tool can be subjected to further research, including research on supplementary preference-eliciting tools and presentation formats, as discussed by the authors. The clinical utility of such a refined tool will also need to be evaluated in clinical trials. While further research on such tools continues, two important areas also merit simultaneous attention. First, this tool is based on the incidence of cardiovascular, cancer and bleeding events, whereas many individuals and their clinicians are keen to know an intervention’s impact on saving lives; a similar tool based on mortality from these diseases should therefore also be developed. Second, the tool needs to be updated regularly as new reliable evidence becomes available. If the clinical utility of such a tool is demonstrated and the tool is updated regularly, it will greatly enhance our ability to deliver personalised medicine, not only by estimating the benefit–harm balance at an individual level based on a range of factors in that individual’s clinical profile, but also by taking that person’s preferences into account. This would create a vital link between public health and individualised medicine, thus enabling personalised public health.

Abbreviations

GI, Gastrointestinal; MI, Myocardial Infarction; RCTs, Randomised Controlled Trials; WHS, Women’s Health Study

References

1. Vandvik PO, Lincoff AM, Gore JM, Gutterman DD, Sonnenberg FA, Alonso-Coello P, Akl EA, Lansberg MG, Guyatt GH, Spencer FA, et al. Primary and secondary prevention of cardiovascular disease: Antithrombotic Therapy and Prevention of Thrombosis, 9th ed: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines. Chest. 2012;141(2 Suppl):e637S–68S.

2. US Preventive Services Task Force. Routine aspirin or nonsteroidal anti-inflammatory drugs for the primary prevention of colorectal cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2007;146(5):361–4.

3. US Preventive Services Task Force. Aspirin for the prevention of cardiovascular disease: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2009;150(6):396–404.

4. Perk J, De Backer G, Gohlke H, Graham I, Reiner Z, Verschuren M, Albus C, Benlian P, Boysen G, Cifkova R, et al. European Guidelines on cardiovascular disease prevention in clinical practice (version 2012). The Fifth Joint Task Force of the European Society of Cardiology and Other Societies on Cardiovascular Disease Prevention in Clinical Practice (constituted by representatives of nine societies and by invited experts). Eur Heart J. 2012;33(13):1635–701.

5. Bibbins-Domingo K, U.S. Preventive Services Task Force. Aspirin Use for the Primary Prevention of Cardiovascular Disease and Colorectal Cancer: U.S. Preventive Services Task Force Recommendation Statement. Ann Intern Med. 2016;164(12):836–45. doi:10.7326/M16-0577. Epub 2016 Apr 12.

6. Puhan MA, Yu T, Stegeman I, Varadhan R, Singh S, Boyd CM. Benefit-harm analysis and charts for individualized and preference-sensitive prevention: example of low dose aspirin for primary prevention of cardiovascular disease and cancer. BMC Med. 2015;13:250.

7. Cao Y, Nishihara R, Wu K, Wang M, Ogino S, Willett WC, Spiegelman D, Fuchs CS, Giovannucci EL, Chan AT. Population-wide Impact of Long-term Use of Aspirin and the Risk for Cancer. JAMA Oncol. 2016;2(6):762–9. doi:10.1001/jamaoncol.2015.6396.

8. Cuzick J, Thorat MA, Bosetti C, Brown PH, Burn J, Cook NR, Ford LG, Jacobs EJ, Jankowski JA, La Vecchia C, et al. Estimates of benefits and harms of prophylactic use of aspirin in the general population. Ann Oncol. 2015;26(1):47–57.

9. Thorat MA, Cuzick J. Role of aspirin in cancer prevention. Curr Oncol Rep. 2013;15(6):533–40.

10. Cook NR, Lee IM, Zhang SM, Moorthy MV, Buring JE. Alternate-day, low-dose aspirin and cancer risk: long-term observational follow-up of a randomized trial. Ann Intern Med. 2013;159(2):77–85.

11. Rothwell PM, Price JF, Fowkes FG, Zanchetti A, Roncaglioni MC, Tognoni G, Lee R, Belch JF, Wilson M, Mehta Z, et al. Short-term effects of daily aspirin on cancer incidence, mortality, and non-vascular death: analysis of the time course of risks and benefits in 51 randomised controlled trials. Lancet. 2012;379(9826):1602–12.

12. Rothwell PM, Fowkes FG, Belch JF, Ogawa H, Warlow CP, Meade TW. Effect of daily aspirin on long-term risk of death due to cancer: analysis of individual patient data from randomised trials. Lancet. 2011;377(9759):31–41.

13. Gail MH, Costantino JP, Bryant J, Croyle R, Freedman L, Helzlsouer K, Vogel V. Weighing the risks and benefits of tamoxifen treatment for preventing breast cancer. J Natl Cancer Inst. 1999;91(21):1829–46.

14. Algra AM, Rothwell PM. Effects of regular aspirin on long-term cancer incidence and metastasis: a systematic comparison of evidence from observational studies versus randomised trials. Lancet Oncol. 2012;13(5):518–27.

15. Flossmann E, Rothwell PM. Effect of aspirin on long-term risk of colorectal cancer: consistent evidence from randomised and observational studies. Lancet. 2007;369(9573):1603–13.

16. Rothwell PM, Wilson M, Elwin CE, Norrving B, Algra A, Warlow CP, Meade TW. Long-term effect of aspirin on colorectal cancer incidence and mortality: 20-year follow-up of five randomised trials. Lancet. 2010;376(9754):1741–50.

17. Rothwell PM, Wilson M, Price JF, Belch JF, Meade TW, Mehta Z. Effect of daily aspirin on risk of cancer metastasis: a study of incident cancers during randomised controlled trials. Lancet. 2012;379(9826):1591–601.

18. Bosetti C, Rosato V, Gallus S, Cuzick J, La Vecchia C. Aspirin and cancer risk: a quantitative review to 2011. Ann Oncol. 2012;23(6):1403–15.

19. Thun MJ, Jacobs EJ, Patrono C. The role of aspirin in cancer prevention. Nat Rev Clin Oncol. 2012;9(5):259–67.

20. Chubak J, Whitlock EP, Williams SB, Kamineni A, Burda BU, Buist DS, Anderson ML. Aspirin for the Prevention of Cancer Incidence and Mortality: Systematic Evidence Reviews for the U.S. Preventive Services Task Force. Ann Intern Med. 2016;164(12):814–25. doi:10.7326/M15-2117.

21. Roberts SE, Button LA, Williams JG. Prognosis following upper gastrointestinal bleeding. PLoS One. 2012;7(12):e49507.

22. Thorat MA, Cuzick J. Prophylactic use of aspirin: systematic review of harms and approaches to mitigation in the general population. Eur J Epidemiol. 2015;30(1):5–18.

23. Thorat MA, Cuzick J. Reply to the letter to the editor ‘the harms of low-dose aspirin prophylaxis are overstated’ by P. Elwood and G. Morgan. Ann Oncol. 2015;26(2):442–3.

24. Elwood P, Morgan G. The harms of low-dose aspirin prophylaxis are overstated. Ann Oncol. 2015;26(2):441–2.

Author’s information

MT is a surgical oncologist and a researcher at the Centre for Cancer Prevention, Wolfson Institute of Preventive Medicine, Queen Mary University of London. Therapeutic cancer prevention is one of the main areas of his research; this includes work on aspirin and other drugs potentially suitable for repurposing as cancer prevention agents. He is also involved in an advisory capacity in aspirin trials in cancer. He is a member of the executive board of the International Cancer Prevention Society (ICAPS, formerly known as ISCaP), a member of the steering committee of Early Cancer Detection Europe (ECaDE), and a member of the UK Therapeutic Cancer Prevention Network (UK-TCPN). The views expressed here are personal and do not represent the official position of his institution or of the organisations of which he is a member.

Competing interests

The author declares that he has no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Thorat, M.A. Individualised benefit–harm balance of aspirin as primary prevention measure – a good proof-of-concept, but could have been better…. BMC Med 14, 101 (2016). https://doi.org/10.1186/s12916-016-0648-9

DOI: https://doi.org/10.1186/s12916-016-0648-9