Abstract

Background

In healthcare, a gap exists between what is known from research and what is practiced. Understanding this gap depends upon our ability to robustly measure research utilization.

Objectives

The objectives of this systematic review were: to identify self-report measures of research utilization used in healthcare, and to assess the psychometric properties (acceptability, reliability, and validity) of these measures.

Methods

We conducted a systematic review of literature reporting use or development of self-report research utilization measures. Our search included: multiple databases, ancestry searches, and a hand search. Acceptability was assessed by examining time to complete the measure and missing data rates. Our approach to reliability and validity assessment followed that outlined in the Standards for Educational and Psychological Testing.

Results

Of 42,770 titles screened, 97 original studies (108 articles) were included in this review. The 97 studies reported on the use or development of 60 unique self-report research utilization measures. Seven of the measures were assessed in more than one study. Study samples consisted of healthcare providers (92 studies) and healthcare decision makers (5 studies). No studies reported data on acceptability of the measures. Reliability was reported in 32 (33%) of the studies, representing 13 of the 60 measures. Internal consistency (Cronbach's Alpha) reliability was reported in 31 studies; values exceeded 0.70 in 29 studies. Test-retest reliability was reported in 3 studies with Pearson's r coefficients > 0.80. No validity information was reported for 12 of the 60 measures. The remaining 48 measures were classified into a three-level validity hierarchy according to the number of validity sources reported in 50% or more of the studies using the measure. Level one measures (n = 6) reported evidence from any three (out of four possible) Standards validity sources (which, in the case of single item measures, was all applicable validity sources). Level two measures (n = 16) had evidence from any two validity sources, and level three measures (n = 26) from only one validity source.

Conclusions

This review reveals significant underdevelopment in the measurement of research utilization. Substantial methodological advances with respect to construct clarity, use of research utilization and related theory, use of measurement theory, and psychometric assessment are required. Also needed are improved reporting practices and the adoption of a more contemporary view of validity (i.e., the Standards) in future research utilization measurement studies.

Background

Clinical and health services research produces vast amounts of new research every year. Despite increased access by healthcare providers and decision-makers to this knowledge, uptake into practice is slow [1, 2] and has resulted in a 'research-practice gap.'

Measuring research utilization

Recognition of, and a desire to narrow, the research-practice gap, has led to the accumulation of a considerable body of knowledge on research utilization and related terms, such as knowledge translation, knowledge utilization, innovation adoption, innovation diffusion, and research implementation. Despite gains in the understanding of research utilization theoretically [3, 4], a large and rapidly expanding literature addressing the individual factors associated with research utilization [5, 6], and the implementation of clinical practice guidelines in various health disciplines [7, 8], little is known about how to robustly measure research utilization.

We located three theoretical papers explicitly addressing the measurement of knowledge utilization (of which research utilization is a component) [9–11], and one integrative review that examined the psychometric properties of self-report research utilization measures used in professions allied to medicine [12]. Each of these papers stressed the need for conceptual clarity and pluralism in measurement. Weiss [11] also argued for specific foci (i.e., focus on specific studies, people, issues, or organizations) when measuring knowledge utilization. Shortly thereafter, Dunn [9] proposed a linear four-step process for measuring knowledge utilization: conceptualization (what is knowledge utilization and how is it defined and classified); methods (given a particular conceptualization, what methods are available to observe knowledge use); measures (what scales are available to measure knowledge use); and reliability and validity. Dunn specifically urged that greater emphasis be placed on step four (reliability and validity). A decade later, Rich [10] provided a comprehensive overview of issues influencing knowledge utilization across many disciplines. He emphasized the complexity of the measurement process, suggesting that knowledge utilization may not always be tied to a specific action, and that it may exist as more of an omnibus concept.

The only review of research utilization measures to date was conducted in 2003 by Estabrooks et al.[12]. The review was limited to self-report research utilization measures used in professions allied to medicine and to the specific data on validity that was extracted. That is, only data that was (by the original authors) explicitly interpreted as validity in the study reports was extracted as 'supporting validity evidence'. A total of 43 articles from three online databases (CINAHL, Medline, and Pubmed) comprised the final sample of articles included in the review. Two commonly used multi-item self-report measures (published in 16 papers) were identified--the Nurses Practice Questionnaire and the Research Utilization Questionnaire. An additional 16 published papers were identified that used single-item self-report questions to measure research utilization. Several problems with these research utilization measures were identified: lack of construct clarity of research utilization, lack of use of research utilization theories, lack of use of measurement theory, and finally, lack of standard psychometric assessment.

The four papers [9–12] discussed above point to a persistent and unresolved problem--an inability to robustly measure research utilization. This presents both an important and a practical challenge to researchers and decision-makers who rely on such measures to evaluate the uptake and effectiveness of research findings to improve patient and organizational outcomes. There are multiple reasons why we believe the measurement of research utilization is important. The most important reason relates to designing and evaluating the effectiveness of interventions to improve patient outcomes. Research utilization is commonly assumed to have a positive impact on patient outcomes by assisting with eliminating ineffective and potentially harmful practices, and implementing more effective (research-based) practices. However, we can only determine if patient outcomes are sensitive to varying levels of research utilization if we can first measure research utilization in a reliable and valid manner. If patient outcomes are sensitive to the use of research and we do not measure it, we, in essence, do the field more harm than good by ignoring a 'black box' of causal mechanisms that can influence research utilization. The causal mechanisms within this black box can, and should, be used to inform the design of interventions that aim to improve patient outcomes by increasing research utilization by care providers.

Study purpose and objectives

The study reported in this paper is a systematic review of the psychometric properties of self-report measures of research utilization used in healthcare. Specific objectives of this study were to: identify self-report measures of research utilization used in healthcare (i.e., used to measure research utilization by healthcare providers, healthcare decision makers, and in healthcare organizations); and assess the psychometric properties of these measures.

Methods

Study selection (inclusion and exclusion) criteria

Studies were included if they met the following inclusion criteria: reported on the development or use of a self-report measure of research utilization; and the study population comprised one or more of the following groups--healthcare providers, healthcare decision makers, or healthcare organizations. We defined research utilization as the use of research-based (empirically derived) information. This information could be reported in a primary research article, review/synthesis report, or a protocol. Where the study involved the use of a protocol, we required the research basis for the protocol to be apparent in the article. We excluded articles that reported on adherence to clinical practice guidelines, the rationale being that clinical practice guidelines can be based on non-research evidence (e.g., expert opinion). We also excluded articles reporting on the use of one specific research-based practice if the overall purpose of the study was not to examine research utilization.

Search strategy for identification of studies

We searched 12 bibliographic databases; details of the search strategy are located in Additional File 1. We also hand searched the journal Implementation Science (a specialized journal in the research utilization field) and assessed the reference lists of all retrieved articles. The final set of included articles was restricted to those published in the English, Danish, Swedish, and Norwegian languages (the official languages of the research team). There were no restrictions based on when the study was undertaken or publication status.

Selection of Studies

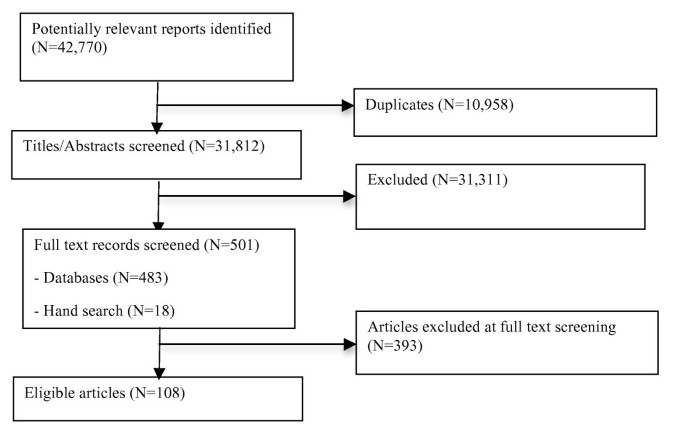

Two team members (JES and HMO) independently screened all titles and abstracts (n = 42,770). Full text copies were retrieved for 501 titles, which represented all titles identified as having potential relevance to our objectives or where there was insufficient information to make a decision as to relevance. A total of 108 articles (representing 97 original studies) comprised the final sample. Disagreements were resolved by consensus. When consensus could not be reached, a third senior member of the review team (CAE, LW) acted as an arbitrator and made the final decision (n = 9 articles). Figure 1 summarizes the results of the screening/selection process. A list of retrieved articles that were excluded can be found in Additional File 2.

Data Extraction

Two reviewers (JES and HMO) performed data extraction: one reviewer extracted the data, which was then checked for accuracy by a second reviewer. We extracted data on: year of publication, study design, setting, sampling, subject characteristics, methods, the measure of research utilization used, substantive theory, measurement theory, responsiveness (the extent to which the measure can assess change over time), reliability (information on variances and standard deviations of measurement errors, item response theory test information functions, and reliability coefficients were extracted where they existed), reported statements of traditional validity (content validity, criterion validity, construct validity), and study findings reflective of the four sources of validity evidence (content, response processes, internal structure, and relations to other variables) outlined in the Standards for Educational and Psychological Testing (the Standards) [13]. Content evidence refers to the extent to which the items in a self-report measure adequately represent the content domain of the concept or construct of interest. Response processes evidence refers to how respondents interpret, process, and elaborate upon item content and whether this behaviour is in accordance with the concept or construct being measured. Internal structure evidence examines the relationships between the items on a self-report measure to evaluate its dimensionality. Relations to other variables evidence provides the fourth source of validity evidence. External variables may include measures of criteria that the concept or construct of interest is expected to predict, as well as relationships to other scales hypothesized to measure the same concepts or constructs, and variables measuring related or different concepts or constructs [13]. In the Standards, validity is a unitary construct in which multiple evidence sources contribute to construct validity. A higher number of validity sources indicates stronger construct validity. An overview of the Standards approach to reliability and validity assessment is in Additional File 3. All disagreements in data extraction were resolved by consensus.

There are no universal criteria to grade the quality of self-report measures. Therefore, in line with other recent measurement reviews [14, 15], we did not use restrictive criteria to rate the quality of each study. Instead, we focused on performing a comprehensive assessment of the psychometric properties of the scores obtained using the research utilization measures reported in each study. In performing this assessment, we adhered to the Standards, considered best practice in the field of psychometrics [16]. Accordingly, we extracted data on all study results that could be grouped according to the Standards' critical reliability information and four validity evidence sources. To assess relations to other variables, we a priori (based on commonly used research utilization theories and systematic reviews) identified established relationships between research utilization and other (external) variables (See Additional File 3). The external variables included: individual characteristics (e.g., attitude towards research use), contextual characteristics (e.g., role), organizational characteristics (e.g., hospital size), and interventions (e.g., use of reminders). All relationships between research use and external variables in the final set of included articles were then interpreted as supporting or refuting validity evidence. The relationship was coded as 'supporting validity evidence' if it was in the same direction and had the significance predicted, and as 'refuting validity evidence' if it was in the opposite direction or did not have the significance predicted.
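The coding rule described above can be sketched in a few lines of Python. This is an illustration only, not the extraction tool used in the review; the function name, argument names, and the "+"/"-" encoding of direction are assumptions introduced here.

```python
def code_validity_evidence(predicted_direction, observed_direction,
                           predicted_significant, observed_significant):
    """Code one reported relationship between research utilization and an
    external variable as supporting or refuting validity evidence.

    Directions are "+" or "-"; significance flags are booleans.
    All names are hypothetical and used only for illustration.
    """
    same_direction = predicted_direction == observed_direction
    same_significance = predicted_significant == observed_significant
    # Supporting: same direction AND the significance that was predicted.
    if same_direction and same_significance:
        return "supporting"
    # Refuting: opposite direction OR significance not as predicted.
    return "refuting"


# Example: a positive, significant relationship was predicted and observed.
print(code_validity_evidence("+", "+", True, True))
```

For instance, a predicted positive and significant relationship with attitude towards research use that is observed as positive but non-significant would be coded as refuting under this rule.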

Data Synthesis

The findings from the review are presented in narrative form. To synthesize the large volume of data extracted on validity, we developed a three-level hierarchy of self-report research utilization measures based on the number of validity sources reported in 50% or more of the studies for each measure. In the Standards, no one source of validity evidence is considered always superior to the other sources. Therefore, in our hierarchy, level one, two, and three measures provided evidence from any three, two, and one validity sources respectively. In the case of single-item measures, only three validity sources are applicable; internal structure validity evidence is not applicable as it assesses relationships between items. Therefore, a single-item measure within level one has evidence from all applicable validity sources.
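One way to read the hierarchy rule is sketched below in Python. It assumes that a validity source counts towards a measure when it is reported in at least half of the studies using that measure, and that three or more such sources place a measure in level one; both readings, along with the function and variable names, are assumptions made for illustration rather than the review's actual procedure.

```python
from collections import Counter

# The four Standards validity evidence sources named in the text.
SOURCES = ("content", "response processes", "internal structure",
           "relations to other variables")


def validity_level(studies):
    """Return hierarchy level 1, 2, or 3 for one measure, or None.

    `studies` is a list with one set per study, each set holding the
    validity sources for which that study reported evidence.
    """
    if not studies:
        return None
    counts = Counter(src for reported in studies for src in set(reported))
    # Count sources reported in 50% or more of the studies of this measure.
    n_sources = sum(1 for src in SOURCES if counts[src] * 2 >= len(studies))
    if n_sources == 0:
        return None  # no validity evidence meeting the threshold
    if n_sources >= 3:
        return 1
    return 2 if n_sources == 2 else 3


# A measure assessed in two studies: content evidence in both,
# internal structure evidence in one of the two (exactly 50%).
print(validity_level([{"content"}, {"content", "internal structure"}]))
```

The sketch does not handle the single-item case separately; for single-item measures, internal structure evidence would simply never appear in the input sets.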

Results

Objective 1: Identification and characteristics of self-report research utilization measures used in healthcare

In total, 60 unique self-report research utilization measures were identified. We grouped them into 10 classes as follows:

1. Nurses Practice Questionnaire (n = 1 Measure)
2. Research Utilization Survey (n = 1 Measure)
3. Edmonton Research Orientation Survey (n = 1 Measure)
4. Knott and Wildavsky Standards (n = 1 Measure)
5. Other Specific Practices Indices (n = 4 Measures) (See Additional File 4)
6. Other General Research Utilization Indices (n = 10 Measures) (See Additional File 4)
7. Past, Present, Future Use (n = 1 Measure)
8. Parahoo's Measure (n = 1 Measure)
9. Estabrooks' Kinds of Research Utilization (n = 1 Measure)
10. Other Single-Item Measures (n = 39 Measures)

Table 1 provides a description of each class of measures. Classes one through six contain multiple-item measures, while classes seven through ten contain single-item measures; similar proportions of articles reported multi- and single-item measures (n = 51 and n = 59 respectively, two articles reported both multi- and single-item measures). Only seven measures were assessed in multiple studies: Nurses Practice Questionnaire; Research Utilization Survey; Edmonton Research Orientation Survey; a Specific Practice Index [17, 18]; Past, Present, Future Use; Parahoo's Measure; and Estabrooks' Kinds of Research Utilization. All study reports claimed to measure research utilization; however, 13 of the 60 measures identified were proxy measures of research utilization. That is, they measure variables related to using research (e.g., reading research articles) but not research utilization directly. The 13 proxy measures are: Nurses Practice Questionnaire, Research Utilization Questionnaire, Edmonton Research Orientation Survey, and the ten Other General Research Utilization Indices.

The majority (n = 54) of measures were assessed with healthcare providers. Professional nurses comprised the sample in 56 studies (58%), followed by allied healthcare professionals (n = 25 studies, 26%), physicians (n = 7 studies, 7%), and multiple clinical staff groups (n = 5 studies, 5%). A small proportion of studies (n = 5 studies, 5%) measured research utilization by healthcare decision makers. The decision makers, in each study, were members of senior management with direct responsibility for making decisions for a healthcare organization and included: medical officers and program directors [19]; managers in ministries and regional health authorities [20]; senior administrators [21]; hospital managers [22]; and executive directors [23]. A different self-report measure was used in each of these five studies. The unit/organization was the unit of analysis in 6 of the 97 (6%) included studies [22–27]; a unit-level score for research utilization was calculated by aggregating the mean scores of individual care providers.

Most studies were conducted in North America (United States: n = 43, 44% and Canada: n = 22, 23%), followed by Europe (n = 22, 23%). Other geographic areas represented included: Australia (n = 5, 5%), Iran (n = 1, 1%), Africa (n = 2, 2%), and Taiwan (n = 2, 2%). With respect to date of publication, the first report included in this review was published in 1976 [28]. The majority of reports (n = 90, 83%) were published within the last 13 years (See Figure 2).

Objective 2: Psychometric assessment of the self-report research utilization measures

Our psychometric assessment involved three components: acceptability, reliability, and validity.

Acceptability

Acceptability in terms of time required to complete the research utilization measures and missing data (specific to the research utilization items) was not reported.

Reliability

Reliability was reported in 32 (33%) of the studies (See Table 2 and Additional File 5). Internal consistency (Cronbach's Alpha) was the most commonly reported reliability statistic--it was reported for 13 of the 18 multi-item measures (n = 65, 67% of studies). Where reliability (Cronbach's Alpha) was reported, it almost always (n = 29 of 31 studies, 94%) exceeded the accepted standard (> 0.70) for scales intended to compare groups, as recommended by Nunnally and Bernstein [29]. The two exceptions were assessments of the Nurses Practice Questionnaire [30–32]. This tendency to only report reliability coefficients that exceed the accepted standard may potentially reflect a reporting bias.
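For readers less familiar with the statistic, Cronbach's Alpha for a k-item scale is computed from the individual item variances and the variance of the total score. The following is a minimal sketch in Python using only the standard library; it is not the procedure used in any included study, and the function name and row-per-respondent data layout are assumptions for illustration.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's Alpha for a multi-item scale.

    `item_scores` holds one row per respondent, each row a list of k item
    scores. Sample variances (n - 1 denominator) are used throughout.
    """
    k = len(item_scores[0])
    if k < 2 or len(item_scores) < 2:
        raise ValueError("need at least two items and two respondents")
    columns = list(zip(*item_scores))            # one tuple per item
    sum_item_var = sum(variance(col) for col in columns)
    total_var = variance([sum(row) for row in item_scores])
    # alpha = k / (k - 1) * (1 - sum of item variances / total variance)
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


# Three respondents, two items (illustrative data, not from the review).
alpha = cronbach_alpha([[1, 2], [2, 1], [3, 3]])
print(round(alpha, 2), alpha > 0.70)
```

The comparison against 0.70 mirrors the Nunnally and Bernstein threshold cited above. The sketch raises a division error if every respondent has the same total score, since the total variance is then zero.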

Stability, or test-retest, reliability was reported for only three (3%) of the studies: two studies assessing the Nurses Practice Questionnaire [33–35], and one study assessing Stiefel's Research Use Index [36]. All three studies reported Pearson r coefficients greater than 0.80 using one-week intervals (Table 2). One study also assessed inter-rater reliability. Pain et al. [37] had trained research staff and study respondents rate the respondents' use of research on a 7-point scale. Inter-rater reliability among the interviewers was acceptable, with pairwise correlations ranging from 0.80 to 0.91 (Table 2). No studies reported other critical reliability information consistent with the Standards, such as variances or standard deviations of measurement errors, item response theory test information functions, or parallel forms coefficients.
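A test-retest coefficient of the kind reported above is a Pearson correlation between scores from two administrations of the same measure to the same respondents. The sketch below shows the calculation in plain Python; the function name and the sample scores are hypothetical and are not data from the included studies.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between two equally long lists of scores,
    e.g. time-1 and time-2 scores from the same respondents."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two score lists of equal length (>= 2)")
    n = len(first)
    mean_1 = sum(first) / n
    mean_2 = sum(second) / n
    # Sums of cross-products and squared deviations from the means.
    s12 = sum((a - mean_1) * (b - mean_2) for a, b in zip(first, second))
    s11 = sum((a - mean_1) ** 2 for a in first)
    s22 = sum((b - mean_2) ** 2 for b in second)
    return s12 / sqrt(s11 * s22)


# Hypothetical scores one week apart for five respondents.
time_1 = [3, 5, 4, 6, 2]
time_2 = [3, 4, 4, 6, 3]
print(pearson_r(time_1, time_2) > 0.80)
```

The same function applies to the pairwise inter-rater correlations mentioned above, with each list holding one rater's scores.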

Validity

No single research utilization measure had supporting validity evidence from all four evidence sources outlined in the Standards. For 12 measures [38–49], each in the 'other single-item' class, there were no reported findings that could be classified as validity evidence. The remaining 48 measures were classified as level one (n = 6), level two (n = 16), or level three (n = 26) measures, according to whether the average number of validity sources reported in 50% or more of the studies describing an assessment of the measure was three, two, or one, respectively. Level one measures displayed the highest number of validity sources and thus, the strongest construct validity. A summary of the hierarchy is presented in Tables 3, 4, and 5. More detailed validity data is located in Additional File 6.

Measures reporting three sources of validity evidence (level one)

Six measures were grouped as level one: Specific Practices Indices (n = 1), General Research Utilization Indices (n = 3), and Other-Single Items (n = 2) (Table 3). Each measure was assessed in a single study. Five [24, 50–52] of the six measures displayed content, response processes, and relations to other variables validity evidence, while the assessment of one measure [36] provided internal structure validity evidence. A detailed summary of the level one measures is located in Table 6.

Measures reporting two sources of validity evidence (level two)

Sixteen measures were grouped as level two: Nurses Practice Questionnaire (n = 1); Knott and Wildavsky Standards (n = 1); General Research Utilization Indices (n = 4); Specific Practices Indices (n = 2); Estabrooks' Kinds of Research Utilization (n = 1); Past, Present, Future Use (n = 1); and Other Single-Items (n = 6) (Table 4). Most assessments occurred with nurses in hospitals. No single validity source was reported for all level two measures. For the 16 measures in level two, the most commonly reported evidence source was relations to other variables (reported for 12 [75%] of the measures), followed by response processes (n = 7 [44%] of the measures), content (n = 6 [38%] of the measures), and lastly, internal structure (n = 1 [6%] of the measures). Four of the measures were assessed in multiple studies: Nurses Practice Questionnaire, a Specific Practices Index [17, 18], Parahoo's Measure, and Estabrooks' Kinds of Research Utilization.

Measures reporting one source of validity evidence (level three)

The majority (n = 26) of research utilization measures identified fell into level three: Champion and Leach's Research Utilization Survey (n = 1); Edmonton Research Orientation Survey (n = 1); General Research Utilization Indices (n = 3); Specific Practices Indices (n = 1); Past, Present, Future Use (n = 1); and, Other Single-Item Measures (n = 19) (Table 5). The majority of level three measures are single-items (n = 20) and have been assessed in a single study (n = 23). Similar to level two, there was no single source of validity evidence common across all of the level three measures. The most commonly reported validity source was content (reported for 12 [46%] of the measures), followed by response processes (n = 10, 38%), relations to other variables (n = 10, 38%), and lastly, internal structure evidence (n = 1, 4%). Three level three measures were assessed in multiple studies: the Research Utilization Questionnaire; Past, Present, Future Use items; and the Edmonton Research Orientation Survey.

Additional properties

As part of our validity assessment, we paid special attention to how each measure 'functioned'. That is, 'were the measures behaving as they should?' All six level one measures and the majority of level two measures (n = 12 of 16) displayed 'relations to other (external) variables' evidence, indicating that the measures are functioning as the literature hypothesizes a research utilization measure should function. Fewer measures in level three (n = 10 of 26) displayed optimal functioning (Table 5 and Additional File 5). We also looked for evidence of responsiveness of the measures (the extent to which the measure captures change over time); no evidence was reported.

Discussion

Our discussion is organized around three areas: the state of the science of research utilization measurement, construct validity, and our proposed hierarchy of measures.

State of the science

In 2003, Estabrooks et al.[12] completed a review of self-report research utilization measures. By significantly extending the search criteria of that review, we identified 42 additional self-report research utilization measures, a substantial increase in the number of measures available. While, on the surface, this gives the impression of an optimistic picture of research utilization measurement, detailed inspection of the 108 articles included in our review revealed several limitations to these measures. These limitations seriously constrain our ability to validly measure research utilization. The limitations center on ambiguity between different measures and between studies using the same measure, and methodological problems with the design and evaluation of the measures.

Ambiguity in self-report research utilization measures

There is ambiguity with respect to the naming of self-report research utilization measures. For example, similar measures have different names. Parahoo's Measure [53] and Pettengil's single item [54], for example, both ask participants one question--whether they have used research findings in their practice in the past two years or three years, respectively. Conversely, other measures that ask substantially different questions are similarly named; for example, Champion and Leach [55], Linde [56], and Tsai [57, 58] all describe a Research Utilization Questionnaire. Further ambiguity was seen in the articles that described the modification of a pre-existing research utilization measure. In most cases, despite making significant modifications to the measure, the authors retained the original measure's name and, thus, masked the need for additional validity testing. The Nurses Practice Questionnaire is an example of this. Brett [33] originally developed the Nurses Practice Questionnaire, which consisted of 14 research-based practices, to assess research utilization by hospital nurses. The Nurses Practice Questionnaire was subsequently modified (the number of and actual practices assessed, as well as the items that follow each of the practices) and used in eight additional studies [30–32, 35, 59–63], but each study retained the Nurses Practice Questionnaire name.

Methodological problems

In the earlier research utilization measurement review, Estabrooks et al. [12] identified four core methodological problems: lack of construct clarity, lack of use of research utilization theory, lack of use of measurement theory, and lack of psychometric assessment. In our review, we found that, despite an additional 10 years of research, 42 new measures, and 65 new reports of self-report research utilization measures, these problems and others persist.

Lack of construct clarity

Research utilization has been, and is likely to remain, a complex and contested construct. Issues around clarity of research utilization measurement stem from four areas: a lack of definitional precision of research utilization, confusion around the formal structure of research utilization, lack of substantive theory to develop and evaluate research utilization measures, and confusion between factors associated with research utilization and the use of research per se.

Lack of definitional precision with respect to research utilization is well documented. In 1991, knowledge utilization scholar Thomas Backer [64] declared lack of definitional precision as part of a serious challenge of fragmentation that was facing researchers in the knowledge (utilization) field. Since then, there have been substantial efforts to understand what does and does not make research utilization happen. However, the issue of definitional precision continues to be largely ignored. In our review, definitions of research utilization were infrequently reported in the articles (n = 36 studies, 37%) [3, 20, 23, 30, 32, 36, 37, 40, 51, 53, 57, 63, 65–90] and even less frequently incorporated into the administered measures (n = 8 studies, 8%) [3, 67–70, 74, 80, 86, 88]. Where definitions of research utilization were offered, they varied significantly between studies (even studies of the same measure) with one exception: Estabrooks' Kinds of Research Utilization. In this latter measure, the definitions offered were consistent in both the study reports and the administered measure.

A second reason for the lack of clarity in research utilization measurement is confusion around the formal structure of research utilization. The literature is characterized by multiple conceptualizations of research utilization. These conceptualizations influence how we define research utilization and, consequently, how we measure the construct and interpret the scores obtained from such measurement. Two prevailing conceptualizations dominating the field are research utilization as process (i.e., consists of a series of stages/steps) and research utilization as variable or discrete event (a 'variance' approach). Despite debate in the literature with respect to these two conceptualizations, this review revealed that the vast majority of measures that quantify research utilization do so using a 'variable' approach. Only two measures were identified that assess research utilization using a 'process' conceptualization: Nurses Practice Questionnaire [33] (which is based on Rogers' Innovation Decision Process Theory [91, 92]) and Knott and Wildavsky's Standards measure (developed by Belkhodja et al. and based on Knott and Wildavsky's Standards of Research Use model [93]). Some scholars also prescribe research utilization as typological in addition to being a variable or a process. For example, Stetler [88] and Estabrooks [3, 26, 66–70, 74, 80, 86] both have single items that measure multiple kinds of research utilization, with each kind individually conceptualized as a variable. Grimshaw et al.[8], in a systematic review of guideline dissemination and implementation strategies, reported a similar finding with respect to limited construct clarity in the measurement of guideline adherence in healthcare professionals. Measurement of intervention uptake, they argued, is problematic because measures are mostly around the 'process' of uptake rather than on the 'outcomes' of uptake. 
While both reviews point to lack of construct clarity with respect to process versus variable/outcome measures, they report converse findings with respect to the dominant conceptualization in existing measures. This finding suggests a comprehensive review targeting the psychometric properties of self-report measures used in guideline adherence is also needed. While each conceptualization (process, variable, typological) of research utilization is valid, there is, to date, no consensus regarding which one is best or the most valid.

A third reason for the lack of clarity in research utilization measurement is limited use of substantive theory in the development of research utilization measures. There are numerous theories, frameworks, and models of research utilization and of related constructs, from the fields of nursing (e.g., [94–96]), organizational behaviour (e.g., [97–99]), and the social sciences (e.g., [100]). However, only 1 of the 60 measures identified in this review explicitly reported using research utilization theory in its development. The Nurses Practice Questionnaire [33] was developed based on Rogers' Innovation-Decision Process theory (one component of Rogers' larger Diffusion of Innovations theory [91]). The Innovation-Decision Process theory describes five stages to the adoption of an innovation (research): awareness, persuasion, decision, implementation, and confirmation. A similar finding regarding limited use of substantive theory was also reported by Grimshaw et al. [8] in their review of guideline dissemination and implementation strategies. This limited use of theory in the development and testing of self-report measures may therefore reflect a more general weakness in the state of the science in the research utilization and related (e.g., knowledge translation) fields, one that needs to be addressed.

A fourth and final reason that we identified for the lack of clarity in research utilization measurement is confusion between factors associated with research utilization and the use of research per se. The Nurses Practice Questionnaire [33] and all 10 Other General Research Utilization Indices ([24, 36, 50, 73, 84, 101–105]) claim to directly measure research utilization. However, their items, while compatible with a process view of research utilization, do not directly measure research utilization. For example, 'reading research' is an individual factor that fits into the awareness stage of Rogers' Innovation-Decision Process theory. The Nurses Practice Questionnaire uses this item to create an overall 'adoption' score, which is interpreted as 'research use', but it is not 'use'. A majority of the General Research Utilization Indices also include reading research as an item. In these measures, such individual factors are treated as proxies for research utilization. We caution researchers that while many individual factors like 'reading research' may be desirable qualities for making research utilization happen, they are not research utilization. Therefore, when selecting a research utilization measure to use, the aim of the investigation is paramount; if the aim is to examine research utilization as an event, then measures that incorporate proxies should be avoided.

Lack of measurement theory

Foundational to the development of any measure is measurement theory. The two most commonly used measurement theories are classical test score theory and modern measurement (or item response) theory. Classical test score theory proposes that an individual's observed score on a construct is the additive composite of their true score and random error. This theory forms the basis for traditional reliability theory (Cronbach's alpha) [106, 107]. Item response theory is a model-based theory that relates the probability of an individual's response to an item to an underlying trait. It proposes that as an individual's level of a trait (research utilization) increases, the probability of a correct (or in the case of research utilization, a more positive) response also increases [108, 109].
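As a compact restatement of the two theories described above, the following equations may be helpful. The notation is our own and is offered only as an illustration: X is an observed score, T the true score, E random error (assumed uncorrelated with T), k the number of items, and the item response function shown is the common two-parameter logistic form for a dichotomous item, which is one of several possible models and was not used in any of the reviewed studies.

```latex
% Classical test score theory: observed score = true score + random error
X = T + E, \qquad
\rho_{XX'} = \frac{\sigma_T^2}{\sigma_X^2} = \frac{\sigma_T^2}{\sigma_T^2 + \sigma_E^2}

% Cronbach's alpha for a k-item scale (\sigma_i^2: variance of item i; \sigma_X^2: variance of the total score)
\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k}\sigma_i^2}{\sigma_X^2}\right)

% Item response theory (two-parameter logistic form, shown as an assumption):
% probability that person j endorses item i, given trait level \theta_j
P(U_{ij} = 1 \mid \theta_j) = \frac{1}{1 + \exp\left[-a_i(\theta_j - b_i)\right]}, \qquad a_i > 0
```

With a positive discrimination parameter a_i, the probability of endorsement rises as the trait level increases, which is the monotonic relationship described above.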

Similar to the previous review by Estabrooks et al. [12], none of the reports in our review explicitly stated that consideration of any kind was given to measurement theory in either the development or assessment of the respective measures. However, in our review, for 14 (23%) of the measures, there was reliability evidence consistent with the adoption of a classical test score theory approach. For example, Cronbach's alpha coefficients were reported on 13 (22%) measures (Table 2), and principal components (factor) analysis and item total correlations were reported on 2 (3%) measures (Tables 3 and 4).
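To make the classical test score statistics mentioned above concrete, the following is a minimal sketch of how Cronbach's alpha and corrected item total correlations could be computed from a respondent-by-item score matrix. The function names and the example scores are hypothetical and are not taken from any of the reviewed studies; sample (n - 1) variances are assumed.

```python
from statistics import mean, variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    items = list(zip(*scores))                      # one tuple of scores per item
    item_variances = [variance(col) for col in items]
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def corrected_item_total(scores):
    """Correlation of each item with the sum of the remaining items."""
    items = list(zip(*scores))
    totals = [sum(row) for row in scores]
    return [
        pearson_r(col, [t - v for t, v in zip(totals, col)])
        for col in items
    ]


# Hypothetical data: five respondents answering three Likert-type items.
example_scores = [
    [1, 2, 1],
    [2, 2, 3],
    [3, 4, 3],
    [4, 4, 5],
    [5, 5, 5],
]

alpha = cronbach_alpha(example_scores)
item_total = corrected_item_total(example_scores)
print(f"alpha = {alpha:.2f}, meets 0.70 threshold: {alpha > 0.70}")
print("corrected item-total correlations:", [round(r, 2) for r in item_total])
```

The 0.70 comparison mirrors the threshold against which the internal consistency values in this review were summarized; it is a convention rather than a property of the statistic.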

Lack of psychometric assessment

In the previous review, Estabrooks et al. [12] concluded, 'All of the current studies lack significant psychometric assessment of used instruments.' They further stated that over half of the studies in their review did not mention validity, and that only two measures displayed construct validity. This latter finding, we argue, may be attributed to the adoption of a traditional conceptualization of validity where only evidence labeled as validity by the original study authors was considered. In our review, a more positive picture was displayed, with only 12 (20%) of the self-report research utilization measures identified showing no evidence of construct validity. We attribute this, in part, to our implementation of the Standards as a framework for validity. Using this framework, we scrutinized all results (not just those labeled as validity), in terms of whether or not they added to overall construct validity.

Additional limitations to the field

Several additional limitations in research utilization measurement were also noted as a result of this review. They include: limited reporting of data reflective of reliability beyond standard internal consistency (Cronbach's Alpha) coefficients; limited reporting of study findings reflective of validity; limited assessments of the same measure in multiple (> 1) studies; lack of assessment of acceptability and responsiveness; overreliance on the assessment made in the index (original) study of a measure; and failure to re-establish validity when modifications are made and/or the measure is assessed in a new population or context.

Construct validity (the standards)

Traditionally, validity has been conceptualized according to three distinct types: content, criterion, and construct. While this way of thinking about validity has been useful, it has also caused problems. For example, it has led to compartmentalized thinking about validity, making it 'easier' to overlook the fact that construct validity is really the whole of validity theory. It has also led to the incorrect view of validity as a property of a measure rather than of the scores (and resulting interpretations) obtained with the measure. A more contemporary conceptualization of validity (seen in the Standards) was taken in this review. Using this approach, validity was conceptualized as a unitary concept with multiple sources of evidence, each contributing to overall (construct) validity [13]. We believe this conceptualization is both more relevant and more applicable to the study of research utilization than is the traditional conceptualization that dominates the literature [16, 110].

All self-report measures require validity assessments. Without such assessments little to no intrinsic value can be placed on findings obtained with the measure. Validity is associated with the interpretations assigned to the scores obtained using a measure, and thus is intended to be hypothesis-based [110, 111]. Hence, to establish validity, desired score interpretations are first hypothesized to allow for the deliberate collection of data to support or refute the hypotheses [112]. In line with this thinking, data collected using a research utilization self-report measure will always be more or less valid depending on the purpose of the assessment, the population and setting, and timing of the assessment (e.g., before or after an intervention). As a result, we are not able to declare any of the measures we identified in our review as valid or invalid, but only as more or less valid for selected populations, settings, and situations. This deviates substantially from traditional thinking, which suggests that validity either exists or not.

According to Cronbach and Meehl [113], construct validity rests in a nomological network that generates testable propositions that relate scores obtained with self-report measures (as representations of a construct) to other constructs, in order to better understand the nature of the construct being measured. This view is comparable to the traditional conceptualization of construct validity as existing or not, and is also in line with the views of philosophers of science from the first half of the 20th century (e.g., Duhem [114] and Lakatos [115]). Duhem and Lakatos both contended that any theory could be fully justified or falsified based on empirical evidence (i.e., based on data collected with a specific measure). From this perspective, construct validity exists or not. In the second half of the 20th century, however, movement away from justification to what was described by Feyerabend [116] and Kuhn [117] as 'nonjustificationism' occurred. In nonjustificationism, a theory is never fully justified or falsified. Instead, at any given time, it is a closer or further approximation of the truth than another (competing) theory. From this perspective, construct validity is a matter of degree (i.e., more or less valid) and can change with the sample, setting, and situation being assessed. This is in line with a more contemporary (the Standards) conceptualization of validity.

Self-report research utilization measure hierarchy

The Standards [13] provided us with a framework to create a hierarchy of research utilization measures and thus synthesize a large volume of psychometric data. In an attempt to display the overall extent of construct validity of the measures identified, our hierarchy (consistent with the Standards) placed equal weight on all four evidential sources. While we were able to categorize 48 of the 60 self-report research utilization measures identified into the hierarchy, several cautions exist with respect to use of the hierarchy. First, the levels in the hierarchy are based on the number of validity sources reported, and not on the actual source or quality of evidence within each source. Second, some measures in our hierarchy may appear to have strong validity only because they have been subjected to limited testing. For example, the six measures in level one have only been tested in a single study. Third, the hierarchy included all 48 measures that displayed any validity evidence. Some of these measures, however, are proxies of research utilization. Overall, the hierarchy is intended to present an overview of validity testing to date on the research utilization measures identified. It is meant to inform researchers regarding what testing has been done and, importantly, where additional testing is needed.
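The classification rule behind the hierarchy can be sketched in a few lines of code. This is an illustration only, under stated assumptions: the labels for the four evidential sources follow our reading of the Standards and are not spelled out in this section, the function name and example data are hypothetical, and a measure with evidence from all four sources is assumed to fall in level one alongside those with three.

```python
# Assumed labels for the four evidential sources (not named in the text above).
VALIDITY_SOURCES = (
    "content",
    "response_processes",
    "internal_structure",
    "relations_to_other_variables",
)


def validity_level(studies, threshold=0.5):
    """Return the hierarchy level (1, 2, or 3) for one measure, or None.

    studies: list of sets; each set holds the validity sources that one
    study of the measure reported evidence for.
    A source counts only if reported in at least `threshold` of the studies.
    """
    n_studies = len(studies)
    if n_studies == 0:
        return None
    supported = 0
    for source in VALIDITY_SOURCES:
        n_reporting = sum(1 for reported in studies if source in reported)
        if n_reporting / n_studies >= threshold:
            supported += 1
    if supported >= 3:
        return 1   # level one: three or more sources
    if supported == 2:
        return 2   # level two: two sources
    if supported == 1:
        return 3   # level three: one source
    return None    # no source reported often enough to classify


# Hypothetical measure assessed in two studies.
example_studies = [
    {"content", "relations_to_other_variables"},
    {"content"},
]
print(validity_level(example_studies))
```

Because the rule counts sources rather than weighing them, a measure tested once with three sources ranks above a measure tested many times with two, which is the first and second caution noted above expressed in code.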

Limitations

Although rigorous and comprehensive methods were used for this review, there are three study limitations. First, while we reviewed dissertation databases, we did not search all grey literature sources. Second, due to limited reporting of findings consistent with the four sources of validity evidence in the Standards, we may have concluded lower levels of validity for some measures than actually exist. In such cases, our findings may reflect poor reporting rather than less validity. Third, our decision to exclude articles that reported on healthcare providers' adherence to clinical practice guidelines may be responsible for the limited number of articles sampling physicians included in the review. A systematic review conducted by Grimshaw et al. [8] on clinical practice guidelines reported that physicians alone were the target of 174 (74%) of the 235 studies included in that review. A future review examining the psychometric properties of self-report measures used to quantify guideline adherence would therefore be a fruitful avenue of inquiry.

Conclusions

In this review, we identified 60 unique self-report research utilization measures used in healthcare. While this appears to be a large and definite set of measures, our assessment paints a rather discouraging picture of research utilization measurement. Several of the measures, while labeled research utilization measures, did not assess research utilization per se. Substantial methodological advances in the research utilization field, focusing on measurement (in particular, construct clarity, use of measurement theory, and psychometric assessment), are urgently needed. These advances are foundational to ensuring the availability of defensible self-report measures of research utilization. Also needed are improved reporting practices and the adoption of a more contemporary view of validity (the Standards) in future research utilization measurement studies.

References

Haines A, Jones R: Implementing findings of research. BMJ. 1994, 308 (6942): 1488-1492.

Glaser EM, Abelson HH, Garrison KN: Putting Knowledge to Use: Facilitating the Diffusion of Knowledge and the Implementation of Planned Change. 1983, San Francisco: Jossey-Bass

Estabrooks CA: The conceptual structure of research utilization. Research in Nursing and Health. 1999, 22 (3): 203-216. 10.1002/(SICI)1098-240X(199906)22:3<203::AID-NUR3>3.0.CO;2-9.

Stetler C: Research utilization: Defining the concept. Image:The Journal of Nursing Scholarship. 1985, 17: 40-44. 10.1111/j.1547-5069.1985.tb01415.x.

Godin G, Belanger-Gravel A, Eccles M, Grimshaw J: Healthcare professionals' intentions and behaviours: A systematic review of studies based on social cognitive theories. Implementation Science. 2008, 3 (36):

Squires J, Estabrooks C, Gustavsson P, Wallin L: Individual determinants of research utilization by nurses: A systematic review update. Implementation Science. 2011, 6 (1):

Grimshaw JM, Eccles MP, Walker AE, Thomas RE: Changing physicians' behavior: What works and thoughts on getting more things to work. Journal of Continuing Education in the Health Professions. 2002, 22 (4): 237-243. 10.1002/chp.1340220408.

Grimshaw JM, Thomas RE, MacLennan G, Fraser CR, Vale L, Whitty P, Eccles MP, Matowe L, Shirran L, Wensing M: Effectiveness and efficiency of guideline dissemination and implementation strategies. Health Technology Assessment. 2004, 8 (6): 1-72.

Dunn WN: Measuring knowledge use. Knowledge: Creation, Diffusion, Utilization. 1983, 5 (1): 120-133.

Rich RF: Measuring knowledge utilization processes and outcomes. Knowledge and Policy: International Journal of Knowledge Transfer and Utilization. 1997, 3: 11-24.

Weiss CH: Measuring the use of evaluation. Utilizing evaluation: Concepts and measurement techniques. Edited by: Ciarlo JA. 1981, Beverly Hills, CA: Sage, 17-33.

Estabrooks C, Wallin L, Milner M: Measuring knowledge utilization in health care. International Journal of Policy Analysis & Evaluation. 2003, 1: 3-36.

American Educational Research Association, American Psychological Association, National Council on Measurement in Education: Standards for Educational and Psychological Testing. 1999, Washington, D.C.: American Educational Research Association

Shaneyfelt T, Baum K, Bell D, Feldstein D, Houston T, Kaatz S, Whelan C, Green M: Instruments for Evaluating Education in Evidence-Based Practice. JAMA. 2006, 296: 1116-1127. 10.1001/jama.296.9.1116.

Kirkova J, Davis M, Walsh D, Tiernan E, O'Leary N, LeGrand S, Lagman R, Mitchell-Russell K: Cancer symptom assessment instruments: A systematic review. Journal of Clinical Oncology. 2006, 24 (9): 1459-1473. 10.1200/JCO.2005.02.8332.

Streiner D, Norman G: Health Measurement Scales: A practical Guide to their Development and Use. 2008, Oxford: Oxford University Press, 4

Tita ATN, Selwyn BJ, Waller DK, Kapadia AS, Dongmo S: Evidence-based reproductive health care in Cameroon: population-based study of awareness, use and barriers. Bulletin of the World Health Organization. 2005, 83 (12): 895-903.

Tita ATN, Selwyn BJ, Waller DK, Kapadia AS, Dongmo S: Factors associated with the awareness and practice of evidence-based obstetric care in an African setting. BJOG: An International Journal of Obstetrics & Gynaecology. 2006, 113 (9): 1060-1066. 10.1111/j.1471-0528.2006.01042.x.

Dobbins M, Cockerill R, Barnsley J: Factors affecting the utilization of systematic reviews. A study of public health decision makers. International Journal of Technology Assessment in Health Care. 2001, 17 (2): 203-214. 10.1017/S0266462300105069.

Belkhodja O, Amara N, Landry Rj, Ouimet M: The extent and organizational determinants of research utilization in Canadian health services organizations. Science Communication. 2007, 28: 377-417. 10.1177/1075547006298486.

Knudsen HK, Roman PM: Modeling the use of innovations in private treatment organizations: The role of absorptive capacity. Journal of Substance Abuse Treatment. 2004, 26 (1): 353-361.

Meehan SMS: An exploratory study of research management programs: Enhancing use of health services research results in health care organizations. (Volumes I and II). Thesis. 1988, The George Washington University

Barwick MA, Boydell KM, Stasiulis E, Ferguson HB, Blase K, Fixsen D: Research utilization among children's mental health providers. Implementation Science. 2008, 3: 19-19. 10.1186/1748-5908-3-19.

Reynolds MIA: An Investigation of Organizational Factors Affecting Research Utilization in Nursing Organizations. Thesis. 1981, University of Michigan

Molassiotis A: Nursing research within bone marrow transplantation in Europe: An evaluation. European Journal of Cancer Care. 1997, 6 (4): 257-261. 10.1046/j.1365-2354.1997.00034.x.

Estabrooks CA, Scott S, Squires JE, Stevens B, O'Brien-Pallas L, Watt-Watson J, Profetto-McGrath J, McGilton K, Golden-Biddle K, Lander J: Patterns of research utilization on patient care units. Implementation Science. 2008, 3: 31. 10.1186/1748-5908-3-31.

Pepler CJ, Edgar L, Frisch S, Rennick J, Swidzinski M, White C, Brown TG, Gross J: Unit culture and research-based nursing practice in acute care. Canadian Journal of Nursing Research. 2005, 37 (3): 66-85.

Kirk SA, Osmalov MJ: Social workers involvement in research. Clinical social work: research and practice. Edited by: Russell MN. 1976, Newbury Park, Calif.: Sage Publications, 121-124.

Nunnally J, Bernstein I: Psychometric Theory. 1994, New York: McGraw-Hill, 3

Rodgers SE: A study of the utilization of research in practice and the influence of education. Nurse Education Today. 2000, 20 (4): 279-287. 10.1054/nedt.1999.0395.

Rodgers SE: The extent of nursing research utilization in general medical and surgical wards. Journal of Advanced Nursing. 2000, 32 (1): 182-193. 10.1046/j.1365-2648.2000.01416.x.

Berggren A: Swedish midwives' awareness of, attitudes to and use of selected research findings. Journal of Advanced Nursing. 1996, 23 (3): 462-470. 10.1111/j.1365-2648.1996.tb00007.x.

Brett JL: Use of nursing practice research findings. Nursing Research. 1987, 36 (6): 344-349.

Brett JL: Organizational integrative mechanisms and adoption of innovations by nurses. Nursing Research. 1989, 38 (2): 105-110.

Thompson CJ: Extent and factors influencing research utilization among critical care nurses. Thesis. 1997, Texas Woman's University, College of Nursing

Stiefel KA: Career commitment, nursing unit culture, and nursing research utilization. Thesis. 1996, University of South Carolina

Pain K, Magill-Evans J, Darrah J, Hagler P, Warren S: Effects of profession and facility type on research utilization by rehabilitation professionals. Journal of Allied Health. 2004, 33 (1): 3-9.

Dysart AM, Tomlin GS: Factors related to evidence-based practice among U.S. occupational therapy clinicians. American Journal of Occupational Therapy. 2002, 56: 275-284. 10.5014/ajot.56.3.275.

Ersser SJ, Plauntz L, Sibley A: Research activity and evidence-based practice within DNA: a survey. Dermatology Nursing. 2008, 20 (3): 189-194.

Heathfield ADM: Research utilization in hand therapy practice using a World Wide Web survey design. Thesis. 2000, Grand Valley State University

Kelly KA: Translating research into practice: The physicians' perspective. Thesis. 2008, State University of New York at Albany

Mukohara K, Schwartz MD: Electronic delivery of research summaries for academic generalist doctors: A randomised trial of an educational intervention. Medical Education. 2005, 39 (4): 402-409. 10.1111/j.1365-2929.2005.02109.x.

Niederhauser VP, Kohr L: Research endeavors among pediatric nurse practitioners (REAP) study. Journal of Pediatric Health Care. 2005, 19 (2): 80-89.

Olympia RP, Khine H, Avner JR: The use of evidence-based medicine in the management of acutely ill children. Pediatric Emergency Care. 2005, 21 (8): 518-522. 10.1097/01.pec.0000175451.38663.d3.

Scott I, Heyworth R, Fairweather P: The use of evidence-based medicine in the practice of consultant physicians: Results of a questionnaire survey. Australian and New Zealand Journal of Medicine. 2000, 30 (3): 319-326. 10.1111/j.1445-5994.2000.tb00832.x.

Upton D: Clinical effectiveness and EBP 3: application by health-care professionals. British Journal of Therapy & Rehabilitation. 1999, 6 (2): 86-90.

Veeramah V: The use of research findings in nursing practice. Nursing Times. 2007, 103 (1): 32-33.

Walczak JR, McGuire DB, Haisfield ME, Beezley A: A survey of research-related activities and perceived barriers to research utilization among professional oncology nurses. Oncology Nursing Forum. 1994, 21 (4): 710-715.

Bjorkenheim J: Knowledge and social work in health care - the case of Finland. Social Work in Health Care. 2007, 44 (3): 261-10.1300/J010v44n03_09.

Varcoe C, Hilton A: Factors affecting acute-care nurses' use of research findings. Canadian Journal of Nursing Research. 1995, 27 (4): 51-71.

Dobbins M, Cockerill R, Barnsley J: Factors affecting the utilization of systematic reviews: A study of public health decision makers. International Journal of Technology Assessment in Health Care. 2001, 17 (2): 203-214. 10.1017/S0266462300105069.

Suter E, Vanderheyden LC, Trojan LS, Verhoef MJ, Armitage GD: How important is research-based practice to chiropractors and massage therapists?. Journal of Manipulative and Physiological Therapeutics. 2007, 30 (2): 109-115. 10.1016/j.jmpt.2006.12.013.

Parahoo K: Research utilization and research related activities of nurses in Northern Ireland. International Journal of Nursing Studies. 1998, 35 (5): 283-291. 10.1016/S0020-7489(98)00041-8.

Pettengill MM, Gillies DA, Clark CC: Factors encouraging and discouraging the use of nursing research findings. Image--the Journal of Nursing Scholarship. 1994, 26 (2): 143-147. 10.1111/j.1547-5069.1994.tb00934.x.

Champion VL, Leach A: Variables related to research utilization in nursing: an empirical investigation. Journal of Advanced Nursing. 1989, 14 (9): 705-710. 10.1111/j.1365-2648.1989.tb01634.x.

Linde BJ: The effectiveness of three interventions to increase research utilization among practicing nurses. Thesis. 1989, The University of Michigan

Tsai S: Nurses' participation and utilization of research in the Republic of China. International Journal of Nursing Studies. 2000, 37 (5): 435-444. 10.1016/S0020-7489(00)00023-7.

Tsai S: The effects of a research utilization in-service program on nurses. International Journal of Nursing Studies. 2003, 40 (2): 105-113. 10.1016/S0020-7489(02)00036-6.

Barta KM: Information-seeking, research utilization, and barriers to research utilization of pediatric nurse educators. Journal of Professional Nursing: Official Journal of the American Association of Colleges of Nursing. 1995, 11 (1): 49-57.

Coyle LA, Sokop AG: Innovation adoption behavior among nurses. Nursing Research. 1990, 39 (3): 176-180.

Michel Y, Sneed NV: Dissemination and use of research findings in nursing practice. Journal of Professional Nursing. 1995, 11 (5): 306-311. 10.1016/S8755-7223(05)80012-2.

Rutledge DN, Greene P, Mooney K, Nail LM, Ropka M: Use of research-based practices by oncology staff nurses. Oncology Nursing Forum. 1996, 23 (8): 1235-1244.

Squires JE, Moralejo D, LeFort SM: Exploring the role of organizational policies and procedures in promoting research utilization in registered nurses. Implementation Science. 2007, 2 (1):

Backer TE: Knowledge utilization: The third wave. Knowledge: Creation, Diffusion, Utilization. 1991, 12 (3): 225-240.

Butler L: Valuing research in clinical practice: a basis for developing a strategic plan for nursing research. The Canadian Journal of Nursing Research. 1995, 27 (4): 33-49.

Cobban SJ, Profetto-McGrath J: A pilot study of research utilization practices and critical thinking dispositions of Alberta dental hygienists. International Journal of Dental Hygiene. 2008, 6 (3): 229-237. 10.1111/j.1601-5037.2008.00299.x.

Connor N: The relationship between organizational culture and research utilization practices among nursing home departmental staff. Thesis. 2007, Dalhousie University

Estabrooks CA: Modeling the individual determinants of research utilization. Western Journal of Nursing Research. 1999, 21 (6): 758-772. 10.1177/01939459922044171.

Estabrooks CA, Kenny DJ, Adewale AJ, Cummings GG, Mallidou AA: A comparison of research utilization among nurses working in Canadian civilian and United States Army healthcare settings. Research in Nursing and Health. 2007, 30 (3): 282-296. 10.1002/nur.20218.

Profetto-McGrath J, Hesketh KL, Lang S, Estabrooks CA: A study of critical thinking and research utilization among nurses. Western Journal of Nursing Research. 2003, 25 (3): 322-337. 10.1177/0193945902250421.

Hansen HE, Biros MH, Delaney NM, Schug VL: Research utilization and interdisciplinary collaboration in emergency care. Academic Emergency Medicine. 1999, 6 (4): 271-279. 10.1111/j.1553-2712.1999.tb00388.x.

Hatcher S, Tranmer J: A survey of variables related to research utilization in nursing practice in the acute care setting. Canadian Journal of Nursing Administration. 1997, 10 (3): 31-53.

Karlsson U, Tornquist K: What do Swedish occupational therapists feel about research? A survey of perceptions, attitudes, intentions, and engagement. Scandinavian Journal of Occupational Therapy. 2007, 14 (4): 221-229. 10.1080/11038120601111049.

Kenny DJ: Nurses' use of research in practice at three US Army hospitals. Canadian Journal of Nursing Leadership. 2005, 18 (3): 45-67.

Lacey EA: Research utilization in nursing practice -- a pilot study. Journal of Advanced Nursing. 1994, 19 (5): 987-995. 10.1111/j.1365-2648.1994.tb01178.x.

McCleary L, Brown GT: Research utilization among pediatric health professionals. Nursing and Health Sciences. 2002, 4 (4): 163-171. 10.1046/j.1442-2018.2002.00124.x.

McCleary L, Brown GT: Use of the Edmonton research orientation scale with nurses. Journal of Nursing Measurement. 2002, 10 (3): 263-275. 10.1891/jnum.10.3.263.52559.

McCleary L, Brown GT: Association between nurses' education about research and their research use. Nurse Education Today. 2003, 23 (8): 556-565. 10.1016/S0260-6917(03)00084-4.

McCloskey DJ: The relationship between organizational factors and nurse factors affecting the conduct and utilization of nursing research. Thesis. 2005, George Mason University

Milner FM, Estabrooks CA, Humphrey C: Clinical nurse educators as agents for change: increasing research utilization. International Journal of Nursing Studies. 2005, 42 (8): 899-914. 10.1016/j.ijnurstu.2004.11.006.

Nash MA: Research utilization among Idaho nurses. Thesis. 2005, Gonzaga University

Ohrn K, Olsson C, Wallin L: Research utilization among dental hygienists in Sweden -- a national survey. International Journal of Dental Hygiene. 2005, 3 (3): 104-111. 10.1111/j.1601-5037.2005.00135.x.

Olade RA: Evidence-based practice and research utilization activities among rural nurses. Journal of Nursing Scholarship. 2004, 36 (3): 220-225. 10.1111/j.1547-5069.2004.04041.x.

Pelz DC, Horsley JA: Measuring utilization of nursing research. Utilizing evaluation: Concepts and measurement techniques. Edited by: Ciarlo JA. 1981, Beverly Hills, CA: Sage, 125-149.

Prin PL, Mills MD, Gerdin U: Nurses' MEDLINE usage and research utilization. Nursing informatics: the impact of nursing knowledge on health care informatics proceedings of NI'97, Sixth Triennial International Congress of IMIA-NI, Nursing Informatics of International Medical Informatics Association. Edited by: Amsterdam. 1997, Netherlands: IOS Press, 46: 451-456.

Profetto-McGrath J, Smith KB, Hugo K, Patel A, Dussault B: Nurse educators' critical thinking dispositions and research utilization. Nurse Education in Practice. 2009, 9 (3): 199-208. 10.1016/j.nepr.2008.06.003.

Sekerak DK: Characteristics of physical therapists and their work environments which foster the application of research in clinical practice. Thesis. 1992, The University of North Carolina at Chapel Hill

Stetler CB, DiMaggio G: Research utilization among clinical nurse specialists. Clinical Nurse Specialist. 1991, 5 (3): 151-155.

Wallin L, Bostrom A, Wikblad K, Ewald U: Sustainability in changing clinical practice promotes evidence-based nursing care. Journal of Advanced Nursing. 2003, 41 (5): 509-518. 10.1046/j.1365-2648.2003.02574.x.

Wells N, Baggs JG: A survey of practicing nurses' research interests and activities. Clinical Nurse Specialist: The Journal for Advanced Nursing Practice. 1994, 8 (3): 145-151. 10.1097/00002800-199405000-00009.

Rogers EM: Diffusion of Innovations. 1983, New York: The Free Press, 3

Rogers E: Diffusion of Innovations. 1995, New York: The Free Press, 4

Knott J, Wildavsky A: If dissemination is the solution, what is the problem?. Knowledge: Creation, Diffusion, Utilization. 1980, 1 (4): 537-578.

Titler MG, Kleiber C, Steelman VJ, Rakel BA, Budreau G, Everett LQ, Buckwalter KC, Tripp-Reimer T, Goode CJ: The Iowa Model of evidence-based practice to promote quality care. Critical Care Nursing Clinics of North America. 2001, 13 (4): 497-509.

Kitson A, Harvey G, McCormack B: Enabling the implementation of evidence based practice: a conceptual framework. Quality in Health Care. 1998, 7 (3): 149-158. 10.1136/qshc.7.3.149.

Logan J, Graham ID: Toward a comprehensive interdisciplinary model of health care research use. Science Communication. 1998, 20 (2): 227-246. 10.1177/1075547098020002004.

Abrahamson E, Rosenkopf L: Institutional and competitive bandwagons - Using mathematical-modeling as a tool to explore innovation diffusion. Academy of Management Review. 1993, 18 (3): 487-517.

Warner K: A 'desperation-reaction' model of medical diffusion. Health Services Research. 1975, 10 (4): 369-383.

Orlikowski WJ: Improvising organizational transformation over time: A situated change perspective. Information Systems Research. 1996, 7 (1): 63-92. 10.1287/isre.7.1.63.

Weiss C: The many meanings of research utilization. Public Administration Review. 1979, 39 (5): 426-431. 10.2307/3109916.

Forbes SA, Bott MJ, Taunton RL: Control over nursing practice: a construct coming of age. Journal of Nursing Measurement. 1997, 5 (2): 179-190.

Grasso AJ, Epstein I, Tripodi T: Agency-Based Research Utilization in a Residential Child Care Setting. Administration in Social Work. 1988, 12 (4): 61-

Morrow-Bradley C, Elliott R: Utilization of psychotherapy research by practicing psychotherapists. American Psychologist. 1986, 41 (2): 188-197.

Rardin DK: The Mesh of research and practice: The effect of cognitive style on the use of research in practice of psychotherapy. Thesis. 1986, University of Maryland College Park

Kamwendo K: What do Swedish physiotherapists feel about research? A survey of perceptions, attitudes, intentions and engagement. Physiotherapy Research International. 2002, 7 (1): 23-34. 10.1002/pri.238.

Hambleton R, Jones R: Comparison of classical test theory and item response theory and their applications to test development. Educational Measurement: Issues & Practice. 1993, 12 (3):

Ellis BB, Mead AD: Item analysis: Theory and practice using classical and modern test theory. Handbook of Research Methods in Industrial and Organizational Psychology. 2002, Blackwell Publications, 324-343.

Hambleton R, Swaminathan H, Rogers J: Fundamentals of Item Response Theory. 1991, Newbury Park, CA: Sage

Van der Linden W, Hambleton R: Handbook of Modern Item Response Theory. 1997, New York: Springer

Downing S: Validity: on the meaningful interpretation of assessment data. Medical Education. 2003, 37: 830-837. 10.1046/j.1365-2923.2003.01594.x.

Kane M: Validating high-stakes testing programs. Educational Measurement: Issues and Practice. 2002, Spring 2002: 31-41.

Kane MT: An argument-based approach to validity. Psychological Bulletin. 1992, 112 (3): 527-535.

Cronbach LJ, Meehl PE: Construct validity in psychological tests. Psychological Bulletin. 1955, 52: 281-302.

Duhem P: The Aim and Structure of Physical Theory. 1914, Princeton University Press

Lakatos I: Criticism and methodology of scientific research programs. Proceedings of the Aristotelian Society for the Systematic Study of Philosophy. 1968, 69: 149-186.

Feyerabend P: How to be a good empiricist - a plea for tolerance in matters epistemological. Philosophy of Science: The Central Issues. Edited by: Curd M, Cover J. 1963, New York: W.W. Norton & Company, 922-949.

Kuhn TS: The Structure of Scientific Revolutions. 1970, Chicago: University of Chicago Press, 2

Bostrom A, Wallin L, Nordstrom G: Research use in the care of older people: a survey among healthcare staff. International Journal of Older People Nursing. 2006, 1 (3): 131-140. 10.1111/j.1748-3743.2006.00014.x.

Bostrom AM, Wallin L, Nordstrom G: Evidence-based practice and determinants of research use in elderly care in Sweden. Journal of Evaluation in Clinical Practice. 2007, 13 (4): 665-. 10.1111/j.1365-2753.2007.00807.x.

Bostrom AM, Kajermo KN, Nordstrom G, Wallin L: Barriers to research utilization and research use among registered nurses working in the care of older people: Does the BARRIERS Scale discriminate between research users and non-research users on perceptions of barriers?. Implementation Science. 2008, 3 (1):

Humphris D, Hamilton S, O'Halloran P, Fisher S, Littlejohns P: Do diabetes nurse specialists utilise research evidence?. Practical Diabetes International. 1999, 16 (2): 47-50. 10.1002/pdi.1960160213.

Humphris D, Littlejohns P, Victor C, O'Halloran P, Peacock J: Implementing evidence-based practice: factors that influence the use of research evidence by occupational therapists. British Journal of Occupational Therapy. 2000, 63 (11): 516-522.

McCloskey DJ: Nurses' perceptions of research utilization in a corporate health care system. Journal of Nursing Scholarship. 2008, 40 (1): 39-45. 10.1111/j.1547-5069.2007.00204.x.

Tranmer JE, Lochhaus-Gerlach J, Lam M: The effect of staff nurse participation in a clinical nursing research project on attitude towards, access to, support of and use of research in the acute care setting. Canadian Journal of Nursing Leadership. 2002, 15 (1): 18-26.

Pain K, Hagler P, Warren S: Development of an instrument to evaluate the research orientation of clinical professionals. Canadian Journal of Rehabilitation. 1996, 9 (2): 93-100.

Bonner A, Sando J: Examining the knowledge, attitude and use of research by nurses. Journal of Nursing Management. 2008, 16 (3): 334-343. 10.1111/j.1365-2834.2007.00808.x.

Henderson A, Winch S, Holzhauser K, De Vries S: The motivation of health professionals to explore research evidence in their practice: an intervention study. Journal of Clinical Nursing. 2006, 15 (12): 1559-1564. 10.1111/j.1365-2702.2006.01637.x.

Waine M, Magill-Evans J, Pain K: Alberta occupational therapists' perspectives on and participation in research. Canadian Journal of Occupational Therapy. 1997, 64 (2): 82-88.

Aron J: The utilization of psychotherapy research on depression by clinical psychologists. Thesis. 1990, Auburn University

Brown DS: Nursing education and nursing research utilization: is there a connection in clinical settings?. Journal of Continuing Education in Nursing. 1997, 28 (6): 258-262. quiz 284

Parahoo K: A comparison of pre-Project 2000 and Project 2000 nurses' perceptions of their research training, research needs and of their use of research in clinical areas. Journal of Advanced Nursing. 1999, 29 (1): 237-245. 10.1046/j.1365-2648.1999.00882.x.

Parahoo K: Research utilization and attitudes towards research among psychiatric nurses in Northern Ireland. Journal of Psychiatric and Mental Health Nursing. 1999, 6 (2): 125-135. 10.1046/j.1365-2850.1999.620125.x.

Parahoo K, McCaughan EM: Research utilization among medical and surgical nurses: A comparison of their self reports and perceptions of barriers and facilitators. Journal of Nursing Management. 2001, 9 (1): 21-30. 10.1046/j.1365-2834.2001.00237.x.

Parahoo K, Barr O, McCaughan E: Research utilization and attitudes towards research among learning disability nurses in Northern Ireland. Journal of Advanced Nursing. 2000, 31 (3): 607-613. 10.1046/j.1365-2648.2000.01316.x.

Valizadeh L, Zamanzadeh V: Research in brief: Research utilization and research attitudes among nurses working in teaching hospitals in Tabriz, Iran. Journal of Clinical Nursing. 2003, 12: 928-930. 10.1046/j.1365-2702.2003.00798.x.

Veeramah V: Utilization of research findings by graduate nurses and midwives. Journal of Advanced Nursing. 2004, 47 (2): 183-191. 10.1111/j.1365-2648.2004.03077.x.

Callen JL, Fennell K, McIntosh JH: Attitudes to, and use of, evidence-based medicine in two Sydney divisions of general practice. Australian Journal of Primary Health. 2006, 12 (1): 40-46. 10.1071/PY06007.

Cameron KAV, Ballantyne S, Kulbitsky A, Margolis-Gal M, Daugherty T, Ludwig F: Utilization of evidence-based practice by registered occupational therapists. Occupational Therapy International. 2005, 12 (3): 123-136. 10.1002/oti.1.

Elliott V, Wilson SE, Svensson J, Brennan P: Research utilisation in sonographic practice: Attitudes and barriers. Radiography. 2008

Erler CJ, Fiege AB, Thompson CB: Flight nurse research activities. Air Medical Journal. 2000, 19 (1): 13-18. 10.1016/S1067-991X(00)90086-5.

Logsdon C, Davis DW, Hawkins B, Parker B, Peden A: Factors related to research utilization by registered nurses in Kentucky. Kentucky Nurse. 1998, 46 (1): 23-26.

Miller JP: Speech-language pathologists' use of evidence-based practice in assessing children and adolescents with cognitive-communicative disabilities: a survey. Thesis. 2007, Eastern Washington University

Nelson TD, Steele RG: Predictors of practitioner self-reported use of evidence-based practices: Practitioner training, clinical setting, and attitudes toward research. Administration and Policy in Mental Health and Mental Health Services Research. 2007, 34 (4): 319-330. 10.1007/s10488-006-0111-x.

Ofi B, Sowunmi L, Edet D, Anarado N: Professional nurses' opinion on research and research utilization for promoting quality nursing care in selected teaching hospitals in Nigeria. International Journal of Nursing Practice. 2008, 14 (3): 243-255. 10.1111/j.1440-172X.2008.00684.x.

Oliveri RS, Gluud C, Wille-Jorgensen PA: Hospital doctors' self-rated skills in and use of evidence-based medicine - a questionnaire survey. Journal of Evaluation in Clinical Practice. 2004, 10 (2): 219-. 10.1111/j.1365-2753.2003.00477.x.

Sweetland J, Craik C: The use of evidence-based practice by occupational therapists who treat adult stroke patients. British Journal of Occupational Therapy. 2001, 64 (5): 256-260.

Veeramah V: A study to identify the attitudes and needs of qualified staff concerning the use of research findings in clinical practice within mental health care settings. Journal of Advanced Nursing. 1995, 22 (5): 855-861.

Wood CK: Adoption of innovations in a medical community: The case of evidence-based medicine. Thesis. 1996, University of Hawaii

Wright A, Brown P, Sloman R: Nurses' perceptions of the value of nursing research for practice. Australian Journal of Advanced Nursing. 1996, 13 (4): 15-18.

Acknowledgements

This project was made possible by the support of the Canadian Institutes of Health Research (CIHR) Knowledge Translation Synthesis Program (KRS 86255). JES is supported by CIHR postdoctoral and Bisby fellowships. CAE holds a CIHR Canada Research Chair in Knowledge Translation. HMO holds Alberta Heritage Foundation for Medical Research (AHFMR) and KT Canada (CIHR) doctoral scholarships. PG holds a grant from AFA Insurance, and LW is supported by the Center for Care Sciences at Karolinska Institutet. We would like to thank Dagmara Chojecki, MLIS, for her support in finalizing the search strategy.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

JES, CAE, PG, and LW participated in designing the study and securing funding for the project. JES, CAE, HMO, PG, and LW participated in developing the search strategy, study relevance, and data extraction tools. JES and HMO undertook the article selection and data extraction. All authors participated in data synthesis. JES drafted the manuscript. All authors provided critical commentary on the manuscript and approved the final version.

Electronic supplementary material

13012_2010_399_MOESM1_ESM.PDF

Additional File 1: Search Strategy. This file contains the details of the search strategy used for the review. (PDF 74 KB)

13012_2010_399_MOESM2_ESM.PDF

Additional File 2: Exclusion List by Reason (N = 393). This file contains a list of the retrieved articles that were excluded from the review and the reason each article was excluded. (PDF 412 KB)

13012_2010_399_MOESM3_ESM.PDF

Additional File 3: The Standards. This file contains an overview of the Standards for Educational and Psychological Testing Validity Framework and sample predictions used to assess 'relations to other variables' validity evidence according to this framework. (PDF 192 KB)

13012_2010_399_MOESM4_ESM.PDF

Additional File 4: Description of Other Specific Practices Indices and Other General Research Use Indices. This file contains a description of the four measures included in the class 'Other Specific Practices Indices' and the ten measures included in the class 'Other General Research Use Indices'. (PDF 112 KB)

13012_2010_399_MOESM5_ESM.PDF

Additional File 5: Reported Reliability of Self-Report Research Utilization Measures. This file contains the reliability coefficients reported in the included studies. (PDF 85 KB)

13012_2010_399_MOESM6_ESM.PDF

Additional File 6: Supporting Validity Evidence by Self-Report Research Utilization Measure. This file contains the detailed validity evidence on each included self-report research utilization measure. (PDF 355 KB)

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Squires, J.E., Estabrooks, C.A., O'Rourke, H.M. et al. A systematic review of the psychometric properties of self-report research utilization measures used in healthcare. Implementation Sci 6, 83 (2011). https://doi.org/10.1186/1748-5908-6-83

DOI: https://doi.org/10.1186/1748-5908-6-83