Abstract

Motivated by the uplink scenario in cellular cognitive radio, this study considers a communication network in which a point-to-point channel with a cognitive transmitter and a Multiple Access Channel (MAC) with common information share the same medium and interfere with each other. A Multiple Access-Cognitive Interference Channel (MA-CIFC) is proposed with three transmitters and two receivers, and its capacity region in different interference regimes is investigated. First, inner bounds on the capacity region for the general discrete memoryless case are derived. Next, an outer bound on the capacity region for the full parameter regime is provided. Using the derived inner and outer bounds, the capacity region for a class of degraded MA-CIFC is characterized. Two sets of strong interference conditions are also derived under which the capacity regions are established. Then, the Gaussian case is investigated, and the capacity regions are derived in the weak and strong interference regimes. Some numerical examples are also provided.


1. Introduction

Interference avoidance techniques have traditionally been used in wireless networks wherein multiple source-destination pairs share the same medium. However, the broadcast nature of wireless networks may enable cooperation among entities, which can provide higher rates and more reliable communication. On the other hand, due to the increasing number of wireless systems, spectrum resources have become scarce and expensive. The exponentially growing demand for wireless services, along with rapid advancements in wireless technology, has led to cognitive radio technology, which aims to overcome the spectrum inefficiency problem by developing communication systems that can sense the environment and adapt to it [1].

In overlay cognitive networks, the cognitive user can transmit simultaneously with the non-cognitive users and compensate for the interference by cooperating in sending, i.e., relaying, the non-cognitive users' messages [1]. From an information theoretic point of view, the Cognitive Interference Channel (CIFC) was first introduced in [2] to model an overlay cognitive radio; it refers to a two-user Interference Channel (IFC) in which the cognitive user (secondary user) can obtain the message being transmitted by the other user (primary user), either non-causally or causally. An achievable rate region for the non-causal CIFC was derived in [2] by combining Gel'fand-Pinsker (GP) binning [3] with the well-known simultaneous superposition coding scheme (rate splitting) for the IFC [4]. For the non-causal CIFC, where the cognitive user has non-causal full or partial knowledge of the primary user's transmitted message, several achievable rate regions and capacity results in some special cases have been established [5–14]. More recently, a three-user cognitive radio network with one primary user and two cognitive users has been studied in [15, 16], where an achievable rate region is derived for this setup based on rate splitting and GP binning.

In the interference avoidance-based systems, i.e., when the communication medium is interference-free, uplink transmission is modeled with a Multiple Access Channel (MAC) whose capacity region has been fully characterized for independent transmitters [17, 18] as well as for the transmitters with common information [19]. Recently, taking the effects of interference into account in the uplink scenario, a MAC and an IFC have been merged into one setup by adding one more transmit-receive pair to the communication medium of a two-user MAC [20, 21], where the channel inputs at the transmitters are independent and there is no cognition or cooperation.

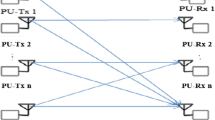

In this paper, we introduce the Multiple Access-Cognitive Interference Channel (MA-CIFC) by providing the transmitter of the point-to-point channel with cognitive capabilities in the uplink-with-interference model. Moreover, the transmitters of the MAC have common information that enables cooperation among them. As shown in Figure 1, the proposed channel consists of three transmitters and two receivers: a two-user MAC with common information as the primary network, and a point-to-point channel with a cognitive transmitter that knows the messages being sent by all of the transmitters in a non-causal manner. A physical example of this channel is the coexistence of cognitive users with licensed primary users in a cellular or satellite uplink transmission, where the cognitive radios exploit side information about the environment to maintain or improve the communication of the primary users while also obtaining some spectrum resources for their own communication. In this scenario, the primary non-cognitive users can be oblivious to or aware of the cognitive users [1]. When the non-cognitive user is oblivious to the cognitive user's presence, its receiver's decoding process does not take into account the interference caused by the cognitive user's transmission; that is, the primary receiver treats the interference as noise. In the aware scenario, however, the decoding process at the primary receiver can be adapted to improve its own rate. For example, the primary receiver can decode the cognitive user's message and cancel the interference when the interfering signal is strong enough. If multi-antenna capability is available at the primary receiver, it can also attenuate or amplify the interfering signal by beam-steering, which results in the weak or strong interference regimes, respectively [1].

To analyze the capacity region of MA-CIFC, we first derive three inner bounds on the capacity region (achievable rate regions). The first two bounds assume an oblivious primary receiver, which does not decode the cognitive user's message but treats it as noise. Two different coding schemes are proposed based on superposition coding, GP binning, and the method of [6] for defining auxiliary Random Variables (RVs). Later, we show that these strategies are optimal for a degraded MA-CIFC and in the Gaussian weak interference regime. In the third achievability scheme, we consider an aware primary receiver and obtain an inner bound on the capacity region by using superposition coding at the encoders and letting both receivers decode all messages with simultaneous joint decoding. This strategy is capacity-achieving in the strong interference regime. Next, we provide a general outer bound on the capacity region and derive conditions under which the first achievability scheme achieves capacity for the degraded MA-CIFC. We then derive two sets of strong interference conditions under which the third inner bound achieves capacity. Further, we compare these two sets of conditions and identify the weaker set. We also extend the strong interference results to a network with k primary users.

Moreover, we consider the Gaussian case and find capacity results for the Gaussian MA-CIFC in both the weak and strong interference regimes. We use the second derived inner bound to show that the capacity-achieving scheme in weak interference consists of Dirty Paper Coding (DPC) [22] at the cognitive transmitter and treating interference as noise at both receivers. We also provide some numerical examples.

The rest of the paper is organized as follows. Section 2 introduces the MA-CIFC model and the notation. Three inner bounds and an outer bound on the capacity region of the discrete memoryless MA-CIFC are derived in Sections 3 and 4, respectively. Section 5 presents capacity results for the discrete memoryless MA-CIFC in three special cases. In Section 6, the Gaussian MA-CIFC is investigated. Finally, Section 7 concludes the paper.

2. Channel models and preliminaries

Throughout the paper, upper case letters (e.g., X) denote RVs and lower case letters (e.g., x) denote their realizations. The probability mass function (p.m.f) of a RV X with alphabet set $\mathcal{X}$ is denoted by $p_X(x)$, where the subscript X is occasionally omitted. $\mathcal{T}_\epsilon^{(n)}$ denotes the set of ϵ-strongly jointly typical sequences of length n. The notation $X_i^j$ indicates the sequence of RVs $(X_i, X_{i+1}, \ldots, X_j)$, where $X^j$ is used instead of $X_1^j$ for brevity. $\mathcal{N}(0, \sigma^2)$ denotes a zero mean normal distribution with variance $\sigma^2$.
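To make the typicality notation concrete, the following Python sketch tests whether a pair of sequences is ϵ-strongly jointly typical. It assumes one common definition (robust typicality, as in El Gamal and Kim's Network Information Theory), under which every pair (a, b) must satisfy |N(a, b)/n − p(a, b)| ≤ ϵ p(a, b); the paper's exact definition is not reproduced here, and the function name is illustrative.

```python
from collections import Counter


def is_jointly_typical(xs, ys, p_xy, eps):
    """Test whether (xs, ys) is eps-strongly (robustly) jointly typical.

    Assumed definition: for every pair (a, b),
        |N(a, b | xs, ys) / n - p(a, b)| <= eps * p(a, b),
    which in particular forbids any observed pair with p(a, b) = 0.
    p_xy maps (a, b) tuples to probabilities; missing pairs have probability 0.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("sequences must be non-empty and of equal length")
    n = len(xs)
    counts = Counter(zip(xs, ys))
    # An observed pair outside the support of p_xy violates the condition.
    if any(p_xy.get(pair, 0.0) == 0.0 for pair in counts):
        return False
    # Every pair in the support must have an empirical frequency close to p(a, b).
    return all(abs(counts.get(pair, 0) / n - p) <= eps * p
               for pair, p in p_xy.items())


# Example: a noiseless binary pair with p(0, 0) = p(1, 1) = 1/2.
p = {(0, 0): 0.5, (1, 1): 0.5}
x = [0, 1] * 50
print(is_jointly_typical(x, x, p, eps=0.1))          # True
print(is_jointly_typical(x, [0] * 100, p, eps=0.1))  # False
```

Because the tolerance is relative (ϵ p(a, b)), pairs of zero probability must never occur, which is the property typicality-based decoding and binning arguments rely on.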

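Consider the MA-CIFC in Figure 2, which is denoted by $(\mathcal{X}_1, \mathcal{X}_2, \mathcal{X}_3, p(y_1, y_3 | x_1, x_2, x_3), \mathcal{Y}_1, \mathcal{Y}_3)$, where $x_1 \in \mathcal{X}_1$, $x_2 \in \mathcal{X}_2$ and $x_3 \in \mathcal{X}_3$ are the channel inputs at Transmitter 1 (Tx1), Transmitter 2 (Tx2) and Transmitter 3 (Tx3), respectively; $y_1 \in \mathcal{Y}_1$ and $y_3 \in \mathcal{Y}_3$ are the channel outputs at Receiver 1 (Rx1) and Receiver 3 (Rx3), respectively; and $p(y_1, y_3 | x_1, x_2, x_3)$ is the channel transition probability distribution. In n channel uses, each Txj, j ∈ {1, 2}, wants to send a message pair (m0, mj) to Rx1, and Tx3 wants to send a message m3 to Rx3.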

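Definition 1: A $(2^{nR_0}, 2^{nR_1}, 2^{nR_2}, 2^{nR_3}, n)$ code for MA-CIFC consists of (i) four independent message sets $\mathcal{M}_j = [1:2^{nR_j}]$, where j ∈ {0, 1, 2, 3}; (ii) two encoding functions at the primary transmitters, $f_1: \mathcal{M}_0 \times \mathcal{M}_1 \to \mathcal{X}_1^n$ at Tx1 and $f_2: \mathcal{M}_0 \times \mathcal{M}_2 \to \mathcal{X}_2^n$ at Tx2; (iii) an encoding function at the cognitive transmitter, $f_3: \mathcal{M}_0 \times \mathcal{M}_1 \times \mathcal{M}_2 \times \mathcal{M}_3 \to \mathcal{X}_3^n$; and (iv) two decoding functions, $\mathcal{Y}_1^n \to \mathcal{M}_0 \times \mathcal{M}_1 \times \mathcal{M}_2$ at Rx1 and $\mathcal{Y}_3^n \to \mathcal{M}_3$ at Rx3. We assume that the channel is memoryless. Thus, the channel transition probability distribution is given by

$$p(y_1^n, y_3^n | x_1^n, x_2^n, x_3^n) = \prod_{i=1}^{n} p(y_{1,i}, y_{3,i} | x_{1,i}, x_{2,i}, x_{3,i}).$$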

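The average probability of error for this code is defined as

$$P_e = \Pr\left\{ (\hat{M}_0, \hat{M}_1, \hat{M}_2) \neq (M_0, M_1, M_2) \ \text{or} \ \hat{M}_3 \neq M_3 \right\},$$

where $(\hat{M}_0, \hat{M}_1, \hat{M}_2)$ and $\hat{M}_3$ are the outputs of the decoding functions at Rx1 and Rx3, respectively, and the messages are independent and uniformly distributed over their message sets.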

Definition 2: A rate quadruple (R0, R1, R2, R3) is achievable if there exists a sequence of $(2^{nR_0}, 2^{nR_1}, 2^{nR_2}, 2^{nR_3}, n)$ codes with $P_e \to 0$ as $n \to \infty$. The capacity region is the closure of the set of all achievable rate quadruples.

3. Inner bounds on the capacity region of discrete memoryless MA-CIFC

Now, we derive three achievable rate regions for the general setup. Theorems 1 and 2 assume an oblivious primary receiver (Rx1), which does not decode the cognitive user's message (m3) and treats it as noise. The decoding procedure at the cognitive receiver (Rx3) differs in these schemes. In Theorem 1, the cognitive receiver (Rx3) decodes the primary messages (m0, m1, m2), and all the transmitters use superposition coding. However, in Theorem 2, the cognitive receiver (Rx3) also treats the interference from the primary messages (m0, m1, m2) as noise, while the cognitive transmitter (Tx3) uses GP binning to precode its message for interference cancelation at Rx3. We also utilize the method of [6] in defining auxiliary RVs, which helps us to achieve the outer bound in special cases. In fact, we achieve the outer bound of Theorem 4 using the region of Theorem 1 for a class of degraded MA-CIFC in Section 5. The region of Theorem 2 is used in Section 6 to derive the capacity region in the weak interference regime. In the scheme of Theorem 3, we consider an aware primary receiver (Rx1) which decodes the cognitive user's message (m3). The cognitive receiver (Rx3) also decodes the primary messages (m0, m1, m2). Therefore, this region is obtained based on using superposition coding in the encoding part and by allowing both receivers to decode all messages with simultaneous joint decoding in the decoding part. In Section 5, we show that this strategy is capacity-achieving in the strong interference regime. Proofs are provided in "Appendix A".

Theorem 1: The union of rate regions given by

is achievable for MA-CIFC, where the union is over all p.m.fs that factor as

Theorem 2: The union of rate regions given by (3)-(6) and

is achievable for MA-CIFC, where the union is over all p.m.fs that factor as

Theorem 3: The union of rate regions given by

is achievable for MA-CIFC, where the union is over all p.m.fs that factor as

Remark 1: We utilize the region of Theorem 1 in Section 5 to achieve capacity results for a class of degraded MA-CIFC, and the region of Theorem 2 to derive the results for the Gaussian case in Section 6. The region of Theorem 3 is also used to characterize the capacity region under strong interference conditions in Section 5.

4. An outer bound on the capacity region of discrete memoryless MA-CIFC

Here, we derive a general outer bound on the capacity region of MA-CIFC, which is used to obtain the capacity region for a class of degraded MA-CIFC in Section 5 and to find capacity results for the Gaussian MA-CIFC in the weak interference regime in Section 6. Consider the union of all rate quadruples (R0, R1, R2, R3) satisfying (3)-(6) and

where the union is over all p.m.fs that factor as (11).

Theorem 4: The capacity region of MA-CIFC is contained in the union of rate regions defined above.

Proof: Consider a $(2^{nR_0}, 2^{nR_1}, 2^{nR_2}, 2^{nR_3}, n)$ code with average error probability $P_e \to 0$ as $n \to \infty$. Define the following RVs for i = 1, ..., n:

Considering the encoding functions f1 and f2, defined in Definition 1, and the above definitions of the auxiliary RVs, we note that $(X_{1,i}, U_i) \to T_i \to (X_{2,i}, V_i)$ forms a Markov chain. Thus, these choices of auxiliary RVs satisfy the p.m.f (11) of Theorem 4. Now, using Fano's inequality [23], we derive the bounds in Theorem 4. For the first bound, we have:

where (a) follows since messages are independent and (b) holds due to Fano's inequality and the fact that conditioning does not increase entropy. Hence,

where (a) is due to the encoding functions f1, f2 and f3, defined in Definition 1, and the non-negativity of mutual information, (b) is obtained from the chain rule, (c) follows from the memoryless property of the channel and the fact that conditioning does not increase entropy, and (d) is obtained from (20)-(22).

Now, applying Fano's inequality and the independence of the messages, we can bound R1 as:

where (a) follows from the chain rule and the encoding functions f1 and f2, and (b) from (20)-(22). Similarly, we can show that

Next, based on similar arguments, we bound R1 + R2 as

The last sum-rate bound can be derived as follows:

where (a) follows since conditioning does not increase entropy. Using the standard time-sharing argument for (24)-(28) completes the proof.
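Each bound in this proof starts from Fano's inequality: for a message M uniformly distributed over $2^{nR}$ values, $nR = H(M) = I(M; Y^n) + H(M | Y^n) \le I(M; Y^n) + n\epsilon_n$, where $\epsilon_n \to 0$ as $n \to \infty$ and $P_e \to 0$. The sketch below evaluates the sharp and the loose form of Fano's bound numerically; the function names are illustrative and not from the paper.

```python
import math


def binary_entropy(p):
    """h2(p) = -p log2 p - (1 - p) log2(1 - p), with h2(0) = h2(1) = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def fano_bound(p_error, num_messages):
    """Sharp form of Fano: H(M | Y^n) <= h2(Pe) + Pe * log2(|M| - 1), in bits."""
    if num_messages < 2:
        return 0.0
    return binary_entropy(p_error) + p_error * math.log2(num_messages - 1)


def fano_epsilon(p_error, n, rate):
    """eps_n with H(M | Y^n) <= n * eps_n, from the loose form
    H(M | Y^n) <= 1 + Pe * n * R for a message set of size 2^{nR}."""
    return 1.0 / n + p_error * rate


# eps_n shrinks as n grows and Pe -> 0, which is what the converse needs.
for n, pe in [(100, 1e-1), (1000, 1e-2), (10000, 1e-3)]:
    print(n, pe, fano_epsilon(pe, n, rate=1.0))
```

Dividing the resulting inequality by n and letting n → ∞ is what turns each multi-letter bound into a rate bound.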

5. Capacity results for discrete memoryless MA-CIFC

In this section, we characterize the capacity region of MA-CIFC under specific conditions. First, we consider a class of degraded MA-CIFC and derive conditions under which the inner bound in Theorem 1 achieves the outer bound of Theorem 4. Next, we investigate the strong interference regime by deriving two sets of strong interference conditions under which the region of Theorem 3 achieves capacity. We also compare these two sets of conditions and identify the weaker set. Finally, we extend the strong interference results to a network with k primary users.

A. Degraded MA-CIFC

Now, we characterize the capacity region for a class of MA-CIFC with a degraded primary receiver. We define an MA-CIFC with a degraded primary receiver as an MA-CIFC in which Y1 and X3 are conditionally independent given (X1, X2, Y3). More precisely, the following Markov chain holds:

or equivalently, X3 → (X1, X2, Y3) → Y1 forms a Markov chain. This means that the primary receiver (Rx1) observes a degraded or noisier version of the cognitive user's signal (Tx3) compared with the cognitive receiver (Rx3).
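The degradedness condition can be checked numerically for a given discrete channel: for an input distribution with full support, (29) holds exactly when $I(X_3; Y_1 | X_1, X_2, Y_3) = 0$. The Python sketch below computes this conditional mutual information for a hypothetical binary toy channel (not a model from the paper) in two variants: one where Rx1 sees a noisier copy of Y3, and one where Rx1 observes X3 directly.

```python
import math
from collections import defaultdict
from itertools import product


def conditional_mutual_information(pmf, a_idx, b_idx, c_idx):
    """I(A; B | C) in bits for a discrete joint pmf.

    pmf maps outcome tuples to probabilities; a_idx, b_idx and c_idx list the
    positions of the components of A, B and C inside each outcome tuple.
    """
    p_abc, p_ac, p_bc, p_c = (defaultdict(float) for _ in range(4))
    for outcome, p in pmf.items():
        a = tuple(outcome[i] for i in a_idx)
        b = tuple(outcome[i] for i in b_idx)
        c = tuple(outcome[i] for i in c_idx)
        p_abc[a, b, c] += p
        p_ac[a, c] += p
        p_bc[b, c] += p
        p_c[c] += p
    return sum(p * math.log2(p * p_c[c] / (p_ac[a, c] * p_bc[b, c]))
               for (a, b, c), p in p_abc.items() if p > 0)


def toy_pmf(degraded, q1=0.1, q3=0.2):
    """Hypothetical binary channel; outcome order is (X1, X2, X3, Y1, Y3).

    Y3 = X1 ^ X2 ^ X3 ^ N3 with N3 ~ Bern(q3). If degraded, Y1 = Y3 ^ N1
    (Rx1 sees a noisier copy of Y3); otherwise Y1 = X3 ^ N1. N1 ~ Bern(q1),
    and the inputs are i.i.d. uniform purely for illustration.
    """
    pmf = defaultdict(float)
    for x1, x2, x3, n1, n3 in product((0, 1), repeat=5):
        p = 0.125 * (q1 if n1 else 1 - q1) * (q3 if n3 else 1 - q3)
        y3 = x1 ^ x2 ^ x3 ^ n3
        y1 = (y3 if degraded else x3) ^ n1
        pmf[x1, x2, x3, y1, y3] += p
    return pmf


for degraded in (True, False):
    cmi = conditional_mutual_information(toy_pmf(degraded), (2,), (3,), (0, 1, 4))
    # max() only hides tiny negative floating-point round-off.
    print(f"degraded={degraded}: I(X3; Y1 | X1, X2, Y3) = {max(cmi, 0.0):.4f} bits")
```

In the non-degraded variant Rx1 carries information about X3 that Rx3 does not, so the Markov chain fails and the conditional mutual information is strictly positive.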

Assume that the following conditions are satisfied for MA-CIFC over all p.m.fs that factor as (11):

Under these conditions, the cognitive receiver (Rx3) can decode the messages of the primary users with no rate penalty. If an MA-CIFC with a degraded primary receiver satisfies conditions (30)-(33), the region of Theorem 1 coincides with the outer bound of Theorem 4 and therefore achieves capacity, as stated in the following theorem.

Theorem 5: The capacity region of MA-CIFC with a degraded primary receiver, defined in (29), satisfying (30)-(33) is given by the union of rate regions satisfying (2)-(6) over all joint p.m.fs (11).

Remark 2: The messages of the primary users (m0, m1, m2) can be decoded at Rx3 under conditions (30)-(33). Therefore, the Tx3-Rx3 pair achieves the rate in (2). Moreover, due to the degradedness condition in (29), treating interference as noise at the primary receiver (Rx1) achieves capacity. We show in Section 6 that in the Gaussian case, the capacity is achieved by the region of Theorem 2 based on DPC (or GP binning), where the cognitive receiver (Rx3) does not decode the primary messages and conditions (30)-(33) are not necessary.

Proof: Achievability: The proof follows from the region of Theorem 1. Using the condition in (30), the sum of the bounds in (2) and (3) makes the bound in (7) redundant. Similarly, conditions (31)-(33), along with the bound in (2), make the bounds in (8)-(10) redundant and the region reduces to (2)-(6).

Converse: To prove the converse part, we evaluate the outer bound of Theorem 4 under the degradedness condition in (29). Note that the p.m.f of Theorem 5 is the same as the one for this outer bound. Moreover, the bounds in (3)-(6) are identical in both regions. Hence, it only remains to show the bound in (2). Considering (19), we obtain:

where (a) is obtained by applying the degradedness condition in (29). This completes the proof.

B. Strong interference regime

Now, we derive two sets of strong interference conditions under which the region of Theorem 3 achieves capacity. First, assume that the following set of strong interference conditions, referred to as Set1, holds for all p.m.fs that factor as (18):

In fact, under these conditions, interfering signals at the receivers are strong enough that all messages can be decoded by both receivers. Condition (34) implies that the cognitive user's message (m3) can be decoded at Rx1, while conditions (35)-(37) guarantee the decoding of the primary messages (m0, m1, m2) along with m3 at Rx3 in a MAC fashion.

Theorem 6: The capacity region of MA-CIFC satisfying (34)-(37) is given by:

Remark 3: The message of the cognitive user (m3) can be decoded at Rx1 under condition (34), and (m0, m1, m2) can be decoded at Rx3 under conditions (35)-(37). Hence, the bound in (38) gives the capacity of a point-to-point channel with message m3 and side information X1, X2 at the receiver. Moreover, (38)-(41) with condition (34) give the capacity region of a three-user MAC with common information, where R1 and R2 are the common rates, R3 is the private rate for Tx3, and the private rates for Tx1 and Tx2 are zero.

Remark 4: If we omit Tx2 (i.e., remove X2) and Tx2 has no message to transmit (i.e., R2 = 0), the model reduces to a CIFC, and the region of Theorem 6 coincides with the capacity region of the strong interference channel with unidirectional cooperation (or CIFC), which was characterized in [8, Theorem 5]. In this case, the common message can also be ignored, i.e., T is set to a constant and R0 = 0.

Proof: Achievability: Considering (35)-(37), the proof follows from Theorem 3.

Converse: Consider a code with average error probability $P_e \to 0$ as $n \to \infty$. Define the following RV for i = 1, ..., n:

Due to the encoding functions f1, f2 and f3, defined in Definition 1, the independence of the messages, and the above definition of $T_i$, the RVs satisfy the p.m.f (18) of Theorem 6. First, we state a lemma that is needed in the proof of the converse part.

Lemma 1: If (34) holds for all distributions that factor as (18), then

Proof: The proof relies on the results in [24, Proposition 1] and [25, Lemma]. By redefining X2 = X3, Y2 = Y3, X1 = (X1, X2, T) in [8, Lemma 5], the proof follows.

Now, using Fano's inequality [23], we derive the bounds in Theorem 6. Using (23) provides:

where (a) is due to (42) and the encoding functions f1, f2 and f3, defined in Definition 1, (b) follows from two facts; conditioning does not increase entropy and forms a Markov chain, (c) is obtained from the chain rule, and (d) follows from the memoryless property of the channel and the fact that conditioning does not increase entropy.

Now, applying Fano's inequality and the independence of the messages, we can bound R1 + R3 as

where (a) follows from encoding functions f1, f2 and f3, (b) follows from (42) and the fact that forms a Markov chain, (c) is obtained from (43), (d) follows from the chain rule, and (e) follows from the memoryless property of the channel and the fact that conditioning does not increase entropy.

Applying similar steps, we can show that,

Finally, the sum-rate bound can be obtained as

where (a) follows from steps (a)-(c) in (45), (b) is due to the fact that forms a Markov chain, and (c) follows from the memoryless property of the channel and the fact that conditioning does not increase entropy. Using a standard time-sharing argument for (44)-(47) completes the proof.

Next, we derive the second set of strong interference conditions, called Set2, under which the region of Theorem 3 is the capacity region. For all p.m.fs that factor as (18), Set2 includes (34) and the following conditions:

Remark 5: As with Set1, under these conditions the interfering signals at the receivers are strong enough that all messages can be decoded by both receivers. Condition (34), under which the cognitive user's message (m3) can be decoded at Rx1, is common to both sets. However, conditions (48)-(50) imply that the primary messages (m0, m1, m2) can be decoded at Rx3 in a MAC fashion, whereas under Set1 they can be decoded along with m3.

Theorem 7: The capacity region of MA-CIFC satisfying (34) and (48)-(50) is given by the union of rate regions satisfying (14)-(17) over all p.m.fs that factor as (18).

Proof: See "Appendix B".

Remark 6: As in Remark 4, by omitting Tx2, the model reduces to a CIFC. Moreover, the region of Theorem 7 and Set2 reduce to the capacity region and strong interference conditions that were derived in [13] for the non-causal CIFC.

Remark 7 (Comparison of two sets of conditions): Among the strong interference conditions of Set1, the first condition (34) is used in the converse part, while (35)-(37) are used to reduce the inner bound of Theorem 3 to the region of Theorem 6. In contrast, all the conditions of Set2 are used to prove the converse part. Now, we compare the conditions in these two sets. We can write (35) as

Considering (34), it can be seen that Idiff ≥ 0. Hence, condition (35) implies condition (48), but not vice versa. Similar conclusions can be drawn for other conditions of these two sets. Therefore, Set1 implies Set2, and the conditions of Set2 are weaker compared to those of Set1.

C. Multiple access-cognitive interference network (MA-CIFN)

Now, we extend the result of Theorem 6 to a network with k + 1 transmitters and two receivers: a k-user MAC as the primary network and a point-to-point channel with a cognitive transmitter. We call it the Multiple Access-Cognitive Interference Network (MA-CIFN). Consider the MA-CIFN in Figure 3, denoted by $(\mathcal{X}_1, \ldots, \mathcal{X}_{k+1}, p(y_1, y_{k+1} | x_1, \ldots, x_{k+1}), \mathcal{Y}_1, \mathcal{Y}_{k+1})$, where $x_j \in \mathcal{X}_j$ is the channel input at Transmitter j (Txj) for $j \in \{1, \ldots, k+1\}$; $y_1 \in \mathcal{Y}_1$ and $y_{k+1} \in \mathcal{Y}_{k+1}$ are the channel outputs at the primary and cognitive receivers, respectively; and $p(y_1, y_{k+1} | x_1, \ldots, x_{k+1})$ is the channel transition probability distribution. In n channel uses, each Txj, j ∈ {1, ..., k}, wants to send a message $m_j$ to the primary receiver, and Tx(k+1) wants to send a message $m_{k+1}$ to the cognitive receiver. We ignore the common information for brevity. Definitions 1 and 2 extend directly to the MA-CIFN. Therefore, we state the result on the capacity region under strong interference conditions.

Corollary 1: The capacity region of the MA-CIFN, satisfying

for all $S \subseteq [1:k]$ and for every $p(x_1)p(x_2)\cdots p(x_k)\,p(x_{k+1} | x_1, x_2, \ldots, x_k)\,p(y_1, y_{k+1} | x_1, x_2, \ldots, x_k, x_{k+1})$, is given by

for all $S \subseteq [1:k]$, where $X(S)$ is the ordered vector of $X_j$, $j \in S$, and $S^c$ denotes the complement of the set S.

Proof: Following the same lines as the proof of Theorem 6, the proof is straightforward. Therefore, it is omitted for the sake of brevity.

Remark 8: Under condition (51), the message of the cognitive user ($m_{k+1}$) can be decoded at the primary receiver ($Y_1$). Also, under condition (52), the cognitive receiver ($Y_{k+1}$) can decode $m_j$, j ∈ {1, ..., k}, in a MAC fashion. Therefore, the bound in (53) gives the capacity of a point-to-point channel with message $m_{k+1}$ and side information $X_j$, j ∈ {1, ..., k}, at the cognitive receiver. Moreover, (53) and (54) with condition (51) give the capacity region of a (k + 1)-user MAC with common information at the primary receiver.
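Conditions (51)-(52) and bounds (53)-(54) are indexed by every subset S of [1:k], so the MA-CIFN has $2^k$ constraints of each type (counting the empty set, which the statement as written does not exclude). The helper below, a hypothetical illustration not taken from the paper, enumerates the pairs (S, S^c) and labels the ordered vectors X(S) and X(S^c) that appear in them.

```python
from itertools import combinations


def subsets_with_complements(k):
    """All pairs (S, S^c) with S a subset of [1:k], each as an ordered tuple."""
    universe = range(1, k + 1)
    pairs = []
    for size in range(k + 1):
        for s in combinations(universe, size):
            pairs.append((s, tuple(j for j in universe if j not in s)))
    return pairs


def ordered_vector(indices, name="X"):
    """Label for X(S), the ordered vector of X_j with j in S."""
    return "(" + ", ".join(f"{name}{j}" for j in indices) + ")"


# For k = 2 primary users there are 4 subsets, hence 4 constraints per family.
for s, sc in subsets_with_complements(2):
    print(f"S={s}, S^c={sc}: X(S)={ordered_vector(s)}, X(S^c)={ordered_vector(sc)}")
```

The number of constraints grows exponentially with the number of primary users, as is usual for MAC-type regions.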

6. Gaussian MA-CIFC

In this section, we consider the Gaussian MA-CIFC and characterize its capacity region in the weak and strong interference regimes. For simplicity, we assume that Tx1 and Tx2 have no common information; that is, R0 = 0 and the common-message variable T is set to a constant in the regions used below. The Gaussian MA-CIFC, as depicted in Figure 4, at time i = 1, ..., n can be mathematically modeled as

where h31, h13, and h23 are known channel gains. $X_{1,i}$, $X_{2,i}$ and $X_{3,i}$ are input signals with average power constraints

$$\sum_{i=1}^{n} \mathbb{E}\left[X_{j,i}^2\right] \le n P_j$$

for j ∈ {1, 2, 3}. $Z_{1,i}$ and $Z_{3,i}$ are independent and identically distributed (i.i.d) zero mean Gaussian noise components with unit power, i.e., $Z_{j,i} \sim \mathcal{N}(0, 1)$ for j ∈ {1, 3}.

A. Strong interference regime

Here, we extend the results of Theorem 6, i.e., and Set1, to the Gaussian case. The strong interference conditions of Set1, i.e., (34)-(37), for the above Gaussian model become:

where $-1 \le \rho_u \le 1$ is the correlation coefficient between $X_u$ and $X_3$, i.e., $\rho_u = \mathbb{E}(X_u X_3)/\sqrt{P_u P_3}$ for u ∈ {1, 2}.

Theorem 8: For the Gaussian MA-CIFC satisfying conditions (58)-(61), the capacity region is given by

where, to simplify notation, we define

Remark 9: Condition (58) implies that Tx3 causes strong interference at Rx1. This enables Rx1 to decode m3. Moreover, (59)-(61) provide strong interference conditions at Rx3, under which all messages can be decoded in Rx3 in a MAC fashion.

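Proof: The achievability part follows from the region of Theorem 6 by evaluating (38)-(41) with zero mean jointly Gaussian channel inputs X1, X2 and X3, i.e., $X_1 \sim \mathcal{N}(0, P_1)$, $X_2 \sim \mathcal{N}(0, P_2)$ and $X_3 \sim \mathcal{N}(0, P_3)$, where $\mathbb{E}(X_1 X_2) = 0$, $\mathbb{E}(X_1 X_3) = \rho_1\sqrt{P_1 P_3}$, and $\mathbb{E}(X_2 X_3) = \rho_2\sqrt{P_2 P_3}$. The converse proof is based on reasoning similar to that in [26] and is provided in "Appendix C".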
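One way to realize jointly Gaussian inputs with $\mathbb{E}(X_1 X_2) = 0$ and correlation coefficient $\rho_u$ between $X_u$ and $X_3$ is to let Tx3 send scaled copies of X1 and X2 plus an independent component carrying its own signal; this decomposition is an illustration and is not taken from the paper. It also shows why the admissible correlations must satisfy $\rho_1^2 + \rho_2^2 \le 1$: the covariance matrix of the normalized inputs has determinant $1 - \rho_1^2 - \rho_2^2$. The sketch draws samples and checks the empirical correlations and power.

```python
import math
import random


def cognitive_inputs(p1, p2, p3, rho1, rho2, n, seed=0):
    """n samples of zero-mean jointly Gaussian (X1, X2, X3) with E[X1 X2] = 0.

    X3 = rho1*sqrt(P3/P1)*X1 + rho2*sqrt(P3/P2)*X2 + sqrt((1-rho1^2-rho2^2)*P3)*W
    with W ~ N(0, 1) independent, so corr(Xu, X3) = rho_u and E[X3^2] = P3.
    """
    if rho1 ** 2 + rho2 ** 2 > 1 + 1e-12:
        raise ValueError("need rho1^2 + rho2^2 <= 1 for a valid covariance")
    rng = random.Random(seed)
    a1, a2 = rho1 * math.sqrt(p3 / p1), rho2 * math.sqrt(p3 / p2)
    # Power left for the cognitive user's own message (clamped against round-off).
    own = math.sqrt(max(0.0, 1 - rho1 ** 2 - rho2 ** 2) * p3)
    samples = []
    for _ in range(n):
        x1 = rng.gauss(0.0, math.sqrt(p1))
        x2 = rng.gauss(0.0, math.sqrt(p2))
        x3 = a1 * x1 + a2 * x2 + own * rng.gauss(0.0, 1.0)
        samples.append((x1, x2, x3))
    return samples


def empirical_corr(u, v):
    """Sample correlation coefficient of two equal-length sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    var_u = sum((a - mu) ** 2 for a in u)
    var_v = sum((b - mv) ** 2 for b in v)
    return cov / math.sqrt(var_u * var_v)


x1, x2, x3 = zip(*cognitive_inputs(6, 6, 6, 0.5, 0.5, n=20_000))
print(empirical_corr(x1, x3), empirical_corr(x2, x3), sum(v * v for v in x3) / len(x3))
```

In this representation, a fraction $\rho_1^2 + \rho_2^2$ of Tx3's power relays the primary signals and the remaining $1 - \rho_1^2 - \rho_2^2$ carries m3.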

Note that, to numerically evaluate the capacity region using (62)-(65), the channel parameters, i.e., P1, P2, P3, h31, h13, h23, must satisfy (58)-(61) for all admissible values of ρ1 and ρ2. Here, we choose channel gains that satisfy the strong interference conditions (58)-(61); hence, the regions are derived under strong interference conditions.

Figure 5 shows the capacity region for the Gaussian MA-CIFC of Theorem 8 for P1 = P2 = P3 = 6 and the chosen channel gains, where ρ1 = ρ2 is fixed in each surface. The ρ1 = ρ2 = 0 region corresponds to the no-cooperation case, where the channel inputs are independent. As ρ1 = ρ2 increases, the bound on R3 becomes more restrictive while the sum-rate bounds become looser, because Tx3 dedicates part of its power to cooperation. That is, as Tx3 allocates more power to relaying m1 and m2 by increasing ρ1 = ρ2, R1 and R2 improve, while R3 degrades because less power is left to transmit m3. The capacity region for this channel is the union of the regions obtained for all admissible values of ρ1 and ρ2; this union is shown in Figure 6. To better show the effect of cooperation, we set R2 = 0 in Figure 7. As ρ1 = ρ2 increases, the bound on R1 + R3 becomes looser and R1 improves, while R3 decreases because more power is dedicated to cooperation.
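The trend in Figure 5 can be reproduced for the bound on R3 alone under stated assumptions, since the model equations and (62)-(66) are not reproduced in this excerpt. Assume that X3 reaches Rx3 with unit gain and that X1, X2 enter Y3 additively, so that given (X1, X2) Rx3 effectively sees X3 + Z3, and assume θ(x) = ½ log2(1 + x). Because the conditional variance of X3 given (X1, X2) is $(1 - \rho_1^2 - \rho_2^2)P_3$, the bound (38) then evaluates to θ((1 − ρ1² − ρ2²)P3), which shrinks as ρ1 = ρ2 grows. The numbers below use P3 = 6 as in Figure 5.

```python
import math


def theta(x):
    """Assumed Gaussian capacity function: 0.5 * log2(1 + x) bits per channel use."""
    return 0.5 * math.log2(1 + x)


def r3_bound(p3, rho1, rho2):
    """I(X3; Y3 | X1, X2) for jointly Gaussian inputs under the assumed model.

    Only the part of X3 not explained by (X1, X2), with variance
    (1 - rho1^2 - rho2^2) * P3, carries new information to Rx3 over unit noise.
    """
    if rho1 ** 2 + rho2 ** 2 > 1 + 1e-12:
        raise ValueError("need rho1^2 + rho2^2 <= 1")
    return theta(max(0.0, 1 - rho1 ** 2 - rho2 ** 2) * p3)


for rho in (0.0, 0.3, 0.5, 0.7):
    print(f"rho1 = rho2 = {rho}: R3 <= {r3_bound(6, rho, rho):.4f} bits")
```

The same expression also matches Remark 10's statement that, under DPC, the Tx3-Rx3 link behaves as interference-free for fixed ρ1, ρ2, but that connection is an interpretation, not a reproduction of (69).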

B. Weak interference regime

Now, we consider the Gaussian MA-CIFC with weak interference at the primary receiver (Rx1), which means h31 ≤ 1. Since there is no cooperation between the receivers, the capacity region of this channel is the same as that of any channel with the same marginal distributions $p(y_1 | x_1, x_2, x_3)$ and $p(y_3 | x_1, x_2, x_3)$. Hence, we can state the following useful lemma.

Lemma 2: The capacity region of a Gaussian MA-CIFC, defined by (55) and (56) when h31 ≤ 1, is the same as the capacity region of a Gaussian MA-CIFC with the following channel outputs:

where Y'3,i= X3,i+ Z3,iand . Therefore, the degradedness condition in (29) holds for the Gaussian MA-CIFC when h31 ≤ 1.

Proof: The proof follows from [6, Lemma 3.5].
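The key step in Lemma 2 is that weak interference allows Rx1's noise to be split so that Rx1 depends on X3 only through a noisy copy available at Rx3. As an assumption (the model and the equivalent outputs of Lemma 2 are not reproduced in this excerpt), suppose the equivalent primary output is X1 + X2 + h31 Y'3 + Z'1 with Y'3 = X3 + Z3 and Z'1 ~ N(0, 1 − h31²) independent of Z3. The effective noise h31 Z3 + Z'1 then has variance h31² + (1 − h31²) = 1, so Rx1's marginal is unchanged, and the construction is only possible when |h31| ≤ 1. The sketch checks this numerically.

```python
import math
import random


def degraded_rx1_noise(h31, n, seed=1):
    """Samples of the effective Rx1 noise h31 * Z3 + Z1' in the assumed
    equivalent channel Y1' = X1 + X2 + h31 * Y3' + Z1', Y3' = X3 + Z3.

    Z3 ~ N(0, 1) and Z1' ~ N(0, 1 - h31^2) are independent, which is only
    possible when |h31| <= 1 (the weak interference condition).
    """
    if abs(h31) > 1:
        raise ValueError("the construction needs |h31| <= 1")
    rng = random.Random(seed)
    sd = math.sqrt(1 - h31 ** 2)
    return [h31 * rng.gauss(0.0, 1.0) + rng.gauss(0.0, sd) for _ in range(n)]


def sample_variance(v):
    """Biased sample variance (divides by len(v))."""
    m = sum(v) / len(v)
    return sum((x - m) ** 2 for x in v) / len(v)


# Effective noise variance should be close to 1 for every |h31| <= 1.
for h in (0.2, 0.6, 1.0):
    print(h, sample_variance(degraded_rx1_noise(h, 20_000)))
```

Because each receiver's error probability depends only on its own marginal, matching the marginal of Rx1 is all the lemma needs.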

Next, we use the inner bound of Theorem 2 and the outer bound of Theorem 4 to derive the capacity region, which shows that the capacity-achieving scheme in this case consists of DPC at the cognitive transmitter and treating interference as noise at both receivers.

Theorem 9: For the Gaussian MA-CIFC, defined by (55) and (56), when h31 ≤ 1, the capacity region is given by

where θ(·) is defined in (66).

Remark 10: By evaluating (2) with jointly Gaussian channel inputs, one can easily achieve (69). However, this also produces the Gaussian counterparts of the bounds in (7)-(10), so conditions similar to (30)-(33) would be needed to make these bounds redundant. We instead show that (12) also evaluates to (69) if DPC is applied with appropriate parameters. Hence, conditions (30)-(33) are unnecessary in the Gaussian case. This means that, for fixed values of ρ1 and ρ2, DPC completely mitigates the effects of interference for the Tx3-Rx3 pair and leaves the link between them interference-free. Consequently, the capacity region of Theorem 9 is independent of h13 and h23.

Remark 11: If we omit Tx2, the model reduces to a CIFC, and the region of Theorem 9 then coincides with the capacity region of the Gaussian CIFC with weak interference, which was characterized in [6, Lemma 3.6].

Proof: The rate region in Theorem 2 can be extended to the discrete-time memoryless Gaussian case with continuous alphabets by standard arguments [23]. Hence, it is sufficient to evaluate (3)-(6) and (12) with an appropriate choice of input distribution to reach (69)-(72). Since Tx1 and Tx2 have no common information, let R0 = 0 and let T be a constant. Also, let U and V be deterministic constants. We choose zero mean jointly Gaussian channel inputs X1, X2 and X3, i.e., $X_j \sim \mathcal{N}(0, P_j)$ for j ∈ {1, 2, 3}, where $\mathbb{E}(X_1 X_2) = 0$, $\mathbb{E}(X_1 X_3) = \rho_1\sqrt{P_1 P_3}$, and $\mathbb{E}(X_2 X_3) = \rho_2\sqrt{P_2 P_3}$. Noting the p.m.f (13), consider the following choice of input distribution for certain parameters:

Therefore, (3)-(6) are easily evaluated to (70)-(72). In "Appendix D", we derive (69) by evaluating (12) with appropriate parameters. The converse proof follows by applying the bounds in the proof of Theorem 4 to the Gaussian case and utilizing Entropy Power Inequality (EPI) [23, 27]. A detailed converse proof is provided in "Appendix D".

Remark 12: According to Theorems 8 and 9, jointly Gaussian channel inputs X1, X2 and X3 are optimal for the Gaussian MA-CIFC under the strong and weak interference conditions, determined in the above theorems.

Figure 8 shows the capacity region for the Gaussian MA-CIFC of Theorem 9 for P1 = P2 = P3 = 6 and the chosen channel gains, where ρ1 = ρ2 is fixed in each surface. Note that the capacity region is independent of h13 and h23. The ρ1 = ρ2 = 0 region corresponds to the no-cooperation case, where the channel inputs are independent. When Tx3 dedicates part of its power to cooperation, i.e., ρ1 = ρ2 = 0.5, the rates of the primary users (R1, R2) increase, while R3 decreases. The capacity region for this channel is the union of the regions obtained for all admissible values of ρ1 and ρ2, which is shown in Figure 9. As in Figure 7, we examine the capacity region for R2 = 0 in Figure 10, now in the weak interference regime. When Tx3 dedicates more power to cooperation by increasing ρ1 = ρ2, R1 improves and R3 decreases.

7. Conclusion

We investigated a cognitive communication network where a MAC with common information and a point-to-point channel share the same medium and interfere with each other. For this purpose, we introduced the Multiple Access-Cognitive Interference Channel (MA-CIFC) by merging a two-user MAC as a primary network and a cognitive transmitter-receiver pair in which the cognitive transmitter knows the messages being sent by all of the transmitters in a non-causal manner. We analyzed the capacity region of MA-CIFC by deriving inner and outer bounds on it. These bounds were proved to be tight in some special cases, which determines the optimal strategy in these cases. Specifically, in the discrete memoryless case, we established the capacity regions for a class of degraded MA-CIFC and under two sets of strong interference conditions. We also derived strong interference conditions for a network with k primary users. Further, we characterized the capacity region of the Gaussian MA-CIFC in the weak and strong interference regimes. We showed that DPC at the cognitive transmitter and treating interference as noise at the receivers, i.e., an oblivious primary receiver, are optimal in the weak interference regime. However, when the interference is strong enough, the receivers have to decode all messages, which requires an aware primary receiver.

Appendix A Proofs of Theorems 1, 2 and 3

Outline of the proof for Theorem 1: We propose the following random coding scheme, which combines superposition coding with the technique of [6] for defining the auxiliary RVs U and V. The cognitive receiver (Rx3) decodes the interfering signals caused by the primary messages (m1, m2), while the primary receiver (Rx1) does not decode the interference from the cognitive user's message (m3) and treats it as noise.

Codebook Generation: Fix a joint p.m.f. as in (11). Generate i.i.d. t^n sequences according to the probability . Index them as t^n(m0), where . For each t^n(m0), generate i.i.d. sequences, each with probability . Index them as where . Similarly, for each t^n(m0), generate i.i.d. sequences, each with probability . Index them as where . For each , generate i.i.d. sequences according to . Index them as where .

Encoding: In order to transmit the messages (m0, m1, m2, m3), Txj sends for j ∈ {1,2} and Tx3 sends .

Decoding:

Rx1: After receiving , Rx1 looks for a unique triple and some such that

For large enough n, Rx1 decodes correctly with arbitrarily high probability if (3)-(6) hold.

Rx3: After receiving , Rx3 finds a unique index and some triple such that

With arbitrarily high probability, Rx3 decodes correctly if n is large enough and (2) and (7)-(10) hold. This completes the proof.
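The receivers above decide by joint typicality. As an illustration only, the following sketch implements the robust typicality test in the style of [27] for discrete sequences, together with a decoder that accepts a candidate only if it is the unique jointly typical one. It is simplified to a single message index and one codeword/output pair, whereas Rx1 and Rx3 search over a triple of indices plus auxiliary indices; the function names, the toy p.m.f., and the two-codeword codebook are all hypothetical.

```python
from collections import Counter


def is_robustly_typical(seqs, pmf, eps):
    """Robust joint typicality test in the style of [27].

    seqs: list of equal-length sequences, e.g. [x, y]
    pmf:  dict mapping symbol tuples (one symbol per sequence) to probabilities
    eps:  slack; each empirical frequency must lie within eps * p of p
    """
    n = len(seqs[0])
    if any(len(s) != n for s in seqs):
        raise ValueError("all sequences must have the same length")
    counts = Counter(zip(*seqs))
    # A tuple with zero probability must never occur.
    if any(pmf.get(t, 0.0) == 0.0 for t in counts):
        return False
    # Every tuple's empirical frequency must be close to its probability.
    return all(abs(counts.get(t, 0) / n - p) <= eps * p for t, p in pmf.items())


def joint_typicality_decode(codebook, y, pmf, eps):
    """Return the unique index whose codeword is jointly typical with y, else None."""
    hits = [m for m, x in codebook.items() if is_robustly_typical([x, y], pmf, eps)]
    return hits[0] if len(hits) == 1 else None


# Hypothetical toy example: uniform binary input, output flipped with probability 0.1.
pmf = {(0, 0): 0.45, (0, 1): 0.05, (1, 0): 0.05, (1, 1): 0.45}
x_sent = [0] * 10 + [1] * 10
y = list(x_sent)
y[0], y[10] = 1, 0  # one flip in each half of the block
x_other = [1] * 10 + [0] * 10
decoded = joint_typicality_decode({1: x_sent, 2: x_other}, y, pmf, eps=0.1)
```

Requiring uniqueness mirrors the "unique index" condition in the decoding rules: if no candidate or several candidates pass the test, the sketch reports a failure (None).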

Outline of the proof for Theorem 2: In the encoding part, our random coding scheme combines the methods of Theorem 1 with GP binning at the cognitive transmitter (Tx3), which Tx3 uses to cancel the interference caused by m0, m1, m2 at Rx3. In the decoding part, each receiver decodes only its intended messages, treating the interference as noise. In particular, unlike in Theorem 1, Rx3 decodes only its own message (m3) and treats the other signals as noise.

Codebook Generation: Fix a joint p.m.f. as in (13). Generate codewords along the same lines as in the codebook generation part of Theorem 1. Then, generate i.i.d. w^n sequences. Index them as w^n(m3, l), where and l ∈ [1, 2^(nL)].

Encoding: In order to transmit the messages (m0,m1,m2,m3), Txj sends for j ∈ {1,2}. Tx3 (the cognitive transmitter), in addition to m3, knows m0, m1 and m2. Hence, knowing codewords , to send m3, it seeks an index l such that

If there is more than one such index, Tx3 picks the smallest. If there is no such codeword, it declares an error. Using the covering lemma [27], it can be shown that such an index l exists with high probability if n is sufficiently large and

Then, Tx3 sends generated according to .

Decoding: The decoding procedure at Rx1 is the same as in Theorem 1, and the probability of error at this receiver can be made arbitrarily small if (3)-(6) hold.

Rx3: After receiving , Rx3 finds a unique index for some index such that

For large enough n, the probability of error can be made sufficiently small if

Combining (74) and (75) results in (12). This completes the proof.
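In the Gaussian case, GP binning at Tx3 takes the form of dirty paper coding (DPC) [22]. As a hedged sanity check of why binning can cancel known interference, the sketch below uses Costa's textbook point-to-point channel Y = X + S + Z (input power P, known interference power Q, noise power N), not the MA-CIFC model itself; the parameter values are arbitrary. It evaluates the GP rate I(U;Y) - I(U;S) for U = X + αS and checks that α = P/(P + N) recovers the interference-free rate ½log2(1 + P/N) for any Q.

```python
import math


def costa_rate(alpha, P, Q, N):
    """GP rate I(U;Y) - I(U;S) in bits for Costa's channel Y = X + S + Z.

    X ~ N(0, P) is the input (independent of S), S ~ N(0, Q) is interference
    known non-causally at the transmitter, Z ~ N(0, N) is noise, and
    U = X + alpha * S is the auxiliary variable used for binning.
    """
    num = P * (P + Q + N)
    den = P * Q * (1 - alpha) ** 2 + N * (P + alpha ** 2 * Q)
    return 0.5 * math.log2(num / den)


def costa_alpha(P, N):
    """Costa's choice of alpha, which maximizes costa_rate."""
    return P / (P + N)


P, Q, N = 6.0, 10.0, 1.0  # arbitrary illustrative powers
dpc_rate = costa_rate(costa_alpha(P, N), P, Q, N)
clean_rate = 0.5 * math.log2(1 + P / N)  # rate with no interference at all
noise_rate = costa_rate(0.0, P, Q, N)    # alpha = 0: S is treated as noise
```

Setting α = 0 reduces U to X, which gives ½log2(1 + P/(Q + N)), the rate of simply treating the known interference as noise.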

Outline of the proof for Theorem 3: We propose the following random coding scheme, which contains superposition coding in the encoding part and simultaneous joint decoding in the decoding part. All messages are common to both receivers, i.e., both receivers decode (m0, m1, m2, m3).

Codebook Generation: Fix a joint p.m.f. as in (18). Generate i.i.d. t^n sequences according to the probability . Index them as t^n(m0), where . For j ∈ {1,2} and each t^n(m0), generate i.i.d. sequences, each with probability . Index them as where . For each , generate i.i.d. sequences, each with probability . Index them as where .

Encoding: In order to transmit the messages (m0, m1, m2, m3), Txj sends for j ∈ {1,2} and Tx3 sends .

Decoding:

Rx1: After receiving , Rx1 looks for a unique triple and some such that

For large enough n, Rx1 decodes correctly with arbitrarily high probability if

Rx3: Similarly, after receiving , Rx3 finds a unique index and some triple such that

With arbitrarily high probability, Rx3 decodes correctly if n is large enough and

This completes the proof.

Appendix B Proof of Theorem 7

Since achievability follows directly from Theorem 3, we proceed to the converse part. We assume a code with the properties indicated in the converse proof of Theorem 6 and define T^n as in (42). Four bounds in are the same as the bounds in , which are shown in the converse proof of Theorem 6. Therefore, it remains to prove the other three bounds. Moreover, similar to Lemma 1, we have:

Lemma 3: If (48)-(50) hold for all distributions that factor as (18), then

We next prove the remaining three bounds of Theorem 7. Applying Fano's inequality, similar to (45), we have:

where (a) follows from (42) and the deterministic relation between and M1, (b) is obtained from (83), (c) is due to the fact that mutual information is non-negative, and (d) follows from the facts that conditioning does not increase entropy and the channel is memoryless.

Using a similar approach and condition (84), it can be shown that

Finally, using (47) we obtain the last sum-rate bound as:

where (a) is obtained from the deterministic relation between and (M0, Mj) for j ∈ {1,2}, and (b) follows from (85). Applying a standard time-sharing argument to (44)-(47) and (86)-(88) completes the proof.

Appendix C Proof of the converse part for Theorem 8

For any rate triple , Rx1 is able to decode m1 and m2 reliably. Then, Rx1 is able to construct

If condition (58) holds, then is a less noisy version of Y3. Since Rx3 has to decode m3, Rx1 can decode m3 via with no rate penalty. Therefore, (R1, R2, R3) is contained in the capacity region of a three-user MAC with common information [19] at Rx1, where R1 and R2 are the common rates between Tx1-Tx3 and Tx2-Tx3, respectively, R3 is the private rate of Tx3, and the private rates of Tx1 and Tx2 are zero. By the maximum entropy theorem [23], this region is largest for Gaussian inputs, and it evaluates to (63)-(65). The bound in (62) follows by applying the standard methods as in (44).

Appendix D Detailed proof for Theorem 9

First, we derive (69) by evaluating (12) with appropriate parameters. Consider the mapping in (73). We choose

It is noted that (91) makes (1 - β)X'3 - βZ3 and X'3 + Z3 uncorrelated and hence independent since they are jointly Gaussian. Using (12) and (73), we obtain

Now, we evaluate h(W|Y3):

where (a) follows from (89), (90), and is obtained because, by applying (91), , X1 and X2 are jointly independent. By applying (93) to (92), we can evaluate (12) as:

This completes the achievability proof.
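The role of the choice (91) can be checked numerically in a simplified setting. The sketch below assumes, as placeholders not taken from the paper, that X'3 ~ N(0, P) and Z3 ~ N(0, N) are independent and zero mean. Under these assumptions Cov((1 - β)X'3 - βZ3, X'3 + Z3) = (1 - β)P - βN, which vanishes exactly for β = P/(P + N): this is the linear MMSE coefficient for estimating X'3 from X'3 + Z3, so (1 - β)X'3 - βZ3 = X'3 - β(X'3 + Z3) is the estimation error, orthogonal to the observation. Since both variables are jointly Gaussian, uncorrelated implies independent, as stated in the proof.

```python
import math
import random


def decorrelating_beta(P, N):
    """Beta that makes (1 - beta) X - beta Z uncorrelated with X + Z.

    Assumes X ~ N(0, P) and Z ~ N(0, N) are independent, so that
    Cov((1 - beta) X - beta Z, X + Z) = (1 - beta) P - beta N.
    """
    return P / (P + N)


def analytic_cov(beta, P, N):
    """Closed-form covariance under the independence assumption above."""
    return (1 - beta) * P - beta * N


def empirical_cov(beta, P, N, n=100_000, seed=1):
    """Monte Carlo estimate of the same covariance (both variables have zero mean)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.gauss(0.0, math.sqrt(P))
        z = rng.gauss(0.0, math.sqrt(N))
        total += ((1 - beta) * x - beta * z) * (x + z)
    return total / n


P, N = 4.0, 1.0  # placeholder powers, not values from the paper
beta = decorrelating_beta(P, N)  # 0.8 for these values
```

Any other β, e.g. β = 0.5, leaves a nonzero covariance ((1 - 0.5)·4 - 0.5·1 = 1.5 here), so the two variables would no longer be independent.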

Converse: Utilizing the power constraint (57), we derive the bounds of for the Gaussian MA-CIFC when h31 < 1. It can be easily shown that we can set , since R0 = 0 and . Applying the degradedness condition (29) to (19), which holds due to Lemma 2, we obtain:

Using the fact that conditioning does not increase entropy, together with (57) for j = 3, yields:

Hence, there exists 0 ≤ λ ≤ 1 such that

Combining (94) and (95) results in

for some 0 ≤ λ ≤ 1. Now, since the Gaussian distribution maximizes the entropy of an RV for a given second moment, we have:

where , and . Considering (95) and (97), we obtain:

Moreover, since (69) is achievable and R3 does not appear in (70)-(72), it can be shown that

Consequently, (69) follows from (96) and (98). Now, consider the bound in (3):

We can compute the first term as

which uses the fact that the Gaussian distribution maximizes the entropy of an RV for a given second moment.
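The maximum-entropy property is used twice in this converse. A quick illustration, which compares closed-form differential entropies rather than proving the property: for zero-mean variables with the same variance, the Gaussian has larger differential entropy than a uniform or a Laplace variable. The test distributions and variances are arbitrary choices.

```python
import math


def h_gaussian(var):
    """Differential entropy (nats) of a zero-mean Gaussian with variance var."""
    return 0.5 * math.log(2 * math.pi * math.e * var)


def h_uniform(var):
    """Uniform on an interval of width sqrt(12 var), which has variance var."""
    return math.log(math.sqrt(12 * var))


def h_laplace(var):
    """Laplace with scale b = sqrt(var / 2), which has variance 2 b^2 = var."""
    b = math.sqrt(var / 2)
    return 1 + math.log(2 * b)


# (Gaussian, uniform, Laplace) entropies for a few common variances.
entropies = {var: (h_gaussian(var), h_uniform(var), h_laplace(var)) for var in (0.5, 1.0, 6.0)}
```

The gaps do not depend on the variance: about 0.18 nat over the uniform and 0.07 nat over the Laplace distribution.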

To compute the second term in (99), the Entropy Power Inequality (EPI) [23, 27] is used to obtain:

where (a) follows from (67), (b) is obtained from the EPI, and (c) follows from (95) and (98). Therefore, combining (99)-(101), (3) evaluates to (70).
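For a scalar RV with differential entropy h(X) in nats, the EPI states that N(X + Y) ≥ N(X) + N(Y) for independent X and Y, where N(X) = exp(2h(X))/(2πe) is the entropy power, with equality for Gaussians. The sketch below checks the inequality on two textbook cases. It illustrates the inequality itself and does not reproduce the bound derived above.

```python
import math


def entropy_power(h_nats):
    """Entropy power N(X) = exp(2 h(X)) / (2 pi e) for a scalar RV, h in nats."""
    return math.exp(2 * h_nats) / (2 * math.pi * math.e)


def h_gaussian(var):
    """Differential entropy (nats) of a Gaussian with variance var."""
    return 0.5 * math.log(2 * math.pi * math.e * var)


# Independent Gaussians: entropy power equals variance, so the EPI
# N(X + Y) >= N(X) + N(Y) holds with equality.
var_x, var_y = 2.0, 3.0
gauss_lhs = entropy_power(h_gaussian(var_x + var_y))
gauss_rhs = entropy_power(h_gaussian(var_x)) + entropy_power(h_gaussian(var_y))

# Independent X, Y ~ Uniform[0, 1]: h(X) = h(Y) = 0, and X + Y has the
# triangular density on [0, 2], whose differential entropy is 1/2 nat.
unif_lhs = entropy_power(0.5)
unif_rhs = entropy_power(0.0) + entropy_power(0.0)
```

The uniform case gives 1/(2π) ≈ 0.159 on the left against 1/(πe) ≈ 0.117 on the right, so the inequality is strict for non-Gaussian inputs.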

Using (101), one can easily evaluate (4) and (5), obtaining (71) and (72), respectively. This completes the converse proof for Theorem 9.

References

[1] Goldsmith A, Jafar SA, Maric I, Srinivasa S: Breaking spectrum gridlock with cognitive radios: an information theoretic perspective. Proc IEEE 2009, 97(5):894-914.

[2] Devroye N, Mitran P, Tarokh V: Achievable rates in cognitive radio channels. IEEE Trans Inf Theory 2006, 52:1813-1827.

[3] Gel'fand S, Pinsker M: Coding for channels with random parameters. Probl Control Info Theory 1980, 9(1):19-31.

[4] Han TS, Kobayashi K: A new achievable rate region for the interference channel. IEEE Trans Inf Theory 1981, 27:49-60. 10.1109/TIT.1981.1056307

[5] Jovicic A, Viswanath P: Cognitive radio: an information-theoretic perspective. IEEE Trans Inf Theory 2009, 55:3945-3958.

[6] Wu W, Vishwanath S, Arapostathis A: Capacity of a class of cognitive radio channels: interference channels with degraded message sets. IEEE Trans Inf Theory 2007, 53(11):4391-4399.

[7] Devroye N, Vu M, Tarokh V: Achievable rates and scaling laws in cognitive radio channels. EURASIP J Wirel Commun Netw 2008, 2008:896246. Special issue on cognitive radio and dynamic spectrum sharing systems.

[8] Maric I, Yates RD, Kramer G: Capacity of interference channels with partial transmitter cooperation. IEEE Trans Inf Theory 2007, 53:3536-3548.

[9] Maric I, Goldsmith A, Kramer G, Shamai (Shitz) S: On the capacity of interference channels with one cooperating transmitter. Eur Trans Telecommun 2008, 19:405-420. 10.1002/ett.1298

[10] Rini S, Tuninetti D, Devroye N: State of the cognitive interference channel: a new unified inner bound, and capacity to within 1.87 bits. In Proceedings of the 2010 International Zurich Seminar on Communications. [http://arxiv.org/abs/0910.3028v1]

[11] Rini S, Tuninetti D, Devroye N: New inner and outer bounds for the discrete memoryless cognitive interference channel and some capacity results. 2010. [http://arxiv.org/abs/1003.4328v1]

[12] Mirmohseni M, Akhbari B, Aref MR: On the capacity of interference channel with causal and non-causal generalized feedback at the cognitive transmitter. Submitted to IEEE Trans Inf Theory, April 2010; revised October 2011. [http://ee.sharif.ir/~mirmohseni/IT-prep.pdf]

[13] Mirmohseni M, Akhbari B, Aref MR: Capacity regions for some classes of causal cognitive interference channels with delay. In Proceedings of the IEEE Information Theory Workshop (ITW). Dublin; 2010.

[14] Jiang J, Xin Y: On the achievable rate regions for interference channels with degraded message sets. IEEE Trans Inf Theory 2008, 54:4707-4712.

[15] Nagananda KG, Murthy CR: Three-user cognitive channels with cumulative message sharing: an achievable rate region. In Proceedings of the IEEE Information Theory Workshop (ITW). Greece; 2009:291-295.

[16] Nagananda KG, Murthy CR: Information theoretic results for three-user cognitive channels. In Proceedings of IEEE Globecom 2009.

[17] Ahlswede R: Multiway communication channels. In Proceedings of the IEEE International Symposium on Information Theory (ISIT). Tsahkadsor, Armenian S.S.R.; 1971:23-52.

[18] Liao HHJ: Multiple access channels. University of Hawaii, Honolulu; 1972.

[19] Slepian D, Wolf JK: A coding theorem for multiple access channels with correlated sources. Bell Syst Tech J 1973, 52:1037-1076.

[20] Chaaban A, Sezgin A: On the capacity of the 2-user Gaussian MAC interfering with a P2P link. 2010. [http://arxiv.org/abs/1010.6255v1]

[21] Zhu F, Shang X, Chen B, Poor HV: On the capacity of multiple-access-Z-interference channels. 2010. [http://arxiv.org/abs/1011.1225v1]

[22] Costa MHM: Writing on dirty paper. IEEE Trans Inf Theory 1983, 29:439-441. 10.1109/TIT.1983.1056659

[23] Cover TM, Thomas JA: Elements of Information Theory. 2nd edition. Wiley Series in Telecommunications; 2006.

[24] Körner J, Marton K: Comparison of two noisy channels. In Topics in Information Theory. Edited by: Csiszár I, Elias P. North-Holland, Amsterdam; 1977. Colloquia Mathematica Societatis János Bolyai.

[25] Costa MHM, El Gamal A: The capacity region of the discrete memoryless interference channel with strong interference. IEEE Trans Inf Theory 1987, 33(5):710-711. 10.1109/TIT.1987.1057340

[26] Sato H: The capacity of the Gaussian interference channel under strong interference. IEEE Trans Inf Theory 1981, 27(6):786-788. 10.1109/TIT.1981.1056416

[27] El Gamal A, Kim YH: Lecture notes on network information theory. 2010. [http://arxiv.org/abs/1001.3404]

Acknowledgements

This work was partially supported by Iran National Science Foundation (INSF) under contract No. 88114.46-2010 and by Iran Telecom Research Center (ITRC) under contract No. T500/17865. The material in this paper has been presented in part in the Canadian Workshop on Information Theory (CWIT), Kelowna, British Columbia, Canada, May 17-20, 2011.


Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Mirmohseni, M., Akhbari, B. & Aref, M.R. Capacity bounds for multiple access-cognitive interference channel. J Wireless Com Network 2011, 152 (2011). https://doi.org/10.1186/1687-1499-2011-152
