Abstract

Strong gravitational lenses provide source/lens distance ratios \({\mathcal {D}}_{\mathrm{obs}}\) useful in cosmological tests. Previously, a catalog of 69 such systems was used in a one-on-one comparison between the standard model, \(\varLambda \)CDM, and the \(R_{\mathrm{h}}=ct\) universe, which has thus far been favored by the application of model selection tools to many other kinds of data. In that work, however, model parametric fits to the observations could not easily distinguish between these two cosmologies, in part because of the limited measurement precision. Here, we instead use recently developed methods based on Gaussian Processes (GP), in which \({\mathcal {D}}_{\mathrm{obs}}\) may be reconstructed directly from the data without assuming any parametric form. This approach not only smooths out the reconstructed function representing the data, but also reduces the size of the \(1\sigma \) confidence regions, thereby providing greater power to discern between different models. With the current sample size, we show that analyzing strong lenses with a GP approach improves the model comparisons, producing probability differences in the range \(\sim \)10–30%. These results are still marginal, however, given the relatively small sample. Nonetheless, we conclude that the probability of \(R_{\mathrm{h}}=ct\) being the correct cosmology is somewhat higher than that of \(\varLambda \)CDM, with a degree of significance that grows with the number of sources in the subsamples we consider. Future surveys will significantly grow the catalog of strong lenses and will therefore benefit considerably from the GP method we describe here. In addition, we point out that if the \(R_{\mathrm{h}}=ct\) universe is eventually shown to be the correct cosmology, the lack of free parameters in the study of strong lenses should provide a remarkably powerful tool for uncovering the mass structure in lensing galaxies.

1 Introduction

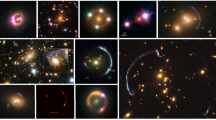

The degree to which light from high-redshift quasars is deflected by intervening galaxies can be calculated precisely if one has enough information concerning the distribution of mass within the gravitational lens [1, 2]. Depending on the mass of the galaxy and the alignment between source, lens, and observer, gravitational lenses may be classified either as macro (with sub-classes of strong and weak lensing) or micro lensing systems. Strong lensing occurs when the source, lens, and observer are sufficiently well aligned that the deflected light forms an Einstein ring. Using the angle of deflection, one may derive the radius of this ring, from which one may then compute the ratio of the angular diameter distance between lens and source to that between observer and source. This ratio, however, is model dependent. Hence, together with the measured redshifts of the lens and source, it may be used to discriminate between various cosmological models (see, e.g., Refs. [3,4,5,6,7]).

In this paper, we use a recent compilation of 118 [8] plus 40 [9] strong lensing systems, with good spectroscopic measurements of the central velocity dispersion from the Sloan Lens ACS (SLACS) Survey [4, 10, 11] and the Lenses Structure and Dynamics (LSD) Survey (see, e.g., Refs. [12, 13]), to conduct a comparative study between \(\varLambda \)CDM [14, 15] and another Friedmann–Robertson–Walker (FRW) cosmology known as the \(R_{\mathrm{h}}=ct\) universe [16, 17]. Over the past decade, such comparative tests between this alternative model and \(\varLambda \)CDM have been carried out using a wide assortment of data, most of them favouring the former over the latter (for a summary of these tests, see Table 1 in Ref. [18]). These studies have included high-z quasars [19], gamma-ray bursts [20], Type Ia SNe [21, 22], and cosmic chronometers [23]. The \(R_{\mathrm{h}}=ct\) model is characterized by a total equation of state \(p=-\rho /3\), in terms of the total pressure p and density \(\rho \) in the cosmic fluid.

The results of these comparative tests are not yet universally accepted, however, and several counterclaims have been made in recent years. One may loosely group these into four general categories: (1) that the gravitational radius (and therefore also the Hubble radius) \(R_{\mathrm{h}}\) is not really physically meaningful [24,25,26]; (2) that the zero active mass condition \(\rho +3p=0\) at the basis of the \(R_{\mathrm{h}}=ct\) cosmology is inconsistent with the actual constituents in the cosmic fluid [27]; (3) that the H(z) data favour \(\varLambda \)CDM over \(R_{\mathrm{h}}=ct\) [25, 28]; and (4) that Type Ia SNe also favour the concordance model over \(R_{\mathrm{h}}=ct\) [25, 28, 29]. These works, and papers published in response to them [17, 23, 30,31,32,33], have generated an important discussion concerning the viability of \(R_{\mathrm{h}}=ct\) that we aim to continue here. In Sect. 6 below, we will discuss at greater length the need to use truly model-independent data in these tests, and to base their analysis on sound statistical practice. Such due diligence is of utmost importance in any serious attempt to compare different cosmologies in an unbiased fashion.

The test most directly relevant to the work reported here was carried out using strong lenses by Ref. [7], who based their comparison on parametric fits from the models themselves, and concluded that both cosmologies account for the data rather well. The precision of the measurements used in that application, however, was not good enough to favour either model over the other. In this paper, we revisit that sample of strong lensing systems and use an entirely different approach for the comparison, based on Gaussian Processes (GP) to reconstruct the function representing the data non-parametrically. In so doing, the angular diameter distance to the lensing galaxies is determined without pre-assuming any model, providing a better comparison of the competing cosmologies using a functional area minimization statistic described in Sect. 5. An obvious benefit of this approach is that a reconstructed function representing the data may be found regardless of whether or not any of the models being tested is actually the correct cosmology.

In Sect. 2 of this paper, we describe the lensing equation used in cosmological tests, and we then describe the data used with this application in Sect. 3. The Gaussian processes and the cosmological models being tested here are summarized in Sect. 4. The area minimization statistic is introduced in Sect. 5, and we explain how this is used to obtain the model probabilities. We end with our conclusions in Sect. 6.

2 Theory of lensing

In work with strong lensing, the observed images are typically fitted using a singular isothermal ellipsoid (SIE) approximation for the lens [34]. The projected mass distribution at redshift \(z_l\) is assumed to be elliptical, with semi-major axis \(\theta _2\) and semi-minor axis \(\theta _1\). Often, an even simpler approximation suffices, and we make use of it in this paper: a singular isothermal sphere (SIS) lens model, in which the semi-major and semi-minor axes are equal, i.e., \(\theta _1=\theta _2\). To provide context for this approach, we first describe the SIE lens model and afterwards restrict it further by setting \(\theta _1=\theta _2\). The lens equation [35] that relates the position \(\beta \) in the source plane to the position \(\theta \) in the image plane is given by

\[\beta = \theta - \nabla _{\theta }\varPhi (\theta ), \tag{1}\]

where \(\varPhi \) is the lensing potential of the SIE given as [36]

and \(\epsilon \) is the ellipticity related to the eccentricity according to

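In Eq. (2), \(\theta _{\mathrm{E}}\) is the Einstein radius, defined as

\[\theta _{\mathrm{E}} = 4\pi \,\frac{\sigma _v^2}{c^2}\,\frac{D_A(z_l,z_s)}{D_A(0,z_s)}, \tag{4}\]

where \(\sigma _v\) is the velocity dispersion within the lens, and \(D_A(z_l,z_s)\) and \(D_A(0,z_s)\) are the angular diameter distances from the lens to the source and from the observer to the source, respectively. Their ratio defines

\[{\mathcal {D}} \equiv \frac{D_A(z_l,z_s)}{D_A(0,z_s)}. \tag{5}\]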

Notice that Eq. (4) is independent of the Hubble constant \(H_0\), which cancels in the distance ratio. Nonetheless, one must still measure \(\sigma _v\), the total velocity dispersion of stars and dark matter. Obtaining this quantity is challenging because it is not the same as the surface-brightness-weighted, line-of-sight velocity dispersion that is actually observed. The velocity dispersion of the SIS (\(\sigma _{SIS}\) or \(\sigma _v\)) may be related to the central velocity dispersion \(\sigma _0\), which is obtained from the stellar velocity dispersion within one-eighth of the effective optical radius (see, e.g., Refs. [4, 5]). Though this works quite well for massive elliptical galaxies, which are kinematically indistinguishable from an SIE within one effective radius, \(\sigma _{SIS}\) and \(\sigma _0\) are not actually equal. Dark matter is dynamically hotter than the luminous stars, so its velocity dispersion must be greater than that of the stars [37]. Treu et al. [4] studied the homogeneity of early-type galaxies using the large sample of lenses identified by the Sloan Lens ACS Survey (SLACS; [10, 38]) and found that \(f_{SIS}\equiv \langle {\sigma _{SIS}}/{\sigma _0}\rangle =1.010\pm 0.017\) when fitting the geometry of multiple images. Similar results were found in Ref. [39], which examined the ratio of stellar velocity dispersion to \(\sigma _{SIS}\) for different anisotropy parameters. The accumulated evidence therefore suggests that \(f_{SIS}=1.01\), and this is the value we adopt for this study. Thus, following Ref. [40], we write the Einstein radius as

\[\theta _{\mathrm{E}} = 4\pi \,\frac{f_{SIS}^2\,\sigma _0^2}{c^2}\,\frac{D_A(z_l,z_s)}{D_A(0,z_s)}, \tag{6}\]

so that the observed distance ratio for each lens system is

\[{\mathcal {D}}_{\mathrm{obs}} = \frac{c^2\,\theta _{\mathrm{E}}}{4\pi f_{SIS}^2\,\sigma _0^2}. \tag{7}\]

The data based on Eq. (6) will be used to compare our two cosmological models in this paper. The errors associated with individual measurements of \({\mathcal {D}}\) are calculated from the error propagation equation,

\[\sigma _{{\mathcal {D}}} = {\mathcal {D}}_{\mathrm{obs}}\sqrt{\left(\frac{\sigma _{\theta _{\mathrm{E}}}}{\theta _{\mathrm{E}}}\right)^2+4\left(\frac{\sigma _{\sigma _0}}{\sigma _0}\right)^2+4\left(\frac{\sigma _f}{f_{SIS}}\right)^2}, \tag{8}\]

containing \(\theta _{\mathrm{E}}\), \(\sigma _0\), \(f_{SIS}=1.01\) and its uncertainty \(\sigma _f=0.017\) (not to be confused with the GP hyperparameter \(\sigma _f\) introduced in Sect. 4). We follow Grillo et al. [5] and set \(\sigma _{\theta _{\mathrm{E}}}=0.05\, \theta _{\mathrm{E}}\) and \(\sigma _{\sigma _0}=0.05\,\sigma _0\). The overall dispersion in \({\mathcal {D}}\) is expected to be \(\sigma _{{\mathcal {D}}}\sim 0.11{\mathcal {D}}_{\mathrm{obs}}\).
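The chain from a measured Einstein radius to a data point with its error bar can be checked numerically. The Python sketch below is a minimal illustration under stated assumptions, not the code used in this study: it evaluates the SIS relation \(\theta _{\mathrm{E}}=4\pi f_{SIS}^2\sigma _0^2{\mathcal {D}}/c^2\), inverts it for \({\mathcal {D}}_{\mathrm{obs}}\), and propagates the 5% errors on \(\theta _{\mathrm{E}}\) and \(\sigma _0\), together with \(\sigma _f=0.017\) on \(f_{SIS}=1.01\), to first order. The function names and the example lens (\(\sigma _0=250\) km s\(^{-1}\), \({\mathcal {D}}=0.5\)) are hypothetical.

```python
import math

C_KM_S = 299792.458                          # speed of light [km/s]
RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0     # radians -> arcseconds


def einstein_radius_arcsec(sigma0_kms, dist_ratio, f_sis=1.0):
    """SIS Einstein radius theta_E = 4 pi (f_SIS sigma_0 / c)^2 * D, in arcsec."""
    theta_rad = 4.0 * math.pi * (f_sis * sigma0_kms / C_KM_S) ** 2 * dist_ratio
    return theta_rad * RAD_TO_ARCSEC


def d_obs(theta_e_arcsec, sigma0_kms, f_sis=1.01):
    """Observed distance ratio D_obs = c^2 theta_E / (4 pi f_SIS^2 sigma_0^2)."""
    theta_rad = theta_e_arcsec / RAD_TO_ARCSEC
    return theta_rad * C_KM_S ** 2 / (4.0 * math.pi * f_sis ** 2 * sigma0_kms ** 2)


def d_obs_error(d, frac_theta=0.05, frac_sigma0=0.05, f_sis=1.01, sigma_f=0.017):
    """First-order error on D_obs: theta_E enters linearly, sigma_0 and f_SIS squared,
    so their fractional errors are doubled before adding in quadrature."""
    rel = math.sqrt(frac_theta ** 2 + (2.0 * frac_sigma0) ** 2
                    + (2.0 * sigma_f / f_sis) ** 2)
    return rel * d


theta = einstein_radius_arcsec(250.0, 0.5, f_sis=1.01)
print(round(theta, 3), round(d_obs(theta, 250.0), 6), round(d_obs_error(1.0), 3))
```

For the hypothetical lens, the Einstein radius comes out at about 0.92 arcsec, the inversion returns \({\mathcal {D}}_{\mathrm{obs}}=0.5\), and the fractional error is about 0.117 (about 0.112 if the \(f_{SIS}\) term is dropped), of the same order as the \(\sigma _{{\mathcal {D}}}\sim 0.11{\mathcal {D}}_{\mathrm{obs}}\) quoted above.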

3 Data

The compilation we use here contains 158 strong lensing systems. These have excellent spectroscopic measurements of the central velocity dispersion, obtained with the Sloan Lens ACS (SLACS) Survey [4, 10, 11] and the Lenses Structure and Dynamics (LSD) Survey [12, 13]. Some of the original contributions to these datasets may also be found in Refs. [41,42,43,44,45,46,47,48]. The velocity dispersions (and their aforementioned errors of \(\sim 5\)%) are obtained from the Sloan Digital Sky Survey (SDSS) database.

Given that two distances are involved for each lens-source pairing, the GP method calls for a reconstruction of \({\mathcal {D}}(z_l,z_s)\) in two dimensions. This will only be feasible, however, once the sample is large enough to support this full approach statistically. For now, even with 158 strong lensing systems, we are constrained to consider narrow redshift ranges, effectively reducing the problem to a one-dimensional reconstruction within each sub-division. Because the data are less dispersed in the lens plane, where \(0.1<z_l<0.5\), than in the source plane, where \(0.3<z_s<3.0\), we carry out the reconstruction within thin source-redshift shells, turning \({\mathcal {D}}_{\mathrm{obs}}(z_l,z_s)\) into a one-dimensional function of \(z_l\) at what is essentially a fixed \(z_s\). To minimize the scatter in source redshifts, we use bins no wider than 0.025 and keep only those bins containing at least five data points, which allows us to reconstruct \({\mathcal {D}}_{\mathrm{obs}}(z_l,z_s)\) using GP in each selected bin. In our sample of 158 strong-lensing systems, these criteria yield five different redshift bins, each containing between 5 and 9 lens-source pairs. These strong lenses are listed in Tables 1, 2, 3, 4 and 5. Note that, for the purpose of GP reconstruction in one dimension, we assume that all the sources in redshift bin \((z_s,z_s+\varDelta z)\) have the same average redshift \(z_s+\varDelta z/2\).
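The shell selection described above can be sketched as follows. This is an illustrative Python sketch only: the scan over bin lower edges in steps of 0.01, the half-open bin convention, and the lens list are assumptions introduced here (the text specifies only the 0.025 width, the five-member minimum, and the use of the bin midpoint as \(\langle z_s\rangle \)). Scanning with a step smaller than the bin width naturally produces overlapping shells, like those listed in the caption of Fig. 2.

```python
def select_source_shells(lenses, width=0.025, min_count=5, step=0.01,
                         z_start=0.3, z_stop=3.0):
    """Scan half-open source-redshift bins [z_lo, z_lo + width) and keep those holding
    at least `min_count` lens-source pairs (bins may overlap).  Each kept bin is
    returned as (z_lo, z_hi, z_mid, members); z_mid = z_lo + width/2 is the single
    source redshift assigned to every member for the 1D reconstruction."""
    shells = []
    n_steps = int(round((z_stop - z_start) / step))
    for k in range(n_steps + 1):
        z_lo = round(z_start + k * step, 6)   # rounding avoids float drift in bin edges
        z_hi = round(z_lo + width, 6)
        members = [(zl, zs) for zl, zs in lenses if z_lo <= zs < z_hi]
        if len(members) >= min_count:
            shells.append((z_lo, z_hi, z_lo + width / 2.0, members))
    return shells


# Hypothetical (z_l, z_s) pairs, for illustration only.
lenses = [(0.20, 0.510), (0.25, 0.515), (0.30, 0.520), (0.22, 0.525),
          (0.28, 0.530), (0.30, 1.200), (0.40, 2.000)]
for z_lo, z_hi, z_mid, members in select_source_shells(lenses):
    print(f"({z_lo}, {z_hi}) -> <z_s> = {z_mid:.4f}, N = {len(members)}")
```

With the hypothetical pairs above, only the shell (0.51, 0.535) survives, with five members and \(\langle z_s\rangle =0.5225\).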

4 Gaussian processes and model comparisons

Adapting the Python code developed by Seikel et al. [51] for Gaussian Processes, we reconstruct \({\mathcal {D}}_\mathrm{obs}(z_l,\langle z_s\rangle )\) for each of the sub-samples in Tables 1, 2, 3, 4 and 5, without assuming any model a priori. A GP generalizes the Gaussian distribution: whereas a Gaussian distribution describes a single random variable, a GP describes a distribution over functions. The reconstruction of a function f(x) at x using GP yields a Gaussian random variable with mean \(\mu (x)\) and variance \(\sigma ^2(x)\). The function reconstructed at x, however, is not independent of that reconstructed at \(\tilde{x}=x+dx\), the two being related by a covariance function \(k(x,\tilde{x})\). Although many forms of k are possible, we use one that depends only on the distance between x and \(\tilde{x}\), namely the squared exponential covariance function defined as

\[k(x,\tilde{x}) = \sigma _f^2\,\exp \left[-\frac{(x-\tilde{x})^2}{2\varDelta ^2}\right]. \tag{9}\]

Note that this function depends on two hyperparameters, \(\sigma _f\) and \(\varDelta \): \(\sigma _f\) sets the typical amplitude of the function's variation in the y-direction, while \(\varDelta \) is the distance in the x-direction over which a significant change occurs. Together, these two hyperparameters characterize the smoothness of the reconstructed function. They are trained on the data using a maximum likelihood procedure, which leads to the reconstructed \({\mathcal {D}}_{\mathrm{obs}}(z_l,\langle z_s\rangle )\) function for each source redshift shell centered on \(z_s\). For the data used in this paper, we find the optimized values \(\sigma _f=0.144\) and \(\varDelta =0.661\).
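Training the two hyperparameters by maximum likelihood amounts to maximizing the GP log marginal likelihood of the data. The NumPy sketch below shows this with a coarse grid search; it is not the optimizer of Ref. [51], the grid values and example data are hypothetical, and subtracting the data mean (i.e., a constant prior mean) is our own assumption, made so that a GP with a small \(\sigma _f\) is not pulled toward \({\mathcal {D}}=0\).

```python
import numpy as np


def se_kernel(x1, x2, sigma_f, delta):
    """Squared-exponential covariance k(x, x~) = sigma_f^2 exp[-(x - x~)^2 / (2 delta^2)]."""
    d = np.subtract.outer(np.asarray(x1, float), np.asarray(x2, float))
    return sigma_f ** 2 * np.exp(-0.5 * (d / delta) ** 2)


def log_marginal_likelihood(x, y, sig_y, sigma_f, delta):
    """ln p(y | sigma_f, delta) for a zero-mean GP with independent Gaussian errors sig_y."""
    K = se_kernel(x, x, sigma_f, delta) + np.diag(np.asarray(sig_y, float) ** 2)
    L = np.linalg.cholesky(K)                        # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    return float(-0.5 * y @ alpha - np.sum(np.log(np.diag(L)))
                 - 0.5 * len(y) * np.log(2.0 * np.pi))


def train_hyperparameters(x, y, sig_y, sf_grid, delta_grid):
    """Grid-search maximum-likelihood (sigma_f, delta).  The data mean is subtracted
    first, i.e. a constant prior mean is assumed (an illustrative choice)."""
    yc = np.asarray(y, float) - np.mean(y)
    best = max((log_marginal_likelihood(x, yc, sig_y, sf, dl), sf, dl)
               for sf in sf_grid for dl in delta_grid)
    return best[1], best[2]


x = np.linspace(0.1, 0.5, 7)       # hypothetical lens redshifts
y = 0.9 - 0.6 * x                  # hypothetical D_obs values
sig = 0.11 * y                     # ~11% errors, as quoted in Sect. 2
print(train_hyperparameters(x, y, sig, [0.05, 0.1, 0.2, 0.4], [0.2, 0.5, 1.0, 2.0]))
```

A gradient-based optimizer would normally replace the grid; the grid is used here only to keep the example short and transparent.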

One of the principal features of the GP approach that we highlight in this application to strong lenses concerns the estimation of the \(1\sigma \) confidence region attached to the reconstructed \({\mathcal {D}}_{\mathrm{obs}}(z_l,\langle z_s\rangle )\) curves. This region depends on the actual errors of the individual data points, \(\sigma _{{\mathcal {D}}i}\), on the optimized hyperparameter \(\sigma _f\) (see Eq. 9), and on the product \(K_*K^{-1}K_*^T\) (see Ref. [51]), where \(K_*\) is the covariance matrix (here a row vector) between the point of estimation \(x_*\) and the data points \(x_i\), given by

\[K_* = \left[k(x_*,x_1),\,k(x_*,x_2),\,\ldots ,\,k(x_*,x_n)\right]. \tag{10}\]

Here K is the covariance matrix for the original dataset. Note that the dispersion at point \(x_i\) will be less than \(\sigma _{{\mathcal {D}}i}\) when \(K_*K^{-1}K_*^T>\sigma _f\), i.e., when there is a large correlation between the data at that point of estimation. From Eq. (9), it is clear that the correlation between any two points x and \(\tilde{x}\) will be large only when \(|x-\tilde{x}|<\sqrt{2}\varDelta \). This condition is satisfied for most of the strong lenses used in our study, which results in GP-estimated \(1\sigma \) confidence regions that are smaller than the errors in the original data. We refer the reader to Ref. [51] for further details.
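The narrowing of the \(1\sigma \) region can be seen directly in the standard GP predictive equations. The NumPy sketch below assumes a constant prior mean equal to the data mean and adds the data covariance \(C=\mathrm{diag}(\sigma _{{\mathcal {D}}i}^2)\) to K before inversion (the usual treatment of noisy data); it returns the posterior mean and \(1\sigma \) width at any set of points \(x_*\). The function name and example inputs are illustrative, and the hyperparameters are the values quoted above.

```python
import numpy as np


def se_kernel(x1, x2, sigma_f, delta):
    """Squared-exponential covariance k(x, x~) = sigma_f^2 exp[-(x - x~)^2 / (2 delta^2)]."""
    d = np.subtract.outer(np.asarray(x1, float), np.asarray(x2, float))
    return sigma_f ** 2 * np.exp(-0.5 * (d / delta) ** 2)


def gp_reconstruct(x, y, sig_y, x_star, sigma_f, delta, prior_mean=None):
    """GP posterior mean and 1-sigma width at x_star:
        mean = m + K_* (K + C)^{-1} (y - m)
        var  = sigma_f^2 - diag[K_* (K + C)^{-1} K_*^T]
    with C = diag(sig_y^2) and m a constant prior mean (default: the data mean)."""
    y = np.asarray(y, float)
    m = float(np.mean(y)) if prior_mean is None else prior_mean
    K = se_kernel(x, x, sigma_f, delta) + np.diag(np.asarray(sig_y, float) ** 2)
    K_star = se_kernel(x_star, x, sigma_f, delta)       # one row K_* per point x_*
    mean = m + K_star @ np.linalg.solve(K, y - m)
    v = np.linalg.solve(K, K_star.T)                    # (K + C)^{-1} K_*^T
    var = sigma_f ** 2 - np.sum(K_star * v.T, axis=1)   # diagonal of K_* (K+C)^{-1} K_*^T
    return mean, np.sqrt(np.clip(var, 0.0, None))


x = np.linspace(0.1, 0.5, 8)        # hypothetical lens redshifts
y = 0.9 - 0.6 * x                   # hypothetical D_obs values
sig = 0.11 * y
mean, std = gp_reconstruct(x, y, sig, x, sigma_f=0.144, delta=0.661)
print(np.round(std / sig, 2))       # GP 1-sigma width relative to each input error
```

Because \(\varDelta \) is much larger than the spacing of the hypothetical lens redshifts, every printed ratio is below unity, which is the effect described in the preceding paragraph.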

The principal goal of this paper is to use a GP reconstruction of the \({\mathcal {D}}_{\mathrm{obs}}(z_l,\langle z_s\rangle )\) functions in order to compare the predictions of the \(\varLambda \)CDM and \(R_\mathrm{h}=ct\) cosmological models. The standard model contains radiation (photons and neutrinos), matter (baryonic and dark) and dark energy in the form of a cosmological constant. This blend of constituents, currently dominated by dark energy, is producing a phase of accelerated expansion, following an earlier period of deceleration when radiation and then matter were dominant. In terms of today’s critical density \(\rho _c\equiv 3c^2H_0^2/8\pi G\) and Hubble constant \(H_0\), the Hubble expansion rate in this cosmology depends on the matter density, \(\varOmega _{\mathrm{m}}\equiv {\rho _{\mathrm{m}}}/{\rho _c}\), radiation density, \(\varOmega _{\mathrm{r}}\equiv {\rho _{\mathrm{r}}}/{\rho _c}\), and dark energy density, \(\varOmega _{\mathrm{de}}\equiv {\rho _{\mathrm{de}}}/{\rho _c}\), with the constraint \(\varOmega _{\mathrm{m}}+\varOmega _{\mathrm{r}}+\varOmega _{\mathrm{de}}=1\). Since \(\varOmega _{\mathrm{r}}\) is negligible in the current era, we ignore radiation and use \(\varOmega _{\mathrm{de}}=1-\varOmega _{\mathrm{m}}\). For all the calculations, we use the parameters optimized by Planck, with \(\varOmega _{\mathrm{m}}=0.272\) and \(\varOmega _{\mathrm{de}}=0.728\). Thus, one deduces from the Friedmann equation that in \(\varLambda \)CDM

\[H(z) = H_0\sqrt{\varOmega _{\mathrm{m}}(1+z)^3+\varOmega _{\mathrm{de}}}. \tag{11}\]

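The angular diameter distance between redshifts \(z_1\) and \(z_2\) in a spatially flat universe (as in both models considered here) is given as

\[D_A(z_1,z_2) = \frac{c}{1+z_2}\int _{z_1}^{z_2}\frac{dz}{H(z)}. \tag{12}\]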

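Therefore, substituting for H(z) from Eq. (11), one gets

\[D_A^{\varLambda \mathrm{CDM}}(z_1,z_2) = \frac{c}{H_0(1+z_2)}\int _{z_1}^{z_2}\frac{dz}{\sqrt{\varOmega _{\mathrm{m}}(1+z)^3+\varOmega _{\mathrm{de}}}}. \tag{13}\]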

The \(R_{\mathrm{h}}=ct\) universe [16, 17, 52,53,54] is also an FRW cosmology with radiation (photons and neutrinos), matter (baryonic and dark) and dark energy, with radiation and dark energy dominating the early Universe, and matter and dark energy dominating the current era [55]. But while it is similar to \(\varLambda \)CDM in this regard, it has an additional constraint on the total equation of state, i.e., \(\rho +3p=0\), the so-called zero active mass condition, where \(\rho \) and p are the total energy density and pressure, respectively. With this additional constraint, the \(R_\mathrm{h}=ct\) universe always expands at a constant rate, which depends on only one parameter, the Hubble constant \(H_0\). Using the Friedmann equation with zero active mass, we find that

\[H(z) = H_0(1+z), \tag{14}\]

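and from Eq. (12), we therefore find that

\[D_A^{R_{\mathrm{h}}=ct}(z_1,z_2) = \frac{c}{H_0(1+z_2)}\,\ln \left(\frac{1+z_2}{1+z_1}\right). \tag{15}\]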
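The two model predictions for \({\mathcal {D}}\) follow from the distance ratio with the \(\varLambda \)CDM and \(R_{\mathrm{h}}=ct\) expansion rates, \(H(z)=H_0\sqrt{\varOmega _{\mathrm{m}}(1+z)^3+\varOmega _{\mathrm{de}}}\) and \(H(z)=H_0(1+z)\). In the ratio the prefactor \(c/[H_0(1+z_s)]\) cancels, so neither prediction depends on \(H_0\), and in \(R_{\mathrm{h}}=ct\) the ratio has no free parameters at all. The Python sketch below evaluates both, using Simpson's rule for the \(\varLambda \)CDM integral; the function names and sample redshifts are illustrative.

```python
import math


def simpson(f, a, b, n=200):
    """Composite Simpson's rule for the integral of f over [a, b] (n rounded up to even)."""
    n += n % 2
    h = (b - a) / n
    s = f(a) + f(b)
    for i in range(1, n):
        s += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return s * h / 3.0


def comoving_integral_lcdm(z1, z2, omega_m=0.272):
    """Dimensionless int_{z1}^{z2} dz / E(z), E = sqrt(Om (1+z)^3 + 1 - Om): flat, no radiation."""
    return simpson(lambda z: 1.0 / math.sqrt(omega_m * (1.0 + z) ** 3 + 1.0 - omega_m),
                   z1, z2)


def dist_ratio_lcdm(z_l, z_s, omega_m=0.272):
    """D = D_A(z_l, z_s) / D_A(0, z_s); the prefactor c / [H0 (1 + z_s)] cancels."""
    return comoving_integral_lcdm(z_l, z_s, omega_m) / comoving_integral_lcdm(0.0, z_s, omega_m)


def dist_ratio_rh(z_l, z_s):
    """R_h = ct: H = H0 (1 + z), so D = ln[(1 + z_s)/(1 + z_l)] / ln(1 + z_s)."""
    return math.log((1.0 + z_s) / (1.0 + z_l)) / math.log(1.0 + z_s)


z_s = 0.5225   # centre of the shell (0.51, 0.535)
for z_l in (0.1, 0.2, 0.3):
    print(z_l, round(dist_ratio_lcdm(z_l, z_s), 4), round(dist_ratio_rh(z_l, z_s), 4))
```

For the shell centred at \(\langle z_s\rangle =0.5225\), the two ratios at \(z_l=0.2\) are roughly 0.59 (\(\varLambda \)CDM) and 0.57 (\(R_{\mathrm{h}}=ct\)). A difference of this size is small compared with the \(\sim 11\%\) error on each \({\mathcal {D}}_{\mathrm{obs}}\), which illustrates why individual lenses cannot easily separate the two models.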

5 The area minimization statistic

Because we are dealing with a comparison between two continuous functions, i.e., \({\mathcal {D}}_{\mathrm{obs}}\) with either \({\mathcal {D}}^{\varLambda {\mathrm{CDM}}}\) or \({\mathcal {D}}^{R_{\mathrm{h}}=ct}\) (each derived from Eq. 5 using Eqs. 13 and 15), we cannot use discrete sampling statistics, such as weighted least squares, to compare the models. The reason is that sampling at random points to obtain the squares of the differences between a model and the reconstructed curve would lose the information between these points, whose importance cannot be ascertained prior to the sampling. To overcome this deficiency, we introduce a new statistic, based on a previous application [56, 57], which we call the “Area Minimization Statistic”, to estimate each model’s probability of being consistent with the data. Our principal assumption is that the measurement errors are Gaussian, which we use to generate a mock sample of GP reconstructed curves representing the possible variation of \({\mathcal {D}}\) away from \({\mathcal {D}}_{\mathrm{obs}}\). We do this by employing the Gaussian randomization

\[{\mathcal {D}}_i(z_l,\langle z_s\rangle ) = {\mathcal {D}}_{i,\,\mathrm{obs}}(z_l,\langle z_s\rangle ) + r\,\sigma _{{\mathcal {D}}_i}, \tag{16}\]

where \({\mathcal {D}}_{i,\,\mathrm{obs}}(z_l,\langle z_s\rangle )\) are the actual measurements as a function of \(z_l\) for each source shell \(\langle z_s\rangle \). \(\sigma _{{\mathcal {D}}_i}\) are the actual observed errors and r is a Gaussian random variable with zero mean and a variance of 1. Next, these \({\mathcal {D}}_i(z_l,\langle z_s\rangle )\) are used together with the errors \(\sigma _{{\mathcal {D}}_i}\) to reconstruct the function \({\mathcal {D}}_{\mathrm{mock}}(z_l,\langle z_s\rangle )\) corresponding to each mock sample, and finally we calculate the weighted absolute area difference between \({\mathcal {D}}_{\mathrm{mock}}(z_l,\langle z_s\rangle )\) and the GP reconstructed function of the actual data according to

In this expression, \(z_{\mathrm{min}}\) and \(z_{\mathrm{max}}\) are the minimum and maximum redshifts, respectively, of the data range. We repeat this procedure 10,000 times to build a distribution of frequency versus area differential DA, and from it construct the cumulative probability distribution. In Fig. 1 we show these quantities for the illustrative source shell \(0.50<z_s<0.525\) (the frequency is shown in the top panel, and the cumulative probability distribution is on the bottom). This procedure generates a 1-to-1 mapping between the value of DA and the frequency with which it arises. With the additional assumption that curves with a smaller DA are a better match to \({\mathcal {D}}_{\mathrm{obs}}\), one can then use the cumulative distribution to estimate the probability that the difference between a model’s prediction and the reconstructed curve is merely due to Gaussian randomness. When comparing a model’s prediction to the data, we therefore calculate its DA and use our 1-to-1 mapping to determine the probability that its inconsistency with the data is just due to variance, rather than the model being wrong. These are the probabilities we then compare to determine which model is more likely to be correct. This basic concept is common to many kinds of statistical approaches, though none of the existing ones can be used when comparing two continuous curves, as we have here.
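The Monte Carlo procedure behind the area minimization statistic can be sketched in NumPy as follows. The mocks follow the Gaussian randomization described above, and each is reconstructed with a GP using fixed hyperparameters and a constant prior mean equal to the data mean. Two ingredients are our own assumptions for illustration: we normalize \(\int |{\mathcal {D}}_{\mathrm{mock}}-{\mathcal {D}}_{\mathrm{obs}}|\,dz_l\) by \(\int {\mathcal {D}}_{\mathrm{obs}}\,dz_l\), and we read a model's probability as the fraction of mocks whose DA is at least as large as the model's, i.e., one minus the cumulative distribution at \(DA_{\mathrm{model}}\). Function names and inputs (NumPy arrays x, y, sig_y) are hypothetical.

```python
import numpy as np


def se_kernel(x1, x2, sigma_f, delta):
    """Squared-exponential covariance k(x, x~) = sigma_f^2 exp[-(x - x~)^2 / (2 delta^2)]."""
    d = np.subtract.outer(np.asarray(x1, float), np.asarray(x2, float))
    return sigma_f ** 2 * np.exp(-0.5 * (d / delta) ** 2)


def gp_mean(x, y, sig_y, grid, sigma_f, delta):
    """GP posterior mean on `grid`, with the data mean used as a constant prior mean."""
    m = float(np.mean(y))
    K = se_kernel(x, x, sigma_f, delta) + np.diag(sig_y ** 2)
    return m + se_kernel(grid, x, sigma_f, delta) @ np.linalg.solve(K, y - m)


def area(f, z):
    """Trapezoidal integral of samples f on grid z."""
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(z)))


def area_difference(curve, ref, z):
    """DA between two curves; normalising by the area under |ref| is an assumed weighting."""
    return area(np.abs(curve - ref), z) / area(np.abs(ref), z)


def mock_da_distribution(x, y, sig_y, sigma_f, delta, n_mock=10_000, n_grid=200, seed=0):
    """Perturb the data as D_i = D_i,obs + r sigma_Di, reconstruct each mock, record DA."""
    rng = np.random.default_rng(seed)
    z = np.linspace(x.min(), x.max(), n_grid)
    d_rec = gp_mean(x, y, sig_y, z, sigma_f, delta)     # reconstruction of the real data
    da = np.empty(n_mock)
    for i in range(n_mock):
        y_mock = y + rng.standard_normal(y.size) * sig_y
        da[i] = area_difference(gp_mean(x, y_mock, sig_y, z, sigma_f, delta), d_rec, z)
    return z, d_rec, np.sort(da)


def model_probability(model_curve, z, d_rec, da_sorted):
    """Fraction of mocks with DA >= DA_model, i.e. 1 - CDF(DA_model)."""
    da_model = area_difference(model_curve, d_rec, z)
    n_below = np.searchsorted(da_sorted, da_model, side="left")
    return 1.0 - n_below / da_sorted.size


x = np.linspace(0.15, 0.45, 7)                     # hypothetical lens redshifts
y = np.log(1.5225 / (1 + x)) / np.log(1.5225)      # hypothetical data values
sig = 0.11 * y
z, d_rec, da = mock_da_distribution(x, y, sig, 0.144, 0.661, n_mock=2000)
rh_curve = np.log(1.5225 / (1 + z)) / np.log(1.5225)
print(model_probability(rh_curve, z, d_rec, da))
```

A model curve identical to the reconstruction receives probability 1, and one that departs from it by much more than the GP band receives probability 0; realistic models fall in between.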

The reconstructed curves for our five subsamples are shown in the left-hand panels of Fig. 2. These correspond to the five source redshift shells in Tables 1, 2, 3, 4 and 5. The corresponding cumulative probability distributions are plotted in the right-hand panels, which also locate the DA values for \(R_{\mathrm{h}}=ct\) (yellow) and \(\varLambda \)CDM (red). The probabilities associated with these differential areas are summarized in Table 6. Along with the reconstructed functions, the left-hand panels also show the corresponding \(1\sigma \) (dark) and \(2\sigma \) (light) confidence regions provided by the GP, and the theoretical predictions in \(\varLambda \)CDM (dashed) and \(R_{\mathrm{h}}=ct\) (dotted). As we highlighted earlier, the functions \({\mathcal {D}}_{\mathrm{obs}}(z_l,\langle z_s\rangle )\) have been reconstructed without pre-assuming any parametric form, so in principle they represent the actual variation of \({\mathcal {D}}\) with redshift, regardless of whether or not either of the two models being tested here is the correct cosmology.

The overall impression one gets from the results displayed in Fig. 2 and summarized in Table 6 is that, for every source redshift shell sampled here, the probability of \(R_{\mathrm{h}}=ct\) being consistent with the GP reconstructed function \({\mathcal {D}}_\mathrm{obs}\) is \(\sim \)10–30% higher than that for \(\varLambda \)CDM. Future surveys will greatly grow the sample of sources available for this type of analysis, differentiating between these two models with greater confidence.

Fig. 2 Left panels (a, c, e, g, i): the solid curve in each plot indicates the reconstructed \({\mathcal {D}}_\mathrm{obs}\) function using Gaussian processes, for the source redshift ranges (0.51, 0.535), (0.52, 0.545), (0.45, 0.475), (0.5, 0.525), and (0.46, 0.485). The dotted curve indicates the predicted \({\mathcal {D}}\) in the \(R_{\mathrm{h}}=ct\) universe, and the dashed curve indicates the corresponding \({\mathcal {D}}\) in \(\varLambda \)CDM. In each of these panels, dark blue represents the \(1\sigma \) confidence region, and light blue the \(2\sigma \) region. Right panels (b, d, f, h, j): the corresponding cumulative probability distributions.

6 Conclusions

In this paper, we have introduced the GP reconstruction approach to strong lensing studies, though clearly the available sample is still not large enough for us to make full use of this method. As noted earlier, one of the principal benefits of this technique is that the function (in this case \({\mathcal {D}}_{\mathrm{obs}}\)) representing the data may be obtained without the assumption of any parametric form associated with particular models. This allows one to test different models against the actual \({\mathcal {D}}_{\mathrm{obs}}\), rather than against each other’s predictions, neither of which may be a good representation of the measurements. In addition, GP provide \(1\sigma \) and \(2\sigma \) confidence regions for the reconstructed functions more in line with the population as a whole, rather than individual data points, greatly restricting the ability of ‘incorrect’ models to adequately fit the observations due to otherwise large measurement errors.

This is reflected in the probabilities quoted in Table 6 for the two models we have examined here. Unlike previous model comparisons based on the use of parametric fits to the strong-lensing data, we now find that \(R_{\mathrm{h}}=ct\) is favoured over \(\varLambda \)CDM with consistently higher likelihoods in all five source redshift shells we have assembled for this work. Though these statistics are still quite limited, it is nonetheless telling that the differentiation between models improves as the number of sources within each shell increases. Also, at least for \(R_{\mathrm{h}}=ct\), the probability of its predictions matching the GP reconstructed functions generally increases as the size of the lens sample grows. The outcome of this work underscores the importance of using unbiased data and sound statistical methods when comparing different cosmological models. As a counterexample, consider the use of H(z) measurements based on BAO observations instead of cosmic chronometers [28], constituting an unwitting use of model-dependent measurements to test competing models. Such an approach ignores the significant limitations in all but the three most recent BAO measurements [58, 59] for this type of work. Previous applications of the galaxy two-point correlation function to measure the BAO scale were contaminated with redshift distortions associated with internal gravitational effects [59]. To illustrate the significance of these limitations, and the impact of the biased BAO measurements of H(z), note how the model favoured by the data switches from \(\varLambda \)CDM to \(R_{\mathrm{h}}=ct\) when only the unbiased measurements are used [61].

A second counterexample is provided by the merger of disparate sub-samples of Type Ia SNe to improve the statistical analysis. We have already published an in-depth explanation of the perils associated with the blending of data with different systematics for the purpose of model selection [62], but let us nonetheless consider a brief synopsis here. The Union2.1 catalog [63, 64] includes \(\approx 580\) SN detections, though each sub-sample has its own systematic and intrinsic uncertainties. The conventional approach minimizes an overall \(\chi ^2\), while each sub-sample is assigned an intrinsic dispersion to ensure that \(\chi ^2_{\mathrm{dof}}=1\) [28, 29]. Instead, the statistically correct approach would estimate the unknown intrinsic dispersions simultaneously with all other parameters [62, 65]. Quite tellingly, the outcome of the model selection is reversed when one switches from the improper statistical approach to the correct one. To emphasize how critical this reversal is in the case of \(\varLambda \)CDM, one simply needs to compare the outcome of using a merged super-sample with that produced with a large, single homogeneous sample, such as the Supernova Legacy Survey Sample [66].

Within this context, the GP reconstruction approach is promising for the study of strong lenses because, although these systems have been used to measure cosmological parameters for over a decade (see, e.g., Refs. [4,5,6, 67]), the results of this effort have thus far been less precise than those of other kinds of observations. For the most part, these earlier studies were based on parametric fits to the data, but it is quite evident (e.g., from Figs. 1 and 2 in Ref. [7]) that the scatter in \({\mathcal {D}}_{\mathrm{obs}}\) about the theoretical curves generally increases significantly as \(D_A(z_l,z_s) \rightarrow D_A(0,z_s)\). That is, measuring \({\mathcal {D}}\) incurs a progressively greater error as the distance to the gravitational lens becomes a smaller fraction of the distance to the quasar source. This is because \(\theta _{\mathrm{E}}\) changes less for large values of \(z_s/z_l\), so that, for a fixed error in the Einstein angle, the measurement of \({\mathcal {D}}_{\mathrm{obs}}\) becomes less precise. As we have demonstrated in this paper, the analysis of strong lensing systems based on a GP reconstruction of \({\mathcal {D}}_{\mathrm{obs}}\) improves our ability to distinguish between different models, albeit by a modest amount given the current sample.

Upcoming survey projects, such as the Dark Energy Survey (DES; [68]), the Large Synoptic Survey Telescope (LSST; [69]), the Joint Dark Energy Mission (JDEM; [70]), and the Square Kilometer Array (SKA; e.g., Ref. [71]), are expected to greatly grow the size of the lens sample. The ability of GP reconstruction methods to differentiate between models will increase in tandem with this growth. Several sources of uncertainty still remain, however, including the actual mass distribution within the lens. Since such errors appear to be more limiting for lens systems with large values of \(z_s/z_l\), a priority for future work should be the identification of strong lenses with small angular diameter distances between the source and lens relative to the distance between the lens and observer.

References

M. Bartelmann, P. Schneider, AA 345, 17 (1999)

A. Refregier, ARAA 41, 645 (2003)

T. Futamase, S. Yoshida, PThPh 105, 887 (2001)

T. Treu, L.V.E. Koopmans, A.S. Bolton, S. Burles, L.A. Moustakas, ApJ 640, 662 (2006)

C. Grillo, M. Lombardi, G. Bertin, AA 477, 397 (2008)

M. Biesiada, A. Piórkowska, B. Malec, MNRAS 406, 1055 (2010)

F. Melia, J.-J. Wei, X.-F. Wu, AJ 149, 2 (2015)

S. Cao et al., ApJ 806, 185 (2015)

Y. Shu et al., ApJ 851, 48 (2017)

A.S. Bolton, S.M. Burles, L.V.E. Koopmans, T. Treu, L.A. Moustakas, ApJ 638, 703 (2006)

L.V.E. Koopmans, T. Treu, A.S. Bolton, S. Burles, L.A. Moustakas, ApJ 649, 599 (2006)

A.S. Bolton, S.M. Burles, L.V.E. Koopmans et al., ApJ 682, 964 (2008)

E.R. Newton, P.J. Marshall, T. Treu, SLACS collaboration. ApJ 734, 104 (2011)

A.G. Riess et al., AJ 116, 1009 (1998)

S. Perlmutter et al., Nature 391, 51 (1998)

F. Melia, MNRAS 382, 1917 (2007)

F. Melia, A. Shevchuk, MNRAS 419, 2579 (2012)

F. Melia, MNRAS 464, 1966 (2017)

F. Melia, ApJ 764, 72 (2013)

J.-J. Wei, X. Wu, F. Melia, ApJ 772, 43 (2013)

J.-J. Wei, X.-F. Wu, F. Melia, R.S. Maier, AJ 149, 102 (2015)

F. Melia, J.-J. Wei, R.S. Maier, X. Wu, EPL (2017) (submitted)

F. Melia, R.S. Maier, MNRAS 432, 2669 (2013)

P. van Oirschot, J. Kwan, G.F. Lewis, MNRAS 404, 1633 (2010)

M. Bilicki, M. Seikel, MNRAS 425, 1664 (2012)

G.F. Lewis, P. van Oirschot, MNRAS Lett. 423, 26 (2012)

G.F. Lewis, MNRAS 432, 2324 (2013)

D.L. Shafer, Phys. Rev. D 91, 103516 (2015)

D. Rubin, B. Hayden, ApJL 833, L30 (2016)

O. Bikwa, F. Melia, A.S.H. Shevchuk, MNRAS 421, 3356 (2012)

F. Melia, JCAP 09, 029 (2012)

F. Melia, Astrophys. Sp. Sci. 356, 393 (2015)

F. Melia, MNRAS 446, 1191 (2015)

K.U. Ratnatunga, R.E. Griffiths, E.J. Ostrander, AJ 117, 2010 (1999)

P. Schneider, J. Ehlers, E.E. Falco, Gravitational lenses (Springer, Berlin, 1992)

R. Kormann, P. Schneider, M. Bartelmann, AA 284, 285 (1994)

R.E. White, D.S. Davis, BAAS 28, 1323 (1996)

A.S. Bolton, S.M. Burles, L.V.E. Koopmans, T. Treu, L.A. Moustakas, ApJL 624, L21 (2005)

G. van de Ven, P.G. van Dokkum, M. Franx, MNRAS 344, 924 (2003)

S. Cao, Y. Pan, M. Biesiada, W. Godlowski, Z.-H. Zhu, JCAP 3, 16 (2012)

P. Young, J.E. Gunn, J. Kristian, J.B. Oke, J.A. Westphal, ApJ 241, 507 (1980)

J. Huchra, M. Gorenstein, S. Kent et al., AJ 90, 691 (1985)

J. Lehar, G.I. Langston, A. Silber, C.R. Lawrence, B.F. Burke, AJ 105, 847 (1993)

C.D. Fassnacht, D.S. Womble, G. Neugebauer et al., ApJL 460, L103 (1996)

J.L. Tonry, AJ 115, 1 (1998)

L.V.E. Koopmans, T. Treu, ApJ 568, 5 (2002)

L.V.E. Koopmans, T. Treu, ApJ 583, 606 (2003)

T. Treu, L.V.E. Koopmans, ApJ 611, 739 (2004)

T. Treu, L.V.E. Koopmans, MNRAS 343, 29 (2003)

T. Treu, L.V.E. Koopmans, ApJ 575, 87 (2002)

M. Seikel, C. Clarkson, M. Smith, JCAP 06, 036 (2012)

F. Melia, M. Abdelqader, IJMPD 18, 1889 (2009)

F. Melia, Front. Phys. 11, 119801 (2016)

F. Melia, Front. Phys. 12, 129802 (2017)

F. Melia, M. Fatuzzo, MNRAS 456, 3422 (2016)

M.K. Yennapureddy, F. Melia, JCAP 11, 029 (2017)

F. Melia, M.K. Yennapureddy, JCAP 02, 034 (2018)

L. Anderson et al., MNRAS 441, 24 (2014)

T. Delubac et al., AA 574, A59 (2015)

M. López-Corredoira, ApJ 781, 96 (2014)

F. Melia, M. López-Corredoira, IJMPD 26, 1750055 (2017)

J.-J. Wei, X. Wu, F. Melia, R.S. Maier, AJ 149, 102 (2015)

M.R. Kowalski, ApJ 686, 749 (2008)

N. Suzuki et al., ApJ 746, 85 (2012)

A.G. Kim, PASP 123, 230 (2011)

M. Betoule et al., AA 568, A22 (2014)

M. Biesiada, B. Malec, A. Piórkowska, RAA 11, 641 (2011)

J. Frieman, Dark energy survey collaboration. BAAS 36, 1462 (2004)

A. Tyson, R. Angel, The new era of wide field astronomy. ASP conference series, vol. 232. Ed. R. Clowes, A. Adamson, G. Bromage. San Francisco: ASP, p. 347 (2001)

A. Tyson, ASP conference series, vol. 339, Observing dark energy, Ed. S. C. Wolff, T. R. Lauer, p. 95 (2005)

J. McKean, N. Jackson, S. Vegetti, M. Rybak, S. Serjeant, L.V.E. Koopmans, R. B. Metcalf, C. Fassnacht, P. J. Marshall, M. Pandey-Pommier, In: Proceedings of advancing astrophysics with the square kilometre array (AASKA14). 9–13 June 2014. Giardini Naxos, Italy (2015)

Acknowledgements

FM is grateful to the Instituto de Astrofísica de Canarias in Tenerife and to Purple Mountain Observatory in Nanjing, China for their hospitality while part of this research was carried out. FM is also grateful for partial support to the Chinese Academy of Sciences Visiting Professorships for Senior International Scientists under Grant 2012T1J0011, and to the Chinese State Administration of Foreign Experts Affairs under Grant GDJ20120491013.

Additional information

F. Melia: John Woodruff Simpson Fellow.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Yennapureddy, M.K., Melia, F. Cosmological tests with strong gravitational lenses using Gaussian processes. Eur. Phys. J. C 78, 258 (2018). https://doi.org/10.1140/epjc/s10052-018-5746-8
