Abstract

A measurement of W+W− production in pp collisions at \(\sqrt{s} = 7~\mathrm{TeV}\) is presented. The data were collected with the CMS detector at the LHC, and correspond to an integrated luminosity of 4.92±0.11 fb−1. The W+W− candidates consist of two oppositely charged leptons, electrons or muons, accompanied by large missing transverse energy. The W+W− production cross section is measured to be 52.4±2.0 (stat.)±4.5 (syst.)±1.2 (lum.) pb. This measurement is consistent with the standard model prediction of 47.0±2.0 pb at next-to-leading order. Stringent limits on the WWγ and WWZ anomalous triple gauge-boson couplings are set.

1 Introduction

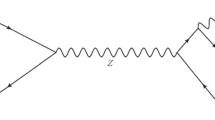

The standard model (SM) description of electroweak and strong interactions can be tested through measurements of the W+W− production cross section at a hadron collider. The s-channel and t-channel \(\mathrm {q} \overline {\mathrm {q}} \) annihilation diagrams, shown in Fig. 1, correspond to the dominant process in the SM, at present energies. The gluon–gluon diagrams, which contain a loop at lowest order, contribute only 3 % of the total cross section [1] at \(\sqrt{s} = 7~\mathrm{TeV}\). WWγ and WWZ triple gauge-boson couplings (TGCs) [2], responsible for s-channel W+W− production, are sensitive to possible new physics processes at a higher mass scale. Anomalous values of the TGCs would change the W+W− production rate and potentially certain kinematic distributions from the SM prediction. Aside from tests of the SM, W+W− production represents an important background source for new particle searches, e.g. for Higgs boson searches [3–5]. Next-to-leading-order (NLO) calculations of W+W− production in pp collisions at \(\sqrt{s} = 7~\mathrm{TeV}\) predict a cross section of σ NLO(pp→W+W−)=47.0±2.0 pb [1].

This paper reports a measurement of the W+W− cross section in the \(\mathrm {W}^{+} \mathrm {W}^{-} \to\ell^{+}\nu\ell^{-} \overline {\nu } \) final state in pp collisions at \(\sqrt{s} = 7~\mathrm{TeV}\) and constraints on anomalous triple gauge-boson couplings. The measurement is performed with the Compact Muon Solenoid (CMS) detector at the Large Hadron Collider (LHC) using the full 2011 data sample, corresponding to an integrated luminosity of 4.92±0.11 fb−1, more than two orders of magnitude larger than data used in the first measurements with the CMS [6] and ATLAS [7] experiments at the LHC, and comparable in size to the data sets more recently analyzed by ATLAS [8, 9].

2 The CMS detector and simulations

The CMS detector is described in detail elsewhere [10] so only the key components for this analysis are summarised here. A superconducting solenoid occupies the central region of the CMS detector, providing an axial magnetic field of 3.8 T parallel to the beam direction. A silicon pixel and strip tracker, a crystal electromagnetic calorimeter, and a brass/scintillator hadron calorimeter are located within the solenoid. A quartz-fiber Cherenkov calorimeter extends the coverage to |η|<5.0, where pseudorapidity is defined as η=−ln[tan(θ/2)], and θ is the polar angle of the particle trajectory with respect to the anticlockwise-beam direction. Muons are measured in gas-ionisation detectors embedded in the steel magnetic-flux-return yoke outside the solenoid. The first level of the CMS trigger system, composed of custom hardware processors, is designed to select the most interesting events in less than 3 μs using information from the calorimeters and muon detectors. The high-level trigger processor farm further decreases the rate of stored events to a few hundred hertz for subsequent analysis.
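The pseudorapidity definition quoted above can be illustrated with a short Python sketch. The function names are chosen here for illustration and are not part of any CMS software.

```python
import math


def pseudorapidity(theta: float) -> float:
    """Return eta = -ln[tan(theta/2)] for a polar angle theta in radians."""
    if not 0.0 < theta < math.pi:
        raise ValueError("theta must lie strictly between 0 and pi")
    return -math.log(math.tan(theta / 2.0))


def polar_angle(eta: float) -> float:
    """Invert the definition: theta = 2 * atan(exp(-eta))."""
    return 2.0 * math.atan(math.exp(-eta))


# A particle emitted perpendicular to the beam has eta close to 0;
# the calorimeter coverage edge |eta| = 5.0 corresponds to a polar
# angle of less than one degree from the beam line.
edge_angle_deg = math.degrees(polar_angle(5.0))
```

The inverse function makes the geometric meaning of the |η|<5.0 coverage explicit: large |η| corresponds to directions very close to the beam axis.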

This measurement exploits W+W− pairs in which both bosons decay leptonically, yielding an experimental signature of two isolated, high transverse momentum (p T), oppositely charged leptons (electrons or muons) and large missing transverse energy (\(E_{\mathrm {T}}^{\mathrm {miss}} \)) due to the undetected neutrinos. The \(E_{\mathrm {T}}^{\mathrm {miss}} \) is defined as the modulus of the vectorial sum of the transverse momenta of all reconstructed particles, charged and neutral, in the event. This variable, together with the full event selection, is explained in detail in Sect. 3.

Several SM processes constitute backgrounds for the W+W− sample. These include W+jets and quantum chromodynamics (QCD) multijet events where at least one of the jets is misidentified as a lepton, top-quark production (\(\mathrm {t}\overline {\mathrm {t}} \) and tW), Drell–Yan Z/γ ∗→ℓ + ℓ −, and diboson production (Wγ (∗), WZ, and ZZ) processes.

A number of Monte Carlo (MC) event generators are used to simulate the signal and backgrounds. The \(\mathrm {q} \overline {\mathrm {q}} \to \mathrm {W}^{+} \mathrm {W}^{-} \) signal, W+jets, WZ, and Wγ (∗) processes are generated using the MadGraph 5.1.3 [11] event generator. The gg→W+W− signal component is simulated using gg2ww [12]. The powheg 2.0 program [13] provides event samples for the Drell–Yan, \(\mathrm {t}\overline {\mathrm {t}} \), and tW processes. The remaining background processes are simulated using pythia 6.424 [14].

The default set of parton distribution functions (PDFs) used to produce the LO MC samples is CTEQ6L [15], while CT10 [16] is used for NLO generators. The NLO calculations are used for background cross sections. For all processes, the detector response is simulated using a detailed description of the CMS detector, based on the Geant4 package [17].

The simulated samples include the effects of multiple pp interactions in each beam crossing (pileup), and are reweighted to match the pileup distribution as measured in data.

3 Event selection

This measurement considers signal candidates in three final states: e+e−, μ + μ −, and e± μ ∓. The W→ℓν ℓ (ℓ=e or μ) decays are the main signal components; W→τν τ events with leptonic τ decays are included, although the analysis is not optimised for this final state. The trigger requires the presence of one or two high-p T electrons or muons. For single lepton triggers the p T threshold for the selection is 27 (15) GeV for electrons (muons). For double lepton triggers, the p T thresholds, for pairs of leptons of the same flavour, are lowered to 18 and 8 GeV for the first and second electrons, respectively, and to 7 GeV for each of the two muons. Different flavour lepton triggers are also used. The overall trigger efficiency for signal events is measured to be approximately 98 % using data.

Two oppositely charged lepton candidates are required, both with p T>20 GeV. Electron candidates are selected using a multivariate approach that exploits correlations between the selection variables described in Ref. [18] to improve identification performance, while muon candidates [19] are identified using a selection close to that described in Ref. [6]. Charged leptons from W boson decays are expected to be isolated from any other activity in the event. The lepton candidates are required to be consistent with originating at the primary vertex of the event, which is chosen as the vertex with the highest \(\sum p_{\mathrm {T}} ^{2}\) of its associated tracks. This criterion provides the correct assignment for the primary vertex in more than 99 % of events for the pileup distribution observed in the data. The efficiency is measured by checking how often a primary vertex with the highest \(\sum p_{\mathrm{T}}^{2}\) of the constituent tracks is consistent with the vertex formed by the two primary leptons. This is done in MC and checked in data.

The particle-flow (PF) technique [20] that combines the information from all CMS subdetectors to reconstruct each individual particle is used to calculate the isolation variable. For each lepton candidate, a cone around the lepton direction at the event vertex is reconstructed, defined as \(\Delta R = \sqrt{(\Delta\eta)^{2} + (\Delta\phi)^{2}}\), where Δη and Δϕ are the distances from the lepton track in η and azimuthal angle, ϕ (in radians), respectively; ΔR takes a value of 0.4 (0.3) for electrons (muons). The scalar sum of the transverse momentum is calculated for the particles reconstructed with the PF algorithm that are contained within the cone, excluding the contribution from the lepton candidate itself. If this sum exceeds approximately 10 % of the candidate p T, the lepton is rejected; the exact requirement depends on the lepton flavour and on η.
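The isolation calculation described above can be sketched in Python as follows. This is a minimal illustration, not the CMS implementation: the dictionary layout of the particles and the function names are assumptions, and the flat 10 % threshold stands in for the flavour- and η-dependent requirement mentioned in the text.

```python
import math

# Cone sizes quoted in the text for electrons and muons.
CONE_SIZE = {"electron": 0.4, "muon": 0.3}


def delta_phi(phi1, phi2):
    """Signed azimuthal difference wrapped into (-pi, pi]."""
    dphi = (phi1 - phi2) % (2.0 * math.pi)
    if dphi > math.pi:
        dphi -= 2.0 * math.pi
    return dphi


def delta_r(eta1, phi1, eta2, phi2):
    """Delta R = sqrt((delta eta)^2 + (delta phi)^2)."""
    return math.hypot(eta1 - eta2, delta_phi(phi1, phi2))


def relative_isolation(lepton, other_particles, cone_size):
    """Scalar pT sum of particles inside the cone, divided by the lepton pT.

    `other_particles` must not contain the lepton candidate itself.
    """
    sum_pt = sum(
        p["pt"]
        for p in other_particles
        if delta_r(lepton["eta"], lepton["phi"], p["eta"], p["phi"]) < cone_size
    )
    return sum_pt / lepton["pt"]


def is_isolated(lepton, other_particles, max_rel_iso=0.10):
    """Apply an approximate 10 % relative-isolation requirement (assumed flat)."""
    cone = CONE_SIZE[lepton["flavour"]]
    return relative_isolation(lepton, other_particles, cone) < max_rel_iso
```

Wrapping Δϕ into (−π, π] matters for leptons near ϕ=±π, where a naive difference would place nearby particles outside the cone.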

Jets are reconstructed from calorimeter and tracker information using the PF technique [21]. The anti-k T clustering algorithm [22] with a distance parameter of 0.5, as implemented in the FastJet package [23, 24], is used. To correct for the contribution to the jet energy from pileup, a median energy density ρ, or energy per area of jet, is determined event by event. The pileup contribution to the jet energy is estimated as the product of ρ and the area of the jet and subsequently subtracted [25] from the jet transverse energy E T. Jet energy corrections are also applied as a function of the jet E T and η [26]. To reduce the background from top-quark decays, a jet veto is applied: events with one or more jets with corrected E T>30 GeV and |η|<5.0 are rejected.
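A minimal Python sketch of the pileup subtraction and the jet veto described above is given below. The jet representation and function names are hypothetical, the further E T- and η-dependent jet energy corrections are not modelled, and clamping the corrected energy at zero is an assumption made here.

```python
def pileup_corrected_et(raw_et, jet_area, rho):
    """Subtract the estimated pileup contribution rho * area from the jet ET."""
    return max(raw_et - rho * jet_area, 0.0)


def passes_jet_veto(jets, rho, et_threshold=30.0, max_abs_eta=5.0):
    """Return True if no jet has corrected ET > 30 GeV within |eta| < 5.0."""
    for jet in jets:
        corrected_et = pileup_corrected_et(jet["et"], jet["area"], rho)
        if corrected_et > et_threshold and abs(jet["eta"]) < max_abs_eta:
            return False
    return True
```

For example, a jet with raw E T of 33 GeV and area 0.8 survives the veto when ρ is 5 GeV per unit area (corrected E T of 29 GeV) but causes the event to be rejected when ρ is 1 GeV per unit area.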

To further suppress the top-quark background, two top-quark tagging techniques based on soft-muon and b-jet tagging [27, 28] are applied. The first method vetoes events containing muons from b-quark decays, which can be either low-p T muons or nonisolated high-p T muons. The second method uses information from tracks with large impact parameter within jets, and applies a veto on those with the b-jet tagging value above the selected veto threshold. The combined rejection efficiency for these tagging techniques, in the case of \(\mathrm {t}\overline {\mathrm {t}} \) events, is about a factor of two, once the full event selection is applied.

The Drell–Yan background has a production cross section some orders of magnitude larger than the W+W− process. To eliminate Drell–Yan events, two different \(E_{\mathrm {T}}^{\mathrm {miss}} \) vectors are used [29]. The first is reconstructed using the particle-flow algorithm, while the second uses only the charged-particle candidates associated with the primary vertex and is therefore less sensitive to pileup. The projected \(E_{\mathrm {T}}^{\mathrm {miss}} \) is defined as the component of \(E_{\mathrm {T}}^{\mathrm {miss}} \) transverse to the direction of the nearest lepton, if it is closer than π/2 in azimuthal angle, and the full \(E_{\mathrm {T}}^{\mathrm {miss}} \) otherwise. A lower cut on this observable efficiently rejects Z/γ ∗→τ + τ − background events, in which the \(E_{\mathrm {T}}^{\mathrm {miss}} \) is preferentially aligned with leptons, as well as Z/γ ∗→ℓ + ℓ − events with mismeasured \(E_{\mathrm {T}}^{\mathrm {miss}} \) associated with poorly reconstructed leptons or jets. The minimum of the projections of the two \(E_{\mathrm {T}}^{\mathrm {miss}} \) vectors is used, exploiting the correlation between them in events with significant genuine \(E_{\mathrm {T}}^{\mathrm {miss}} \), as in the signal, and the lack of correlation otherwise, as in Drell–Yan events. The requirement for this variable in the e+e− and μ + μ − final states is projected \(E_{\mathrm {T}}^{\mathrm {miss}} > ( 37 + N_{\mathrm{vtx}}/ 2 )~\mathrm{GeV}\), which depends on the number of reconstructed primary vertices (N vtx). In this way the dependence of the Drell–Yan background on pileup is minimised. For the e± μ ∓ final state, which has smaller contamination from Z/γ ∗→ℓ + ℓ − decays, the threshold is lowered to 20 GeV. These requirements remove more than 99 % of the Drell–Yan background; the actual number of accepted background events is obtained from the data, as explained in Sect. 4.
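The projected \(E_{\mathrm {T}}^{\mathrm {miss}} \) variable and its pileup-dependent threshold can be sketched in Python as shown below. The function names and the (magnitude, ϕ) tuple representation are illustrative assumptions; the thresholds are those quoted in the text.

```python
import math


def delta_phi(phi1, phi2):
    """Signed azimuthal difference wrapped into (-pi, pi]."""
    dphi = (phi1 - phi2) % (2.0 * math.pi)
    if dphi > math.pi:
        dphi -= 2.0 * math.pi
    return dphi


def projected_met(met, met_phi, lepton_phis):
    """Component of the MET transverse to the nearest lepton if that lepton
    is closer than pi/2 in azimuth, and the full MET otherwise."""
    dphi_min = min(abs(delta_phi(met_phi, phi)) for phi in lepton_phis)
    if dphi_min < math.pi / 2.0:
        return met * math.sin(dphi_min)
    return met


def min_projected_met(pf_met, trk_met, lepton_phis):
    """Minimum of the projections of the two MET vectors.

    pf_met and trk_met are (magnitude, phi) tuples for the particle-flow
    and charged-particle MET, respectively.
    """
    return min(
        projected_met(pf_met[0], pf_met[1], lepton_phis),
        projected_met(trk_met[0], trk_met[1], lepton_phis),
    )


def passes_met_requirement(min_proj_met, n_vtx, same_flavour):
    """Threshold of (37 + N_vtx / 2) GeV for ee and mumu, 20 GeV for emu."""
    threshold = 37.0 + n_vtx / 2.0 if same_flavour else 20.0
    return min_proj_met > threshold
```

With ten reconstructed vertices, the same-flavour threshold is 42 GeV, so the requirement tightens as pileup increases.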

Remaining Z/γ ∗→ℓ + ℓ − events in which the Z boson recoils against a jet are reduced by requiring the angle in the transverse plane between the dilepton system and the most energetic jet to be smaller than 165 degrees. This selection is applied only in the e+e− and μ + μ − final states when the leading jet has E T>15 GeV.

To further reduce the Drell–Yan background in the e+e− and μ + μ − final states, events with a dilepton mass within ±15 GeV of the Z mass are rejected. Events with dilepton masses below 20 GeV are also rejected to suppress contributions from low-mass resonances. The same requirement, where the threshold is lowered to 12 GeV, is also applied in the e± μ ∓ final state. Finally, the transverse momentum of the dilepton system (\(p_{\mathrm {T}} ^{\ell\ell}\)) is required to be above 45 GeV to reduce both the Drell–Yan background and the contribution from misidentified leptons.
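The dilepton mass and transverse momentum requirements above can be sketched in Python as follows. Leptons are treated as massless, the lepton dictionaries and function names are illustrative, and the Z boson mass value of 91.1876 GeV is taken from outside this text.

```python
import math

Z_MASS = 91.1876  # GeV; world-average value, not quoted in the text


def dilepton_mass(l1, l2):
    """Invariant mass of two massless leptons given pt, eta and phi."""
    deta = l1["eta"] - l2["eta"]
    dphi = l1["phi"] - l2["phi"]
    m2 = 2.0 * l1["pt"] * l2["pt"] * (math.cosh(deta) - math.cos(dphi))
    return math.sqrt(max(m2, 0.0))


def dilepton_pt(l1, l2):
    """Transverse momentum of the dilepton system."""
    px = l1["pt"] * math.cos(l1["phi"]) + l2["pt"] * math.cos(l2["phi"])
    py = l1["pt"] * math.sin(l1["phi"]) + l2["pt"] * math.sin(l2["phi"])
    return math.hypot(px, py)


def passes_dilepton_selection(m_ll, pt_ll, same_flavour):
    """Apply the mass and dilepton-pT requirements quoted in the text."""
    if pt_ll <= 45.0:
        return False
    if same_flavour:
        # Z-mass veto of +-15 GeV and low-mass cut at 20 GeV (ee, mumu).
        return m_ll > 20.0 and abs(m_ll - Z_MASS) > 15.0
    # Only the low-mass cut, lowered to 12 GeV, applies to emu.
    return m_ll > 12.0
```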

To reduce the background from other diboson processes, such as WZ or ZZ production, any event that has an additional third lepton with p T>10 GeV passing the identification and isolation requirements is rejected. Wγ (∗) background, in which the photon is misidentified as an electron, is suppressed by stringent γ conversion rejection requirements [18].

4 Estimation of backgrounds

A combination of techniques is used to determine the contributions from backgrounds that remain after the W+W− selection. The major contribution at this level comes from the top-quark processes, followed by the W+jets background.

The normalisation of the top-quark background is estimated from data by counting top-quark-tagged events, with the requirements explained in Sect. 3, and applying the corresponding tagging efficiency. The top-quark tagging efficiency (ϵ top tagged) is measured in a data sample, dominated by \(\mathrm {t}\overline {\mathrm {t}} \) and tW events, that is selected from a phase space close to that for W+W− events, but instead requiring one jet with E T>30 GeV. The residual number of top-quark events (N not tagged) in the signal sample is given by

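\[ N_{\text{not tagged}} = N_{\text{tagged}} \times \frac{1 - \epsilon_{\text{top tagged}}}{\epsilon_{\text{top tagged}}}, \]

where N tagged is the number of tagged events. The total uncertainty on this background estimation is about 18 %. The main contribution comes from the statistical and systematic uncertainties related to the measurement of ϵ top tagged.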
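As a numerical illustration of this estimate, the sketch below assumes the relation N not tagged = N tagged × (1 − ϵ top tagged)/ϵ top tagged and propagates only the uncertainty in the tagging efficiency to first order. The function name is hypothetical, and any inputs used with it are placeholders rather than values from the analysis.

```python
def top_background(n_tagged, tag_efficiency, tag_efficiency_unc=0.0):
    """Estimate the untagged top-quark yield from the tagged yield.

    Returns (estimate, uncertainty from the efficiency), where the
    uncertainty uses |dN/d(eff)| = n_tagged / eff**2.
    """
    if not 0.0 < tag_efficiency <= 1.0:
        raise ValueError("tag_efficiency must be in (0, 1]")
    estimate = n_tagged * (1.0 - tag_efficiency) / tag_efficiency
    unc_from_eff = n_tagged * tag_efficiency_unc / tag_efficiency**2
    return estimate, unc_from_eff
```

Because the efficiency enters as 1/ϵ², a small uncertainty in ϵ top tagged translates into a comparatively large uncertainty in the estimate, in line with the statement that the efficiency measurement dominates the total uncertainty.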

The W+jets and QCD multijet backgrounds, in which jets are misidentified as leptons, are estimated by counting the number of events containing one lepton that satisfies the nominal selection criteria and another lepton that satisfies relaxed requirements on impact parameter and isolation but not the nominal criteria. This sample, enriched in W+jets events, is extrapolated to the signal region using the efficiencies for such loosely identified leptons to pass the tight selection. These efficiencies are measured in data using multijet events and are parametrised as functions of the p T and η of the lepton candidate. QCD backgrounds are found to be negligible. The systematic uncertainties stemming from this efficiency determination dominate the overall uncertainty, which is estimated to be about 36 %. The main contribution to this uncertainty comes from the differences in the p T spectrum of the jets in the measurement data sample, composed mainly of QCD events, compared to the sample, primarily W+jets, from which the extrapolation is performed.
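The extrapolation from the loose-lepton control sample can be sketched in Python as below. The per-event weight ϵ/(1 − ϵ) is the form commonly used for this kind of extrapolation and is an assumption here, since the text does not spell out the weight; the function names and the efficiency lookup are hypothetical.

```python
def extrapolation_weight(loose_to_tight_eff):
    """Weight applied to an event whose second lepton is loose but not tight."""
    if not 0.0 <= loose_to_tight_eff < 1.0:
        raise ValueError("efficiency must be in [0, 1)")
    return loose_to_tight_eff / (1.0 - loose_to_tight_eff)


def misidentified_lepton_background(loose_leptons, efficiency_lookup):
    """Sum the weights over the control sample.

    `loose_leptons` holds the loose-not-tight lepton of each control event;
    `efficiency_lookup(pt, abs_eta)` returns the measured efficiency.
    """
    return sum(
        extrapolation_weight(efficiency_lookup(lep["pt"], abs(lep["eta"])))
        for lep in loose_leptons
    )
```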

The residual Drell–Yan contribution to the e+e− and μ + μ − final states outside of the Z boson mass window (\(N_{\mathrm{out}}^{\ell\ell,\mathrm{exp}}\)) is estimated by normalising the simulation to the observed number of events inside the Z boson mass window in data (\(N_{\mathrm{in}}^{\ell\ell}\)). The contribution in this region from other processes where the two leptons do not come from a Z boson (\(N_{\mathrm{in}}^{\text{non-Z}}\)) is subtracted before performing the normalisation. This contribution is estimated on the basis of the number of e± μ ∓ data events within the Z boson mass window. The WZ and ZZ contributions in the Z mass window (\(N_{\mathrm{in}}^{ \mathrm {Z} \mathrm{V}}\)) are also subtracted, using simulation, when leptons come from the same Z boson as in the case of the Drell–Yan production. The residual background in the W+W− data outside the Z boson mass window is thus expressed as

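\[ N_{\mathrm{out}}^{\ell\ell,\mathrm{exp}} = R^{\ell\ell}_{\mathrm{out}/\mathrm{in}} \bigl( N_{\mathrm{in}}^{\ell\ell} - N_{\mathrm{in}}^{\text{non-Z}} - N_{\mathrm{in}}^{ \mathrm {Z} \mathrm{V}} \bigr), \]

with

\[ R^{\ell\ell}_{\mathrm{out}/\mathrm{in}} = N_{\mathrm{out}}^{\ell\ell,\mathrm{MC}} / N_{\mathrm{in}}^{\ell\ell,\mathrm{MC}}. \]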

The systematic uncertainty in the final Drell–Yan estimate is derived from the dependence of \(R^{\ell\ell}_{\mathrm{out}/\mathrm{in}}\) on the value of the \(E_{\mathrm {T}}^{\mathrm {miss}} \) requirement.
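The Drell–Yan normalisation procedure can be illustrated with the Python sketch below. It assumes the estimate takes the form N out = R out/in × (N in − N in(non-Z) − N in(ZV)), with R out/in taken as the ratio of simulated yields outside and inside the Z mass window. The function name is hypothetical, and the non-Z yield is passed in directly because the conversion from e± μ ∓ events is not detailed in the text.

```python
def drell_yan_outside_window(n_in_data, n_in_non_z, n_in_zv,
                             n_out_mc, n_in_mc):
    """Residual Drell-Yan yield outside the Z mass window.

    n_in_data   observed events inside the window
    n_in_non_z  non-Z contribution inside the window (from emu data)
    n_in_zv     WZ and ZZ contribution inside the window (from simulation)
    n_out_mc    simulated Drell-Yan yield outside the window
    n_in_mc     simulated Drell-Yan yield inside the window
    """
    r_out_in = n_out_mc / n_in_mc
    return r_out_in * (n_in_data - n_in_non_z - n_in_zv)
```

Repeating the calculation with R out/in evaluated for different \(E_{\mathrm {T}}^{\mathrm {miss}} \) thresholds and taking the spread of the results is one way to realise the systematic uncertainty described above.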

Finally, a control sample with three reconstructed leptons is defined to rescale the estimate, based on the simulation, of the background Wγ ∗ contribution coming from asymmetric γ ∗ decays, where one lepton escapes detection [30].

Other backgrounds are estimated from simulation. The Wγ background estimate is cross-checked in data using the events passing all the selection requirements except that the two leptons must have the same charge; this sample is dominated by W+jets and Wγ events. The Z/γ ∗→τ + τ − contamination is also cross-checked using Z/γ ∗→e+e− and Z/γ ∗→μ + μ − events selected in data, where the leptons are replaced with simulated τ-lepton decays, and the results are consistent with the simulation. Other minor backgrounds are WZ and ZZ diboson production where the two selected leptons come from different bosons.

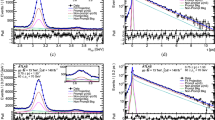

The estimated event yields for all processes after the event selection are summarised in Table 1. The distributions of the key analysis variables are shown in Fig. 2.

Fig. 2. Distributions of the maximum lepton transverse momentum (p Tmax), the minimum lepton transverse momentum (p Tmin), the dilepton transverse momentum (\(p_{\mathrm {T}} ^{\ell\ell}\)) and invariant mass (M ℓℓ ) at the final selection level. Some of the backgrounds have been rescaled to the estimates based on control samples in data, as described in the text. All leptonic channels are combined, and the uncertainty band corresponds to the statistical and systematic uncertainties in the predicted yield. The last bin includes the overflow. In the box below each distribution, the ratio of the observed CMS event yield to the total SM prediction is shown.

5 Efficiencies and systematic uncertainties

The signal efficiency, which includes the acceptance of the detector, is estimated using simulation and including both the \(\mathrm {q} \overline {\mathrm {q}} \to \mathrm {W}^{+} \mathrm {W}^{-} \) and gg→W+W− processes. Residual discrepancies in the lepton reconstruction and identification efficiencies between data and simulation are corrected by determining data-to-simulation scale factors measured using Z/γ ∗→ℓ + ℓ − events in the Z peak region [31] that are recorded with unbiased triggers. These factors depend on the lepton p T and |η| and are within 4 % (2 %) of unity for electrons (muons). Effects due to W→τν τ decays with τ leptons decaying into lower-energy electrons or muons are included in the signal efficiency.

The experimental uncertainties in lepton reconstruction and identification efficiency, momentum scale and resolution, \(E_{\mathrm {T}}^{\mathrm {miss}} \) modelling, and jet energy scale are applied to the reconstructed objects in simulated events by smearing and scaling the relevant observables and propagating the effects to the kinematic variables used in the analysis. A relative uncertainty of 2.3 % in the signal efficiency due to multiple collisions within a bunch crossing is taken from the observed variation in the efficiency in a comparison of two different pileup scenarios in simulation, reweighted to the observed data.

The relative uncertainty in the signal efficiency due to variations in the PDFs and the value of α s is 2.3 % (0.8 %) for \(\mathrm {q} \overline {\mathrm {q}} \) (gg) production, following the PDF4LHC prescription [16, 32–36]. The effect of higher-order corrections, studied using the mcfm program [1], is found to be 1.5 % (30 %) for \(\mathrm {q} \overline {\mathrm {q}} \) annihilation (gg) by varying the renormalisation (μ R ) and factorisation (μ F ) scales in the range (μ 0/2,2μ 0), with μ 0 equal to the mass of the W boson, and setting μ R =μ F . The W+W− jet veto efficiency in data is estimated from simulation and multiplied by a data-to-simulation scale factor derived from Z/γ ∗→ℓ + ℓ − events in the Z peak,

\[ \epsilon_{ \mathrm {W}^{+} \mathrm {W}^{-} }^{\mathrm{data}} = \epsilon_{ \mathrm {W}^{+} \mathrm {W}^{-} }^{\mathrm{MC}} \times \frac{\epsilon_{ \mathrm {Z} }^{\mathrm{data}}}{\epsilon_{ \mathrm {Z} }^{\mathrm{MC}}}, \]

where \(\epsilon_{ \mathrm {W}^{+} \mathrm {W}^{-} }^{\mathrm{data}}\) and \(\epsilon_{ \mathrm {W}^{+} \mathrm {W}^{-} }^{\mathrm{MC}}\) (\(\epsilon_{ \mathrm {Z} }^{\mathrm{data}}\) and \(\epsilon_{ \mathrm {Z} }^{\mathrm{MC}}\)) are the efficiencies for the jet veto on the W+W− (Z) process for data and MC, respectively. The uncertainty in this efficiency is factorised into the uncertainty in the Z efficiency in data and the uncertainty in the ratio of the W+W− efficiency to the Z efficiency in simulation (\(\epsilon_{ \mathrm {W}^{+} \mathrm {W}^{-} }^{\mathrm{MC}}/\epsilon _{ \mathrm {Z} }^{\mathrm{MC}}\)). The former, which is dominated by statistics, is 0.3 %. Theoretical uncertainties due to higher-order corrections contribute most to the \(\epsilon_{ \mathrm {W}^{+} \mathrm {W}^{-} }^{\mathrm{MC}}/\epsilon_{ \mathrm {Z} }^{\mathrm{MC}}\) ratio uncertainty, which is 4.6 %. The data-to-simulation correction factor, derived from the Z/γ ∗→ℓ + ℓ − events, is close to unity.
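The jet veto efficiency correction and its two uncertainty components can be sketched in Python as follows. The function names are illustrative, and combining the 0.3 % and 4.6 % components in quadrature is an assumption made here (it treats them as independent); the text states only that the uncertainty is factorised into these two parts.

```python
import math


def jet_veto_efficiency_data(eff_ww_mc, eff_z_data, eff_z_mc):
    """Scale the simulated W+W- jet veto efficiency by the Z data/MC ratio."""
    return eff_ww_mc * eff_z_data / eff_z_mc


def combine_in_quadrature(*relative_uncertainties):
    """Quadrature sum of independent relative uncertainties."""
    return math.sqrt(sum(u * u for u in relative_uncertainties))


# Relative uncertainties in percent, as quoted in the text.
total_jet_veto_unc = combine_in_quadrature(0.3, 4.6)
```

Under this assumption the total is essentially equal to the 4.6 % theoretical component, since the 0.3 % statistical component is negligible when added in quadrature.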

The uncertainties in the W+jets and top-quark background predictions are evaluated to be 36 % and 18 %, respectively, as described in Sect. 4. The total uncertainty in the Z/γ ∗→ℓ + ℓ − normalisation is about 50 %, including both statistical and systematic contributions.

The theoretical uncertainties in the diboson cross sections are calculated by varying the renormalisation and factorisation scales using the mcfm program [1]. The effect of variations in the PDFs and the value of α s on the predicted cross section are derived by following the same prescription as for the signal acceptance. Including the experimental uncertainties gives a systematic uncertainty of around 10 % for WZ and ZZ processes. In the case of Wγ (∗) backgrounds, it rises to 30 %, due to the lack of knowledge of the overall normalisation. The total uncertainty in the background estimates is about 15 %, which is dominated by the systematic uncertainties in the normalisation of the top-quark and W+jets backgrounds. A 2.2 % uncertainty is assigned to the integrated luminosity measurement [37]. A summary of the uncertainties is given in Table 2. For simplicity, averages of the estimates for WZ and ZZ backgrounds are shown.

6 The WW cross section measurement

The number of events observed in the signal region is N data=1134. The W+W− yield is calculated by subtracting the expected contributions of the various SM background processes, N bkg=247±15 (stat.)±30 (syst.) events. The inclusive cross section is obtained from the expression

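\[ \sigma_{ \mathrm {W}^{+} \mathrm {W}^{-} } = \frac{N_{\mathrm{data}} - N_{\mathrm{bkg}}}{\mathcal{L} \cdot \epsilon \cdot \bigl( 3 \cdot \mathcal{B}( \mathrm {W} \to\ell \overline {\nu } ) \bigr)^{2}}, \qquad (1) \]

where \(\mathcal{L}\) is the integrated luminosity and the signal selection efficiency ϵ, including the detector acceptance and averaging over all lepton flavours, is found to be (3.28±0.02 (stat.)±0.26 (syst.)) % using simulation and taking into account the two production modes. As shown in Eq. (1), the efficiency is corrected by the branching fraction for a W boson decaying to each lepton family, \(\mathcal{B}( \mathrm {W} \to\ell \overline {\nu } ) = (10.80 \pm0.09)~\%\) [38], to estimate the final inclusive efficiency for the signal.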

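The W+W− production cross section in pp collision data at \(\sqrt{s} = 7~\mathrm{TeV}\) is measured to be

\[ \sigma_{ \mathrm {W}^{+} \mathrm {W}^{-} } = 52.4 \pm 2.0~(\text{stat.}) \pm 4.5~(\text{syst.}) \pm 1.2~(\text{lum.})~\mathrm{pb}. \]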

The statistical uncertainty is due to the total number of observed events. The systematic uncertainty includes both the statistical component from the limited number of events and systematic uncertainties in the background prediction, as well as the uncertainty in the signal efficiency.
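The central value and two of the uncertainties can be reproduced from the numbers quoted in this section with a short Python calculation. The sketch assumes the cross section has the form (N data − N bkg)/(L · ϵ · (3 · B)²), takes the statistical uncertainty as the Poisson uncertainty √N data on the observed yield, and applies the 2.2 % luminosity uncertainty directly to the result. The systematic uncertainty is not recomputed because its correlations are not given in the text.

```python
import math

N_DATA = 1134          # events observed in the signal region
N_BKG = 247.0          # expected background events
LUMI_PB = 4920.0       # integrated luminosity, 4.92 fb^-1 in pb^-1
EFFICIENCY = 0.0328    # signal selection efficiency, 3.28 %
BR_W_LNU = 0.1080      # B(W -> l nu) per lepton family
LUMI_REL_UNC = 0.022   # relative luminosity uncertainty


def ww_cross_section(n_data, n_bkg, lumi_pb, efficiency, br_w_lnu):
    """Inclusive cross section in pb; both W bosons decay to any of 3 families."""
    return (n_data - n_bkg) / (lumi_pb * efficiency * (3.0 * br_w_lnu) ** 2)


sigma = ww_cross_section(N_DATA, N_BKG, LUMI_PB, EFFICIENCY, BR_W_LNU)
# Poisson uncertainty on the observed yield, scaled like the signal yield.
stat_unc = sigma * math.sqrt(N_DATA) / (N_DATA - N_BKG)
lumi_unc = sigma * LUMI_REL_UNC

print(f"sigma = {sigma:.1f} +- {stat_unc:.1f} (stat.) +- {lumi_unc:.1f} (lum.) pb")
# prints: sigma = 52.4 +- 2.0 (stat.) +- 1.2 (lum.) pb
```

The output agrees with the quoted central value, statistical uncertainty and luminosity uncertainty.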

This measurement is consistent with the SM expectation of 47.0±2.0 pb, based on \(\mathrm {q} \overline {\mathrm {q}} \) annihilation and gluon–gluon fusion. For the event selection used in the analysis, the expected theoretical cross section may be larger by as much as 5 % because of additional W+W− production processes, such as diffractive production [39], double parton scattering, QED exclusive production [40] and Higgs boson production with decay to W+W−. The dominant contribution of about 4 % would come from SM Higgs production, assuming its mass to be near 125 GeV [4].

The measured W+W− cross section can be presented in terms of a ratio to the Z boson production cross section in the same data set. The W+W− to Z cross section ratio, \(\sigma_{ \mathrm {W}^{+} \mathrm {W}^{-} }/\sigma_{ \mathrm {Z} }\), provides a good cross-check of this W+W− cross section measurement, using the precisely known Z boson production cross section as a reference. This ratio has the advantage that some systematic effects cancel. More precise comparisons between measurements from different data-taking periods are possible because the ratio is independent of the integrated luminosity. The PDF uncertainty in the theoretical cross section prediction is also largely cancelled in this ratio, since both W+W− and the Z boson are produced mainly via \(\mathrm {q} \overline {\mathrm {q}} \) annihilation. The estimated theoretical value for this ratio is [1.63±0.07 (theor.)]×10−3 [31], where the scale uncertainty between both processes is considered uncorrelated, while the PDF uncertainty is assumed fully correlated.

The Z boson production process is measured in the e+e−/μ + μ − final states using events passing the same lepton selection as in the W+W− measurement and lying within the Z mass window, where the purity of the sample is about 99.8 % [31]. Nonresonant backgrounds (including Z/γ ∗→τ + τ −) are estimated from eμ data, while the resonant component of WZ and ZZ processes is normalised to NLO cross sections using MC samples. Correlation of theoretical and experimental uncertainties between the two processes is taken into account. An additional 2 % uncertainty in the shape of the Z resonance due to final-state radiation and higher-order effects is assigned. The latter is based on the difference between the next-to-next-to-leading-order prediction from fewz 2.0 [41] simulation code and the MC generator used in the analysis, and on the renormalisation and factorisation scale variation given by fewz.

The ratio of the inclusive W+W− cross section to the Z cross section in the dilepton mass range between 60 and 120 GeV is measured to be

in agreement with the theoretical expectation. The Z cross section resulting from this ratio, assuming the standard model value for the W+W− cross section, is 1.1 % higher than the inclusive Z cross section measurement in CMS using the 2010 data set [31], which had an integrated luminosity of 36 pb−1, but well within the systematic uncertainties of both measurements.

7 Limits on the anomalous triple gauge–boson couplings

A search for anomalous TGCs is done using the effective Lagrangian approach with the LEP parametrisation [2] without form factors. The most general form of such a Lagrangian has 14 complex couplings (seven for WWZ and seven for WWγ). Assuming electromagnetic gauge invariance and charge and parity symmetry conservation, that number is reduced to five real couplings: Δκ Z , \(\Delta g_{1}^{Z}\), Δκ γ , λ Z and λ γ . Applying gauge invariance constraints leads to

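\[ \Delta\kappa_{ \mathrm {Z} } = \Delta g_{1}^{ \mathrm {Z} } - \Delta\kappa_{\gamma} \tan^{2}\theta_{\mathrm{W}}, \qquad \lambda_{ \mathrm {Z} } = \lambda_{\gamma}, \]

where θ W is the weak mixing angle, which reduces the number of independent couplings to three. In the SM, all five couplings are zero. The coupling constants \(\Delta g_{1}^{ \mathrm {Z} }\) and Δκ γ parametrise the differences from the standard model values of 1 for both \(g_{1}^{ \mathrm {Z} }\) and κ γ , which are measures of the WWZ and WWγ coupling strengths, respectively.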

The presence of anomalous TGCs would enhance the production rate for diboson processes at high boson p T and high invariant mass. The effect of these couplings is ascertained by evaluating the expected distribution of p Tmax, the transverse momentum of the leading (highest-p T) lepton, and by comparing it to the measured distribution, using a maximum-likelihood fit. The p Tmax is a very sensitive observable for these searches, and it is widely used in the fully leptonic final states, since the total mass of the event cannot be fully reconstructed. The likelihood L is defined as a product of Poisson probability distribution functions for the observed number of events (N obs) and the combined one for each event, P(p T):

\[ L = \frac{e^{-N_{\mathrm{exp}}} (N_{\mathrm{exp}})^{N_{\mathrm{obs}}}}{N_{\mathrm{obs}}!} \prod_{i=1}^{N_{\mathrm{obs}}} P\bigl(p_{\mathrm{T}}^{\,i}\bigr), \qquad (2) \]

where N exp is the expected number of signal and background events. The leading lepton p T distributions with anomalous couplings are simulated using the mcfm NLO generator, taking into account the detector effects. The distributions are corrected for the acceptance and lepton reconstruction efficiency, as described in Sect. 5. The uncertainties in the quoted integrated luminosity, signal selection and background fraction are assumed to be Gaussian. These uncertainties are incorporated in the likelihood function in Eq. (2) by introducing nuisance parameters with Gaussian constraints. A set of points with nonzero anomalous couplings is used and distributions between the points are extrapolated assuming a quadratic dependence of the differential cross section as a function of the anomalous couplings.
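The structure of such a likelihood can be sketched in Python as an extended unbinned negative log-likelihood, that is, a Poisson term for the observed event count multiplied by the per-event probability densities. This is a simplified illustration: the nuisance parameters with Gaussian constraints are omitted, and `uniform_pdf` is a placeholder for the simulated p Tmax templates, not a physical model.

```python
import math


def negative_log_likelihood(pt_values, n_exp, pdf):
    """-ln L for L = Poisson(N_obs | N_exp) * prod_i P(pT_i).

    `pdf` must return a strictly positive density for every observed pT.
    """
    n_obs = len(pt_values)
    # Poisson term: -ln[exp(-N_exp) * N_exp**N_obs / N_obs!]
    nll = n_exp - n_obs * math.log(n_exp) + math.lgamma(n_obs + 1)
    # Shape term: -sum_i ln P(pT_i)
    nll -= sum(math.log(pdf(pt)) for pt in pt_values)
    return nll


def uniform_pdf(pt, low=20.0, high=220.0):
    """Placeholder normalised density on [low, high) in GeV."""
    return 1.0 / (high - low) if low <= pt < high else 0.0
```

In a fit, N exp and the shape of P(p T) would both depend on the anomalous couplings, and the negative log-likelihood would be minimised with respect to them. For a fixed shape, the Poisson term alone is minimised when N exp equals N obs.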

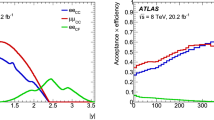

Figure 3 shows the measured leading lepton p T distributions in data and the predictions for the SM W+W− signal and background processes, as well as the expected distributions with nonzero anomalous couplings, in the two-dimensional model λ Z–\(\Delta g_{1}^{ \mathrm {Z} }\).

Fig. 3. Leading lepton p T distribution in data (points with error bars) overlaid with the best fit using a two-dimensional λ Z–\(\Delta g_{1}^{ \mathrm {Z} }\) model (solid histogram) and two expected distributions with anomalous coupling value, λ Z≠0 (dashed and dotted histograms). In the SM, λ Z=0. The last bin includes the overflow.

No evidence for anomalous couplings is found. The 95 % confidence level (CL) intervals of allowed anomalous couplings values, setting the other two couplings to their SM expected values, are

The results presented here are comparable with the measurements performed by the ATLAS Collaboration [8] using the LEP parametrisation. These results are also comparable with those obtained at the Tevatron [42, 43], which are based on the HISZ parametrisation [44] and LEP parametrisation with form factors, but they are not as precise as the combination of the LEP experiments [45–47]. Recently, CMS has set limits on these couplings [48], using a different final-state channel. Our measurements clearly demonstrate that both the WWZ and WWγ couplings exist, as predicted in the standard model (\(g_{1}^{ \mathrm {Z} } = 1\), κ γ =1). Figure 4 displays the contour plots at the 68 % and 95 % CL for the Δκ γ =0 and \(\Delta g_{1}^{ \mathrm {Z} } = 0\) scenarios.

8 Summary

This paper reports a measurement of the W+W− cross section in the \(\mathrm {W}^{+} \mathrm {W}^{-} \to\ell^{+}\nu\ell^{-} \overline {\nu } \) decay channel in proton-proton collisions at a centre of mass energy of 7 TeV, using the full CMS data set of 2011. The W+W− cross section is measured to be 52.4±2.0 (stat.)±4.5 (syst.)±1.2 (lum.) pb, consistent with the NLO theoretical prediction, σ NLO(pp→W+W−)=47.0±2.0 pb. No evidence for anomalous WWZ and WWγ triple gauge-boson couplings is found, and stringent limits on their magnitude are set.

References

J.M. Campbell, R.K. Ellis, C. Williams, Vector boson pair production at the LHC. J. High Energy Phys. 07, 018 (2011). doi:10.1007/JHEP07(2011)018, arXiv:1105.0020

G. Gounaris et al., Triple gauge boson couplings. Physics at LEP2 52 (1996). doi:10.5170/CERN-1996-001-V-1, arXiv:hep-ph/9601233

CMS Collaboration, Search for the standard model Higgs boson decaying to W+W− in the fully leptonic final state in pp collisions at \(\sqrt{s} = 7~\mathrm{TeV}\). Phys. Lett. B 710, 91 (2012). doi:10.1016/j.physletb.2012.02.076, arXiv:1202.1489

CMS Collaboration, Observation of a new boson at a mass of 125 GeV with the CMS experiment at the LHC. Phys. Lett. B 716, 30 (2012). doi:10.1016/j.physletb.2012.08.021, arXiv:1207.7235

ATLAS Collaboration, Observation of a new particle in the search for the standard model Higgs boson with the ATLAS detector at the LHC. Phys. Lett. B 716, 1 (2012). doi:10.1016/j.physletb.2012.08.020, arXiv:1207.7214

CMS Collaboration, Measurement of W+W− production and search for the Higgs boson in pp collisions at \(\sqrt{s} = 7~\mathrm{TeV}\). Phys. Lett. B 699, 25 (2011). doi:10.1016/j.physletb.2011.03.056, arXiv:1102.5429

ATLAS Collaboration, Measurement of the W+W− cross section in \(\sqrt{s} = 7~\mathrm{TeV}\) pp collisions with ATLAS. Phys. Rev. Lett. 107, 041802 (2011). doi:10.1103/PhysRevLett.107.041802, arXiv:1104.5225

ATLAS Collaboration, Measurement of the W+W− cross section in \(\sqrt{s} = 7~\mathrm{TeV}\) pp collisions with the ATLAS detector and limits on anomalous gauge couplings. Phys. Lett. B 712, 289 (2012). doi:10.1016/j.physletb.2012.05.003, arXiv:1203.6232

ATLAS Collaboration, Measurement of W+W− production in pp collisions at \(\sqrt{s} = 7~\mathrm{TeV}\) with the ATLAS detector and limits on anomalous WWZ and WWγ couplings. Phys. Rev. D 87, 112001 (2013). doi:10.1103/PhysRevD.87.112001, arXiv:1210.2979

CMS Collaboration, The CMS experiment at the CERN LHC. J. Instrum. 3, S08004 (2008). doi:10.1088/1748-0221/3/08/S08004

J. Alwall et al., MadGraph 5: going beyond. J. High Energy Phys. 06, 128 (2011). doi:10.1007/JHEP06(2011)128, arXiv:1106.0522

T. Binoth, M. Ciccolini, N. Kauer, M. Krämer, Gluon-induced W-boson pair production at the LHC. J. High Energy Phys. 12, 046 (2006). doi:10.1088/1126-6708/2006/12/046, arXiv:hep-ph/0611170

S. Frixione, P. Nason, C. Oleari, Matching NLO QCD computations with parton shower simulations: the POWHEG method. J. High Energy Phys. 11, 070 (2007). doi:10.1088/1126-6708/2007/11/070, arXiv:0709.2092

T. Sjöstrand, S. Mrenna, P. Skands, PYTHIA 6.4 physics and manual. J. High Energy Phys. 05, 026 (2006). doi:10.1088/1126-6708/2006/05/026, arXiv:hep-ph/0603175

H.-L. Lai et al., Uncertainty induced by QCD coupling in the CTEQ global analysis of parton distributions. Phys. Rev. D 82, 054021 (2010). doi:10.1103/PhysRevD.82.054021, arXiv:1004.4624

H.-L. Lai et al., New parton distributions for collider physics. Phys. Rev. D 82, 074024 (2010). doi:10.1103/PhysRevD.82.074024, arXiv:1007.2241

GEANT4 Collaboration, GEANT4—a simulation toolkit. Nucl. Instrum. Methods A 506, 250 (2003). doi:10.1016/S0168-9002(03)01368-8

CMS Collaboration, Electron reconstruction and identification at \(\sqrt{s} = 7~\mathrm{TeV}\). CMS Physics Analysis Summary CMS-PAS-EGM-10-004 (2010)

CMS Collaboration, Performance of CMS muon reconstruction in pp collision events at \(\sqrt{s} = 7~\mathrm{TeV}\). J. Instrum. 7, P10002 (2012). doi:10.1088/1748-0221/7/10/P10002, arXiv:1206.4071

CMS Collaboration, Particle flow event reconstruction in CMS and performance for jets, taus, and MET. CMS Physics Analysis Summary CMS-PAS-PFT-09-001 (2009)

CMS Collaboration, Jet performance in pp collisions at \(\sqrt{s} = 7~\mathrm{TeV}\). CMS Physics Analysis Summary CMS-PAS-JME-10-003 (2010)

M. Cacciari, G.P. Salam, G. Soyez, The anti-\(k_{t}\) jet clustering algorithm. J. High Energy Phys. 04, 063 (2008). doi:10.1088/1126-6708/2008/04/063, arXiv:0802.1189

M. Cacciari, G.P. Salam, G. Soyez, FastJet user manual. Eur. Phys. J. C 72, 1896 (2012). doi:10.1140/epjc/s10052-012-1896-2, arXiv:1111.6097v1

M. Cacciari, G.P. Salam, Dispelling the \(N^{3}\) myth for the \(k_{t}\) jet-finder. Phys. Lett. B 641, 57 (2006). doi:10.1016/j.physletb.2006.08.037, arXiv:hep-ph/0512210

M. Cacciari, G.P. Salam, Pileup subtraction using jet areas. Phys. Lett. B 659, 119 (2008). doi:10.1016/j.physletb.2007.09.077, arXiv:0707.1378

CMS Collaboration, Determination of jet energy calibration and transverse momentum resolution in CMS. J. Instrum. 6, P11002 (2011). doi:10.1088/1748-0221/6/11/P11002, arXiv:1107.4277

CMS Collaboration, Identification of b-quark jets with the CMS experiment. J. Instrum. 8, P04013 (2013). doi:10.1088/1748-0221/8/04/P04013, arXiv:1211.4462

CMS Collaboration, Commissioning of b-jet identification with pp collisions at \(\sqrt{s} = 7~\mathrm{TeV}\). CMS Physics Analysis Summary CMS-PAS-BTV-10-001 (2010)

CMS Collaboration, Missing transverse energy performance of the CMS detector. J. Instrum. 6, P09001 (2011). doi:10.1088/1748-0221/6/09/P09001, arXiv:1106.5048

R.C. Gray et al., Backgrounds to Higgs boson searches from asymmetric internal conversion (2011). arXiv:1110.1368

CMS Collaboration, Measurements of inclusive W and Z cross sections in pp collisions at \(\sqrt{s} = 7~\mathrm{TeV}\). J. High Energy Phys. 01, 080 (2011). doi:10.1007/JHEP01(2011)080, arXiv:1012.2466

M. Botje et al., The PDF4LHC Working Group Interim Recommendations (2011). arXiv:1101.0538

S. Alekhin et al., The PDF4LHC Working Group Interim Report (2011). arXiv:1101.0536

A.D. Martin, W.J. Stirling, R.S. Thorne, G. Watt, Parton distributions for the LHC. Eur. Phys. J. C 63, 189 (2009). doi:10.1140/epjc/s10052-009-1072-5, arXiv:0901.0002

NNPDF Collaboration, Impact of heavy quark masses on parton distributions and LHC phenomenology. Nucl. Phys. B 849, 296 (2011). doi:10.1016/j.nuclphysb.2011.03.021, arXiv:1101.1300

LHC Higgs Cross Section Working Group, Handbook of LHC Higgs cross sections: inclusive observables (2011). arXiv:1101.0593

CMS Collaboration, Absolute calibration of the luminosity measurement at CMS: winter 2012 update. CMS Physics Analysis Summary CMS-PAS-SMP-12-008 (2012)

J. Beringer et al. (Particle Data Group), Review of particle physics. Phys. Rev. D 86, 010001 (2012). doi:10.1103/PhysRevD.86.010001

P. Bruni, G. Ingelman, Diffractive hard scattering at e p and \(\mathrm {p} \overline{\mathrm{p}}\) colliders, in Int. Europhysics Conference on High Energy Physics. Conf. Proc. C, vol. 722, Marseilles, France (1993), p. 595

A. Pukhov, CalcHEP 2.3: MSSM, structure functions, event generation, batchs, and generation of matrix elements for other packages (2004). arXiv:hep-ph/0412191

R. Gavin, Y. Li, F. Petriello, S. Quackenbush, FEWZ 2.0: a code for hadronic Z production at next-to-next-to-leading order. Comput. Phys. Commun. 182, 2388 (2011). doi:10.1016/j.cpc.2011.06.008, arXiv:1011.3540v1

CDF Collaboration, Measurement of the WZ cross section and triple gauge couplings in \(p\overline{p}\) collisions at \(\sqrt{s}=1.96~\mathrm{TeV}\). Phys. Rev. D 86, 031104 (2012). doi:10.1103/PhysRevD.86.031104

D0 Collaboration, Limits on anomalous trilinear gauge boson couplings from WW, WZ and Wγ production in \(\mathrm {p} \overline {\mathrm {p}} \) collisions at \(\sqrt{s}=1.96~\mathrm{TeV}\). Phys. Lett. B 718, 451 (2012). doi:10.1016/j.physletb.2012.10.062, arXiv:1208.5458

K. Hagiwara, S. Ishihara, R. Szalapski, D. Zeppenfeld, Low energy effects of new interactions in the electroweak boson sector. Phys. Rev. D 48, 2182 (1993). doi:10.1103/PhysRevD.48.2182

ALEPH Collaboration, Improved measurement of the triple gauge-boson couplings γWW and ZWW in e+e− collisions. Phys. Lett. B 614, 7 (2005). doi:10.1016/j.physletb.2005.03.058

L3 Collaboration, Measurement of triple-gauge-boson couplings of the W boson at LEP. Phys. Lett. B 586, 151 (2004). doi:10.1016/j.physletb.2004.02.045, arXiv:hep-ex/0402036

OPAL Collaboration, Measurement of charged current triple gauge boson couplings using W pairs at LEP. Eur. Phys. J. C 33, 463 (2004). doi:10.1140/epjc/s2003-01524-6, arXiv:hep-ex/0308067

CMS Collaboration, Measurement of the sum of WW and WZ production with W+dijet events in pp collisions at \(\sqrt{s}=7~\mathrm{TeV}\). Eur. Phys. J. C 73, 2283 (2013). doi:10.1140/epjc/s10052-013-2283-3, arXiv:1210.7544

Acknowledgements

We congratulate our colleagues in the CERN accelerator departments for the excellent performance of the LHC and thank the technical and administrative staffs at CERN and at other CMS institutes for their contributions to the success of the CMS effort. In addition, we gratefully acknowledge the computing centres and personnel of the Worldwide LHC Computing Grid for delivering so effectively the computing infrastructure essential to our analyses. Finally, we acknowledge the enduring support for the construction and operation of the LHC and the CMS detector provided by the following funding agencies: BMWF and FWF (Austria); FNRS and FWO (Belgium); CNPq, CAPES, FAPERJ, and FAPESP (Brazil); MEYS (Bulgaria); CERN; CAS, MoST, and NSFC (China); COLCIENCIAS (Colombia); MSES (Croatia); RPF (Cyprus); MoER, SF0690030s09 and ERDF (Estonia); Academy of Finland, MEC, and HIP (Finland); CEA and CNRS/IN2P3 (France); BMBF, DFG, and HGF (Germany); GSRT (Greece); OTKA and NKTH (Hungary); DAE and DST (India); IPM (Iran); SFI (Ireland); INFN (Italy); NRF and WCU (Republic of Korea); LAS (Lithuania); CINVESTAV, CONACYT, SEP, and UASLP-FAI (Mexico); MSI (New Zealand); PAEC (Pakistan); MSHE and NSC (Poland); FCT (Portugal); JINR (Armenia, Belarus, Georgia, Ukraine, Uzbekistan); MON, RosAtom, RAS and RFBR (Russia); MSTD (Serbia); SEIDI and CPAN (Spain); Swiss Funding Agencies (Switzerland); NSC (Taipei); ThEPCenter, IPST and NSTDA (Thailand); TUBITAK and TAEK (Turkey); NASU (Ukraine); STFC (United Kingdom); DOE and NSF (USA).

Individuals have received support from the Marie-Curie programme and the European Research Council and EPLANET (European Union); the Leventis Foundation; the A. P. Sloan Foundation; the Alexander von Humboldt Foundation; the Belgian Federal Science Policy Office; the Fonds pour la Formation à la Recherche dans l’Industrie et dans l’Agriculture (FRIA-Belgium); the Agentschap voor Innovatie door Wetenschap en Technologie (IWT-Belgium); the Ministry of Education, Youth and Sports (MEYS) of Czech Republic; the Council of Science and Industrial Research, India; the Compagnia di San Paolo (Torino); the HOMING PLUS programme of Foundation for Polish Science, cofinanced by EU, Regional Development Fund; and the Thalis and Aristeia programmes cofinanced by EU-ESF and the Greek NSRF.

Open Access

This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

The CMS Collaboration., Chatrchyan, S., Khachatryan, V. et al. Measurement of the W+W− cross section in pp collisions at \(\sqrt{s} = 7\mbox{ TeV}\) and limits on anomalous WWγ and WWZ couplings. Eur. Phys. J. C 73, 2610 (2013). https://doi.org/10.1140/epjc/s10052-013-2610-8

DOI: https://doi.org/10.1140/epjc/s10052-013-2610-8