Abstract

Our 1998 paper “Encouraging Student Reflection and Articulation using a Learning Companion” (Goodman et al. 1998) was a stepping stone in the progression of learning companions for intelligent tutoring systems (ITS). A simulated learning companion, acting as a peer in an intelligent tutoring environment, ensures the availability of a collaborator and encourages the student to learn collaboratively, while drawing upon the instructional advantages that ITSs provide. This paper is a commentary on our 1998 paper, reflecting on that research and on some of the relevant research that we and others have conducted since then in Learning Companions, Intelligent Tutoring Systems, and Collaborative Learning.


Introduction and Motivation

This paper is a commentary on our 1998 paper “Encouraging Student Reflection and Articulation using a Learning Companion” (Goodman et al. 1998). It looks back at the research on Learning Companions presented in that paper and reflects on some of the tremendous advances in Intelligent Tutoring Systems (ITS), Learning Companions (LC), and Collaborative Learning (CL) technologies since that time. The instructional benefits of peer learning and the importance of collaborative learning technologies have become more apparent as these technologies have matured. The primary contribution of our 1998 paper was to show the pedagogical benefits provided by even limited peer-to-peer interaction through menu-based dialogues in an ITS. The suggestions of our simulated peer, although often flawed because of its limited natural language capabilities and domain modeling, still pushed human students to think about what they were learning and how to express that information to others, enhancing their learning experience.

At the time of our paper, intelligent tutoring systems (ITSs) had begun to extend beyond the viewpoint that an ITS should solely provide a single student with individualized instruction and coached practice. The tutor in a traditional ITS behaved much like a human instructor in a one-on-one tutoring session, observing the student’s problem solving actions and offering advice and guidance. A different paradigm at that time, one that also had the potential to significantly improve learning, was collaborative learning. It was known that classroom learning improves significantly when students participate in structured learning activities with small groups of peers (Brown and Palincsar 1989; Chi et al. 1989; Doise et al. 1975). Peers encourage each other to reflect on what they are learning and to articulate their thinking, which enhances the learning process. The educational value of student collaboration has led to the development of computer-supported collaborative learning (CSCL) tools (Lund et al. 1996; McManus and Aiken 1993). These tools enrich learning in a setting that encourages students to communicate with their peers while solving problems. Although CSCL systems at the time of our paper provided suitable learning environments, they did not offer students the type of individualized assistance and guidance available in an ITS, and they required at least two human participants willing to collaborate while they were studying. A simulated learning companion (also known as a simulated pedagogical agent; Self 1986; Chan and Baskin 1988; VanLehn et al. 1994; Chan 1995, 1996; Ragnemalm 1996) acting as a peer in an intelligent tutoring environment ensures the availability of a collaborator and encourages the human student to learn cooperatively, while utilizing the instructional advantages that ITSs provide.

The goal of the research presented in our 1998 paper was to promote more effective instructional exchanges between a student and an intelligent tutoring system. The approach taken to meet this goal involves providing a simulated peer as a partner for the student in learning and problem solving. The learning companion described in our 1998 paper enhances learning by initiating a dialogue with a student, forcing the student to reflect on and articulate what they are learning. A positive side-effect of using a learning companion is that more information is available for the ITS’s student model to record the student’s understanding of the material. A more detailed student model can lead to better coaching and problem selection for the student. Other research has shown the instructional value added by having a student work with a peer in a learning environment (e.g., Brown and Palincsar 1989; Katz and Lesgold 1993; Katz 1995, 1997). While the tutor could be designed to play the role of a peer, it is better to differentiate these roles since a student has higher expectations for the quantity and quality of communication received from a tutor than from a peer (Fox 1993). Moreover, learning companion and student interactions can be more tightly constrained while still achieving the desired effect of getting a student to clearly lay out their reasoning.

Many advances in learning companions, intelligent tutoring systems, and collaborative learning have occurred since our 1998 paper. New learning companions geared to address the pedagogical needs of a human student through more human-like interactions have been introduced. These learning companions build on advances in natural language understanding, affective modeling, and machine learning. This commentary discusses our original approach and motivation, describes related follow-on research in learning companions, intelligent tutoring systems, and collaborative learning, and highlights some outstanding research issues.

Approach: Coaching Issues Related to Reflection and Articulation

Our 1998 paper focused not just on providing a learning companion but one that encouraged reflection and articulation on the part of the human student. Research in education at the time of our paper had shown the importance of learners reflecting on what they have done so far and articulating it to others (Chi et al. 1989; Collins 1990; Frederiksen and White 1997; Koschmann 1995; Lesgold et al. 1992). Reflective activities encourage students to analyze their performance, contrast their actions to those of others, abstract the actions they used in similar situations, and compare their actions to those of novices and experts (Collins 1990). Reflection enhances the learning benefit of an exercise because it gives students an opportunity to review their previous actions and decisions before proceeding, enabling them to make more educated decisions later.

Articulation is the process of explaining to others the problem solving activities that have occurred. A student, for example, could build and test theories and then explain the theories to another student. Articulation, thus,

“enhances retention, it illuminates the coherence of current understanding, it sensitizes knowledge points for impact by subsequent feedback, and it forces the learner to take a stand on his or her knowledge in the presence of peers, making a commitment that calls for assessing and evaluating that knowledge and setting the stage for future learning” (Koschmann 1995, p. 93).

The learning companion, “LuCy,” described in our 1998 paper as part of the PROPA ITS (Goodman et al. 1997; Cheikes 1998) helps students articulate and reflect on what they have learned, revealing a deeper understanding of their domain knowledge; a menu-driven dialogue provides the delivery vehicle for the communication. PROPA is an ITS prototype intended to teach explanatory analysis skills in the domain of satellite activity (Cheikes 1998). Explanatory analysis is the process by which an analyst formulates explanations for past or predicted events. The learning companion in PROPA encourages a student to reflect on choices that were made, actions taken, and concepts learned while solving an assigned problem. Our learning companion restricted communication by having the student select from a list of possible responses. In most cases, this limitation did not impose a significant impediment to the student since the dialogue is structured to draw out the student’s beliefs and thoughts by forcing them to either agree or disagree with the peer and to explain why by choosing a reason. Figures 1, 2, and 3 illustrate communication between the student and LuCy in PROPA. Instructional systems at the time of our paper utilized a number of different ways to gather information about the learner’s knowledge and promote learning through peer-oriented instructional activities such as those summarized in Table 1 below.
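The agree-or-disagree exchange described above can be pictured with a small data structure. The following is a minimal sketch under stated assumptions, not PROPA’s implementation: the names `MenuTurn`, `DialogueLog`, and `respond` are hypothetical, as is the idea of storing each choice as a simple tuple. It only illustrates how a menu selection could be recorded as evidence for a student model.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class MenuTurn:
    """One companion prompt plus the fixed reasons a student may choose from.

    Hypothetical structure; the original system's representation is not
    described in the text.
    """
    prompt: str
    reasons_if_agree: List[str]
    reasons_if_disagree: List[str]


@dataclass
class DialogueLog:
    """Evidence gathered from the dialogue: (prompt, agreed?, chosen reason)."""
    evidence: List[Tuple[str, bool, str]] = field(default_factory=list)


def respond(turn: MenuTurn, log: DialogueLog, agrees: bool, reason_index: int) -> str:
    """Record the student's stance and the reason picked from the menu."""
    options = turn.reasons_if_agree if agrees else turn.reasons_if_disagree
    reason = options[reason_index]  # raises IndexError for a choice not on the menu
    log.evidence.append((turn.prompt, agrees, reason))
    return reason
```

Because every response comes from a fixed menu, each logged entry is unambiguous, which is one way a restricted dialogue can still feed a more detailed student model.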

Learning companions could fill many roles in an instructional context. A learning companion can, for example, be designed to play the role of an executive, suggesting new ideas for the student to consider, or a critic, challenging the student’s proposals. The role of a learning companion and the extent of its knowledge can be defined by the instructional designer and controlled by the runtime instructional component of the ITS. This allows for the adaptation of the learning companion’s behavior to a student’s individual needs — an advantage over human collaborators.

A core limitation of our original research is that we did not provide a rigorous study on the effectiveness of our learning companion. Contrasting peer-to-peer and student-to-instructor interactions would help provide the foundation for a formative evaluation of this research. Insights gained from this research, however, led to two formal experiments that were conducted in collaborative learning (cf. Soller et al. 1998), one with and one without the use of computer-supported collaborative learning technology. These experiments provided insight into the kinds of peer-to-peer interactions that occur in an instructional setting and the importance of particular dialogue interactions. Additional formal studies have been conducted on subsequent learning companions (e.g., Hietala and Niemirepo 1997; Uresti and du Boulay 2004; Baylor and Kim 2005; Girard et al. 2013; Matsuda et al. 2013), providing important insights on the key features that drive effective learning in an ITS that incorporates a learning companion (as summarized in Table 3). Another limitation of our original research was that dialogues between the student and learning companion were restricted to anticipated menu-based comments and responses. While still useful in eliciting information from a student, exploring the depth of a student’s knowledge, and urging a student to move in a positive direction, the dialogues made the interaction unnatural and not always the most appropriate. Better natural language and student modeling techniques developed since then have improved such interactions.

The next section describes some relevant research in learning companions, intelligent tutoring systems, and collaborative learning since our original research that has led to more productive learning experiences for learners.

Progress in Learning Companions

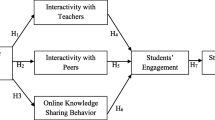

Our 1998 research was intended to blend ITSs with Computer-Supported Collaborative Learning (CSCL) to reap the benefits of peer learning. Figure 4 below attempts to highlight the differences between a Traditional ITS, a Collaborative ITS, a Traditional CSCL, an Intelligent CSCL, and recent approaches using intelligent tools for online learning communities and massive open online courses (MOOCs) (Kay et al. 2013). The arrows indicate the participants of each instructional technology, with a dashed arrow indicating when a participant is optional. The role of a Human Coach or Simulated Coach in CSCL environments can include an expanded task, beyond the traditional coaching ones, to facilitate group interaction. The connection between Collaborative ITS and Intelligent CSCL is through the incorporation of one or more Simulated Peers in the instructional environment and that was the main concentration of our research and the focus of this paper. Online learning communities encompass these approaches and those provided by MOOCs to reach a much larger population of learners.

Our research continued and progressed down a couple of different paths as illustrated in Fig. 5. First, our research led to the development of a learning companion for use in a map-based ITS (Goodman and Iorizzo 2000). The instructional environment provided a learning experience for students through scenario-based training, computer-based training on core domain and reference materials, and background materials. The learning companion interacted with the human student while they worked through map-oriented exercises in a map tool. Second, our research in learning companions for ITSs motivated us to investigate collaborative learning among students more closely. Collaborative learning similarly benefits from the student reflection and articulation goals of our original research, but the complexity of group interactions required a better understanding of the effects of group dynamics. Results of such an analysis could lead to insights on how intelligent support could be provided to learners in a collaborative learning environment. A simulated pedagogical agent for an intelligent CSCL would, for example, require broader information than a traditional learning companion to provide effective guidance to a learning team or individual members of the team. The pedagogical agent must infer the domain knowledge of individual student collaborators, effectiveness of peer discussion and peer interactions, presence or lack of important instructional roles, and the level of understanding of individual learners in the group. Table 2 below lists these and additional relevant characteristics we investigated to guide a pedagogical agent.

We studied the dynamics of collaborative learning groups by observing students working together to solve a common problem in software design (Soller et al. 1998; Soller 2001). We found that students learning in small groups encourage each other to ask questions, explain and justify their opinions, articulate their reasoning, and elaborate and reflect upon their knowledge, thereby motivating and improving learning. These benefits, however, were only achieved by active and well-functioning learning teams. Placing students in a group and assigning them a task did not guarantee that the students would engage in effective collaborative learning behavior. While some peer groups seem to interact productively, others struggle to maintain a balance of participation, leadership, understanding, and encouragement.

Soller and Lesgold (2000) extend our initial CSCL research. They point out that supporting group learning requires understanding the process of collaborative learning. This understanding entails a fine-grained sequential analysis of the group activity and conversation. Dialogue acts provided the representation for communication between collaborators. Modeling instructional dialogue at the dialogue act level made it possible to gain insight into the nature of the conversation while avoiding the much larger and more difficult natural language understanding problem. Soller and Lesgold (2000) discuss the merits of applying different computational approaches for modeling collaborative learning activities such as the transfer of new knowledge between collaborators. We adopted their revised version of our CSCL software but instead of focusing on knowledge sharing among learners, we addressed a different aspect of collaboration, group dynamics, and chose a different modeling technique for our research. Dialogues were collected at the University of Pittsburgh and the MITRE Corporation to provide a corpus for study for both parties.

The results of our research (Soller et al. 1998, 1999) demonstrate the potential of dialogue acts to identify, for example, the distinction between a balanced, supportive group and an unbalanced, unsupportive group. A balanced, supportive group is one committed to helping its members understand the problem domain. An unbalanced, unsupportive group is typically unfocused and less committed to helping its team members. The use of dialogue acts underlying communication between members of each group showed extensive differences that could indicate which type of behavior was exhibited.
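To make the idea of comparing groups by their dialogue acts concrete, here is a minimal sketch. It is not the analysis reported in the studies cited above: the function names `participation_balance` and `act_profile`, the act labels used in the test data, and the choice of normalized entropy as a balance measure are all assumptions made for illustration.

```python
from collections import Counter
from math import log
from typing import Dict, List, Tuple

Turn = Tuple[str, str]  # (speaker, dialogue_act); both labels are hypothetical


def participation_balance(turns: List[Turn]) -> float:
    """Normalized entropy of speaker turn counts.

    Returns a value near 1.0 when every speaker contributes equally and a
    value near 0.0 when one speaker dominates. Groups with fewer than two
    speakers score 0.0.
    """
    counts = Counter(speaker for speaker, _ in turns)
    if len(counts) < 2:
        return 0.0
    total = sum(counts.values())
    entropy = -sum((n / total) * log(n / total) for n in counts.values())
    return entropy / log(len(counts))


def act_profile(turns: List[Turn]) -> Dict[str, Counter]:
    """Count how often each speaker uses each dialogue act."""
    profile: Dict[str, Counter] = {}
    for speaker, act in turns:
        profile.setdefault(speaker, Counter())[act] += 1
    return profile
```

A low balance score together with a profile in which only one member proposes or explains would be one possible signal of an unbalanced group; any real system would need to validate such signals against annotated dialogues.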

An additional investigation of small learning groups was warranted to reveal the ways participants in a collaborative learning task interact and the factors that govern those interactions (Goodman et al. 2005). The goal of the research was to see if (1) the instructional roles played by members of the group could be deduced from machine-inferable factors about the collaboration and (2) whether the presence or absence of particular instructional roles indicated the effectiveness of the learning. Each goal was explored independently. Our hypothesis was that the presence or absence of particular roles could be an indicator of the status of the on-going collaborative learning process. Our study resulted in a model that recognized the instructional roles underlying students’ utterances during an evolving student discussion. With such recognition, one can contrast situations where certain roles arise to those where they do not. When a role is expected but missing, it might indicate a place where our pedagogical agent might want to intervene and play the missing role to facilitate better learning and problem solving.
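The missing-role check described above can be sketched in a few lines. This is an illustrative assumption rather than the model from the cited study: it presumes that some upstream recognizer has already labeled each utterance with a role, and the function name `missing_roles`, the `window` parameter, and the role labels are hypothetical.

```python
from typing import List


def missing_roles(recognized_roles: List[str],
                  expected_roles: List[str],
                  window: int = 10) -> List[str]:
    """Return the expected roles that did not appear in recent utterances.

    recognized_roles: role label per utterance, oldest first (assumed to
        come from a separate role-recognition step).
    expected_roles: roles the pedagogical agent expects at this point.
    window: how many of the most recent utterances to inspect.
    """
    start = max(0, len(recognized_roles) - window)
    recent = set(recognized_roles[start:])
    return [role for role in expected_roles if role not in recent]
```

A pedagogical agent could use a non-empty result as a cue to consider stepping in and playing the missing role itself.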

Another important indicator of effective or ineffective small learning group interaction is the presence of deliberative discussion. Deliberative discussions are characterized by an attitude of social cooperation, a willingness to share information, openness to persuasion by reason, a good faith exchange of views, and decisions made by a pooling of judgments; all of which should lead to substantively better learning outcomes (Rehg et al. 2001). Any statement during student dialogue that proposed a potential solution step, that continued another’s proposal, or posed an alternative to a postulated solution step, and statements that explicitly agreed or disagreed with someone, are taken as evidence of deliberative discussion. Our hypothesis was that dialogue acts are indicators of deliberative discussion. Our study results (Goodman et al. 2005) demonstrated that important characteristics of group activity during collaborative learning could be detected using machine learning techniques. In particular, we found neural nets were effective at detecting localized and specific instances of good or poor collaboration. A pedagogical agent can use these indicators to make intervention decisions regarding the quality of the solution and the nature of the learners’ small group interaction. Example interventions include:

- Attempt to keep the conversation coherent, focusing on one topic at a time, and keeping all learners on the same topic, while still permitting learner control and flexibility in terms of topic selection,

- Help the learners reach a complete correct solution by encouraging further discussion of incomplete or incorrect solutions,

- Encourage deliberative discussion (e.g., “Joe, what do you think about the explanation that Mary just presented?”), rather than debates or negotiations, where learners exhibit social cooperation, a willingness to share information, openness to persuasion by reason, a good faith exchange of views, and decisions made by pooling of judgments, all leading to better learning and outcomes that produce high-quality knowledge, deeply embedded in learners’ cognitive structures,

- Encourage each learner to increase their knowledge of each topic by measuring their degree of understanding and coaching them to interact with their peers to increase their knowledge,

- Promote productive dialogue by keeping learners aware of the needs of other learners and encouraging them to be responsive to those needs.
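The kinds of statements taken as evidence of deliberative discussion (proposing a solution step, continuing another’s proposal, posing an alternative, and explicitly agreeing or disagreeing) suggest a simple counting heuristic. The sketch below is not the neural network approach reported in Goodman et al. (2005); it substitutes a plain rate-and-threshold rule, and the act labels, the function names, and the threshold of 0.3 are hypothetical.

```python
from typing import List

# Hypothetical labels for the statement types treated as deliberative evidence.
DELIBERATIVE_ACTS = {
    "propose",            # proposes a potential solution step
    "continue_proposal",  # continues another learner's proposal
    "alternative",        # poses an alternative to a postulated step
    "agree",              # explicitly agrees with someone
    "disagree",           # explicitly disagrees with someone
}


def deliberation_rate(acts: List[str]) -> float:
    """Fraction of utterances in a window that count as deliberative."""
    if not acts:
        return 0.0
    return sum(act in DELIBERATIVE_ACTS for act in acts) / len(acts)


def should_intervene(acts: List[str], threshold: float = 0.3) -> bool:
    """Flag a window whose deliberation rate falls below an assumed threshold."""
    return deliberation_rate(acts) < threshold
```

When `should_intervene` returns `True`, an agent might choose one of the interventions listed above, such as inviting a quiet learner to comment on a peer’s explanation.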

Our goal in this research was the advancement of interactions between learners and simulated peer agents that promote desired features of beneficial collaboration for learning in a group. These include broad involvement of all learners in discussions, measured and productive progress towards educational goals through dialogues between learners, and encouragement and support as needed. Many advances in learning companions and collaborative learning have occurred since our 1998 paper. New learning companions geared to address the pedagogical needs of a student through more human-like interactions have been introduced building on advances in natural language processing, virtual agents, affective modeling, and machine learning. Table 3 highlights some of these advances. Learning companions have grown to fill specific roles to provide targeted pedagogical support of the human learner. The roles of a learning companion include Weak Peer, Strong Peer, Expert, Motivator, Mentor, Teachable Agent, Facilitator, and Distributed Leader. The underlying pedagogical support varies from domain knowledge expertise, reinforcement of human student actions, to small group dynamics. Some learning companions are meant to be taught by the human learner to provide and reinforce learning as a side-effect in the human learner.

A learning companion’s pedagogical support is directly dependent on the pedagogical knowledge available to the learning companion to reason about the state of learners and how to best engage them. Table 4 summarizes some of the underlying pedagogical knowledge that drives today’s learning companions and establishes the available roles that they can play. For example, a Facilitator agent requires knowledge about group dynamics to best support a group of learners. Recent research in collaborative learning has expanded the notion of facilitation to include other important collaborative learning skills (e.g., distributed leadership and mutual engagement) to guide a group to better learning for all (Dragon et al. 2013).

Summary

Learning companions (Chan and Baskin 1988; Ragnemalm 1996) can help address the coaching issues our PROPA ITS and other ITSs face by portraying a number of different roles. Students benefit from a more complete learning experience when the instructional software can play the role of a collaborator for the student in addition to the traditional tutor role (Self 1985). Our 1998 paper described a learning companion, LuCy, a module of an ITS whose purpose was to encourage student reflection and articulation of past actions and future intentions. The combination of student and learning companion collaboration in addition to individualized coached practice allows a student to obtain guidance and support from the tutor while learning with a peer through collaboration.

LuCy’s dialogues encourage the student to reflect on their thinking and evaluate past actions. LuCy does this by prompting the student to explain the reasoning behind actions and justify the decisions leading to those actions. When the student takes an action, LuCy sometimes prompts the student to reflect on the reasoning behind this activity by asking the student questions about the action. If the student has seen data directly related to the current activity but has not yet applied it, LuCy will remind the student about the data in the context of a dialogue and encourage re-evaluation of its relevance.

Table 5 provides a summary of LuCy’s characteristics in the context of the PROPA ITS.

Progress in learning companions and collaborative learning has been substantial since our 1998 paper. More human-like interactions between a human learner and learning companions have made it easier to bring the benefits of peer learning to an ITS while intensifying the learning benefits for the human learner. Additionally, the tracking of small group dynamics has made it feasible to deploy a learning companion in a CSCL environment. Learning companions can play the role of facilitator in helping guide the peer-to-peer interactions to promote learning and better group dynamics. Learning companions can offer benefits as instructional systems grow from teaching single learners to teaching the large numbers of learners in today’s online learning environments and MOOCs, building on advances in machine learning and user modeling.

Open Research Questions

In 2015, given the past research since our 1998 paper, the open research question is no longer whether or not a learning companion can enhance the learning of human learners in an ITS or CSCL. Instead, there are still a number of open research questions surrounding learning companions and intelligent CSCLs that are important to address.

(1) An outstanding issue that still persists today has to do with the generality and portability of learning companions to new domains, instructional situations, and paradigms. Our research and much of that since was tied to particular instantiations of a learning companion in a chosen domain. The complexity and cost of porting to a new instructional situation is high and a chosen learning companion methodology may no longer be applicable in the new domain. In particular, for learning companions to succeed in today’s online learning communities and MOOCs, they must address issues such as social isolation, dropout, and self-regulation among members of a large community of learners. Learning companions may move towards domain independence and expanded forms of interaction through new techniques in natural language processing, machine learning, big data, and user modeling.

(2) It is feasible that more than one learning companion could interact with human students in an intelligent CSCL. An important question is how to best deploy, utilize, and coordinate multiple learning companions to fill various roles in an intelligent CSCL.

(3) Modeling of users takes on a different perspective in an intelligent CSCL. There are attributes of individual students (a ‘student model’) and of the whole group of human learners (a ‘group model’) that need to be tracked to best drive the instructional support.

(4) Learning companions can provide important pedagogical support to human learners. There are, however, other feedback paths that can be utilized to more effectively convey information to learners. One example is an ‘indicator’ such as a gauge that shows how busy a particular participant in a CSCL might be at any point in time. Such an indicator would help collaborative learners make use of a particular learner’s attentiveness during activities that require that learner’s engagement.

(5) The development of learning companions for less structured learning environments such as open learning environments and MOOCs can benefit learners. Specialized agents for facilitation and distributed learning can help.

(6) More natural and comprehensive dialogues that move beyond the menu-based approaches of the past can strengthen the interactions between human learners and learning companions.

Notes

Also known as a simulated pedagogical agent.

References

Baylor, A. L., & Kim, Y. (2005). Simulating instructional roles through pedagogical agents. International Journal on Artificial Intelligence in Education, 15, 95–115.

Brown, A., & Palincsar, A. (1989). Guided, cooperative learning and individual knowledge acquisition. In L. B. Resnick (Ed.), Knowledge, learning and instruction. Hillsdale: Lawrence Erlbaum.

Burleson, W. (2006). Affective learning companions: strategies for empathetic agents with real-time multimodal affective sensing to foster meta-cognitive and meta-affective approaches to learning, motivation, and perseverance. Ph.D. Dissertation, Massachusetts Institute of Technology.

Chan, T.-W. (1995). Social learning systems: An overview. In B. Collis & G. Davies (Eds.), Innovative adult learning with innovative technologies (pp. 101–122). North-Holland: IFIP Transactions A-61.

Chan, T.-W. (1996). Learning companion systems, social learning systems, and the global social learning club. Journal of Artificial Intelligence in Education, 7(2), 125–159.

Chan, T., & Baskin, A. (1988). Studying with the prince: The computer as a learning companion. In Proceedings of the ITS-88 Conference, Montreal, Canada, 194–200.

Cheikes, B. (1998). PROPA: an argumentation-based tutor for explanatory tasks (MITRE Technical Report, MTR 98B0000056V005000R0). Bedford, MA: The MITRE Corporation.

Chi, M. T. H., Bassok, M., Lewis, M. W., Reimann, P., & Glaser, R. (1989). Self-explanations: how students study and use examples in learning to solve problems. Cognitive Science, 13, 145–182.

Collins, A. (1990). Cognitive apprenticeship and instructional technology. In L. Idol & B. F. Jones (Eds.), Educational values and cognitive instruction. Hillsdale: Lawrence Erlbaum.

Doise, W., Mugny, G., & Perret-Clermont, A. (1975). Social interaction and the development of cognitive operations. European Journal of Social Psychology, 5(3), 367–383.

Dragon, T., Mavrikis, M., McLaren, B. M., Harrer, A., Kynigos, C., Wegerif, R., & Yang, Y. (2013). IEEE Transactions on Learning Technologies, 6(3), 197–207.

Fox, B. (1993). The human tutorial dialogue project: issues in the design of instructional systems (computers, cognition, and work). Hillsdale: Lawrence Erlbaum.

Frederiksen, J. R. & White, B. Y. (1997). Cognitive facilitation: a method for promoting reflective collaboration. In R. Hall, N. Miyake, and N. Enyedy (Eds.), Proceedings of the Second International Conference on Computer Support for Collaborative Learning, Toronto, 53–62.

Girard, S., Chavez-Echeagaray, M. E., Gonzalez-Sanchez, J., Hidalgo-Pontet, Y., Zhang, L., Burleson, W., & VanLehn, K. (2013). Defining the behavior of an affective learning companion in the affective meta-tutor project. In H. C. Lane, K. Yacef, J. Mostow, & P. Pavlik (Eds.), Artificial intelligence in education, chapter 3 (pp. 21–30). Berlin: Springer.

Goodman, B. & Iorizzo, L. (2000). Learning with reflection: project praxis. In Proceedings of the Interservice / Industry Training, Simulation and Education Conference.

Goodman, B., Soller, A., Linton, F., & Gaimari, R. (1997). Encouraging student reflection and articulation using a learning companion. In Proceedings of the AI-ED 97 World Conference on Artificial Intelligence in Education (pp. 151–158).

Goodman, B., Soller, A., Linton, F., & Gaimari, R. (1998). Encouraging student reflection and articulation using a learning companion. International Journal of Artificial Intelligence in Education, 9, 237–255.

Goodman, B. A., Linton, F. N., Gaimari, R. D., Hitzeman, J. M., Ross, H. J., & Zarrella, G. (2005). Using dialogue features to predict trouble during collaborative learning. User Modeling and User-Adapted Interaction, 15(1–2), 85–134.

Goodman, B. A., Drury, J., Gaimari, R. D., Kurland, L., & Zarrella, J. (2006). Applying user models to improve team decision making. MITRE Technical Report 060150, The MITRE Corporation, Cambridge, MA.

Hietala, P. & Niemirepo, T. (1997). Collaboration with software agents: What if the Learning Companion Agent Makes Errors? In B. du Boulay and R. Mizoguchi (Eds.), Proceedings of AI-ED 97 World Conference on Artificial Intelligence in Education, Kobe, Japan, IOS Press, 159–166.

Hietala, P. & Niemirepo, T. (1998). The competence of learning companion agents. International Journal of Artificial Intelligence in Education, 9(3–4), 178–192.

Katz, S. (1995). Identifying the support needed in computer-supported collaborative learning systems. In J. L. Schnase and E. L. Cunnius (Eds.), Proceedings of the First International Conference on Computer Support for Collaborative Learning (CSCL’95), Bloomington, 200–203.

Katz, S. (1997). An approach to analyzing learning conversations (LRDC Technical Report). Pittsburgh, PA: Learning Research and Development Center, University of Pittsburgh.

Katz, S., & Lesgold, A. (1993). The role of the tutor in computer-based collaborative learning situations. In S. P. Lajoie & S. J. Derry (Eds.), Computers as cognitive tools (pp. 289–317). Hillsdale, NJ: Lawrence Erlbaum.

Kay, J., Reimann, P., Diebold, E., & Kummerfeld, B. (2013). MOOCs: so many learners, so much potential…. IEEE Intelligent Systems, 28(3), 70–77.

Kort, B., Reilly, R., & Picard, R.W. (2001). An affective model of interplay between emotions and learning: reengineering educational pedagogy—building a learning companion. In Proceedings of the Intl Conference on Advanced Learning Technologies 2001 (ICALT-2001).

Koschmann, T. (1995). CSCL: Theory and practice of an emerging paradigm (computers, cognition, and work). Hillsdale: Lawrence Erlbaum.

Lesgold, A., Katz, S., Greenberg, L., Hughes, E., & Eggan, G. (1992). Extensions of intelligent tutoring paradigms to support collaborative learning. In S. Dijkstra, H. Krammer, & J. van Merrienboer (Eds.), Instructional models in computer-based learning environments (pp. 291–311). Berlin: Springer.

Lund, K., Baker, M., & Baron, M. (1996). Modeling dialogue and beliefs as a basis for generating guidance in a CSCL environment. In Proceedings of the ITS-96 Conference, Montreal, 206–214.

Matsuda, N., Yarzebinski, E., Keiser, V., Raizada, R., Cohen, W. W., Stylianides, G. J., & Koedinger, K. R. (2013). Cognitive anatomy of tutor learning: lessons learned with SimStudent. Journal of Educational Psychology, 105(4), 1152–1163.

McManus, M., & Aiken, R. (1993). The group leader paradigm in an intelligent collaborative learning system. In S. Ohlsson, P. Brna, & H. Pain (Eds.), Proceedings of the World Conference on Artificial Intelligence in Education, Edinburgh, Scotland, 249–256.

Ragnemalm, E. L. (1996). Collaborative dialogue with a learning companion as a source of information on student reasoning. In C. Frasson, G. Gauthier, and A. Lesgold (Eds.), Intelligent Tutoring Systems, Third International Conference. Berlin: Springer-Verlag, 650–658.

Rehg, W., McBurney, P., & Parsons, S. (2001). Computer decision support systems for public argumentation: criteria for assessment. In H. V. Hansen, C. W. Tindale, J. A. Blair, & R. H. Johnson (Eds.), Argumentation and its Applications. Proceedings of the Fourth Biennial Conference of the Ontario Society for the Study of Argumentation: OSSA 2001.

Self, J. (1985). A perspective on intelligent computer-assisted learning. Journal of Computer Assisted Learning, 1, 159–166.

Self, J. (1986). The application of machine learning to student modeling. Instructional Science, 14, 327–338.

Soller, A. (2001). Supporting social interaction in an intelligent collaborative learning system. International Journal of Artificial Intelligence in Education (IJAIED), 12, 40–62.

Soller, A. (2004). Computational modeling and analysis of knowledge sharing in collaborative distance learning. User Modeling and User-Adapted Interaction, 14(4), 351–381.

Soller, A., & Lesgold, A. (2000). Knowledge acquisition for adaptive collaborative learning environments. In AAAI Fall Symposium: Learning How to Do Things (pp. 251–262).

Soller, A., Goodman, B., Linton, F., & Gaimari, R. (1998). Promoting effective peer interaction in an intelligent collaborative learning system. In Intelligent Tutoring Systems (pp. 186–195). Springer Berlin Heidelberg.

Soller, A., Linton, F., Goodman, B., & Lesgold, A. (1999). Toward intelligent analysis and support of collaborative learning interaction. In Proceedings of the Ninth International Conference on Artificial Intelligence in Education (pp. 75–82).

Uresti, J. A. R., & du Boulay, B. (2004). Expertise, motivation and teaching in learning companion systems. International Journal of Artificial Intelligence in Education, 14, 67–106.

VanLehn, K., Ohlsson, S., & Nason, R. (1994). Applications of simulated students: an exploration. Journal of Artificial Intelligence in Education, 5(2), 135–175.

Walker, E., Rummel, N., & Koedinger, K. R. (2014). Adaptive intelligent support to improve peer tutoring in algebra. International Journal of Artificial Intelligence in Education, 24, 33–61.

Wang, H. C., Rose, C. P., & Chang, C. Y. (2011). Agent-based dynamic support for learning from collaborative brainstorming in scientific inquiry. International Journal of Computer Supported Collaborative Learning, 6(3), 371–396.

Acknowledgments

Our original 1996 research was supported by the MITRE Technology Program. Work on this paper was partially supported by the Collaboration and Social Computing Department of the Information Technologies Division at The MITRE Corporation. We want to acknowledge the important contributions of our colleague, Amy Soller, to our original research, and to thank the reviewers of this paper for their helpful comments.

Cite this article

Goodman, B., Linton, F. & Gaimari, R. Encouraging Student Reflection and Articulation Using a Learning Companion: A Commentary. Int J Artif Intell Educ 26, 474–488 (2016). https://doi.org/10.1007/s40593-015-0041-4

DOI: https://doi.org/10.1007/s40593-015-0041-4