Abstract

In this paper we proposed a data-driven bandwidth selection procedure for recursive kernel density estimators under double truncation. We showed that, using the selected bandwidth and a special stepsize, the proposed recursive estimators outperform the nonrecursive one in terms of estimation error in many situations. We corroborated these theoretical results through a simulation study and then applied the proposed estimators to data on the luminosity of quasars in astronomy.

References

Bilker, W.B. and Wang, M.C. (1996). A semiparametric extension of the Mann-Whitney test for randomly truncated data. Biometrics 52, 10–20.

Bojanic, R. and Seneta, E. (1973). A unified theory of regularly varying sequences. Math. Z. 134, 91–106.

Efron, B. and Petrosian, V. (1999). Nonparametric methods for doubly truncated data. J. Amer. Statist. Assoc. 94, 824–834.

Galambos, J. and Seneta, E. (1973). Regularly varying sequences. Proc. Amer. Math. Soc. 41, 110–116.

Klein, J.P. and Moeschberger, M.L. (2003). Survival Analysis Techniques for Censored and Truncated Data. Springer, New York.

Mokkadem, A. and Pelletier, M. (2007). A companion for the Kiefer-Wolfowitz-Blum stochastic approximation algorithm. Ann. Statist. 35, 1749–1772.

Mokkadem, A., Pelletier, M. and Slaoui, Y. (2009). The stochastic approximation method for the estimation of a multivariate probability density. J. Statist. Plann. Inference 139, 2459–2478.

Moreira, C. and de Uña-Álvarez, J. (2010). A semiparametric estimator of survival for doubly truncated data. Statist. Med. 29, 3147–3159.

Moreira, C. and de Uña-Álvarez, J. (2012). Kernel density estimation with doubly truncated data. Electron. J. Stat. 6, 501–521.

Moreira, C., de Uña-Álvarez, J. and Crujeiras, R. (2010). DTDA: an R package to analyze randomly truncated data. J. Stat. Softw. 37, 1–20.

Parzen, E. (1962). On estimation of a probability density and mode. Ann. Math. Statist. 33, 1065–1076.

Révész, P. (1973). Robbins-Monro procedure in a Hilbert space and its application in the theory of learning processes I. Studia Sci. Math. Hung. 8, 391–398.

Révész, P. (1977). How to apply the method of stochastic approximation in the non-parametric estimation of a regression function. Math. Operationsforsch. Statist., Ser. Statistics. 8, 119–126.

Rosenblatt, M. (1956). Remarks on some nonparametric estimates of a density function. Ann. Math. Statist. 27, 832–837.

Shen, P.S. (2010). Nonparametric analysis of doubly truncated data. Ann. Inst. Statist. Math. 62, 835–853.

Silverman, B.W. (1986). Density estimation for statistics and data analysis. Chapman and Hall, London.

Slaoui, Y. (2013). Large and moderate principles for recursive kernel density estimators defined by stochastic approximation method. Serdica Math. J. 39, 53–82.

Slaoui, Y. (2014a). Bandwidth selection for recursive kernel density estimators defined by stochastic approximation method. J. Probab. Stat. 2014, 739640. https://doi.org/10.1155/2014/739640.

Slaoui, Y. (2014b). The stochastic approximation method for the estimation of a distribution function. Math. Methods Statist. 23, 306–325.

Slaoui, Y. (2015). Plug-in bandwidth selector for recursive kernel regression estimators defined by stochastic approximation method. Stat. Neerl. 69, 483–509.

Slaoui, Y. (2016a). Optimal bandwidth selection for semi-recursive kernel regression estimators. Stat. Interface 9, 375–388.

Slaoui, Y. (2016b). On the choice of smoothing parameters for semi-recursive nonparametric hazard estimators. J. Stat. Theory Pract. 10, 656–672.

Tsybakov, A.B. (1990). Recurrent estimation of the mode of a multidimensional distribution. Probl. Inf. Transm. 8, 119–126.

Acknowledgements

We are grateful to the referee and the Editor for their helpful comments, which have led to this substantially improved version of the paper.

Appendices

Appendix A: Proofs

First, we introduce the following asymptotically equivalent version of the proposed recursive estimators (2.3),

and the asymptotically equivalent version of the non-recursive estimator (2.4),

Remark 1.

The consistency results for \(\frac {\alpha _{n}}{G_{n}\left (.\right )}\) can be obtained from Shen (2010) and Moreira and de Uña-Álvarez (2010).

Throughout this section we use the following notation:

Let us first state the following technical lemma.

Lemma 1.

Let \(\left (v_{n}\right )\in \mathcal {GS}\left (v^{*}\right )\), \(\left (\eta _{n}\right )\in \mathcal {GS}\left (-\eta \right )\), and \(m>0\) such that \(m-v^{*}\xi >0\), where \(\xi \) is defined in Eq. 3.2. We have

Moreover, for every positive sequence \(\left (\beta _{n}\right )\) such that \(\lim _{n \to +\infty }\beta _{n}= 0\), and all \(C \in \mathbb {R}\),

Lemma 1 is applied repeatedly throughout the proofs. Note that it is the application of this lemma that requires Assumption \((A2)(iii)\) on the limit of \((n\gamma _{n})\) as n goes to infinity.

Our proofs are organized as follows: Propositions 1 and 2 are proved in Sections A.1 and A.2, respectively, and Theorem 1 is proved in Section A.3.
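The displayed limits of Lemma 1 did not survive in this copy of the text. As a rough numerical illustration only, the sketch below assumes the first limit has the form of the corresponding lemma in Mokkadem et al. (2009), namely \(v_{n}{\Pi }_{n}^{m}\sum _{k}{\Pi }_{k}^{-m}\gamma _{k}v_{k}^{-1}\to \left (m-v^{*}\xi \right )^{-1}\) with \({\Pi }_{n}=\prod _{k}\left (1-\gamma _{k}\right )\) and \(\xi =\lim _{n\to \infty }\left (n\gamma _{n}\right )^{-1}\). The function name `lemma_ratio`, the stepsize \(\gamma _{k}=1/k\) (so that \(\xi = 1\)) and the sequence \(v_{k}=k^{v^{*}}\) are illustrative choices, not taken from the paper.

```r
# Numerical check of an ASSUMED form of Lemma 1 (see the lead-in):
#   v_n * Pi_n^m * sum_k Pi_k^(-m) * gamma_k / v_k  ->  1 / (m - v_star * xi)
# Illustrative choices: gamma_k = 1/k for k >= 2 (so xi = 1), v_k = k^v_star.
lemma_ratio <- function(n, v_star, m = 1) {
  k <- 2:n
  gam <- 1 / k
  log_Pi <- cumsum(log1p(-gam))        # log of Pi_k = prod_{j=2}^k (1 - gamma_j)
  v <- k^v_star
  # (Pi_n / Pi_k)^m * gamma_k / v_k, computed on the log scale for stability
  terms <- exp(m * (log_Pi[length(k)] - log_Pi)) * gam / v
  v[length(k)] * sum(terms)
}

ratio <- lemma_ratio(1e5, v_star = 0.5)  # finite-n value of the left-hand side
limit <- 1 / (1 - 0.5 * 1)               # assumed limit 1 / (m - v_star * xi)
print(c(ratio = ratio, limit = limit))
```

The condition \(m-v^{*}\xi >0\) is what keeps the assumed limit finite and positive; with these choices it reads \(1-0.5>0\).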

A.1 Proof of Proposition 1

In view of Eqs. 5.1 and 5.3, we have

It follows that

Moreover, for simplicity, we let \(H\left (x\right )=\frac {f_{X}\left (x\right )}{G\left (x\right )}\), and in view of Eq. ??, we have

Then, it follows from Eq. 5.4, for \(p = 1\), that

with

and, since f is bounded and continuous at x, we have \(\lim _{k\to \infty }\eta _{k}\left (x\right )= 0\). In the case \(a\leq \gamma /5\), we have \(\lim _{n\to \infty }\left (n\gamma _{n}\right )>2a\); the application of Lemma 1 then gives

and Eq. 3.3 follows. In the case \(a>\gamma /5\), we have \({h_{n}^{2}}=o\left (\sqrt {\gamma _{n}h_{n}^{-1}}\right )\) and \(\lim _{n\to \infty }\left (n\gamma _{n}\right )>\left (\gamma -a\right )/2\), so Lemma 1 ensures that

which gives (3.4). Further, we have

Moreover, in view of Eq. 5.4 with \(p = 2\), we have

with

Moreover, it follows from Eq. 5.5, that

with

Then, it follows from Eqs. 5.6, 5.7 and 5.8, that

Since f and \(fG^{-1}\) are bounded and continuous, we have \(\lim _{k\to \infty }\nu _{k}\left (x\right )= 0\) and \(\lim _{k\to \infty }\widetilde {\nu _{k}}\left (x\right )= 0\). In the case \(a\geq \gamma /5\), we have \(\lim _{n\to \infty }\left (n\gamma _{n}\right )>\left (\gamma -a\right )/2\), and the application of Lemma 1 gives

which proves (3.5). Now, in the case \(a<\gamma /5\), we have \(\gamma _{n}h_{n}^{-1}=o\left ({h_{n}^{4}}\right )\) and \(\lim _{n\to \infty }\left (n\gamma _{n}\right )>2a\), so the application of Lemma 1 gives

which proves (3.6).

A.2 Proof of Proposition 2

Proposition 2 is proved by following steps similar to those in the proof of Proposition 2 of Mokkadem et al. (2009).

A.3 Proof of Theorem 1

Let us first assume that, if \(a\geq \gamma /5\), then

In the case \(a>\gamma /5\), Part 1 of Theorem 1 follows from the combination of Eqs. 3.4 and 5.9. In the case \(a=\gamma /5\), Parts 1 and 2 of Theorem 1 follow from the combination of Eqs. 3.3 and 5.9. In the case \(a<\gamma /5\), Eq. 3.6 implies that

and the application of Eq. 3.3 gives Part 2 of Theorem 1.

We now prove (5.9). In view of Eq. 2.3, we have

Set

The application of Lemma 1 ensures that

On the other hand, we have, for all \(p>0\),

and, since \(\lim _{n\to \infty }\left (n\gamma _{n}\right )>\left (\gamma -a\right )/2\), there exists \(p>0\) such that \(\lim _{n\to \infty }\) \(\left (n\gamma _{n}\right )>\frac {1+p}{2+p}\left (\gamma -a\right )\). Applying Lemma 1, we get

and we thus obtain

The convergence in Eq. 5.9 then follows from the application of Lyapounov’s Theorem.

Appendix B: R Source Code

Here we give the source code of the proposed method for the first model: \(U^{*}\sim \mathcal {U}\left (-1,0\right )\), \(V^{*}\sim \mathcal {U}\left (0,1\right )\) and \(X^{*}\sim \mathcal {N}\left (0,1\right )\).
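As a compact illustration of the sampling scheme for this model, the following sketch draws a doubly truncated sample by rejection: a triple is kept only when \(U^{*}\leq X^{*}\leq V^{*}\). The function name `simulate_truncated` and the returned `accept_rate` field are hypothetical and are not part of the listing in this appendix; the acceptance rate is only a crude Monte Carlo estimate of the probability of observing a triple.

```r
# Rejection sampling for the first model (a sketch, not the paper's listing):
# U* ~ U(-1, 0), V* ~ U(0, 1), X* ~ N(0, 1); keep triples with U* <= X* <= V*.
simulate_truncated <- function(n) {
  U <- X <- V <- numeric(0)
  n_drawn <- 0
  n_kept <- 0
  while (length(X) < n) {
    u <- runif(n, -1, 0)
    x <- rnorm(n)
    v <- runif(n, 0, 1)
    keep <- (u <= x) & (x <= v)          # observed only inside [U*, V*]
    U <- c(U, u[keep]); X <- c(X, x[keep]); V <- c(V, v[keep])
    n_drawn <- n_drawn + n
    n_kept <- n_kept + sum(keep)
  }
  list(U = U[1:n], X = X[1:n], V = V[1:n],
       accept_rate = n_kept / n_drawn)   # crude estimate of P(U* <= X* <= V*)
}

set.seed(123)
s <- simulate_truncated(100)
print(s$accept_rate)
```

Drawing candidates in batches of size n avoids the element-by-element `while` loop while producing a sample with the same distribution.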

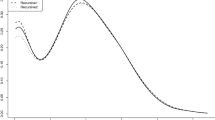

    n=100;      # sample size
    Np=250;     # number of discretization points
    niter=50;   # number of iterations

    # initialization of parameters
    FNR=matrix(0,niter,Np);
    FR=matrix(0,niter,Np);
    A1=rep(0,niter);
    A2=rep(0,niter);
    A=rep(0,niter);
    Y=matrix(0,n,Np);

    # generation of discretization points
    D=seq(-4,4,8/(Np-1));

    # Gaussian kernel
    KG<-function(x){1/sqrt(2*pi)*exp(-x^2/2)}
    # second derivative of Gaussian kernel
    Kd<-function(x){(x^2-1)*KG(x)}
    # computing R(K)
    K2G<-function(x){1/(2*pi)*exp(-x^2)}
    IR=integrate(K2G, lower = -Inf, upper = Inf)$value;
    # computing mu2(K)
    Kx<-function(x){1/sqrt(2*pi)*x^2*exp(-x^2/2)}
    mu=integrate(Kx, lower = -Inf, upper = Inf)$value;

    # start of iterations
    for (iter in 1:niter) {
      print(iter)

      # simulation
      Xt=rnorm(n);
      Ut=runif(n,-1,0);
      Vt=runif(n,0,1);
      XX=(Xt>=Ut)*Xt*(Xt<=Vt);
      X1=(Xt>=Ut)*Xt;
      X2=Xt*(Xt<=Vt);
      alpha1=mean(X1!=0);
      alpha2=mean(X2!=0);
      alpha=mean(XX!=0);
      X=Xt; U=Ut; V=Vt;

      # redraw each triple until U[i] <= X[i] <= V[i]
      for (i in 1:n){
        while (X[i]<U[i]|X[i]>V[i]){
          U[i]<-runif(1,-1,0)
          X[i]<-rnorm(1)
          V[i]<-runif(1,0,1)}}

      # estimation of alpha
      Gn=ecdf(X);
      G=function(x) {1-x^2}

      alpha=mean(G(XX));

      # estimation of I1 and I2
      Q1=quantile(X,0.25)
      Q3=quantile(X,0.75)
      d=(Q3-Q1)/1.349;
      c=min(sd(X),d);

      # estimation of I1 for the non-recursive estimator (see equation (18))

      # pilot bandwidth for estimating I1
      h=c*n^(-2/5);

      M1=matrix(0,ncol=n,nrow=n);
      for (i in 1:n){
        for (j in 1:n){
          M1[i,j]=KG((X[i]-X[j])/h)*(G(X[i])*G(X[j]))^(-1);}}

      I1tilde=(sum(M1)-sum(diag(M1)))/(n*(n-1)*h);

      # estimation of I2 for the non-recursive estimator (see equation (19))

      # pilot bandwidth for estimating I2
      h=c*n^(-3/14);

      M2 = array (dim = c(n,n,n));
      for (i in 1:n){
        for (j in 1:n){
          for (k in 1:n){
            M2[i,j,k]=Kd((X[i]-X[j])/h)*Kd((X[i]-X[k])/h)*(G(X[j])*G(X[k]))^(-1);}}}
      L=rep(0,n);
      for (i in 1:n) {
        L[i]=sum(diag(M2[i,,]));}
      I2tilde=(sum(M2)-sum(L))/(n^3*h^6);

      # estimation of I1 for the recursive estimator (see equation (12))

      # pilot stepsize for estimating I1
      gam=1.36/c(2:n);
      Gl=1-gam;
      Pn=prod(1-gam);
      ng=length(Gl);
      L1=rep(0,ng);
      for (k in 1:ng) {
        L1[k]=prod(Gl[1:k]);}
      P1=L1^(-1);

      # pilot bandwidth for estimating I1
      hk=c*c(1:n)^(-2/5);

      N1=matrix(0,ncol=n,nrow=n);
      for (i in 1:ng){
        for (j in 1:ng){
          N1[i,j]=(P1[j]*gam[j]/hk[j]*KG((X[i]-X[j])/hk[j]))*(G(X[i])*G(X[j]))^(-1);}}

      I1hat=Pn*n^(-1)*(sum(N1)-sum(diag(N1)));

      # estimation of I2 for the recursive estimator (see equation (13))

      # pilot stepsize for estimating I2
      gam=1.48/c(2:n);
      Gl=1-gam;
      Pn=prod(1-gam);
      ng=length(Gl);
      L1=rep(0,ng);
      for (k in 1:ng) {
        L1[k]=prod(Gl[1:k]);}
      P1=L1^(-1);

      # pilot bandwidth for estimating I2
      hk=c*c(1:n)^(-3/14);

      N2=array (dim = c(ng,ng,ng));
      for (i in 1:ng){
        for (j in 1:ng){
          for (k in 1:ng){
            N2[i,j,k]=P1[j]*gam[j]*P1[k]*gam[k]*hk[j]^(-3)*hk[k]^(-3)*
              Kd((X[i]-X[j])/hk[j])*Kd((X[i]-X[k])/hk[k])*(G(X[j])*G(X[k]))^(-1);}}}
      Ln=rep(0,ng);
      for (i in 1:ng) {
        Ln[i]=sum(diag(N2[i,,]));}

      I2hat=Pn^2*n^(-1)*(sum(N2)-sum(Ln));

      # optimal bandwidth for the non-recursive estimator (see equation (20))
      hN=(I1tilde/I2tilde)^(1/5)*(IR/(mu^2))^(1/5)*alpha^(1/5)*n^(-1/5);

      # optimal bandwidth for the recursive estimator (see equation (15))
      hR=(3/10)^(1/5)*(I1hat/I2hat)^(1/5)*(IR/(mu^2))^(1/5)*alpha^(1/5)*c(2:n)^(-1/5);

      # non-recursive estimator
      I1=matrix(0,Np,n);
      for (i in 1:Np){
        for (j in 1:n) {
          I1[i,j]=sum(hN^(-1)*KG(hN^(-1)*(D[i]-X[j]))*G(X[j])^(-1))}
      }
      FNR[iter,]=alpha*rowSums(I1)/n;

      # recursive estimator with stepsize gamma_n=n^(-1)
      I2=matrix(0,Np,(n-1));
      for (i in 1:Np){
        for (j in 1:(n-1)) {
          I2[i,j]=sum(hR[j]^(-1)*KG(hR[j]^(-1)*(D[i]-X[j]))*G(X[j])^(-1))}
      }
      FR[iter,]=alpha*rowSums(I2)/n;

    } # end of iterations

    # output the result, discarding iterations with non-finite values
    FNR1=FNR[!rowSums(!is.finite(FNR)),]
    FR1=FR[!rowSums(!is.finite(FR)),]
    FNR1=colMeans(FNR1); # nonrecursive
    FR1=colMeans(FR1);   # recursive

    # quantitative comparison between the two estimators (3) and (4)
    MD=dnorm(D); # density of the standard normal distribution
    NR1=c(mean(abs(FNR1-MD)),mean((FNR1-MD)^2),mean(abs(FNR1/MD-1)))
    NR1=round(NR1,4);
    print("Non recursive")
    print(NR1)
    Rec1=c(mean(abs(FR1-MD)),mean((FR1-MD)^2),mean(abs(FR1/MD-1)))
    Rec1=round(Rec1,4);
    print("Recursive")
    print(Rec1)

    # qualitative (graphical) comparison between the two estimators (3) and (4)
    plot(D,MD,xlab="",ylab="",ylim=c(0,0.65))
    Data1=data.frame(D,FNR1)
    lines(Data1,type="l",lty=1,lwd=2)
    Data2=data.frame(D,FR1)
    lines(Data2,type="l",lty=2,lwd=2)
    legend("topleft",legend=c("Nonrecursive","Recursive"),lty=c(1,2),lwd=2)
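The listing computes the recursive estimator with stepsize \(\gamma _{n}=n^{-1}\) as a batch average over the sample. The sketch below illustrates, under simplifying assumptions, why this agrees with an online update of the stochastic approximation type \(f_{k}=(1-\gamma _{k})f_{k-1}+\gamma _{k}w_{k}h_{k}^{-1}K\left ((x-X_{k})/h_{k}\right )\). The function `update_recursive`, the placeholder data, the unit weights `w` (standing in for the truncation weights \(\alpha /G(X_{k})\)) and the bandwidth constant 0.5 are all hypothetical and serve only to show the equivalence.

```r
# Gaussian kernel, as in the appendix listing
KG <- function(x) exp(-x^2 / 2) / sqrt(2 * pi)

# One online update of the estimate on the grid D (hypothetical helper).
# f_prev: previous estimate; x_new: new observation; h: bandwidth h_k;
# gam: stepsize gamma_k; w: weight, standing in for alpha / G(x_new).
update_recursive <- function(f_prev, D, x_new, h, gam, w) {
  (1 - gam) * f_prev + gam * w * KG((D - x_new) / h) / h
}

set.seed(1)
n <- 200
D <- seq(-1, 1, length.out = 51)
X <- runif(n, -0.9, 0.9)      # placeholder data, NOT a doubly truncated sample
h <- 0.5 * (1:n)^(-1/5)       # illustrative bandwidth sequence
w <- rep(1, n)                # placeholder weights

# Online version: one pass over the data, constant memory in n
f_online <- rep(0, length(D))
for (k in 1:n) {
  f_online <- update_recursive(f_online, D, X[k], h[k], 1 / k, w[k])
}

# Batch version: average of the n weighted kernel terms
f_batch <- rowMeans(sapply(1:n, function(k) w[k] * KG((D - X[k]) / h[k]) / h[k]))

max_diff <- max(abs(f_online - f_batch))
print(max_diff)
```

The online form is what makes recursive estimators attractive for streaming data: a new observation updates the estimate without revisiting earlier ones.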

Cite this article

Slaoui, Y. Data-Driven Bandwidth Selection for Recursive Kernel Density Estimators Under Double Truncation. Sankhya B 80, 341–368 (2018). https://doi.org/10.1007/s13571-018-0165-2

DOI: https://doi.org/10.1007/s13571-018-0165-2