Abstract

Nonlocal interactions, which have attracted attention in various fields, result from the integration of microscopic information such as a transition possibility, molecular events, and signaling networks of living creatures. Nonlocal interactions are useful for reproducing various patterns corresponding to such detailed microscopic information. However, this approach makes it difficult to observe the specific mechanisms behind the target phenomena because the information is compressed. Therefore, we previously proposed a method capable of approximating any nonlocal interaction by a reaction–diffusion system with auxiliary factors (Ninomiya et al., J Math Biol 75:1203–1233, 2017). In this paper, we provide an explicit method for determining the parameters of the reaction–diffusion system for a given kernel shape by using Jacobi polynomials under appropriate assumptions. We additionally introduce a numerical method, based on Tikhonov regularization, for specifying the parameters of the reaction–diffusion system with general diffusion coefficients.
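As a rough illustration of the numerical parameter-fitting idea mentioned above, the following Python sketch chooses coefficients \(\alpha _j\) so that \(\sum _j \alpha _j k^{d_j}\) matches a given kernel in the least-squares sense with a Tikhonov penalty. It assumes that \(k^d(x)=e^{-|x|/\sqrt{d}}/(2\sqrt{d})\), the Green's function of \(d w_{xx}-w+\delta =0\) with unit \(L^1\) norm, which is consistent with the properties of \(k^d\) used in the appendices. The grid, the diffusion coefficients `ds`, the synthetic target kernel, the weight `lam`, and all function names are hypothetical choices for illustration and are not taken from the paper.

```python
import numpy as np

def green_kernel(x, d):
    """Assumed form k^d(x) = exp(-|x|/sqrt(d)) / (2 sqrt(d)), unit L^1 norm."""
    return np.exp(-np.abs(x) / np.sqrt(d)) / (2.0 * np.sqrt(d))

def fit_alpha_tikhonov(x, J, ds, lam):
    """Minimise ||sum_j alpha_j k^{d_j} - J||_{L^2}^2 + lam * |alpha|^2.

    The L^2 norm is discretised by a Riemann sum on the uniform grid x,
    and the minimiser is obtained from the regularised normal equations.
    """
    dx = x[1] - x[0]
    K = np.stack([green_kernel(x, d) for d in ds], axis=1)  # columns k^{d_j}
    gram = K.T @ K * dx                                      # Gram matrix
    rhs = K.T @ J * dx
    return np.linalg.solve(gram + lam * np.eye(len(ds)), rhs)

# Hypothetical setup: a synthetic kernel built from known coefficients.
x = np.linspace(-20.0, 20.0, 4001)
ds = np.array([0.5, 1.0, 2.0])           # assumed diffusion coefficients d_j
alpha_true = np.array([2.0, -3.0, 1.0])  # assumed "true" weights
J = sum(a * green_kernel(x, d) for a, d in zip(alpha_true, ds))

alpha = fit_alpha_tikhonov(x, J, ds, lam=1e-10)
print(alpha)
```

Because the synthetic target lies exactly in the span of the basis functions, a very small `lam` recovers `alpha_true`; for a general kernel, `lam` trades the fitting error against the size of the coefficients.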

References

Amari, S.: Dynamics of pattern formation in lateral-inhibition type neural fields. Biol. Cybernet. 27, 77–87 (1977)

Bates, P.W., Fife, P.C., Ren, X., Wang, X.: Traveling waves in a convolution model for phase transitions. Arch. Ration. Mech. Anal. 138, 105–136 (1997)

Bates, P.W., Zhao, G.: Existence, uniqueness and stability of the stationary solution to a nonlocal evolution equation arising in population dispersal. J. Math. Anal. Appl. 332, 428–440 (2007)

Berestycki, H., Nadin, G., Perthame, B., Ryzhik, L.: The non-local Fisher-KPP equation: traveling waves and steady states. Nonlinearity 22, 2813–2844 (2009)

Chihara, T.S.: An Introduction to Orthogonal Polynomials. Gordon and Breach, New York (1978)

Coville, J., Dávila, J., Martínez, S.: Nonlocal anisotropic dispersal with monostable nonlinearity. J. Differ. Equ. 244, 3080–3118 (2008)

Furter, J., Grinfeld, M.: Local vs. non-local interactions in population dynamics. J. Math. Biol. 27, 65–80 (1989)

Hutson, V., Martinez, S., Mischaikow, K., Vickers, G.T.: The evolution of dispersal. J. Math. Biol. 47, 483–517 (2003)

Kondo, S.: An updated kernel-based Turing model for studying the mechanisms of biological pattern formation. J. Theor. Biol. 414, 120–127 (2017)

Kuffler, S.W.: Discharge patterns and functional organization of mammalian retina. J. Neurophysiol. 16, 37–68 (1953)

Laing, C.R., Troy, W.C.: Two-bump solutions of Amari-type models of neuronal pattern formation. Phys. D 178, 190–218 (2003)

Laing, C.R., Troy, W.: PDE methods for nonlocal models. SIAM J. Appl. Dyn. Syst. 2, 487–516 (2003)

Lefever, R., Lejeune, O.: On the origin of tiger bush. Bull. Math. Biol. 59, 263–294 (1997)

Murray, J.D.: Mathematical biology. I. An introduction, vol. 17, 3rd edn. Interdisciplinary Applied Mathematics. Springer, Berlin (2002)

Murray, J.D.: Mathematical biology. II. Spatial models and biomedical applications, vol. 18, 3rd edn. Interdisciplinary Applied Mathematics. Springer, Berlin (2003)

Nakamasu, A., Takahashi, G., Kanbe, A., Kondo, S.: Interactions between zebrafish pigment cells responsible for the generation of Turing patterns. Proc. Natl. Acad. Sci. USA 106, 8429–8434 (2009)

Nakamura, G., Potthast, R.: Inverse Modeling. IOP Publishing, Bristol (2015)

Ninomiya, H., Tanaka, Y., Yamamoto, H.: Reaction, diffusion and non-local interaction. J. Math. Biol. 75, 1203–1233 (2017)

Tanaka, Y., Yamamoto, H., Ninomiya, H.: Mathematical approach to nonlocal interactions using a reaction–diffusion system. Dev. Growth Differ. 59, 388–395 (2017)

Acknowledgements

The authors would like to thank Professor Yoshitsugu Kabeya of Osaka Prefecture University for his valuable comments and Professor Gen Nakamura of Hokkaido University for his fruitful comments on Sect. 6. The authors are particularly grateful to the referees for their careful reading and valuable comments. The first author was partially supported by JSPS KAKENHI Grant Numbers 26287024, 15K04963, 16K13778, 16KT0022. The second author was partially supported by KAKENHI Grant Number 17K14228 and JST CREST Grant Number JPMJCR14D3.

Additional information

Dedicated to Professor Masayasu Mimura on his 75th birthday.

Appendices

Appendix

A: Existence and boundedness of a solution of the problem (P)

Proposition 5

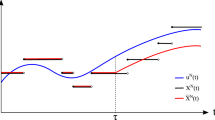

(Local existence and uniqueness of the solution) There exist a constant \(\tau >0\) and a unique solution \(u\in C([0,\tau ];BC(\mathbb {R}))\) of the problem (P) with an initial datum \(u_0\in BC(\mathbb {R})\).

This proposition is proved by a standard argument based on the fixed point theorem applied to the integral equation written with the heat kernel.

In order to prove Theorem 1, we discuss the maximum principle as follows.

Lemma 2

(Global bounds for the solution of (P)) For a solution u of (P), it follows

Proof

For a contradiction, we assume that there exists a constant \(T>0\) such that

Then, we can take \(\{T_n\}_{n\in \mathbb {N}}\) satisfying  and

and

Hence, for all \(R>0\) there exists \(N\in \mathbb {N}\) such that

We may suppose that \(\sup _{0\le t\le T_n,x\in \mathbb {R}}u(x,t) =\sup _{0\le t\le T_n,x\in \mathbb {R}}|u(x,t)|\) (replacing \(u(x,t)\) with \(-u(x,t)\) if necessary).

Case 1: \(u(x_n,t_n)=R_n\) for some \((x_n,t_n)\in \mathbb {R}\times [0,T_n]\).

Since \((x_n,t_n)\) is a maximum point of u on \(\mathbb {R}\times [0,T_n]\), we see that

For \(r_0>0\) large enough, it holds that

Put \(R=r_0\), \(r=u(x_n,t_n)\). By (26), for all \(u(x_n, t_n)>R \) we have

Substituting \((x_n,t_n)\) in the equation of (P), we obtain that

This yields a contradiction.

Case 2: \(u(x,t)<R_n\) for all \((x,t)\in \mathbb {R}\times [0,T_n]\).

For any \(n\in \mathbb {N}\), there exists a maximum point \(t_n\in (0,T_n]\) of \(\Vert u(\cdot ,t)\Vert _{BC(\mathbb {R})}\) such that \(\Vert u(\cdot , t_n)\Vert _{BC(\mathbb {R})} =\max _{0\le t \le T_n} \Vert u(\cdot , t)\Vert _{BC(\mathbb {R})}=R_n\). If \(s\in (0,t_n)\), then we see that

because \(0<t_n-s\le T_n\). Here, since \(\Vert u(\cdot , t_n-s)\Vert _{BC(\mathbb {R})}-s < \Vert u(\cdot ,t_n)\Vert _{BC(\mathbb {R})} =\sup _{x\in \mathbb {R}} |u(x,t_n)|\), there exists a point \(x^{(n,s)}\in \mathbb {R}\) such that

Let \(n\in \mathbb {N}\) be sufficiently large. Since \(\Vert u(\cdot , t_n-s)\Vert _{BC(\mathbb {R})}\) is sufficiently large, we have \(u(x^{(n,s)},t_n)>r_0\). Hence, by (27) it follows that

By (30), (31) and the equation of (P), it holds that \(u_t(x^{(n,s)},t_n)\le -3\). Hence, there is a sufficiently small constant \(\eta _0>0\) such that for any \(0<\eta <\eta _0\),

On the other hand, by (28) and (29), we obtain that for \(s\in (0,t_n)\),

Choosing \(0< s < \min \{\eta _0, t_n\}\) and taking \(\eta =s\) in (32), we see that

This inequality is a contradiction because s is positive. Thus, we have (25) because both Case 1 and Case 2 lead to a contradiction. \(\square \)

Proof of Theorem 1

Proposition 5 and Lemma 2 immediately imply Theorem 1. \(\square \)

B: Boundedness of the solution of the problems (P) and \(\hbox {RD}_{\varepsilon }\)

Here, we prove several propositions.

Proof of Proposition 1

Multiplying the equation of (P) by u and integrating it over \(\mathbb {R}\), we have

From (H1), the Schwarz inequality and the Young inequality for convolutions, one has

Moreover, using (H4), the interpolation for the Hölder inequality and the Young inequality, it holds that

Hence we obtain

We therefore have

Next, integrating the equation of (P) multiplied by \(u_{xx}\) over \(\mathbb {R}\), one has

From (H2), (H3), the Schwarz inequality and the Young inequality for convolutions, we can estimate the derivative of \(\Vert u_x\Vert _{L^2}^2\) with respect to t as follows:

By the Hölder and the Young inequalities, recalling that

we obtain

where \(C_{11}\) is a positive constant and, if \(2\le p<3\), then \(g_6=0\), which implies \(C_{11}=0\) by (H4). By Lemma 2, since \(\Vert u(\cdot ,t)\Vert _{BC(\mathbb {R})}\) is bounded in t, one sees that

Put \(X(t):=\Vert u(\cdot ,t)\Vert _{L^2(\mathbb {R})}^2\) and \(Y(t):=\Vert u_x(\cdot ,t)\Vert _{L^2(\mathbb {R})}^2\). By (33) and (35), it follows that

Consequently, we have

where \(2k_0=\max \{C_{10},C_{12}\}\). \(\square \)

Next we give the proof of Proposition 2.

Proof of Proposition 2

First, we show that \(u^{\varepsilon }\) and \(v_j^{\varepsilon }\) are bounded in \(L^2(\mathbb {R})\) by an argument similar to the proof of Proposition 1. Multiplying the principal equation of (\(\hbox {RD}_{\varepsilon }\)) by \(u^{\varepsilon }\) and integrating it over \(\mathbb {R}\), we have

Also, multiplying the second equation of (\(\hbox {RD}_{\varepsilon }\)) by \(v_j^{\varepsilon }\) and integrating it over \(\mathbb {R}\), we see that

Multiplying the above inequality by \(\alpha _j^2\) and adding those inequalities from \(j=1\) to M, we obtain that

where \(C_{14}=\sum _{j=1}^{M}\alpha _j^2\). Here, put \(X(t):=\Vert u^{\varepsilon }(\cdot ,t)\Vert _{L^2(\mathbb {R})}^2\), \(Y(t):=\sum _{j=1}^{M} \Vert \alpha _j v_j^{\varepsilon } (\cdot ,t) \Vert _{L^2(\mathbb {R})}^2\). Hence, (36), (37) are described as follows:

Noticing that X(t), \(Y(t)\ge 0\), we have

which implies

Note that \(\Vert v_j^{\varepsilon }(\cdot ,0)\Vert _{L^2(\mathbb {R})}=\Vert k^{d_j}*u_0\Vert _{L^2(\mathbb {R})} \le \Vert u_0\Vert _{L^2(\mathbb {R})}\) from \(0<\varepsilon <1\) and \(\Vert k^d\Vert _{L^1(\mathbb {R})}=1\). Recalling \(X(t)=\Vert u^{\varepsilon }(\cdot ,t)\Vert _{L^2(\mathbb {R})}^2\), one sees that

Using (38) again yields

Hence, it is shown that

Therefore, each component \(u^{\varepsilon }\) and \(v_j^{\varepsilon }\) of the solution is bounded in \(L^2(\mathbb {R})\) by (36) and (39).

Next, let us show the boundedness of \(u_x^{\varepsilon }\) and \(v_{j,x}^{\varepsilon }\) in \(L^2(\mathbb {R})\). Note that we use the \(L^2\)-boundedness of \(u^{\varepsilon }\) and \(v_j^{\varepsilon }\) in the proof. Multiplying the principal equation of (\(\hbox {RD}_{\varepsilon }\)) by \(u_{xx}^{\varepsilon }\) and integrating it over \(\mathbb {R}\), similarly to the proof of Proposition 1, one sees that

Here, \(C_{11}\) is the same constant as in inequality (34), and by (H4), \(C_{11}=0\) if \(2\le p<3\). By the Gagliardo–Nirenberg–Sobolev inequality, there is a positive constant \(C_S\) satisfying

Applying this to (40) yields

By using the Young inequality, we have

Hence, we get the following inequality:

Also, integrating the second equation of (\(\hbox {RD}_{\varepsilon }\)) multiplied by \(v_{j,xx}^{\varepsilon }\) over \(\mathbb {R}\), from the Young inequality, it follows that

Hence, multiplying this by \(\alpha _j^2\) and summing from \(j=1\) to M yields the following:

where \(C_{18}=\sum _{j=1}^M \alpha _j^2\). Similarly to (36) and (37), (41) and (42) are represented as follows:

where \(X(t)=\Vert u_x^{\varepsilon }(\cdot ,t)\Vert _{L^2(\mathbb {R})}^2\) and \(Y(t)=\sum _{j=1}^{M}\Vert \alpha _jv_{j,x}^{\varepsilon }(\cdot ,t)\Vert _{L^2(\mathbb {R})}^2\). Therefore, it follows that for any \(0\le t\le T\),

\(\square \)

Proof of Proposition 3

Let \((u^{\varepsilon },v_j^{\varepsilon })\) be a solution of (\(\hbox {RD}_{\varepsilon }\)). For any \(\delta >0\) and \(k\in \mathbb {N}\), multiplying the first equation of (\(\hbox {RD}_{\varepsilon }\)) by \(u^{\varepsilon } /\sqrt{\delta +(u^{\varepsilon })^2}\) and integrating it with respect to \(x\in [-k,k]\), we have that

For the left-hand side of (43), it holds that

by using the dominated convergence theorem. Moreover, we calculate the first term of the right-hand side of (43) as follows:

By (43), as \(\delta \rightarrow 0\), it holds that for any \(k\in \mathbb {N}\)

Similarly to the proof of Proposition 1,

where \(C_{20}\) is a positive constant depending on \(g_1\), \(g_2\), \(g_3\) and the bound of \(\Vert u^{\varepsilon }\Vert _{BC(\mathbb {R})}\) given by Proposition 2. By a similar argument for \(v_j^{\varepsilon }\), we estimate

Hence, we obtain that

By calculating the sum of (44) and (45), we see that

where \(c_k(t)=d_u ( |u_x^{\varepsilon } (k,t)|+|u_x^{\varepsilon } (-k,t)| ) +C_{20}\sum _{j=1}^{M} d_j |\alpha _j| ( |v_{j,x}^{\varepsilon } (k,t)|+|v_{j,x}^{\varepsilon } (-k,t)| )\) depends on t and \(C_{21}= C_{20}(1 + \sum _{j=1}^M | \alpha _j | )\). Here, from Proposition 2, \(u_x^{\varepsilon } (\cdot ,t)\), \(v_{j,x}^{\varepsilon }(\cdot ,t) \in L^2(\mathbb {R})\) for a fixed \(0\le t\le T\). Hence, there is a sequence \(\{k_m\}_{m\in \mathbb {N}}\) satisfying \(k_m\rightarrow \infty \) as \(m\rightarrow \infty \) such that \(u_x^{\varepsilon } (k_m,t)\), \(u_x^{\varepsilon } (-k_m,t)\), \(v_{j,x}^{\varepsilon }(k_m,t)\), \(v_{j,x}^{\varepsilon }(-k_m,t)\rightarrow 0\) as \(m\rightarrow \infty \). Hence, \(c_{k_m}(t)\rightarrow 0\) as \(m\rightarrow \infty \). Note that \(k_m\) depends on the time t. Taking the limit of (46) along \(k=k_m\) as \(m\rightarrow \infty \), we have the following inequality

Using the classical Gronwall Lemma, we have

Therefore, we see that

and it is shown that \(\sup _{0\le t\le T}\Vert u^{\varepsilon }(\cdot ,t)\Vert _{L^1(\mathbb {R})}\) is bounded. Furthermore, since (45) holds and \(c_{k_m}(t)\rightarrow 0\) as \(m\rightarrow \infty \), we obtain

where \(C_{23}= C_{22} \sum _{j=1}^{M} |\alpha _j|\). Hence, noting that \(\Vert v_j^{\varepsilon }(\cdot ,0)\Vert _{L^1(\mathbb {R})}\le \Vert u_0\Vert _{L^1(\mathbb {R})}\), we have

Consequently, we get the boundedness of \(\sup _{0\le t\le T} \sum _{j=1}^{M} \Vert \alpha _j v_j^{\varepsilon }(\cdot ,t) \Vert _{L^1(\mathbb {R})}\), so that Proposition 3 is shown. \(\square \)

C: Proof of Lemma 1

Proof

Put \(V_j:=v_j^{\varepsilon }-k^{d_j}*u^{\varepsilon }\). Note that \(k^{d_j}*u^{\varepsilon }\) is a solution of \(d_j(k^{d_j}*u^{\varepsilon })_{xx}-k^{d_j}*u^{\varepsilon } +u^{\varepsilon }=0\). Since \(u^{\varepsilon }\) is the first component of the solution to (\(\hbox {RD}_{\varepsilon }\)), we can calculate as follows:

Recalling that \(\Vert k^{d_j}\Vert _{L^1(\mathbb {R})}=1\) and that both \(\Vert u^{\varepsilon }\Vert _{L^2(\mathbb {R})}\) and \(\Vert v_j^{\varepsilon }\Vert _{L^2(\mathbb {R})}\) are bounded with respect to \(\varepsilon \) by Proposition 2, the right-hand side of the previous identity is bounded in \(L^2(\mathbb {R})\). Hence, there exists a positive constant \(C_{24}\) independent of \(\varepsilon \) such that

Recalling that \(\varepsilon v_{j,t}^{\varepsilon }=d_jv_{j,xx}^{\varepsilon }+u^{\varepsilon }-v_j^{\varepsilon }\), the equation of \(V_j\) becomes

Multiplying this equation by \(V_j\) and integrating it over \(\mathbb {R}\) yields

Since it is shown that

we get \(\Vert V_j\Vert _{L^2(\mathbb {R})}^2\le \max \{\Vert V_j(\cdot ,0)\Vert _{L^2(\mathbb {R})}^2,\, (C_{24}\varepsilon )^2\}\). Noting that \(V_j(\cdot ,0)=0\), we obtain that

Therefore, (9) is proved. \(\square \)
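The identity \(d_j(k^{d_j}*u)_{xx}-k^{d_j}*u+u=0\) used at the start of this proof can be checked numerically. The following Python sketch does so under stated assumptions: \(k^d(x)=e^{-|x|/\sqrt{d}}/(2\sqrt{d})\) is taken as the kernel (the Green's function of \(dw_{xx}-w+\delta =0\)), and the value of `d`, the Gaussian test function `u`, and the grid are arbitrary illustrative choices. The convolution is a Riemann sum and the second derivative a central difference, so the residual is only expected to be small, of the order of the squared grid spacing, not exactly zero.

```python
import numpy as np

d = 0.7                                   # hypothetical diffusion coefficient
x = np.linspace(-20.0, 20.0, 4001)        # symmetric grid, odd number of points
dx = x[1] - x[0]

# Assumed kernel k^d with unit L^1 norm, and a smooth, rapidly decaying u.
k = np.exp(-np.abs(x) / np.sqrt(d)) / (2.0 * np.sqrt(d))
u = np.exp(-x**2)

# w = k^d * u, approximated by a discrete convolution on the same grid.
w = np.convolve(k, u, mode="same") * dx

# Central second difference of w at the interior grid points.
w_xx = (w[2:] - 2.0 * w[1:-1] + w[:-2]) / dx**2

# Residual of d * w_xx - w + u = 0 at the interior points.
residual = d * w_xx - w[1:-1] + u[1:-1]
max_residual = np.max(np.abs(residual))
print(max_residual)
```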

D: Polynomial approximation

Proof of Proposition 4

Let \(\phi \in BC([0,\infty ])\). We change the variable x to y as follows:

\[ y=e^{-x},\qquad \psi (y):=\phi (-\log y). \tag{47} \]

Since y is decreasing in x and y belongs to [0, 1] when x belongs to \([0,\infty ]\), the map \(x\mapsto y\) has an inverse, which is given by \(x=-\log y\). Also, since \(\phi (x)\) is bounded at infinity by \(\phi \in BC([0,+\infty ])\), one has \(\psi \in C([0,1])\). Hence, applying the Stone–Weierstrass theorem to \(\psi \), for any \(\varepsilon >0\), there exists a polynomial function \(p(y)=\sum _{j=0}^M\beta _j {y}^j\) such that

\[ |\psi (y)-p(y)|<\varepsilon \quad \text{for all } y\in [0,1]. \]

Substituting \(y=e^{-x}\) into the previous inequality, it follows that for all \(x\in [0,+\infty ]\)

\[ \Bigl |\phi (x)-\sum _{j=0}^M\beta _j e^{-jx}\Bigr |<\varepsilon \]

due to (47). \(\square \)
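The change of variables in this proof also suggests a simple numerical experiment. The following Python sketch fits a polynomial in \(y=e^{-x}\) to a test function and measures the resulting error in x. The test function `phi`, the degree `M`, and the grids are hypothetical choices, and an ordinary least-squares fit stands in for the polynomial whose existence the Stone–Weierstrass theorem guarantees, so the sketch illustrates the statement without reproducing the proof.

```python
import numpy as np
from numpy.polynomial import polynomial as P

def phi(x):
    """Hypothetical test function in BC([0, infinity]) with limit 0 at infinity."""
    return np.exp(-x**2)

M = 8                                   # assumed polynomial degree

# psi(y) = phi(-log y) on (0, 1], extended by psi(0) = lim_{x -> inf} phi(x) = 0.
y = np.linspace(0.0, 1.0, 2001)
psi = np.zeros_like(y)
psi[1:] = phi(-np.log(y[1:]))

# Least-squares coefficients beta_0, ..., beta_M of p(y) = sum_j beta_j y^j.
beta = P.polyfit(y, psi, M)

# Back in the x variable: phi(x) is compared with sum_j beta_j exp(-j x).
x = np.linspace(0.0, 30.0, 3001)
approx = P.polyval(np.exp(-x), beta)
max_error = np.max(np.abs(phi(x) - approx))
print(max_error)
```

Increasing `M` should reduce `max_error` until the conditioning of the monomial basis becomes the limiting factor; an orthogonal basis on [0, 1] would be a more robust choice for higher degrees.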

E: Examples of calculated parameters

We provide examples of the values of \(\alpha _1,\ldots , \alpha _5\) that are explicitly calculated by using (8). We consider the kernels \(J_1\) and \( J_2\).

In the case of \(J_1(x)\), \(\alpha _1,\ldots ,\alpha _5\) are calculated by

where

For the case of \(J_2(x)\), \(\alpha _1,\ldots ,\alpha _5\) are given by

About this article

Cite this article

Ninomiya, H., Tanaka, Y. & Yamamoto, H. Reaction–diffusion approximation of nonlocal interactions using Jacobi polynomials. Japan J. Indust. Appl. Math. 35, 613–651 (2018). https://doi.org/10.1007/s13160-017-0299-z

DOI: https://doi.org/10.1007/s13160-017-0299-z

Keywords

- Nonlocal interaction

- Reaction–diffusion system

- Jacobi polynomials

- Traveling wave solution

- Optimizing problem