Abstract

Background

The low wear rates of crosslinked polyethylenes provide the potential to use larger diameters to resist dislocation. However, this requires the use of thinner liners in the acetabular component, with concern that higher contact stresses will increase wear, offsetting the benefits of the crosslinking.

Questions/purposes

We asked the following questions: Is the wear of conventional and crosslinked polyethylene liners affected by ball diameter, rigidity of backing, and liner thickness? Are the stresses in the liner affected by thickness?

Methods

Wear rates were measured in a hip simulator and stresses were calculated using finite element modeling.

Results

Without crosslinking, the mean wear rate was 4% to 10% greater with a 36-mm diameter than with a 28-mm diameter. With crosslinking, the mean wear rate was 9% lower with a 36-mm diameter without metal backing and 4% greater with metal backing. Reducing the thickness of the crosslinked liners from 6 mm to 3 mm increased the calculated maximum contact stress by 46%, but the mean wear rate decreased by 19%.

Conclusions

The reduction in wear with 5 Mrad of crosslinking was not offset by increasing the diameter from 28 mm to 36 mm or by using a liner as thin as 3 mm.

Clinical Relevance

The results indicate that, for a properly positioned 5-Mrad crosslinked acetabular component and within the range of dimensions evaluated, neither wear nor stresses in the polyethylene are limiting factors in the use of larger-diameter, thinner cups to resist dislocation.

Introduction

Polyethylenes (PEs) with elevated crosslinking for improved wear resistance have been in clinical use for more than 10 years. Wear measurements based on clinical radiographs have indicated wear rates substantially lower than for historical PEs [3, 4, 8, 10, 11, 15–18, 23, 28, 42], with a corresponding reduction in the incidence and severity of debris-induced osteolysis. The promising clinical performance of the crosslinked PEs has led to their use in hips with larger-diameter balls to provide additional resistance to dislocation [17]. However, a larger-diameter ball has a longer sliding distance per step, which could increase the rate of wear, and there is concern that higher stresses in a thin liner also might substantially accelerate wear.

We, therefore, asked whether the rate of wear of noncrosslinked and crosslinked PE liners would be affected by (1) increasing the ball diameter from 28 mm to 36 mm, (2) the presence of a rigid metal backing, and (3) reducing the thickness of the liner from 6 mm to 3 mm. Using a finite element (FE) model, we also asked (4) whether the contact area and the stresses in the PE were affected by this reduction in thickness.

Materials and Methods

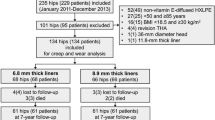

Hip Simulator Test 1 (Fig. 1) compared the wear rates of 28- and 36-mm-diameter liners of noncrosslinked and crosslinked PE, with n = 3 for each of the four groups. Three liners of each type of PE and each diameter were enclosed in polyurethane molds without the metal shells (Fig. 2) and tested for three million cycles to determine the interactive effect of the level of crosslinking and the ball diameter (Fig. 3). Then, to determine the effect of a rigid backing on the wear rate, the liners were placed in titanium alloy shells (Duraloc™; DePuy Orthopaedics, Inc, Warsaw, IN), mounted in the simulator using polyurethane molds (Fig. 2), and tested for an additional 2.5 million cycles. The wear rate for each liner was calculated using linear regression. Statistical analysis was performed using STATA® Version 10 (StataCorp LP, College Station, TX), and differences among the mean wear rates were tested for statistical significance using Student’s t test.
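The two statistical steps described above, a per-liner wear rate taken as the least-squares slope of wear volume against cycles and a pooled-variance Student’s t statistic comparing group means, can be sketched as follows. This is a minimal illustration with hypothetical numbers, not the authors’ STATA analysis; the function names `wear_rate` and `student_t` are our own.

```python
from math import sqrt
from statistics import mean, variance


def wear_rate(cycles_millions, volumes_mm3):
    """Ordinary least-squares slope of wear volume versus cycles.

    Returns the wear rate in mm^3 per million cycles.
    """
    x_bar = mean(cycles_millions)
    y_bar = mean(volumes_mm3)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(cycles_millions, volumes_mm3))
    sxx = sum((x - x_bar) ** 2 for x in cycles_millions)
    return sxy / sxx


def student_t(group_a, group_b):
    """Two-sample Student's t statistic with pooled (equal) variances.

    Returns (t, degrees_of_freedom); a p-value would come from the t distribution.
    """
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / (na + nb - 2)
    t = (mean(group_a) - mean(group_b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t, na + nb - 2


# Hypothetical weighings every 0.5 million cycles for one liner (not data from this study).
cycles = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
volumes = [0.0, 2.6, 5.1, 7.4, 10.2, 12.4, 15.1]
print(f"wear rate: {wear_rate(cycles, volumes):.2f} mm^3/million cycles")

# Hypothetical per-liner wear rates for two groups of n = 3.
t, df = student_t([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
print(f"t = {t:.3f}, df = {df}")
```

If SciPy is available, `scipy.stats.ttest_ind(a, b)` (which assumes equal variances by default) returns the same statistic together with a two-sided p-value.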

The liners had a thickness of 6 mm. The noncrosslinked liners were machined from extruded bars of GUR 1050 UHMWPE (Poly-Hi Solidur, Inc, Fort Wayne, IN). The crosslinked liners were machined from extruded bars from the same batch of PE. The bars had been crosslinked by sealing them in foil bags, flushing with inert gas, evacuating, exposing to 5 Mrad of gamma radiation, heating to 155°C for 24 hours to extinguish residual free radicals, annealing at 120°C for 24 hours (both processes under partial vacuum), and then slow cooling to room temperature (Marathon™; DePuy). The crosslinked liners were sterilized with gas plasma, rather than gamma radiation, to avoid modifying the degree of crosslinking or reintroducing free radicals. The crystallinity was about 61% for the noncrosslinked PE and about 43% for the crosslinked PE [7].

The femoral balls (Articul/EZE™; DePuy) were fabricated from ASTM F-1537 cobalt-chrome alloy with nominal diameters of 28 mm or 36 mm. The surface roughness of each ball was measured with a Perthometer S8P® profilometer (Mahr Federal, Cincinnati, OH) using a tracing length of 1.75 mm, an evaluation length of 1.25 mm, and a cutoff length of 0.25 mm. The surfaces were implant quality, with an average Ra of about 0.02 μm. The actual diameters of the balls and liners were measured using a Mitutoyo BRT 504 coordinate measuring machine (Mitutoyo America, Aurora, IL) fitted with a Renishaw TP200 touch probe (Renishaw plc, Wotton-under-Edge, UK) and a 2-mm-diameter ruby stylus. The liner diameters averaged 28.20 ± 0.049 mm and 36.29 ± 0.027 mm. The ball diameters averaged 27.93 ± 0.005 mm and 36.0 ± 0.008 mm. For the wear test, the largest ball was paired with the largest liner, giving average radial ball-liner clearances of 132 ± 24 μm for the 28-mm hips and 144 ± 11 μm for the 36-mm hips.
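The radial clearance is half the difference between the liner inner diameter and the ball diameter. As a quick consistency check, the sketch below applies that definition to the mean diameters reported above; the helper name `radial_clearance_um` is our own.

```python
def radial_clearance_um(liner_id_mm, ball_diameter_mm):
    """Radial clearance: half the diametral clearance, converted from mm to micrometres."""
    return (liner_id_mm - ball_diameter_mm) / 2 * 1000


# Mean diameters reported above (mm)
for label, liner, ball in [("28-mm", 28.20, 27.93), ("36-mm", 36.29, 36.0)]:
    print(f"{label}: {radial_clearance_um(liner, ball):.0f} um")
```

This gives about 135 μm and 145 μm, which agree with the reported pair averages of 132 and 144 μm to within the rounding of the tabulated diameters.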

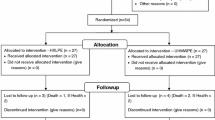

Hip Simulator Test 2 (Fig. 4) evaluated the effect of liner thickness on wear rate by comparing the wear of crosslinked liners all having an inner diameter of 36 mm but with wall thicknesses of 6, 5, 4, or 3 mm, with n = 3 in each group. As in Test 1, the wear rates were calculated using linear regression, and the level of statistical significance of differences in the mean wear rate among the four groups was calculated using Student’s t test. In addition, the relationship between wear rate and liner thickness was calculated using a mixed-model linear regression.

All of the liners in Test 2 were fabricated from Marathon™ crosslinked-remelted PE, prepared as described above. After gas plasma sterilization, the liners were artificially aged by heating to 80°C in air for 21 days and were tested against implant-quality 36-mm-diameter ASTM F-1537 cobalt-chrome balls (DePuy), with an average radial ball-liner clearance of 525 ± 31 μm.

For wear testing, the liners were placed in titanium alloy acetabular shells (Pinnacle™; DePuy), mounted on the simulator using polyurethane molds, and tested for five million cycles. The test lubricant, loading, motion, cleaning intervals, and weighing procedures were the same as for Test 1, including the use of three loaded soak control liners for each of the four liner thicknesses (12 total).

Both wear tests were run on an orbital-bearing-type hip simulator (Shore Western, Monrovia, CA), with the liners mounted below the balls (Fig. 3), under a double-peak Paul-type load cycle [40] with a 2000-N maximum, with motion and loading synchronized at one cycle per second. The lubricant was filter-sterilized bovine serum (HyClone, Logan, UT) diluted to approximately 90% serum (a protein concentration of about 63 g/L), with 0.2% sodium azide added to retard bacterial degradation and mold growth and 20 mmol/L EDTA added to minimize precipitation of phosphates onto the balls.

At intervals of 250,000 cycles, the PE liners and soak controls were rinsed, inspected visually, and replaced on the simulator in fresh serum. At intervals of 0.5 million cycles, the liners were cleaned, vacuum-desiccated, and weighed. The volume of wear was determined by adding the mean weight gain of the soak controls (from fluid absorption) to the measured weight loss of each wear liner and dividing by the density of UHMWPE (0.93 g/cm³).
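The soak-control correction described above amounts to a short calculation: the wear liner’s weight loss is increased by the mean fluid-uptake weight gain of the loaded soak controls, and the corrected mass is converted to volume using the density. A minimal sketch with hypothetical weighings (the function name and values are illustrative only):

```python
DENSITY_MG_PER_MM3 = 0.93  # 0.93 g/cm^3 expressed as mg/mm^3 (same numerical value)


def wear_volume_mm3(wear_start_mg, wear_now_mg, soak_start_mg, soak_now_mg):
    """Soak-corrected wear volume of one liner.

    The measured weight loss of the wear liner is increased by the mean weight
    gain of the loaded soak controls (fluid uptake), then divided by density.
    """
    weight_loss = wear_start_mg - wear_now_mg
    gains = [now - start for start, now in zip(soak_start_mg, soak_now_mg)]
    mean_gain = sum(gains) / len(gains)
    return (weight_loss + mean_gain) / DENSITY_MG_PER_MM3


# Hypothetical weighings (mg) for one wear liner and three soak controls.
volume = wear_volume_mm3(
    wear_start_mg=10000.0,
    wear_now_mg=9992.2,
    soak_start_mg=[10000.0, 10000.0, 10000.0],
    soak_now_mg=[10001.0, 10001.5, 10002.0],
)
print(f"wear volume: {volume:.1f} mm^3")
```

Here a 7.8-mg measured loss plus a 1.5-mg mean soak gain gives 9.3 mg, or 10.0 mm³. Without the correction, fluid uptake would mask part of the wear, which is why crosslinked cups can show an apparent early weight gain.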

To provide further understanding of the relationship between wall thickness and wear of the PE, an FE model was used to calculate the stresses within PE liners having inner diameters of 36 mm and thicknesses of 3, 4, 5, or 6 mm. The radial clearance between the femoral head and the liner was set at 525 μm (ie, comparable to the average clearance in Hip Simulator Test 2) (Fig. 5). Using PATRAN modeling software (v2005; MSC Software Corp, Santa Ana, CA), the liners were represented by 2976 eight-node brick elements and 256 six-node wedge elements. The same element mesh was used for all four liner thicknesses. Because their stiffness was much higher than that of the PE, the cobalt-chromium head was modeled as an analytical rigid surface, and the titanium alloy shell was represented by a rigid concave spherical surface, using 808 four-node rigid elements. The ball-liner interface was modeled with 808 frictional elements that permitted sliding between the ball and liner, with the coefficient of friction set at 0.065 [31]. The liner-shell interface was modeled as fully bonded. The model had a total of 4848 elements and 14,574 degrees of freedom. Mesh convergence was checked by increasing the total number of elements to 76,802; for the 4-mm liner, the finer mesh increased the maximum contact stress, von Mises stress, and shear stress by 1%, 4%, and 4%, respectively. An elastic modulus of 532 MPa, obtained from a true stress-strain curve based on uniaxial tensile tests of Marathon™ crosslinked PE provided by the manufacturer, was assigned to the PE elements. The Poisson’s ratio of the PE elements was set to 0.46. Because the stress within the PE, particularly in the thin liners, might exceed the yield strength, elastic-plastic elements were used, with a yield strength of 12.4 MPa and a von Mises yield criterion.
The values for the elastic modulus and the yield strength were obtained from tensile tests performed by the manufacturer (DePuy) according to ASTM D638, in which yield is defined using a 0.2% strain offset on a true stress-strain curve (as recommended for PE by Kurtz et al. [27]). Because this material model did not include creep deformation of the PE, which would tend to increase the contact area and thereby decrease the stresses, the calculated stresses and the differences in stress among the four thicknesses should be considered worst-case maximum values. A constant force of 2000 N, corresponding to the maximum load used in the hip simulator tests, was applied through the center of the ball and normal to the surface of the liner, with the load centered 23° away from the pole of the liner (Fig. 5). The analysis was performed using the ABAQUS program (v6.5; Simulia, Providence, RI).
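The 0.2% offset definition of yield locates the point where the stress-strain curve crosses a line with the elastic slope E shifted by a strain of 0.002. The sketch below shows that construction on a hypothetical bilinear curve (E = 500 MPa, hardening slope 50 MPa); these are not the manufacturer’s Marathon™ data, and `offset_yield` is an illustrative helper.

```python
def offset_yield(strain, stress, modulus_mpa, offset=0.002):
    """Stress at which a stress-strain curve crosses the offset line
    sigma = E * (strain - offset), found by linear interpolation."""
    prev = None
    for e, s in zip(strain, stress):
        # Positive while the curve lies above the offset line.
        gap = s - modulus_mpa * (e - offset)
        if prev is not None and prev[2] > 0 >= gap:
            e0, s0, g0 = prev
            frac = g0 / (g0 - gap)  # fraction of the segment where gap reaches zero
            return s0 + frac * (s - s0)
        prev = (e, s, gap)
    raise ValueError("curve does not cross the offset line")


# Hypothetical bilinear curve: E = 500 MPa up to 10 MPa, then a 50-MPa hardening slope.
E = 500.0
strains = [i * 0.0005 for i in range(81)]
stresses = [E * e if e <= 0.02 else 10.0 + 50.0 * (e - 0.02) for e in strains]
print(f"0.2% offset yield: {offset_yield(strains, stresses, E):.2f} MPa")
```

For this curve the offset yield is about 10.11 MPa, slightly above the 10-MPa proportional limit, as expected for a material that hardens after yield.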

Results

In Hip Simulator Test 1 (Table 1), the mean wear rate of the noncrosslinked PE liners without metal shells was 10% greater (p = 0.19) with a 36-mm diameter than with a 28-mm diameter. With metal shells, the mean wear rate was 4% greater (p = 0.4) with a 36-mm diameter than with a 28-mm diameter. In contrast, the mean wear rate of the crosslinked PE liners without metal shells was 9% less (p = 0.57) with a 36-mm diameter than with a 28-mm diameter. With metal shells, the mean wear rate was 4% greater (p = 0.64) with a 36-mm diameter than with a 28-mm diameter. That is, with the crosslinked PE, there was no systematic increase in wear with increasing diameter, and neither difference was statistically significant (p ≥ 0.57). The crosslinked cups exhibited a slight gain in weight during the first 0.5 million cycles (Fig. 6). This effect has been reported in other tests of crosslinked PEs [33, 38, 39] and was likely due to the rate of fluid absorption of the wear cups temporarily exceeding that of the control cups. In each of the four test conditions (28- or 36-mm diameter, without or with modular metal backing), the mean wear rate was lower for the 5-Mrad crosslinked PE liners than for the noncrosslinked liners (Table 1), with the reductions ranging from 76% (p < 0.0001) for both 28- and 36-mm diameters with metal backing to 83% (p < 0.001) for the 36-mm diameter with polyurethane backing.

A graph shows the volumetric wear of the noncrosslinked (above) and crosslinked (below) PE liners without (left) and with (right) metal backing (Hip Simulator Test 1). Values are presented as mean ± SD. Neither increasing the ball diameter from 28 mm to 36 mm nor introducing the metal backing had a substantial effect on the wear rates.

The mean wear rates of the noncrosslinked PE liners were somewhat lower with metal shells than without them (Table 1), ie, by 8% with the 28-mm diameter (p = 0.07) and by 13% with the 36-mm diameter (p = 0.16). In contrast, the mean wear rates of the crosslinked PE liners were greater with metal shells, ie, by 10% with the 28-mm diameter (p = 0.42) and by 26% with the 36-mm diameter (p = 0.10).

In Hip Simulator Test 2, although there was substantial overlap of the amount of wear for individual liners from the different groups (Fig. 7), the mean wear rate tended to decrease with decreasing liner thickness, such that the mean wear rate of the 3-mm liners was about 19% less (p = 0.17) than that of the 6-mm liners (Table 2).

In the FE model, all three calculated stresses increased as the liner thickness decreased (Fig. 8). The maximum contact stress, the von Mises stress, and the shear stress were 46%, 17%, and 18% greater, respectively, in the 3-mm liner than in the 6-mm liner. Although the maximum contact stress for all of the wall thicknesses exceeded the yield strength of 12.4 MPa obtained from the uniaxial tensile tests, the von Mises stress, which governs yielding under the von Mises criterion used in the model, remained below the yield strength. Consequently, no yielding was predicted for any of the four wall thicknesses.
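Why a contact pressure above 12.4 MPa need not cause yielding: the von Mises stress depends only on the differences between the principal stresses, so a compressive state that is close to hydrostatic, as beneath a conforming contact in a nearly incompressible material (Poisson’s ratio 0.46), gives a small equivalent stress. The principal stresses below are hypothetical illustrative numbers, not values from the FE model.

```python
from math import sqrt


def von_mises(s1, s2, s3):
    """Von Mises equivalent stress from the three principal stresses (MPa)."""
    return sqrt(((s1 - s2) ** 2 + (s2 - s3) ** 2 + (s3 - s1) ** 2) / 2)


YIELD_MPA = 12.4  # yield strength used in the FE model

# Hypothetical, nearly hydrostatic compressive state under the contact (MPa);
# these are illustrative numbers, not values from the FE model.
principal = (-15.0, -13.0, -14.0)
vm = von_mises(*principal)
print(f"largest compressive stress: {max(abs(p) for p in principal):.1f} MPa")
print(f"von Mises stress: {vm:.2f} MPa, yields: {vm >= YIELD_MPA}")
```

Here the largest compressive stress (15 MPa) exceeds the yield strength, yet the von Mises stress is only about 1.7 MPa, so the criterion predicts no yielding.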

A graph shows the variation of the maximum contact stress, von Mises stress, and shear stress for liners with different thickness, as calculated with the FE model. Although the maximum value of the contact stress increased substantially with decreasing thickness, there was much less effect on the maximum values of the von Mises and shear stresses.

In contrast, the contact area increased as the liner thickness increased (Fig. 9). The computed contact area between the ball and liner was about 382 mm² in the 3-mm liner and about 515 mm² in the 6-mm liner, an increase of 35%.
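The reported contact areas can be turned into a nominal mean contact pressure by dividing the 2000-N load by the area. This is a rough estimate we add for orientation: because it ignores the curvature of the contact and the nonuniform pressure distribution, F/A is a lower bound on the area-averaged pressure and lies well below the FE peak contact stress.

```python
LOAD_N = 2000.0  # peak load applied in the FE model
contact_area_mm2 = {3: 382.0, 6: 515.0}  # FE contact areas reported above

# Nominal mean pressure F/A (N/mm^2 == MPa). This ignores the curvature of the
# contact and the nonuniform pressure distribution, so it understates the peak.
mean_pressure = {t: LOAD_N / a for t, a in contact_area_mm2.items()}
area_increase_pct = (contact_area_mm2[6] / contact_area_mm2[3] - 1) * 100

for t, p in sorted(mean_pressure.items()):
    print(f"{t}-mm liner: mean pressure ~ {p:.1f} MPa")
print(f"contact area, 6 mm vs 3 mm: +{area_increase_pct:.0f}%")
```

This gives roughly 5.2 MPa for the 3-mm liner and 3.9 MPa for the 6-mm liner, with the 35% area difference reproduced.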

Discussion

The improved wear resistance exhibited by the PEs with increased levels of crosslinking has stimulated interest in their use in hip arthroplasties with larger diameters to provide increased resistance to dislocation. However, the sliding distance per step increases in direct proportion to the diameter of the ball, potentially increasing the rate of wear. In addition, for a given outside diameter, a larger ball diameter requires a thinner PE liner. This can result in higher contact and internal stresses in the PE, possibly increasing the risk of excessive wear and/or fracture of the liner. Using a hip simulator, we investigated how the wear rates of noncrosslinked and crosslinked PE liners were affected by (1) increasing the ball diameter from 28 mm to 36 mm; (2) the presence of a rigid metal shell; and (3) reducing the thickness of the liner from 6 mm to 3 mm. We also asked (4) how the contact area and the stresses in the PE were affected by the reduction in thickness.

Our study has several limitations. First, a hip simulator cannot exactly reproduce the in vivo wear conditions. Nevertheless, hip simulator tests provide reasonably accurate predictions of the relative wear rates exhibited by different types of PEs in subsequent clinical use [23]. Second, the lower wear observed with the thinner liners occurred under ideal conditions, ie, with the contact zone well within the liner. This has been termed “Mode 1” wear [34], ie, with the acetabular component implanted in the pelvis in an optimal orientation. In clinical practice, acetabular components are sometimes implanted in excessive vertical and/or rotational alignment, which can cause the ball to contact the liner near the rim (a type of Mode 2), or there can be impingement by the neck of the femoral stem (a type of Mode 4). This, in turn, can lead to severe wear and gross fracture of the PE, particularly in liners with external fixation notches that further reduce the thickness of the PE and act as stress concentrations. These types of failure have long been reported for acetabular components featuring conventional PE [14, 20, 35, 43] and, more recently, highly crosslinked PEs [12, 19, 36, 45]. Investigation of these failure modes is beyond the scope of the present study, but they should be taken into account in determining the minimum liner thickness acceptable for clinical use in a particular acetabular component design.

With historical PEs (either noncrosslinked or moderately crosslinked due to gamma sterilization), the volumetric rate of wear has tended to increase with increasing ball diameter (Table 3) in hip simulator testing [5, 6, 37] and in clinical use [9, 13, 22, 24, 29, 44]. The increase is primarily due to the proportionately longer sliding distance per cycle. This trend also was evident in our study with the noncrosslinked PE, where the mean wear rates of the 36-mm liners were 10% and 4% higher without and with metal backing, respectively. In contrast, with highly crosslinked PEs, there has been little if any increase in the wear rate with increasing diameter in hip simulator tests [21, 37] or clinically [4, 17]. In our study, the mean wear rate of the larger, 36-mm-diameter liner was only slightly higher with the metal backing and was slightly lower without the metal backing (Table 1). The magnitude of the reduction in wear rate exhibited by the 5-Mrad crosslinked PE compared to noncrosslinked PE in our study (Table 1) was comparable to that shown in previous laboratory investigations [32, 33] and in clinical use [28].
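The proportionality between ball diameter and sliding distance can be made concrete. For the same angular motion, a point on the ball surface travels an arc whose length scales with the ball radius, so the ratio of sliding distances equals the ratio of diameters regardless of the motion pattern. The 40° excursion below is a hypothetical placeholder, not the simulator’s actual waveform.

```python
from math import radians


def sliding_distance_mm(ball_diameter_mm, arc_deg):
    """Arc length traced on the ball surface for a rotation of arc_deg degrees."""
    return (ball_diameter_mm / 2) * radians(arc_deg)


ARC_DEG = 40.0  # hypothetical angular excursion; the ratio below does not depend on it
ratio = sliding_distance_mm(36.0, ARC_DEG) / sliding_distance_mm(28.0, ARC_DEG)
print(f"36-mm vs 28-mm sliding distance per cycle: +{(ratio - 1) * 100:.0f}%")
```

The 36-mm ball slides about 29% farther per cycle than the 28-mm ball, whereas the measured increases in mean wear rate for the noncrosslinked PE were 4% and 10%, and the crosslinked PE showed no systematic increase.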

The similarity of the wear rates with or without metal backing for either type of PE was consistent with the computational model of Maxian et al. [30], which predicted less than 1% difference in the wear rates of PE liners with or without metal backing.

The observation that the wear rate did not increase as the thickness of the cups decreased was consistent with the results of Kelly et al. [25], who compared the wear rates of acetabular cups of conventional and highly crosslinked PEs in a type of hip simulator closely comparable to that used in our study but with the cups mounted in greater abduction (“near impingement”). The conventional PE cups were gamma sterilized in nitrogen at 3 Mrad and had a 36-mm inner diameter with a 7.9-mm wall thickness (Stryker Orthopaedics, Mahwah, NJ). The PE for the highly crosslinked cups was crosslinked at 9 Mrad using three gamma doses of 3 Mrad, each followed by annealing (X3®; Stryker). These highly crosslinked cups had a 36-mm inner diameter with a 7.9-mm thickness or a 44-mm inner diameter with a 3.8-mm thickness. The mean wear rates were 25, 1.8, and 1.8 mg per million cycles for the conventional cups, 7.9-mm-thick crosslinked cups, and 3.8-mm-thick crosslinked cups, respectively. These authors concluded “crosslinked PE may allow for liners that are thinner than has been traditionally accepted” [25]. However, they also cautioned that their results were for an “idealized cup position” and did not take into account the potential effects of edge loading, subluxation, or artificial aging, conditions that have been associated with fracture of conventional and highly crosslinked liners.

In the FE modeling, the trend for the contact stress to increase as the thickness of the liner decreased (Fig. 8) was consistent with the results obtained by Bartel et al. [2] using analytical solutions and FE analysis. Although the general trend also was in agreement with the results of Plank et al. [41] who evaluated the contact stress of conventional and crosslinked PE using FE analysis and pressure-sensitive film for the comparable situation of a 38-mm ball and a 3-mm-thick PE liner, the contact stress calculated by these authors was 36% lower and von Mises stress was 39% higher than those in our study. This difference might be attributable to a number of factors, such as differences in the material properties, the geometry of the specimens, the clearance between the ball and liner, and the bonding condition assumed between the metal shell and the liners. For example, Bartel et al. [2] found a bonded interface, as used in our study, resulted in a higher contact stress and lower von Mises stress than a debonded interface, as used by Plank et al. [41] and Kurtz et al. [26].

Considering only the increase in the magnitudes of the contact stresses in these studies as the thickness of the liner was reduced, one would predict a corresponding increase in the rate of wear. For example, all other factors (including the contact area) being equal, Archard’s equation [1] predicts the volumetric rate of wear is directly proportional to the contact stress. Thus, it was surprising that the lowest mean rate of wear in our study occurred with the thinnest (3-mm) liners. This trend might have been due, in part, to the contact area being smallest with the 3-mm liners, as indicated by the FE model. In the wear test, this might have been sufficient to more than offset the effect of the increased contact stress, leading to a net reduction in the volumetric rate of wear. In addition, the higher stresses in the thinner liners might have increased the total viscoelastic deformation of the PE, increasing the conformity between the ball and the liner in the contact zone and thereby the potential for at least partial separation of the bearing surfaces by a layer of lubricant, which would tend to reduce the rate of wear.
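One common form of Archard’s relation makes the separate roles of contact stress and contact area explicit. Here V is wear volume, k a wear factor, F the load, s the sliding distance, A the contact area, p̄ the mean contact pressure, and h the local linear wear depth; this notation is ours, not the article’s:

$$V = k\,F\,s = k\,\bar{p}\,A\,s, \qquad \bar{p} = \frac{F}{A}, \qquad h = k\,\bar{p}\,s$$

At a fixed load and sliding distance, a higher mean pressure in a thinner liner comes with a proportionally smaller contact area, so in this idealized form the local penetration rate rises while the volumetric wear is unchanged. With F = 2000 N and the FE contact areas, p̄ ≈ 2000/382 ≈ 5.2 MPa for the 3-mm liner and 2000/515 ≈ 3.9 MPa for the 6-mm liner. A net reduction in volumetric wear would therefore have to come from factors outside this simple relation, such as the conformity and lubrication effects discussed above.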

Taken together, the results of our study indicate, for a 5-Mrad crosslinked PE liner with the acetabular component in proper orientation, the diameter of the ball may be increased and the thickness of the PE decreased within the dimensional limits investigated to improve the resistance to dislocation, with little or no corresponding increase in the volumetric rate of wear and a modest increase in the stresses within the PE.

References

Archard J. The temperature of rubbing surfaces. Wear. 1958–59;2:438–455.

Bartel DL, Bicknell VL, Wright TM. The effect of conformity, thickness, and material on stresses in ultra-high molecular weight components for total joint replacement. J Bone Joint Surg Am. 1986;68:1041–1051.

Bitsch RG, Loidolt T, Heisel C, Ball S, Schmalzried TP. Reduction of osteolysis with use of Marathon cross-linked polyethylene: a concise follow-up, at a minimum of five years, of a previous report. J Bone Joint Surg Am. 2008;90:1487–1491.

Bragdon CR, Greene ME, Freiberg AA, Harris WH, Malchau H. Radiostereometric analysis comparison of wear of highly cross-linked polyethylene against 36- vs 28-mm femoral heads. J Arthroplasty. 2007;22:125–129.

Clarke IC, Good V, Anissian L, Gustafson A. Charnley wear model for validation of hip simulators-ball diameter versus polytetrafluoroethylene and polyethylene wear. Proc Inst Mech Eng H. 1997;211:25–36.

Clarke IC, Gustafson A, Jung H, Fujisawa A. Hip-simulator ranking of polyethylene wear: comparisons between ceramic heads of different sizes. Acta Orthop Scand. 1996;67:128–132.

Collier JP, Currier BH, Kennedy FE, Currier JH, Timmins GS, Jackson SK, Brewer RL. Comparison of cross-linked polyethylene materials for orthopaedic applications. Clin Orthop Relat Res. 2003;414:289–304.

D’Antonio JA, Manley MT, Capello WN, Bierbaum BE, Ramakrishnan R, Naughton M, Sutton K. Five-year experience with Crossfire® highly cross-linked polyethylene. Clin Orthop Relat Res. 2005;441:143–150.

Devane PA, Horne JG, Martin K, Coldham G, Krause B. Three-dimensional polyethylene wear of a press-fit titanium prosthesis: factors influencing generation of polyethylene debris. J Arthroplasty. 1997;12:256–266.

Digas G, Karrholm J, Thanner J, Malchau H, Herberts P. Highly cross-linked polyethylene in total hip arthroplasty: randomized evaluation of penetration rate in cemented and uncemented sockets using radiostereometric analysis. Clin Orthop Relat Res. 2004;429:6–16.

Dorr LD, Wan Z, Shahrdar C, Sirianni L, Boutary M, Yun A. Clinical performance of a Durasul highly cross-linked polyethylene acetabular liner for total hip arthroplasty at five years. J Bone Joint Surg Am. 2005;87:1816–1821.

Duffy GP, Wannomae KK, Rowell SL, Muratoglu OK. Fracture of a cross-linked polyethylene liner due to impingement. J Arthroplasty. 2009;24:158.e15–19.

Elfick AP, Hall RM, Pinder IM, Unsworth A. Wear in retrieved acetabular components: effect of femoral head radius and patient parameters. J Arthroplasty. 1998;13:291–295.

Elfick AP, Hall RM, Pinder IM, Unsworth A. The effect of socket design, materials and liner thickness on the wear of the porous coated anatomic total hip replacement. Proc Inst Mech Eng H. 2001;215:447–457.

Garvin KL, Hartman CW, Mangla J, Murdoch N, Martell JM. Wear analysis in THA utilizing oxidized zirconium and crosslinked polyethylene. Clin Orthop Relat Res. 2009;467:141–145.

Geerdink CH, Grimm B, Ramakrishnan R, Rondhuis J, Verburg AJ, Tonino AJ. Crosslinked polyethylene compared to conventional polyethylene in total hip replacement: pre-clinical evaluation, in-vitro testing and prospective clinical follow-up study. Acta Orthop. 2006;77:719–725.

Geller JA, Malchau H, Bragdon C, Greene M, Harris WH, Freiberg AA. Large diameter femoral heads on highly cross-linked polyethylene: minimum 3-year results. Clin Orthop Relat Res. 2006;447:53–59.

Glyn-Jones S, Isaac S, Hauptfleisch J, McLardy-Smith P, Murray DW, Gill HS. Does highly cross-linked polyethylene wear less than conventional polyethylene in total hip arthroplasty? A double-blind, randomized, and controlled trial using roentgen stereophotogrammetric analysis. J Arthroplasty. 2008;23:337–343.

Halley D, Glassman A, Crowninshield RD. Recurrent dislocation after revision total hip replacement with a large prosthetic femoral head. A case report. J Bone Joint Surg Am. 2004;86:827–830.

Heck DA, Partridge CM, Reuben JD, Lanzer WL, Lewis CG, Keating EM. Prosthetic component failures in hip arthroplasty surgery. J Arthroplasty. 1995;10:575–580.

Hermida JC, Bergula A, Chen P, Colwell CW Jr, D’Lima DD. Comparison of the wear rates of twenty-eight and thirty-two-millimeter femoral heads on cross-linked polyethylene acetabular cups in a wear simulator. J Bone Joint Surg Am. 2003;85:2325–2331.

Hirakawa K, Bauer TW, Hashimoto Y, Stulberg B, Wilde AH. Effect of femoral head diameter on tissue concentration of wear debris. J Biomed Mater Res. 1997;36:529–535.

Jacobs CA, Christensen CP, Greenwald AS, McKellop H. Clinical performance of highly cross-linked polyethylenes in total hip arthroplasty. J Bone Joint Surg Am. 2007;89:2779–2786.

Kabo JM, Gebhard JS, Loren G, Amstutz HC. In vivo wear of polyethylene acetabular components. J Bone Joint Surg Br. 1993;75:254–258.

Kelly NH, Rajadhyaksha AD, Wright TM, Maher SA, Westrich GH. High stress conditions do not increase wear of thin highly crosslinked UHMWPE. Clin Orthop Relat Res. 2010;468:418–423.

Kurtz SM, Edidin AA, Bartel DL. The role of backside polishing, cup angle, and polyethylene thickness on the contact stresses in metal-backed acetabular components. J Biomech. 1997;30:639–642.

Kurtz SM, Pruitt L, Jewett CW, Crawford RP, Crane DJ, Edidin AA. The yielding, plastic flow, and fracture behavior of ultra-high molecular weight polyethylene used in total joint replacements. Biomaterials. 1998;19:1989–2003.

Leung SB, Egawa H, Stepniewski A, Beykirch S, Engh CA Jr, Engh CA Sr. Incidence and volume of pelvic osteolysis at early follow-up with highly cross-linked and noncross-linked polyethylene. J Arthroplasty. 2007;22:134–139.

Livermore J, Ilstrup D, Morrey B. Effect of femoral head size on wear of the polyethylene acetabular component. J Bone Joint Surg Am. 1990;72:518–528.

Maxian TA, Brown TD, Pedersen DR, McKellop HA, Lu B, Callaghan JJ. Finite element analysis of acetabular wear: validation and backing and fixation effects. Clin Orthop Relat Res. 1997;344:111–117.

McKellop H, Clarke IC, Markolf K, Amstutz H. Wear characteristics of UHMW polyethylene: a method for accurately measuring extremely low wear rates. J Biomed Mater Res. 1978;12:895–927.

McKellop H, Shen FW, DiMaio W, Lancaster J. Wear of gamma-crosslinked polyethylene acetabular cups against roughened femoral balls. Clin Orthop Relat Res. 1999;369:73–82.

McKellop H, Shen FW, Lu B, Campbell P, Salovey R. Development of an extremely wear-resistant ultra high molecular weight polyethylene for total hip replacements. J Orthop Res. 1999;17:157–167.

McKellop HA. The lexicon of polyethylene wear in artificial joints. Biomaterials. 2007;28:5049–5057.

Min BW, Song KS, Kang CH, Won YY, Koo KH. Polyethylene liner failure in second-generation Harris-Galante acetabular components. J Arthroplasty. 2005;20:717–722.

Moore KD, Beck PR, Petersen DW, Cuckler JM, Lemons JE, Eberhardt AW. Early failure of a cross-linked polyethylene acetabular liner: a case report. J Bone Joint Surg Am. 2008;90:2499–2504.

Muratoglu OK, Bragdon CR, O’Connor D, Perinchief RS, Estok DM II, Jasty M, Harris WH. Larger diameter femoral heads used in conjunction with a highly cross-linked ultra-high molecular weight polyethylene: a new concept. J Arthroplasty. 2001;16:24–30.

Muratoglu OK, Bragdon CR, O’Connor DO, Jasty M, Harris WH. A novel method of cross-linking ultra-high-molecular-weight polyethylene to improve wear, reduce oxidation, and retain mechanical properties. Recipient of the 1999 HAP Paul Award. J Arthroplasty. 2001;16:149–160.

Oonishi H, Clarke IC, Yamamoto K, Masaoka T, Fujisawa A, Masuda S. Assessment of wear in extensively irradiated UHMWPE cups in simulator studies. J Biomed Mater Res A. 2004;68:52–60.

Paul JP. Loading on normal hip and knee joints on joint replacements. In: Schaldach M, Hohmann D, eds. Advances in Artificial Hip and Knee Joint Technology. Berlin, Germany: Springer-Verlag; 1976:53.

Plank GR, Estok DM 2nd, Muratoglu OK, O’Connor DO, Burroughs BR, Harris WH. Contact stress assessment of conventional and highly crosslinked ultra high molecular weight polyethylene acetabular liners with finite element analysis and pressure sensitive film. J Biomed Mater Res B Appl Biomater. 2007;80:1–10.

Rohrl SM, Li MG, Nilsson KG, Nivbrant B. Very low wear of non-remelted highly cross-linked polyethylene cups: an RSA study lasting up to 6 years. Acta Orthop. 2007;78:739–745.

Salvati EA, Wright TM, Burstein A, Jacobs J. Fracture of polyethylene acetabular cups. J Bone Joint Surg Am. 1979;61:1239–1242.

Shaju KA, Hasan ST, D’Souza LG, McMahon B, Masterson EL. The 22-mm vs the 32-mm femoral head in cemented primary hip arthroplasty long-term clinical and radiological follow-up study. J Arthroplasty. 2005;20:903–908.

Tower SS, Currier JH, Currier BH, Lyford KA, Van Citters DW, Mayor MB. Rim cracking of the cross-linked longevity polyethylene acetabular liner after total hip arthroplasty. J Bone Joint Surg Am. 2007;89:2212–2217.

Acknowledgments

We thank Peter Liao and Don McNulty of DePuy Orthopaedics, Inc., for their valuable contributions to the design of the study and the interpretation of the results.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Additional information

One or more of the authors received funding for this study from DePuy Orthopaedics, Inc, a Johnson & Johnson company, Warsaw, IN (HM) and from the Los Angeles Orthopaedic Hospital Foundation (FWS, HM, ZL). Drs. Shen and McKellop receive royalties for the license of the 5-Mrad crosslinked polyethylene that was the subject of the study. While employees of DePuy (PL, DM) participated in the interpretation of the results, the authors were responsible for the final content of the manuscript.

About this article

Cite this article

Shen, FW., Lu, Z. & McKellop, H.A. Wear versus Thickness and Other Features of 5-Mrad Crosslinked UHMWPE Acetabular Liners. Clin Orthop Relat Res 469, 395–404 (2011). https://doi.org/10.1007/s11999-010-1555-6

DOI: https://doi.org/10.1007/s11999-010-1555-6