Abstract

Background

Predicting the risk of in-hospital mortality on admission is challenging but essential for risk stratification of patient outcomes and designing an appropriate plan-of-care, especially among transferred patients.

Objective

Develop a model that uses administrative and clinical data within 24 h of transfer to predict 30-day in-hospital mortality at an Academic Health Center (AHC).

Design

Retrospective cohort study. We used 30 putative variables in a multiple logistic regression model in the full data set (n = 10,389) to identify 20 candidate variables obtained from the electronic medical record (EMR) within 24 h of admission that were associated with 30-day in-hospital mortality (p < 0.05). These 20 variables were tested using multiple logistic regression and area under the curve (AUC)–receiver operating characteristics (ROC) analysis to identify an optimal risk threshold score in a randomly split derivation sample (n = 5194) which was then examined in the validation sample (n = 5195).

Participants

Ten thousand three hundred eighty-nine patients older than 18 years who were transferred to the Indiana University (IU)–Adult Academic Health Center (AHC) between 1/1/2016 and 12/31/2017.

Main Measures

Sensitivity, specificity, positive predictive value, C-statistic, and risk threshold score of the model.

Key Results

The final model was strongly discriminative (C-statistic = 0.90) and had a good fit (Hosmer-Lemeshow goodness-of-fit test: χ² (8) = 6.26, p = 0.62). The positive predictive value for 30-day in-hospital death was 68%; AUC-ROC was 0.90 (95% confidence interval 0.89–0.92, p < 0.0001). We identified a risk threshold score of −2.19 that maximized the combined sensitivity (79.87%) and specificity (85.24%) in the derivation sample; in the validation sample, this threshold yielded a sensitivity of 75.00% and a specificity of 85.71%. In the validation sample, 34.40% (354/1029) of the patients above this threshold died, compared with only 2.83% (118/4166) of those below it.

Conclusion

This model can use EMR and administrative data within 24 h of transfer to predict the risk of 30-day in-hospital mortality with reasonable accuracy among seriously ill transferred patients.

INTRODUCTION

Identifying patients with serious illness and predicting the risk of in-hospital mortality is challenging,1,2,3,4 especially among a diverse group of patients transferred between facilities for a higher level of care. Early assessment of serious illness and risk of mortality is essential for risk-adjustment for benchmarking5 and for designing an appropriate plan-of-care, including early conversations about patient outcomes and goals of care.3, 4 Transfer patients are known to be sicker, use more resources, and have poorer outcomes.6,7,8,9 They are medically more complex than initially estimated, and inadequate risk stratification can cause inaccurate benchmarking in centers receiving large numbers of transfer patients.10 Clinicians may be challenged by the implications of early risk assessments and, given their own limited prognostic accuracy, biases, and discomfort in the setting of patient emotions, may defer serious illness communications (SIC), leading to goal-incongruent care including intensive care unit (ICU) escalations and interventions with poor outcomes.2, 4 Despite their recognized value, SIC are often delayed until late in the disease trajectory, after all life-sustaining treatments have been exhausted, leading to patient and family dissatisfaction and under-utilization of palliative care and hospice services.4, 11, 12 Recognizing these complexities, clinicians have expressed an interest in adopting evidence-based clinical prediction models to increase their prognostic confidence in end-of-life care.13

Available models predicting mortality are often limited to ICU settings,14 are condition-specific,15,16,17,18 or predict deaths after hospital discharge.19,20,21 Multiple early warning systems (EWS) have been developed that use vital sign abnormalities prior to clinical deterioration to predict in-hospital mortality, but these models are limited by poor sensitivity, poor positive predictive value, and low reproducibility.22,23,24 Using machine learning, a real-time electronic medical record (EMR)–based inpatient mortality EWS was successful in identifying high-risk patients 24–48 h prior to death with improved sensitivity and specificity25; our goal, however, was to identify patients for early interventions at the beginning of the hospitalization, before a crisis occurs. One validated in-hospital mortality model5, 26, 27 has acceptable sensitivity, specificity, and predictive value; it included transferred patients and direct admissions within the same health care system and used the same EMR. We were unable to replicate this approach due to lack of integration of the information systems of transferring facilities into our EMR and variations in hospital practices of the sending facilities.28 A review of existing models demonstrated that a model feasible for our institution did not yet exist.

This study aimed to develop a model using readily available administrative and clinical data gathered from the EMR within 24 h of transfer to identify patients at a high risk of 30-day in-hospital mortality. Our goal is to predict risk for all-cause in-hospital mortality for transferred patients across all levels of care to support clinicians in decision-making and hospitals in risk-adjustment of patient outcomes.5, 26, 28, 29 The development of this model is the first step in the Indiana University (IU) Learning Health System Initiative (IU-LHSI) aimed at improving health care service delivery and outcomes for seriously ill transferred patients by identifying them within 24 h of transfer.1, 30

METHODS

Ethics

The IU Institutional Review Board (IRB) deemed this work to constitute quality improvement (QI) and exempted the study from IRB oversight.

Research Setting

The Indiana University Health (IU Health) System is the largest and most comprehensive health system in Indiana, comprising 18 hospitals and partnering with the Indiana University School of Medicine. The IU-Academic Health Center (IU-AHC), a component of the IU Health System that includes Methodist Hospital and University Hospital, is a large tertiary center with about 50% of acute-to-acute transfers coming from within the IU Health System and from facilities that are not integrated into its EMR. In 2019, IU-AHC had 93,633 emergency department (ED) visits, 14,377 transfers from peripheral hospitals, and 33,849 admissions.

Study Population

We identified 10,389 patients older than 18 years who were transferred from a peripheral ED or other facility to IU-AHC between 1/1/2016 and 12/31/2017. Patients admitted from the IU-AHC ED were excluded.

Data Collection

Administrative data elements recorded within the first 24 h after transfer were obtained, using a data collection sheet (Appendix 1), from the IU Health Data Warehouse, which combines clinical (Cerner) and billing data. Clinical data included vital signs, laboratory values, demographics, home medications, and health care utilization data. Billing data included Medicare Severity–Diagnosis-Related Group (MS-DRG) and International Classification of Diseases, Tenth Revision, Clinical Modification (ICD-10-CM) codes. Diagnosis and visit data from up to 2 years prior to the index admission were obtained from the Indiana Health Information Exchange (IHIE), a statewide data system that connects to over 100 hospitals from 38 different health systems and includes clinical, laboratory, and diagnostic data for more than 15 million patients across Indiana.31, 32

Variables

Based on a PubMed database search using the terms “in-hospital mortality,” “in-hospital deaths,” and “inter-hospital transfers” and after review of other mortality prediction models, we identified 30 putative variables (see Table 1) for inclusion in the mortality model.5, 14, 15, 18, 26 Demographic data included age and gender. Admit type was categorized as emergency, urgent, or routine. The level of admission was defined as the initial location of admission (progressive care unit/PCU, critical care, regular floor). Admitting service was broadly categorized as medicine, surgery, critical care medicine, or obstetrics-gynecology. Utilization data included the total number of encounters within the IU Health system in the 90 days prior to the admission of interest. We used the first recorded vital signs, laboratory values, and Glasgow coma scale. Surgery within 24 h of admission was noted. Polypharmacy was defined as greater than five home medications at the time of the admission of interest33 and was validated with a random check of fifty patient charts for accuracy. Respiratory support in the first 24 h was defined as noninvasive positive pressure ventilation (NIPPV) support or ventilation support. NIPPV included high flow oxygen, bilevel positive airway pressure (BIPAP), or continuous positive airway pressure (CPAP). Vasopressor use in the first 24 h was identified as present or absent. The Charlson comorbidity index (CCI) on the day of the admission of interest was retrospectively constructed from the admission and IHIE data using Deyo ICD-9 codes and their ICD-10 mappings.34 Four laboratory values (bilirubin, albumin, base, INR) had missing data rates >30% and were recorded as 3-level categorical variables (abnormal, normal, or missing). For patients with multiple transfers to IU-AHC during the study period, the admission of interest was defined as the last inpatient encounter.
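As an illustration of two of the variable definitions above, the following minimal Python sketch encodes a laboratory result as a 3-level category and flags polypharmacy as more than five home medications. The function names and the reference range passed in the usage note are hypothetical and are not taken from the study; the actual cut-offs used to label a value abnormal are not stated here.

```python
from typing import Optional, Sequence


def code_lab(value: Optional[float], low: float, high: float) -> str:
    """Encode one lab result as 'missing', 'normal', or 'abnormal'.

    `low` and `high` are a caller-supplied reference range (an assumption;
    the study's own cut-offs are not given in the text).
    """
    if value is None:
        return "missing"
    return "normal" if low <= value <= high else "abnormal"


def has_polypharmacy(home_medications: Sequence[str]) -> bool:
    """Polypharmacy as defined in the text: more than five home medications."""
    return len(home_medications) > 5
```

For example, `code_lab(None, 0.1, 1.2)` returns `"missing"`, so a patient without a recorded value stays in the model as a separate category rather than being dropped; the range `0.1` to `1.2` here is only a placeholder.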

Our main dependent variable was 30-day in-hospital mortality, defined as any inpatient death within 30 days of the admission of interest. Six transferred patients who died in the hospital after more than 30 days of inpatient stay were excluded. Patients who died outside the hospital after discharge were not included in the in-hospital death group, even if the post-discharge death occurred within 30 days.

Statistical Analysis

The 30 variables that were selected from the PubMed search were used in an initial multiple logistic regression model in the full data set to identify any candidate variables that were significantly associated with 30-day in-hospital mortality (p < 0.05) (Table 1). The cohort (n = 10,389) was then randomly divided into derivation (n = 5194) and validation (n = 5195) samples, such that each sample had an equivalent rate of 30-day in-hospital mortality (9.09%). We used the derivation sample to develop the final model and the validation sample to test the accuracy of the final model. The variables that were significant in the initial full sample were compared between the dead and survived groups in the derivation sample; independent t tests were used for continuous variables, and chi-square tests were used for dichotomous variables (Table 2).
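A random split that keeps the mortality rate equivalent in both samples can be sketched as a split stratified on the outcome. The sketch below is one plausible way to do this in Python, not necessarily the procedure or software the authors used; the function name and the `seed` parameter are assumptions.

```python
import random


def stratified_split(outcomes, seed=0):
    """Split record indices into two halves with near-equal event rates.

    outcomes: list of 0/1 flags (1 = died in hospital within 30 days).
    Returns (derivation_indices, validation_indices).
    """
    rng = random.Random(seed)
    derivation, validation = [], []
    # Shuffle and halve survivors and deaths separately so that each
    # sample receives the same share of deaths.
    for label in (0, 1):
        indices = [i for i, y in enumerate(outcomes) if y == label]
        rng.shuffle(indices)
        half = len(indices) // 2
        derivation.extend(indices[:half])
        validation.extend(indices[half:])
    return sorted(derivation), sorted(validation)
```

Applied to a cohort of 10,389 records with 944 deaths, this procedure yields samples of 5194 and 5195 records with 472 deaths each, which matches the sample sizes reported above.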

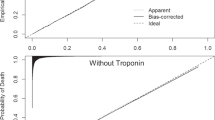

In the next step, variables significantly related to 30-day in-hospital mortality in the full sample were tested in relation to 30-day inpatient death in the derivation sample (Table 3). To evaluate this model, area under the curve–receiver operating characteristics (AUC-ROC) (Fig. 1), sensitivity, specificity, and positive predictive values at varying thresholds of specificity in the derivation sample were inspected (Table 4). We then applied the optimal threshold score (highest combined sensitivity and specificity values) from the derivation model to determine sensitivity and specificity within the validation model (Table 5).
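The threshold selection described above (the score with the highest combined sensitivity and specificity) can be sketched as follows. This is a simplified exhaustive search over observed scores, whereas the study inspected thresholds at selected levels of specificity (Table 4); the function names are hypothetical, and treating a score equal to the threshold as high risk is an assumption.

```python
def sensitivity_specificity(scores, outcomes, threshold):
    """Sensitivity and specificity when score >= threshold is flagged high risk."""
    tp = fn = tn = fp = 0
    for score, died in zip(scores, outcomes):
        flagged = score >= threshold  # assumption: ties count as high risk
        if died and flagged:
            tp += 1
        elif died:
            fn += 1
        elif flagged:
            fp += 1
        else:
            tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one death and one survivor")
    return tp / (tp + fn), tn / (tn + fp)


def optimal_threshold(scores, outcomes):
    """Return the observed score that maximizes sensitivity + specificity."""
    best_score, best_sum = None, -1.0
    for candidate in sorted(set(scores)):
        sens, spec = sensitivity_specificity(scores, outcomes, candidate)
        if sens + spec > best_sum:
            best_score, best_sum = candidate, sens + spec
    return best_score
```

The threshold would be chosen on derivation-sample scores only and then passed unchanged to `sensitivity_specificity` with the validation-sample scores, so that the validation estimates are not tuned to the validation data.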

RESULTS

In our total sample of 944 deaths, 56% of deaths occurred within the first seven days from the date of the transfer. On admission, 64.5% of patients had do not resuscitate (DNR) status, 2.6% had limited code status, 7.2% had full code status, and 25% did not have a documented code status. Among the patients with 30-day in-hospital mortality, only 11% had a palliative care consultation, 4.6% were enrolled in hospice, and 3.4% were transitioned into hospice care after a palliative care consultation. The average time from the admission of interest to a palliative care consultation was 7.37 days, and to a hospice consultation, 9.18 days. Sepsis contributed to 18.64% of deaths; other causes are shown in Appendix 2.

Using a multiple logistic regression model, we identified 20 candidate variables in the full data set that were significantly associated with 30-day in-hospital mortality (p < 0.05) (Table 1). Based on comparisons between the died and survived groups, patients who died were older, had fewer pre-admission encounters within the IU system, were less likely to have surgery, had higher CCI scores, had lower Glasgow coma scale scores, and were more likely to be admitted to a critical care unit with ventilator and vasopressor use (Table 2). Those who died also had higher heart and respiratory rates on admission but slightly lower temperatures, and more often had abnormal labs (Table 2). Fewer patients who died had polypharmacy (Table 2). The 20 candidate variables identified in the full data sample were statistically significant in the derivation sample as well (Table 3).

The final logistic model tested in the derivation sample was strongly discriminative (C-statistic = 0.90) and had a good fit (Hosmer-Lemeshow goodness-of-fit test: χ² (8) = 6.26, p = 0.62). As shown in Figure 1, the AUC for 30-day in-hospital death in the derivation sample was 0.90 (95% confidence interval [0.89, 0.92], p < 0.0001). The median predicted score was −0.59 (range = −5.31, 5.16) for the cases that died and −3.74 (range = −14.77, 3.56) for the cases that survived. The positive predictive value was 68.00% (170/[170 + 80]). Table 4 shows model characteristics in the derivation sample at various levels of specificity. An outcome score of −2.19 (probability = 0.10) had the greatest combined sum of sensitivity (79.87%) and specificity (85.24%). In the validation sample, this threshold score of −2.19 resulted in a sensitivity of 75.00% and specificity of 85.71% (Table 5). At values above this threshold, 34.40% (354/1029) of patients died within 30 days; below this threshold, only 2.83% (118/4166) of the patients died.
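The reported validation figures can be cross-checked from the counts given above. The short Python sketch below assumes that the outcome score is the logit (linear predictor) of the logistic model, which is consistent with a score of −2.19 corresponding to a probability of 0.10; the variable names are illustrative.

```python
import math


def logistic(score: float) -> float:
    """Convert a logit score to a predicted probability."""
    return 1.0 / (1.0 + math.exp(-score))


# Threshold score reported in the text (assumed to be on the logit scale).
threshold_probability = logistic(-2.19)

# Validation-sample counts reported in the text.
deaths_above, total_above = 354, 1029
deaths_below, total_below = 118, 4166

survivors_above = total_above - deaths_above
survivors_below = total_below - deaths_below

# Sensitivity: share of all deaths that scored above the threshold.
sensitivity = deaths_above / (deaths_above + deaths_below)
# Specificity: share of all survivors that scored below the threshold.
specificity = survivors_below / (survivors_below + survivors_above)
# Observed death rates on each side of the threshold.
death_rate_above = deaths_above / total_above
death_rate_below = deaths_below / total_below

print(f"probability at threshold: {threshold_probability:.2f}")
print(f"sensitivity: {sensitivity:.2%}, specificity: {specificity:.2%}")
print(f"death rate above: {death_rate_above:.2%}, below: {death_rate_below:.2%}")
```

The counts reproduce the sensitivity of 75.00%, the specificity of 85.71%, and the death rates of 34.40% and 2.83% stated for the validation sample.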

DISCUSSION

In this study, we developed a model using 20 variables readily available from the administrative and EMR data in the first 24 h of transfer that was strongly predictive of 30-day in-hospital mortality (C-statistic 0.90). This work is a significant contribution as transfer patients have higher inpatient mortality, and very few prediction models for unselected transferred cohorts have been shown to have a high discriminatory ability and clinical utility.28, 35 We found that a threshold score of −2.19 identified a group of patients with a high likelihood of 30-day in-hospital mortality with a reasonable specificity and sensitivity.

Our study differs from prior studies that have developed mortality prediction models solely using EMR-generated variables, as we also included clinical data obtained from the EMR very early after the transfer, CCI scores, and health care utilization estimates obtained from claims data and from health information exchange (HIE) sources.36, 37 Some variables that were associated with in-hospital mortality were those that would be expected to be associated with increased mortality, for example, older age, admission to an intensive care setting, use of vasopressors, intubation, and abnormal laboratory values. Our model may have performed well because of the broader range of information we included to supplement a clinician’s judgment: utilization data, comorbidity scores, and polypharmacy. Some associations were counter-intuitive, including fewer patient encounters prior to transfer and less polypharmacy. Because our sample only included transfer patients, we may have missed utilization events at outside hospitals not participating in the IHIE, which could contribute to a lower number of patient encounters. Alternatively, residence in remote areas or other barriers to health care access could result in fewer patient encounters and untreated underlying medical conditions. We expected polypharmacy would be associated with increased mortality but found that patients with polypharmacy at the time of transfer had a higher survival rate, perhaps reflecting more access to care prior to the admission of interest.38 Abnormal laboratory values were also associated with mortality in largely expected ways, but the analysis of albumin, base, bilirubin, and INR was complicated by the amount of missing data for these four variables (Table 3). Laboratory tests may not have been ordered due to lack of clinical rationale or as an aspect of less aggressive care, or results may have been available from the transferring center and not repeated on admission. We did note a higher risk of mortality among those with missing bilirubin data, and this may suggest that it is prudent to order bilirubin early on admission in clinically relevant patients.

Our model has several potential implications for interventions in patients with serious illness, including identification of high-risk patients for additional clinical review and as a possible trigger for SIC.12 According to a recent national experts’ consensus, the EMR should reduce the burden of data capture on clinicians and hospital systems by meaningful communication of SIC12; the present model could be used for this purpose. A real-time mortality predicting EWS was prospectively validated to predict patients at high risk for inpatient mortality using machine learning methods and identified 40% of patients 24 to 48 h before their death but only 11% of patients 3 to 7 days before death.25 Our aim was not to develop a continuous real-time mortality prediction model but to identify patients at risk for in-hospital death at the beginning of admission to facilitate SIC interventions and target efforts to improve in-hospital mortality outcomes before a crisis occurs. Another study developed an EMR model of 6-month mortality using sophisticated machine learning techniques to trigger early palliative care consults on hospital day 2, increasing palliative care referrals.20 Similarly, we are planning a pilot study to deploy our model as a part of the IU-LHSI project to identify patients at high risk of in-hospital mortality who may benefit from formal SIC interventions, including formal palliative care and hospice consultations as appropriate. Although the absolute percentage of deaths identified with this model is around 34% for those with a score above the threshold, this positive predictive value is reasonable for identifying patients that may benefit from early palliative care and hospice intervention. The need for this type of intervention is evidenced in our study by the low proportion of patients who received palliative and hospice care consultations prior to their death. Because SIC includes shared decision-making after discussing prognostic estimates in the setting of patient expectations, goals, and values,12 and designing a plan-of-care before a crisis occurs, our model is especially desirable as a potential EMR intervention in transferred patients triggered 24 h after admission. Our model is also unique as it illustrates the potential benefit of meaningful use of HIE by facilitating the exchange of medical information relevant to clinical care during transitions of care.39

Our study has some limitations. We were able to predict 30-day in-hospital deaths but were unable to predict earlier in-hospital deaths due to a limited sample size. We do not have a measure of clinician judgment regarding the risk of inpatient mortality at the point of initial care on admission, which could add predictive value. There may be relationships between individual missing labs in specific populations (e.g., patients with sepsis) and the risk of mortality that we were unable to distinguish. Longitudinal health care utilization data from IHIE were obtained to support the analysis, but utilization data from health care systems not participating in IHIE could not be included. Most of the variables used in this model were readily available in the EMR within 24 h of transfer except CCI scores, which may not be available on day 1 of the hospitalization. Our next steps will be to revalidate the model, substituting CCI scores with the readily available “medical problem list” and any other in-system diagnoses or procedures as the source of comorbidity data. This was an observational, retrospective, single-center cohort study among diverse transferred patients, so its generalizability to other specific inpatient populations is yet to be determined. Our model may also be affected by lead-time bias and may have under-predicted mortality in our cohort because we included vital signs recorded after arrival, but despite these potential issues, our model had reasonable accuracy.10, 40 This model was developed and validated prior to the COVID-19 pandemic; its accuracy during a period of increased hospitalization and mortality of seriously ill patients infected with severe acute respiratory syndrome-coronavirus-2 (SARS-CoV-2) will need to be examined. Lastly, the clinical implications of the association between the statistical significance of this model and in-hospital mortality must be interpreted with caution; this model should always be used in the setting of a clinician’s judgment, either to develop a palliative care response team to address SIC early in the hospital course or for clinical documentation improvement efforts.

CONCLUSIONS

Our study shows that using readily available EMR and administrative data from the first 24 h of a hospital transfer is a promising approach to predicting the risk of 30-day in-hospital mortality. This model has an excellent discriminatory ability and clinically acceptable levels of sensitivity, specificity, and positive predictive value. In subsequent work, we plan to use this model early in the hospital course as an EMR trigger to identify seriously ill transferred patients with a high risk of mortality and facilitate timely SIC interventions. We also plan to examine the model’s utility to trigger a check of the severity of illness documentation and coding to improve the accuracy of risk-stratified mortality indices.

References

Kelley AS, Bollens-Lund E. Identifying the Population with Serious Illness: The “Denominator” Challenge. J Palliat Med. 2018;21(S2):S7-S16.

Anderson WG, Kools S, Lyndon A. Dancing around death: hospitalist-patient communication about serious illness. Qual Health Res. 2013;23(1):3-13.

Fail RE, Meier DE. Improving Quality of Care for Seriously Ill Patients: Opportunities for Hospitalists. J Hosp Med. 2018;13(3):194-197.

Bernacki RE, Block SD, American College of Physicians High Value Care Task F. Communication about serious illness care goals: a review and synthesis of best practices. JAMA Intern Med. 2014;174(12):1994-2003.

Escobar GJ, Gardner MN, Greene JD, Draper D, Kipnis P. Risk-adjusting hospital mortality using a comprehensive electronic record in an integrated health care delivery system. Med Care. 2013;51(5):446-453.

Rosenberg LB, Jacobsen JC. Chasing Hope: When Are Requests for Hospital Transfer a Place for Palliative Care Integration? J Hosp Med. 2020;14(4):250-251.

Golestanian E, Scruggs JE, Gangnon RE, Mak RP, Wood KE. Effect of interhospital transfer on resource utilization and outcomes at a tertiary care referral center. Crit Care Med. 2007;35(6):1470-1476.

Ligtenberg JJ, Arnold LG, Stienstra Y, et al. Quality of interhospital transport of critically ill patients: a prospective audit. Crit Care. 2005;9(4):R446-451.

Hill AD, Vingilis E, Martin CM, Hartford K, Speechley KN. Interhospital transfer of critically ill patients: demographic and outcomes comparison with nontransferred intensive care unit patients. J Crit Care. 2007;22(4):290-295.

Holena DN, Wiebe DJ, Carr BG, et al. Lead-Time Bias and Interhospital Transfer after Injury: Trauma Center Admission Vital Signs Underpredict Mortality in Transferred Trauma Patients. J Am Coll Surg. 2017;224(3):255-263.

Kaufman BG, Sueta CA, Chen C, Windham BG, Stearns SC. Are Trends in Hospitalization Prior to Hospice Use Associated With Hospice Episode Characteristics? Am J Hosp Palliat Care. 2017;34(9):860-868.

Sanders JJ, Paladino J, Reaves E, et al. Quality Measurement of Serious Illness Communication: Recommendations for Health Systems Based on Findings from a Symposium of National Experts. J Palliat Med. 2020;23(1):13-21.

Hallen SA, Hootsmans NA, Blaisdell L, Gutheil CM, Han PK. Physicians’ perceptions of the value of prognostic models: the benefits and risks of prognostic confidence. Health Expect. 2015;18(6):2266-2277.

Yang M, Mehta HB, Bali V, et al. Which risk-adjustment index performs better in predicting 30-day mortality? A systematic review and meta-analysis. J Eval Clin Pract. 2015;21(2):292-299.

Ramchandran KJ, Shega JW, Von Roenn J, et al. A predictive model to identify hospitalized cancer patients at risk for 30-day mortality based on admission criteria via the electronic medical record. Cancer. 2013;119(11):2074-2080.

Di MY, Liu H, Yang ZY, Bonis PA, Tang JL, Lau J. Prediction Models of Mortality in Acute Pancreatitis in Adults: A Systematic Review. Ann Intern Med. 2016;165(7):482-490.

Dodson JA, Hajduk AM, Geda M, et al. Predicting 6-Month Mortality for Older Adults Hospitalized With Acute Myocardial Infarction: A Cohort Study. Ann Intern Med. 2020;172(1):12-21.

Adelson K, Lee DKK, Velji S, et al. Development of Imminent Mortality Predictor for Advanced Cancer (IMPAC), a Tool to Predict Short-Term Mortality in Hospitalized Patients With Advanced Cancer. J Oncol Pract. 2018;14(3):e168-e175.

Simmons CPL, McMillan DC, McWilliams K, et al. Prognostic Tools in Patients With Advanced Cancer: A Systematic Review. J Pain Symptom Manage. 2017;53(5):962-970 e910.

Courtright KR, Chivers C, Becker M, et al. Electronic Health Record Mortality Prediction Model for Targeted Palliative Care Among Hospitalized Medical Patients: a Pilot Quasi-experimental Study. J Gen Intern Med. 2019;34(9):1841-1847.

Cowen ME, Strawderman RL, Czerwinski JL, Smith MJ, Halasyamani LK. Mortality predictions on admission as a context for organizing care activities. J Hosp Med. 2013;8(5):229-235.

Churpek MM, Yuen TC, Edelson DP. Risk stratification of hospitalized patients on the wards. Chest. 2013;143(6):1758-1765.

Shiloh AL, Lominadze G, Gong MN, Savel RH. Early Warning/Track-and-Trigger Systems to Detect Deterioration and Improve Outcomes in Hospitalized Patients. Semin Respir Crit Care Med. 2016;37(1):88-95.

Kirkland LL, Malinchoc M, O’Byrne M, et al. A clinical deterioration prediction tool for internal medicine patients. Am J Med Qual. 2013;28(2):135-142.

Ye C, Wang O, Liu M, et al. A Real-Time Early Warning System for Monitoring Inpatient Mortality Risk: Prospective Study Using Electronic Medical Record Data. J Med Internet Res. 2019;21(7):e13719.

Escobar GJ, Greene JD, Scheirer P, Gardner MN, Draper D, Kipnis P. Risk-adjusting hospital inpatient mortality using automated inpatient, outpatient, and laboratory databases. Med Care. 2008;46(3):232-239.

van Walraven C, Escobar GJ, Greene JD, Forster AJ. The Kaiser Permanente inpatient risk adjustment methodology was valid in an external patient population. J Clin Epidemiol. 2010;63(7):798-803.

Grady D, Berkowitz SA. Why is a good clinical prediction rule so hard to find? Arch Intern Med. 2011;171(19):1701-1702.

Siontis GC, Tzoulaki I, Ioannidis JP. Predicting death: an empirical evaluation of predictive tools for mortality. Arch Intern Med. 2011;171(19):1721-1726.

IU-Regenstrief 1 of 11 Awards for Next Generation of Learning Health System Researchers. Regenstrief Institute, Inc. https://www.regenstrief.org/article/iu-regenstrief-ahrq-award/. Published 2018. Updated 9/26/2018. Accessed 9/26/2018.

Grannis SJ, Stevens KC, Merriwether R. Leveraging health information exchange to support public health situational awareness: the indiana experience. Online J Public Health Inform. 2010;2(2).

About IHIE - Indiana Health Information. Indiana Health Information Exchange. https://www.ihie.org/about-us/. Accessed 12/31/2019.

Masnoon N, Shakib S, Kalisch-Ellett L, Caughey GE. What is polypharmacy? A systematic review of definitions. BMC Geriatr. 2017;17(1):230.

Quan H, Sundararajan V, Halfon P, et al. Coding algorithms for defining comorbidities in ICD-9-CM and ICD-10 administrative data. Med Care. 2005;43(11):1130-1139.

Yourman LC, Lee SJ, Schonberg MA, Widera EW, Smith AK. Prognostic indices for older adults: a systematic review. JAMA. 2012;307(2):182-192.

Desai RJ, Wang SV, Vaduganathan M, Evers T, Schneeweiss S. Comparison of Machine Learning Methods With Traditional Models for Use of Administrative Claims With Electronic Medical Records to Predict Heart Failure Outcomes. JAMA Netw Open. 2020;3(1):e1918962.

Pine M, Jordan HS, Elixhauser A, et al. Enhancement of claims data to improve risk adjustment of hospital mortality. JAMA. 2007;297(1):71-76.

Leelakanok N, Holcombe AL, Lund BC, Gu X, Schweizer ML. Association between polypharmacy and death: A systematic review and meta-analysis. J Am Pharm Assoc (2003). 2017;57(6):729-738 e710.

Esmaeilzadeh P, Sambasivan M. Health Information Exchange (HIE): A literature review, assimilation pattern and a proposed classification for a new policy approach. J Biomed Inform. 2016;64:74-86.

Tunnell RD, Millar BW, Smith GB. The effect of lead time bias on severity of illness scoring, mortality prediction and standardised mortality ratio in intensive care--a pilot study. Anaesthesia. 1998;53(11):1045-1053.

Funding

This work was funded by the “Advanced Scholarship Program for Internists in Research and Education,” Indiana University School of Medicine, Indianapolis, IN, USA.

Author information

Authors and Affiliations

Contributions

Ann Cottingham, Richard M. Frankel, PhD, Rachel Gruber, and Regenstrief Institute Inc., Indianapolis, IN, USA.

Ethics declarations

Conflict of Interest

None of the authors have any conflicts of interest to disclose.

Supplementary Information

ESM 1

(DOCX 31 kb)

Cite this article

Mahendraker, N., Flanagan, M., Azar, J. et al. Development and Validation of a 30-Day In-hospital Mortality Model Among Seriously Ill Transferred Patients: a Retrospective Cohort Study. J GEN INTERN MED 36, 2244–2250 (2021). https://doi.org/10.1007/s11606-021-06593-z
