Abstract

The breakthrough brought by generative adversarial networks (GANs) in computer vision (CV) applications has attracted considerable attention across many fields, owing to their ability to capture the distribution of a dataset and generate similar, high-quality images. On the one hand, this technology has been rapidly adopted as an alternative to traditional methods and has opened new perspectives in data augmentation, domain transfer, image expansion, image restoration, image segmentation, and super-resolution. On the other hand, we found that, given the lack of industrial datasets and the difficulty of acquiring and accurately annotating new images, GANs offer a promising way to generate new industrial image datasets or to restore and augment existing ones. We therefore review the latest trends in GAN applications and project them onto industrial use cases. We conducted our experiments with synthetic images and analyzed most GAN failure modes and image artifacts in order to derive best practices for training.


Data availability

Our rendered datasets are available from the corresponding authors upon reasonable request.

Notes

The BigGAN truncation trick consists of using different latent-space distributions when training the generator and when sampling from it at inference time.
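A minimal sketch of the idea in plain Python, assuming the scheme described for BigGAN: latents are drawn from N(0, I) during training, while at inference every latent entry whose magnitude exceeds a threshold is redrawn. The 128-dimensional latent and the threshold of 0.5 are illustrative values, not settings from our experiments.

```python
import random


def sample_training_latent(dim: int, rng: random.Random) -> list[float]:
    """Latent used while training the generator: z ~ N(0, I)."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def sample_truncated_latent(dim: int, threshold: float, rng: random.Random) -> list[float]:
    """Latent used at inference: entries with |z_i| > threshold are redrawn."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    z = []
    for _ in range(dim):
        value = rng.gauss(0.0, 1.0)
        while abs(value) > threshold:  # resample until the entry falls inside the band
            value = rng.gauss(0.0, 1.0)
        z.append(value)
    return z


rng = random.Random(0)
z_train = sample_training_latent(128, rng)
z_infer = sample_truncated_latent(128, threshold=0.5, rng=rng)
print(max(abs(v) for v in z_train), max(abs(v) for v in z_infer))
```

Lowering the threshold trades sample diversity for fidelity; the threshold must be strictly positive, otherwise the resampling loop would never terminate.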

IC-GAN supports two training backbones: BigGAN and StyleGAN2-ADA.

Pre-trained models are models that have already been trained on other datasets.

The larger the dataset, the more harmful the augmentation becomes (Karras et al., 2020a).

These steps are followed by geometric and photometric transformations to augment the dataset.
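The note does not specify which transformations are applied, so the following is only a hypothetical sketch: a random horizontal flip and a random multiple-of-90° rotation as geometric transformations, followed by a brightness/contrast jitter as the photometric one, on a grayscale image stored as nested lists with intensities in [0, 1]. The jitter ranges are assumptions.

```python
import random

Image = list[list[float]]  # grayscale image: rows of intensities in [0, 1]


def hflip(img: Image) -> Image:
    """Geometric: mirror the image left-to-right."""
    return [row[::-1] for row in img]


def rot90_cw(img: Image) -> Image:
    """Geometric: rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def jitter(img: Image, brightness: float, contrast: float) -> Image:
    """Photometric: scale contrast around the mean, shift brightness, clip to [0, 1]."""
    mean = sum(map(sum, img)) / (len(img) * len(img[0]))
    return [[min(1.0, max(0.0, (p - mean) * contrast + mean + brightness)) for p in row]
            for row in img]


def augment(img: Image, rng: random.Random) -> Image:
    """Random flip, random rotation by 0/90/180/270 degrees, then photometric jitter."""
    if rng.random() < 0.5:
        img = hflip(img)
    for _ in range(rng.randrange(4)):
        img = rot90_cw(img)
    return jitter(img, brightness=rng.uniform(-0.1, 0.1), contrast=rng.uniform(0.8, 1.2))


sample = [[0.0, 0.5], [1.0, 0.25]]
print(rot90_cw(sample))  # [[1.0, 0.0], [0.25, 0.5]]
augmented = [augment(sample, random.Random(seed)) for seed in range(4)]
```

Geometric transformations change where content appears while photometric ones change how it looks, which is why the two are usually combined.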

WIT400M dataset (Radford et al., 2021).

The use of strong CLIP guidance in the model can limit the diversity of the generated images and introduce image artifacts (Sauer et al., 2023).

A procedural texture is one generated by an algorithm, instead of being obtained through the time-consuming process of photogrammetry or the error-prone projection used in texture mapping.
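As an illustration of this definition, the following hypothetical Python sketch computes a running-bond brick texture directly from pixel coordinates, so the same function renders the pattern at any resolution without a stored image. The brick layout, mortar width, and gray levels are arbitrary choices for the example.

```python
def brick_texture(width: int, height: int, rows: int = 4, cols: int = 4,
                  mortar: float = 0.08) -> list[list[float]]:
    """Procedurally generate a grayscale brick pattern (1.0 = brick, 0.3 = mortar)."""
    image = []
    for y in range(height):
        v = y / height * rows                # vertical position in brick units
        offset = 0.5 if int(v) % 2 else 0.0  # shift every other course by half a brick
        row = []
        for x in range(width):
            u = x / width * cols + offset    # horizontal position in brick units
            inside_u, inside_v = u % 1.0, v % 1.0  # position within the current brick
            row.append(0.3 if inside_u < mortar or inside_v < mortar else 1.0)
        image.append(row)
    return image


low_res = brick_texture(64, 64)
high_res = brick_texture(256, 256)  # same pattern, more pixels, no extra assets
```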

A tiled texture is one that, when repeated side by side with a copy of itself, displays no visible seam or junction where the two tiles meet.
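One simple way to check this property, sketched here as a heuristic assumption rather than a standard metric: compare the intensity jump across the wrap-around edges (last column to first column, last row to first row) with the average jump between neighboring pixels inside the tile. A ratio close to 1 means the seam is no more visible than any other pixel boundary; a large ratio signals a visible junction.

```python
import math

Image = list[list[float]]


def column_gap(img: Image, a: int, b: int) -> float:
    """Mean absolute intensity difference between columns a and b."""
    return sum(abs(row[a] - row[b]) for row in img) / len(img)


def row_gap(img: Image, a: int, b: int) -> float:
    """Mean absolute intensity difference between rows a and b."""
    return sum(abs(p - q) for p, q in zip(img[a], img[b])) / len(img[0])


def seam_ratio(img: Image) -> float:
    """Wrap-around gap divided by the average interior neighbor gap."""
    h, w = len(img), len(img[0])
    interior = (sum(column_gap(img, x, x + 1) for x in range(w - 1)) / (w - 1)
                + sum(row_gap(img, y, y + 1) for y in range(h - 1)) / (h - 1)) / 2
    seam = (column_gap(img, w - 1, 0) + row_gap(img, h - 1, 0)) / 2
    if interior == 0:
        return 0.0 if seam == 0 else math.inf
    return seam / interior


size = 32
# Integer frequencies make the pattern periodic over the tile, hence tileable.
periodic = [[0.5 + 0.5 * math.sin(2 * math.pi * (3 * x + 5 * y) / size)
             for x in range(size)] for y in range(size)]
# A horizontal ramp jumps from 1 back to 0 at the seam.
ramp = [[x / (size - 1) for x in range(size)] for _ in range(size)]
print(round(seam_ratio(periodic), 3), round(seam_ratio(ramp), 3))  # 1.0 31.0
```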

For technical details, we recommend reading Pang et al.’s review of I2I methods and applications (Pang et al., 2021).

The cycle-consistency loss works best when the domain gap is small, e.g., horses to zebras or summer to winter.
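For reference, the cycle-consistency term popularized by CycleGAN penalizes the distance between an input and its round trip through both generators, λ(‖F(G(x)) − x‖₁ + ‖G(F(y)) − y‖₁). One common explanation for the small-gap preference is that any information G discards cannot be restored by F, so the term resists the large shape changes that wide domain gaps require. The sketch below uses toy, hypothetical generators on plain vectors and a mean absolute error; the weight λ = 10 is an assumed default, not a value from this note.

```python
from typing import Callable

Vector = list[float]
Generator = Callable[[Vector], Vector]


def l1(a: Vector, b: Vector) -> float:
    """Mean absolute error between two vectors."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(G: Generator, F: Generator, xs: list[Vector],
                           ys: list[Vector], weight: float = 10.0) -> float:
    """weight * (mean |F(G(x)) - x| + mean |G(F(y)) - y|) over the given samples."""
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)   # X -> Y -> X
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)  # Y -> X -> Y
    return weight * (forward + backward)


def G(v: Vector) -> Vector:
    """Hypothetical toy generator X -> Y."""
    return [2 * p + 1 for p in v]


def F_exact(v: Vector) -> Vector:
    """Hypothetical Y -> X generator that exactly inverts G."""
    return [(p - 1) / 2 for p in v]


def F_lossy(v: Vector) -> Vector:
    """Hypothetical Y -> X generator that does not invert G."""
    return [p / 2 for p in v]


xs = [[0.0, 1.0], [0.5, 0.25]]
ys = [[1.0, 3.0]]
print(cycle_consistency_loss(G, F_exact, xs, ys))  # 0.0
print(cycle_consistency_loss(G, F_lossy, xs, ys))  # 15.0
```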

E.g., the camera can be mounted on a transport robot or a moving robot arm.

The ‘+’ sign normally denotes that model results are improved (Li et al., 2021).

A GAN may succeed in generating some classes while failing to cover all the samples of other classes.
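One way to expose this class-dependent failure, sketched here as an assumption rather than the procedure used in our experiments, is to compute the coverage metric of Naeem et al. (2020) separately for each class: a real sample counts as covered if at least one generated sample lies inside the ball whose radius is the distance to its k-th nearest real neighbor. A class with low coverage is one whose real samples the GAN fails to reach. The 2-D points below are toy data; in practice they would be feature embeddings from a pretrained network.

```python
import math

Point = tuple[float, ...]


def knn_radii(real: list[Point], k: int) -> list[float]:
    """Distance from each real sample to its k-th nearest other real sample."""
    radii = []
    for i, p in enumerate(real):
        distances = sorted(math.dist(p, q) for j, q in enumerate(real) if j != i)
        radii.append(distances[k - 1])
    return radii


def coverage(real: list[Point], fake: list[Point], k: int) -> float:
    """Fraction of real samples with at least one fake sample inside their k-NN ball."""
    radii = knn_radii(real, k)
    covered = sum(1 for p, r in zip(real, radii) if any(math.dist(p, f) < r for f in fake))
    return covered / len(real)


def per_class_coverage(real_by_class: dict, fake_by_class: dict, k: int = 2) -> dict:
    """Coverage computed independently for every class of real samples."""
    return {c: coverage(real, fake_by_class.get(c, []), k) for c, real in real_by_class.items()}


real_by_class = {
    "A": [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)],
    "B": [(10.0, 0.0), (11.0, 0.0), (12.0, 0.0), (13.0, 0.0)],
}
fake_by_class = {
    "A": [(0.1, 0.0), (1.1, 0.0), (2.1, 0.0), (3.1, 0.0)],  # spread over the class
    "B": [(10.1, 0.0)],                                      # stuck near one mode
}
print(per_class_coverage(real_by_class, fake_by_class))  # {'A': 1.0, 'B': 0.5}
```

Here k must be smaller than the number of real samples in each class.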

A modified version of MSG-GAN was developed to generate a mipmap (Williams, 1983) instead of a single image. In computer graphics, mipmaps (or pyramids) are series of precomputed, optimized images, each representing the previous image at a progressively lower resolution.
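To make the mipmap definition concrete, the following sketch builds a mipmap chain the conventional way, by repeatedly averaging 2×2 pixel blocks (a box filter) until a single pixel remains. It assumes a square grayscale image whose side is a power of two, and it only illustrates the data structure, not the modified MSG-GAN that outputs it.

```python
Image = list[list[float]]


def downsample(img: Image) -> Image:
    """Halve the resolution by averaging each 2x2 block (box filter)."""
    half = len(img) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4
             for x in range(half)]
            for y in range(half)]


def build_mipmap(img: Image) -> list[Image]:
    """Return [level 0 (full resolution), level 1 (half resolution), ..., 1x1]."""
    size = len(img)
    if size == 0 or size & (size - 1) or any(len(row) != size for row in img):
        raise ValueError("expected a square image with a power-of-two side")
    levels = [img]
    while len(levels[-1]) > 1:
        levels.append(downsample(levels[-1]))
    return levels


base = [[float(4 * y + x) for x in range(4)] for y in range(4)]  # values 0..15
pyramid = build_mipmap(base)
print([len(level) for level in pyramid])  # [4, 2, 1]
print(pyramid[1])  # [[2.5, 4.5], [10.5, 12.5]]
print(pyramid[2])  # [[7.5]]
```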

Training was executed on an NVIDIA DGX-1 with 8 Tesla V100 GPUs.

References

Abbas, A., Jain, S., Gour, M., et al. (2021). Tomato plant disease detection using transfer learning with c-gan synthetic images. Computers and Electronics in Agriculture, 187, 106279.

Abou Akar, C., Tekli, J., Jess, D., et al. (2022). Synthetic object recognition dataset for industries. In 2022 35th SIBGRAPI conference on graphics, patterns and images (SIBGRAPI) (pp. 150–155). IEEE.

ajbrock. (2019). BigGAN-PyTorch. https://github.com/ajbrock/BigGAN-PyTorch. Accessed February 08, 2022.

Alaa, A., Van Breugel, B., Saveliev, E. S., et al. (2022). How faithful is your synthetic data? Sample-level metrics for evaluating and auditing generative models. In International conference on machine learning, PMLR (pp. 290–306).

Alaluf, Y., Patashnik, O., Wu, Z., et al. (2022). Third time’s the charm? Image and video editing with stylegan3. arXiv preprint arXiv:2201.13433.

Alami Mejjati, Y., Richardt, C., Tompkin, J., et al. (2018). Unsupervised attention-guided image-to-image translation. In Advances in neural information processing systems, 31.

Alanov, A., Kochurov, M., Volkhonskiy, D., et al. (2019). User-controllable multi-texture synthesis with generative adversarial networks. arXiv preprint arXiv:1904.04751.

Almahairi, A., Rajeswar, S., Sordoni, A., et al. (2018). Augmented cyclegan: Learning many-to-many mappings from unpaired data. In International conference on machine learning, PMLR (pp. 195–204).

Amodio, M., & Krishnaswamy, S. (2019). Travelgan: Image-to-image translation by transformation vector learning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 8983–8992).

Anwar, S., Khan, S., & Barnes, N. (2020). A deep journey into super-resolution: A survey. ACM Computing Surveys (CSUR), 53(3), 1–34.

Arjovsky, M., & Bottou, L. (2017). Towards principled methods for training generative adversarial networks. arXiv preprint arXiv:1701.04862.

Arjovsky, M., Chintala, S., & Bottou, L. (2017). Wasserstein generative adversarial networks. In International conference on machine learning, PMLR (pp. 214–223).

Armandpour, M., Sadeghian, A., Li, C., et al. (2021). Partition-guided gans. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5099–5109).

Arora, S., & Zhang, Y. (2017). Do gans actually learn the distribution? An empirical study. arXiv preprint arXiv:1706.08224.

Ashok, K., Boddu, R., Syed, S. A., et al. (2023). Gan base feedback analysis system for industrial iot networks. Automatika, 64(2), 259–267.

Azulay, A., & Weiss, Y. (2018). Why do deep convolutional networks generalize so poorly to small image transformations? arXiv preprint arXiv:1805.12177.

Bai, C. Y., Lin, H. T., Raffel, C., et al. (2021). On training sample memorization: Lessons from benchmarking generative modeling with a large-scale competition. In Proceedings of the 27th ACM SIGKDD conference on knowledge discovery & data mining (pp. 2534–2542).

Balakrishnan, G., Zhao, A., Dalca, A. V., et al. (2018). Synthesizing images of humans in unseen poses. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 8340–8348).

Baldvinsson, J. R., Ganjalizadeh, M., AlAbbasi, A., et al. (2022). Il-gan: Rare sample generation via incremental learning in gans. In GLOBECOM 2022-2022 IEEE global communications conference (pp. 621–626). IEEE.

Bansal, A., Sheikh, Y., & Ramanan, D. (2017). Pixelnn: Example-based image synthesis. arXiv preprint arXiv:1708.05349.

Bao, J., Chen, D., Wen, F., et al. (2017). Cvae-gan: Fine-grained image generation through asymmetric training. In Proceedings of the IEEE international conference on computer vision (pp. 2745–2754).

Barannikov, S., Trofimov, I., Sotnikov, G., et al. (2021). Manifold topology divergence: A framework for comparing data manifolds. In Advances in neural information processing systems, 34.

Barua, S., Ma, X., Erfani, S. M., et al. (2019). Quality evaluation of gans using cross local intrinsic dimensionality. arXiv preprint arXiv:1905.00643.

Bashir, S. M. A., Wang, Y., Khan, M., et al. (2021). A comprehensive review of deep learning-based single image super-resolution. PeerJ Computer Science, 7, e621.

Bau, D., Zhu, J. Y., Wulff, J., et al. (2019). Seeing what a gan cannot generate. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 4502–4511).

Benaim, S., & Wolf, L. (2017). One-sided unsupervised domain mapping. In Advances in neural information processing systems, 30.

Benaim, S., & Wolf, L. (2018). One-shot unsupervised cross domain translation. In Advances in neural information processing systems, 31.

Bergmann, U., Jetchev, N., & Vollgraf, R. (2017). Learning texture manifolds with the periodic spatial gan. arXiv preprint arXiv:1705.06566.

Bernsen, N. O. (2008). Multimodality theory. In Multimodal user interfaces (pp. 5–29). Springer.

Bhagwatkar, R., Bachu, S., Fitter, K., et al. (2020). A review of video generation approaches. In 2020 international conference on power, instrumentation, control and computing (PICC) (pp. 1–5). IEEE.

Bińkowski, M., Sutherland, D. J., Arbel, M., et al. (2018). Demystifying mmd gans. arXiv preprint arXiv:1801.01401.

Bora, A., Price, E., & Dimakis, A. G. (2018). Ambientgan: Generative models from lossy measurements. In International conference on learning representations.

Borji, A. (2022). Pros and cons of gan evaluation measures: New developments. Computer Vision and Image Understanding, 215, 103329.

Bougaham, A., Bibal, A., Linden, I., et al. (2021). Ganodip-gan anomaly detection through intermediate patches: A pcba manufacturing case. In Third international workshop on learning with imbalanced domains: Theory and applications, PMLR (pp. 104–117).

Boulahbal, H. E., Voicila, A., & Comport, A. I. (2021). Are conditional GANs explicitly conditional? In British machine vision conference, virtual, United Kingdom. https://hal.science/hal-03454522

Brock, A., Donahue, J., & Simonyan, K. (2018). Large scale gan training for high fidelity natural image synthesis. arXiv preprint arXiv:1809.11096.

Brownlee, J. (2019a). A Gentle Introduction to BigGAN the Big Generative Adversarial Network. https://machinelearningmastery.com/a-gentle-introduction-to-the-biggan/. Accessed February 08, 2022.

Brownlee, J. (2019b). How to identify and diagnose GAN failure modes. https://machinelearningmastery.com/practical-guide-to-gan-failure-modes/. Accessed May 18, 2022.

Brownlee, J. (2019c). How to Implement the Inception Score (IS) for Evaluating GANs. https://machinelearningmastery.com/how-to-implement-the-inception-score-from-scratch-for-evaluating-generated-images/. Accessed May 28, 2022.

Cai, Y., Wang, X., Yu, Z., et al. (2019). Dualattn-gan: Text to image synthesis with dual attentional generative adversarial network. IEEE Access, 7, 183706–183716.

Cai, Z., Xiong, Z., Xu, H., et al. (2021). Generative adversarial networks: A survey toward private and secure applications. ACM Computing Surveys (CSUR), 54(6), 1–38.

Cao, G., Zhao, Y., Ni, R., et al. (2011). Unsharp masking sharpening detection via overshoot artifacts analysis. IEEE Signal Processing Letters, 18(10), 603–606.

Cao, J., Katzir, O., Jiang, P., et al. (2018). Dida: Disentangled synthesis for domain adaptation. arXiv preprint arXiv:1805.08019.

Casanova, A., Careil, M., Verbeek, J., et al. (2021). Instance-conditioned gan. In Advances in neural information processing systems, 34.

Castillo, C., De, S., Han, X., et al. (2017). Son of zorn’s lemma: Targeted style transfer using instance-aware semantic segmentation. In 2017 IEEE international conference on acoustics, speech and signal processing (ICASSP) (pp. 1348–1352). IEEE.

Chang, H. Y., Wang, Z., & Chuang, Y. Y. (2020). Domain-specific mappings for generative adversarial style transfer. In European conference on computer vision (pp. 573–589). Springer.

Chen, H., Liu, J., Chen, W., et al. (2022a). Exemplar-based pattern synthesis with implicit periodic field network. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 3708–3717).

Chen, T., Zhang, Y., Huo, X., et al. (2022b). Sphericgan: Semi-supervised hyper-spherical generative adversarial networks for fine-grained image synthesis. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 10001–10010).

Chen, X., & Jia, C. (2021). An overview of image-to-image translation using generative adversarial networks. In International conference on pattern recognition (pp. 366–380). Springer.

Chen, X., Duan, Y., Houthooft, R., et al. (2016). Infogan: Interpretable representation learning by information maximizing generative adversarial nets. In Advances in neural information processing systems, 29.

Chen, X., Xu, C., Yang, X., et al. (2018). Attention-gan for object transfiguration in wild images. In Proceedings of the European conference on computer vision (ECCV) (pp. 164–180).

Choi, Y., Choi, M., Kim, M., et al. (2018). Stargan: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 8789–8797).

Chong, M. J., & Forsyth, D. (2020). Effectively unbiased fid and inception score and where to find them. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 6070–6079).

Chu, C., Zhmoginov, A., & Sandler, M. (2017). Cyclegan, a master of steganography. arXiv preprint arXiv:1712.02950.

Cohen, T., & Wolf, L. (2019). Bidirectional one-shot unsupervised domain mapping. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 1784–1792).

CompVis. (2022). Stable diffusion model card. https://github.com/CompVis/stable-diffusion/blob/main/Stable_Diffusion_v1_Model_Card.md. Accessed January 24, 2023.

Cordts, M., Omran, M., Ramos, S., et al. (2015). The cityscapes dataset. In CVPR workshop on the future of datasets in vision.

Cunningham, P., Cord, M., & Delany, S. J. (2008). Supervised learning. In Machine learning techniques for multimedia (pp. 21–49). Springer.

Deng, J., Dong, W., Socher, R., et al. (2009). Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition (pp. 248–255). IEEE.

Deng, L. (2012). The mnist database of handwritten digit images for machine learning research. IEEE Signal Processing Magazine, 29(6), 141–142.

Denton, E. L., Chintala, S., Szlam, A., et al. (2015). Deep generative image models using a Laplacian pyramid of adversarial networks. In Advances in neural information processing systems, 28.

Denton, E. L., & Birodkar, V. (2017). Unsupervised learning of disentangled representations from video. In Advances in neural information processing systems, 30.

Ding, M., Yang, Z., Hong, W., et al. (2021). Cogview: Mastering text-to-image generation via transformers. In Advances in neural information processing systems, 34.

Dong, C., Kumar, A., & Liu, E. (2022). Think twice before detecting gan-generated fake images from their spectral domain imprints. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 7865–7874).

Dumoulin, V., Shlens, J., & Kudlur, M. (2016). A learned representation for artistic style. arXiv preprint arXiv:1610.07629.

Dumoulin, V., Perez, E., Schucher, N., et al. (2018). Feature-wise transformations. Distill, 3(7), e11.

Durall, R., Chatzimichailidis, A., Labus, P., et al. (2020). Combating mode collapse in gan training: An empirical analysis using hessian eigenvalues. arXiv preprint arXiv:2012.09673.

Eckerli, F., & Osterrieder, J. (2021). Generative adversarial networks in finance: An overview. arXiv preprint arXiv:2106.06364.

Esser, P., Sutter, E., & Ommer, B. (2018). A variational u-net for conditional appearance and shape generation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 8857–8866).

Facebook Research. (2021). IC-GAN: Instance-Conditioned GAN. https://github.com/facebookresearch/ic_gan. Accessed February 08, 2022.

Farajzadeh-Zanjani, M., Razavi-Far, R., Saif, M., et al. (2022). Generative adversarial networks: A survey on training, variants, and applications. In Generative adversarial learning: Architectures and applications (pp. 7–29). Springer.

Frühstück, A., Alhashim, I., & Wonka, P. (2019). Tilegan: Synthesis of large-scale non-homogeneous textures. ACM Transactions on Graphics (ToG), 38(4), 1–11.

Fu, H., Gong, M., Wang, C., et al. (2019). Geometry-consistent generative adversarial networks for one-sided unsupervised domain mapping. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 2427–2436).

Gatys, L. A., Ecker, A. S., & Bethge, M. (2015a). Texture synthesis using convolutional neural networks. In Advances in neural information processing systems, 28.

Gatys, L. A., Ecker, A. S., & Bethge, M. (2015b). A neural algorithm of artistic style. arXiv preprint arXiv:1508.06576.

Gatys, L. A., Bethge, M., Hertzmann, A., et al. (2016a). Preserving color in neural artistic style transfer. arXiv preprint arXiv:1606.05897.

Gatys, L. A., Ecker, A. S., & Bethge, M. (2016b). Image style transfer using convolutional neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 2414–2423).

Geyer, J., Kassahun, Y., Mahmudi, M., et al. (2020). A2d2: Audi autonomous driving dataset. arXiv preprint arXiv:2004.06320.

Ghiasi, G., Lee, H., Kudlur, M., et al. (2017). Exploring the structure of a real-time, arbitrary neural artistic stylization network. arXiv preprint arXiv:1705.06830.

Glorot, X., & Bengio, Y. (2010). Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the thirteenth international conference on artificial intelligence and statistics, JMLR Workshop and Conference Proceedings (pp. 249–256).

GM, H., Sahu, A., & Gourisaria, M. K. (2021). Gm score: Incorporating inter-class and intra-class generator diversity, discriminability of disentangled representation, and sample fidelity for evaluating gans. arXiv preprint arXiv:2112.06431.

Gokaslan, A., Ramanujan, V., Ritchie, D., et al. (2018). Improving shape deformation in unsupervised image-to-image translation. In Proceedings of the European conference on computer vision (ECCV) (pp. 649–665).

Gomi, T., Sakai, R., Hara, H., et al. (2021). Usefulness of a metal artifact reduction algorithm in digital tomosynthesis using a combination of hybrid generative adversarial networks. Diagnostics, 11(9), 1629.

Gonzalez-Garcia, A., Van De Weijer, J., & Bengio, Y. (2018). Image-to-image translation for cross-domain disentanglement. In Advances in neural information processing systems, 31.

Goodfellow, I. (2016). Nips 2016 tutorial: Generative adversarial networks. arXiv preprint arXiv:1701.00160.

Goodfellow, I., Pouget-Abadie, J., Mirza, M., et al. (2014). Generative adversarial nets. In Advances in neural information processing systems, 27.

Google Developers. (2022). GAN Training. https://developers.google.com/machine-learning/gan/training. Accessed June 05, 2022.

Gu, S., Zhang, R., Luo, H., et al. (2021). Improved singan integrated with an attentional mechanism for remote sensing image classification. Remote Sensing, 13(9), 1713.

Gulrajani, I., Ahmed, F., Arjovsky, M., et al. (2017). Improved training of wasserstein gans. In Advances in neural information processing systems, 30.

Guo, X., Wang, Z., Yang, Q., et al. (2020). Gan-based virtual-to-real image translation for urban scene semantic segmentation. Neurocomputing, 394, 127–135.

Gupta, R. K., Mahajan, S., & Misra, R. (2023). Resource orchestration in network slicing using gan-based distributional deep q-network for industrial applications. The Journal of Supercomputing, 79(5), 5109–5138.

Härkönen, E., Hertzmann, A., Lehtinen, J., et al. (2020). Ganspace: Discovering interpretable gan controls. Advances in Neural Information Processing Systems, 33, 9841–9850.

Hasan, M., Dipto, A. Z., Islam, M. S., et al. (2019). A smart semi-automated multifarious surveillance bot for outdoor security using thermal image processing. Advances in Networks, 7(2), 21–28.

Hatanaka, S., & Nishi, H. (2021). Efficient gan-based unsupervised anomaly sound detection for refrigeration units. In 2021 IEEE 30th international symposium on industrial electronics (ISIE) (pp. 1–7). IEEE.

He, K., Zhang, X., Ren, S., et al. (2015). Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE international conference on computer vision (pp. 1026–1034).

He, K., Zhang, X., Ren, S., et al. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770–778).

He, M., Chen, D., Liao, J., et al. (2018). Deep exemplar-based colorization. ACM Transactions on Graphics (TOG), 37(4), 1–16.

Heusel, M., Ramsauer, H., Unterthiner, T., et al. (2017). Gans trained by a two time-scale update rule converge to a local Nash equilibrium. In Advances in neural information processing systems, 30.

Hindistan, Y. S., & Yetkin, E. F. (2023). A hybrid approach with gan and dp for privacy preservation of iiot data. IEEE Access.

Huang, K., Wang, Y., Tao, M., et al. (2020). Why do deep residual networks generalize better than deep feedforward networks? A neural tangent kernel perspective. Advances in Neural Information Processing Systems, 33, 2698–2709.

Huang, X., & Belongie, S. (2017). Arbitrary style transfer in real-time with adaptive instance normalization. In Proceedings of the IEEE international conference on computer vision (pp. 1501–1510).

Huang, X., Liu, M. Y., Belongie, S., et al. (2018). Multimodal unsupervised image-to-image translation. In Proceedings of the European conference on computer vision (ECCV) (pp. 172–189).

Huang, X., Mallya, A., Wang, T. C., et al. (2022). Multimodal conditional image synthesis with product-of-experts gans. In European conference on computer vision (pp. 91–109). Springer.

IBM Cloud Education. (2020). Supervised Learning. https://www.ibm.com/cloud/learn/supervised-learning. Accessed June 1, 2022.

Isola, P., Zhu, J. Y., Zhou, T., et al. (2017). Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1125–1134).

Jacques, S., & Christe, B. (2020). Chapter 2—Healthcare technology basics. In S. Jacques & B. Christe (Eds.), Introduction to clinical engineering (pp. 21–50). London: Academic Press. https://doi.org/10.1016/B978-0-12-818103-4.00002-8

Jayram, T., Marois, V., Kornuta, T., et al. (2019). Transfer learning in visual and relational reasoning. arXiv preprint arXiv:1911.11938.

Jelinek, F., Mercer, R. L., Bahl, L. R., et al. (1977). Perplexity—A measure of the difficulty of speech recognition tasks. The Journal of the Acoustical Society of America, 62(S1), S63–S63.

Jetchev, N., Bergmann, U., & Vollgraf, R. (2016). Texture synthesis with spatial generative adversarial networks. arXiv preprint arXiv:1611.08207.

Jing, Y., Yang, Y., Feng, Z., et al. (2019). Neural style transfer: A review. IEEE Transactions on Visualization and Computer Graphics, 26(11), 3365–3385.

Johnson, D. H. (2006). Signal-to-noise ratio. Scholarpedia, 1(12), 2088.

Johnson, J., Alahi, A., & Fei-Fei, L. (2016). Perceptual losses for real-time style transfer and super-resolution. In European conference on computer vision (pp. 694–711). Springer.

Joo, D., Kim, D., & Kim, J. (2018). Generating a fusion image: One’s identity and another’s shape. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1635–1643).

Junyanz. (2017). PyTorch CycleGAN and Pix2Pix. https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/docs/datasets.md. Accessed January 25, 2023.

Karlinsky, L., Shtok, J., Tzur, Y., et al. (2017). Fine-grained recognition of thousands of object categories with single-example training. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4113–4122).

Karnewar, A., & Wang, O. (2020). Msg-gan: Multi-scale gradients for generative adversarial networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 7799–7808).

Karras, T., Aila, T., Laine, S., et al. (2017). Progressive growing of gans for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196.

Karras, T., Laine, S., & Aila, T. (2019). A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4401–4410).

Karras, T., Aittala, M., Hellsten, J., et al. (2020a). Training generative adversarial networks with limited data. Advances in Neural Information Processing Systems, 33, 12104–12114.

Karras, T., Laine, S., Aittala, M., et al. (2020b). Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 8110–8119).

Karras, T., Aittala, M., Laine, S., et al. (2021). Alias-free generative adversarial networks. In Advances in neural information processing systems, 34.

Kaymak, Ç., & Uçar, A. (2019). A brief survey and an application of semantic image segmentation for autonomous driving. In Handbook of deep learning applications (pp. 161–200). Springer.

Kazemi, H., Soleymani, S., Taherkhani, F., et al. (2018). Unsupervised image-to-image translation using domain-specific variational information bound. In Advances in neural information processing systems, 31.

Khrulkov, V., & Oseledets, I. (2018). Geometry score: A method for comparing generative adversarial networks. In International conference on machine learning, PMLR (pp. 2621–2629).

Kilgour, K., Zuluaga, M., Roblek, D., et al. (2019). Fréchet audio distance: A reference-free metric for evaluating music enhancement algorithms. In INTERSPEECH (pp. 2350–2354).

Kim, D. W., Ryun Chung, J., & Jung, S. W. (2019a). Grdn: Grouped residual dense network for real image denoising and gan-based real-world noise modeling. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops.

Kim, H., & Mnih, A. (2018). Disentangling by factorising. In International conference on machine learning, PMLR (pp. 2649–2658).

Kim, J., & Park, H. (2022). Limited discriminator gan using explainable ai model for overfitting problem. ICT Express.

Kim, J., Kim, M., Kang, H., et al. (2019b). U-gat-it: Unsupervised generative attentional networks with adaptive layer-instance normalization for image-to-image translation. arXiv preprint arXiv:1907.10830.

Kim, T., Cha, M., Kim, H., et al. (2017). Learning to discover cross-domain relations with generative adversarial networks. In International conference on machine learning, PMLR (pp. 1857–1865).

kligvasser. (2021). SinGAN. https://github.com/kligvasser/SinGAN. Accessed March 29, 2022.

Koshino, K., Werner, R. A., Pomper, M. G., et al. (2021). Narrative review of generative adversarial networks in medical and molecular imaging. Annals of Translational Medicine, 9(9).

Kupyn, O., Martyniuk, T., Wu, J., et al. (2019). Deblurgan-v2: Deblurring (orders-of-magnitude) faster and better. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 8878–8887).

Ledig, C., Theis, L., Huszár, F., et al. (2017). Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4681–4690).

Lee, H. Y., Tseng, H. Y., Huang, J. B., et al. (2018). Diverse image-to-image translation via disentangled representations. In Proceedings of the European conference on computer vision (ECCV) (pp. 35–51).

Li, B., Zou, Y., Zhu, R., et al. (2022). Fabric defect segmentation system based on a lightweight gan for industrial internet of things. Wireless Communications and Mobile Computing.

Li, C., & Wand, M. (2016). Precomputed real-time texture synthesis with Markovian generative adversarial networks. In European conference on computer vision (pp. 702–716). Springer.

Li, K., Yang, S., Dong, R., et al. (2020a). Survey of single image super-resolution reconstruction. IET Image Processing, 14(11), 2273–2290.

Li, M., Huang, H., Ma, L., et al. (2018). Unsupervised image-to-image translation with stacked cycle-consistent adversarial networks. In Proceedings of the European conference on computer vision (ECCV) (pp. 184–199).

Li, M., Ye, C., & Li, W. (2019). High-resolution network for photorealistic style transfer. arXiv preprint arXiv:1904.11617.

Li, R., Cao, W., Jiao, Q., et al. (2020b). Simplified unsupervised image translation for semantic segmentation adaptation. Pattern Recognition, 105, 107343.

Li, Y., Fang, C., Yang, J., et al. (2017). Diversified texture synthesis with feed-forward networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3920–3928).

Li, Y., Singh, K. K., Ojha, U., et al. (2020c). Mixnmatch: Multifactor disentanglement and encoding for conditional image generation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 8039–8048).

Li, Y., Zhang, R., Lu, J., et al. (2020d). Few-shot image generation with elastic weight consolidation. arXiv preprint arXiv:2012.02780.

Li, Y., Sixou, B., & Peyrin, F. (2021). A review of the deep learning methods for medical images super resolution problems. IRBM, 42(2), 120–133.

Likas, A., Vlassis, N., & Verbeek, J. J. (2003). The global k-means clustering algorithm. Pattern Recognition, 36(2), 451–461.

Lin, J., Xia, Y., Qin, T., et al. (2018). Conditional image-to-image translation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5524–5532).

Lin, J., Wang, Y., He, T., et al. (2019). Learning to transfer: Unsupervised meta domain translation. arXiv preprint arXiv:1906.00181.

Lin, J., Pang, Y., Xia, Y., et al. (2020). Tuigan: Learning versatile image-to-image translation with two unpaired images. In European conference on computer vision (pp. 18–35). Springer.

Lin, T. Y., Maire, M., Belongie, S., et al. (2014). Microsoft coco: Common objects in context. In European conference on computer vision (pp. 740–755). Springer.

Liu, G., Taori, R., Wang, T. C., et al. (2020). Transposer: Universal texture synthesis using feature maps as transposed convolution filter. arXiv preprint arXiv:2007.07243.

Liu, H., Cao, S., Ling, Y., et al. (2021). Inpainting for saturation artifacts in optical coherence tomography using dictionary-based sparse representation. IEEE Photonics Journal, 13(2).

Liu, M. Y., Breuel, T., & Kautz, J. (2017). Unsupervised image-to-image translation networks. In Advances in neural information processing systems, 30.

Liu, Z., Liu, C., Shum, H. Y., et al. (2002). Pattern-based texture metamorphosis. In 2002 Proceedings of the 10th Pacific conference on computer graphics and applications (pp. 184–191). IEEE.

Liu, Z., Li, M., Zhang, Y., et al. (2023). Fine-grained face swapping via regional gan inversion. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 8578–8587).

Long, J., Shelhamer, E., & Darrell, T. (2015). Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3431–3440).

Lorenz, D., Bereska, L., & Milbich, T., et al. (2019). Unsupervised part-based disentangling of object shape and appearance. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 10,955–10,964).

Luan, F., Paris, S., & Shechtman, E., et al. (2017). Deep photo style transfer. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4990–4998).

Luhman, T., & Luhman, E. (2023). High fidelity image synthesis with deep vaes in latent space. arXiv preprint arXiv:2303.13714

Ma, L., Jia, X., & Georgoulis, S., et al. (2018a). Exemplar guided unsupervised image-to-image translation with semantic consistency. arXiv preprint arXiv:1805.11145

Ma, L., Sun, Q., & Georgoulis, S., et al. (2018b). Disentangled person image generation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 99–108).

Mao, Q., Lee, H. Y., & Tseng, H. Y., et al. (2019). Mode seeking generative adversarial networks for diverse image synthesis. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 1429–1437).

Mao, X., Li, Q., & Xie, H., et al. (2017). Least squares generative adversarial networks. In Proceedings of the IEEE international conference on computer vision (pp. 2794–2802).

Maqsood, S., & Javed, U. (2020). Multi-modal medical image fusion based on two-scale image decomposition and sparse representation. Biomedical Signal Processing and Control, 57(101), 810.

Mathiasen, A., & Hvilshøj, F. (2020). Backpropagating through Fréchet inception distance. arXiv preprint arXiv:2009.14075

McCloskey, S., & Albright, M. (2019). Detecting gan-generated imagery using saturation cues. In 2019 IEEE international conference on image processing (ICIP) (pp. 4584–4588). IEEE.

Meta AI. (2021). Building AI that can generate images of things it has never seen before. https://ai.facebook.com/blog/instance-conditioned-gans/. Accessed February 9, 2022.

Mirza, M., & Osindero, S. (2014). Conditional generative adversarial nets. arXiv preprint arXiv:1411.1784

Mittal, T. (2019). Tips On Training Your GANs Faster and Achieve Better Results. https://medium.com/intel-student-ambassadors/tips-on-training-your-gans-faster-and-achieve-better-results-9200354acaa5. Accessed May 18, 2022.

Miyato, T., Kataoka, T., & Koyama, M., et al. (2018). Spectral normalization for generative adversarial networks. arXiv preprint arXiv:1802.05957

Mo, S., Cho, M., & Shin, J. (2018). Instagan: Instance-aware image-to-image translation. arXiv preprint arXiv:1812.10889

Mo, S., Cho, M., & Shin, J. (2020). Freeze the discriminator: a simple baseline for fine-tuning gans. arXiv preprint arXiv:2002.10964

Mordvintsev, A., Olah, C., & Tyka, M. (2015). Inceptionism: Going deeper into neural networks. http://blog.research.google/2015/06/inceptionism-going-deeper-into-neural.html

Murez, Z., Kolouri, S., & Kriegman, D., et al. (2018). Image to image translation for domain adaptation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4500–4509).

Mustafa, A., & Mantiuk, R. K. (2020). Transformation consistency regularization—a semi-supervised paradigm for image-to-image translation. In European conference on computer vision (pp. 599–615). Springer.

Naeem, M. F., Oh, S. J., & Uh, Y., et al. (2020). Reliable fidelity and diversity metrics for generative models. In International conference on machine learning, PMLR (pp. 7176–7185).

Nakano, R. (2018). Arbitrary style transfer in TensorFlow.js. https://magenta.tensorflow.org/blog/2018/12/20/style-transfer-js/. Accessed April 04, 2022.

Nash, C., Menick, J., & Dieleman, S., et al. (2021). Generating images with sparse representations. arXiv preprint arXiv:2103.03841

Naumann, A., Hertlein, F., & Doerr, L., et al. (2023). Literature review: Computer vision applications in transportation logistics and warehousing. arXiv preprint arXiv:2304.06009

Nedeljković, D., & Jakovljević, Ž. (2022). Gan-based data augmentation in the design of cyber-attack detection methods. In Proceedings of the 9th International Conference on Electrical, Electronic and Computing Engineering (IcETRAN 2022), Novi Pazar, June 2022 (pp. ROI1.4). ETRAN Society and Academic Mind, Belgrade.

Nie, W., Karras, T., & Garg, A., et al. (2020). Semi-supervised stylegan for disentanglement learning. In International conference on machine learning, PMLR (pp. 7360–7369).

Nielsen, M. (2019). Deep Learning - Chapter 6. http://neuralnetworksanddeeplearning.com/chap6.html. Accessed February 04, 2022.

Noguchi, A., & Harada, T. (2019). Image generation from small datasets via batch statistics adaptation. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 2750–2758).

NVLabs. (2020). StyleGAN2 with adaptive discriminator augmentation (ADA)—Official TensorFlow implementation. https://github.com/NVlabs/stylegan2-ada. Accessed February 07, 2022.

NVLabs. (2021). Alias-Free Generative Adversarial Networks (StyleGAN3): Official PyTorch implementation of the NeurIPS 2021 paper. https://github.com/NVlabs/stylegan3. Accessed February 08, 2022.

NVLabs. (2021a). StyleGAN - Official TensorFlow Implementation. https://github.com/NVlabs/stylegan. Accessed February 03, 2022.

NVLabs. (2021b). StyleGAN2 - Official TensorFlow Implementation. https://github.com/NVlabs/stylegan2. Accessed February 03, 2022.

NVLabs. (2021). StyleGAN2-ADA—Official PyTorch implementation. https://github.com/NVlabs/stylegan2-ada-pytorch. Accessed February 8, 2022.

Odena, A., Dumoulin, V., & Olah, C. (2016). Deconvolution and Checkerboard Artifacts. https://distill.pub/2016/deconv-checkerboard/. Accessed June 3, 2022.

Open AI. (2022). CLIP: Connecting Text and Images. https://openai.com/blog/clip/. Accessed February 14, 2022.

openai. (2022). CLIP. https://github.com/openai/CLIP. Accessed February 14, 2022.

Pan, S. J., & Yang, Q. (2009). A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering, 22(10), 1345–1359.

Pan, X., Tewari, A., & Leimkühler, T., et al. (2023). Drag your gan: Interactive point-based manipulation on the generative image manifold. arXiv preprint arXiv:2305.10973

Pang, Y., Lin, J., & Qin, T., et al. (2021). Image-to-image translation: Methods and applications. IEEE Transactions on Multimedia.

Park, S. J., Son, H., Cho, S., et al. (2018). Srfeat: Single image super-resolution with feature discrimination. In Proceedings of the European conference on computer vision (ECCV) (pp. 439–455).

Park, T., Liu, M. Y., & Wang, T. C., et al. (2019). Semantic image synthesis with spatially-adaptive normalization. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 2337–2346).

Park, T., Efros, A. A., & Zhang, R., et al. (2020a). Contrastive learning for unpaired image-to-image translation. In European conference on computer vision (pp. 319–345). Springer.

Park, T., Zhu, J. Y., Wang, O., et al. (2020). Swapping autoencoder for deep image manipulation. Advances in Neural Information Processing Systems, 33, 7198–7211.

Parmar, G., Zhang, R., & Zhu, J. Y. (2021). On buggy resizing libraries and surprising subtleties in fid calculation. arXiv preprint arXiv:2104.11222

Pasini, M. (2019). 10 Lessons I Learned Training GANs for one Year. https://towardsdatascience.com/10-lessons-i-learned-training-generative-adversarial-networks-gans-for-a-year-c9071159628. Accessed May 18, 2022.

Pasquini, C., Laiti, F., Lobba, D., et al. (2023). Identifying synthetic faces through gan inversion and biometric traits analysis. Applied Sciences, 13(2), 816.

Patashnik, O., Wu, Z., & Shechtman, E., et al. (2021). Styleclip: Text-driven manipulation of stylegan imagery. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 2085–2094).

Pathak, D., Krahenbuhl, P., & Donahue, J., et al. (2016). Context encoders: Feature learning by inpainting. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 2536–2544).

Pavan Kumar, M., & Jayagopal, P. (2021). Generative adversarial networks: a survey on applications and challenges. International Journal of Multimedia Information Retrieval, 10(1), 1–24.

Paysan, P., Knothe, R., & Amberg, B., et al. (2009). A 3d face model for pose and illumination invariant face recognition. In 2009 sixth IEEE international conference on advanced video and signal based surveillance (pp. 296–301). IEEE.

Peng, X., Yu, X., & Sohn, K., et al. (2017). Reconstruction-based disentanglement for pose-invariant face recognition. In Proceedings of the IEEE international conference on computer vision (pp. 1623–1632).

Petrovic, V., & Cootes, T. (2006). Information representation for image fusion evaluation. In 2006 9th international conference on information fusion (pp. 1–7). IEEE.

Portenier, T., Arjomand Bigdeli, S., & Goksel, O. (2020). Gramgan: Deep 3d texture synthesis from 2d exemplars. Advances in Neural Information Processing Systems, 33, 6994–7004.

Preuer, K., Renz, P., Unterthiner, T., et al. (2018). Fréchet chemnet distance: A metric for generative models for molecules in drug discovery. Journal of Chemical Information and Modeling, 58(9), 1736–1741.

Radford, A., Metz, L., & Chintala, S. (2015). Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434

Radford, A., Kim, J. W., & Hallacy, C., et al. (2021). Learning transferable visual models from natural language supervision. In International conference on machine learning, PMLR (pp. 8748–8763).

Ramesh, A., Pavlov, M., & Goh, G., et al. (2021). Zero-shot text-to-image generation. In International conference on machine learning, PMLR (pp. 8821–8831).

Ramesh, A., Dhariwal, P., & Nichol, A., et al. (2022). Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:2204.06125

Ravuri, S., & Vinyals, O. (2019a). Classification accuracy score for conditional generative models. Advances in neural information processing systems, 32.

Ravuri, S., & Vinyals, O. (2019b). Seeing is not necessarily believing: Limitations of biggans for data augmentation.

Richter, S. R., Vineet, V., & Roth, S., et al. (2016). Playing for data: Ground truth from computer games. In European conference on computer vision (pp. 102–118). Springer.

Roich, D., Mokady, R., Bermano, A. H., et al. (2022). Pivotal tuning for latent-based editing of real images. ACM Transactions on Graphics (TOG), 42(1), 1–13.

Rombach, R., Blattmann, A., & Lorenz, D., et al. (2022). High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR) (pp. 10,684–10,695).

Ronneberger, O., Fischer, P., & Brox, T. (2015). U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention (pp. 234–241). Springer.

Rutinowski, J., Youssef, H., & Gouda, A., et al. (2022). The potential of deep learning based computer vision in warehousing logistics. Logistics Journal: Proceedings, 2022(18).

Saad, M. M., Rehmani, M. H., & O’Reilly, R. (2022). Addressing the intra-class mode collapse problem using adaptive input image normalization in gan-based x-ray images. arXiv preprint arXiv:2201.10324

Sajjadi, M. S., Bachem, O., & Lucic, M., et al. (2018). Assessing generative models via precision and recall. Advances in neural information processing systems, 31.

Salimans, T., Goodfellow, I., & Zaremba, W., et al. (2016). Improved techniques for training gans. Advances in neural information processing systems, 29.

Sauer, A., Schwarz, K., & Geiger, A. (2022). Stylegan-xl: Scaling stylegan to large diverse datasets. In ACM SIGGRAPH 2022 conference proceedings (pp. 1–10).

Sauer, A., Karras, T., & Laine, S., et al. (2023). Stylegan-t: Unlocking the power of gans for fast large-scale text-to-image synthesis. arXiv preprint arXiv:2301.09515

Saunshi, N., Ash, J., & Goel, S., et al. (2022). Understanding contrastive learning requires incorporating inductive biases. arXiv preprint arXiv:2202.14037

Schuh, G., Anderl, R., & Gausemeier, J., et al. (2017). Industrie 4.0 Maturity Index: Die digitale Transformation von Unternehmen gestalten. Herbert Utz Verlag.

Shaham, T. R., Dekel, T., & Michaeli, T. (2019). Singan: Learning a generative model from a single natural image. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 4570–4580).

Shahbazi, M., Danelljan, M., & Paudel, D. P., et al. (2022). Collapse by conditioning: Training class-conditional gans with limited data. arXiv preprint arXiv:2201.06578

Shannon, C. E. (1949). Communication in the presence of noise. Proceedings of the IRE, 37(1), 10–21.

Sharma, M., Verma, A., & Vig, L. (2019). Learning to clean: A gan perspective. In Computer Vision–ACCV 2018 workshops: 14th Asian conference on computer vision, Perth, Australia, December 2–6, 2018, Revised Selected Papers 14 (pp. 174–185). Springer.

Shen, Y., & Zhou, B. (2021). Closed-form factorization of latent semantics in gans. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 1532–1540).

Shen, Z., Huang, M., & Shi, J., et al. (2019). Towards instance-level image-to-image translation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 3683–3692).

Shorten, C., & Khoshgoftaar, T. M. (2019). A survey on image data augmentation for deep learning. Journal of Big Data, 6(1), 1–48.

Singh, K. K., Ojha, U., & Lee, Y. J. (2019). Finegan: Unsupervised hierarchical disentanglement for fine-grained object generation and discovery. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 6490–6499).

Song, Q., Li, G., Wu, S., et al. (2023). Discriminator feature-based progressive gan inversion. Knowledge-Based Systems, 261, 110186.

Song, S., Yu, F., & Zeng, A., et al. (2017). Semantic scene completion from a single depth image. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1746–1754).

Song, Y., Yang, C., & Lin, Z., et al. (2018). Contextual-based image inpainting: Infer, match, and translate. In Proceedings of the European Conference on Computer Vision (ECCV) (pp. 3–19).

SORDI.ai. (2023). Synthetic object recognition dataset for industries. https://www.sordi.ai. Accessed May 24, 2023.

Soucy, P., & Mineau, G. W. (2001). A simple knn algorithm for text categorization. In Proceedings 2001 IEEE international conference on data mining (pp. 647–648). IEEE.

Struski, Ł., Knop, S., Spurek, P., et al. (2022). Locogan–locally convolutional gan. Computer Vision and Image Understanding, 221, 103462.

Suárez, P. L., Sappa, A. D., & Vintimilla, B. X. (2017). Infrared image colorization based on a triplet dcgan architecture. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops (pp. 18–23).

Sussillo, D., & Abbott, L. (2014). Random walk initialization for training very deep feedforward networks. arXiv preprint arXiv:1412.6558

Suzuki, R., Koyama, M., & Miyato, T., et al. (2018). Spatially controllable image synthesis with internal representation collaging. arXiv preprint arXiv:1811.10153

taesungp. (2020). Contrastive Unpaired Translation (CUT). https://github.com/taesungp/contrastive-unpaired-translation. Accessed February 7, 2022.

tamarott. (2020). SinGAN. https://github.com/tamarott/SinGAN. Accessed March 29, 2022.

Tancik, M., Srinivasan, P., Mildenhall, B., et al. (2020). Fourier features let networks learn high frequency functions in low dimensional domains. Advances in Neural Information Processing Systems, 33, 7537–7547.

Tang, C. S., & Veelenturf, L. P. (2019). The strategic role of logistics in the industry 4.0 era. Transportation Research Part E: Logistics and Transportation Review, 129, 1–11.

Tang, H., Xu, D., & Sebe, N., et al. (2019). Multi-channel attention selection gan with cascaded semantic guidance for cross-view image translation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 2417–2426).

Tang, H., Xu, D., & Yan, Y., et al. (2020). Local class-specific and global image-level generative adversarial networks for semantic-guided scene generation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 7870–7879).

Tao, M., Tang, H., & Wu, F., et al. (2022). Df-gan: A simple and effective baseline for text-to-image synthesis. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 16,515–16,525).

Tao, S., & Wang, J. (2020). Alleviation of gradient exploding in gans: Fake can be real. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 1191–1200).

Teterwak, P., Sarna, A., & Krishnan, D., et al. (2019). Boundless: Generative adversarial networks for image extension. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 10,521–10,530).

Tian, C., Fei, L., Zheng, W., et al. (2020). Deep learning on image denoising: An overview. Neural Networks, 131, 251–275.

tkarras. (2017a). Progressive Growing of GANs for Improved Quality, Stability, and Variation — Official TensorFlow implementation of the ICLR 2018 paper. https://github.com/tkarras/progressive_growing_of_gans. Accessed February 7, 2022.

tkarras. (2017b). Progressive Growing of GANs for Improved Quality, Stability, and Variation—Official Theano implementation of the ICLR 2018 paper. https://github.com/tkarras/progressive_growing_of_gans/tree/original-theano-version. Accessed February 07, 2022.

Torrey, L., & Shavlik, J. (2010). Transfer learning. In Handbook of research on machine learning applications and trends: Algorithms, methods, and techniques (pp. 242–264). IGI Global.

tportenier. (2020). GramGAN: Deep 3D Texture Synthesis From 2D Exemplars. https://github.com/tportenier/gramgan. Accessed March 29, 2022.

Tran, L. D., Nguyen, S. M., & Arai, M. (2020). Gan-based noise model for denoising real images. In Proceedings of the Asian conference on computer vision.

Tran, N. T., Tran, V. H., Nguyen, N. B., et al. (2021). On data augmentation for gan training. IEEE Transactions on Image Processing, 30, 1882–1897.

Tsitsulin, A. (2020). Different results on the same arrays. https://github.com/xgfs/imd/issues/2. Accessed May 28, 2022.

Tsitsulin, A., Munkhoeva, M., & Mottin, D., et al. (2019). The shape of data: Intrinsic distance for data distributions. arXiv preprint arXiv:1905.11141

Tzovaras, D. (2008). Multimodal user interfaces: From signals to interaction. Springer.

Ulyanov, D., Lebedev, V., & Vedaldi, A., et al. (2016a). Texture networks: Feed-forward synthesis of textures and stylized images. In ICML (p. 4).

Ulyanov, D., Vedaldi, A., & Lempitsky, V. (2016b). Instance normalization: The missing ingredient for fast stylization. arXiv preprint arXiv:1607.08022

Vo, D. M., Sugimoto, A., & Nakayama, H. (2022). Ppcd-gan: Progressive pruning and class-aware distillation for large-scale conditional gans compression. In Proceedings of the IEEE/CVF winter conference on applications of computer vision (pp. 2436–2444).

Voita, L. (2022). (Introduction to) Transfer Learning. https://lena-voita.github.io/nlp_course/transfer_learning.html. Accessed June 03, 2022.

Wang, M., Lang, C., Liang, L., et al. (2021). Fine-grained semantic image synthesis with object-attention generative adversarial network. ACM Transactions on Intelligent Systems and Technology (TIST), 12(5), 1–18.

Wang, T. C., Liu, M. Y., & Zhu, J. Y., et al. (2018a). High-resolution image synthesis and semantic manipulation with conditional gans. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 8798–8807).

Wang, X., Yu, K., & Wu, S., et al. (2018b). Esrgan: Enhanced super-resolution generative adversarial networks. In Proceedings of the European conference on computer vision (ECCV) workshops.

Wang, X., Xie, L., & Dong, C., et al. (2021b). Real-esrgan: Training real-world blind super-resolution with pure synthetic data. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 1905–1914).

Wang, Y., Qian, B., Li, B., et al. (2013). Metal artifacts reduction using monochromatic images from spectral ct: Evaluation of pedicle screws in patients with scoliosis. European Journal of Radiology, 82(8), e360–e366.

Wang, Y., Wu, C., & Herranz, L., et al. (2018c). Transferring gans: Generating images from limited data. In Proceedings of the European conference on computer vision (ECCV) (pp. 218–234).

Wang, Y., Tao, X., & Shen, X., et al. (2019). Wide-context semantic image extrapolation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 1399–1408).

Wang, Y., Gonzalez-Garcia, A., & Berga, D., et al. (2020a). Minegan: effective knowledge transfer from gans to target domains with few images. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 9332–9341).

Wang, Y., Yao, Q., Kwok, J. T., et al. (2020b). Generalizing from a few examples: A survey on few-shot learning. ACM Computing Surveys (CSUR), 53(3), 1–34.

Wang, Z., Bovik, A. C., Sheikh, H. R., et al. (2004). Image quality assessment: From error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4), 600–612.

Wang, Z., Chen, J., & Hoi, S. C. (2020). Deep learning for image super-resolution: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(10), 3365–3387.

Wang, Z., She, Q., & Ward, T. E. (2021). Generative adversarial networks in computer vision: A survey and taxonomy. ACM Computing Surveys (CSUR), 54(2), 1–38.

Wei, L. Y., Lefebvre, S., & Kwatra, V., et al. (2009). State of the art in example-based texture synthesis. Eurographics 2009, State of the Art Report, EG-STAR (pp. 93–117).

Weiss, K., Khoshgoftaar, T. M., & Wang, D. (2016). A survey of transfer learning. Journal of Big Data, 3(1), 1–40.

Williams, L. (1983). Pyramidal parametrics. In Proceedings of the 10th annual conference on Computer graphics and interactive techniques (pp. 1–11).

Wu, W., Cao, K., & Li, C., et al. (2019). Transgaga: Geometry-aware unsupervised image-to-image translation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 8012–8021).

Xia, W., Zhang, Y., & Yang, Y., et al. (2022). Gan inversion: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence.

Xiao, F., Liu, H., & Lee, Y. J. (2019). Identity from here, pose from there: Self-supervised disentanglement and generation of objects using unlabeled videos. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 7013–7022).

xinntao. (2021). Real-ESRGAN. https://github.com/xinntao/Real-ESRGAN. Accessed May 9, 2022.

Xu, T., Zhang, P., & Huang, Q., et al. (2018). Attngan: Fine-grained text to image generation with attentional generative adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1316–1324).

Xuan, J., Yang, Y., & Yang, Z., et al. (2019). On the anomalous generalization of gans. arXiv preprint arXiv:1909.12638

Ye, H., Yang, X., & Takac, M., et al. (2021). Improving text-to-image synthesis using contrastive learning. In The 32nd British machine vision conference (BMVC).

Yi, X., Walia, E., & Babyn, P. (2019). Generative adversarial network in medical imaging: A review. Medical Image Analysis, 58, 101552.

Yi, Z., Zhang, H., & Tan, P., et al. (2017). Dualgan: Unsupervised dual learning for image-to-image translation. In Proceedings of the IEEE international conference on computer vision (pp. 2849–2857).

Yinka-Banjo, C., & Ugot, O. A. (2020). A review of generative adversarial networks and its application in cybersecurity. Artificial Intelligence Review, 53(3), 1721–1736.

Yu, N., Barnes, C., & Shechtman, E., et al. (2019). Texture mixer: A network for controllable synthesis and interpolation of texture. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 12,164–12,173).

Yuan, Y., Liu, S., & Zhang, J., et al. (2018). Unsupervised image super-resolution using cycle-in-cycle generative adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops (pp. 701–710).

Yuheng-Li. (2020). MixNMatch: Multifactor disentanglement and encoding for conditional image generation. https://github.com/Yuheng-Li/MixNMatch. Accessed February 14, 2022.

Zhang, H., Xu, T., & Li, H., et al. (2017a). Stackgan: Text to photo-realistic image synthesis with stacked generative adversarial networks. In Proceedings of the IEEE international conference on computer vision (pp. 5907–5915).

Zhang, H., Xu, T., Li, H., et al. (2018). Stackgan++: Realistic image synthesis with stacked generative adversarial networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(8), 1947–1962.

Zhang, K., Luo, W., Zhong, Y., et al. (2020a). Deblurring by realistic blurring. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 2737–2746).

Zhang, N., Zhang, L., & Cheng, Z. (2017b). Towards simulating foggy and hazy images and evaluating their authenticity. In International conference on neural information processing (pp. 405–415). Springer.

Zhang, P., Zhang, B., & Chen, D., et al. (2020b). Cross-domain correspondence learning for exemplar-based image translation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5143–5153).

Zhang, Q., Liu, Y., Blum, R. S., et al. (2018). Sparse representation based multi-sensor image fusion for multi-focus and multi-modality images: A review. Information Fusion, 40, 57–75.

Zhang, R. (2019). Making convolutional networks shift-invariant again. In International conference on machine learning, PMLR (pp. 7324–7334).

Zhang, R., Zhu, J. Y., & Isola, P., et al. (2017c). Real-time user-guided image colorization with learned deep priors. arXiv preprint arXiv:1705.02999

Zhang, R., Isola, P., & Efros, A. A., et al. (2018c). The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 586–595).

Zhang, S., Zhen, A., & Stevenson, R. L. (2019a). Gan based image deblurring using dark channel prior. arXiv preprint arXiv:1903.00107

Zhang, X., Karaman, S., & Chang, S. F. (2019b). Detecting and simulating artifacts in gan fake images. In 2019 IEEE international workshop on information forensics and security (WIFS) (pp. 1–6). IEEE.

Zhang, Y., Liu, S., Dong, C., et al. (2019). Multiple cycle-in-cycle generative adversarial networks for unsupervised image super-resolution. IEEE Transactions on Image Processing, 29, 1101–1112.

Zhao, L., Mo, Q., & Lin, S., et al. (2020a). Uctgan: Diverse image inpainting based on unsupervised cross-space translation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5741–5750).

Zhao, S., Liu, Z., Lin, J., et al. (2020). Differentiable augmentation for data-efficient gan training. Advances in Neural Information Processing Systems, 33, 7559–7570.

Zhao, S., Cui, J., & Sheng, Y., et al. (2021). Large scale image completion via co-modulated generative adversarial networks. arXiv preprint arXiv:2103.10428

Zhao, Y., Wu, R., & Dong, H. (2020c). Unpaired image-to-image translation using adversarial consistency loss. In European conference on computer vision (pp. 800–815). Springer.

Zhao, Z., Zhang, Z., & Chen, T., et al. (2020d). Image augmentations for gan training. arXiv preprint arXiv:2006.02595

Zheng, C., Cham, T. J., & Cai, J. (2021). The spatially-correlative loss for various image translation tasks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 16,407–16,417).

Zheng, H., Fu, J., & Zha, Z. J., et al. (2019). Looking for the devil in the details: Learning trilinear attention sampling network for fine-grained image recognition. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5012–5021).

Zhou, X., Zhang, B., & Zhang, T., et al. (2021). Cocosnet v2: Full-resolution correspondence learning for image translation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 11,465–11,475).

Zhou, Y., Zhu, Z., & Bai, X., et al. (2018). Non-stationary texture synthesis by adversarial expansion. arXiv preprint arXiv:1805.04487

Zhu, J. Y., Park, T., & Isola, P., et al. (2017a). Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision (pp. 2223–2232).

Zhu, J. Y., Zhang, R., & Pathak, D., et al. (2017b). Toward multimodal image-to-image translation. Advances in neural information processing systems, 30.

Zhu, M., Pan, P., & Chen, W., et al. (2019). Dm-gan: Dynamic memory generative adversarial networks for text-to-image synthesis. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5802–5810).

Zhu, P., Abdal, R., & Qin, Y., et al. (2020). Sean: Image synthesis with semantic region-adaptive normalization. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5104–5113).

Acknowledgements

This research is supported by the EIPHI Graduate School (contract “ANR-17-EURE-0002”) and the BMW TechOffice Munich.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by Arun Mallya.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Experimentation Datasets

In this section, we describe the four in-house rendered synthetic datasets used in our experiments.

Industrial Assets: This dataset consists of simple synthetic images (\(512 \times 512\) px) of different industrial assets with domain randomization. We consider the following asset combinations: smart transport robot (STR) (16,000), trolley (16,000), STR and trolley (11,457), pallet (11,271), jack (9,425), electrical jack (8,944), stillage (8,933), forklift (8,840), tugger train (8,794), small load carrier (KLT) box (9,976), and random combinations of grouped assets (4,029). This dataset was rendered using the Unity engine (Fig. 30).
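The subsets listed above differ in size, so it can be worth checking class balance before training a class-conditional GAN on them. The following Python sketch is our own illustration, not part of the rendering pipeline. It copies the counts from the description above into a dictionary whose key names are our own labels. It then computes the total number of images, the share of each subset, and the ratio between the largest and smallest subsets.

```python
# Image counts per asset combination in the Industrial Assets dataset,
# copied from the dataset description (key names are our own labels).
INDUSTRIAL_ASSETS = {
    "STR": 16_000,
    "trolley": 16_000,
    "STR + trolley": 11_457,
    "pallet": 11_271,
    "jack": 9_425,
    "electrical jack": 8_944,
    "stillage": 8_933,
    "forklift": 8_840,
    "tugger train": 8_794,
    "KLT box": 9_976,
    "grouped assets": 4_029,
}


def composition_summary(counts):
    """Return (total images, per-class share, largest/smallest class ratio)."""
    total = sum(counts.values())
    shares = {name: n / total for name, n in counts.items()}
    imbalance = max(counts.values()) / min(counts.values())
    return total, shares, imbalance


if __name__ == "__main__":
    total, shares, imbalance = composition_summary(INDUSTRIAL_ASSETS)
    print(f"total images: {total}")
    for name, share in sorted(shares.items(), key=lambda kv: -kv[1]):
        print(f"{name:>16}: {share:6.2%}")
    print(f"largest/smallest subset ratio: {imbalance:.2f}")
```

Run as a script, the sketch reports 113,669 images in total. The largest subsets (STR and trolley) are about 3.97 times the size of the smallest one (grouped assets). Such a ratio may call for class-balanced sampling when training a class-conditional model.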

The three remaining datasets were rendered using NVIDIA Omniverse.

KLT & Pallet: This dataset includes four subsets of images, each showing a single small load carrier (KLT) box or pallet: (1) low-variation KLT boxes (9,948), (2) low-variation pallets (10,893), (3) higher-variation pallets (5,000), and (4) higher-variation KLT boxes (2,500) (Fig. 31).

Stillage Modalities: This dataset consists of 2,006 synthetic images (1,280 \(\times \) 720 px) of different stillages placed side by side. Each rendered image is accompanied by paired semantic segmentation, instance segmentation, and depth images, i.e., four modalities per scene (8,024 images in total) (Fig. 32).

Klt2Cardboard: This dataset consists of around 20K synthetic images (\(3{,}206 \times 1{,}440\) px) in total, showing randomly stacked boxes placed on a euro pallet in two different room environments. The first 10,138 images show small load carrier (KLT) boxes surrounded by logistics assets, while the remaining 10,222 images show cardboard boxes surrounded by office assets. Both subsets contain a maximum of 2-sided, 5-stacked boxes (Fig. 33).

Appendix B: Conditional GAN (cGAN)

The cGAN is the conditional version of the GAN (Mirza & Osindero, 2014). In a cGAN, the condition is applied to both the generator and the discriminator. The condition can be any type of auxiliary information, such as class labels (Karras et al., 2020a), instance images (Casanova et al., 2021), or paired data (Isola et al., 2017). Alongside the latent vector, the generator receives the condition as input and produces images that should match it. Fundamentally, a generator is free to produce any output as long as it fools the discriminator. The discriminator must therefore also see the condition, so that it can penalize realistic images that do not match their condition; this is why conditionality must be applied to both GAN networks (Boulahbal et al., 2021). Additionally, a cGAN can converge faster than a classical GAN, and the distribution of its generated images is steered by the condition (e.g., sampling images of a chosen class) rather than left entirely to the random latent input.
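To make the two-sided conditioning concrete, the following pure-Python sketch follows the concatenation scheme of Mirza & Osindero (2014). The one-hot label \(y\) is appended to the latent vector \(z\) at the generator input and to the image \(x\) at the discriminator input. The sketch also computes a single-sample estimate of the cGAN objective \(V(D, G) = \mathbb{E}_{x}[\log D(x \mid y)] + \mathbb{E}_{z}[\log(1 - D(G(z \mid y) \mid y))]\). This is our own toy illustration under simplifying assumptions. The layer sizes, weights, class and function names are hypothetical, both networks are untrained single linear layers, and no training loop is shown.

```python
import math
import random

NUM_CLASSES = 3   # hypothetical number of classes (e.g., asset types)
LATENT_DIM = 4    # hypothetical latent vector size
IMAGE_DIM = 6     # toy "image": a flat vector of 6 values


def one_hot(label, num_classes=NUM_CLASSES):
    """Encode an integer class label y as a one-hot list."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def linear(weights, x):
    """Bias-free dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def random_matrix(rows, cols, rng):
    """Small Gaussian weights, which keep the toy logits small."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class ToyConditionalGAN:
    """Untrained one-layer G and D, both conditioned on y by concatenation."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # G: [z ; y] -> image, so its input has LATENT_DIM + NUM_CLASSES entries.
        self.g_weights = random_matrix(IMAGE_DIM, LATENT_DIM + NUM_CLASSES, rng)
        # D: [x ; y] -> one logit, so D also sees the condition.
        self.d_weights = random_matrix(1, IMAGE_DIM + NUM_CLASSES, rng)

    def generate(self, z, label):
        """G(z | y): the condition is concatenated to the latent vector."""
        return linear(self.g_weights, z + one_hot(label))

    def discriminate(self, image, label):
        """D(x | y): probability that image is a real sample of class label."""
        logit = linear(self.d_weights, image + one_hot(label))[0]
        return 1.0 / (1.0 + math.exp(-logit))

    def value(self, real_image, z, label):
        """Single-sample estimate of V(D, G) for one condition y."""
        d_real = self.discriminate(real_image, label)
        d_fake = self.discriminate(self.generate(z, label), label)
        return math.log(d_real) + math.log(1.0 - d_fake)


if __name__ == "__main__":
    rng = random.Random(42)
    gan = ToyConditionalGAN()
    z = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
    real = [rng.random() for _ in range(IMAGE_DIM)]
    # The same z yields different images for different conditions.
    print(gan.generate(z, label=0))
    print(gan.generate(z, label=2))
    print("V(D, G) estimate:", gan.value(real, z, label=2))
```

In training, D would ascend and G would descend this value. Because D also receives \(y\), a realistic image generated for the wrong class can still be rejected.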

As previously mentioned, a basic GAN consists of two networks: a generator and a discriminator. Training these networks does not fundamentally differ from training any other neural network. We therefore adopt the general machine learning (ML) taxonomy and consider four major GAN learning methods, distinguished by how the available training datasets are handled: supervised, unsupervised, semi-supervised, and few-shot learning, as described in Appendix C.

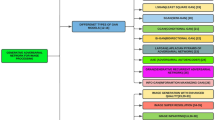

Appendix C: GAN Training Taxonomy

Supervised learning (SL): It uses labeled datasets to train GANs (Cunningham et al., 2008). Given the variety of GAN applications, labels take different forms. For instance, conditional GAN generation requires an additional category label for each training image, as in Karras et al. (2020a), Karras et al. (2021), and Casanova et al. (2021). Supervised I2I applications require paired image datasets to learn accurate domain translations, as in Wang et al. (2018a) and Zhu et al. (2017b). However, although supervised learning is generally more efficient than the other learning methods, producing labeled data requires expertise to structure the data, is highly time-consuming, and is prone to human error (IBM Cloud Education, 2020). In addition, labels are sometimes impossible to acquire, especially for paired datasets. Such datasets are “double the trouble” compared with capturing and processing a single-domain dataset, since they additionally require synchronized hardware or control over the entire capture area.
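Paired data matter because they allow a direct per-pixel loss between the translated image and its ground truth. The following sketch shows a pix2pix-style generator objective (Isola et al., 2017): a non-saturating adversarial term plus \(\lambda\) times an L1 reconstruction term, with \(\lambda = 100\) as in the pix2pix paper. It is an illustrative simplification under our own assumptions, not the implementation of any cited work. Images are flat lists, the discriminator output is passed in as a probability, and the function names are hypothetical.

```python
import math


def l1_distance(a, b):
    """Mean absolute error between two equally sized (flattened) images."""
    if len(a) != len(b):
        raise ValueError("paired images must have the same size")
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def paired_generator_loss(d_fake, fake_image, target_image, lam=100.0):
    """pix2pix-style generator loss for one paired training example.

    d_fake: discriminator probability that the translated image is real.
    The adversarial term uses the common non-saturating form -log D(.).
    The L1 term compares the output with its *paired* ground truth, which
    is only possible because the dataset is paired (supervised I2I).
    """
    adversarial = -math.log(d_fake)
    reconstruction = l1_distance(fake_image, target_image)
    return adversarial + lam * reconstruction


if __name__ == "__main__":
    translated = [0.2, 0.4, 0.6]   # toy generator output
    target = [0.0, 0.5, 0.5]       # paired ground truth for the same input
    print(paired_generator_loss(0.5, translated, target))
```

With these toy values, the loss is about 14.03. The L1 term dominates, which pushes the generator toward the paired target. An unpaired dataset offers no `target_image` to plug in.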

Unsupervised learning (UL): Unlike SL, the model is not provided with labels or domain pairings. Therefore, the model works on its own to discover patterns and information (Chu et al., 2017; Park et al., 2020a). Unsupervised learning typically requires a larger dataset for optimal performance; however, unlabeled datasets are much easier to obtain. Therefore, researchers have focused more on improving unsupervised learning architectures than supervised learning methods (Chen & Jia, 2021).

Semi-supervised learning (SSL): It lies between supervised and unsupervised learning. Typically, semi-supervised learning operates on a dataset in which only a few samples are labeled, and it aims to label all remaining images based on this small labeled subset (e.g., it is possible to reconstruct the whole video by labeling 10–20% of its frames) (Mustafa & Mantiuk, 2020). Moreover, SSL can achieve good disentanglement learning using only 0.25–2.5% of labeled data (Nie et al., 2020). However, semi-supervised learning generally has lower accuracy, since subsequent predictions rely on predicted labels rather than ground-truth (GT) labels.
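The idea of labeling the remaining images from a small labeled subset can be illustrated with pseudo-labeling. The sketch below is a deliberately simple stand-in, not the method of Mustafa & Mantiuk (2020) or Nie et al. (2020): it assumes each image is already reduced to a feature vector and assigns every unlabeled sample the class of the nearest labeled-class centroid. The class names and feature values are hypothetical.

```python
import math

def centroids(labeled):
    """Mean feature vector per class, computed from the few labeled samples."""
    sums, counts = {}, {}
    for x, y in labeled:
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}

def pseudo_label(labeled, unlabeled):
    """Give each unlabeled sample the class of its nearest centroid.
    These predicted labels are not ground truth: any mistake here propagates
    to every later step trained on them."""
    cents = centroids(labeled)
    return [(x, min(cents, key=lambda y: math.dist(x, cents[y]))) for x in unlabeled]

# Usage with hypothetical 2-D features: two labeled "box" samples, one "trolley".
labeled = [((0.0, 0.0), "box"), ((0.2, 0.1), "box"), ((5.0, 5.0), "trolley")]
unlabeled = [(0.1, 0.3), (4.8, 5.2)]
print(pseudo_label(labeled, unlabeled))
# [((0.1, 0.3), 'box'), ((4.8, 5.2), 'trolley')]
```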

Few-shot learning (FSL): FSL models are trained on datasets containing very few images (Benaim & Wolf, 2018; Cohen & Wolf, 2019) or even a single image, i.e., one-shot learning (OSL) (Park et al., 2020a; Lin et al., 2020; Shaham et al., 2019). By definition, “FSL is a type of machine learning problems (specified by E, T and P), where E contains only a limited number of examples with supervised information for the target T.” FSL training is then evaluated based on its performance P (Wang et al., 2020b). Although FSL models generalize quickly to a new small dataset, they perform best within their field of experience and may not be optimal when inferring slightly different use cases (check Sect. 6.3). Nevertheless, FSL is a promising way to relieve the burden of collecting the huge datasets required by the methods above, especially since limited hardware is enough for training. Thus, many researchers are working toward this aim.
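The (E, T, P) definition above is often made concrete through N-way K-shot episodes. The following is a minimal sketch under that assumption: the support set plays the role of the limited experience E, classifying the query set is the task T, and accuracy on the query set could serve as P. The function name and dataset layout are hypothetical; K = 1 corresponds to one-shot learning.

```python
import random

def sample_episode(dataset, n_way, k_shot, n_query, seed=None):
    """Sample an N-way K-shot episode from {class_name: [samples, ...]}.

    Returns (support, query) as lists of (sample, class_name) pairs.
    Assumption: each class has at least k_shot + n_query samples.
    """
    rng = random.Random(seed)
    classes = rng.sample(sorted(dataset), n_way)
    support, query = [], []
    for c in classes:
        picks = rng.sample(dataset[c], k_shot + n_query)
        support += [(s, c) for s in picks[:k_shot]]   # experience E
        query += [(s, c) for s in picks[k_shot:]]     # evaluation of task T
    return support, query

# Usage: a hypothetical dataset of 4 classes with 10 image ids each; 3-way 1-shot.
dataset = {c: [f"{c}_{i}" for i in range(10)] for c in ("box", "stillage", "trolley", "pallet")}
support, query = sample_episode(dataset, n_way=3, k_shot=1, n_query=2, seed=0)
print(len(support), len(query))  # 3 6
```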

Additionally, transfer learning (TL) is becoming the de facto solution for CV training (Jayram et al., 2019). The main idea is to transfer knowledge from an auxiliary model into the main one. In other words, the auxiliary model is trained on huge datasets to solve a source task. Afterward, training “is resumed” with a smaller dataset to solve the target task of interest. We divide TL methods into two categories: transductive and inductive. A transductive TL keeps the same task and labels as in the source task, e.g., Domain Adaptation (Guo et al., 2020; Li et al., 2020b; Cao et al., 2018; Murez et al., 2018). In inductive TL, however, the task, and therefore the labels, are changed and defined by the target task (e.g., sequential transfer learning, which is the most popular TL method) (Voita, 2022). Yet, some limitations may occur when training the model too long (check Sect. 4.1.2).
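A common form of “resuming” training keeps the pretrained feature extractor frozen and trains only a new task head on the small target dataset, which fits the inductive case described above. The sketch assumes a toy one-layer ReLU feature extractor with made-up “pretrained” weights and a linear head trained with squared-error gradient descent; real TL uses deep networks and may also unfreeze some layers.

```python
def features(x, frozen_w):
    """Pretrained source-task feature extractor (hypothetical weights).
    It is only read, never updated, during fine-tuning."""
    return [max(0.0, sum(wi * xi for wi, xi in zip(row, x))) for row in frozen_w]

def fine_tune_head(data, frozen_w, head, lr=0.05, epochs=200):
    """Train only the linear head on the small target dataset
    (stochastic gradient descent on the squared error)."""
    head = list(head)
    for _ in range(epochs):
        for x, y in data:
            f = features(x, frozen_w)
            err = sum(h * fi for h, fi in zip(head, f)) - y
            head = [h - lr * err * fi for h, fi in zip(head, f)]
    return head

# Usage: hypothetical frozen weights and a two-sample target dataset.
frozen = [[1.0, 0.0], [0.0, 1.0]]
target_data = [((1.0, 0.0), 2.0), ((0.0, 1.0), -1.0)]
head = fine_tune_head(target_data, frozen, head=[0.0, 0.0])
print([round(h, 3) for h in head])  # [2.0, -1.0]
```

Freezing the extractor keeps the source knowledge intact and limits the number of trainable parameters, which is why it suits small target datasets.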

Appendix D: StyleGAN Retrospective

1.1 StyleGAN

In 2019, NVIDIA published StyleGAN (Karras et al., 2019; NVLabs, 2021a), an extension of the traditional ProGAN architecture (Karras et al., 2017). The generator network was modified to include progressive resolution blocks, from \(2^2\times 2^2\) to \(2^{10}\times 2^{10}\) pixels. In each block, after each convolution layer (Gatys et al., 2015b), a different sample of Gaussian noise is added to the feature map (Nielsen, 2019). Inspired by the style transfer literature (Huang & Belongie, 2017; Jing et al., 2019), an AdaIN layer (Huang & Belongie, 2017; Dumoulin et al., 2016, 2018; Ghiasi et al., 2017) controls the style transfer process. While the style affects only global attributes, such as shape, identity, pose, lighting, and background, the noise injected at each block controls local image features and guarantees stochastic variations at different scales. This process separates the high-level attributes from the stochastic variation of local effects, such as beard, freckles, and hair. As a result, StyleGAN generates high-quality, high-resolution images (up to \(1024\times 1024\) pixels) with detailed style-level stochastic variations. However, from about \(64\times 64\) resolution upward, the feature maps show water droplet artifacts, which are often visible in the generated output image (Karras et al., 2020b). Additionally, the progressive growing technique used in StyleGAN produces phase artifacts, where some details stay stuck in the same location regardless of slight movements of the parent object, as shown in Fig. 1.
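The AdaIN operation and the noise injection described above can be written per channel as \(\mathrm{AdaIN}(x, y) = y_s \frac{x - \mu(x)}{\sigma(x)} + y_b\), followed or preceded by additive per-pixel noise. The sketch below assumes a feature-map channel is a flat list of values and that the style scale \(y_s\), style bias \(y_b\), and noise strength are given directly; in StyleGAN they come from learned layers (the style from an affine transform of the intermediate latent), which are omitted here.

```python
import math
import random

def adain(channel, style_scale, style_bias, eps=1e-8):
    """AdaIN on one channel: normalize to zero mean and unit variance,
    then apply the style's scale and bias (given directly here)."""
    n = len(channel)
    mu = sum(channel) / n
    sigma = math.sqrt(sum((v - mu) ** 2 for v in channel) / n + eps)
    return [style_scale * (v - mu) / sigma + style_bias for v in channel]

def add_noise(channel, noise_strength, rng):
    """Per-pixel Gaussian noise scaled by a per-channel factor
    (learned in StyleGAN, a fixed argument in this sketch)."""
    return [v + noise_strength * rng.gauss(0.0, 1.0) for v in channel]

# Usage: inject noise into a toy 4-pixel channel, then restyle it.
rng = random.Random(0)
noisy = add_noise([1.0, 2.0, 3.0, 4.0], noise_strength=0.1, rng=rng)
styled = adain(noisy, style_scale=2.0, style_bias=0.5)
print(round(sum(styled) / len(styled), 6))  # 0.5: the mean is set by the style bias
```

Because AdaIN discards the incoming channel statistics and replaces them with the style's, the style controls global appearance, while the noise only perturbs local values.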

1.2 StyleGAN2

StyleGAN2 (Karras et al., 2020b; NVLabs, 2021b), published in 2020, is a revised version of StyleGAN proposed to improve image quality and remove its characteristic artifacts. First, Karras et al. replaced all AdaIN normalization layers (Huang & Belongie, 2017; Dumoulin et al., 2016, 2018; Ghiasi et al., 2017) in the generator, which caused the droplet artifacts, with a normalization based on estimated statistics (Glorot & Bengio, 2010; He et al., 2015), known as weight demodulation. Second, artifacts related to progressive growing (Karras et al., 2017) are reduced by using a modified version of a hierarchical (Denton et al., 2015; Zhang et al., 2017a, 2018a) generator, Multi-Scale Gradients for GAN (MSG-GAN) (Karnewar & Wang, 2020), with skip connections (Ronneberger et al., 2015) that connect matching resolutions between both networks. In parallel, residual networks (Gulrajani et al., 2017; He et al., 2016; Miyato et al., 2018) have shown benefits in the discriminator. These two alternatives replace the feedforward design (Huang et al., 2020) of StyleGAN’s generator (synthesis network) and discriminator, respectively. Compared to StyleGAN, the new revision improves training speed by 40%, reaching 61 img/s.
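The weight demodulation that replaces AdaIN scales the convolution weights instead of normalizing the activations: \(w'_{ijk} = s_i\, w_{ijk}\), then \(w''_{ijk} = w'_{ijk} / \sqrt{\sum_{i,k} {w'_{ijk}}^2 + \epsilon}\), where \(i\) is the input channel, \(j\) the output channel, and \(k\) the kernel tap. The sketch assumes weights stored as nested lists indexed [output][input][tap] and per-input-channel styles \(s_i\) given directly rather than produced from the latent.

```python
import math

def modulate_demodulate(weight, styles, eps=1e-8):
    """weight[o][i][k]: output channel o, input channel i, kernel tap k.
    styles[i]: per-input-channel scale s_i (given directly in this sketch).
    Each output channel's modulated weights are rescaled to unit L2 norm,
    so outputs keep roughly unit variance if inputs are i.i.d. with unit variance."""
    out = []
    for w_o in weight:
        modulated = [[styles[i] * wk for wk in w_oi] for i, w_oi in enumerate(w_o)]
        norm = math.sqrt(sum(v * v for row in modulated for v in row) + eps)
        out.append([[v / norm for v in row] for row in modulated])
    return out

# Usage: 2 output channels, 2 input channels, 2 taps each.
w = modulate_demodulate([[[1.0, 2.0], [0.5, -1.0]], [[0.0, 3.0], [1.0, 1.0]]], styles=[2.0, 0.5])
print([round(sum(v * v for row in w_o for v in row), 6) for w_o in w])  # [1.0, 1.0]
```

Since the statistics are estimated from the weights rather than measured on the feature maps, the generator can no longer exploit the normalization to sneak signal strength information through localized spikes, which is the mechanism behind the droplets.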

However, despite the image quality improvements, tens of thousands of images with sufficient variation are still required for GAN training. Otherwise, the discriminator overfits and training diverges (Karras et al., 2020a; Arjovsky & Bottou, 2017). Acquiring such a large and varied dataset is sometimes infeasible, as previously explained in Sect. 1.

Appendix E: Fine-Grained Image Generation

1.1 InfoGAN

Information Maximization GAN (InfoGAN) (Chen et al., 2016) is a GAN that uses unsupervised training to associate small subsets of the latent variables with disentangled, common visual concepts, such as the presence of objects, lighting, object azimuth, pose, and elevation. To do so, InfoGAN maximizes the mutual information between these latent variables and the generated images, which makes the corresponding factors controllable and thereby increases the variation in the generated dataset.
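InfoGAN splits the generator input into incompressible noise \(z\) and interpretable latent codes \(c\), and maximizes a variational lower bound on \(I(c; G(z, c))\) using an auxiliary network \(Q(c \mid x)\). For a categorical code, this amounts to minimizing \(-\log Q(c \mid x)\). The sketch below assumes the output logits of \(Q\) are given; the default sizes (62 noise dimensions, one 10-way categorical code, two continuous codes) are illustrative choices.

```python
import math
import random

def sample_infogan_latent(rng, noise_dim=62, n_categories=10, n_continuous=2):
    """Build the generator input: noise z followed by the latent codes c
    (one categorical code as one-hot, plus uniform continuous codes)."""
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    cat = rng.randrange(n_categories)
    c_cat = [1.0 if i == cat else 0.0 for i in range(n_categories)]
    c_cont = [rng.uniform(-1.0, 1.0) for _ in range(n_continuous)]
    return z + c_cat + c_cont, cat

def categorical_info_loss(q_logits, true_cat):
    """-log Q(c|x) for the categorical code (numerically stable log-softmax).
    Minimizing it maximizes the lower bound on the mutual information."""
    m = max(q_logits)
    log_norm = m + math.log(sum(math.exp(l - m) for l in q_logits))
    return log_norm - q_logits[true_cat]

# Usage: sample one latent; Q's logits are assumed here, not computed.
latent, cat = sample_infogan_latent(random.Random(0))
print(len(latent))  # 74
print(categorical_info_loss([10.0, 0.0], 0) < categorical_info_loss([0.0, 10.0], 0))  # True
```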

1.2 FineGAN

FineGAN, proposed by Singh et al. (2019), is an architecture that hierarchically disentangles the background, object shape, and object appearance without using masks or fine-grained annotations. FineGAN generates images in three successive stages: the background stage, the parent stage, and the child stage. The features of the object of interest, such as appearance and shape (parent stage), are combined with the previously generated background (background stage) and then colorized with a texture (child stage) to produce the final FineGAN output.
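The stage-wise combination can be sketched as two masked blends: the parent foreground is pasted onto the background with the parent mask, and the child texture is pasted onto that result with the child mask. In FineGAN, the masks and foregrounds are produced by the stage generators themselves; in this sketch they are given as inputs, and images are flat lists of grayscale pixel values (simplifying assumptions).

```python
def composite(mask, foreground, background):
    """Per-pixel blend: mask = 1 keeps the foreground, mask = 0 keeps the background."""
    return [m * f + (1.0 - m) * b for m, f, b in zip(mask, foreground, background)]

def finegan_compose(background, parent_mask, parent_fg, child_mask, child_fg):
    """Stack the three stages: background -> parent (shape) -> child (texture)."""
    parent_img = composite(parent_mask, parent_fg, background)
    return composite(child_mask, child_fg, parent_img)

# Usage: a 3-pixel toy image; the object covers pixels 1-2, the texture only pixel 2.
img = finegan_compose(
    background=[0.2, 0.2, 0.2],
    parent_mask=[0.0, 1.0, 1.0], parent_fg=[0.9, 0.9, 0.9],
    child_mask=[0.0, 0.0, 1.0], child_fg=[0.5, 0.5, 0.5],
)
print(img)  # [0.2, 0.9, 0.5]
```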

Limitations: FineGAN does not support conditioning on real images; it only supports sampling from latent codes. Therefore, to support image-conditioned generation, additional work is required before using FineGAN to extract the latent codes of the background, object pose, and appearance (Li et al., 2020c).

1.3 MixNMatch

Li et al. developed MixNMatch (Li et al., 2020c; Yuheng-Li, 2020), which is built on top of FineGAN (Singh et al., 2019) and allows not only sampled latent codes but also real images to be used for image generation. Previous fine-grained GAN architectures such as MUNIT (Huang et al., 2018), FusionGAN (Joo et al., 2018), and other disentangling techniques (Lee et al., 2018; Lorenz et al., 2019; Xiao et al., 2019) focus on only two factors: appearance and pose. In contrast, MixNMatch simultaneously disentangles four factors: background, object pose, object shape, and object texture, with minimal supervision. Unlike other approaches that require strong supervision annotations, such as key points, pose, or masks (Peng et al., 2017; Balakrishnan et al., 2018; Ma et al., 2018b; Esser et al., 2018), MixNMatch uses only bounding box annotations to model the backgrounds, as all training images contain an object of interest. Once the background generator model is trained, no bounding boxes are needed for image generation.

The MixNMatch training process consists of two stages. (1) In the first stage, the “code mode,” MixNMatch takes up to four images and encodes them into four latent codes to generate realistic images with high accuracy. However, the capacity of the shape latent code is too small to handle unique 3D shape variations, such as boxes, STRs, and trolleys, and it limits the generation of pixel-level shape and pose details; these are handled in the second stage. (2) In the second stage, the “feature mode,” MixNMatch maps the image to a higher-dimensional feature space to preserve spatially aligned shape and pose details. Then, the three-stage FineGAN pipeline is executed for conditional MixNMatch generation. In Fig. 15, we can see the combination of the KLT box texture, ground texture, and ground color features in the generated output.
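The mixing in “code mode” can be sketched as taking each of the four factor codes from the image assigned to that factor. Here `encode` is a hypothetical stand-in for MixNMatch’s trained encoders, returning one latent code per factor; the mixed codes would then be passed to the FineGAN-style generator, which is not shown.

```python
from typing import Callable, Dict, List

Codes = Dict[str, List[float]]
FACTORS = ("background", "pose", "shape", "texture")

def mix_codes(encode: Callable[[object], Codes], images_by_factor: Dict[str, object]) -> Codes:
    """Take each factor's latent code from the image assigned to that factor.
    The same image may be assigned to several factors (up to four images in total)."""
    return {f: encode(images_by_factor[f])[f] for f in FACTORS}

def toy_encode(image_id) -> Codes:
    """Hypothetical encoder: each factor's code just records its source image."""
    return {f: [float(image_id)] for f in FACTORS}

# Usage: background from image 1, pose from 2, shape from 3, texture from 4.
mixed = mix_codes(toy_encode, {"background": 1, "pose": 2, "shape": 3, "texture": 4})
print(mixed)  # {'background': [1.0], 'pose': [2.0], 'shape': [3.0], 'texture': [4.0]}
```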

Appendix F: GAN SOTA Datasets

In this section, we review the datasets mentioned in Tables 1, 2, and 3, with a brief description and their download links (Tables 6, 7, 8, and 9).

Appendix G: Style Transfer Motivation

Style transfer (Gatys et al., 2015b; Jing et al., 2019) is one of the earliest and best-known I2I translation tasks, used to automate pastiche (Dumoulin et al., 2016). Style transfer involves three types of images: two inputs and one output (Chen & Jia, 2021; Dumoulin et al., 2016):

1. Content image: Style transfer preserves the high-level semantic features of the content input, which are noted as invariant features, e.g., contrast, brightness, shape, etc.

2. Style image: Style transfer extracts style features from the second input image, such as texture, contrast (Ulyanov et al., 2016b), color, etc.

3. Generated stylized image: Style transfer combines the extracted style and content features into one single output.
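The split between content and style features can be made concrete with the losses of Gatys et al. (2015b): content is compared on the feature maps themselves, while style is compared on Gram matrices (channel correlations), which discard spatial layout. The sketch assumes the features are already extracted (in practice from a pretrained CNN) and given as lists of channels, each a flat list of spatial values; loss weights are omitted.

```python
def gram_matrix(features):
    """features[c][p]: channel c at position p. G[i][j] = sum_p F_i[p] * F_j[p] / P."""
    n_pos = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n_pos for fj in features] for fi in features]

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def content_loss(gen_feats, content_feats):
    """Compare feature maps directly: sensitive to spatial arrangement."""
    return sum(mse(g, c) for g, c in zip(gen_feats, content_feats)) / len(gen_feats)

def style_loss(gen_feats, style_feats):
    """Compare Gram matrices: insensitive to where textures appear."""
    gg, gs = gram_matrix(gen_feats), gram_matrix(style_feats)
    return sum(mse(r1, r2) for r1, r2 in zip(gg, gs)) / len(gg)

# Usage: spatially reversing the features keeps the style but changes the content.
feats = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
reversed_feats = [ch[::-1] for ch in feats]
print(style_loss(reversed_feats, feats), content_loss(reversed_feats, feats) > 0)  # 0.0 True
```

This invariance of the Gram matrix to spatial layout is why a style image’s textures tend to be spread over the whole output.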

Applying GANs as a backbone for I2I models is the most effective strategy (Chen & Jia, 2021). This section presents various techniques for GAN-based domain transfer and adaptive industrial applications that are essential and common to different fields. In I2I translation applications (Pang et al., 2021), e.g., semantic image synthesis (Park et al., 2019; Zhu et al., 2020; Tang et al., 2020), image segmentation (Guo et al., 2020; Li et al., 2020b), style transfer (Yi et al., 2017; Alami Mejjati et al., 2018), image inpainting (Pathak et al., 2016; Song et al., 2018; Zhao et al., 2020a), image deblurring (Zhang et al., 2020a, 2019a; Kupyn et al., 2019), image denoising (Kim et al., 2019a; Tian et al., 2020; Tran et al., 2020), image colorization (Zhang et al., 2017c; Suárez et al., 2017; He et al., 2018), super-resolution (Ledig et al., 2017; Zhang et al., 2019c; Yuan et al., 2018), and domain adaptation (Cao et al., 2018; Murez et al., 2018), the generative model aims to generate images that follow the target domain distribution (Chen & Jia, 2021; Pang et al., 2021). We ran the arbitrary style transfer methods of Nakano (2018) to apply styles of different patterns, drawings, and modalities, as well as the original image itself, to an image of stillages. As a result, the style is applied harshly over the entire input image: it overrides the original colors and paints the whole image with one or very few textures, as shown in Fig. 34 below.

Style transfer experiments based on Nakano (2018): (a) Style image, (b) Stylized output

Researchers have focused on increasing the number of styles supported by a single network, combining arbitrary styles through interpolation (Dumoulin et al., 2016; Ghiasi et al., 2017; Nakano, 2018), preserving the original images’ colors and luminance beyond the style and textures (Gatys et al., 2016a), replacing the per-pixel loss function with a perceptual loss based on extracted high-level features (Johnson et al., 2016), maintaining photo-realistic style transfer so that the generated image remains as realistic as the content image (Li et al., 2019; Park et al., 2020b), and applying instance-level style transfer (Castillo et al., 2017), since natural images contain a variety of distinct textures (Luan et al., 2017). Yet, despite its successful contribution to artistic and painterly style transfer (Pang et al., 2021), current approaches cannot stylize images into industrial image modalities without information loss. For instance, when a depth image is applied as the style, the generated output is a sharpened grayscale image that lacks depth information. Additionally, a segmentation-based style transfers the segmentation colors to the whole content image.
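Combining styles through interpolation can be illustrated with conditional instance normalization (Dumoulin et al., 2016), where each style is a set of per-channel scale and bias parameters and blending two styles is a convex combination of those parameters. This sketch assumes the per-style parameters are given directly and that content features are lists of channels; it does not reproduce the arbitrary style networks of Ghiasi et al. (2017) or Nakano (2018).

```python
import math

def normalize(channel, eps=1e-8):
    """Instance normalization of one channel: zero mean, unit variance."""
    n = len(channel)
    mu = sum(channel) / n
    sigma = math.sqrt(sum((v - mu) ** 2 for v in channel) / n + eps)
    return [(v - mu) / sigma for v in channel]

def interpolate_styles(style_a, style_b, alpha):
    """Convex combination of two styles' per-channel (scale, bias) pairs.
    alpha = 0 returns style_a, alpha = 1 returns style_b."""
    return [((1 - alpha) * sa + alpha * sb, (1 - alpha) * ba + alpha * bb)
            for (sa, ba), (sb, bb) in zip(style_a, style_b)]

def stylize(content, style_params):
    """Apply per-channel scale and bias to the normalized content features."""
    return [[s * v + b for v in normalize(ch)] for ch, (s, b) in zip(content, style_params)]

# Usage: a halfway blend of two hypothetical one-channel styles.
blend = interpolate_styles([(1.0, 0.0)], [(3.0, 2.0)], alpha=0.5)
print(blend)  # [(2.0, 1.0)]
out = stylize([[1.0, 2.0, 3.0]], blend)
```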

Appendix H: SR Briefing