Abstract

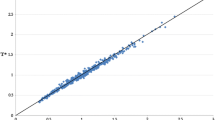

This paper evaluates the European Paradox according to which Europe plays a leading world role in terms of scientific excellence, measured in terms of the number of publications, but lacks the entrepreneurial capacity of the US to transform this excellent performance into innovation, growth, and jobs. Citation distributions for the US, the European Union (EU), and the Rest of the World are evaluated using a pair of high- and low-impact indicators, as well as the mean citation rate (MCR). The dataset consists of 3.6 million articles published in 1998–2002 with a common 5-year citation window. The analysis is carried out at a low aggregation level, namely, the 219 sub-fields identified with the Web of Science categories distinguished by Thomson Scientific. The problems posed by international co-authorship and the multiple assignments of articles to sub-fields are solved following a multiplicative strategy. We find that, although the EU has more publications than the US in 113 out of 219 sub-fields, the US is ahead of the EU in 189 sub-fields in terms of the high-impact indicator, and in 163 sub-fields in terms of the low-impact indicator. Finally, we verify that the US/EU gap is usually greater under the high-impact indicator than under the MCR.

Notes

See inter alia Seglen (1992), Schubert et al. (1987) for evidence concerning scientific articles published in 1981–1985 in 114 sub-fields, Glänzel (2007) for articles published in 1980 in 12 broad fields and 60 middle-sized disciplines, Albarrán and Ruiz-Castillo (2011) for articles published in 1998–2002 in the 22 fields already mentioned, and Albarrán et al. (2011d) for these same articles classified in 219 Web of Science categories and a number of intermediate disciplines and broad scientific fields according to three aggregation schemes.

Economists will surely recognize that the key to this approach is the identification of a citation distribution with an income distribution. Once this step is taken, the measurement of low-impact, which starts with the definition of low-citation papers as those with citations below the CCL, coincides with the measurement of economic poverty. In turn, once low-impact has been identified with economic poverty, it is equally natural to identify the measurement of high-impact with the measurement of a certain notion of economic affluence.
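
The poverty analogy can be made concrete with a minimal sketch. It assumes that the low-impact indicator takes the form of the Foster, Greer, and Thorbecke (1984) poverty family applied to citation counts, and that the high-impact indicator mirrors it above the CCL. The function names, the parameter values, and the toy citation counts are illustrative assumptions, not taken from the paper.

```python
from typing import Sequence


def low_impact(citations: Sequence[float], ccl: float, alpha: float = 2.0) -> float:
    """FGT-style low-impact index (assumed form).

    Averages, over ALL papers, the normalized shortfall ((ccl - c) / ccl) ** alpha
    of each paper whose citation count c lies strictly below the CCL.
    """
    n = len(citations)
    return sum(((ccl - c) / ccl) ** alpha for c in citations if c < ccl) / n


def high_impact(citations: Sequence[float], ccl: float, beta: float = 2.0) -> float:
    """Mirror-image high-impact index (assumed form).

    Averages, over ALL papers, the normalized surplus ((c - ccl) / ccl) ** beta
    of each paper whose citation count c lies strictly above the CCL.
    """
    n = len(citations)
    return sum(((c - ccl) / ccl) ** beta for c in citations if c > ccl) / n


# Hypothetical toy distribution: four papers and a CCL of 10 citations.
toy_citations = [0, 5, 10, 20]
toy_ccl = 10.0

low = low_impact(toy_citations, toy_ccl)
high = high_impact(toy_citations, toy_ccl)
share_below = low_impact(toy_citations, toy_ccl, alpha=0.0)
```

With the sensitivity parameter set to 0, each function reduces to the share of papers below (or above) the CCL. Larger values give more weight to papers far from the CCL.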

This is part of an aggregation scheme built in Albarrán et al. (2011d), which borrows from the schemes recommended by Tijssen and van Leeuwen (2003) and Glänzel and Schubert (2003) with the aim of maximizing the possibility that a power law represents the upper tail of each of the corresponding citation distributions. It is not claimed that this scheme provides an accurate representation of the structure of science. Rather, it is a convenient simplification for presenting information at the sub-field level in this paper.

It should be noted that, as far as low-impact is concerned, the EU situation is more favorable: its contribution to world levels is below its publication share in 137 out of 219 sub-fields. Furthermore, the US/EU low-impact gap reveals a weaker US dominance. It suffices to say that the EU is now ahead in 24 out of 73 Other Natural Sciences, and in a total of 56 out of 219 sub-fields. Moreover, when the US is ahead, the gap is small most of the time. For the details, see Herranz and Ruiz-Castillo (2011).

References

Aksnes, D., Schneider, J., & Gunnarsson, M. (2012). Ranking national research systems by citation indicators. A comparative analysis using whole and fractionalised counting methods. Journal of Informetrics, 6, 36–43.

Albarrán, P., Crespo, J., Ortuño, I., & Ruiz-Castillo, J. (2010). A comparison of the scientific performance of the U.S. and Europe at the turn of the 21st century. Scientometrics, 85, 329–344.

Albarrán, P., Crespo, J., Ortuño, I., & Ruiz-Castillo, J. (2011a). The skewness of science in 219 sub-fields and a number of aggregates. Scientometrics, 88, 385–397.

Albarrán, P., Ortuño, I., & Ruiz-Castillo, J. (2011b). The measurement of low- and high-impact in citation distributions: technical results. Journal of Informetrics, 5, 48–63.

Albarrán, P., Ortuño, I., & Ruiz-Castillo, J. (2011c). High- and low-impact citation measures: empirical applications. Journal of Informetrics, 5, 122–145.

Albarrán, P., Ortuño, I., & Ruiz-Castillo, J. (2011d). Average-based versus high- and low-impact indicators for the evaluation of citation distributions. Research Evaluation, 20, 325–339.

Albarrán, P., & Ruiz-Castillo, J. (2011). References made and citations received by scientific articles. Journal of the American Society for Information Science and Technology, 62, 40–49.

Anderson, J., Collins, P., Irvine, J., Isard, P., Martin, B., Narin, F., et al. (1988). On-line approaches to measuring national scientific output: a cautionary tale. Science and Public Policy, 15, 153–161.

Bouyssou, D., & Marchant, T. (2011). Bibliometric rankings of journals based on impact factors: an axiomatic approach. Journal of Informetrics, 5, 75–86.

Delanghe, H., Sloan, B., & Muldur, U. (2011). European research policy and bibliometric indicators, 1990–2005. Scientometrics, 87, 389–398.

Dosi, G., Llerena, P., & Sylos Labini, M. (2006). The relationship between science, technologies, and their industrial exploitation: an illustration through the myths and realities of the so-called ‘European Paradox’. Research Policy, 35, 1450–1464.

Dosi, G., Llerena, P., & Sylos Labini, M. (2009). “Does the ‘European Paradox’ still hold? Did it ever?” In: H. Delanghe, B. Sloan, U. Muldur (Eds.), European science and technology policy: towards integration or fragmentation? (pp. 1450–1464). Cheltenham: Edward Elgar.

Foster, J. E., Greer, J., & Thorbecke, E. (1984). A class of decomposable poverty measures. Econometrica, 52, 761–766.

Glänzel, W. (2007). Characteristic scores and scales: a bibliometric analysis of subject characteristics based on long-term citation observation. Journal of Informetrics, 1, 92–102.

Glänzel, W. (2010). “The application of characteristics scores and scales to the evaluation and ranking of scientific journals”, forthcoming in proceedings of INFO 2010 (pp. 1–13). Cuba: Havana.

Glänzel, W., & Schubert, A. (2003). A new classification scheme of science fields and subfields designed for scientometric evaluation purposes. Scientometrics, 56, 357–367.

Herranz, N., & Ruiz-Castillo, J. (2011). “The end of the ‘European Paradox’”, working paper 11–27, Universidad Carlos III. http://hdl.handle.net/10016/12195.

Herranz, N., & Ruiz-Castillo, J. (2012a). “Multiplicative and fractional strategies when journals are assigned to several sub-fields”. Journal of the American Society for Information Science and Technology. doi:10.1002/asi.22629 (in press).

Herranz, N., & Ruiz-Castillo, J. (2012b). “Sub-field normalization procedures in the multiplicative case: high- and low-impact citation indicators”. Research Evaluation. doi:10.1093/reeval/rvs006 (in press).

King, D. (2004). The scientific impact of nations. Nature, 430, 311–316.

May, R. (1997). The scientific wealth of nations. Science, 275, 793–796.

National Science Board. (2010). Science and engineering indicators 2010, appendix tables. Arlington: National Science Foundation.

Ravallion, M., & Wagstaff, A. (2011). On measuring scholarly influence by citations. Scientometrics, 88, 321–337.

Schubert, A., Glänzel, W., & Braun, T. (1987). A new methodology for ranking scientific institutions. Scientometrics, 12, 267–292.

Seglen, P. (1992). The skewness of science. Journal of the American Society for Information Science, 43, 628–638.

Tijssen, J. W., & van Leeuwen, T. (2003). “Bibliometric analysis of world science”, extended technical annex to chapter 5 of the third European report on science and technology indicators. Luxembourg: Directorate-General for Research Office for Official Publications of the European Community.

Acknowledgments

The authors acknowledge financial support from the Santander Universities Global Division of Banco Santander. Ruiz-Castillo also acknowledges financial help from the Spanish MEC through grant SEJ2007-67436. This paper is part of the SCIFI-GLOW Collaborative Project supported by the European Commission’s Seventh Research Framework Programme, Contract number SSH7-CT-2008-217436.

About this article

Cite this article

Herranz, N., Ruiz-Castillo, J. The end of the “European Paradox”. Scientometrics 95, 453–464 (2013). https://doi.org/10.1007/s11192-012-0865-8

DOI: https://doi.org/10.1007/s11192-012-0865-8