Abstract

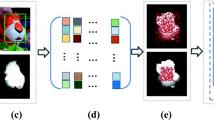

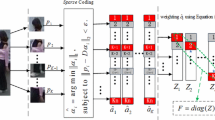

Complex changes in a target and its surroundings introduce several tracking challenges, such as occlusion and deformation. Several of these challenges often coexist in a single video, which is why tracking remains far from solved. Existing trackers handle coexisting challenges with one common model for all components of the target. However, different components often undergo different challenges at the same time: some are deformed while others are occluded. A common model cannot adapt to these challenges simultaneously, so an effective approach is to deal with them separately. This paper proposes a new robust tracker that separately tracks and identifies multi-scale patches of the target to cope with coexisting challenges. The approach has three aspects. First, we define a new basic tracker by introducing the Gaussian mixture model into Kernelized Correlation Filters (KCF). Because KCF is very sensitive to similar surroundings, we construct a regularization term and a loss function via the Gaussian mixture model to optimize the classifier formed by KCF. Second, we define a new appearance representation model of the target based on multi-scale patches. To deal with the different variations of the patches, we construct and update their appearance representations separately. Third, given the tracking result of each patch computed by our basic tracker, we use structure information and Hough voting to locate the target. Our method then improves accuracy by rejecting patches whose tracking has failed. Extensive experiments were conducted on the Tracking Benchmark, and the quantitative and qualitative evaluations show that the proposed tracker performs better than most existing trackers.
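The third step, fusing per-patch results by voting and rejecting failed patches, can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `vote_target_center`, the coordinate-wise median used as the consensus, and the `reject_radius` threshold are all assumptions chosen for illustration, and the paper's actual Hough vote and structure model may differ.

```python
from statistics import median


def vote_target_center(patch_positions, patch_offsets, reject_radius=10.0):
    """Fuse per-patch tracking results into a single target center.

    patch_positions: list of (x, y) patch centers reported by a per-patch tracker.
    patch_offsets:   list of (dx, dy) offsets from each patch center to the target
                     center, recorded when the patches were initialised.
    reject_radius:   hypothetical threshold in pixels; a vote farther than this
                     from the consensus is treated as a failed patch.
    Returns (center, kept_indices).
    """
    if not patch_positions or len(patch_positions) != len(patch_offsets):
        raise ValueError("need one offset per patch and at least one patch")

    # Each patch casts one vote for where the target center should be.
    votes = [(px + dx, py + dy)
             for (px, py), (dx, dy) in zip(patch_positions, patch_offsets)]

    # Robust consensus: the coordinate-wise median is barely affected by a
    # minority of drifting patches.
    cx = median(v[0] for v in votes)
    cy = median(v[1] for v in votes)

    # Reject votes that disagree with the consensus (assumed failure test).
    kept = [i for i, (vx, vy) in enumerate(votes)
            if ((vx - cx) ** 2 + (vy - cy) ** 2) ** 0.5 <= reject_radius]
    if not kept:
        return (float(cx), float(cy)), kept

    # Refine the center using only the surviving patches.
    fx = sum(votes[i][0] for i in kept) / len(kept)
    fy = sum(votes[i][1] for i in kept) / len(kept)
    return (fx, fy), kept


if __name__ == "__main__":
    positions = [(10, 10), (30, 10), (10, 30), (80, 80)]  # last patch has drifted
    offsets = [(10, 10), (-10, 10), (10, -10), (-10, -10)]
    print(vote_target_center(positions, offsets))
```

In this toy input three patches agree on the same center and the fourth votes far away, so the fourth is dropped before the final average. A tracker following the abstract would presumably also re-initialise or stop updating the rejected patch, which this sketch leaves out.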

References

Bibi A, Mueller M, Ghanem B (2016) Target response adaptation for correlation filter tracking. Proc 14th Eur Conf Comput Vision. Amsterdam: 419–433

Bolme DS, Beveridge JR, Draper BA, Lui YM (2010) Visual object tracking using adaptive correlation filters. Proc 23rd IEEE Conf Comput Vision Pattern Recogn (CVPR). San Francisco: 2544–2550

Chen D, Yuan Z, Wu Y, Zhang G, Zheng N (2013) Constructing adaptive complex cells for robust visual tracking. Proc 19th Int Conf Comput Vision. Sydney:1113–1120

Comaniciu D, Ramesh V, Meer P (2003) Kernel-based object tracking. IEEE Trans Pattern Anal Mach Intell 25(5):564–575

Cui Z, Xiao S, Feng J, Yan S (2016) Recurrently target-attending tracking. Proc 29th IEEE Conf Comput Vision Pattern Recogn (CVPR). Las Vegas: 1449–1458

Danelljan M, Hager G, Shahbaz Khan F, Felsberg M (2015) Learning spatially regularized correlation filters for visual tracking. Proc 21st Int Conf Comput Vision (ICCV). Santiago: 4310–4318

Fan H, Ling H (2017) SANet: structure-aware network for visual tracking. Proc 30th IEEE Conf Comput Vision Pattern Recogn (CVPR), Hawaii: 2217–2224

Godec M, Roth PM, Bischof H (2013) Hough-based tracking of non-rigid objects. Comput Vis Image Underst 117(10):1245–1256

Hamed KG, Ashton F, Simon L (2017) Learning background-aware correlation filters for visual tracking. Proc 22nd IEEE Int Conf Comput Vision (ICCV), Venice: 1144–1152

Hare S, Saffari A, Torr PHS (2012) Efficient online structured output learning for key point-based object tracking. Proc 25th IEEE Conf Comput Vision Pattern Recogn. Providence: 1894–1901

Hare S, Saffari A, Torr PHS (2016) Struck: structured output tracking with kernels. IEEE Trans Pattern Anal Mach Intell 38(10):2096–2109

He S, Yang QX, Lau R, Wang J, Yang MH (2013) Visual tracking via locality sensitive histograms. Proc 26th IEEE Conf Comput Vision Pattern Recogn (CVPR). Portland: 2427–2434

Henriques JF, Caseiro R, Martins P, Batista J (2012) Exploiting the circulant structure of tracking-by-detection with kernels. Proc 12th Eur Conf Comput Vision. Florence: 702–715

Henriques JF, Caseiro R, Martins P, Batista J (2015) High-speed tracking with kernelized correlation filters. IEEE Trans Pattern Anal Mach Intell 37(3):583–596

Hu Z, Xie R, Wang M, Sun Z (2017) Midlevel cues mean shift visual tracking algorithm based on target-background saliency confidence map. Multimed Tools Appl 76:21265–21280

Jack V, Luca BF, Joao FH, Andrea V, Philip HST (2017) End-to-end representation learning for correlation filters based tracking. Proc 30th IEEE Conf Comput Vision Pattern Recogn (CVPR), Hawaii: 5000–5008

Jia X, Lu H, Yang MH (2012) Visual tracking via adaptive structural local sparse appearance model. Proc 25th IEEE Conf Comput Vision Pattern Recogn (CVPR). Providence: 1822–1829

Jongwon C, Hyung JC, Sangdoo Y, Tobias F (2017) Attentional correlation filter network for adaptive visual tracking. Proc 30th IEEE Conf Comput Vision Pattern Recogn (CVPR), Hawaii: 4828–4837

Kwon J, Lee KM (2013) Highly nonrigid object tracking via patch-based dynamic appearance modeling. IEEE Trans Pattern Anal Mach Intell 35(10):2427–2441

Li Y, Zhu J, Hoi SCH (2015) Reliable patch trackers: robust visual tracking by exploiting reliable patches. Proc 29th IEEE Conf Comput Vision Pattern Recogn. Boston:353–361

Liao L (2017) X, Zhang C, toward situation awareness: a survey on adaptive learning for model-free tracking. Multimed Tools Appl 76:21073–21115

Liu Y, Cui J, Zhao H, Zha H (2012) Fusion of low-and high-dimensional approaches by trackers sampling for generic human motion tracking. Proc 21st Int Conf Pattern Recogn (ICPR), Tsukuba, Japan: 898–901

Liu Y, Nie L, Han L, Zhang L, Rosenblum DS (2015) Action2activity: Recognizing complex activities from sensor data. Proc 24th Int Conf Artif Intell (IJCAI), Buenos Aires, Argentina: 1617–1623

Liu Y, Nie L, Liu L, Rosenblum DS (2016) From action to activity: Sensor-based activity recognition. Neurocomputing 181(12):108–115

Liu S, Zhang T, Cao X, Xu C (2016) Structural correlation filter for robust visual tracking. Proc 29th IEEE Conf Comput Vision Pattern Recogn (CVPR). Las Vegas: 4312–4320

Liu Y, Zhang L, Nie L, Yan Y, Rosenblum DS (2016) Fortune teller: predicting your career path. Proc 30th AAAI Conf Artif Intell (AAAI), Phoenix, Arizona: 201–207

Lowe DG (2004) Distinctive image features from scale-invariant keypoints. Int J Comput Vis 60(2):91–110

Ma C, Huang JB, Yang X, Yang MH (2015) Hierarchical convolutional features for visual tracking. Proc 21st Int Conf Comput Vision (ICCV). Santiago: 3074–3082

Martin D, Goutam B, Fahad K, Michael F (2017) ECO: efficient convolution operators for tracking. Proc 30th IEEE Conf Comput Vision Pattern Recogn (CVPR), Hawaii: 6931–6939

Mohanapriya D, Mahesh K (2017) A novel foreground region analysis using NCP-DBP texture pattern for robust visual tracking. Multimed Tools Appl 76:25731–25748

Nam H, Han B (2016) Learning multi-domain convolutional neural networks for visual tracking. Proc 29th IEEE Conf Comput Vision Pattern Recogn (CVPR). Las Vegas: 4293–4302

Ning J, Yang J, Jiang S, Zhang L, Yang MH (2016) Object tracking via dual linear structured SVM and explicit feature map. Proc 29th IEEE Conf Comput Vision Pattern Recogn. Las Vegas: 4266–4274

Pan Z, Liu S, Fu W (2017) A review of visual moving target tracking. Multimed Tools Appl 76:16989–17018

Quan W, Liu Z, Chen JX, Liang D (2017) Adaptive relay detection using primary and auxiliary detectors for tracking. Multimed Tools Appl 76:24299–24313

Smeulders AWM, Chu DM, Calderara S, Dehghan A, Shah M (2014) Visual tracking: an experimental survey. IEEE Trans Pattern Anal Mach Intell 36(7):1442–1468

Wang L, Ouyang W, Wang X, Lu H (2016) STCT: sequentially training convolutional networks for visual tracking. Proc 29th IEEE Conf Comput Vision Pattern Recogn (CVPR). Las Vegas: 1373–1381

Wang Z, Wang H, Tan J, Chen P, Xie C (2017) Robust object tracking via multi-scale patch based sparse coding histogram. Multimed Tools Appl 76:12181–12203

Wang M, Liu Y, Huang Z (2017) Large margin object tracking with circulant feature maps. Proc 30th IEEE Conf Comput Vision Pattern Recogn (CVPR), Hawaii: 4800–4808

Wang F, Li X, Lu M (2017) Adaptive Hamiltonian MCMC sampling for robust visual tracking. Multimed Tools Appl 76:13087–13106

Wu Y, Lim J, Yang MH (2013) Online object tracking: a benchmark. Proc 26th IEEE Conf Comput Vision Pattern Recogn (CVPR). Portland: 2411–2418

Xu Y, Cui J, Zhao H, Zha H (2013) Tracking generic human motion via fusion of low-and high-dimensional approaches. IEEE Trans Syst Man Cybernet: Syst 43(4):996–1002

Yang F, Lu H, Yang MH (2014) Robust superpixel tracking. IEEE Trans Image Process 23(4):1639–1651

Yun S, Choi J, Yoo Y, Yun K, Choi Y (2017) Action-decision networks for visual tracking with deep reinforcement learning. Proc 30th IEEE Conf Comput Vision Pattern Recogn (CVPR), Hawaii: 1349–1358

Zhang L, Maaten L (2014) Preserving structure in model-free tracking. IEEE Trans Pattern Anal Mach Intell 36(4):756–769

Zhong W, Lu H, Yang MH (2012) Robust object tracking via sparsity-based collaborative model. Proc 25th IEEE Conf Comput Vision Pattern Recogn (CVPR). Providence: 1838–1845

Acknowledgements

We are grateful to all the reviewers for their valuable suggestions. We also greatly appreciate Professor Meihua Wang for her useful discussions and extensive help in improving the manuscript. This work was supported in part by the National Natural Science Fund of China (61772209, 61472335), the Science and Technology Planning Project of Guangdong Province (2016A050502050, 2014A050503057), the National Key R&D Program of China (2017YFB0503500) and the Zhejiang Provincial Natural Science Foundation of China (LY17F020009).

Appendices

Appendix 1: The experiments on 50 videos of TB50 from Tracking Benchmark by frame-to-frame comparison

Eight trackers are used for comparison, including the recent well-known trackers DLSSVM (CVPR'16) [29], KCF (PAMI'15) [27], RPT (CVPR'15) [32], LSHT (CVPR'13) [39] and LSST (CVPR'13) [5], and the top three ranked trackers from the Tracking Benchmark [13], namely STRUCK (PAMI'16) [9], ALSA (CVPR'12) [3] and SCM (CVPR'12) [12]. We select 6 trackers (RPT, DLSSVM, KCF, LSHT, LSST) to demonstrate the results, because they perform better among the 8 compared trackers and were proposed in recent years. The references for these trackers are listed at the end of this appendix.

The references used in the above comparisons:

[27] Henriques J F, Caseiro R, Martins P, Batista J. High-speed tracking with kernelized correlation filters. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2015, 37(3): 583–596.

[39] He S, Yang Q X, Lau R, Wang J, Yang M H. Visual tracking via locality sensitive histograms, Proc of the 26th IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Portland, 2013: 2427–2434.

[13] Wu Y, Lim J, Yang M H. Online object tracking: A benchmark, Proc of the 26th IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Portland, 2013: 2411–2418.

[29] Ning J, Yang J, Jiang S, Zhang L, Yang M H. Object tracking via dual linear structured SVM and explicit feature map, Proc of the 29th IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, 2016: 4266–4274.

[5] Hare S, Saffari A, Torr P H S. Efficient online structured output learning for key point-based object tracking, Proc of the 25th IEEE Conference on Computer Vision and Pattern Recognition. Providence, 2012: 1894–1901.

[32] Li Y, Zhu J, Hoi S C H. Reliable patch trackers: robust visual tracking by exploiting reliable patches, Proc of the 29th IEEE Conference on Computer Vision and Pattern Recognition. Boston, 2015:353–361.

[9] Hare S, Saffari A, Torr P H S. Struck: Structured output tracking with kernels, IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 38(10): 2096–2109.

[3] Jia X, Lu H, Yang M H. Visual tracking via adaptive structural local sparse appearance model, Proc of the 25th IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Providence, 2012:1822–1829.

[12] Zhong W, Lu H, Yang M H. Robust object tracking via sparsity-based collaborative model, Proc of the 25th IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Providence, 2012: 1838–1845.

Appendix 2: The Evaluations on 50 videos of TB50 from Tracking Benchmark

Eight trackers are used for comparison, including the recent well-known trackers DLSSVM (CVPR'16) [29], KCF (PAMI'15) [27], RPT (CVPR'15) [32], LSHT (CVPR'13) [39] and LSST (CVPR'13) [5], and the top three ranked trackers from the Tracking Benchmark [13], namely STRUCK (PAMI'16) [9], ALSA (CVPR'12) [3] and SCM (CVPR'12) [12]. We select 6 trackers (RPT, DLSSVM, KCF, LSHT, LSST) to demonstrate the results, because they perform better among the 8 compared trackers and were proposed in recent years. The references for these trackers are listed at the end of this appendix.

The precision plots of 11 tracking challenges on TB50:

The success plots of 11 tracking challenges on TB50:
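For readers unfamiliar with the two plot types, the sketch below shows how precision and success scores are commonly computed under the Tracking Benchmark protocol [13]: precision is the fraction of frames whose center location error is within a pixel threshold, and success is the fraction of frames whose bounding-box overlap exceeds an overlap threshold, summarised by the area under the curve. The function names, the `(x, y, w, h)` box layout, the default 20-pixel threshold and the 21 evenly spaced overlap thresholds are assumptions made for this illustration, not details taken from this article.

```python
def center_error(box_a, box_b):
    """Euclidean distance between the centers of two (x, y, w, h) boxes."""
    ax, ay = box_a[0] + box_a[2] / 2.0, box_a[1] + box_a[3] / 2.0
    bx, by = box_b[0] + box_b[2] / 2.0, box_b[1] + box_b[3] / 2.0
    return ((ax - bx) ** 2 + (ay - by) ** 2) ** 0.5


def iou(box_a, box_b):
    """Intersection over union of two (x, y, w, h) boxes."""
    left = max(box_a[0], box_b[0])
    top = max(box_a[1], box_b[1])
    right = min(box_a[0] + box_a[2], box_b[0] + box_b[2])
    bottom = min(box_a[1] + box_a[3], box_b[1] + box_b[3])
    inter = max(0.0, right - left) * max(0.0, bottom - top)
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union if union > 0 else 0.0


def precision_at(pred, gt, threshold=20.0):
    """Fraction of frames whose center error is at most `threshold` pixels."""
    hits = sum(1 for p, g in zip(pred, gt) if center_error(p, g) <= threshold)
    return hits / len(gt)


def success_at(pred, gt, threshold=0.5):
    """Fraction of frames whose overlap is larger than `threshold`."""
    hits = sum(1 for p, g in zip(pred, gt) if iou(p, g) > threshold)
    return hits / len(gt)


def success_auc(pred, gt, steps=21):
    """Mean success rate over evenly spaced overlap thresholds in [0, 1]."""
    thresholds = [i / (steps - 1) for i in range(steps)]
    return sum(success_at(pred, gt, t) for t in thresholds) / steps


if __name__ == "__main__":
    predicted = [(0, 0, 10, 10), (5, 5, 10, 10)]
    ground_truth = [(0, 0, 10, 10), (0, 0, 10, 10)]
    print(precision_at(predicted, ground_truth))
    print(success_at(predicted, ground_truth))
    print(success_auc(predicted, ground_truth))
```

Sweeping `threshold` in `precision_at` and `success_at` over a range of values produces the curves shown in a precision plot and a success plot respectively; per-challenge plots apply the same computation to the subset of videos annotated with that challenge.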

The references used in the above comparisons:

[27] Henriques J F, Caseiro R, Martins P, Batista J. High-speed tracking with kernelized correlation filters. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2015, 37(3): 583–596.

[39] He S, Yang Q X, Lau R, Wang J, Yang M H. Visual tracking via locality sensitive histograms, Proc of the 26th IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Portland, 2013: 2427–2434.

[13] Wu Y, Lim J, Yang M H. Online object tracking: A benchmark, Proc of the 26th IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Portland, 2013: 2411–2418.

[29] Ning J, Yang J, Jiang S, Zhang L, Yang M H. Object tracking via dual linear structured SVM and explicit feature map, Proc of the 29th IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, 2016: 4266–4274.

[5] Hare S, Saffari A, Torr P H S. Efficient online structured output learning for key point-based object tracking, Proc of the 25th IEEE Conference on Computer Vision and Pattern Recognition. Providence, 2012: 1894–1901.

[32] Li Y, Zhu J, Hoi S C H. Reliable patch trackers: robust visual tracking by exploiting reliable patches, Proc of the 29th IEEE Conference on Computer Vision and Pattern Recognition. Boston, 2015:353–361.

[9] Hare S, Saffari A, Torr P H S. Struck: Structured output tracking with kernels, IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 38(10): 2096–2109.

[3] Jia X, Lu H, Yang M H. Visual tracking via adaptive structural local sparse appearance model, Proc of the 25th IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Providence, 2012:1822–1829.

[12] Zhong W, Lu H, Yang M H. Robust object tracking via sparsity-based collaborative model, Proc of the 25th IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Providence, 2012: 1838–1845.

Cite this article

Liang, Y., Li, K., Zhang, J. et al. Robust visual tracking via identifying multi-scale patches. Multimed Tools Appl 78, 14195–14230 (2019). https://doi.org/10.1007/s11042-018-6760-4

DOI: https://doi.org/10.1007/s11042-018-6760-4