Abstract

The unified transform method (UTM) provides a novel approach to the analysis of initial boundary value problems for linear as well as for a particular class of nonlinear partial differential equations called integrable. If the latter equations are formulated in two dimensions (either one space and one time, or two space dimensions), the UTM expresses the solution in terms of a matrix Riemann–Hilbert (RH) problem with explicit dependence on the independent variables. For nonlinear integrable evolution equations, such as the celebrated nonlinear Schrödinger (NLS) equation, the associated jump matrices are computed in terms of the initial conditions and all boundary values. The unknown boundary values are characterized in terms of the initial datum and the given boundary conditions via the analysis of the so-called global relation. In general, this analysis involves the solution of certain nonlinear equations. In certain cases, called linearizable, it is possible to bypass this nonlinear step. In these cases, the UTM solves the given initial boundary value problem with the same level of efficiency as the well-known inverse scattering transform solves the initial value problem on the infinite line. We show here that the initial boundary value problem on a finite interval with x-periodic boundary conditions (which can alternatively be viewed as the initial value problem on a circle) belongs to the linearizable class. Indeed, by employing certain transformations of the associated RH problem and by using the global relation, the relevant jump matrices can be expressed explicitly in terms of the so-called scattering data, which are computed in terms of the initial datum. Details are given for NLS, but similar considerations are valid for other well-known integrable evolution equations, including the Korteweg–de Vries (KdV) and modified KdV equations.


1 Introduction

Two of the most extensively studied classes of solutions of nonlinear partial differential equations of evolution type are (i) solutions with decay at spatial infinity and (ii) solutions which are periodic in space. For integrable evolution equations, the introduction of the Inverse Scattering Transform (IST) about half a century ago had the groundbreaking implication that the initial value problem (IVP) for solutions in the first of these classes could be solved by means of only linear operations [7]. More precisely, this approach expresses the solution of the IVP in terms of the solution of a linear singular integral equation, or, equivalently, in terms of the solution of a Riemann–Hilbert (RH) problem. Since this RH problem is formulated in terms of spectral functions whose definition only involves the given initial data, the solution formula is effective.

For the second of the above classes—the class of spatially periodic solutions—the introduction of the so-called finite-gap integration method in the 1970s had far-reaching implications, see [2]. This approach makes it possible to generate large classes of exact solutions, the so-called finite-gap solutions, by considering rational combinations of theta functions defined on Riemann surfaces with a finite number of branch cuts. The construction of solutions via this approach, in particular of the Korteweg–de Vries (KdV) and the nonlinear Schrödinger (NLS) equations, has a long and illustrious history [9]. The theory combines several branches of mathematics, including the spectral theory of differential operators with periodic coefficients, Riemann surface theory, and algebraic geometry, and has had implications for diverse areas of mathematics [2, 8, 9].

Despite the unquestionable success of the finite-gap approach, two questions naturally present themselves:

- How effective is the finite-gap approach for the solution of the IVP for general periodic initial data? If the initial data are in the finite-gap class, the solution of the IVP can be retrieved by solving a Jacobi inversion problem on a finite-genus Riemann surface. However, if the initial data are not in the class of finite-gap potentials, then a theory of infinite-gap solutions is required. Although some aspects of the approach certainly can be extended to Riemann surfaces of infinite-genus [3, 10, 12], there are several complications associated with solving the Jacobi inversion problem on an infinite-genus surface. In fact, from a computational point of view even finite-gap solutions are not easily accessible [1, 2].

- Why is the finite-gap integration method conceptually so different from the IST formalism? For example, whereas the IST relies on the solution of a RH problem, the algebro-geometric approach calls for the solution of a Jacobi inversion problem. (A Jacobi inversion problem can be regarded as a RH problem on a branched plane [11], but conceptually such an approach appears indirect.)

We propose a new approach for the solution of the space-periodic IVP for an integrable evolution equation. Just like in the implementation of the IST for the problem on the line, our approach expresses the solution of the periodic problem in terms of the solution of a RH problem, and the definition of this RH problem involves only the given initial data. Thus, the presented solution of the periodic problem is conceptually analogous to the solution of the problem on the line.

Let us explain the ideas that led us to the presented approach. Although the space-periodic problem is often viewed as an IVP on the circle, it can also be viewed as an initial boundary value problem (IBVP) on an interval with periodic boundary conditions. In 1997, one of the authors introduced a methodology for the solution of IBVPs for integrable equations [4]. The application of this method, known as the Unified Transform or Fokas Method, to an IBVP typically consists of two steps. The first step is to construct an integral representation of the solution characterized by a RH problem formulated in the complex k-plane, where k denotes the spectral parameter of the associated Lax pair. Since this representation involves, in general, some unknown boundary values, the solution formula is not yet effective. The second step is therefore to characterize the unknown boundary values by analyzing a certain equation called the global relation. In general, the second step involves the solution of a nonlinear problem. However, for certain so-called linearizable boundary conditions, additional symmetries can be used to eliminate the unknown boundary values from the formulation of the RH problem altogether.

It turns out that the boundary conditions corresponding to the space-periodic problem are linearizable. This suggests that it should be possible to use the above philosophy to obtain an effective solution. Although the analysis is quite different from that of other linearizable problems, we have indeed been able to find a RH problem whose formulation only involves the initial data.

The new approach is as effective for the periodic problem as the IST is for the problem on the line in the sense that all ingredients of the RH problem are defined in terms of quantities obtained by solving a linear Volterra integral equation whose kernel is characterized by the initial data. However, the formulation of the RH problem in the periodic case is complicated by the fact that the jump contour is determined by the zeros of an entire function (more precisely, the jump contour involves the spectral gaps known from the finite-gap approach).

Section 2 presents the main result. Section 3 presents details for the example when \(q_0(x) = q_0\) is a constant, which provides an illustration of the effectiveness of the new formalism, as well as a verification of its correctness.

2 The main result

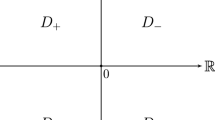

We write \(D_1, \ldots , D_4\) for the four open quadrants of the complex plane, see Figs. 1 and 2. A bar over a function denotes that the Schwarz conjugate is taken, i.e., \(\bar{f}(k) = \overline{f(\bar{k})}\). We define \(\theta \) by

Theorem 2.1

Suppose that q(x, t) is a smooth solution of the NLS equation

which is x-periodic of period \(L > 0\), i.e., \(q(x+L, t) = q(x,t)\). Define a(k) and b(k) in terms of the initial datum

by

where the vector \(\big (\mu ^{(1)}(x,k), \mu ^{(2)}(x,k)\big )\) satisfies the linear Volterra integral equation

Define \(\tilde{\varGamma }(k)\) in terms of a(k) and b(k) by

where \(\varDelta (k)\) is given by

Define the contour \(\tilde{\Sigma }\) by \(\tilde{\Sigma } = {\mathbb {R}}\cup i{\mathbb {R}}\cup \mathcal {C}\), where \(\mathcal {C}\) denotes a set of branch cuts of \(\sqrt{4 - \varDelta ^2}\) consisting of subintervals of \({\mathbb {R}}\) and vertical line segments which connect the zeros of odd order of \(4 - \varDelta ^2\). Orient \(\mathcal {C}\) as shown in Fig. 1 and let \(\tilde{\Sigma }_\star \) denote the contour \(\tilde{\Sigma }\) with all branch points of \(\sqrt{4 - \varDelta ^2}\) and points of self-intersection removed, see [6] for details.

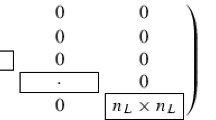

Assume that the function \(\tilde{\varGamma }\) has no poles on the contour \(\tilde{\Sigma }\) and that it has at most finitely many simple poles in \(D_1 \cup D_3\); denote the possible poles in \(D_1\) by \(\{p_j\}_1^{n_1}\) and the possible poles in \(D_3\) by \(\{q_j\}_{1}^{n_2}\). Let \(\tilde{P} = \{p_j, \bar{p}_j\}_1^{n_1} \cup \{q_j, \bar{q}_j\}_1^{n_2}\).

Consider the following RH problem: Find a \(2 \times 2\)-matrix-valued function \(\tilde{m}\) such that

- \(\tilde{m}(x,t,\cdot ) : {\mathbb {C}}{\setminus } (\tilde{\Sigma } \cup \tilde{P}) \rightarrow {\mathbb {C}}^{2 \times 2}\) is analytic.

- The limits of \(\tilde{m}(x,t,k)\) as k approaches \(\tilde{\Sigma }_\star \) from the left \((+)\) and right \((-)\) exist, are continuous on \(\tilde{\Sigma }_\star \), and satisfy

$$\begin{aligned} \tilde{m}_-(x,t,k)&= \tilde{m}_+(x, t, k) \tilde{v}(x, t, k), \qquad k \in \tilde{\Sigma }_\star , \end{aligned}$$(2.8)

where \(\tilde{v}\) is defined by

$$\begin{aligned} \tilde{v} = {\left\{ \begin{array}{ll} \tilde{v}_1 = \begin{pmatrix} \frac{a - \lambda b \bar{\tilde{\varGamma }} - \lambda \tilde{\varGamma } (\bar{a}\bar{\tilde{\varGamma }} - \bar{b})e^{2ikL}}{a- \lambda b \bar{\tilde{\varGamma }}} &{} \quad -\tilde{\varGamma } e^{2ikL} e^{-2 i \theta }\\ \frac{\lambda \bar{\tilde{\varGamma }} e^{2 i \theta }}{(a - \lambda b \bar{\tilde{\varGamma }}) (a + \lambda \bar{b} \tilde{\varGamma } e^{2ikL})} &{} \quad \frac{a}{a + \lambda \bar{b} \tilde{\varGamma } e^{2ikL}} \end{pmatrix}, &{} k \in i{\mathbb {R}}_+ {\setminus } \bar{\mathcal {C}},\\ \tilde{v}_2 = \begin{pmatrix} 1 - \lambda \tilde{\varGamma } \bar{\tilde{\varGamma }} &{}\quad -\frac{(\bar{a} \tilde{\varGamma } e^{2ikL}+b) e^{-2 i \theta }}{\bar{a}+ \lambda b \bar{\tilde{\varGamma }} e^{-2ikL}}\\ \frac{\lambda (a \bar{\tilde{\varGamma }}e^{-2ikL}+\bar{b}) e^{2 i \theta }}{a+ \lambda \bar{b} \tilde{\varGamma } e^{2ikL}}&{}\quad \frac{1}{(a+ \lambda \bar{b} \tilde{\varGamma } e^{2ikL}) (\bar{a} + \lambda b \bar{\tilde{\varGamma }} e^{-2ikL})} \end{pmatrix}, &{} \quad k \in {\mathbb {R}}_+ {\setminus } \bar{\mathcal {C}},\\ \tilde{v}_3 = \begin{pmatrix} \frac{\bar{a} - \lambda \bar{b} \tilde{\varGamma } - \lambda \bar{\tilde{\varGamma }}(a\tilde{\varGamma }- b) e^{-2ikL}}{\bar{a} -\lambda \bar{b} \tilde{\varGamma }}&{}\quad - \frac{\tilde{\varGamma } e^{-2 i \theta }}{(\bar{a} - \lambda \bar{b} \tilde{\varGamma }) (\bar{a} + \lambda b \bar{\tilde{\varGamma }} e^{-2ikL})} \\ \lambda \bar{\tilde{\varGamma }} e^{-2ikL} e^{2 i \theta } &{}\quad \frac{\bar{a}}{\bar{a} + \lambda b \bar{\tilde{\varGamma }} e^{-2ikL}} \end{pmatrix}, &{} k \in i{\mathbb {R}}_- {\setminus } \bar{\mathcal {C}},\\ \tilde{v}_4 = \begin{pmatrix} \frac{1 - \lambda \tilde{\varGamma } \bar{\tilde{\varGamma }}}{(a - \lambda b \bar{\tilde{\varGamma }}) (\bar{a} - \lambda \bar{b} \tilde{\varGamma })} &{}\quad -\frac{(a \tilde{\varGamma } - b)e^{-2 i \theta }}{\bar{a} -\lambda \bar{b} 
\tilde{\varGamma }} \\ \frac{\lambda (\bar{a} \bar{\tilde{\varGamma }} - \bar{b})e^{2 i \theta }}{a - \lambda b \bar{\tilde{\varGamma }}} &{} \quad 1 \end{pmatrix}, &{} k \in {\mathbb {R}}_- {\setminus } \bar{\mathcal {C}},\\ \tilde{v}^{\text {cut}}_{D_1} = \begin{pmatrix} \frac{a + \lambda \bar{b} \tilde{\varGamma }_+ e^{2ikL}}{a + \lambda \bar{b} \tilde{\varGamma }_- e^{2ikL}} &{}\quad (\tilde{\varGamma }_ --\tilde{\varGamma }_+) e^{2ikL} e^{-2i\theta } \\ 0 &{}\quad \frac{a +\lambda \bar{b} \tilde{\varGamma }_- e^{2ikL}}{a + \lambda \bar{b}\tilde{\varGamma }_+ e^{2ikL}} \end{pmatrix}, &{} k \in \mathcal {C} \cap D_1,\\ \tilde{v}^{\text {cut}}_{D_2} = \begin{pmatrix} 1 &{} 0 \\ \frac{\lambda (\bar{\tilde{\varGamma }}_-- \bar{\tilde{\varGamma }}_+)e^{2i\theta }}{(a - \lambda b \bar{\tilde{\varGamma }}_-)(a -\lambda b \bar{\tilde{\varGamma }}_+)} &{} \quad 1 \end{pmatrix}, &{} k \in \mathcal {C} \cap D_2,\\ \tilde{v}^{\text {cut}}_{D_3} =\begin{pmatrix} 1 &{} \quad \frac{(\tilde{\varGamma }_- - \tilde{\varGamma }_+)e^{-2i\theta }}{(\bar{a} - \lambda \bar{b} \tilde{\varGamma }_-)(\bar{a} -\lambda \bar{b} \tilde{\varGamma }_+)} \\ 0 &{}\quad 1 \end{pmatrix}, &{} k \in \mathcal {C} \cap D_3,\\ \tilde{v}^{\text {cut}}_{D_4} = \begin{pmatrix} \frac{\bar{a} + \lambda b \bar{\tilde{\varGamma }}_- e^{-2ikL}}{\bar{a} + \lambda b \bar{\tilde{\varGamma }}_+ e^{-2ikL}} &{} \quad 0 \\ \lambda (\bar{\tilde{\varGamma }}_ --\bar{\tilde{\varGamma }}_+) e^{2i\theta } e^{-2ikL} &{} \quad \frac{\bar{a} + \lambda b \bar{\tilde{\varGamma }}_+ e^{-2ikL}}{\bar{a} + \lambda b \bar{\tilde{\varGamma }}_- e^{-2ikL}} \end{pmatrix}, &{} \quad k \in \mathcal {C} \cap D_4,\\ \tilde{v}_1^{\text {cut}} = \tilde{v}^{\text {cut}}_{D_1} \tilde{v}_{1-}, &{}\quad k \in \mathcal {C} \cap i{\mathbb {R}}_+,\\ \tilde{v}_2^{\text {cut}} = \tilde{v}^{\text {cut}}_{D_1} \tilde{v}_{2-}, &{}\quad k \in \mathcal {C} \cap {\mathbb {R}}_+,\\ \tilde{v}_3^{\text {cut}} = \tilde{v}^{\text {cut}}_{D_3} \tilde{v}_{3-}, 
&{}\quad k \in \mathcal {C} \cap i{\mathbb {R}}_-,\\ \tilde{v}_4^{\text {cut}} = \tilde{v}^{\text {cut}}_{D_3} \tilde{v}_{4-}, &{}\quad k \in \mathcal {C} \cap {\mathbb {R}}_-, \end{array}\right. } \end{aligned}$$(2.9)

and \(\tilde{v}_{j-}\) denotes the boundary values of \(\tilde{v}_j\) as k approaches \(\mathcal {C}\) from the right.

- \(\tilde{m}(x,t,k) = I + O\big (k^{-1}\big )\) as \(k \rightarrow \infty \), \(k \in {\mathbb {C}}{\setminus } \cup _{n\in {\mathbb {Z}}} \mathcal {D}_n\), where \(\mathcal {D}_n\) is the open disk

$$\begin{aligned} \mathcal {D}_n = \bigg \{k \in {\mathbb {C}}\, \bigg | \, \bigg |k - \frac{n\pi }{L}\bigg | < \frac{\pi }{4L}\bigg \}. \end{aligned}$$(2.10)

- \(\tilde{m}(x,t,k) = O(1)\) as \(k \rightarrow \tilde{\Sigma } {\setminus } \tilde{\Sigma }_\star \), \(k \in {\mathbb {C}}{\setminus } \tilde{\Sigma }\).

- At the points \(p_j \in D_1\) and \(\bar{p}_j \in D_4\), \(\tilde{m}\) satisfies, for \(j = 1, \dots , n_1\),

$$\begin{aligned}&\underset{k = p_j}{\text {Res} \,} [\tilde{m}(x,t,k)]_2 = \Big [[\tilde{m}]_1 (a + \lambda \bar{b} \tilde{\varGamma } e^{2ikL})\bar{a} e^{2ikL} e^{-2i\theta } \Big ](x,t,p_j) \, \underset{k=p_j}{\text {Res} \,} \tilde{\varGamma }(k), \end{aligned}$$(2.11a)

$$\begin{aligned}&\underset{k=\bar{p}_j}{\text {Res} \,} [\tilde{m}(x,t,k)]_1 = \Big [\lambda [\tilde{m}]_2 (\bar{a} + \lambda b \bar{\tilde{\varGamma }} e^{-2ikL}) a e^{-2ikL} e^{2i\theta } \Big ](x,t,\bar{p}_j) \, \overline{\underset{k=p_j}{\text {Res} \,} \tilde{\varGamma }(k)}. \end{aligned}$$(2.11b)

- At the points \(q_j \in D_3\) and \(\bar{q}_j \in D_2\), \(\tilde{m}\) satisfies, for \(j = 1, \dots , n_2\),

$$\begin{aligned}&\underset{k=q_j}{\text {Res} \,} [\tilde{m}(x,t,k)]_2 = \bigg [ [\tilde{m}]_1 \frac{a e^{-2i\theta }}{\bar{a} - \lambda \bar{b} \tilde{\varGamma }}\bigg ](x,t,q_j) \, \underset{k=q_j}{\text {Res} \,} \tilde{\varGamma }(k), \end{aligned}$$(2.11c)

$$\begin{aligned}&\underset{k=\bar{q}_j}{\text {Res} \,} [\tilde{m}(x,t,k)]_1 = \bigg [\lambda [\tilde{m}]_2 \frac{\bar{a} e^{2i\theta }}{a - \lambda b \bar{\tilde{\varGamma }}}\bigg ](x,t,\bar{q}_j) \, \overline{\underset{k=q_j}{\text {Res} \,} \tilde{\varGamma }(k)}. \end{aligned}$$(2.11d)

The above RH problem has a unique solution \(\tilde{m}(x,t,k)\) for each \((x,t) \in [0,L] \times [0,\infty )\). The solution q of the NLS Eq. (2.2) can be obtained from \(\tilde{m}\) via the equation

where the limit is taken along any ray \(\{k \in {\mathbb {C}}| \arg k = \phi \}\) where \(\phi \in {\mathbb {R}}{\setminus } \{n\pi /2 \, | \, n \in {\mathbb {Z}}\}\) (i.e., the ray is not contained in \({\mathbb {R}}\cup i{\mathbb {R}}\)).
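The two geometric restrictions in the theorem, namely that the normalization condition is imposed away from the disks \(\mathcal {D}_n\) of (2.10) and that the limit is taken along a ray not contained in \({\mathbb {R}}\cup i{\mathbb {R}}\), are easy to test numerically. The following is a minimal sketch; the function names are hypothetical and serve only as an illustration.

```python
import math


def in_excluded_disk(k: complex, L: float) -> bool:
    """Return True if k lies in one of the open disks D_n of (2.10)."""
    # The centers n*pi/L are real, so the closest center is the one whose
    # index n is nearest to Re(k) * L / pi.
    n = round(k.real * L / math.pi)
    return abs(k - n * math.pi / L) < math.pi / (4 * L)


def ray_is_admissible(phi: float, tol: float = 1e-12) -> bool:
    """Return True if the ray arg k = phi is not contained in R or iR."""
    # The excluded directions are the integer multiples of pi/2.
    ratio = phi / (math.pi / 2)
    return abs(ratio - round(ratio)) > tol
```

Along an admissible ray, a point k with \(|\text {Im}\, k| > \pi /(4L)\) lies outside every disk, so only finitely much of the ray can meet \(\cup _n \mathcal {D}_n\).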

Proof

The NLS Eq. (2.2) has a Lax pair given by

where \(k \in {\mathbb {C}}\) is the spectral parameter, \(\mu (x,t,k)\) is a \(2 \times 2\)-matrix valued function, the matrices Q and \(\tilde{Q}\) are defined in terms of the solution q(x, t) of (2.2) by

and \(\hat{\sigma }_3\mu = [\sigma _3, \mu ]\). Suppose q(x, t) is a smooth solution of (2.2) defined for \((x,t) \in {\mathbb {R}}\times [0,T]\) such that \(q(x+L, t) = q(x,t)\), where \(L > 0\) and \(0< T < \infty \) is an arbitrary fixed final time. Let \(\mu _j(x,t,k)\), \(j = 1,2,3,4\), denote the four solutions of (2.13) which are normalized to be the identity matrix at the points (0, T), (0, 0), (L, 0), and (L, T), respectively, see [5]. The spectral functions a, b, A, B are defined for \(k \in {\mathbb {C}}\) by

where

Note that \(\{\mu _j\}_1^4\), s, and S are entire functions of k. Clearly, S(k) depends on T, whereas s(k) does not. The entries of the matrices s(k) and S(k) are related by the so-called global relation, see [5, Eq. (1.4)]:

where \(c^+(k)\) is an entire function such that

The above functions satisfy the unit determinant relations

As \(k \rightarrow \infty \), the functions a and b satisfy

Using the entries of s and S, we construct the following quantities:

The eigenfunctions \(\mu _j\) are related by

Fig. 2 The four open quadrants \(D_1, \dots , D_4\), the contour \({\mathbb {R}}\cup i{\mathbb {R}}\), and the jump matrices \(J_1, \dots , J_4\) defined in (2.24)

The RH problem for M. It was shown in [5] that the sectionally meromorphic function M(x, t, k) defined by

satisfies

where the contour \({\mathbb {R}}\cup i{\mathbb {R}}\) is oriented as shown in Fig. 2 and

with

The jump matrix J depends on x and t only through the function \(\theta (x,t,k)\) defined in (2.1). The solution q(x, t) of (2.2) can be recovered from M using

where the limit may be taken in any open quadrant.

If the functions \(\alpha (k)\) and d(k) have no zeros, then M is analytic for \(k \in {\mathbb {C}}{\setminus } ({\mathbb {R}}\cup i{\mathbb {R}})\) and it can be characterized as the unique solution of the RH problem (2.22). The jump matrix J depends via the spectral functions on the initial data q(x, 0) as well as on the boundary values q(0, t) and \(q_x(0,t)\). If all these boundary values are known, then the value of q(x, t) at any point (x, t) can be obtained by solving the RH problem (2.22) for M and using (2.25). If the functions \(\alpha (k)\) and d(k) have zeros, then M may have pole singularities and the RH problem has to be supplemented with appropriate residue conditions, see [5, Proposition 2.3].

The RH problem for m. It is possible to formulate a RH problem which involves jump matrices defined in terms of the ratio \(\varGamma = B/A\), as opposed to A and B. Indeed, consider the sectionally meromorphic function m(x, t, k) defined in terms of the eigenfunctions \(\mu _j(x,t,k)\), \(j = 1, \dots , 4\), by

The function m is related to the solution M defined in (2.21) by

where the sectionally meromorphic function H is defined by

with \(H_j\) denoting the restriction of H to \(D_j\), \(j = 1,2,3,4\).

The limits of m(x, t, k) as k approaches \(({\mathbb {R}}\cup i{\mathbb {R}}) {\setminus } \{0\}\) from the left and right satisfy

where the jump matrix v is defined by

Furthermore,

Also, the function m satisfies \(\det m = 1\), as well as the symmetry relations

The RH problem for \(\tilde{m}\). In order to define a RH problem which depends on \(\tilde{\varGamma }\) instead of \(\varGamma \), where \(\tilde{\varGamma }\) is independent of T, we introduce the function g(k) by

where \(g_j\) denotes the restriction of g to \(D_j\), \(j = 1, \dots , 4\). In (2.31), \(\varGamma = B/A\) and \(\tilde{\varGamma }\) is defined by (2.6). We define \(\tilde{m}(x,t,k)\) by

Let \(\mathcal {C}\) be the set of branch cuts of \(\sqrt{4 - \varDelta ^2}\) introduced in Theorem 2.1. The function \(\sqrt{4 - \varDelta ^2}\) is analytic and single-valued on \({\mathbb {C}}{\setminus } \mathcal {C}\); the branch is fixed by the condition that \(\sqrt{4 - \varDelta ^2} = 2\sin (kL)(1 + O(k^{-1}))\) as \(k \in {\mathbb {C}}{\setminus } \cup _{n\in {\mathbb {Z}}} \mathcal {D}_n\) tends to infinity, see [6] for details.

A straightforward computation shows that \(\tilde{m}\) satisfies the jump relation (2.8) on \(({\mathbb {R}}\cup i{\mathbb {R}}) {\setminus } \mathcal {C}\), where \(\tilde{v}\) is given by (2.28) with \(\varGamma \) replaced by \(\tilde{\varGamma }\). However, since the square root \(\sqrt{4 - \varDelta ^2}\) changes sign as k crosses a branch cut, \(\tilde{v}\) is not given by the same expression as v on \(({\mathbb {R}}\cup i{\mathbb {R}}) \cap \mathcal {C}\). Also, if \(\lambda = -1\), then \(\tilde{m}\) may have jumps across the contours \(\mathcal {C} \cap D_j\), \(j = 1, \dots , 4\). Remarkably, all these jumps can be expressed in terms of a and b, yielding (2.9).

The proof of uniqueness of the solution \(\tilde{m}\) of the RH problem of Theorem 2.1 uses the fact that the problem with \(\tilde{P} \ne \emptyset \) can be transformed into a problem with \(\tilde{P} = \emptyset \) and the fact that \(\det \tilde{m}\) is an entire function, see [6].

Fix \((x,t) \in [0,L] \times [0,\infty )\). Choose \(T \in (t, \infty )\) and define \(\tilde{m}\) by (2.32) with m and g defined using T as final time. Straightforward computations show that \(\tilde{m} = MHg\) satisfies the RH problem of Theorem 2.1 and that (2.12) holds at (x, t) (the verification of (2.12) uses (2.25) and the fact that \(g - I\) is exponentially small as \(k \rightarrow \infty \) along any ray \(\arg k = \text {constant}\) not contained in \({\mathbb {R}}\cup i{\mathbb {R}}\); details are given in [6]).\(\square \)

Remark 2.2

The solutions obtained via algebraic geometry correspond to the particular case of the above formalism when the number of branch cuts of \(\sqrt{4 - \varDelta ^2}\) is finite. This implies that in these particular cases the associated RH problems can be solved via Riemann theta functions.

3 Example: Constant initial data

To illustrate the approach of Theorem 2.1, we consider the case of constant initial data

where \(q_0\) can be taken to be positive thanks to the phase invariance of (2.2). In this case, direct integration of the x-part of the Lax pair (2.13) leads to the following expressions for the spectral functions a and b:

where r(k) denotes the square root

It follows that

Note that a, b, and \(\varDelta \) are entire functions of k even though r(k) has a branch cut.
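The statement that \(\varDelta \) is entire even though r(k) has a branch cut can be checked numerically. The sketch below rests on assumptions that are not taken from the displayed formulas of this section: that the x-part of the Lax pair has the standard Zakharov–Shabat form \(\psi _x = (-ik\sigma _3 + Q)\psi \) with off-diagonal entries q and \(\lambda \bar{q}\), that \(r(k)^2 = k^2 - \lambda q_0^2\), and that \(\varDelta \) coincides with the trace of the monodromy matrix over one period. Under these assumptions the coefficient matrix U satisfies \(U^2 = -r^2 I\), so the trace should equal \(2\cos (Lr)\), which is even in r and hence insensitive to the choice of branch. All function names are hypothetical.

```python
import cmath


def lax_matrix(k, q, lam):
    """Coefficient matrix U of psi_x = U psi (assumed Zakharov-Shabat form)."""
    q = complex(q)
    return [[-1j * k, q], [lam * q.conjugate(), 1j * k]]


def matmul(A, B):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(A[i][m] * B[m][j] for m in range(2)) for j in range(2)]
            for i in range(2)]


def add_scaled(Y, c, K):
    """Return Y + c*K entrywise."""
    return [[Y[i][j] + c * K[i][j] for j in range(2)] for i in range(2)]


def monodromy(k, q0_func, lam, L, steps=1000):
    """Integrate psi_x = U(x) psi over [0, L] with classical RK4, psi(0) = I."""
    h = L / steps
    psi = [[1.0 + 0j, 0j], [0j, 1.0 + 0j]]

    def rhs(x, Y):
        return matmul(lax_matrix(k, q0_func(x), lam), Y)

    for n in range(steps):
        x = n * h
        k1 = rhs(x, psi)
        k2 = rhs(x + h / 2, add_scaled(psi, h / 2, k1))
        k3 = rhs(x + h / 2, add_scaled(psi, h / 2, k2))
        k4 = rhs(x + h, add_scaled(psi, h, k3))
        for K, w in ((k1, 1), (k2, 2), (k3, 2), (k4, 1)):
            psi = add_scaled(psi, w * h / 6, K)
    return psi


def discriminant_numeric(k, q0_func, lam, L):
    """Trace of the monodromy matrix (assumed to equal Delta)."""
    M = monodromy(k, q0_func, lam, L)
    return M[0][0] + M[1][1]


def discriminant_constant(k, q0, lam, L):
    """Closed form 2*cos(L*r) for constant data; either branch of r works."""
    r = cmath.sqrt(k * k - lam * q0 * q0)
    return 2 * cmath.cos(L * r)
```

For example, `discriminant_numeric(0.7 + 0.3j, lambda x: 1.5, 1, 1.0)` can be compared with `discriminant_constant(0.7 + 0.3j, 1.5, 1, 1.0)`. Because `monodromy` accepts an arbitrary function `q0_func`, the same routine can be tried on non-constant periodic data, for which no closed form is available.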

The function \(4 - \varDelta ^2 = 4\sin ^2(Lr)\) has the two simple zeros

as well as the infinite sequence of double zeros

If \(\lambda = 1\), then all zeros are real; if \(\lambda = -1\), then the zeros are real for \(|n| \ge L q_0/\pi \) and purely imaginary for \(|n| < L q_0/\pi \). The function \(\sqrt{4 - \varDelta ^2}\) is single-valued on \({\mathbb {C}}{\setminus } \mathcal {C}\), where \(\mathcal {C}\) consists of the single branch cut \(\mathcal {C} = (\lambda ^-, \lambda ^+)\) joining the two simple zeros.
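The location of the zeros can be explored with a short script. It is a sketch under the assumption that \(r(k)^2 = k^2 - \lambda q_0^2\), so that the simple zeros of \(4\sin ^2(Lr)\) sit at \(r = 0\) and the double zeros at \(r = n\pi /L\) with \(n \ne 0\), i.e., at \(k^2 = \lambda q_0^2 + n^2\pi ^2/L^2\). The function names are hypothetical.

```python
import cmath
import math


def simple_zeros(q0, lam):
    """Zeros of r(k)^2 = k^2 - lam*q0^2: +-q0 if lam = 1, +-i*q0 if lam = -1."""
    root = cmath.sqrt(lam) * q0
    return root, -root


def double_zeros(q0, lam, L, n):
    """Zeros with r = n*pi/L, n != 0, i.e. k^2 = lam*q0^2 + (n*pi/L)^2."""
    if n == 0:
        raise ValueError("n = 0 corresponds to the simple zeros (r = 0)")
    k = cmath.sqrt(lam * q0 ** 2 + (n * math.pi / L) ** 2)
    return k, -k


def four_minus_delta_sq(k, q0, lam, L):
    """Evaluate 4*sin^2(L*r); the value does not depend on the branch of r."""
    r = cmath.sqrt(k * k - lam * q0 * q0)
    return 4 * cmath.sin(L * r) ** 2
```

With \(L = \pi \) and \(q_0 = 2\), the threshold \(L q_0/\pi \) equals 2, so for \(\lambda = -1\) the zeros with \(|n| = 1\) come out purely imaginary and those with \(|n| = 3\) real, in agreement with the classification above.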

Let us fix the branch in the definition of r so that \(r:{\mathbb {C}}{\setminus } \mathcal {C} \rightarrow {\mathbb {C}}\) is analytic and \(r(k) \sim k\) as \(k \rightarrow \infty \). Then,

and hence, the function \(\tilde{\varGamma }:{\mathbb {C}}{\setminus } \mathcal {C} \rightarrow {\mathbb {C}}\) defined in (2.6) is given by

The function \(\tilde{\varGamma }(k)\) has no poles. The contour \(\tilde{\Sigma }\) is given by \(\tilde{\Sigma } = {\mathbb {R}}\cup i{\mathbb {R}}\) and is oriented as shown in Fig. 2; the origin is its only point of self-intersection and so \(\tilde{\Sigma }_\star = ({\mathbb {R}}\cup i{\mathbb {R}}) {\setminus } \{0, \lambda ^\pm \}\). Substituting the expressions (3.1) and (3.2) for \(a,b,\tilde{\varGamma }\) into the definition (2.9) of the jump matrix \(\tilde{v}\), we get

If \(\lambda = 1\), then \(\mathcal {C} = (-q_0, q_0)\) so the formulation of the RH problem also involves the jump matrices \(\tilde{v}_2^{\text {cut}}\) and \(\tilde{v}_4^{\text {cut}}\), whereas if \(\lambda = -1\), then \(\mathcal {C} = (-iq_0, iq_0)\) so the formulation instead involves the jump matrices \(\tilde{v}_1^{\text {cut}}\) and \(\tilde{v}_3^{\text {cut}}\). Let \(\mathfrak {r}(k) = \sqrt{|k^2 - \lambda q_0^2|} \ge 0\). A calculation shows that if \(\lambda = 1\), then

where \(\mathfrak {r}(k) = \sqrt{q_0^2 - k^2} \ge 0\). If \(\lambda =-1\), then

where \(\mathfrak {r}(k) = \sqrt{q_0^2 + k^2} \ge 0\). We conclude that in the case of constant initial data, the RH problem for \(\tilde{m}\) can be formulated as follows (recall that the contour \({\mathbb {R}}\cup i{\mathbb {R}}\) is oriented as shown in Fig. 2; see also Fig. 3).

Fig. 3 Jump matrices for the RH problem 3.1 for \(\lambda = 1\) (left) and \(\lambda = -1\) (right)

RH problem 3.1

(The RH problem for constant initial data) Find a \(2 \times 2\)-matrix valued function \(\tilde{m}(x,t,k)\) with the following properties:

- \(\tilde{m}(x,t,\cdot ) : {\mathbb {C}}{\setminus } ({\mathbb {R}}\cup i{\mathbb {R}}) \rightarrow {\mathbb {C}}^{2 \times 2}\) is analytic.

- The limits of \(\tilde{m}(x,t,k)\) as k approaches \(({\mathbb {R}}\cup i{\mathbb {R}}) {\setminus } \{0, \lambda ^\pm \}\) from the left and right exist, are continuous on \(({\mathbb {R}}\cup i{\mathbb {R}}) {\setminus } \{0, \lambda ^\pm \}\), and satisfy

$$\begin{aligned} \tilde{m}_-(x,t,k) = \tilde{m}_+(x, t, k) \tilde{v}(x, t, k), \qquad k \in ({\mathbb {R}}\cup i{\mathbb {R}}) {\setminus } \{0, \lambda ^\pm \}, \end{aligned}$$(3.6)

where \(\tilde{v}\) is defined as follows (see Fig. 3):

  - If \(\lambda = 1\), then

$$\begin{aligned} \tilde{v} = {\left\{ \begin{array}{ll} \tilde{v}_1 &{}\quad \text {on } i{\mathbb {R}}_+, \\ \tilde{v}_2 &{} \quad \text {on } (q_0, +\infty ), \\ \tilde{v}_2^{\text {cut}} &{}\quad \text {on }(0, q_0), \\ \tilde{v}_3 &{} \quad \text {on } i{\mathbb {R}}_-, \\ \tilde{v}_4 &{} \quad \text {on } (-\infty , -q_0), \\ \tilde{v}_4^{\text {cut}} &{}\quad \text {on } (-q_0, 0), \end{array}\right. } \end{aligned}$$

where \(\{\tilde{v}_j\}_1^4\), \(\tilde{v}_2^{\text {cut}}\), and \(\tilde{v}_4^{\text {cut}}\) are defined by (3.3) and (3.4).

  - If \(\lambda = -1\), then

$$\begin{aligned} \tilde{v} = {\left\{ \begin{array}{ll} \tilde{v}_1 &{} \quad \text {on } (iq_0, +i\infty ), \\ \tilde{v}_1^{\text {cut}} &{}\quad \text {on } (0, iq_0), \\ \tilde{v}_2 &{}\quad \text {on } {\mathbb {R}}_+, \\ \tilde{v}_3 &{} \quad \text {on } (-i\infty , -iq_0) , \\ \tilde{v}_3^{\text {cut}} &{} \quad \text {on } (-iq_0, 0), \\ \tilde{v}_4 &{} \quad \text {on }{\mathbb {R}}_-, \end{array}\right. } \end{aligned}$$

where \(\{\tilde{v}_j\}_1^4\), \(\tilde{v}_1^{\text {cut}}\), and \(\tilde{v}_3^{\text {cut}}\) are defined by (3.3) and (3.5).

- \(\tilde{m}(x,t,k) = I + O\big (k^{-1}\big )\) as \(k \rightarrow \infty \), \(k \in {\mathbb {C}}{\setminus } \cup _{n\in {\mathbb {Z}}} \mathcal {D}_n\).

- \(\tilde{m}(x,t,k) = O(1)\) as \(k \rightarrow \{0, \lambda ^\pm \}\), \(k \in {\mathbb {C}}{\setminus } ({\mathbb {R}}\cup i{\mathbb {R}})\).

Remark 3.2

It is easy to verify that the jump matrices in RH problem 3.1 satisfy the following consistency conditions at the origin:

3.1 Solution of the RH problem for \(\tilde{m}\)

In what follows, we solve the RH problem 3.1 for \(\tilde{m}\) explicitly by transforming it to a RH problem which has a constant off-diagonal jump across the branch cut \(\mathcal {C} = (\lambda ^-, \lambda ^+)\).

The jump matrices \(\tilde{v}_1\) and \(\tilde{v}_3\) in (3.3) admit the factorizations

It follows that the jump across \(i{\mathbb {R}}{\setminus } \mathcal {C}\) can be removed by introducing a new solution \(\hat{m}\) by

Straightforward computations using (3.3)–(3.5) show that \(\hat{m}\) only has a jump across the cut \((\lambda ^-, \lambda ^+)\). Let us orient

to the right if \(\lambda = 1\) and upward if \(\lambda = -1\). We find that \(\hat{m}(x,t,\cdot ) : {\mathbb {C}}{\setminus } (\lambda ^-, \lambda ^+) \rightarrow {\mathbb {C}}^{2 \times 2}\) is analytic, that \(\hat{m} = I + O(k^{-1})\) as \(k \rightarrow \infty \), and that \(\hat{m}\) satisfies the jump condition

where \(f(k) \equiv f(x,t,k)\) is defined by

The jump matrix in (3.7) can be made constant (i.e., independent of k) by performing another transformation. Define \(\delta (k) \equiv \delta (x,t,k)\) by

The function \(\delta \) satisfies the jump relation \(\delta _+ \delta _- = f\) on \((\lambda ^-, \lambda ^+)\) and \(\lim _{k\rightarrow \infty } \delta (k) = \delta _\infty \), where

Moreover, \(\delta (k) = O((k-\lambda ^\pm )^{1/4})\) and \(\delta (k)^{-1} = O((k-\lambda ^\pm )^{-1/4})\) as \(k \rightarrow \lambda ^\pm \). Consequently, \(\check{m} = \delta _\infty ^{-\sigma _3} \hat{m} \delta ^{\sigma _3}\) satisfies the following RH problem: (i) \(\check{m}(x,t,\cdot ) : {\mathbb {C}}{\setminus } (\lambda ^-, \lambda ^+) \rightarrow {\mathbb {C}}^{2 \times 2}\) is analytic, (ii) \(\check{m} = I + O(k^{-1})\) as \(k \rightarrow \infty \), (iii) \(\check{m} = O((k-\lambda ^\pm )^{-1/4})\) as \(k \rightarrow \lambda ^\pm \), and (iv) \(\check{m}\) satisfies the jump condition

The unique solution of this RH problem is given explicitly by

where the branch of \(Q: {\mathbb {C}}{\setminus } (\lambda ^-, \lambda ^+) \rightarrow {\mathbb {C}}\) is such that \(Q \sim 1\) as \(k \rightarrow \infty \). Since \(\tilde{m}\) is easily obtained from \(\check{m}\) by inverting the transformations \(\tilde{m} \rightarrow \hat{m} \rightarrow \check{m}\), this provides an explicit solution of the RH problem for \(\tilde{m}\).
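For orientation, a scalar problem of the form \(\delta _+ \delta _- = f\) on a cut is commonly solved by taking logarithms and dividing by a square root that changes sign across the cut. The following sketch of that standard argument assumes that \(r(k)^2 = (k - \lambda ^-)(k - \lambda ^+)\), that \(r_- = -r_+\) on \((\lambda ^-, \lambda ^+)\), that \(r(k) \sim k\) as \(k \rightarrow \infty \), and that a continuous branch of \(\ln f\) exists on the cut. It is not copied from the displayed formulas of this section, whose normalization may differ.

$$\begin{aligned} &\Big (\frac{\ln \delta }{r}\Big )_+ - \Big (\frac{\ln \delta }{r}\Big )_- = \frac{\ln \delta _+ + \ln \delta _-}{r_+} = \frac{\ln f}{r_+} \quad \text {on } (\lambda ^-, \lambda ^+), \\ &\delta (k) = \exp \bigg \{ \frac{r(k)}{2\pi i} \int _{\lambda ^-}^{\lambda ^+} \frac{\ln f(s)}{r_+(s)} \frac{ds}{s - k} \bigg \}, \qquad \delta _\infty = \exp \bigg \{ -\frac{1}{2\pi i} \int _{\lambda ^-}^{\lambda ^+} \frac{\ln f(s)}{r_+(s)} ds \bigg \}. \end{aligned}$$

The formula for \(\delta \) follows from the first line by the Plemelj formula, and the expression for \(\delta _\infty \) follows because \(r(k)/(s - k) \rightarrow -1\) as \(k \rightarrow \infty \). The behavior of \(\delta \) at the endpoints \(\lambda ^\pm \) depends on the specific function f.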

We next use the formula (3.10) for \(\check{m}\) together with (2.12) to find q(x, t). By (2.12),

In order to compute \(\delta _\infty \), we note that

where

The integral in (3.12) involving \(x - L\) vanishes because the integrand is odd, and the integral involving t can be computed by opening up the contour and deforming it to infinity:

where C is a counterclockwise circle encircling the cut \([\lambda ^-, \lambda ^+]\). If \(\lambda = 1\), then the substitution \(s = q_0 \sin \theta \) gives

while if \(\lambda = -1\), then the substitutions \(s = i\sigma = i q_0 \sin \theta \) yield

It follows that

Substituting this expression for \(\delta _\infty \) into (3.11), we find that the solution q(x, t) of (2.2) corresponding to the constant initial data \(q(x,0) = q_0 \in {\mathbb {R}}\) is given by

It is of course easily verified that this q satisfies the correct initial value problem.
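The verification can also be carried out numerically. The sketch below assumes that the NLS equation (2.2) is normalized as \(iq_t + q_{xx} - 2\lambda |q|^2 q = 0\) with \(\lambda = \pm 1\); the displayed form of (2.2) is not reproduced in this text, so this normalization is an assumption. Under it, the x-independent solution with \(q(x,0) = q_0\) is \(q_0 e^{-2i\lambda q_0^2 t}\), and this is the candidate tested here. The function names are hypothetical.

```python
import cmath


def q_constant(t, q0, lam):
    """Candidate x-independent solution q0 * exp(-2i*lam*q0^2*t)."""
    return q0 * cmath.exp(-2j * lam * q0 ** 2 * t)


def nls_residual(t, q0, lam, h=1e-5):
    """Residual of i*q_t + q_xx - 2*lam*|q|^2*q (assumed normalization)."""
    q = q_constant(t, q0, lam)
    # Central difference in t; the candidate has no x-dependence, so q_xx = 0.
    q_t = (q_constant(t + h, q0, lam) - q_constant(t - h, q0, lam)) / (2 * h)
    q_xx = 0.0
    return 1j * q_t + q_xx - 2 * lam * abs(q) ** 2 * q
```

The residual should vanish up to the finite-difference error of order \(h^2\), and `q_constant(0.0, q0, lam)` returns the initial datum \(q_0\). Periodicity in x holds trivially for any L.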

References

1. Bobenko, A.I., Klein, C. (eds.): Computational Approach to Riemann Surfaces. Lecture Notes in Mathematics, 2013. Springer, Heidelberg (2011)

2. Belokolos, E.D., Bobenko, A.I., Enol’skii, V.Z., Its, A.R., Matveev, V.B.: Algebro-Geometric Approach to Nonlinear Integrable Equations. Springer Series in Nonlinear Dynamics. Springer, Berlin (1994)

3. Feldman, J., Knörrer, H., Trubowitz, E.: Riemann Surfaces of Infinite Genus. CRM Monograph Series, 20. American Mathematical Society, Providence (2003)

4. Fokas, A.S.: A unified transform method for solving linear and certain nonlinear PDEs. Proc. R. Soc. Lond. A 453, 1411–1443 (1997)

5. Fokas, A.S., Its, A.R.: The nonlinear Schrödinger equation on the interval. J. Phys. A 37, 6091–6114 (2004)

6. Fokas, A.S., Lenells, J.: A new approach to integrable evolution equations on the circle. Proc. R. Soc. A 477, 20200605 (2020). https://doi.org/10.1098/rspa.2020.0605

7. Gardner, C.S., Greene, J.M., Kruskal, M.D., Miura, R.M.: Method for solving the Korteweg–de Vries equation. Phys. Rev. Lett. 19, 1095–1097 (1967)

8. Gesztesy, F., Holden, H.: Soliton Equations and Their Algebro-Geometric Solutions. Vol. I. (\(1+1\))-Dimensional Continuous Models. Cambridge Studies in Advanced Mathematics, 79. Cambridge University Press, Cambridge (2003)

9. Matveev, V.B.: 30 years of finite-gap integration theory. Philos. Trans. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 366, 837–875 (2008)

10. McKean, H.P., van Moerbeke, P.: The spectrum of Hill’s equation. Invent. Math. 30, 217–274 (1975)

11. McLaughlin, T.-R.K., Nabelek, P.V.: A Riemann–Hilbert problem approach to infinite gap Hill’s operators and the Korteweg–de Vries equation. Int. Math. Res. Notices (2020). https://doi.org/10.1093/imrn/rnz156

12. Müller, W., Schmidt, M., Schrader, R.: Hyperelliptic Riemann surfaces of infinite genus and solutions of the KDV equation. Duke Math. J. 91, 315–352 (1998)

Acknowledgements

ASF acknowledges support from the EPSRC in the form of a senior fellowship. JL acknowledges support from the Göran Gustafsson Foundation, the Ruth and Nils-Erik Stenbäck Foundation, the Swedish Research Council, Grant No. 2015-05430, and the European Research Council, Grant Agreement No. 682537.

Funding

Open Access funding provided by Royal Institute of Technology


Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Deconinck, B., Fokas, A.S. & Lenells, J. The implementation of the unified transform to the nonlinear Schrödinger equation with periodic initial conditions. Lett Math Phys 111, 17 (2021). https://doi.org/10.1007/s11005-021-01356-7


DOI: https://doi.org/10.1007/s11005-021-01356-7

Keywords

- Integrable evolution equation

- Periodic solution

- Riemann–Hilbert problem

- Finite-gap solution

- Inverse scattering

- Unified transform method

- Fokas method

- Linearizable boundary condition