Abstract

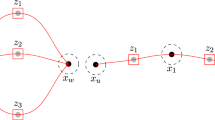

It is often possible to speed up the mixing of a Markov chain \(\{ X_{t} \}_{t \in \mathbb {N}}\) on a state space \(\Omega \) by lifting, that is, running a more efficient Markov chain \(\{ \widehat{X}_{t} \}_{t \in \mathbb {N}}\) on a larger state space \(\hat{\Omega } \supset \Omega \) that projects to \(\{ X_{t} \}_{t \in \mathbb {N}}\) in a certain sense. Chen et al. (Proceedings of the 31st annual ACM symposium on theory of computing. ACM, 1999) prove that for Markov chains on finite state spaces, the mixing time of any lift of a Markov chain is at least the square root of the mixing time of the original chain, up to a factor that depends on the stationary measure of \(\{X_t\}_{t \in \mathbb {N}}\). Unfortunately, this extra factor makes the bound in Chen et al. (1999) very loose for Markov chains on large state spaces and useless for Markov chains on continuous state spaces. In this paper, we develop an extension of the evolving set method that allows us to refine this extra factor and find bounds for Markov chains on continuous state spaces that are analogous to the bounds in Chen et al. (1999). These bounds also allow us to improve on the bounds in Chen et al. (1999) for some chains on finite state spaces.

Similar content being viewed by others

Notes

The constant \(\frac{1}{2}\) here is arbitrary; replacing the constant \(\frac{1}{2}\) with any constant \(0< c < 1\) would result in similar bounds, though perhaps with slightly different constants.

This proof is given for Markov chains on discrete state spaces, but the same bound holds for continuous-state Markov chains with minor changes in notation.

Again, the result is stated for chains on discrete state spaces but applies more generally to continuous-state Markov chains.

We point out that such a function \(x^{*}\) always exists. Note that the containment condition actually determines the value of \(x^{*}\) except on the set \(A = \{x \in \mathbb {R}^{d} \, : \, \exists \, 1 \le i \le d \, \text { s.t. } x_{i} \in \frac{1}{N} \mathbb {Z} \}\). Furthermore, \(x^{*}\) is continuous on \(A^{c}\) and A has measure 0. It is clear that the domain of \(x^{*}\) can be extended from \(A^{c}\) to \(\mathbb {R}^{d}\) in a measurable way, e.g., by choosing the smallest allowed value in the usual lexicographic order on \(\mathbb {R}^{d}\).

References

Chen, F., Lovász, L., Pak, I.: Lifting markov chains to speed up mixing. In: Proceedings of the 31st Annual ACM Symposium on Theory of Computing, pp. 275–281. ACM (1999)

Diaconis, P.: Group Representations in Probability and Statistics, volume 11 of IMS Lecture Notes—Monograph Series. Institute of Mathematical Statistics, Hayward (1988)

Diaconis, P., Holmes, S., Neal, R.M.: Analysis of a nonreversible Markov chain sampler. Ann. Appl. Probab. 10(3), 726–752 (2000)

Diaconis, P.: The Markov chain Monte Carlo revolution. Bull. Am. Math. Soc. 46(1), 179–205 (2008)

Diaconis, P., Miclo, L.: On the spectral analysis of second-order Markov chains. Ann. Fac. Sci. Toulouse Math. 22, 573–621 (2012)

Gibbs, A.L., Su, F.E.: On choosing and bounding probability metrics. Int. Stat. Rev. 70(3), 419–435 (2002)

Gomez, F., Schmidhuber, J., Sun, Y.: Improving the asymptotic performance of Markov chain Monte-Carlo by inserting vortices. Advances in Neural Information Processing Systems (2010)

Levin, D., Peres, Y., Wilmer, E.: Markov Chains and Mixing Times. American Mathematical Society, Providence (2009)

Morris, B., Peres, Y.: Evolving sets, mixing and heat kernel bounds. Probab. Theory Relat. Fields 133, 245–266 (2006)

Neal, R.: Improving asymptotic variance of MCMC estimators: Non-reversible chains are better. Technical Report No. 0406, Department of Statistics, University of Toronto (2004)

Peres, Y., Sousi, P.: Mixing times are hitting times of large sets. J. Theor. Probab. 28(2), 488–519 (2015)

Rosenthal, J.: Random rotations: characters and random walks on \(\text{ SO }(n)\). Ann. Probab. 22, 398–423 (1994)

Acknowledgements

The first author, KR, is partially supported by NSF Grant DMS-1407504 and the second author, AMS, is partially supported by an NSERC Grant. This project was started while AMS was visiting ICERM, and he thanks ICERM for its generous support.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1: Comparison to Previous Results on Discrete Spaces

We point out that Theorem 2 can give better bounds than Theorem 3.1 of [1] in some cases. Let \(\{ X_{t} \}_{t \in \mathbb {N}}\) be a Markov chain on a finite state space that satisfies Assumptions 2.7, with associated conductance \(\varPhi \), mixing time \(\tau \), and constants \(\beta , \gamma > 0\) satisfying Eq. (2.13). Then let \(\{ \widehat{X}_{t} \}_{t \in \mathbb {N}}\) be a lift of \(\{ X_{t} \}_{t \in \mathbb {N}}\) with mixing time \(\widehat{\tau }\) and conductance \(\widehat{\varPhi }\). By the same calculation as contained in inequality (4.11) (but omitting the lines involving superscript N’s), we have:

We give an example for which this bound is better than Theorem 3.1 of [1]. We fix \(k, n \in \mathbb {N}\) and consider a random walk \(\{ X_{t} \}_{t \in \mathbb {N}}\) on the cycle \(\mathbb {Z}_{kn} = \{1,2,\ldots , nk \}\). Recall that the usual graph distance on \(\mathbb {Z}_{kn}\) is given by

We then define the transition kernel K by:

This walk has stationary distribution \(\pi (x) = \frac{1}{kn}\) for all \(x \in \mathbb {Z}_{kn}\). It can be represented according to the form of Eq. (6.2) using the weights

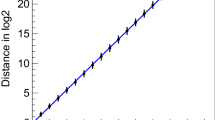

Using arguments identical to those in Theorem 2 of Chapter 3 of [2], the mixing time \(\tau \) of this walk can be shown to be \(\tau = \varTheta (n^{2})\). Theorem 3.1 of [1] (combined with remark 2.1) implies that any lift of this chain must have mixing time at least \(\widehat{\tau } = \varOmega \left( \frac{n}{\sqrt{\log (kn)}} \right) \).

In the other direction, set \(\beta = \frac{1}{12n}\), \(\gamma = \frac{3}{4}\). For any set \(S \subset \mathbb {Z}_{kn}\) with \(\pi (S) \le \frac{1}{12n}\),

Thus, part (1) of Assumptions 2.7 is satisfied with \(\beta = \frac{1}{12n}\) and \(\gamma = \frac{3}{4}\). Using the representation of K given by Eq. (6.3), we have \(\pi _{*} = \frac{2}{n}\). By inequality (6.1), then, we find \(\widehat{\tau } = \varOmega \left( \frac{n}{\log (n)} \right) \).

Let \(\{ k(\lambda ) \}_{ \lambda \in \mathbb {N}}\) be a sequence with the property \(k(\lambda ) \gg n(\lambda )^{\log (n(\lambda ))}\). Then let \(\{X_{t}^{(\lambda )}\}_{t \ge 0}\) be the Markov chain with kernel given by Eq. (6.2) with \(k = k(\lambda )\). In this regime, the bound from inequality (6.1) is tighter than that of Theorem 3.1 of [1]. More generally, we expect inequality (6.1) to be tighter than Theorem 3.1 of [1] when the discrete state space \(\varOmega \) of the Markov chain \(\{X_{t}\}_{t \ge 0}\) is extremely large compared to the support of \(K(x,\cdot )\) for all x.

Appendix 2: Mixing Bound for the Continuous Cycle Walk

In this section, we show that the kernel K from example 1 has mixing time \(\tau = \varTheta (c^{-2})\). Let \(\mu \) be the uniform measure on \([-c,c]\) as a subset of the torus [0, 1]. It is well known that, as a compact abelian Lie group, the characters of the torus [0, 1] are given by \(\chi _{n}(x) = e^{2 \pi inx}\). We calculate

By Lemma 4.3 of [12], this means that for \(A >1\), \(T > A c^{-2}\) and \(S \subseteq [0,1]\) measurable, we have for all \(c < C_{0}\) sufficiently small that

Since each of the three terms in the final line can be made arbitrarily small (uniformly in \(c < C_{0}\)) by choosing A large, this implies \(\tau = O(c^{-2})\).

To show the reverse inequality, let \(T(c) = A_{c} c^{-2}\), for some sequence \(A_{c} \rightarrow 0\) as \(c \rightarrow \infty \). For a copy of the chain started at \(X_{0}=0\), we have by Bernstein’s inequality \(P[ \vert X_{T(c)} \vert > \frac{1}{10}] \le 2 e^{- \frac{3}{2 A_{c}} \frac{1}{30 + c} } \rightarrow 0\). Thus, \(\limsup _{c \rightarrow \infty } \vert \vert \mathcal {L}(X_{T(c)}) - U \vert \vert _{TV} \ge \frac{1}{2}\), and so \(\tau = \varOmega (c^{-2})\).

Rights and permissions

About this article

Cite this article

Ramanan, K., Smith, A. Bounds on Lifting Continuous-State Markov Chains to Speed Up Mixing. J Theor Probab 31, 1647–1678 (2018). https://doi.org/10.1007/s10959-017-0745-5

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10959-017-0745-5