Abstract

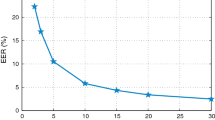

Our initial speaker verification study on mismatch between training and test conditions found that mismatch in sensor and acoustic environment degrades performance significantly more than other mismatches such as language and style (Haris et al. in Int. J. Speech Technol., 2012). In this work we present a method to suppress the mismatch between training and test speech, specifically the mismatch due to sensor and acoustic environment. The method identifies and emphasizes vowel-like regions (VLRs), which are more speaker specific and less affected by mismatch than other speech regions. VLRs are separated from the speech regions (detected using voice activity detection (VAD)) using the VLR onset point (VLROP) and are processed independently during training and testing of the speaker verification system. Finally, the scores are combined, with more weight given to the score generated by the VLRs because they are relatively more speaker specific and less affected by mismatch. Speaker verification studies are conducted using mel-frequency cepstral coefficients (MFCCs) as feature vectors. Speaker modeling is done using the Gaussian mixture model-universal background model (GMM-UBM) and the state-of-the-art i-vector based approaches. The experimental results show that, for both systems, the proposed approach provides consistent performance improvement over the conventional approach, with and without different channel compensation techniques. For instance, on the IITG-MV Phase-II dataset with headphone-recorded training speech and voice-recorder test speech, the proposed approach provides a relative improvement of 25.08% in equal error rate (EER) over the conventional approach for the i-vector based speaker verification system with linear discriminant analysis (LDA) and within-class covariance normalization (WCCN).
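The score combination and the relative EER figure described above can be illustrated with a minimal sketch. The abstract does not state the fusion rule or the weight, so the linear weighted sum, the function names, and the default weight of 0.7 are all assumptions made for illustration; the EER values in the usage note are likewise hypothetical, not results from the paper.

```python
def fuse_scores(vlr_score: float, non_vlr_score: float, vlr_weight: float = 0.7) -> float:
    """Combine two verification scores with a linear weighted sum.

    Assumption: a convex combination in which the VLR score gets the
    larger weight. The weight value 0.7 is illustrative, not from the paper.
    """
    if not 0.5 <= vlr_weight <= 1.0:
        raise ValueError("vlr_weight should lie in [0.5, 1.0] so VLRs are weighted at least equally")
    return vlr_weight * vlr_score + (1.0 - vlr_weight) * non_vlr_score


def relative_improvement(baseline_eer: float, proposed_eer: float) -> float:
    """Relative EER improvement in percent: 100 * (baseline - proposed) / baseline."""
    if baseline_eer <= 0:
        raise ValueError("baseline_eer must be positive")
    return 100.0 * (baseline_eer - proposed_eer) / baseline_eer
```

For example, with hypothetical scores `fuse_scores(2.0, 1.0)` weights the VLR score 0.7 and the remaining-speech score 0.3, and `relative_improvement(20.0, 15.0)` evaluates the standard relative-improvement formula for a hypothetical drop in EER from 20% to 15%.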

References

Ananthapadmanabha, T. V., & Yegnanarayana, B. (1979). Epoch extraction from linear prediction residual for identification of closed glottis interval. IEEE Transactions on Acoustics, Speech, and Signal Processing, 27(4), 309–319.

Auckenthaler, R., Carey, M., & Lloyd-Thomas, H. (2000). Score normalization for text-independent speaker verification systems. Digital Signal Processing, 10(1), 42–54.

Dehak, N., Kenny, P., Dehak, R., Dumouchel, P., & Ouellet, P. (2011). Front-end factor analysis for speaker verification. IEEE Transactions on Audio, Speech, and Language Processing, 19(4), 788–798.

Drygajlo, A., & El-Muliki, M. (1998). Speaker verification in noisy environment with combined spectral subtraction and missing data theory. In Proc. int. conf. acoust. speech, and signal processing, Seattle, Washington, USA (Vol. 1, pp. 121–124).

Eatock, J. P., & Mason, J. S. (1994). A quantitative assessment of the relative speaker discriminating properties of phonemes. In Proc. int. conf. acoust. speech, and signal processing, Adelaide, Australia (Vol. 1, pp. 133–136).

Haris, B. C., Pradhan, G., Misra, A., Shukla, S., Sinha, R., & Prasanna, S. R. M. (2011). Multi-variability speech database for robust speaker recognition. In Proc. national conf. on communication (NCC), Bangalore, India.

Haris, B. C., Pradhan, G., Misra, A., Prasanna, S. R. M., Das, R. K., & Sinha, R. (2012). Multivariability speaker recognition database in Indian scenario. International Journal of Speech Technology.

Hatch, A. O., Kajarekar, S., & Stolcke, A. (2006). Within-class covariance normalization for SVM based speaker recognition. In ICSLP, Pittsburgh, PA, USA (pp. 1471–1474).

Hermansky, H., Morgan, N., Bayya, A., & Kohn, P. (1992). RASTA-PLP speech analysis technique. In Proc. IEEE int. conf. acoust., speech, and signal processing, San Francisco, CA (Vol. 1, pp. 121–124).

Kim, H. K., & Rose, R. C. (2003). Cepstrum-domain acoustic feature compensation based on decomposition of speech and noise for ASR in noisy environments. IEEE Transactions on Speech and Audio Processing, 11(5), 435–446.

Kinnunen, T., & Li, H. (2010). An overview of text-independent speaker recognition: From features to supervectors. Speech Communication, 52, 12–40.

Krishnamoorthy, P., & Prasanna, S. R. M. (2009). Reverberant speech enhancement by temporal and spectral processing. IEEE Transactions on Audio, Speech, and Language Processing, 17(2), 253–266.

Krishnamoorthy, P., & Prasanna, S. R. M. (2011). Enhancement of noisy speech by temporal and spectral processing. Speech Communication, 53, 154–174.

Murty, K. S. R., & Yegnanarayana, B. (2008). Epoch extraction from speech signals. IEEE Transactions on Audio, Speech, and Language Processing, 16, 1602–1613.

Murty, K. S. R., Yegnanarayana, B., & Joseph, M. A. (2009). Characterization of glottal activity from speech signals. IEEE Signal Processing Letters, 16(6), 469–472.

Naylor, P. A., Kounoudes, A., Gudnason, J., & Brookes, M. (2007). Estimation of glottal closure instants in voiced speech using the DYPSA algorithm. IEEE Transactions on Audio, Speech, and Language Processing, 15(1), 34–43.

Pelecanos, J., & Sridharan, S. (2001). Feature warping for robust speaker verification. In Speaker Odyssey: the speaker recognition workshop, Crete, Greece (pp. 1–6).

Pradhan, G., & Prasanna, S. R. M. (2011). Speaker verification under degraded condition: a perceptual study. International Journal of Speech Technology, 14(4), 405–417.

Prasanna, S. R. M., & Pradhan, G. (2011). Significance of vowel-like regions for speaker verification under degraded condition. IEEE Transactions on Audio, Speech, and Language Processing, 19(8), 2552–2565.

Prasanna, S. R. M., & Yegnanarayana, B. (2005). Detection of vowel onset point events using excitation source information. In Proc. INTERSPEECH, Lisbon, Portugal (pp. 1133–1136).

Prasanna, S. R. M., Reddy, B. V. S., & Krishnamoorthy, P. (2009). Vowel onset point detection using source, spectral peaks, and modulation spectrum energies. IEEE Transactions on Audio, Speech, and Language Processing, 17(4), 556–565.

Reynolds, D. A. (2003). Channel robust speaker verification via feature mapping. In Proc. int. conf. acoust. speech, and signal processing, Hong Kong (Vol. 2, pp. 53–56).

Reynolds, D. A., Quatieri, T. F., & Dunn, R. B. (2000). Speaker verification using adapted Gaussian mixture models. Digital Signal Processing, 10, 19–41.

Solomonoff, A., Campbell, W. M., & Boardman, I. (2005). Advances in channel compensation for SVM speaker recognition. In Proc. int. conf. acoust. speech, and signal processing, Philadelphia, USA (Vol. 1, pp. 629–632).

Teunen, R., Shahshahani, B., & Heck, L. P. (2000). A model-based transformation approach to robust speaker recognition. In Proc. int. conf. on spoken language processing, Beijing, China (Vol. 2, pp. 495–498).

TIMIT (1990). TIMIT acoustic-phonetic continuous speech corpus. NTIS Order PB91-505065, National Institute of Standards and Technology, Gaithersburg, Md, USA, 1990, Speech Disc 1-1.1.

Varga, A., & Steeneken, H. J. M. (1993). Assessment for automatic speech recognition: II. NOISEX-92: A database and an experiment to study the effect of additive noise on speech recognition systems. Speech Communication, 12(3), 247–251.

Xiang, B., Chaudhari, U. V., Navratil, J., Ramaswamy, G. N., & Gopinath, R. A. (2002). Short-time Gaussianization for robust speaker verification. In Proc. int. conf. acoust. speech, and signal processing, Orlando, Florida, USA (Vol. 1, pp. 681–684).

Yegnanarayana, B., & Murthy, P. S. (2000). Enhancement of reverberant speech using LP residual signal. IEEE Transactions on Speech and Audio Processing, 8(3), 267–281.

Yegnanarayana, B., Avendano, C., Hermansky, H., & Murthy, P. S. (1999). Speech enhancement using linear prediction residual. Speech Communication, 28, 25–42.

About this article

Cite this article

Pradhan, G., Haris, B.C., Prasanna, S.R.M. et al. Speaker verification in sensor and acoustic environment mismatch conditions. Int J Speech Technol 15, 381–392 (2012). https://doi.org/10.1007/s10772-012-9159-z

DOI: https://doi.org/10.1007/s10772-012-9159-z