Abstract

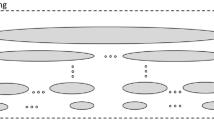

Standard practice in building models in software engineering normally involves three steps: (1) collecting domain knowledge (previous results, expert knowledge); (2) building a skeleton of the model based on step 1, including as yet unknown parameters; and (3) estimating the model parameters using historical data. Our experience shows that it is extremely difficult to obtain reliable data of the required granularity, or of the volume needed to generalize our conclusions. Therefore, in searching for a method for building a model, we cannot consider methods that require large volumes of data. This paper discusses an experiment to develop a causal model (Bayesian net) for predicting the number of residual defects that are likely to be found during independent testing or operational usage. The approach supports (1) and (2), does not require (3), yet still makes accurate defect predictions (an R² of 0.93 between predicted and actual defects). Since our method does not require detailed domain knowledge it can be applied very early in the process life cycle. The model takes as inputs a set of quantitative and qualitative factors describing a project and its development process. The model variables, as well as the relationships between them, were identified as part of a major collaborative project. A dataset covering 31 completed software projects in the consumer electronics industry was gathered using a questionnaire distributed to managers of recent projects. We used this dataset to validate the model by analyzing several popular evaluation measures (R², measures based on the relative error, and Pred). The validation results also confirm the need for using the qualitative factors in the model. The dataset may be of interest to other researchers evaluating models with similar aims. Based on some typical scenarios we demonstrate how the model can be used for better decision support in operational environments. We also performed a sensitivity analysis to identify the variables with the most influence on the number of residual defects. This showed that project size, scale of distributed communication and project complexity cause most of the variation in the number of defects in our model. We make both the dataset and the causal model available for research use.
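The evaluation measures named above can be illustrated with a minimal sketch. It assumes the definitions commonly used in the estimation literature: the magnitude of relative error (MRE) per project, its mean (MMRE), Pred(l) as the fraction of projects whose MRE is at most l, and R² as the squared Pearson correlation between predicted and actual defect counts. The exact definitions used in the paper are not given in this abstract, so these formulas, the function names and the sample figures are all assumptions for illustration only.

```python
def mre(actual, predicted):
    """Magnitude of relative error for a single project."""
    return abs(actual - predicted) / actual


def mmre(actuals, predictions):
    """Mean magnitude of relative error over all projects."""
    errors = [mre(a, p) for a, p in zip(actuals, predictions)]
    return sum(errors) / len(errors)


def pred(actuals, predictions, level=0.25):
    """Fraction of projects whose MRE does not exceed `level`."""
    hits = sum(1 for a, p in zip(actuals, predictions) if mre(a, p) <= level)
    return hits / len(actuals)


def r_squared(actuals, predictions):
    """Squared Pearson correlation between actual and predicted values."""
    n = len(actuals)
    mean_a = sum(actuals) / n
    mean_p = sum(predictions) / n
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actuals, predictions))
    var_a = sum((a - mean_a) ** 2 for a in actuals)
    var_p = sum((p - mean_p) ** 2 for p in predictions)
    return cov ** 2 / (var_a * var_p)


# Hypothetical defect counts for four projects (not taken from the dataset).
actual_defects = [100, 200, 50, 400]
predicted_defects = [110, 140, 60, 390]

print("MMRE:", mmre(actual_defects, predicted_defects))
print("Pred(25):", pred(actual_defects, predicted_defects, level=0.25))
print("R^2:", r_squared(actual_defects, predicted_defects))
```

Reporting several measures together is a deliberate choice: relative-error measures weight small projects heavily, whereas R² reflects only how well predictions track actual values linearly, so each can hide weaknesses the others reveal.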

References

AgenaRisk (2007) Bayesian Network Software Tool. www.agenarisk.com

Boehm B, Clark B, Horowitz E, Westland C, Madachy R, Selby R (1995) Cost models for future software life cycle process: COCOMO 2.0. Ann Softw Eng 1(1):57–94

Boetticher G, Menzies T, Ostrand T (2008) PROMISE Repository of Empirical Software Engineering Data. http://promisedata.org/repository, West Virginia University, Department of Computer Science

Cangussu JW, DeCarlo RA, Mathur AP (2003) Using sensitivity analysis to validate a state variable model of the software test process. IEEE Trans Softw Eng 29(5):430–443

Chulani S, Boehm B (1999) Modelling Software Defect Introduction and Removal: COQUALMO (COnstructive QUAlity MOdel). Technical Report USC-CSE-99-510, University of Southern California, Center for Software Engineering

Chulani S, Boehm B, Steece B (1999) Bayesian analysis of empirical software engineering cost models. IEEE Trans Softw Eng 25(4):573–583

Compton T, Withrow C (1990) Prediction and Control of Ada Software Defects. J Syst Softw 12:199–207

eXdecide (2005) Quantified Risk Assessment and Decision Support for Agile Software Projects. EPSRC project EP/C005406/1. www.dcs.qmul.ac.uk/~norman/radarweb/core_pages/projects.html

Fenton NE, Pfleeger SL (1998) Software Metrics: A Rigorous and Practical Approach (2nd Edition). PWS Publishing, Boston

Fenton NE, Neil M (1999) A critique of software defect prediction models. IEEE Trans Softw Eng 25(5):675–689

Fenton NE, Krause P, Neil M (2002a) Probabilistic modelling for software quality control. J Appl Non-Class Log 12(2):173–188

Fenton NE, Krause P, Neil M (2002b) Software measurement: uncertainty and causal modelling. IEEE Software 10(4):116–122

Fenton NE, Marsh W, Neil M, Cates P, Forey S, Tailor M (2004) Making Resource Decisions for Software Projects. Proceedings of 26th International Conference on Software Engineering (ICSE 2004), Edinburgh, United Kingdom, 397–406

Fenton NE, Neil M, Caballero JG (2007a) Using ranked nodes to model qualitative judgments in Bayesian networks. IEEE Trans Knowl Data Eng 19(10):1420–1432

Fenton NE, Neil M, Marsh W, Hearty P, Marquez D, Krause P, Mishra R (2007b) Predicting software defects in varying development lifecycles using Bayesian nets. Inf Softw Technol 49(1):32–43

Fenton N, Neil M, Marsh W, Hearty P, Radliński Ł, Krause P (2007c) Project Data Incorporating Qualitative Factors for Improved Software Defect Prediction. Proceedings of the 3rd International Workshop on Predictor Models in Software Engineering. International Conference on Software Engineering. IEEE Computer Society, Washington, DC: 2

Gaffney JR (1984) Estimating the Number of Faults in Code. IEEE Trans Softw Eng 10(4):141–152

Henry S, Kafura D (1984) The evaluation of software system’s structure using quantitative software metrics. Softw Pract Exp 14(6):561–573

ISBSG (2007) Repository Data Release 10. International Software Benchmarking Standards Group. www.isbsg.org

Jensen FV (1996) An introduction to Bayesian networks. UCL Press, London

Jones C (1986) Programmer productivity. McGraw Hill, New York

Jones C (1999) Software sizing. IEE Review 45(4):165–167

Kitchenham BA, Pickard LM, MacDonell SG, Shepperd MJ (2001) What accuracy statistics really measure. IEE Proc Softw 148(3):81–85

Lipow M (1982) Number of Faults per Line of Code. IEEE Trans Softw Eng 8(4):437–439

Menzies T, Greenwald J, Frank A (2007) Data mining static code attributes to learn defect predictors. IEEE Trans Softw Eng 32(11):1–12

MODIST (2003) Models of Uncertainty and Risk for Distributed Software Development. EC Information Society Technologies Project IST-2000-28749. www.modist.org

MODIST BN Model (2007) http://promisedata.org/repository/data/qqdefects/

Musilek P, Pedrycz W, Nan Sun, Succi G (2002) On the Sensitivity of COCOMO II Software Cost Model. Proc of the 8th IEEE Symposium on Software Metrics: 13–20

Neapolitan RE (2004) Learning Bayesian networks. Pearson Prentice Hall, Upper Saddle River

Neil M, Krause P, Fenton NE (2003) Software Quality Prediction Using Bayesian Networks. In: Khoshgoftaar TM (ed) Software Engineering with Computational Intelligence. Kluwer, Chapter 6

Ostrand TJ, Weyuker EJ, Bell RM (2005) Predicting the location and number of faults in large software systems. IEEE Trans Softw Eng 31(4):340–355

Radliński Ł, Fenton N, Neil M, Marquez D (2007) Improved Decision-Making for Software Managers Using Bayesian Networks, Proc. of 11th IASTED Int. Conf. Software Engineering and Applications (SEA), Cambridge, MA: 13–19

Saltelli A (2000) What is Sensitivity Analysis. In: Saltelli A, Chan K, Scott EM (eds) Sensitivity Analysis. John Wiley & Sons, pp. 4–13

SIMLAB (2004) Simulation Environment for Uncertainty and Sensitivity Analysis Version 2.2. Joint Research Centre of the European Commission. http://simlab.jrc.cec.eu.int/

Stensrud E, Foss T, Kitchenham B, Myrtveit I (2002) An Empirical Validation of the Relationship Between the Magnitude of Relative Error and Project Size. Proc. of 8th IEEE Symposium on Software Metrics: 3–12

Wagner S (2007a) An Approach to Global Sensitivity Analysis: FAST on COCOMO. Proceedings of the First International Symposium on Empirical Software Engineering and Measurement: 440–442

Wagner S (2007b) Global Sensitivity Analysis of Predictor Models in Software Engineering. Proceedings of the 3rd International Workshop on Predictor Models in Software Engineering. International Conference on Software Engineering. IEEE Computer Society, Washington, DC: 3

Winkler RL (2003) An introduction to Bayesian inference and decision, 2nd edn. Probabilistic Publishing, Gainesville

Acknowledgments

This paper is based in part on work undertaken on the following funded research projects: MODIST (EC Framework 5 Project IST-2000-28749), SCULLY (EPSRC Project GR/N00258), SIMP (EPSRC Systems Integration Initiative Programme Project GR/N39234), and eXdecide (2005). We acknowledge the insightful suggestions of two anonymous referees that led to significant improvements in the paper. We also acknowledge the contributions of individuals from Agena, Philips Electronics, Israel Aircraft Industries, QinetiQ and BAE Systems. We dedicate this paper to the late Colin Tully, who acted as an enthusiastic and supportive reviewer of this work during the MODIST project.

Additional information

Editor: Tim Menzies

About this article

Cite this article

Fenton, N., Neil, M., Marsh, W. et al. On the effectiveness of early life cycle defect prediction with Bayesian Nets. Empir Software Eng 13, 499–537 (2008). https://doi.org/10.1007/s10664-008-9072-x

DOI: https://doi.org/10.1007/s10664-008-9072-x