Abstract

The increasing diffusion of standardized assessments of students’ competences has been accompanied by a growing need to make reliable data available to all stakeholders of the educational system (policy makers, teachers, researchers, families and students). In this light, we propose a multistep approach to detect and correct teacher cheating, which lowers the quality of the student data provided by the Italian Institute for the Educational Evaluation of Instruction and Training (INVALSI). Our method integrates the “mechanistic” logic of the fuzzy clustering technique with a statistical model-based approach, and it aims to improve the detection of cheating and to correct test scores at both the class and student level. The results show a normalization of the scores and a stronger correction of the data for Southern regions, where the propensity to cheat appears to be highest.

Source: Authors’ elaboration on INVALSI data

Source: Authors’ elaboration on INVALSI data (s.y. 2013/14)

Notes

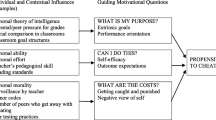

High-stakes testing refers to the use of standardized tests as objective measurements to award progress and register standards of quality. In general, “high stakes” means that test results are used to determine funding reductions, advancement (grade promotion or graduation for students), or salary increases (for teachers and administrators).

The Atlanta Public Schools cheating scandal is one of the most famous and largest in United States history. In 2011, special investigators released a report finding that 178 teachers or principals at 44 of 56 schools (more than 75%) in the Atlanta school system were involved in the falsification of test scores on the state of Georgia’s 2009 high-stakes test.

Currently (school year 2016/17), the administration of national tests is mandatory in primary (years 2, 5), lower secondary (year 3) and upper secondary schools (year 2).

In 2001, INVALSI launched a pilot project to evaluate student competencies, involving schools on a voluntary basis. The pilot project was repeated in subsequent years (until s.y. 2005/06) in order to develop the survey design.

However, the algorithm developed to detect cheating has been implemented for all other school grades examined by INVALSI, and the results are available on request.

The following classification of Italian macro areas is considered: North-West (Liguria, Lombardia, Piemonte and Valle d’Aosta), North-East (Emilia Romagna, Friuli-Venezia Giulia, Trentino-Alto Adige and Veneto), Center (Lazio, Marche, Toscana, and Umbria), South and Islands (Abruzzo, Campania, Molise, Puglia, Basilicata, Calabria, Sardegna and Sicilia).

To deal with missing data, we followed the strategy adopted by (among others) Fuchs and Woessmann (2007). Missing values were imputed with school or province (NUTS 3 level) means (or medians), and a vector of dummy variables was included in the model. Each dummy takes the value 1 for observations with missing (imputed) data and 0 otherwise. Including this vector of dummies allows the observations with missing data on each variable to have their own intercepts. We also included interaction terms between the imputation dummies and the data vectors, which allow these observations to have their own slopes for the respective variables.
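The imputation scheme described in this note can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `impute_with_flag`, the `fallback_mean` argument (standing in for the province-level mean) and the sample values are all hypothetical.

```python
from statistics import mean

def impute_with_flag(values, fallback_mean=None):
    """Mean-impute missing entries (None) and build the extra regressors.

    Returns three lists of equal length:
      imputed     - the variable with gaps filled by the group mean
      dummy       - 1 where the value was imputed, 0 otherwise
      interaction - dummy * imputed, which lets imputed cases have their own slope
    """
    observed = [v for v in values if v is not None]
    # Use the school mean when available, else the (assumed) province mean.
    fill = mean(observed) if observed else fallback_mean
    if fill is None:
        raise ValueError("no observed values and no fallback mean supplied")
    imputed = [fill if v is None else v for v in values]
    dummy = [1 if v is None else 0 for v in values]
    interaction = [d * x for d, x in zip(dummy, imputed)]
    return imputed, dummy, interaction

# Hypothetical background index for five students of one school.
background = [0.4, None, -0.2, 1.0, None]
imputed, dummy, interaction = impute_with_flag(background)
```

All three lists would then enter the regression as separate columns: the dummy shifts the intercept for imputed cases and the interaction term shifts their slope.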

The results of each model are available on request.

The sampling scheme adopted by INVALSI is similar to the scheme used by IEA TIMSS. In the first stage, several schools in each region were selected by probabilistic sampling, with probability of inclusion proportional to school size. In the second stage, one or two classes for each tested grade within each treated school were selected by simple random sampling.
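A two-stage design of this kind can be sketched as below. The note does not say which probability-proportional-to-size (PPS) procedure is used, so systematic PPS is assumed here purely for illustration; the school names, sizes, class labels and the `classes_per_school` parameter are hypothetical.

```python
import random
from bisect import bisect_right
from itertools import accumulate

def pps_systematic(sizes, n, rng):
    """Pick n unit indices with inclusion probability proportional to size.

    Systematic PPS: lay the units end to end, then take n equally spaced
    points from a random start. Assumes no unit exceeds the interval.
    """
    step = sum(sizes) / n
    if max(sizes) > step:
        raise ValueError("a unit is larger than the sampling interval")
    cumulative = list(accumulate(sizes))
    start = rng.random() * step
    picks = [bisect_right(cumulative, start + k * step) for k in range(n)]
    return [min(i, len(sizes) - 1) for i in picks]  # guard against float rounding

def two_stage_sample(schools, n_schools, classes_per_school, rng):
    """Stage 1: schools by PPS. Stage 2: simple random sample of classes."""
    chosen = pps_systematic([s["size"] for s in schools], n_schools, rng)
    sample = {}
    for i in chosen:
        school = schools[i]
        k = min(classes_per_school, len(school["classes"]))
        sample[school["name"]] = rng.sample(school["classes"], k)
    return sample

# Hypothetical schools of one region (size = enrolled students).
schools = [
    {"name": "S1", "size": 120, "classes": ["5A", "5B", "5C"]},
    {"name": "S2", "size": 80, "classes": ["5A", "5B"]},
    {"name": "S3", "size": 200, "classes": ["5A", "5B", "5C", "5D"]},
    {"name": "S4", "size": 150, "classes": ["5A", "5B", "5C"]},
    {"name": "S5", "size": 60, "classes": ["5A"]},
    {"name": "S6", "size": 90, "classes": ["5A", "5B"]},
    {"name": "S7", "size": 100, "classes": ["5A", "5B"]},
]
rng = random.Random(42)
sample = two_stage_sample(schools, n_schools=3, classes_per_school=2, rng=rng)
```

Larger schools cover a longer stretch of the cumulative size scale, so they are more likely to be hit by one of the equally spaced points; within a selected school every class has the same chance of being drawn.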

Since the correction of class score is applied only if the observed score is higher than the estimated one (4), the potential score will always be less than or equal to the observed one (\( \tilde{\mu}_{j}^{nm} \le \mu_{j}^{nm} \)), and consequently the correction factor will range from 0 to 1 (\( 0 \le Co \le 1 \)).

The Bravais-Pearson correlation between math test scores and quarter grades is equal to 0.52 for the 5th grade students of monitored classes (school year 2013/14).

The geometric mean was chosen over the arithmetic mean for two reasons: it balances the two plausibility indices better when one of them takes a particularly high value, and it is always lower than or equal to the arithmetic mean. Consequently, the geometric mean tends to produce lower values of the cheating index, in line with the logic underlying the newly proposed procedure.
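A small numerical comparison makes the argument concrete. The index values below are hypothetical and only illustrate how the two means behave when the indices disagree.

```python
from math import sqrt

def arithmetic_mean(a, b):
    """Ordinary average of two indices."""
    return (a + b) / 2

def geometric_mean(a, b):
    """Square root of the product; never exceeds the arithmetic mean."""
    return sqrt(a * b)

# Hypothetical pairs of plausibility indices, each in [0, 1].
pairs = [(0.9, 0.1), (0.5, 0.5), (1.0, 0.0)]
comparison = [(a, b, arithmetic_mean(a, b), geometric_mean(a, b)) for a, b in pairs]

for a, b, am, gm in comparison:
    print(f"indices=({a}, {b})  arithmetic={am:.3f}  geometric={gm:.3f}")
```

When the two indices agree, the means coincide; when one index is high and the other low, the geometric mean is pulled towards the lower one, so a class is flagged strongly only if both indices point the same way.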

This subset of classes (with simulated cheating) is selected by random draws from a uniform distribution.

The beta distribution is used to model phenomena that are constrained to be between 0 and 1, such as probabilities, proportions, and percentages. The two shape parameters, α and β, are estimated within each macro area through the maximum likelihood method (see, also, Kotz 2006, for further details).
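Maximum likelihood estimation of the two shape parameters can be sketched with the standard library alone. The optimizer below (a simple compass search started from method-of-moments values) is an illustrative choice, not the procedure used by the authors, and the simulated sample with shapes 2 and 5 is hypothetical.

```python
import math
import random

def fit_beta_mle(xs, tol=1e-6):
    """Estimate beta shape parameters (alpha, beta) by maximum likelihood.

    xs must lie strictly between 0 and 1 and must not all be equal.
    """
    n = len(xs)
    sum_log_x = sum(math.log(x) for x in xs)
    sum_log_1mx = sum(math.log1p(-x) for x in xs)

    def loglik(a, b):
        log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
        return n * log_norm + (a - 1) * sum_log_x + (b - 1) * sum_log_1mx

    # Method-of-moments estimates give a starting point near the optimum.
    m = sum(xs) / n
    v = sum((x - m) ** 2 for x in xs) / n
    common = m * (1 - m) / v - 1
    a, b = m * common, (1 - m) * common

    # Compass search: try a step along each axis, halve the step when stuck.
    best, step = loglik(a, b), 0.5
    while step > tol:
        improved = False
        for da, db in ((step, 0), (-step, 0), (0, step), (0, -step)):
            na, nb = a + da, b + db
            if na > 0 and nb > 0 and loglik(na, nb) > best:
                a, b, best = na, nb, loglik(na, nb)
                improved = True
        if not improved:
            step /= 2
    return a, b

# Hypothetical data for one macro area, simulated from a beta(2, 5).
rng = random.Random(0)
sample = [rng.betavariate(2, 5) for _ in range(5000)]
alpha_hat, beta_hat = fit_beta_mle(sample)
```

In practice one would fit a separate pair of parameters for each macro area by calling the function on that area's data. Where SciPy is available, `scipy.stats.beta.fit` with the location and scale fixed at 0 and 1 is a common alternative.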

See Hastie et al. (2009) for an extended discussion of the CART approach.

The propensity score method was first introduced by Rosenbaum and Rubin (1983) for the estimation of causal treatment effects. In recent years, many scholars have used this method in a predictive fashion to obtain imputed values of a target variable (Borra et al. 2013; Gimenez-Nadal and Molina 2013).

Currently, INVALSI conducts a sample survey that collects a large set of school variables through a questionnaire administered to school principals. In the future, this survey is planned to be extended to all schools in order to create an integrated database that combines student-level and school-level information.

References

Agasisti T, Longobardi S (2014) Inequality in education: can Italian disadvantaged students close the gap? J Behav Exp Econ 52:8–20

Ahn T, Vigdor J (2014) The impact of No Child Left Behind’s accountability sanctions on school performance: regression discontinuity evidence from North Carolina. NBER WP 20511

Angrist J, Battistin E, Vuri D (2017) In a small moment: class size and moral hazard in the Italian mezzogiorno. Am Econ J Appl Econ 9(4):216–249

Apperson J, Bueno C, Sass TR (2016) Do the cheated ever prosper? The long-run effects of test-score manipulation by teachers on student outcomes. CALDER working paper 155

Bertoni M, Brunello G, Rocco L (2013) When the cat is near the mice won’t play: the effect of external examiners in Italian schools. J Public Econ 104:65–77

Borra C, Sevilla A, Gershuny JI (2013) Calibrating time use estimates for the British household panel survey. Soc Indic Res 114(3):1211–1224

Bratti M, Checchi D, Filippin A (2007) Geographical differences in Italian students’ mathematical competencies: evidence from PISA 2003. Giornale degli Economisti e Annali di Economia 66(3):299–333

Breiman L, Friedman J, Olshen R, Stone C (1984) Classification and regression trees. Wadsworth, Belmont

Brunello G, Checchi D (2007) Does school tracking affect equality of opportunity? New international evidence. Econ Policy 22:781–861

Cohodes S (2016) Teaching to the student: charter school effectiveness in spite of perverse incentives. Educ Finance Policy 11(1):1–42

Dee TS, Dobbie W, Jacob BA, Rockoff J (2016) The causes and consequences of test score manipulation: evidence from the New York regents examinations. NBER WP 22165

Diamond R, Persson P (2016) The long-term consequences of teacher discretion in grading of high-stakes tests. NBER WP 22207

Ferrer-Esteban G (2013) Rationale and incentives for cheating in the standardized tests of the Italian assessment system. FGA working paper, Giovanni Agnelli Foundation (Turin)

Figlio DN (2006) Testing, crime and punishment. J Public Econ 90(4–5):837–851

Figlio DN, Getzler LS (2006) Accountability, ability, and disability: gaming the system? In: Gronberg TG, Jansen DW (eds) Advances in applied microeconomics, 14. Elsevier Science Press, Oxford, pp 35–49

Fryer RG Jr, Levitt SD, List JA, Sadoff S (2012) Enhancing the efficacy of teacher incentives through loss aversion: a field experiment. NBER working paper 18237

Fuchs T, Woessmann L (2007) What accounts for international differences in student performance? A re-examination using PISA data. Empir Econ 32(2/3):433–464

Gimenez-Nadal JI, Molina JA (2013) Parents’ education as a determinant of educational childcare time. J Popul Econ 26(2):719–749

Hastie T, Tibshirani R, Friedman J (2009) The elements of statistical learning: data mining, inference, and prediction. Springer Series in Statistics, New York

Hussain I (2012) Subjective performance evaluation in the public sector: evidence from school inspections. CEE discussion paper 135, London School of Economics

INVALSI (2012) Rilevazioni nazionali sugli apprendimenti 2011–2012. Invalsi (Roma)

Jacob BA (2005) Accountability, incentives and behavior: the impact of high-stakes testing in the Chicago Public Schools. J Public Econ 89:761–796

Jacob BA, Levitt SD (2003) Rotten apples: an investigation of the prevalence and predictors of teacher cheating. Q J Econ 118(3):843–877

Kotz S (2006) Handbook of beta distribution and its applications. Biometrics 62:309–310

Lavy V (2009) Performance pay and teachers’ effort, productivity, and grading ethics. Am Econ Rev 99(5):1979–2011

Lazear PE (2006) Speeding, terrorism and teaching to the test. Q J Econ 121(3):1029–1061

Lucifora C, Tonello M (2015) Cheating and social interactions. Evidence from a randomized experiment in a national evaluation program. J Econ Behav Organ 115(C):45–66

Lucifora C, Tonello M (2016) Monitoring and sanctioning cheating at school: what works? Evidence from a national evaluation program, DISCE-working papers, 51, Dipartimento di Economia e Finanza

Martinelli C, Parker SW, Pérez-Gea AC, Rodrigo R (2015) Cheating and incentives: learning from a policy experiment. Interdisciplinary Center for Economic Science, George Mason University, working paper, October 2015

Mechtenberg L (2009) Cheap talk in the classroom: how biased grading at school explains gender differences in achievements, career choices and wages. Rev Econ Stud 76:1431–1459

Neal D, Schanzenbach DW (2010) Left behind by design: proficiency counts and test-based accountability. Rev Econ Stat 92:263–283

Paccagnella M, Sestito P (2014) School cheating and social capital. Educ Econ 22(4):367–388. https://doi.org/10.1080/09645292.2014.904277

Pereda-Fernández S (2016) A new method for the correction of test scores manipulation. Working paper no. 1047, Bank of Italy

Quintano C, Castellano R, Longobardi S (2009) A fuzzy clustering approach to improve the accuracy of Italian student data. Stat Appl 7(2):149–171

Quintano C, Castellano R, Longobardi S (2012) The effects of socioeconomic background and test-taking motivation on Italian students’ achievement. In: Di Ciaccio A, Coli M, Ibañez JMA (eds) Advanced statistical methods for the analysis of large data-sets. Springer, Berlin, pp 1–484

Raudenbush SW, Bryk AS (1992) Hierarchical linear models. Sage, Newbury Park

Rosenbaum P, Rubin D (1983) The central role of the propensity score in observational studies for causal effects. Biometrika 70:41–55

Snijders T, Bosker R (1999) Multilevel analysis. Sage Publications, London

Wesolowsky G (2000) Detecting excessive similarity in answers on multiple choice exams. J Appl Stat 27(7):909–921

Zhao Z (2008) Sensitivity of propensity score methods to the specifications. Econ Lett 98(3):309–319

Acknowledgments

We are extremely grateful to Paolo Sestito (Bank of Italy) for his conceptual and theoretical guidance. We are indebted to Roberto Ricci (INVALSI), Giovanni De Luca (University of Naples “Parthenope”) and Federica Gioia (University of Naples “Parthenope”) for helpful comments and discussions. The authors also thank the editor and the two anonymous referees for their valuable suggestions.

Cite this article

Longobardi, S., Falzetti, P. & Pagliuca, M.M. Quis custiodet ipsos custodes? How to detect and correct teacher cheating in Italian student data. Stat Methods Appl 27, 515–543 (2018). https://doi.org/10.1007/s10260-018-0426-2

DOI: https://doi.org/10.1007/s10260-018-0426-2