Abstract

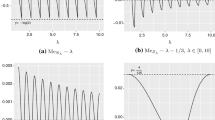

The asymptotic relative efficiency of the mean deviation with respect to the standard deviation is 88 % at the normal distribution. In his seminal 1960 paper "A survey of sampling from contaminated distributions", J. W. Tukey points out that, if the normal distribution is contaminated by a small \(\epsilon \)-fraction of a normal distribution with three times the standard deviation, the mean deviation is more efficient than the standard deviation, already for \(\epsilon < 1\,\%\). In the present article, we examine the efficiency of Gini’s mean difference (the mean of all pairwise distances). Our results may be summarized by saying that Gini’s mean difference combines the advantages of the mean deviation and the standard deviation. In particular, an analytic expression for the finite-sample variance of Gini’s mean difference at the normal mixture model is derived by means of the residue theorem. This expression is then used to determine the contamination fraction in Tukey’s 1:3 normal mixture distribution that renders Gini’s mean difference and the standard deviation equally efficient. We further compute the influence function of Gini’s mean difference and carry out extensive finite-sample simulations.

Notes

Here, the choice of the location estimator is unambiguous: high breakdown point robustness is the main selling feature of the MAD.

For simplicity, we define the \(p\)-quantile of distribution \(F\) as the value of the quantile function \(F^{-1}(p) = \inf \{ x |\, F(x) \ge p\}\). For all population distributions we consider, there is no ambiguity, but note that \(\hat{F}_n^{-1}(1/2)\) and the sample median \(md(\hat{F}_n)\) as defined above are generally different.
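The difference between the two notions is easy to see for an even sample size. The following minimal sketch takes the empirical quantile to be the smallest observation \(x\) with \(\hat{F}_n(x) \ge p\) and, as an assumption on our part, takes the sample median to be the midpoint of the two central order statistics when \(n\) is even. The function names are hypothetical and chosen for illustration only.

```python
import math

def empirical_quantile(sample, p):
    """Smallest observation x with F_n(x) >= p, i.e. inf{x : F_n(x) >= p}."""
    xs = sorted(sample)
    n = len(xs)
    k = max(1, math.ceil(n * p))  # number of order statistics needed to reach mass p
    return xs[k - 1]

def sample_median(sample):
    """Assumed convention: average the two central order statistics for even n."""
    xs = sorted(sample)
    n = len(xs)
    mid = n // 2
    return xs[mid] if n % 2 == 1 else 0.5 * (xs[mid - 1] + xs[mid])

even = [1.0, 2.0, 4.0, 7.0]
odd = [1.0, 2.0, 4.0]
print(empirical_quantile(even, 0.5), sample_median(even))  # 2.0 3.0
print(empirical_quantile(odd, 0.5), sample_median(odd))    # 2.0 2.0
```

For odd \(n\) the two values coincide, whereas for even \(n\) the empirical quantile returns the lower of the two central observations.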

References

Ahlfors LV (1966) Complex analysis, 2nd edn. McGraw-Hill, New York

Babu GJ, Rao CR (1992) Expansions for statistics involving the mean absolute deviations. Ann Inst Stat Math 44(2):387–403

Bickel PJ, Lehmann EL (1976) Descriptive statistics for nonparametric models, III. Dispersion. Ann Stat 4(6):1139–1148

Gorard S (2005) Revisiting a 90-year-old debate: the advantages of the mean deviation. Br J Educ Stud 53(4):417–430

Hall P, Welsh A (1985) Limit theorems for the median deviation. Ann Inst Stat Math 37(1):27–36

Hampel FR (1974) The influence curve and its role in robust estimation. J Am Stat Assoc 69:383–393

Hampel FR, Ronchetti EM, Rousseeuw PJ, Stahel WA (1986) Robust statistics. The approach based on influence functions. Wiley series in probability and mathematical statistics. Wiley, New York

Hoeffding W (1948) A class of statistics with asymptotically normal distribution. Ann Math Stat 19:293–325

Hojo T (1931) Distribution of the median, quartiles and interquartile distance in samples from a normal population. Biometrika 23(3–4):315–360

Huber PJ, Ronchetti EM (2009) Robust statistics. Wiley series in probability and statistics, 2nd edn. Wiley, Hoboken

Kenney F, Keeping E (1952) Mathematics of statistics. Part two. D. Van Nostrand Company, Inc., Princeton

Lax DA (1985) Robust estimators of scale: finite-sample performance in long-tailed symmetric distributions. J Am Stat Assoc 80(391):736–741

Lomnicki ZA (1952) The standard error of Gini’s mean difference. Ann Math Stat 23(4):635–637

Nair US (1936) The standard error of Gini’s mean difference. Biometrika 28:428–436

Pham-Gia T, Hung T (2001) The mean and median absolute deviations. Math Comput Model 34(7):921–936

R Development Core Team (2010) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna. http://www.R-project.org/. ISBN:3-900051-07-0

Rousseeuw P, Croux C, Todorov V, Ruckstuhl A, Salibian-Barrera M, Verbeke T, Koller M, Maechler M (2014) robustbase: basic robust statistics. R package version 0.91-1. http://CRAN.R-project.org/package=robustbase

Rousseeuw PJ, Croux C (1993) Alternatives to the median absolute deviation. J Am Stat Assoc 88(424):1273–1283

Tukey JW (1960) A survey of sampling from contaminated distributions. In: Olkin I et al (eds) Contributions to probability and statistics. Essays in honor of Harold Hotelling. Stanford University Press, Stanford, pp 448–485

Yitzhaki S (2003) Gini’s mean difference: a superior measure of variability for non-normal distributions. Metron 61(2):285–316

Acknowledgments

We are indebted to Herold Dehling for introducing us to the theory of U-statistics, to Roland Fried for introducing us to robust statistics, and to Alexander Dürre, who has demonstrated the benefit of complex analysis for solving statistical problems. Both authors were supported in part by the Collaborative Research Centre 823 Statistical modelling of nonlinear dynamic processes.

Appendices

Appendix 1: Proofs

Towards the proof of Theorem 1, we spend a few words on the derivation of the corresponding result for the normal distribution. When evaluating the integral \(J\), cf. (9), for the standard normal distribution, one encounters the integral

where \(\phi \) and \(\varPhi \) denote the density and the cdf of the standard normal distribution, respectively. Nair (1936) gives the value \(I_1 = 1/3 + 1/(2\pi \sqrt{3})\), resulting in \(J = \sqrt{3}/(2 \pi ) - 1/6\), but does not provide a proof. The author refers to the derivation of a similar integral (integral 8 in Table I, Nair 1936, p. 433), for which we find both the result and the derivation doubtful, and to an article by Hojo (1931), which gives numerical values for several integrals but does not explain the value of \(I_1\) either. We therefore include a proof here. Writing \(\varPhi (x)\) as the integral of its density and changing the order of integration in the resulting three-dimensional integral yields

Solving the inner integral, we obtain

Introducing polar coordinates \(\alpha , r\) such that \(y = r \cos \alpha \), \(z = r \sin \alpha \), and solving the integral with respect to \(r\), we arrive at

This remaining integral may be solved by means of the residue theorem (e.g. Ahlfors 1966, p. 149). Substituting \(\gamma = e^{i \alpha }\) and using \(\sin \alpha = (e^{i \alpha } - e^{- i \alpha })/(2i)\), we transform \(I_1\) into the following line integral in the complex plane,

where \(\varGamma _0\) is the upper unit half circle in the complex plane, cf. Fig. 4. Let \(h\) denote the integrand in (10). Its poles (both of order two) are \(\gamma _{1,2} = (2\pm \sqrt{3})i\), so that only \(\gamma _2 = (2 - \sqrt{3})i\) lies within the closed upper half unit circle \(\varGamma \). The residue of \(h\) at \(\gamma _2\) is \(-\sqrt{3} i /2\). Integrating \(h\) along \(\varGamma _1\), i.e. the real line segment from \(-1\) to \(1\), cf. Fig. 4, and applying the residue theorem to the closed line integral along \(\varGamma \) completes the derivation.
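The value of \(I_1\) quoted from Nair (1936) can be checked numerically. The sketch below assumes that \(I_1\) is the integral of \(x^2 \phi (x) \varPhi (x)^2\) over the real line. This integrand is our reading, chosen because it is consistent with the stated closed form and with the description of \(\varPhi (x)\) being expanded into a three-dimensional integral; it is not quoted from the display equation. The helper `simpson` is a hypothetical name for a plain composite Simpson rule.

```python
import math

def phi(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def Phi(x):
    """Standard normal cdf, expressed through the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def simpson(f, a, b, n=4000):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3.0

# Assumed integrand for I_1 (our reading, not taken from the text).
i1_numeric = simpson(lambda x: x * x * phi(x) * Phi(x) ** 2, -12.0, 12.0)

# Closed form stated in the text.
i1_closed = 1.0 / 3.0 + 1.0 / (2.0 * math.pi * math.sqrt(3.0))

print(i1_numeric, i1_closed)
```

Truncating the range at \(\pm 12\) is harmless here because the integrand is negligible beyond that point.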

Proof

(Proof of Theorem 1)

Evaluating the integral \(J\) for the normal mixture distribution, we arrive after lengthy calculations at

where

for all \(\lambda > 0\). As before, \(\phi \) and \(\varPhi \) denote the density and the cdf of the standard normal distribution. The tricky integrals are \(C(\lambda )\) and \(D(\lambda )\), which, for \(\lambda = 1\), both reduce to the integral \(I_1\) above. Proceeding as before for the integral \(I_1\), solving the respective two inner integrals yields

These integrals are again solved by the residue theorem, which completes the proof. \(\square \)

For the proof of Theorem 2, the following identities are helpful:

where \(c_{\nu }\) is the scaling factor of the \(t_\nu \) density, cf. Table 1. The identities (12) and (13) can be obtained by transforming the respective left-hand sides into \(t_\nu \)-densities by substituting \(y = ((2\nu -1)/\nu )^{1/2} \, x\) and \(y = ((3\nu -2)/\nu )^{1/2}\, x\), respectively.
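The mechanism behind such substitutions can be illustrated numerically. The sketch below does not reproduce (12) itself. It assumes the standard normalizing constant \(c_\nu = \varGamma ((\nu +1)/2)/(\sqrt{\nu \pi }\,\varGamma (\nu /2))\) and checks one identity of the kind the first substitution produces: with \(y = ((2\nu -1)/\nu )^{1/2} x\), the function \((1 + x^2/\nu )^{-\nu }\) becomes the kernel of a \(t_{2\nu -1}\) density, so its integral over the real line should equal \((\nu /(2\nu -1))^{1/2} / c_{2\nu -1}\). The choice \(\nu = 3\) and all function names are ours.

```python
import math

def c(nu):
    """Normalizing constant of the t_nu density (standard form, assumed here)."""
    return math.gamma((nu + 1) / 2.0) / (math.sqrt(nu * math.pi) * math.gamma(nu / 2.0))

def simpson(f, a, b, n):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3.0

nu = 3.0

# Left-hand side: brute-force integral of (1 + x^2/nu)^(-nu); the tails beyond
# |x| = 200 decay like x^(-6) and contribute less than 1e-10.
lhs = simpson(lambda x: (1.0 + x * x / nu) ** (-nu), -200.0, 200.0, 200_000)

# Right-hand side: Jacobian of the substitution times the integral of the
# unnormalized t_{2 nu - 1} kernel, which is 1 / c_{2 nu - 1}.
rhs = math.sqrt(nu / (2.0 * nu - 1.0)) / c(2.0 * nu - 1.0)

print(lhs, rhs)
```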

Proof

(Proof of Theorem 2) For computing \(g\), we evaluate (7), successively making use of (11) and (12), and obtain

which can be written as in Theorem 2 by using \(B(x,y) = \varGamma (x)\varGamma (y)/\varGamma (x+y)\). For evaluating \(J\), we write \(J\) as \(J = \int _\mathbb {R}A(x) f_{\nu }(x)\, dx\) with \(f_\nu \) being the \(t_\nu \) density and

Using (11), we obtain

and

Hence, \(J = B_1 + B_2 - B_3\) with

where \(F_\nu \) is the cdf of the \(t_\nu \) distribution. By employing (11) and (13), we find

and arrive, again by employing \(B(x,y) = \varGamma (x)\varGamma (y)/\varGamma (x+y)\), at the expression for \(J\) given in Theorem 2. \(\square \)

The remaining integral

cannot be solved by the same means as the analogous integral \(I_1\) for the normal distribution, and we state this as an open problem. However, this one-dimensional integral can easily be approximated numerically, and the expression is quickly entered into mathematical software such as R (R Development Core Team 2010).

Proof

(Proof of Proposition 1) We have

and hence

which completes the proof. \(\square \)

With the influence function known, it is also possible to use the relationship

instead of referring to the terms given in Sect. 2 to compute the asymptotic variance of the estimators. This leads to the same integrals.
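As an illustration for Gini’s mean difference at the standard normal distribution, the following sketch evaluates the asymptotic variance as the integral of the squared influence function with respect to \(F\). It rests on assumptions that are ours and not quoted from Proposition 1: the influence function is taken in the usual U-statistic form \(IF(x) = 2\,(E|x - Y| - g)\), with \(E|x-Y| = x(2\varPhi (x) - 1) + 2\phi (x)\) and \(g = 2/\sqrt{\pi }\) for \(Y \sim N(0,1)\). The result is compared with \(4\,(1/3 + 2\sqrt{3}/\pi - 4/\pi )\), the leading term of the variance formula of Nair (1936).

```python
import math

def phi(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def Phi(x):
    """Standard normal cdf, expressed through the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def simpson(f, a, b, n=4000):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3.0

g = 2.0 / math.sqrt(math.pi)  # Gini's mean difference of N(0,1)

def influence(x):
    """Assumed influence function of Gini's mean difference at N(0,1)."""
    expected_abs_diff = x * (2.0 * Phi(x) - 1.0) + 2.0 * phi(x)  # E|x - Y|
    return 2.0 * (expected_abs_diff - g)

# The influence function should integrate to zero under F.
if_mean = simpson(lambda x: influence(x) * phi(x), -12.0, 12.0)

# Asymptotic variance as the integral of IF^2 with respect to F.
asv_numeric = simpson(lambda x: influence(x) ** 2 * phi(x), -12.0, 12.0)
asv_closed = 4.0 * (1.0 / 3.0 + 2.0 * math.sqrt(3.0) / math.pi - 4.0 / math.pi)

print(if_mean, asv_numeric, asv_closed)
```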

Appendix 2: Miscellaneous

Lemma 1

For \(X_1,\ldots , X_n\) being independent and \(U(a,b)\) distributed for \(a,b \in \mathbb {R}\), \(a < b\), we have for the sample mean deviation (about the median)

Proof

For notational convenience we restrict our attention to the case \(a=0\), \(b=1\). Let \(X_{(i)}\) denote the \(i\)th order statistic, \(1 \le i \le n\). The random variable \(X_{(i)}\) has a Beta\((\alpha ,\beta )\) distribution with parameters \(\alpha = i\) and \(\beta = n+1-i\), and hence \(E(X_{(i)}) = i/(n+1)\). If \(n\) is odd, we write \(d_n\) as \(d_n = (n-1)^{-1} \sum _{i=1}^{\lfloor n/2 \rfloor } (X_{(n+1-i)} - X_{(i)})\) and obtain

If \(n\) is even, we have \(d_n = (n-1)^{-1} \sum _{i=1}^{n/2} (X_{(n+1-i)} - X_{(i)})\), and hence

which completes the proof. \(\square \)
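The expectations in this proof can be evaluated exactly with rational arithmetic. The sketch below uses only the two facts stated above, \(E(X_{(i)}) = i/(n+1)\) and \(d_n = (n-1)^{-1} \sum _{i=1}^{\lfloor n/2 \rfloor } (X_{(n+1-i)} - X_{(i)})\), for the case \(a = 0\), \(b = 1\). By our own evaluation of the sums, the result is \(1/4\) for odd \(n\) and \(n^2/(4(n^2-1))\) for even \(n\); for general \(U(a,b)\) these values scale by \(b - a\). The function name is hypothetical.

```python
from fractions import Fraction

def expected_mean_deviation_uniform01(n):
    """E(d_n) for a U(0,1) sample of size n >= 2, via E(X_(i)) = i/(n+1)."""
    total = sum(
        Fraction(n + 1 - i, n + 1) - Fraction(i, n + 1)  # E(X_(n+1-i)) - E(X_(i))
        for i in range(1, n // 2 + 1)
    )
    return total / (n - 1)

for n in (3, 4, 5, 10):
    print(n, expected_mean_deviation_uniform01(n))
# 3 1/4
# 4 4/15
# 5 1/4
# 10 25/99
```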

Cite this article

Gerstenberger, C., Vogel, D. On the efficiency of Gini’s mean difference. Stat Methods Appl 24, 569–596 (2015). https://doi.org/10.1007/s10260-015-0315-x

DOI: https://doi.org/10.1007/s10260-015-0315-x

Keywords

- Influence function

- Mean deviation

- Median absolute deviation

- Normal mixture distribution

- Residue theorem

- Robustness

- \(Q_n\)

- Standard deviation