Abstract

We introduce a novel objective function for space adjustment when no supervisor is available. The objective function seeks to minimize the difference between the distributions of the transformed original and test-data spaces, and optimizing it preserves the local structural information present in the original space. We propose two techniques to preserve this structural information: (a) identifying pairs of examples that are as close as possible in the original space and minimizing the distance between these pairs after transformation, and (b) preserving, during transformation, the naturally occurring clusters present in the original space. This cost function, together with its constraints, results in a nonlinear objective function that is used to estimate the weight matrix. An iterative framework is employed to optimize the objective function, yielding a suboptimal solution. Next, using an orthogonality constraint, the optimization task is reformulated on the Stiefel manifold. Empirical examination on real-world datasets indicates that the proposed method performs better than recently published state-of-the-art methods.

Appendices

Appendix A: Nonparametric density-based clustering (NPDBC)

A.1 NPDBC

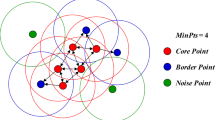

The clustering technique is based on computing the density distribution of the dataset in the original space. The employed algorithm is a nonparametric density-based clustering (NPDBC) method. NPDBC partitions a given dataset by estimating the data density with Parzen window estimation. The window size is set to \( N_{X}^{-0.33} \) for each attribute, where \( N_{X} \) stands for the number of samples in the original space. The data density distribution is first projected onto the direction of greatest spread. The peaks of the resulting probability density distribution (PDD) are then located, and all samples located around a peak are assigned to the same cluster. The number of clusters therefore equals the number of peaks in the PDD. The sole reason for employing NPDBC for data partitioning is to avoid a preliminary guess of the number of clusters in the dataset.
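The procedure described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `npdbc`, the Gaussian kernel, the evaluation grid, the scaling of the window by the standard deviation of the projected data, and the use of density valleys as cluster boundaries are all choices made here for illustration. The sketch also projects the samples first and then estimates a one-dimensional density, which is a simplification of estimating the density in the original space and projecting it.

```python
import numpy as np

def npdbc(X, grid_size=512):
    """Cluster the rows of X by the peaks of a 1-D Parzen density estimate."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    # Direction of greatest spread: first principal axis of the centred data.
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    z = Xc @ vt[0]
    # Window width N^(-0.33); multiplying by the spread of z is an assumption.
    h = n ** (-0.33) * max(z.std(), 1e-12)
    grid = np.linspace(z.min() - 3 * h, z.max() + 3 * h, grid_size)
    # Gaussian Parzen window estimate evaluated on the grid (kernel is assumed).
    dens = np.exp(-0.5 * ((grid[:, None] - z[None, :]) / h) ** 2).sum(axis=1)
    dens /= n * h * np.sqrt(2.0 * np.pi)
    # Peaks: interior grid points higher than their left neighbour and
    # at least as high as their right neighbour.
    inner = dens[1:-1]
    peaks = np.where((inner > dens[:-2]) & (inner >= dens[2:]))[0] + 1
    # The lowest density point between consecutive peaks separates two clusters.
    bounds = [grid[p + np.argmin(dens[p:q + 1])]
              for p, q in zip(peaks[:-1], peaks[1:])]
    labels = np.searchsorted(np.asarray(bounds, dtype=float), z)
    return labels, grid[peaks]
```

The number of clusters is never passed in; it falls out of the number of peaks found, which is the property the text gives as the reason for using NPDBC.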

Appendix B: List of symbols used in the manuscript

See Table 6.

Appendix C: Analytical solutions for the iterative method in Algorithm 1

Substituting \( W_{X} = U_{X}\Psi _{X} + U_{Y}\Omega _{X} \) in the constraint \( W_{X}^{\text{T}} C_{X} W_{X} = I \) given in Eqs. (6) and (10) gives:

Then, from above,

where \( \Upsilon _{X} \) is an orthogonal matrix. Next, we substitute \( W_{X} \) in the term \( W_{X}^{\text{T}} \tilde{P}_{X} W_{X} \) of \( {\text{Cost}}\left( {W_{X} ,W_{Y} } \right) \) in Eq. (10) (or Eq. 6), to obtain:

where \( \tilde{P}_{X} = \left( {I + \mu_{X}^{\text{T}} \mu_{X} + X^{\text{T}} C^{{P^{X} }} X - S^{X} } \right) \) in Eq. (10) (and \( \tilde{P}_{X} = \left( {I + \mu_{X}^{\text{T}} \mu_{X} + X^{\text{T}} C^{{P^{X} }} X - S^{X} } \right) \) in Eq. 6). The cost function used in Eq. (10) (similar to that in Eq. 6) is:

Taking the derivative of the above expression and substituting from Eq. (C2), we get:

To get the optimal value of \( \Omega _{X} \), we set \( \frac{\partial }{{\partial\Omega _{X} }}{\text{Cost}}\left( {W_{X} ,W_{Y} } \right) = 0 \). This gives:

Substituting \( W_{Y} = U_{Y}\Psi _{Y} + U_{X}\Omega _{Y} \) in the constraint \( W_{Y}^{\text{T}} C_{Y} W_{Y} = I \) given in Eqs. (6) and (10) gives:

Then, from above,

where \( \Upsilon _{Y} \) is an orthogonal matrix. Next, we substitute \( W_{Y} \) in the term \( W_{Y}^{\text{T}} \tilde{P}_{Y} W_{Y} \) of \( {\text{Cost}}\left( {W_{X} ,W_{Y} } \right) \) in Eq. (10) (or Eq. 6), to obtain:

where \( \tilde{P}_{Y} = \left( {I + \mu_{Y}^{\text{T}} \mu_{Y} + Y^{\text{T}} C^{{P^{Y} }} Y - S^{Y} } \right) \) in Eq. (10) (and \( \tilde{P}_{Y} = \left( {I + \mu_{Y}^{\text{T}} \mu_{Y} + Y^{\text{T}} C^{{P^{Y} }} Y - S^{Y} } \right) \) in Eq. 6). With the same analysis of Eq. (C4), we have:

\( \Psi _{X} \) (or \( \Psi _{Y} \)) has been stated in terms of the matrix variable \( \Upsilon _{X} \) (or \( \Upsilon _{Y} \)) in Eq. (C1) (or Eq. C5). Next, we seek the \( \Psi _{X} \) (or \( \Psi _{Y} \)) with the least norm under the condition that \( \Omega _{X} \) (or \( \Omega _{Y} \)) is constant. For this purpose, we find the value of matrix \( \Upsilon _{X} \) (or matrix \( \Upsilon _{Y} \)) that yields the least value of \( {\text{tr}}\left( {\Psi _{X}^{\text{T}}\Psi _{X} } \right) \) (or \( {\text{tr}}\left( {\Psi _{Y}^{\text{T}}\Psi _{Y} } \right) \)). This is shown below:

Equations (C1), (C4), (C5), (C7), (C8) and (C9) have been used as Eqs. (11)–(13), to iteratively obtain the suboptimal value of W in Algorithm 1.

Appendix D: Solution to nonlinear constraint for optimization

Here, we provide a simplification of the quadratic constraint in Eqs. (6) and (10), to a linear form. As the distribution of \( \tilde{X} \) and \( \tilde{Y} \) should be the same, we can say:

This equation suggests that matrix \( W_{X} \) (or matrix \( W_{Y} \)) is the geometric mean of \( C_{X}^{ - 1} \) (or \( C_{Y}^{ - 1} \)) and \( I \). If we use the relaxation that matrix \( W_{X} \) is symmetric, then we get

Let \( U_{pX} \) be the upper triangular matrix obtained by Cholesky decomposition of \( C_{X} \), i.e., \( C_{X} = U_{pX}^{\text{T}} U_{pX} \), and let \( V_{X} = U_{pX}^{ - 1} \). If \( R_{X}^{2} = U_{pX} IU_{pX}^{\text{T}} = U_{pX} U_{pX}^{\text{T}} \), then \( I = V_{X} R_{X}^{2} V_{X}^{\text{T}} \). Then,

where \( \Upsilon _{X} \) is an orthogonal matrix. By the same analysis, if matrix \( W_{Y} \) is symmetric, \( U_{pY} \) is the upper triangular matrix obtained by Cholesky decomposition of \( C_{Y} \), \( V_{Y} = U_{pY}^{ - 1} \), and \( R_{Y}^{2} = U_{pY} U_{pY}^{\text{T}} \), we have

where \( \Upsilon _{Y} \) is an orthogonal matrix.
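The role of the Cholesky factor and the orthogonal matrix in this appendix can be checked numerically. The sketch below is only an illustration under stated assumptions: a random sample covariance stands in for \( C_{X} \), the orthogonal matrix is arbitrary, and the three candidate matrices are consequences of the definitions above rather than a reproduction of the paper's numbered equations. It verifies that the symmetric inverse square root of \( C_{X} \), any matrix of the form \( V_{X} \) times an orthogonal matrix, and the symmetric product \( V_{X} R_{X} V_{X}^{\text{T}} \) all satisfy \( W_{X}^{\text{T}} C_{X} W_{X} = I \).

```python
import numpy as np

rng = np.random.default_rng(0)
samples = rng.normal(size=(50, 4))
C = np.cov(samples, rowvar=False)      # stand-in for C_X (symmetric positive definite)

# Symmetric relaxation: W = C^{-1/2}, the geometric mean of C^{-1} and I.
evals, evecs = np.linalg.eigh(C)
W_sym = evecs @ np.diag(evals ** -0.5) @ evecs.T

# Cholesky route: C = U^T U with U upper triangular, and V = U^{-1}.
U = np.linalg.cholesky(C).T            # NumPy returns the lower factor L, C = L L^T
V = np.linalg.inv(U)
Q, _ = np.linalg.qr(rng.normal(size=(4, 4)))   # an arbitrary orthogonal matrix
W_chol = V @ Q                         # satisfies the constraint for any orthogonal Q

# Symmetric solution written with R, where R^2 = U U^T.
r_evals, r_evecs = np.linalg.eigh(U @ U.T)
R = r_evecs @ np.diag(np.sqrt(r_evals)) @ r_evecs.T
W_R = V @ R @ V.T

def constraint_residual(W, C):
    """Frobenius norm of W^T C W - I; zero when the constraint holds."""
    return np.linalg.norm(W.T @ C @ W - np.eye(C.shape[0]))

for name, W in [("C^{-1/2}", W_sym), ("V Q", W_chol), ("V R V^T", W_R)]:
    print(name, constraint_residual(W, C))
```

The constraint alone leaves the orthogonal factor free, which is why a further criterion is needed to pick a single solution; the symmetry relaxation is one such criterion.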

Cite this article

Nejatian, S., Rezaie, V., Parvin, H. et al. An innovative linear unsupervised space adjustment by keeping low-level spatial data structure. Knowl Inf Syst 59, 437–464 (2019). https://doi.org/10.1007/s10115-018-1216-8

DOI: https://doi.org/10.1007/s10115-018-1216-8