Abstract

Problems involving the efficient arrangement of simple objects, as captured by bin packing and makespan scheduling, are fundamental tasks in combinatorial optimization. These are well understood in the traditional online and offline cases, but have been less well-studied when the volume of the input is truly massive, and cannot even be read into memory. This is captured by the streaming model of computation, where the aim is to approximate the cost of the solution in one pass over the data, using small space. As a result, streaming algorithms produce concise input summaries that approximately preserve the optimum value. We design the first efficient streaming algorithms for these fundamental problems in combinatorial optimization. For Bin Packing, we provide a streaming asymptotic (1 + ε)-approximation with \(\widetilde {O}\left (\frac {1}{\varepsilon }\right )\) memory, where \(\widetilde {O}\) hides logarithmic factors. Moreover, such a space bound is essentially optimal. Our algorithm implies a streaming (d + ε)-approximation for Vector Bin Packing in d dimensions, running in space \(\widetilde {O}\left (\frac {d}{\varepsilon }\right )\). For the related Vector Scheduling problem, we show how to construct an input summary in space \(\widetilde {O}(d^{2}\cdot m / \varepsilon ^{2})\) that preserves the optimum value up to a factor of \(2 - \frac {1}{m} +\varepsilon \), where m is the number of identical machines.

1 Introduction

The streaming model captures many scenarios when we must process very large volumes of data, which cannot fit into the working memory. The algorithm makes one or more passes over the data with a limited memory, but does not have random access to the data. Thus, it needs to extract a concise summary of the huge input, which can be used to approximately answer the problem under consideration. The main aim is to provide a good trade-off between the space used for processing the input stream (and hence, the summary size) and the accuracy of the (best possible) answer computed from the summary. Other relevant parameters are the time and space needed to make the estimate, and the number of passes, ideally equal to one.

While there have been many effective streaming algorithms designed for a range of problems in statistics, optimization, and graph algorithms (see surveys by Muthukrishnan [39] and McGregor [38]), there has been little attention paid to the core problems of packing and scheduling. These are fundamental abstractions, which form the basis of many generalizations and extensions [13, 14]. In this work, we present the first efficient algorithms for packing and scheduling that work in the streaming model.

A first conceptual challenge is to resolve what form of answer is desirable in this setting. If items in the input are too many to store, then it is also unfeasible to require a streaming algorithm to provide an explicit description of how each item is to be handled. Rather, our objective is for the algorithm to provide the cost of the solution, in the form of the number of bins or the duration of the schedule. Moreover, our algorithms can provide a concise description of the solution, which describes in outline how the jobs are treated in the design.

A second issue is that the problems we consider, even in their simplest form, are NP-hard. The additional constraints of streaming computation do not erase the computational challenge. In some cases, our algorithms proceed by adopting and extending known polynomial-time approximation schemes for the offline versions of the problems, while in other cases, we come up with new approaches. The streaming model effectively emphasizes the question of how compactly the input can be summarized to allow subsequent approximation of the problem of interest. Our main results show that the inputs for many of our problems of interest can in fact be “compressed” to very small intermediate descriptions which suffice to extract near-optimal solutions for the original input. This implies that they can be solved in scenarios which are storage or communication constrained.

We proceed by formalizing the streaming model, after which we summarize our results. We continue by presenting related work, and contrast with the online setting.

1.1 Problems and Streaming Model

Bin packing

The Bin Packing problem is defined as follows: The input consists of N items with sizes \(s_{1}, \dots , s_{N}\) (each between 0 and 1), which need to be packed into bins of unit capacity. That is, we seek a partition of the set of items \(\{1, \dots , N\}\) into subsets \(B_{1}, \dots , B_{m}\), called bins, such that for any bin \(B_{i}\), it holds that \({\sum }_{j\in B_{i}} s_{j} \le 1\). The goal is to minimize the number m of bins used.

We also consider the natural generalization to Vector Bin Packing, where the input consists of d-dimensional vectors, with the value of each coordinate between 0 and 1 (i.e., the scalar items \(s_{i}\) are replaced with vectors \(\mathbf {v}_{i}\)). The vectors need to be packed into d-dimensional bins with unit capacity in each dimension; we thus require that \(\|{\sum }_{\mathbf {v}\in B_{i}} \mathbf {v}\|_{\infty } \le 1\), where the infinity norm \(\|\mathbf {v}\|_{\infty } = \max \limits _{k} v_{k}\) is the largest coordinate of \(\mathbf {v}\).
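The two feasibility conditions above can be illustrated with a short Python sketch that checks whether a proposed partition is a valid packing. The function name `is_valid_packing` and the floating-point tolerance `tol` are assumptions introduced here for illustration only; scalar Bin Packing corresponds to the case d = 1.

```python
from typing import List, Sequence


def is_valid_packing(vectors: Sequence[Sequence[float]],
                     bins: List[List[int]],
                     tol: float = 1e-12) -> bool:
    """Return True if `bins` partitions the item indices 0..N-1 and every
    bin has total load at most 1 in every coordinate."""
    # Every index must appear in exactly one bin.
    if sorted(j for b in bins for j in b) != list(range(len(vectors))):
        return False
    d = len(vectors[0]) if vectors else 0
    for b in bins:
        for k in range(d):
            # Infinity-norm condition: each coordinate sum is at most 1.
            if sum(vectors[j][k] for j in b) > 1.0 + tol:
                return False
    return True
```

Note that the check needs the full list of items, which is exactly what a streaming algorithm cannot afford to store.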

Scheduling

The Makespan Scheduling problem is closely related to Bin Packing but, instead of filling bins with bounded capacity, we try to balance the loads assigned to a fixed number of bins. Now we refer to the input as comprising a set of jobs, with each job j defined by its processing time \(p_{j}\). Our goal is to assign each job to one of m identical machines to minimize the makespan, which is the maximum load over all machines.

In Vector Scheduling, a job is described not only by its processing time, but also by, say, memory or bandwidth requirements. The input is thus a set of jobs, each job j characterized by a vector \(\mathbf {v}_{j}\). The goal is to assign each job to one of m identical machines such that the maximum load over all machines and dimensions is minimized.
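To make the objective concrete, the following Python sketch evaluates the makespan of a given assignment. The name `vector_makespan` and the representation of an assignment as a list of machine indices are assumptions made for this illustration; Makespan Scheduling is the case d = 1, and the job list is assumed to be non-empty.

```python
from typing import List, Sequence


def vector_makespan(jobs: Sequence[Sequence[float]],
                    assignment: Sequence[int],
                    m: int) -> float:
    """Maximum load over all m machines and all d dimensions.

    jobs[j] is the d-dimensional vector of job j and assignment[j] is the
    index (0..m-1) of the machine that job j is assigned to.
    """
    d = len(jobs[0])
    loads: List[List[float]] = [[0] * d for _ in range(m)]
    for v, i in zip(jobs, assignment):
        for k in range(d):
            loads[i][k] += v[k]
    return max(max(row) for row in loads)
```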

Streaming model

In the streaming scenario, the algorithm receives the input as a sequence of items, called the input stream. We do not assume that the stream is ordered in any particular way (e.g., randomly or by item sizes), so our algorithms must work for arbitrarily ordered streams. The items arrive one by one and upon receiving each item, the algorithm updates its memory state. A streaming algorithm is required to use space sublinear in the length of the stream, ideally just polylog(N), while it processes the stream. After the last item arrives, the algorithm computes its estimate of the optimal value, and the space or time used during this final computation is not restricted.

For many natural optimization problems, outputting an explicit solution is not possible owing to the memory restriction (as the algorithm can store only a small subset of items). Thus the goal is to find a good approximation of the value of an offline optimal solution. Since our model does not assume that item sizes are integers, we express the space complexity not in bits, but in words (or memory cells), where each word can store any number from the input; a linear combination of numbers from the input; or any integer with \({\mathcal {O}}(\log N)\) bits (for counters, pointers, etc.).

1.2 Our Results

Bin packing

In Section 3, we present a streaming algorithm for Bin Packing, which outputs an asymptotic (1 + ε)-approximation of OPT, the optimal number of bins, using \({\mathcal {O}}\left (\frac {1}{\varepsilon } \cdot \log \frac {1}{\varepsilon } \cdot \log {\textsf {OPT}}\right )\) memory (see Footnote 1). This means that the algorithm uses at most (1 + ε) ⋅ OPT + o(OPT) bins, and in our case, the additive o(OPT) term is bounded by the space used. The novelty of our contribution is to combine a data structure that approximately tracks all quantiles in a numeric stream [27] with techniques for approximation schemes [19, 34]. We show that we can improve upon the \(\log {\textsf {OPT}}\) factor in the space complexity if randomization is allowed or if item sizes are drawn from a bounded-size set of real numbers. On the other hand, we argue that our result is close to optimal, up to a factor of \({\mathcal {O}}\left (\log \frac {1}{\varepsilon }\right )\), if item sizes are accessed only by comparisons (including comparisons with some fixed constants). Thus, one cannot get an estimate with at most OPT + o(OPT) bins by a streaming algorithm, unlike in the offline setting [29]. The hardness emerges from the space complexity of the quantiles problem in the streaming model.

For Vector Bin Packing, we design a streaming asymptotic (d + ε)-approximation algorithm running in space \({\mathcal {O}}\left (\frac {d}{\varepsilon } \cdot \log \frac {d}{\varepsilon } \cdot \log {\textsf {OPT}}\right )\); see Section 3.3. We remark that if vectors are rounded into a sublinear number of types, then better than d-approximation is not possible [7].

Scheduling

For Makespan Scheduling, one can obtain a straightforward streaming (1 + ε)-approximation (see Footnote 2) with space of only \({\mathcal {O}}(\frac {1}{\varepsilon }\cdot \log \frac {1}{\varepsilon })\) by rounding sizes of suitably large jobs to powers of 1 + ε and counting the total size of small jobs. In a higher dimension, it is also possible to get a streaming (1 + ε)-approximation, by the rounding introduced by Bansal et al. [8]. However, the memory required for this algorithm is exponential in d, precisely of size \({\mathcal {O}}\left (\left (\frac {1}{\varepsilon } \log \frac {d}{\varepsilon }\right )^{d}\right )\), and thus only practical when d is a very small constant. Moreover, such a huge amount of memory is needed even if the number m of machines (and hence, of big jobs) is small, as the algorithm rounds small jobs into exponentially many types. See Section 4.2 for more details.

In case m and d make this feasible, we design a new streaming \(\left (2-\frac {1}{m}+\varepsilon \right )\)-approximation with \({\mathcal {O}}\left (\frac {1}{\varepsilon ^{2}} \cdot d^{2}\cdot m\cdot \log \frac {d}{\varepsilon }\right )\) memory, which implies a streaming 2-approximation algorithm running in space \({\mathcal {O}}(d^{2}\cdot m^{3}\cdot \log dm)\). We thus obtain a much better approximation than for Vector Bin Packing with a reasonable amount of memory (although to compute the actual makespan from our input summary, it takes time doubly exponential in d [8]). Our algorithm is not based on rounding, as in the aforementioned algorithms, but on combining small jobs into containers, and the approximation guarantee of this approach is at least \(2-\frac {1}{m}\), which we demonstrate by an example. We describe the algorithm in Section 4.

2 Related Work

We give an overview of related work in offline, online, and sublinear algorithms, and highlight the differences between online and streaming algorithms. Recent surveys of Christensen et al. [13] and Coffman et al. [14] have a more comprehensive overview.

2.1 Bin Packing

Offline approximation algorithms

Bin Packing is an NP-complete problem and indeed it is NP-hard even to decide whether two bins are sufficient or at least three bins are necessary. This follows by a simple reduction from the Partition problem and presents the strongest inapproximability to date. Most work in the offline model focused on providing asymptotic R-approximation algorithms, which use at most R ⋅OPT + o(OPT) bins. In the following, when we refer to an approximation for Bin Packing we implicitly mean the asymptotic approximation. The first polynomial-time approximation scheme (PTAS), that is, a (1 + ε)-approximation for any ε > 0, was given by Fernandez de la Vega and Lueker [19]. Karmarkar and Karp [34] provided an algorithm which returns a solution with \({\textsf {OPT}} + {\mathcal {O}}(\log ^{2} {\textsf {OPT}})\) bins. Recently, Hoberg and Rothvoß [29] proved it is possible to find a solution with \({\textsf {OPT}} + {\mathcal {O}}(\log {\textsf {OPT}})\) bins in polynomial time.

The input for Bin Packing can be described by N numbers, corresponding to item sizes. While in general these sizes may be distinct, in some cases the input description can be compressed significantly by specifying the number of items of each size in the input. Namely, in the High-Multiplicity Bin Packing problem, the input is a set of pairs \((a_{1}, s_{1}), \dots , (a_{\sigma }, s_{\sigma })\), where for \(i=1,\dots ,\sigma \), ai is the number of items of size si (and all si’s are distinct). Thus, σ encodes the number of item sizes, and hence the size of the description. The goal is again to pack these items into bins, using as few bins as possible. For constant number of sizes, σ, Goemans and Rothvoß [25] recently gave an exact algorithm for the case of rational item sizes running in time \(\displaystyle {(\log {\Delta })^{2^{{\mathcal {O}}(\sigma )}}}\), where Δ is the largest multiplicity of an item or the largest denominator of an item size, whichever is the greater.
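As an illustration of the high-multiplicity encoding, the following Python sketch converts an explicit list of item sizes into pairs of multiplicity and size. The function name `to_high_multiplicity` is an assumption made here; the sketch only shows the input format and is unrelated to the exact algorithm of [25].

```python
from collections import Counter
from typing import List, Sequence, Tuple


def to_high_multiplicity(sizes: Sequence[float]) -> List[Tuple[int, float]]:
    """Return pairs (a_i, s_i): a_i items of size s_i, with distinct sizes
    listed in non-increasing order."""
    counts = Counter(sizes)
    return [(counts[s], s) for s in sorted(counts, reverse=True)]
```

The length of the returned list is σ, the number of distinct item sizes, which can be much smaller than N.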

While these algorithms provide satisfying theoretical guarantees, simple heuristics are often adopted in practice to provide a “good-enough” performance. First Fit [33], which puts each incoming item into the first bin where it fits and opens a new bin only when the item does not fit anywhere else, achieves a 1.7-approximation [17]. For the high-multiplicity variant, using an LP-based Gilmore-Gomory cutting stock heuristic [23, 24] gives a good running time in practice [2] and produces a solution with at most OPT + σ bins. However, neither of these algorithms adapts well to the streaming setting with possibly distinct item sizes. For example, First Fit has to remember the remaining capacity of each open bin, which in general can require space proportional to OPT.
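A minimal Python sketch of First Fit makes the space issue visible: the list of bin loads grows with the number of bins opened. The function name `first_fit` and the tolerance `tol` are assumptions made for this illustration.

```python
from typing import Iterable, List


def first_fit(sizes: Iterable[float], tol: float = 1e-12) -> List[float]:
    """Pack items in arrival order and return the load of every bin.

    The returned list has one entry per opened bin, so the memory used is
    proportional to the number of bins rather than sublinear in the input.
    """
    loads: List[float] = []
    for s in sizes:
        for i, load in enumerate(loads):
            if load + s <= 1.0 + tol:
                loads[i] += s      # first bin where the item fits
                break
        else:
            loads.append(s)        # the item fits nowhere: open a new bin
    return loads
```

For example, `first_fit([0.5, 0.75, 0.25, 0.5])` opens three bins with loads 0.75, 0.75, and 0.5.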

Vector Bin Packing proves to be substantially harder to approximate, even in a constant dimension. For fixed d, Bansal, Eliáš, and Khan [7] showed an approximation factor of approximately \(0.807 + \ln (d+1) + \varepsilon \). For general d, a relatively simple algorithm based on an LP relaxation, due to Chekuri and Khanna [11], remains the best known, with an approximation guarantee of \(1+\varepsilon d+ {\mathcal {O}}(\log \frac {1}{\varepsilon })\). The problem is APX-hard even for d = 2 [41], and cannot be approximated within a factor better than \(d^{1-\varepsilon }\) for any fixed ε > 0 [13] if d is arbitrarily large. Hence, our streaming (d + ε)-approximation for Vector Bin Packing asymptotically matches the offline lower bound.

Sampling-based algorithms

Sublinear-time approximation schemes constitute a model related to, but distinct from, streaming algorithms. Batu, Berenbrink, and Sohler [9] provide an algorithm that takes \(\widetilde {{\mathcal {O}}}\left (\sqrt {N}\cdot \operatorname {poly}(\frac {1}{\varepsilon })\right )\) weighted samples, meaning that the probability of sampling an item is proportional to its size. It outputs an asymptotic (1 + ε)-approximation of OPT. If uniform samples are also available, then sampling \(\widetilde {{\mathcal {O}}}\left (N^{1/3}\cdot \operatorname {poly}(\frac {1}{\varepsilon })\right )\) items is sufficient. These results are tight, up to a \(\operatorname {poly}(\frac {1}{\varepsilon }, \log N)\) factor. Later, Beigel and Fu [10] focused on uniform sampling of items, proving that \(\widetilde {\Theta }(N / {\textsf {SIZE}})\) samples are sufficient and necessary, where SIZE is the total size of all items. Their approach implies a streaming approximation scheme by uniform sampling of the substream of big items. However, the space complexity in terms of \(\frac {1}{\varepsilon }\) is not stated in the paper, and we calculate it to be \({\Omega }\left (\varepsilon ^{-c}\right )\) for a constant c ≥ 10. Moreover, \({\Omega }(\frac {1}{\varepsilon ^{2}})\) samples are clearly needed to estimate the number of items with size close to 1. Note that our approach is deterministic and substantially different than taking a random sample from the stream.

Online algorithms

Online and streaming algorithms are similar in the sense that they are required to process items one by one. However, an online algorithm must make all its decisions immediately: it must fix the placement of each incoming item on arrival (see Footnote 3). A streaming algorithm can postpone such decisions to the very end, but is required to keep its memory small, whereas an online algorithm may remember all items that have arrived so far. Hence, online algorithms apply in the streaming setting only when they have small space cost, including the space needed to store the solution constructed so far. The performance of online algorithms is usually quantified by the competitive ratio, which is defined as the worst-case ratio between the online algorithm’s cost and that of the optimal offline algorithm, analogous to the approximation ratio for approximation algorithms.

For Bin Packing, the best possible competitive ratio is substantially worse than what we can achieve offline or even in the streaming setting. Balogh et al. [5] designed an asymptotically 1.5783-competitive algorithm, while the current lower bound on the asymptotic competitive ratio is 1.5403 [6]. This (relatively complicated) online algorithm is based on the Harmonic algorithm [36], which for some integer K classifies items into size groups \((0,\frac 1K], (\frac 1K, \frac {1}{K-1}], \dots , (\frac {1}{2}, 1]\). It packs each group separately by Next Fit, keeping just one bin open, which is closed whenever the next item does not fit. Thus Harmonic can run in memory of size K and be implemented in the streaming model, unlike most other online algorithms which require maintaining the levels of all bins opened so far. Its competitive ratio tends to approximately 1.691 as K goes to infinity. Surprisingly, this is also the best possible ratio if only a bounded number of bins is allowed to be open for an online algorithm [36], which can be seen as the intersection of online and streaming models.
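The Harmonic algorithm described above can be sketched in a few lines of Python. This is an illustrative sketch, not the implementation from [36]: the function name `harmonic`, the use of exact `Fraction` sizes (to avoid floating-point issues at the class boundaries), and the convention that only the number of bins is returned are all assumptions made here.

```python
import math
from fractions import Fraction
from typing import Iterable, List


def harmonic(sizes: Iterable[Fraction], K: int) -> int:
    """Count the bins used by Harmonic with K size classes.

    Class j (1 <= j < K) holds sizes in (1/(j+1), 1/j]; class K holds
    sizes in (0, 1/K]. Each class is packed by Next Fit, so the state is
    just the load of one open bin per class (K numbers in total).
    """
    open_load: List[Fraction] = [Fraction(0)] * (K + 1)  # index 0 unused
    bins = 0
    for s in sizes:
        j = min(K, math.floor(1 / s))       # size class of the item
        if open_load[j] == 0 or open_load[j] + s > 1:
            bins += 1                        # close the old bin, open a new one
            open_load[j] = s
        else:
            open_load[j] += s
    return bins
```

On the input 3/4, 1/4, 3/4, 1/4 with K = 2, the sketch uses three bins, since items of different classes never share a bin, whereas two bins suffice offline.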

For Vector Bin Packing, the best known competitive ratio of d + 0.7 [21] is achieved by First Fit. A lower bound of \(\Omega (d^{1-\varepsilon })\) on the competitive ratio was shown by Azar et al. [3]. It is thus currently unknown whether or not online algorithms outperform streaming algorithms in the vector setting.

2.2 Scheduling

Offline approximation algorithms

Makespan Scheduling is strongly NP-complete [22], which in particular rules out the possibility of a PTAS with time complexity \(\operatorname {poly}(\frac {1}{\varepsilon }, n)\). After a sequence of improvements, Jansen, Klein, and Verschae [32] gave a PTAS with time complexity \(2^{\widetilde {{\mathcal {O}}}(1/\varepsilon )} + {\mathcal {O}}(n \log n)\), which is essentially tight under the Exponential Time Hypothesis (ETH) [12].

For constant dimension d, Vector Scheduling also admits a PTAS, as shown by Chekuri and Khanna [11]. However, the running time is of order \(n^{(1/\varepsilon )^{\widetilde {{\mathcal {O}}}(d)}}\). The approximation scheme for a fixed d was improved to an efficient PTAS, namely to an algorithm running in time \(2^{(1/\varepsilon )^{{\widetilde {{\mathcal {O}}}}(d)}} + {\mathcal {O}}(dn)\), by Bansal et al. [8], who also showed that the running time cannot be significantly improved under ETH. In contrast, our streaming poly(d,m)-space algorithm computes an input summary maintaining 2-approximation of the original input. This respects the lower bound, since to compute the actual makespan from the summary, we still need to execute an offline algorithm, with running time doubly exponential in d. The state-of-the-art approximation ratio for large d is \({\mathcal {O}}(\log d / (\log \log d))\) [28, 31], while α-approximation is not possible in polynomial time for any constant α > 1 and arbitrary d, unless NP = ZPP [11].

Online algorithms

For the scalar problem, the optimal competitive ratio is known to lie in the interval (1.88,1.9201) [1, 20, 26, 30], which is substantially worse than what can be done by a simple streaming (1 + ε)-approximation in space \({\mathcal {O}}(\frac {1}{\varepsilon }\cdot \log \frac {1}{\varepsilon })\). Interestingly, for Vector Scheduling, the algorithm by Im et al. [31] with ratio \({\mathcal {O}}(\log d / (\log \log d))\) actually works in the online setting as well and needs space \({\mathcal {O}}(d\cdot m)\) only during its execution (if the solution itself is not stored), which makes it possible to implement it in the streaming setting. This online ratio cannot be improved as there is a lower bound of \({\Omega }(\log d / (\log \log d))\) [4, 31], whereas in the streaming setting we can achieve a 2-approximation with a reasonable memory (or even (1 + ε)-approximation for a fixed d). If all jobs have sufficiently small size, we improve the analysis in [31] and show that the online algorithm achieves (1 + ε)-approximation; see Section 4.

3 Bin Packing

Notation

For an instance I, let N(I) be the number of items in I, let SIZE(I) be the total size of all items in I, and let OPT(I) be the number of bins used in an optimal solution for I. Clearly, SIZE(I) ≤OPT(I). For a bin B, let s(B) be the total size of items in B. For a given ε > 0, we use \(\widetilde {{\mathcal {O}}}(f(\frac {1}{\varepsilon }))\) to hide factors logarithmic in \(\frac {1}{\varepsilon }\) and OPT(I), i.e., to denote \({\mathcal {O}}\big (f(\frac {1}{\varepsilon }) \cdot \operatorname {polylog} \frac {1}{\varepsilon } \cdot \operatorname {polylog} {\textsf {OPT}}(I)\big )\).

Overview

We first briefly describe the approximation scheme of Fernandez de la Vega and Lueker [19], whose structure we follow in outline. Let I be an instance of Bin Packing. Given a precision requirement ε > 0, we say that an item is small if its size is at most ε; otherwise, it is big. Note that there are at most \(\frac {1}{\varepsilon } {\textsf {SIZE}}(I)\) big items. The rounding scheme in [19], called “linear grouping”, works as follows: We sort the big items by size non-increasingly and divide them into groups of k = ⌊ε ⋅SIZE(I)⌋ items (the first group thus contains the k biggest items). In each group, we round up the sizes of all items to the size of the biggest item in that group. It follows that the number of groups and thus the number of distinct item sizes (after rounding) is bounded by \(\lceil \frac {1}{\varepsilon ^{2}}\rceil \). Let IR be the instance of High-Multiplicity Bin Packing consisting of the big items with rounded sizes. It can be shown that OPT(IB) ≤OPT(IR) ≤ (1 + ε) ⋅OPT(IB), where IB is the set of big items in I (we detail a similar argument in Section 3.1). Due to the bounded number of distinct item sizes, we can find a close-to-optimal solution for IR efficiently. We then translate this solution into a packing for IB in the natural way. Finally, small items are filled greedily (e.g., by First Fit) and it can be shown that the resulting complete solution for I is a \(1 + {\mathcal {O}}(\varepsilon )\)-approximation.
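The linear grouping step can be sketched offline as follows. This is a minimal Python illustration of the rounding described above, not the streaming algorithm of this paper; the function name `linear_grouping` and the guard `max(1, ...)`, which avoids an empty group size on tiny instances, are assumptions made here.

```python
import math
from typing import List, Sequence, Tuple


def linear_grouping(sizes: Sequence[float], eps: float) -> List[Tuple[int, float]]:
    """Round big items (size > eps) up by linear grouping.

    Returns a high-multiplicity instance as pairs (count, rounded size).
    """
    big = sorted((s for s in sizes if s > eps), reverse=True)
    # Group size k = floor(eps * SIZE(I)); the guard is an added assumption.
    k = max(1, math.floor(eps * sum(sizes)))
    rounded = []
    for start in range(0, len(big), k):
        group = big[start:start + k]
        # Every item in the group is rounded up to the group's biggest item.
        rounded.append((len(group), group[0]))
    return rounded
```

The sketch sorts all big items in memory, which is precisely what the streaming setting rules out.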

Karmarkar and Karp [34] proposed an improved rounding scheme, called “geometric grouping”. It is based on the observation that item sizes close to 1 should be approximated substantially better than item sizes close to ε. We present a version of such a rounding scheme in Section 3.1.

Our algorithm follows a similar outline with two stages (rounding and finding a solution for the rounded instance), but working in the streaming model brings two challenges: First, in the rounding stage, we need to process the stream of items and output a rounded high-multiplicity instance with few item sizes that are not too small, while keeping only a small number of items in the memory. Second, the rounding of big items needs to be done carefully so that not much space is “wasted”, since in the case when the total size of small items is relatively large, we argue that our solution is close to optimal by showing that the bins are nearly full on average.

Input summary properties

More precisely, we fix some ε > 0 that is used to control the approximation guarantee. During the first stage, our algorithm has one variable which accumulates the total size of all small items in the input stream, i.e., those of size at most ε. Let IB be the substream consisting of all big items. We process IB and output a rounded high-multiplicity instance IR with the following properties:

- (P1) There are at most σ item sizes in IR, all of them larger than ε, and the memory required for processing IB is \({\mathcal {O}}(\sigma )\).

- (P2) The i-th biggest item in IR is at least as large as the i-th biggest item in IB (and the number of items in IR is the same as in IB). This immediately implies that any packing of IR can be used as a packing of IB (in the same number of bins), so OPT(IB) ≤ OPT(IR), and moreover, SIZE(IB) ≤ SIZE(IR).

- (P3) \({\textsf {OPT}}(I_{\mathrm {R}})\le (1 + \varepsilon )\cdot {\textsf {OPT}}(I_{\mathrm {B}}) + {\mathcal {O}}(\log \frac {1}{\varepsilon })\).

- (P4) SIZE(IR) ≤ (1 + ε) ⋅ SIZE(IB).

In words, (P2) means that we are rounding item sizes up and, together with (P3), it implies that the optimal solution for the rounded instance approximates OPT(IB) well. The last property is used in the case when the total size of small items constitutes a large fraction of the total size of all items. Note that SIZE(IR) −SIZE(IB) can be thought of as bin space “wasted” by rounding.
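The properties that depend only on item sizes can be checked directly on small examples. The Python sketch below tests the size condition of (P1), property (P2), and property (P4) for a given rounded instance; (P3) is omitted because it requires computing optimal packings. The function name `check_rounding` and the dictionary keys are assumptions introduced for this illustration.

```python
from typing import Dict, Sequence, Tuple


def check_rounding(big: Sequence[float],
                   rounded: Sequence[Tuple[int, float]],
                   eps: float) -> Dict[str, bool]:
    """Check size-based properties of a rounded instance.

    `big` lists the sizes in I_B; `rounded` lists pairs (count, size) of I_R.
    """
    expanded = sorted((s for a, s in rounded for _ in range(a)), reverse=True)
    original = sorted(big, reverse=True)
    return {
        # (P1), size part only: all rounded sizes are larger than eps.
        "P1_sizes": all(s > eps for _, s in rounded),
        # (P2): same number of items, and the i-th biggest is not smaller.
        "P2": len(expanded) == len(original)
              and all(r >= o for r, o in zip(expanded, original)),
        # (P4): rounding wastes at most an eps fraction of SIZE(I_B).
        "P4": sum(expanded) <= (1 + eps) * sum(original),
    }
```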

Observe that the succinctness of the rounded instance depends on σ. First, we show a streaming algorithm for rounding with \(\sigma = \widetilde {{\mathcal {O}}}(\frac {1}{\varepsilon ^{2}})\). Then we improve upon it and give an algorithm with \(\sigma = \widetilde {{\mathcal {O}}}(\frac {1}{\varepsilon })\), which is essentially the best possible, while guaranteeing an error of ε ⋅OPT(IB) introduced by rounding (elaborated on in Section 3.2). More precisely, we show the following:

Lemma 1

Given a stream IB of big items, there is a deterministic streaming algorithm that outputs a High-Multiplicity Bin Packing instance satisfying (P1)-(P4) with \(\sigma = {\mathcal {O}}\left (\frac {1}{\varepsilon }\cdot \log \frac {1}{\varepsilon }\cdot \log {\textsf {OPT}}(I_{\mathrm {B}})\right )\).

Before describing the rounding itself and proving Lemma 1, we explain how to use it to calculate an accurate estimate of the number of bins. This part follows a similar outline as in other approximation schemes for Bin Packing. In a nutshell, rounding the sizes of big items allows us to efficiently find an approximate solution for them, and then there are two cases: Either we know for sure that all small items fit into bins with big items, or all bins except for one are nearly full. Below, we provide details for completeness.

Calculating a bound on the number of bins after rounding

First, we obtain a solution \(\mathcal {S}\) of the rounded instance IR. For instance, we may round the solution of the linear program introduced by Gilmore and Gomory [23, 24], and get a solution with at most OPT(IR) + σ bins. Or, if item sizes are rational numbers, we may compute an optimal solution for IR by the algorithm of Goemans and Rothvoß [25]; however, the former approach appears to be more efficient and more general. In the following, we thus assume that \(\mathcal {S}\) uses at most OPT(IR) + σ bins.

We now calculate a bound on the number of bins in the original instance. Let W be the total free space in the bins of \(\mathcal {S}\) that can be used for small items. To be precise, W equals the sum over all bins B in \(\mathcal {S}\) of \(\max \limits (0, 1 - \varepsilon - s(B))\). The reason behind the definition of W is the following: Our definition of small items means that in the worst case, each small item could be as big as ε. To allow us to handle these in the second phase of the algorithm, we will cap the capacity of bins at 1 − ε as items of size ε do not fit into bins already containing items of total size more than 1 − ε. On the other hand, if a small item does not fit into a bin, then the remaining space in the bin is smaller than ε.

Let s be the total size of all small items in the input stream. If s ≤ W, then all small items surely fit into the free space of bins in \(\mathcal {S}\) (and can be assigned there greedily by First Fit). Consequently, we output that the number of bins needed for the stream of items is at most \(|\mathcal {S}|\), i.e., the number of bins in solution \(\mathcal {S}\) for IR. Otherwise, we need to place small items of total size at most s′ = s − W into new bins and it is easy to see that opening at most \(\lceil s^{\prime } / (1 - \varepsilon )\rceil \le (1 + {\mathcal {O}}(\varepsilon ))\cdot s^{\prime } + 1\) bins for these small items suffices. Hence, in the case s > W, we output that \(|\mathcal {S}| + \lceil s^{\prime } / (1 - \varepsilon )\rceil \) bins are sufficient to pack all items in the stream.
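The case distinction above amounts to a short computation once the solution for the rounded instance is known. In the following Python sketch, `bin_loads` is assumed to hold the value s(B) for every bin B of the solution, and `small_total` is the accumulated total size s of small items; the function name `estimate_bins` is likewise an assumption made here.

```python
import math
from typing import Sequence


def estimate_bins(bin_loads: Sequence[float],
                  small_total: float,
                  eps: float) -> int:
    """Number of bins reported for the whole stream.

    W is the free space usable by small items when every bin is capped
    at capacity 1 - eps.
    """
    W = sum(max(0.0, 1 - eps - load) for load in bin_loads)
    if small_total <= W:
        return len(bin_loads)              # small items fit into existing bins
    extra = small_total - W                # s' = s - W
    return len(bin_loads) + math.ceil(extra / (1 - eps))
```

For instance, with bin loads 0.5 and 0.75 and ε = 0.25, the usable free space is W = 0.25, so a total small size of 1.0 leads to one additional bin.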

We prove that the number of bins that we output in either case is a good approximation of the optimal number of bins, provided that \(\mathcal {S}\) is a good solution for IR.

Lemma 2

Let I be given as a stream of items. Suppose that \(0<\varepsilon \le \frac 13\), that the rounded instance IR, created from I, satisfies properties (P1)-(P4), and that the solution \(\mathcal {S}\) of IR uses at most OPT(IR) + σ bins. Let ALG(I) be the number of bins that our algorithm outputs. Then, it holds that \({\textsf {OPT}}(I)\le {\textsf {ALG}}(I) \le (1 + 3\varepsilon )\cdot {\textsf {OPT}}(I) + \sigma + {\mathcal {O}}\left (\log \frac {1}{\varepsilon }\right )\).

Proof

We analyze the two cases of the algorithm.

Case 1: s ≤ W. In this case, small items fit into the bins of \(\mathcal {S}\) and \({\textsf {ALG}}(I) = |\mathcal {S}|\). For the inequality OPT(I) ≤ ALG(I), observe that the packing \(\mathcal {S}\) can be used as a packing of items in IB (in a straightforward way) with no less free space for small items by property (P2). Thus \({\textsf {OPT}}(I) \le |\mathcal {S}|\).

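To upper bound ALG(I), note that

\[{\textsf {ALG}}(I) = |\mathcal {S}| \le {\textsf {OPT}}(I_{\mathrm {R}}) + \sigma \le (1 + \varepsilon )\cdot {\textsf {OPT}}(I_{\mathrm {B}}) + {\mathcal {O}}\left (\log \frac {1}{\varepsilon }\right ) + \sigma \le (1 + \varepsilon )\cdot {\textsf {OPT}}(I) + \sigma + {\mathcal {O}}\left (\log \frac {1}{\varepsilon }\right ),\]

where the first inequality holds by the assumption on \(\mathcal {S}\), the second inequality follows from property (P3), and the third inequality holds as IB is a subinstance of I.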

Case 2: s > W. Recall that \({\textsf {ALG}}(I) = |\mathcal {S}| + \lceil s^{\prime } / (1 - \varepsilon )\rceil \). We again have that \(\mathcal {S}\) can be used as a packing of IB with no less free space for small items. Thus, the total size of small items that do not fit into bins in \(\mathcal {S}\) is at most \(s^{\prime }\) and these items clearly fit into \(\lceil s^{\prime } / (1 - \varepsilon )\rceil \) bins. Hence, \({\textsf {OPT}}(I)\le |\mathcal {S}| + \lceil s^{\prime } / (1 - \varepsilon )\rceil \).

For the other inequality, consider starting with solution \(\mathcal {S}\) for IR, first to (almost) fill up the bins of \(\mathcal {S}\) with small items of total size W, then using \(\lceil s^{\prime } / (1 - \varepsilon )\rceil \) additional bins for the remaining small items. Note that in each bin, except the last one, the unused space is less than ε, thus the total size of items in IR and small items is more than (ALG(I) − 1) ⋅ (1 − ε). Finally, we replace items in IR by items in IB and the total size of items decreases by SIZE(IR) −SIZE(IB) ≤ ε ⋅SIZE(IB) ≤ ε ⋅SIZE(I) by property (P4). Hence, SIZE(I) ≥ (ALG(I) − 1) ⋅ (1 − ε) − ε ⋅SIZE(I). Rearranging and using \(\varepsilon \le \frac 13\), we get

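\[{\textsf {ALG}}(I) \le \frac {1 + \varepsilon }{1 - \varepsilon }\cdot {\textsf {SIZE}}(I) + 1 \le (1 + 3\varepsilon )\cdot {\textsf {OPT}}(I) + 1,\]

where the last step uses SIZE(I) ≤ OPT(I) and the fact that \(\frac {1 + \varepsilon }{1 - \varepsilon } \le 1 + 3\varepsilon \) is equivalent to \(3\varepsilon ^{2} \le \varepsilon \), i.e., to \(\varepsilon \le \frac 13\).

Considered together, these two cases both meet the claimed bound.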

3.1 Processing the Stream and Rounding

In this section, we describe the streaming algorithm of the rounding stage and prove Lemma 1. The algorithm makes use of the deterministic quantile summary of Greenwald and Khanna [27]. Given a precision δ > 0 and an input stream of numbers \(s_{1}, \dots , s_{N}\), their algorithm computes a data structure Q(δ) which is able to answer a quantile query with precision δN. Namely, for any 0 ≤ ϕ ≤ 1, it returns an element s of the input stream such that the rank of s is in [(ϕ − δ)N,(ϕ + δ)N], where the rank of s is the position of s in the non-increasing ordering of the input stream (see Footnote 4). The data structure stores an ordered sequence of tuples, each consisting of an input number \(s_{i}\) and valid lower and upper bounds on the true rank of \(s_{i}\) in the input sequence (see Footnote 5). The first and last stored items correspond to the maximum and minimum numbers in the stream, respectively. Note that the lower and upper bounds on the rank of any stored number differ by at most ⌊2δN⌋ and upper (or lower) bounds on the rank of two consecutive stored numbers differ by at most ⌊2δN⌋ as well. The space requirement of Q(δ) is \({\mathcal {O}}(\frac {1}{\delta }\cdot \log \delta N)\); however, in practice the space used is observed to scale linearly with \(\frac {1}{\delta }\) [37]. (We remark that an offline optimal data structure for δ-approximate quantiles uses space \({\mathcal {O}}\left (\frac {1}{\delta }\right )\).)

We use the data structure Q(δ) to construct our algorithm for processing the stream IB of big items. Note that it is possible to use any other quantile summary instead of Q(δ) in a similar way.

Simple rounding algorithm

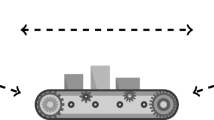

We begin by describing a simpler solution with \(\delta = \frac 14\varepsilon ^{2}\), resulting in a rounded instance with \(\widetilde {{\mathcal {O}}}(\frac {1}{\varepsilon ^{2}})\) item sizes. Subsequently, we introduce a more involved solution with smaller space cost, which proves Lemma 1. The algorithm uses a quantile summary structure to determine the rounding scheme. Given a (big) item \(s_{i}\) from the input, we insert it into Q(δ). After processing all items, we extract from Q(δ) the set of stored input items (i.e., their sizes) together with upper bounds on their rank (where the largest size has rank 1 and the smallest size has rank \(N_{\mathrm {B}}\)). Note that the number \(N_{\mathrm {B}}\) of big items in \(I_{\mathrm {B}}\) is less than \(\frac {1}{\varepsilon }{\textsf {SIZE}}(I_{\mathrm {B}})\le \frac {1}{\varepsilon }{\textsf {OPT}}(I_{\mathrm {B}})\) as each is of size more than ε. Let q be the number of items (or tuples) extracted from Q(δ); we get that \(q = {\mathcal {O}}(\frac {1}{\delta }\cdot \log \delta N_{\mathrm {B}}) = {\mathcal {O}}\big (\frac {1}{\varepsilon ^{2}}\cdot \log (\varepsilon \cdot {\textsf {OPT}}(I_{\mathrm {B}}))\big )\). Let \((a_{1}, u_{1} = 1), (a_{2}, u_{2}), \dots , (a_{q}, u_{q} = N_{\mathrm {B}})\) be the output pairs of an item size and the bound on its rank, sorted so that \(a_{1} \ge a_{2} \ge \cdots \ge a_{q}\). We define the rounded instance \(I_{\mathrm {R}}\) with at most q item sizes as follows: \(I_{\mathrm {R}}\) contains \((u_{j+1} - u_{j})\) items of size \(a_{j}\) for each \(j = 1, \dots , q-1\), plus one item of size \(a_{q}\). (See Fig. 1.)

Fig. 1 An illustration of the original distribution of sizes of big items in \(I_{\mathrm {B}}\), depicted by a smooth curve, and the distribution of item sizes in the rounded instance \(I_{\mathrm {R}}\), depicted by a bold “staircase” function. The distribution of \(I_{\mathrm {R}}^{\prime }\) (which is \(I_{\mathrm {R}}\) without the \(\lfloor 4\delta N_{\mathrm {B}}\rfloor \) biggest items) is depicted by a (blue) dash-dotted line. Selected items \(a_{1}, \dots , a_{q}\), with q = 11, are illustrated by (red) dots, and the upper bounds \(u_{1}, \dots , u_{q}\) on the ranks appear on the x axis

We show that the desired properties (P1)-(P4) hold with σ = q. Property (P1) follows easily from the definition of \(I_{\mathrm {R}}\) and the design of data structure Q(δ). Note that the number of items is preserved. To show (P2), suppose for a contradiction that the i-th biggest item in \(I_{\mathrm {B}}\) is bigger than the i-th biggest item in \(I_{\mathrm {R}}\), whose size is \(a_{j}\) for some \(j \in \{1,\dots ,q-1\}\), i.e., \(i \in [u_{j}, u_{j+1})\) (note that j < q as \(a_{q}\) is the smallest item in \(I_{\mathrm {B}}\) and is present only once in \(I_{\mathrm {R}}\)). We get that the rank of item \(a_{j}\) in \(I_{\mathrm {B}}\) is strictly more than i, and as \(i \ge u_{j}\), we get a contradiction with the fact that \(u_{j}\) is a valid upper bound on the rank of \(a_{j}\) in \(I_{\mathrm {B}}\).

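Next, we give bounds for \({\textsf {OPT}}(I_{\mathrm {R}})\) and \({\textsf {SIZE}}(I_{\mathrm {R}})\), which are required by properties (P3) and (P4). We pack the \(\lfloor 4\delta N_{\mathrm {B}}\rfloor \) biggest items in \(I_{\mathrm {R}}\) separately into “extra” bins. Using the choice of \(\delta = \frac 14\varepsilon ^{2}\) and \(N_{\mathrm {B}}\le \frac {1}{\varepsilon }{\textsf {SIZE}}(I_{\mathrm {B}})\), we bound the number of these items and thus extra bins by \(4\delta N_{\mathrm {B}} \le \varepsilon \cdot {\textsf {SIZE}}(I_{\mathrm {B}}) \le \varepsilon \cdot {\textsf {OPT}}(I_{\mathrm {B}})\). Let \(I_{\mathrm {R}}^{\prime }\) be the remaining items in \(I_{\mathrm {R}}\). We claim that the i-th biggest item \(b_{i}\) in \(I_{\mathrm {B}}\) is at least as large as the i-th biggest item in \(I_{\mathrm {R}}^{\prime }\), whose size equals \(a_{j}\) for some \(j \in \{1,\dots ,q\}\). For a contradiction, suppose that \(b_{i} < a_{j}\), which implies that the rank \(r_{j}\) of \(a_{j}\) in \(I_{\mathrm {B}}\) is less than i. Note that j < q as \(a_{q}\) is the smallest item in \(I_{\mathrm {B}}\). Since we packed the \(\lfloor 4\delta N_{\mathrm {B}}\rfloor \) biggest items from \(I_{\mathrm {R}}\) separately, one of the positions of \(a_{j}\) in the ordering of \(I_{\mathrm {R}}\) is \(i + \lfloor 4\delta N_{\mathrm {B}}\rfloor \) and so we have \(i + \lfloor 4\delta N_{\mathrm {B}}\rfloor < u_{j+1} \le u_{j} + \lfloor 2\delta N_{\mathrm {B}}\rfloor \), where the first inequality holds by the construction of \(I_{\mathrm {R}}\) and the second inequality is by the design of data structure Q(δ). It follows that \(i < u_{j} - \lfloor 2\delta N_{\mathrm {B}}\rfloor \). Combining this with \(r_{j} < i\), we obtain that the rank of \(a_{j}\) in \(I_{\mathrm {B}}\) is less than \(u_{j} - \lfloor 2\delta N_{\mathrm {B}}\rfloor \), which contradicts that \(u_{j} - \lfloor 2\delta N_{\mathrm {B}}\rfloor \) is a valid lower bound on the rank of \(a_{j}\).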

The claim implies \({\textsf {OPT}}(I_{\mathrm {R}}^{\prime }) \le {\textsf {OPT}}(I_{\mathrm {B}})\) and \({\textsf {SIZE}}(I_{\mathrm {R}}^{\prime }) \le {\textsf {SIZE}}(I_{\mathrm {B}})\). We thus get that \({\textsf {OPT}}(I_{\mathrm {R}}) \le {\textsf {OPT}}(I_{\mathrm {R}}^{\prime }) + \lfloor 4\delta N_{\mathrm {B}}\rfloor \le {\textsf {OPT}}(I_{\mathrm {B}}) + \varepsilon \cdot {\textsf {OPT}}(I_{\mathrm {B}})\), proving property (P3). Similarly, \({\textsf {SIZE}}(I_{\mathrm {R}}) \le {\textsf {SIZE}}(I_{\mathrm {R}}^{\prime }) + \lfloor 4\delta N_{\mathrm {B}}\rfloor \le {\textsf {SIZE}}(I_{\mathrm {B}}) + \varepsilon \cdot {\textsf {SIZE}}(I_{\mathrm {B}})\), showing (P4).
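
The construction of the rounded instance from the extracted pairs can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `exact_summary` is a hypothetical offline stand-in for Q(δ) that sorts the input and reports exact ranks (the Greenwald-Khanna summary works in one pass and reports approximate rank bounds), and the names `exact_summary`, `rounded_instance`, and `big_items` are ours, not the paper's.

```python
def exact_summary(sizes, delta):
    """Offline stand-in for Q(delta): sampled sizes with their exact ranks.

    Ranks are positions in non-increasing order, starting at 1. Consecutive
    stored ranks differ by at most max(1, floor(2 * delta * N)).
    Assumes a non-empty list of sizes.
    """
    order = sorted(sizes, reverse=True)
    n = len(order)
    step = max(1, int(2 * delta * n))
    ranks = list(range(1, n + 1, step))
    if ranks[-1] != n:
        ranks.append(n)  # always keep the smallest item, with rank N_B
    return [(order[r - 1], r) for r in ranks]


def rounded_instance(pairs):
    """Build I_R: (u_{j+1} - u_j) items of size a_j for j < q, plus one a_q."""
    rounded = []
    for (a, u), (_, u_next) in zip(pairs, pairs[1:]):
        rounded.extend([a] * (u_next - u))
    rounded.append(pairs[-1][0])
    return rounded


big_items = [0.9, 0.3, 0.7, 0.5, 0.8, 0.2, 0.6, 0.4]
pairs = exact_summary(big_items, delta=0.25)
rounded = rounded_instance(pairs)
```

On this toy input the rounded instance has the same number of items as the input, and its i-th biggest item is never smaller than the i-th biggest input item, which is the content of property (P2).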

Better rounding algorithm

Our improved rounding algorithm reduces the number of sizes in the rounded instance (and also the memory requirement) from \(\widetilde {{\mathcal {O}}}(\frac {1}{\varepsilon ^{2}})\) to \(\widetilde {{\mathcal {O}}}(\frac {1}{\varepsilon })\). It is based on the observation that the number of items of sizes close to ε can be approximated with much lower accuracy than the number of items with sizes close to 1, without affecting the quality of the overall approximation. This was observed already by Karmarkar and Karp [34].

We have now built up all the pieces we need in order to prove Lemma 1, that there is a small space streaming algorithm outputting a High-Multiplicity Bin Packing instance satisfying (P1)-(P4).

Proof of Lemma 1

Let \(k = \lceil \log _{2} \frac {1}{\varepsilon } \rceil \). We first group big items in k groups \(0, \dots , k-1\) by size such that in group j there are items with sizes in \((2^{-j-1}, 2^{-j}]\). That is, the size intervals for groups are (0.5, 1], (0.25, 0.5], etc. Let \(N_{j}\), \(j = 0, \dots , k-1\), be the number of big items in group j; clearly, \(N_{j} < 2^{j+1}\,{\textsf {SIZE}}(I_{\mathrm {B}}) \le 2^{j+1}\,{\textsf {OPT}}(I_{\mathrm {B}})\). Note that the total size of items in group j is in \((2^{-j-1}\cdot N_{j}, 2^{-j}\cdot N_{j}]\). Summing over all groups, we get in particular that

\[ \sum _{j=0}^{k-1} \frac {N_{j}}{2^{j+1}} \le {\textsf {SIZE}}(I_{\mathrm {B}}) \le {\textsf {OPT}}(I_{\mathrm {B}}). \tag{1} \]

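For each group j, we use a separate data structure \(Q_{j} := Q(\delta )\) with \(\delta = \frac 18\varepsilon \), where Q(δ) is the quantile summary from [27] with precision δ. So when a big item of size \(s_{i}\) arrives, we find j such that \(s_{i} \in (2^{-j-1}, 2^{-j}]\) and insert \(s_{i}\) into \(Q_{j}\). After processing all items, for each group j, we do the following: We extract from \(Q_{j}\) the set of stored input items (i.e., their sizes) together with upper bounds on their rank. Let \(({a^{j}_{1}}, {u^{j}_{1}} = 1), ({a^{j}_{2}}, {u^{j}_{2}}), \dots , (a^{j}_{q_{j}}, u^{j}_{q_{j}} = N_{j})\) be the pairs of an item size and the upper bound on its rank in group j, ordered as in the simpler algorithm so that \({a^{j}_{1}}\ge {a^{j}_{2}}\ge {\cdots } \ge a^{j}_{q_{j}}\). We have

\[ q_{j} \le {\mathcal {O}}\left (\frac {1}{\delta }\cdot \log (\delta N_{j})\right ) \le {\mathcal {O}}\left (\frac {1}{\varepsilon }\cdot \log \left (\varepsilon \cdot 2^{j+1}\cdot {\textsf {OPT}}(I_{\mathrm {B}})\right )\right ) \le {\mathcal {O}}\left (\frac {1}{\varepsilon }\cdot \log {\textsf {OPT}}(I_{\mathrm {B}})\right ), \]

since \(\varepsilon 2^{j} \le \varepsilon 2^{k} \le 2\).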

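An auxiliary instance \(I_{\mathrm {R}}^{j}\) is formed by \((u^{j}_{i+1} - {u^{j}_{i}})\) items of size \(a^{j}_{i}\) for \(i=1, \dots , q_{j} - 1\) plus one item of size \(a^{j}_{q_{j}}\). To create the rounded instance \(I_{\mathrm {R}}\), we take the union of all auxiliary instances \(I_{\mathrm {R}}^{j}\), \(j = 0, \dots , k-1\). Note that the number of item sizes in \(I_{\mathrm {R}}\) is

\[ \sigma \le \sum _{j=0}^{k-1} q_{j} \le {\mathcal {O}}\left (\frac {k}{\varepsilon }\cdot \log {\textsf {OPT}}(I_{\mathrm {B}})\right ) = {\mathcal {O}}\left (\frac {1}{\varepsilon }\cdot \log \frac {1}{\varepsilon }\cdot \log {\textsf {OPT}}(I_{\mathrm {B}})\right ). \]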

We show that the desired properties (P1)-(P4) are satisfied. Property (P1) follows easily from the definition of \(I_{\mathrm {R}}\) as the union of instances \(I_{\mathrm {R}}^{j}\) and the design of data structures \(Q_{j}\). To see property (P2), for every group j, it holds that the i-th biggest item in group j in \(I_{\mathrm {R}}\) is at least as large as the i-th biggest item in group j in \(I_{\mathrm {B}}\). Indeed, for any \(p=1,\dots ,q_{j}\), \({u^{j}_{p}}\) is a valid upper bound on the rank of \({a^{j}_{p}}\) in group j in \(I_{\mathrm {B}}\) and ranks of items of size \({a^{j}_{p}}\) in group j in \(I_{\mathrm {R}}\) are at least \({u^{j}_{p}}\). Moreover, the number of items is preserved in every group. Hence, overall, the i-th biggest item in \(I_{\mathrm {R}}\) cannot be smaller than the i-th biggest item in \(I_{\mathrm {B}}\).

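Next, we prove properties (P3) and (P4), i.e., the bounds on \({\textsf {OPT}}(I_{\mathrm {R}})\) and on \({\textsf {SIZE}}(I_{\mathrm {R}})\). For each group j, we pack the \(\lfloor 4\delta N_{j}\rfloor \) biggest items in \(I_{\mathrm {R}}\) with size in group j into “extra” bins, each containing \(2^{j}\) items, except for at most one extra bin which may contain fewer than \(2^{j}\) items. This is possible as any item in group j has size at most \(2^{-j}\). Using the choice of \(\delta = \frac 18\varepsilon \) and (1), we bound the total number of extra bins by

\[ \sum _{j=0}^{k-1} \left \lceil \frac {\lfloor 4\delta N_{j}\rfloor }{2^{j}} \right \rceil \le k + \sum _{j=0}^{k-1} \frac {\varepsilon \cdot N_{j}}{2^{j+1}} \le \varepsilon \cdot {\textsf {OPT}}(I_{\mathrm {B}}) + k. \tag{2} \]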

Let \(I_{\mathrm {R}}^{\prime }\) be the remaining items in \(I_{\mathrm {R}}\). Consider group j and let \(I_{\mathrm {B}}(j)\) and \(I_{\mathrm {R}}^{\prime }(j)\) be the items with sizes in \((2^{-j-1}, 2^{-j}]\) in \(I_{\mathrm {B}}\) and in \(I_{\mathrm {R}}^{\prime }\), respectively. We claim that the i-th biggest item \(b_{i}\) in \(I_{\mathrm {B}}(j)\) is at least as large as the i-th biggest item in \(I_{\mathrm {R}}^{\prime }(j)\), whose size equals \(a_{p}\) for some \(p \in \{1,\dots ,q_{j}\}\) (within a fixed group we omit the superscript j of \(a^{j}_{p}\) and \(u^{j}_{p}\)). For a contradiction, suppose that \(b_{i} < a_{p}\), which implies that the rank \(r_{p}\) of \(a_{p}\) in \(I_{\mathrm {B}}(j)\) is less than i. Note that \(p < q_{j}\) as \(a_{q_{j}}\) is the smallest item in \(I_{\mathrm {B}}(j)\). Since we packed the largest \(\lfloor 4\delta N_{j}\rfloor \) items of \(I_{\mathrm {R}}\) in group j separately, we have \(i + \lfloor 4\delta N_{j}\rfloor < u_{p+1} \le u_{p} + \lfloor 2\delta N_{j}\rfloor \), where the last inequality is by the design of data structure \(Q_{j}\). It follows that \(i < u_{p} - \lfloor 2\delta N_{j}\rfloor \). Combining it with \(r_{p} < i\), we obtain that the rank of \(a_{p}\) in \(I_{\mathrm {B}}(j)\) is less than \(u_{p} - \lfloor 2\delta N_{j}\rfloor \), which contradicts that \(u_{p} - \lfloor 2\delta N_{j}\rfloor \) is a valid lower bound on the rank of \(a_{p}\). Hence, the claim holds for any group and it immediately implies \({\textsf {OPT}}(I_{\mathrm {R}}^{\prime }) \le {\textsf {OPT}}(I_{\mathrm {B}})\) and \({\textsf {SIZE}}({I_{\mathrm {R}}}^{\prime }) \le {\textsf {SIZE}}(I_{\mathrm {B}})\).

Combining with (2), we get that \({\textsf {OPT}}(I_{\mathrm {R}}) \le {\textsf {OPT}}(I_{\mathrm {R}}^{\prime }) + \varepsilon \cdot {\textsf {OPT}}(I_{\mathrm {B}}) + k \le (1 + \varepsilon )\cdot {\textsf {OPT}}(I_{\mathrm {B}}) + k,\) thus (P3) holds. Similarly, to bound the total wasted space, observe that the total size of items of IR that are not in \(I_{\mathrm {R}}^{\prime }\) is bounded by

\[ \sum _{j=0}^{k-1} \lfloor 4\delta N_{j}\rfloor \cdot 2^{-j} \le \sum _{j=0}^{k-1} \frac {\varepsilon \cdot N_{j}}{2^{j+1}} \le \varepsilon \cdot {\textsf {SIZE}}(I_{\mathrm {B}}), \]

where we use (1) in the last inequality. We obtain that \({\textsf {SIZE}}(I_{\mathrm {R}}) \le {\textsf {SIZE}}(I_{\mathrm {R}}^{\prime }) + \varepsilon \cdot {\textsf {SIZE}}(I_{\mathrm {B}}) \le (1 + \varepsilon )\cdot {\textsf {SIZE}}(I_{\mathrm {B}})\). We conclude that properties (P1)-(P4) hold for the rounded instance IR. □
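
The grouping step used in the proof of Lemma 1 can be sketched as follows. This is a minimal illustration, assuming item sizes are Python floats in (0, 1]; the function names `group_index` and `split_into_groups` are hypothetical, and plain lists stand in for the per-group quantile summaries \(Q_{j}\).

```python
import math
from collections import defaultdict


def group_index(size):
    """Return j such that size lies in (2**(-j-1), 2**(-j)], for 0 < size <= 1."""
    # frexp gives size = mantissa * 2**exponent with 0.5 <= mantissa < 1.
    mantissa, exponent = math.frexp(size)
    # An exact power of two sits at the closed right end of its interval.
    return 1 - exponent if mantissa == 0.5 else -exponent


def split_into_groups(stream, eps):
    """Route every big item (size > eps) to its size group; return (k, groups)."""
    k = math.ceil(math.log2(1 / eps))
    groups = defaultdict(list)  # each list stands in for one summary Q_j
    for size in stream:
        if size > eps:
            groups[group_index(size)].append(size)
    return k, groups


k, groups = split_into_groups([0.9, 0.5, 0.3, 0.2, 0.05], eps=0.1)
```

Using `math.frexp` instead of a logarithm avoids rounding issues at the interval boundaries, where the half-open intervals matter. Every big item lands in a group with index below k because its size exceeds ε ≥ 2^(−k).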

Lemmas 1 and 2 directly imply our main result for Bin Packing.

Theorem 3

There is a streaming algorithm for Bin Packing that for each instance I given as a stream outputs an estimate ALG(I) of OPT(I) such that

The space requirement of the algorithm is \({\mathcal {O}}\left (\frac {1}{\varepsilon }\cdot \log \frac {1}{\varepsilon }\cdot \log {\textsf {OPT}}(I)\right )\).

3.2 Bin Packing and Quantile Summaries

In the previous section, the deterministic quantile summary data structure from [27] allows us to obtain a streaming approximation scheme for Bin Packing. We argue that this connection runs deeper.

We start with two scenarios for which there exist better quantile summaries. First, suppose that all big item sizes belong to a universe U of bounded size (for example, when item sizes are floating-point numbers with low precision). Then it can be better to use the quantile summary of Shrivastava et al. [40], which provides a guarantee of \({\mathcal {O}}(\frac 1\delta \cdot \log |U|)\) on the space complexity, where δ is the precision requirement. Thus, by using k copies of this quantile summary in a similar way as in Section 3.1, we get a streaming (1 + ε)-approximation algorithm for Bin Packing that runs in space \({\mathcal {O}}(\frac {1}{\varepsilon }\cdot \log \frac {1}{\varepsilon } \cdot \log |U|)\).

Second, if we allow the algorithm to use randomization and fail with probability γ, we can employ the optimal randomized quantile summary of Karnin, Lang, and Liberty [35], which, for a given precision δ and failure probability η, uses space \({\mathcal {O}}(\frac 1\delta \cdot \log \log \frac {1}{\delta \eta })\) and does not provide a δ-approximate quantile for some quantile query with probability at most η. In particular, using k copies of their data structure with precision δ = Θ(ε) and failure probability η = γ/k, similarly as in Section 3.1, gives a streaming (1 + ε)-approximation algorithm for Bin Packing which fails with probability at most γ and runs in space \({\mathcal {O}}\left (\frac {1}{\varepsilon }\cdot \log \frac {1}{\varepsilon } \cdot \log \log (\log \frac {1}{\varepsilon } / \varepsilon \gamma )\right )\).

More intriguingly, the connection between quantile summaries and Bin Packing also goes in the other direction. Namely, we show that a streaming (1 + ε)-approximation algorithm for Bin Packing with space bounded by S(ε,OPT) (or S(ε,N)) implies a data structure of size S(ε,N) for the following Estimating Rank problem: Create a summary of a stream of N numbers which is able to provide a δ-approximate rank of any query q, i.e., the number of items in the stream which are larger than q, up to an additive error of ± δN. A summary for Estimating Rank is essentially a quantile summary and we can actually use it to find an approximate quantile by doing a binary search over possible item names. However, this approach does not guarantee that the item name returned will correspond to one of the items present in the stream.

The reduction from Estimating Rank to Bin Packing goes as follows: Suppose that all numbers in the input stream for Estimating Rank are from interval \((\frac 12, \frac 23)\) (this is without loss of generality by scaling and translating) and let q be a query in \((\frac 12, \frac 23)\). For each number ai in the stream for Estimating Rank, we introduce two items of size ai in the stream for Bin Packing. After these 2N items (two copies each of \(a_{1}, \dots , a_{N}\)) are inserted in the same order as in the stream for Estimating Rank, we then insert a further 2N items in the stream for Bin Packing, all of size 1 − q. Observe first that no pair of the first 2N items can be placed in the same bin, so we must open at least 2N bins, two for each of a1,…,aN. Since \(\frac 12 > (1-q) > \frac 13\), and \(a_{i} > \frac 12\), we can place at most one of the 2N items of size (1 − q) in a bin with ai in it, provided that ai + (1 − q) ≤ 1, i.e., ai ≤ q. Thus, we can pack a number of the (1 − q)-sized items, equivalent to 2(N − rank(q)), in the first 2N bins. This leaves 2rank(q) items, all of size (1 − q). We pack these optimally into rank(q) additional bins, for a total of 2N + rank(q) bins.

We claim that a (1 + ε)-approximation of the optimum number of bins provides a 4ε-approximate rank of q. Indeed, let m be the number of bins returned by the algorithm and let r = m − 2N be the estimate of rank(q). We have that the optimal number of bins equals 2N + rank(q) and thus 2N + rank(q) ≤ m ≤ (1 + ε) ⋅ (2N + rank(q)) + o(N). By using r = m − 2N and rearranging, we get

\[ \text {rank}(q) \le r \le \text {rank}(q) + \varepsilon \cdot \text {rank}(q) + 2\varepsilon N + o(N). \]

Since the right-hand side can be upper bounded by rank(q) + 4εN (provided that o(N) < εN, which holds for a large enough N), r is a 4ε-approximate rank of q. Hence, the memory state of an algorithm for Bin Packing after processing the first 2N items (of sizes \(a_{1}, \dots , a_{N}\)) can be used as a data structure for Estimating Rank.
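
The reduction can be checked on a toy stream with the sketch below. It is an illustration only: the helper names are hypothetical, exact rationals are used to avoid floating-point effects at the boundary \(a_{i} + (1-q) = 1\), and First Fit Decreasing is used as a convenient offline stand-in for an optimal packer. On instances of this special shape it attains the count 2N + rank(q) derived above, since every bin with a stream item admits at most one item of size 1 − q and the leftover items pair up.

```python
from fractions import Fraction


def rank(stream, q):
    """Number of stream items strictly larger than the query q."""
    return sum(1 for a in stream if a > q)


def bin_packing_stream(stream, q):
    """Two copies of each a_i (in stream order), then 2N items of size 1 - q."""
    doubled = [a for a in stream for _ in range(2)]
    return doubled + [1 - q] * (2 * len(stream))


def first_fit_decreasing(items):
    """Number of unit bins used by First Fit Decreasing."""
    free = []  # remaining capacity of every open bin
    for size in sorted(items, reverse=True):
        for b, capacity in enumerate(free):
            if size <= capacity:
                free[b] = capacity - size
                break
        else:
            free.append(1 - size)
    return len(free)


# All values lie in (1/2, 2/3), as the reduction assumes.
stream = [Fraction(11, 20), Fraction(3, 5), Fraction(13, 20), Fraction(4, 7)]
q = Fraction(29, 50)
bins = first_fit_decreasing(bin_packing_stream(stream, q))
estimate = bins - 2 * len(stream)  # r = m - 2N
```

With an exact bin count the estimate equals rank(q); with a (1 + ε)-approximate count it is off by the additive error bounded in the text.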

In [16] we show a space lower bound of \({\Omega }(\frac {1}{\varepsilon }\cdot \log \varepsilon N)\) for comparison-based data structures for Estimating Rank (and for quantile summaries as well).

Theorem 4 (Theorem 13 in [16])

For any \(0 < \varepsilon < \frac 1{16}\), there is no deterministic comparison-based data structure for Estimating Rank which stores \(o\left (\frac {1}{\varepsilon }\cdot \log \varepsilon N\right )\) items on any input stream of length N.

We conclude that there is no comparison-based streaming algorithm for Bin Packing which stores \(o(\frac {1}{\varepsilon }\cdot \log {\textsf {OPT}})\) items on any input stream (recall that \(N = {\mathcal {O}}({\textsf {OPT}})\) in our reduction). Note that our algorithm is comparison-based if we employ the comparison-based quantile summary of Greenwald and Khanna [27], except that it needs to determine the size group for each item, which can be done by comparisons with \(2^{-j}\) for integer values of j. Nevertheless, comparisons with a fixed set of constants do not affect the reduction from Estimating Rank (i.e., the reduction can choose an interval contained in \((\frac 12, \frac 23)\) to avoid all constants fixed in the algorithm), thus the lower bound of \({\Omega }\left (\frac {1}{\varepsilon }\cdot \log {\textsf {OPT}}\right )\) applies to our algorithm as well. This yields near optimality of our approach, up to a factor of \({\mathcal {O}}\left (\log \frac {1}{\varepsilon }\right )\). Finally, we remark that the lower bound of \({\Omega }(\frac {1}{\varepsilon }\cdot \log \log \frac {1}{\delta })\) for randomized comparison-based quantile summaries [35] translates to Bin Packing as well.

3.3 Vector Bin Packing

As already observed by Fernandez de la Vega and Lueker [19], a (1 + ε)-approximation algorithm for (scalar) Bin Packing implies a d ⋅ (1 + ε)-approximation algorithm for Vector Bin Packing, where items are d-dimensional vectors and bins have unit capacity in every dimension. Indeed, we split the vectors into d groups according to the largest dimension (chosen arbitrarily among dimensions that have the largest value) and in each group we apply the approximation scheme for Bin Packing, packing just according to the largest dimension. Finally, we take the union of opened bins over all groups. Since the optimum of the Bin Packing instance for each group is a lower bound on the optimum of Vector Bin Packing, we get that the solution is a d ⋅ (1 + ε)-approximation.

This can be done in the same way in the streaming model. Hence, there is a streaming algorithm for Vector Bin Packing which outputs a d ⋅ (1 + ε)-approximation of OPT, the offline optimal number of bins, using \({\mathcal {O}}\left (\frac {d}{\varepsilon } \cdot \log \frac {1}{\varepsilon } \cdot \log {\textsf {OPT}}\right )\) memory. By scaling ε, there is a (d + ε)-approximation algorithm with \(\widetilde {{\mathcal {O}}}(\frac {d^{2}}{\varepsilon })\) memory. We can, however, do better by one factor of d.

Theorem 5

There is a streaming (d + ε)-approximation algorithm for Vector Bin Packing that uses \({\mathcal {O}}\left (\frac {d}{\varepsilon } \cdot \log \frac {d}{\varepsilon } \cdot \log {\textsf {OPT}}\right )\) memory.

Proof

Given an input stream I of vectors, we create an input stream \(I^{\prime }\) for Bin Packing by replacing each vector v by a single (scalar) item a of size \(\|\mathbf {v}\|_{\infty }\). We use our streaming algorithm for Bin Packing with precision \(\delta = \frac {\varepsilon }{d}\) which uses \({\mathcal {O}}\left (\frac {1}{\delta } \cdot \log \frac {1}{\delta } \cdot \log {\textsf {OPT}}\right )\) memory and returns a solution with at most \(B = (1 + \delta )\cdot {\textsf {OPT}}(I^{\prime }) + \widetilde {{\mathcal {O}}}(\frac {1}{\delta })\) scalar bins. Clearly, B bins are sufficient for the stream I of vectors, since in the solution for \(I^{\prime }\) we replace each item by the corresponding vector and obtain a valid solution for I.

Finally, we show that \((1 + \delta )\cdot {\textsf {OPT}}(I^{\prime }) + \widetilde {{\mathcal {O}}}(\frac {1}{\delta })\le (d+\varepsilon )\cdot {\textsf {OPT}}(I) + \widetilde {{\mathcal {O}}}(\frac {d}{\varepsilon })\) for which it is sufficient to prove that \({\textsf {OPT}}(I^{\prime })\le d\cdot {\textsf {OPT}}(I)\) as \(\delta = \frac {\varepsilon }{d}\). Namely, from an optimal solution \(\mathcal {S}\) for I, we create a solution for \(I^{\prime }\) with at most d ⋅OPT(I) bins. For each bin B in \(\mathcal {S}\), we split the vectors assigned to B into d groups according to the largest dimension (chosen arbitrarily among those with the largest value) and for each group i we create bin Bi with vectors in group i. Then we just replace each vector v by an item of size \(\|\mathbf {v}\|_{\infty }\) and obtain a valid solution for \(I^{\prime }\) with at most d ⋅OPT(I) bins. □
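
The first half of the proof, that a packing of the scalar items \(\|\mathbf {v}\|_{\infty }\) is automatically a feasible packing of the vectors, can be illustrated as follows. This is a sketch under assumptions: plain First Fit is used as a stand-in for the streaming Bin Packing algorithm (it carries no (1 + δ) guarantee), and the names `first_fit` and `pack_vectors` are hypothetical.

```python
from fractions import Fraction


def first_fit(sizes):
    """Stand-in scalar packer: returns bins as lists of item indices."""
    bins, free = [], []
    for idx, size in enumerate(sizes):
        for b in range(len(bins)):
            if size <= free[b]:
                bins[b].append(idx)
                free[b] -= size
                break
        else:
            bins.append([idx])
            free.append(1 - size)
    return bins


def pack_vectors(vectors):
    """Pack vectors by packing their infinity norms as scalar items."""
    scalars = [max(v) for v in vectors]  # ||v||_inf for non-negative vectors
    return first_fit(scalars)


F = Fraction
vectors = [(F(1, 2), F(1, 4)), (F(1, 4), F(1, 2)), (F(3, 4), F(1, 8)),
           (F(1, 8), F(1, 8)), (F(1, 3), F(2, 3))]
bins = pack_vectors(vectors)
# Load of each bin in each dimension; never above 1 by construction.
loads = [[sum(vectors[i][k] for i in b) for k in range(2)] for b in bins]
```

Each coordinate of a vector is at most its infinity norm, so the per-dimension load of a bin is bounded by the scalar load of that bin, which is at most 1.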

Interestingly, a better than d-approximation using sublinear memory, which is rounding-based, is not possible, due to the following result in [7]. (Note that the result requires that the numbers in the input vectors can take arbitrary values in [0,1], i.e., vectors do not belong to a bounded universe.)

Theorem 6 (Implied by the proof of Theorem 2.2 in [7])

Any algorithm for Vector Bin Packing that rounds up large coordinates of vectors to o(N/d) types cannot achieve better than d-approximation, where N is the number of vectors.

It is an interesting open question whether or not we can design a streaming (d + ε)-approximation with \(o(\frac {d}{\varepsilon })\) memory or even with \(\widetilde {{\mathcal {O}}}\left (d + \frac {1}{\varepsilon }\right )\) memory.

4 Vector Scheduling

We provide a novel approach for creating an input summary for Vector Scheduling, based on combining small items into containers. Our streaming algorithm stores all big jobs and all containers, created from small items, and these containers are relatively big as well. Thus, there is a bounded number of big jobs and containers, and the space used is bounded as well. We show that this simple summarization preserves the optimal makespan up to a factor of \(2-\frac {1}{m} + \varepsilon \) for any 0 < ε ≤ 1. We assume that the algorithm knows (an upper bound on) m ≥ 2 in advance.

Description of algorithm AggregateSmallJobs

For 0 < ε ≤ 1 and m ≥ 2, the algorithm works as follows: For each \(k = 1, \dots , d\), it keeps track of the total load of all jobs in dimension k, denoted Lk. Note that the optimal makespan satisfies \({\textsf {OPT}}\ge \max \limits _{k} \frac 1m\cdot L_{k}\) (an alternative lower bound on OPT is the maximum \(\ell _{\infty }\) norm of a job seen so far, but our algorithm does not use this). For brevity, let \({\textsf {LB}} = \max \limits _{k} \frac 1m\cdot L_{k}\).

Let \(\gamma = {\Theta }\left (\varepsilon ^{2} / \log \frac {d^{2}}{\varepsilon }\right )\); the constant hidden in Θ follows from the analysis below. We also ensure that \(\gamma \le \frac 14\varepsilon \). We say that a job with vector v is big if \(\|\mathbf {v}\|_{\infty } > \gamma \cdot {\textsf {LB}}\); otherwise it is small. The algorithm stores all big jobs (i.e., the full vector of each big job), while it aggregates small jobs into containers, and does not store any small job directly. A container is simply a vector c that equals the sum of vectors for small jobs assigned to this container, and we ensure that \(\|\mathbf {c}\|_{\infty } \le 2\gamma \cdot {\textsf {LB}}\). Furthermore, container c is closed if \(\|\mathbf {c}\|_{\infty } > \gamma \cdot {\textsf {LB}}\), otherwise, it is open. As two open containers can be combined into one (open or closed) container, we maintain only one open container. We execute a variant of the Next Fit algorithm to pack the containers, adding an incoming small job into the open container, where it always fits as any small vector v satisfies \(\|\mathbf {v}\|_{\infty } \le \gamma \cdot {\textsf {LB}}\). All containers are retained in the memory.

When a new job vector v arrives, we update the values of Lk for \(k=1,\dots ,d\) (by adding vk) and also of LB. If LB increases, any previously big job u that has become small (w.r.t. new LB), is considered to be an open container. Moreover, it may happen that a previously closed container c becomes open again, i.e., \(\|\mathbf {c}\|_{\infty } \le \gamma \cdot {\textsf {LB}}\). If we indeed have more open containers, we keep aggregating an arbitrary pair of open containers until only one is left. Finally, if the new job v is small, we add it in an open container (if there is no open container, we first open a new, empty one). This completes the description of the algorithm. (We remark that for packing the containers, we may also use another, more efficient algorithm, such as First Fit, which however makes no difference in the approximation guarantee.)
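
The description above can be turned into a short sketch. This is not the authors' implementation: the class layout and method names are assumptions, and as a simplification a newly arriving small job is treated as one more open container before merging, which preserves the same invariants (every closed container c satisfies \(\gamma \cdot {\textsf {LB}} < \|\mathbf {c}\|_{\infty } \le 2\gamma \cdot {\textsf {LB}}\) and at most one container is open). Exact rationals keep the threshold comparisons free of rounding effects.

```python
from fractions import Fraction


class AggregateSmallJobs:
    """Summary of big jobs plus containers of aggregated small jobs (sketch)."""

    def __init__(self, d, m, gamma):
        self.d, self.m, self.gamma = d, m, gamma
        self.loads = [0] * d  # L_k: total load per dimension
        self.big = []         # big jobs, stored in full
        self.closed = []      # closed containers
        self.open = None      # at most one open container

    def threshold(self):
        # gamma * LB, where LB = max_k L_k / m
        return self.gamma * max(self.loads) / self.m

    def insert(self, v):
        v = list(v)
        self.loads = [load + x for load, x in zip(self.loads, v)]
        thr = self.threshold()
        # Everything with infinity norm <= gamma * LB counts as an open container.
        pool = [] if self.open is None else [self.open]
        pool += [u for u in self.big + self.closed + [v] if max(u) <= thr]
        self.big = [u for u in self.big if max(u) > thr]
        self.closed = [c for c in self.closed if max(c) > thr]
        if max(v) > thr:
            self.big.append(v)
        # Merge open containers pairwise until at most one is left.
        while len(pool) >= 2:
            merged = [x + y for x, y in zip(pool.pop(), pool.pop())]
            (self.closed if max(merged) > thr else pool).append(merged)
        self.open = pool[0] if pool else None

    def summary(self):
        return self.big + self.closed + ([] if self.open is None else [self.open])


d, m, gamma = 3, 4, Fraction(1, 50)
agg = AggregateSmallJobs(d, m, gamma)
for i in range(200):
    agg.insert([Fraction((7 * i + 3 * k) % 21, 20) for k in range(d)])
```

Re-scanning all stored vectors on every insertion keeps the sketch short; an implementation concerned with update time would only re-check stored vectors when LB has actually grown enough to reclassify some of them.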

Properties of the input summary

After all jobs are processed, we can assume that \({\textsf {LB}} = \max \limits _{k} \frac 1m\cdot L_{k} = 1\), which implies that OPT ≥ 1. This is without loss of generality by scaling every quantity by 1/LB.

First, we bound the space needed to store the input summary. Since any big job and any closed container, each characterized by a vector v, satisfy \(\|\mathbf {v}\|_{\infty } > \gamma \), it holds that there are at most \(\frac {1}{\gamma } \cdot d\cdot m\) big jobs and closed containers. As at most one container remains open in the end and any job or container is described by d numbers, the space cost is \({\mathcal {O}}\left (\frac {1}{\gamma } \cdot d^{2}\cdot m\right ) = {\mathcal {O}}\left (\frac {1}{\varepsilon ^{2}} \cdot d^{2}\cdot m\cdot \log \frac {d}{\varepsilon }\right )\).

We now analyze the maximum approximation factor that can be lost by this summarization. Let IR be the resulting instance formed by big jobs and containers with small items (i.e., the input summary produced by algorithm AggregateSmallJobs), and let I be the original instance, consisting of jobs in the input stream. We show that OPT(IR) and OPT(I) are close together, up to a factor of \(2 - \frac {1}{m} + \varepsilon \), and an example in Section 4.1 shows that this bound is tight for our approach. Note, however, that we still need to execute an offline algorithm to get (an approximation of) OPT(IR), which is not an explicit part of the summary; see the proof of Theorem 9 below.

The crucial part of the analysis is to show that containers for small items can be assigned to machines so that the loads of all machines are nearly balanced in every dimension, especially in the case when containers constitute a large fraction of the total load of all jobs. Let \(L^{\mathrm {C}}_{k}\) be the total load of containers in dimension k (equal to the total load of small jobs). Let \(I_{\mathrm {C}}\subseteq I_{\mathrm {R}}\) be the instance consisting of all containers in IR. The following lemma establishes the key properties of the input summary IR.

Lemma 7

Supposing that \(\max \limits _{k} \frac 1m\cdot L_{k} = 1\), the following holds:

(i) There is a solution for instance \(I_{\mathrm {C}}\) with load at most \(\max \limits (\frac {1}{2}, \frac {1}{m}\cdot L^{\mathrm {C}}_{k}) +2\varepsilon +4\gamma \) in each dimension k on every machine.

(ii) \({\textsf {OPT}}(I)\le {\textsf {OPT}}(I_{\mathrm {R}})\le \left (2 - \frac {1}{m} + 3\varepsilon \right )\cdot {\textsf {OPT}}(I)\).

Proof

(i) We obtain the solution from the randomized online algorithm by Im et al. [31]. Although this algorithm has ratio \({\mathcal {O}}(\log d / \log \log d)\) on general instances, we show that it behaves substantially better when jobs are small enough. In a nutshell, this algorithm works by first assigning each job j to a uniformly random machine i and if the load of machine i exceeds a certain threshold, then the job is reassigned by Greedy. The online Greedy algorithm works by assigning jobs one by one, each to a machine so that the makespan increases as little as possible (breaking ties arbitrarily).

Let \(L^{\prime }_{k} = \max \limits (\frac {1}{2}, \frac {1}{m}\cdot L^{\mathrm {C}}_{k})\). We assume that each machine has its capacity of \(L^{\prime }_{k}+2\varepsilon +4\gamma \) in each dimension k split into two parts: The first part has capacity \(L^{\prime }_{k}+\varepsilon +2\gamma \) in dimension k for the containers assigned randomly, and the second part has capacity ε + 2γ in all dimensions for the containers assigned by Greedy. Note that Greedy cares about the load in the second part only.

The algorithm assigns containers one by one as follows: For each container c, it first chooses a machine i uniformly and independently at random. If the load of the first part of machine i already exceeds \(L^{\prime }_{k}+\varepsilon \) in some dimension k, then c is passed to Greedy, which assigns it according to the loads in the second part. Otherwise, the algorithm assigns c to machine i.

As each container c satisfies \(\|\mathbf {c}\|_{\infty } \le 2\gamma \), it holds that randomly assigned containers fit into capacity \(L^{\prime }_{k}+\varepsilon +2\gamma \) in any dimension k on any machine. We show that the expected amount of containers assigned by Greedy is small enough so that they fit into machines with capacity of ε + 2γ, which in turn implies that there is a choice of random bits for the assignment so that the capacity for Greedy is not exceeded. The existence of a solution with capacity \(L^{\prime }_{k}+2\varepsilon +4\gamma \) in each dimension k will follow.

Consider a container c and let i be the machine chosen randomly for c. We claim that for any dimension k, the load on machine i in dimension k, assigned before processing c, exceeds \(L^{\prime }_{k}+\varepsilon \) with probability of at most \(\frac {\varepsilon }{d^{2}}\). To show the claim, we use the following Chernoff-Hoeffding bound:

Fact 8

Let \(X_{1}, \dots , X_{n}\) be independent binary random variables and let \(a_{1}, \dots , a_{n}\) be coefficients in [0,1]. Let \(X = {\sum }_{i} a_{i} X_{i}\). Then, for 0 < δ ≤ 1 and \(\mu \ge \mathbb {E}[X]\), it holds that \(\Pr [X > (1+\delta )\cdot \mu ] \le \exp \left (-\frac 13\cdot \delta ^{2}\cdot \mu \right )\).

We use this bound with variable \(X_{\mathbf {c^{\prime }}}\) for each vector \(\mathbf {c^{\prime }}\) assigned randomly before vector c and not reassigned by Greedy. We have \(X_{\mathbf {c^{\prime }}} = 1\) if \(\mathbf {c^{\prime }}\) is assigned on machine i. Let \(a_{\mathbf {c^{\prime }}} = \frac {1}{2\gamma }\cdot \mathbf {c^{\prime }}_{k} \le 1\). Let \(X = {\sum }_{\mathbf {c^{\prime }}} a_{\mathbf {c^{\prime }}} X_{\mathbf {c^{\prime }}}\) be the random variable equal to the load on machine i in dimension k, scaled by \(\frac {1}{2\gamma }\). It holds that \(\mathbb {E}[X] \le \frac {1}{m}\cdot \frac {1}{2\gamma }\cdot L^{\prime }_{k}\cdot m = \frac {1}{2\gamma }\cdot L^{\prime }_{k}\), since each container \(\mathbf {c^{\prime }}\) is assigned to machine i with probability \(\frac {1}{m}\) and \(L^{\prime }_{k}\cdot m\) is the upper bound on the total load of containers in dimension k. Using the Chernoff-Hoeffding bound with \(\mu = \frac {1}{2\gamma }\cdot L^{\prime }_{k}\) and δ = ε ≤ 1, we get that

\[ \Pr \left [X > (1+\varepsilon )\cdot \frac {1}{2\gamma }\cdot L^{\prime }_{k}\right ] \le \exp \left (-\frac 13\cdot \varepsilon ^{2}\cdot \frac {1}{2\gamma }\cdot L^{\prime }_{k}\right ). \]

Using \(\gamma = {\mathcal {O}}\left (\varepsilon ^{2} / \log \frac {d^{2}}{\varepsilon }\right )\) and \(L^{\prime }_{k}\ge \frac {1}{2}\), we obtain

\[ \exp \left (-\frac 13\cdot \varepsilon ^{2}\cdot \frac {1}{2\gamma }\cdot L^{\prime }_{k}\right ) \le \exp \left (-\frac {\varepsilon ^{2}}{12\gamma }\right ) \le \frac {\varepsilon }{d^{2}}, \]

where the last inequality holds for a suitable choice of the multiplicative constant in the definition of γ. This is sufficient to show the claim as \(X >(1+\varepsilon )\cdot \frac {1}{2\gamma }\cdot L^{\prime }_{k}\) if and only if the load on machine i in dimension k, assigned randomly before c, exceeds \((1+\varepsilon )\cdot L^{\prime }_{k}\), and \((1+\varepsilon )\cdot L^{\prime }_{k} \le L^{\prime }_{k} + \varepsilon \) because \(L^{\prime }_{k} \le 1\).

By the union bound, the claim implies that each container c is reassigned by Greedy with probability at most \(\frac {\varepsilon }{d}\). Let G be the random variable equal to the sum of the ℓ1 norms (where \(\|\mathbf {c}\|_{1} = {\sum }_{k=1}^{d} \mathbf {c}_{k}\)) of containers assigned by Greedy. Using the linearity of expectation and the claim, we have

\[ \mathbb {E}[G] \le \sum _{\mathbf {c}} \frac {\varepsilon }{d}\cdot \|\mathbf {c}\|_{1} \le \frac {\varepsilon }{d}\cdot d\cdot m = \varepsilon \cdot m, \]

where the second inequality uses that the total load of containers in each dimension is at most m. Let μG be the makespan of the containers created by Greedy. Observe that each machine has a dimension with load at least μG − 2γ. Indeed, otherwise, if there is a machine i with load less than μG − 2γ in all coordinates, the last container c assigned by Greedy that caused the increase of the makespan to μG would be assigned to machine i, and the makespan after assigning c would be smaller than μG (using \(\|\mathbf {c}\|_{\infty } \le 2\gamma \)). It follows that \(\mu _{\mathbf {G}} - 2\gamma \le \frac {1}{m}\cdot G\) and, using \(\mathbb {E}[G]\le \varepsilon \cdot m\), we get that \(\mathbb {E}[\mu _{\mathbf {G}}] - 2\gamma \le \varepsilon \). Thus (i) holds.

(ii) The first inequality is straightforward, as any solution for \(I_{\mathrm {R}}\) can be used as a solution for I: we first pack the small items into containers and then place the containers according to the solution for \(I_{\mathrm {R}}\).

We create a solution of \(I_{\mathrm {R}}\) of makespan at most \(\left (2 - \frac {1}{m} + 3\varepsilon \right )\cdot {\textsf {OPT}}(I)\) as follows: We take an optimal solution \(\mathcal {S}_{\mathrm {B}}\) for instance \(I_{\mathrm {R}} \setminus I_{\mathrm {C}}\), i.e., for big jobs only, and combine it, in an arbitrary way, with solution \(\mathcal {S}_{\mathrm {C}}\) for containers from (i), to obtain a solution \(\mathcal {S}\) for \(I_{\mathrm {R}}\). Let \(\mu _{k}\) be the largest load assigned to a machine in dimension k in solution \(\mathcal {S}_{\mathrm {B}}\); we have \(\mu _{k} \le {\textsf {OPT}}(I)\). Note that \(L^{\mathrm {C}}_{k} \le m - \mu _{k}\), since the total load of big jobs and containers together is at most m, by the assumption of the lemma.

Consider the load on machine i in dimension k in solution \(\mathcal {S}\). If \(\frac {1}{m}\cdot L^{\mathrm {C}}_{k} \ge \frac {1}{2}\), then this load is bounded by

where the first inequality uses \(L^{\mathrm {C}}_{k} \le m - \mu _{k}\) and \(\gamma \le \frac 14\varepsilon \) (ensured by the definition of γ), and the last inequality holds by \(\mu _{k} \le {\textsf {OPT}}(I)\) and \(1 \le {\textsf {OPT}}(I)\).

Otherwise, \(\frac {1}{m}\cdot L^{\mathrm {C}}_{k} < \frac {1}{2}\), in which case the load on machine i in dimension k is at most \(\mu _{k} + \frac {1}{2} +2\varepsilon +4\gamma \le (1.5 + 3\varepsilon )\cdot {\textsf {OPT}}(I) \le (2 - \frac {1}{m} + 3\varepsilon )\cdot {\textsf {OPT}}(I)\), using similar arguments as in the previous case and m ≥ 2. □
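For completeness, the chain of inequalities in this second case can be spelled out as follows. The derivation uses only facts stated in the proof: \(\gamma \le \frac 14\varepsilon \), \(\mu _{k} \le {\textsf {OPT}}(I)\), \(1 \le {\textsf {OPT}}(I)\), and m ≥ 2.

\[\mu _{k} + \frac {1}{2} + 2\varepsilon + 4\gamma \;\le \; \mu _{k} + \frac {1}{2} + 3\varepsilon \;\le \; \left (1 + \frac {1}{2} + 3\varepsilon \right )\cdot {\textsf {OPT}}(I) \;\le \; \left (2 - \frac {1}{m} + 3\varepsilon \right )\cdot {\textsf {OPT}}(I).\]

The first step uses \(4\gamma \le \varepsilon \), the second replaces \(\mu _{k}\) by \({\textsf {OPT}}(I)\) and bounds the constants \(\frac {1}{2}\) and \(3\varepsilon \) using \(1 \le {\textsf {OPT}}(I)\), and the third uses \(\frac {1}{m} \le \frac {1}{2}\) for m ≥ 2.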

Finally, we have built up all the pieces for our main result for Vector Scheduling.

Theorem 9

There is a streaming \(\left (2-\frac {1}{m}+\varepsilon \right )\)-approximation algorithm for Vector Scheduling running in space \({\mathcal {O}}\left (\frac {1}{\varepsilon ^{2}} \cdot d^{2}\cdot m\cdot \log \frac {d}{\varepsilon }\right )\).

Proof

We process the stream by algorithm AggregateSmallJobs that outputs an input summary \(I_{\mathrm {R}}\) such that \({\textsf {OPT}}(I_{\mathrm {R}}) / {\textsf {OPT}}(I) \le 2 - \frac {1}{m} + \varepsilon \) by Lemma 7. Given summary \(I_{\mathrm {R}}\), we compute a (1 + ε)-approximation of \({\textsf {OPT}}(I_{\mathrm {R}})\) using the algorithm in [8], which requires time doubly exponential in d (recall that such a time cost is needed to get an approximation of the makespan). This gives a solution of makespan at most \(\left (2 - \frac {1}{m} + {\mathcal {O}}(\varepsilon )\right )\cdot {\textsf {OPT}}(I)\). The space bound follows from the analysis given above. □

It remains open whether or not algorithm AggregateSmallJobs with γ = Θ(ε) also gives a \((2-\frac {1}{m}+\varepsilon )\)-approximation, which would imply a better space bound of \({\mathcal {O}}(\frac {1}{\varepsilon }\cdot d^{2}\cdot m)\). The approximation guarantee of this approach cannot be improved, however, which we demonstrate by an example in the next section.

4.1 Tight Example for the Algorithm for Vector Scheduling

For any m ≥ 2, we present an instance I in d = m + 1 dimensions such that OPT(I) = 1, but \({\textsf {OPT}}(I_{\mathrm {R}}) \ge 2-\frac {1}{m}\), where \(I_{\mathrm {R}}\) is the instance created by algorithm AggregateSmallJobs described in Section 4.

Let γ be as in the algorithm and assume for simplicity that \(\frac {1}{\gamma }\) is an integer. First, m big jobs with vectors \(\mathbf {v^{1}}, \dots , \mathbf {v^{m}}\) arrive, where \(\mathbf {v^{i}}\) is a vector with dimensions i and m + 1 equal to 1 and with zeros in the other dimensions (that is, \(\mathbf {v^{i}_{\mathit {i}}} = 1\) and \(\mathbf {v^{i}_{\mathit {m+1}}} = 1\), while \(\mathbf {v^{i}_{\mathit {k}}} = 0\) for \(k \notin \{i, m+1\}\)). Then, small jobs arrive in groups of d − 1 = m jobs and there are \((m-1)\cdot \frac {1}{\gamma }\) groups. Each group consists of items \((\gamma , 0, \dots , 0, 0), (0, \gamma , \dots , 0, 0), \dots , (0, 0, \dots , \gamma , 0)\), i.e., for each \(i = 1, \dots , d-1\), it contains one item with value γ in coordinate i and with zeros in other dimensions. The groups arrive one by one, with an arbitrary ordering inside the group. Note, however, that these jobs with \(\ell _{\infty }\) norm equal to γ become small for the algorithm only once the first job from the last group arrives, as they are compared to the total load in each dimension, which increases gradually. When they become small, the algorithm will combine each group into one container \((\gamma , \gamma , \dots , \gamma , 0)\), which can be achieved by processing the jobs in their arrival order and by having the last vector of the group larger by an infinitesimal amount (we do not take these infinitesimals into account in further calculations). Thus, \(I_{\mathrm {R}}\) consists of m big jobs and \((m-1)\cdot \frac {1}{\gamma }\) containers \((\gamma , \gamma , \dots , \gamma , 0)\).

Observe that OPT(I) = 1, since in the optimal solution, each machine i is assigned big job \(\mathbf {v^{i}}\) and \(\frac {1}{\gamma }\) small jobs with γ in dimension k for each \(k \in \{1, \dots , d-1\} \setminus \{i\}\). Thus the load equals one on any machine and dimension.

We claim that \({\textsf {OPT}}(I_{\mathrm {R}}) \ge 2-\frac {1}{m}\). Indeed, in a solution with makespan below 2, only one big job can be assigned on one machine, as all of them have value one in dimension m + 1, so each machine contains one big job. Observe that some machine gets at least \(\frac {m-1}{m}\cdot \frac {1}{\gamma }\) containers and thus, it has load of at least \(2-\frac {1}{m}\) in one of the d − 1 first dimensions, which shows the claim.

Note that for this instance to show ratio \(2-\frac {1}{m}\) it suffices that the algorithm creates \((m-1)\cdot \frac {1}{\gamma }\) containers \((\gamma , \gamma , \dots , \gamma , 0)\). This can be enforced for various greedy algorithms used for packing the small jobs into containers. We conclude that we need a different approach for input summarization to get a ratio below \(2-\frac {1}{m}\).
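The tight example can be checked numerically for small parameters. The following is a minimal sketch, not part of the original construction's presentation: the function names and the parameter `inv_gamma` (standing for the integer \(\frac {1}{\gamma }\)) are hypothetical, and exact rational arithmetic is used to avoid rounding issues. It evaluates the schedule for I described above and the pigeonhole bound for \(I_{\mathrm {R}}\).

```python
from fractions import Fraction


def tight_instance(m, inv_gamma):
    """Big jobs and containers of the summary I_R in d = m + 1 dimensions."""
    d = m + 1
    gamma = Fraction(1, inv_gamma)
    big = []
    for i in range(m):
        v = [Fraction(0)] * d
        v[i] = Fraction(1)   # dimension i
        v[m] = Fraction(1)   # last dimension (m + 1 in the text)
        big.append(tuple(v))
    # One container (gamma, ..., gamma, 0) per group of small jobs.
    container = tuple([gamma] * m + [Fraction(0)])
    containers = [container] * ((m - 1) * inv_gamma)
    return big, containers, gamma


def schedule_makespan_for_I(m, inv_gamma):
    """Makespan of the schedule for I described in the text: machine i gets
    big job v^i and 1/gamma small jobs for every dimension k != i."""
    big, _, gamma = tight_instance(m, inv_gamma)
    loads = [list(big[i]) for i in range(m)]
    for i in range(m):
        for k in range(m):
            if k != i:
                loads[i][k] += inv_gamma * gamma
    return max(max(row) for row in loads)


def lower_bound_for_I_R(m, inv_gamma):
    """Pigeonhole bound: either two big jobs share a machine (makespan >= 2),
    or some machine holds one big job and at least ceil(#containers / m)
    containers."""
    _, containers, gamma = tight_instance(m, inv_gamma)
    most = -(-len(containers) // m)  # ceiling division
    return min(Fraction(2), 1 + most * gamma)
```

For instance, with m = 3 and \(\frac {1}{\gamma } = 4\), the schedule for I has makespan 1, while the bound for \(I_{\mathrm {R}}\) evaluates to \(\frac {7}{4} \ge 2 - \frac {1}{3}\).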

4.2 Rounding Algorithms for Vector Scheduling in a Constant Dimension

Makespan Scheduling

We start by outlining a simple streaming algorithm for d = 1 based on rounding. Here, each job j on input is characterized by its processing time \(p_{j}\) only. The algorithm uses the size of the largest job seen so far, denoted \(p_{\max \limits }\), as a lower bound on the optimum makespan. This makes the rounding procedure (and hence, the input summary) oblivious of m, the number of machines, which is in contrast with the algorithm in Section 4 that uses just the sum of job sizes divided by m as the lower bound.

The rounding works as follows: Let q be an integer such that \(p_{\max \limits }\in ((1+\varepsilon )^{q}, (1+\varepsilon )^{q+1}]\), and let \(k = \lceil \log _{1+\varepsilon } \frac {1}{\varepsilon }\rceil = {\mathcal {O}}(\frac {1}{\varepsilon } \log \frac {1}{\varepsilon })\). A job is big if its size exceeds \((1+\varepsilon )^{q-k}\); note that any big job is larger than \(\varepsilon \cdot p_{\max \limits } / (1+\varepsilon )^{2}\). All other jobs are small and have size less than \(\varepsilon \cdot p_{\max \limits }\). The algorithm maintains one variable s for the total size of all small jobs and variables \(L_{i}\), \(i=q-k, \dots , q\), for the number of big jobs with size in \(((1+\varepsilon )^{i}, (1+\varepsilon )^{i+1}]\) (note that this interval is not scaled by \(p_{\max \limits }\), i.e., increasing \(p_{\max \limits }\) slightly does not move the intervals).

Maintaining these variables when a new job arrives can be done in a straightforward way. In particular, when an increase of \(p_{\max \limits }\) causes q to increase (by 1 or more, as it is integral), we discard all variables \(L_{i}\) that do not correspond to big jobs any more, and account for previously big jobs that are now small in variable s. However, as the size of these jobs was rounded to a power of 1 + ε, variable s can differ from the exact total size of small jobs by a factor of at most 1 + ε.

The created input summary, consisting of \({\mathcal {O}}(\frac {1}{\varepsilon } \log \frac {1}{\varepsilon })\) variables \(L_{i}\) and variable s, preserves the optimal value up to a factor of \(1+{\mathcal {O}}(\varepsilon )\). This follows, since big jobs are stored with size rounded up to the nearest power of 1 + ε, and, although we just know the approximate total size of small jobs, they can be taken into account similarly as when calculating a bound on the number of bins in our algorithm for Bin Packing.
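The maintenance of the summary for d = 1 can be sketched as follows. This is an illustrative sketch under stated assumptions rather than a reference implementation: the class name `MakespanSummary` and its fields are hypothetical, job sizes are assumed to be positive, and bucket indices are computed with floating-point logarithms, so a size lying exactly on a power of 1 + ε may land in a neighbouring bucket.

```python
import math
from collections import defaultdict


class MakespanSummary:
    """One-pass summary for makespan scheduling (d = 1) based on rounding."""

    def __init__(self, eps):
        self.eps = eps
        self.k = math.ceil(math.log(1 / eps, 1 + eps))
        self.p_max = 0.0
        self.q = None              # p_max lies in ((1+eps)^q, (1+eps)^(q+1)]
        self.s = 0.0               # (approximate) total size of small jobs
        self.L = defaultdict(int)  # L[i]: number of big jobs in bucket i

    def _bucket(self, p):
        # Integer i with p in ((1+eps)^i, (1+eps)^(i+1)], up to float rounding.
        return math.ceil(math.log(p, 1 + self.eps)) - 1

    def add(self, p):
        if p > self.p_max:
            self.p_max = p
            self.q = self._bucket(p)
            # Buckets below q - k no longer hold big jobs: move their jobs
            # into s, with sizes rounded up to the next power of 1 + eps.
            for i in [i for i in self.L if i < self.q - self.k]:
                self.s += self.L.pop(i) * (1 + self.eps) ** (i + 1)
        i = self._bucket(p)
        if i >= self.q - self.k:   # size exceeds (1+eps)^(q-k): big job
            self.L[i] += 1
        else:                      # small job: only its size is accumulated
            self.s += p
```

At any time the summary holds at most k + 1 counters \(L_{i}\) plus the single value s, matching the \({\mathcal {O}}(\frac {1}{\varepsilon } \log \frac {1}{\varepsilon })\) bound on the number of variables.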

Vector Scheduling

We describe the rounding introduced by Bansal et al. [8], which we can adjust into a streaming (1 + ε)-approximation for Vector Scheduling in a constant dimension. The downside of this approach is that it requires memory exceeding \(\left (\frac {2}{\varepsilon }\right )^{d}\), which becomes infeasible even for ε = 1 and d being a relatively small constant. Moreover, such an amount of memory may be needed also in the case of a small number of machines.

We first use the following lemma by Chekuri and Khanna [11], where \(\delta = \frac {\varepsilon }{d}\):

Lemma 10 (Lemma 2.1 in [11])

Let I be an instance of Vector Scheduling. Let \(I^{\prime }\) be a modified instance where we replace each vector v by vector \(\mathbf {v^{\prime }}\) as follows: For each 1 ≤ i ≤ d, if \(\mathbf {v}_{i} > \delta \|\mathbf {v}\|_{\infty }\), then \(\mathbf {v^{\prime }}_{i} = \mathbf {v}_{i}\); otherwise, \(\mathbf {v^{\prime }}_{i} = 0\). Let \(\mathcal {S}^{\prime }\) be any solution for \(I^{\prime }\). Then, if we replace each vector \(\mathbf {v^{\prime }}\) in \(\mathcal {S}^{\prime }\) by its counterpart in I, we get a solution of I with makespan at most 1 + ε times the makespan of \(\mathcal {S}^{\prime }\).
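The transformation of Lemma 10 acts on each vector independently, so it can be applied on the fly as vectors arrive. A minimal sketch, assuming nonnegative coordinates and using the hypothetical function name `sparsify`:

```python
def sparsify(v, eps):
    """Zero out every coordinate that is at most delta * ||v||_inf,
    where delta = eps / d, as in the transformation of Lemma 10."""
    d = len(v)
    delta = eps / d
    norm = max(v)  # l_infinity norm, assuming nonnegative entries
    return [x if x > delta * norm else 0.0 for x in v]
```

After this step, every nonzero coordinate of a vector is larger than a δ fraction of the vector's \(\ell _{\infty }\) norm, which is what bounds the number of types in the rounding described below.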

In the following, we assume that the algorithm receives vectors from instance \(I^{\prime }\), created as in Lemma 10. Let \(p_{\max \limits }\) be the maximum \(\ell _{\infty }\) norm over all vectors that arrived so far; we use it as a lower bound on OPT. We again do not use the total volume in each dimension as a lower bound, which makes the input summarization oblivious of m. A job, characterized by vector v, is said to be big if \(\|\mathbf {v}\|_{\infty } > \delta \cdot p_{\max \limits }\); otherwise, v is small.

We round all values in big jobs to the powers of 1 + ε. By Lemma 10, we have that either \(\mathbf {v}_{k} > \delta ^{2}\cdot p_{\max \limits }\) or \(\mathbf {v}_{k} = 0\) for any big v and dimension k, thus there are \(\left \lceil \log _{1+\varepsilon } \frac {1}{\delta ^{2}}\right \rceil ^{d} = {\mathcal {O}}\left (\left (\frac {2}{\varepsilon } \log \frac {d}{\varepsilon }\right )^{d}\right )\) types of big jobs at any time. We have one variable \(L_{\mathbf {t}}\) counting the number of jobs for each big type t, where t is an integer vector consisting of the exponents, i.e., if v is a big vector of type t, then \(\mathbf {v}_{i}\in \left ((1+\varepsilon )^{\mathbf {t}_{i}}, (1+\varepsilon )^{\mathbf {t}_{i}+1}\right ]\) (we set \(\mathbf {t}_{i} = -\infty \) if \(\mathbf {v}_{i} = 0\)). As in the 1-dimensional case, big types change over time, when \(p_{\max \limits }\) (sufficiently) increases.

Note that small jobs cannot be rounded to powers of 1 + ε directly. Instead, they are rounded relative to their \(\ell _{\infty }\) norms. More precisely, consider a small vector v and let \(\gamma = \|\mathbf {v}\|_{\infty }\). For each dimension k, if \(\mathbf {v}_{k} > 0\), let \(\mathbf {t}_{k} \ge 0\) be the largest integer such that \(\mathbf {v}_{k} \le \gamma \cdot (1+\varepsilon )^{-\mathbf {t}_{k}}\), and if \(\mathbf {v}_{k} = 0\), we set \(\mathbf {t}_{k}\) to \(\infty \). Then \((\mathbf {t}_{1}, \dots , \mathbf {t}_{d})\) is the type of small vector v. Observe that small types do not change over time and there are at most \({\mathcal {O}}\left (\left (\frac {1}{\varepsilon } \log \frac {d}{\varepsilon }\right )^{d}\right )\) of them. For each small type t, we have one variable \(s_{\mathbf {t}}\) counting the sum of the \(\ell _{\infty }\) norms of all small jobs of that type.
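The type of a small vector can be computed directly from the definition. The sketch below is illustrative only: the names `small_type` and `add_small` are hypothetical, coordinates are assumed nonnegative with at least one positive entry, and the exponent is obtained from a floating-point logarithm, so ratios lying exactly on a power of 1 + ε may be rounded to a neighbouring exponent.

```python
import math
from collections import defaultdict


def small_type(v, eps):
    """Type of a small vector: for each coordinate, the largest integer t >= 0
    with v_k <= ||v||_inf * (1 + eps)^(-t), or infinity if v_k = 0."""
    gamma = max(v)  # l_infinity norm of v
    t = []
    for x in v:
        if x == 0:
            t.append(math.inf)
        else:
            t.append(math.floor(math.log(gamma / x, 1 + eps)))
    return tuple(t)


def add_small(s, v, eps):
    """Account for small vector v in the per-type sums of l_infinity norms."""
    s[small_type(v, eps)] += max(v)


s = defaultdict(float)
add_small(s, (4.0, 1.5, 0.0), 1.0)
add_small(s, (2.0, 0.75, 0.0), 1.0)  # same type, since types are scale-free
```

Because the type depends only on the ratios of coordinates to the \(\ell _{\infty }\) norm, two vectors that differ by a scaling factor share a type, which is why small types do not change as \(p_{\max \limits }\) grows.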

The variables can be maintained in an online fashion. Namely, when \(p_{\max \limits }\) increases, the types for previously big jobs that are now small are discarded, while the jobs that become small are accounted for in small types. For each such former big type t, we compute the corresponding small type as follows: Let \(\delta = \|\mathbf {t}\|_{\infty }\) be the maximum value in t (which is not \(-\infty \)). The corresponding small type \(\boldsymbol {\hat {t}}\) has \(\boldsymbol {\hat {t}}_{i} = \delta - \mathbf {t}_{i}\) if \(\mathbf {t}_{i} \neq -\infty \), and \(\boldsymbol {\hat {t}}_{i} = \infty \) otherwise. Then we increase \(s_{\boldsymbol {\hat {t}}}\) by \(L_{\mathbf {t}} \cdot (1+\varepsilon )^{\delta +1}\).
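The conversion of a former big type into a small type can be sketched as below. The function name `demote_big_type` is hypothetical, and the variable `top` plays the role of the maximum exponent in t (written δ in the paragraph above, not to be confused with \(\delta = \frac {\varepsilon }{d}\) from Lemma 10).

```python
import math


def demote_big_type(t, count, eps):
    """Given a former big type t (tuple of exponents, -inf for zero
    coordinates) with `count` jobs, return the corresponding small type
    and the amount by which its sum of l_infinity norms is increased."""
    top = max(x for x in t if x != -math.inf)
    t_hat = tuple(math.inf if x == -math.inf else top - x for x in t)
    # Each job's norm is rounded up to the upper end of its bucket.
    increment = count * (1 + eps) ** (top + 1)
    return t_hat, increment
```

For example, with ε = 1, a big type (3, 1, −∞) holding two jobs maps to the small type (0, 2, ∞), and the corresponding sum grows by 2 · 2⁴ = 32.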

There are two types of errors introduced due to maintaining variables in the streaming scenario and not offline, where we know the final value of \(p_{\max \limits }\) in advance. First, it may happen that a vector v that was big upon its arrival becomes small, and the small type of v is different than the small type computed for the former big type of v (i.e., the small type of v with values rounded to powers of 1 + ε). Second, the sum of \(\ell _{\infty }\) norms of small vectors of a small type t is in \((s_{\mathbf {t}}/(1+\varepsilon ), s_{\mathbf {t}}]\), and moreover, the error in some dimension i with \(\mathbf {t}_{i} > 0\) (i.e., not the largest one for this type) may be of factor up to \((1+\varepsilon )^{2}\), since we may round such a dimension two times for some jobs. Note, however, that by giving up a factor of \(1+{\mathcal {O}}(\varepsilon )\), we may disregard both issues.

The offline algorithm of Bansal et al. [8] implies that such an input summary, consisting of variables for both small and big types, is sufficient for computing a (1 + ε)-approximation.

5 Conclusions and Open Problems