Abstract

Markov’s inequality is a certain estimate for the norm of the derivative of a polynomial in terms of the degree and the norm of this polynomial. It has many interesting applications in approximation theory, constructive function theory and in analysis (for instance, to Sobolev inequalities or Whitney-type extension problems). One of the purposes of this paper is to give a solution to an old problem, studied among others by Baran and Pleśniak, and concerning the invariance of Markov’s inequality under polynomial mappings (polynomial images). We also address the issue of preserving Markov’s inequality when taking polynomial preimages. Lastly, we give a sufficient condition for a subset of a Markov set to be a Markov set.


1 Introduction

Throughout the paper, \(\mathbb {K}=\mathbb {R}\) or \(\mathbb {C}\) and \(\mathbb {R}^N\) will be treated as a subspace of \(\mathbb {C}^N\). If \(\emptyset \ne A\subset \mathbb {C}^N\) and \(f:A\longrightarrow \mathbb {C}^{N'}\), then we put \({\Vert f\Vert }_{A} :=\sup _{z\in A} |f(z)|\), where \(|\,\;|\) denotes the maximum norm. Moreover, \(\mathbb {N}:=\{1,2,3,\ldots \}\) and \(\mathbb {N}_0:=\{0\}\cup \mathbb {N}\). We will also use the following notation: for each set \(\emptyset \ne A\subset \mathbb {C}^N\) and each \(\lambda >0\), we put

$$\begin{aligned} A_{\lambda }:=\left\{ z\in \mathbb {C}^N:\, \mathrm{dist}(z,\,A)<\lambda \right\} ,\qquad A_{(\lambda )}:=\left\{ z\in \mathbb {C}^N:\, \mathrm{dist}(z,\,A)\le \lambda \right\} . \end{aligned}$$

One of the most important polynomial inequalities is Markov’s inequality (cf. [42]).

Theorem 1.1

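(Markov) If P is a polynomial of one variable, then

$$\begin{aligned} {\Vert P'\Vert }_{[-1,\,1]}\le (\deg P)^2\, {\Vert P\Vert }_{[-1,\,1]}. \end{aligned}$$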

Moreover, this inequality is sharp: for the Chebyshev polynomials \(T_n\) (\(n\in \mathbb {N}_0\)), we have \(T_n'(1)=n^2\) and \({\Vert T_n\Vert }_{[-1,\,1]}=1\).

Recall that \(T_n(x)=\cos (n\arccos x)\) for \(x\in [-1,\,1]\).

In fact, the above inequality for quadratic polynomials was discovered by the celebrated chemist Mendeleev. Markov’s inequality and its various generalizations have found many applications in approximation theory, analysis and constructive function theory, but also in other branches of science (for example, in physics or chemistry). There is now such an extensive literature on Markov-type inequalities that a complete bibliography is beyond the scope of this paper. Let us mention only those works which are most closely related to our paper (with emphasis on generalizations of Markov’s inequality to sets admitting cusps), for example [1–7, 11–31, 35, 36, 39, 43, 44, 46, 48, 50, 51, 57, 58]. We stress that the present paper owes a great debt to the work of Pawłucki and Pleśniak, who in [43] laid the foundations of the theory of polynomial inequalities on “tame” (for example, semialgebraic) sets with cusps.

From the point of view of applications, it is important that the constant \((\deg P)^2\) in Markov’s inequality does not grow too fast (that is, it grows only polynomially) with respect to the degree of the polynomial P. This is why the concept of a Markov set is so widely investigated.
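As a quick numerical sanity check of Theorem 1.1 and of the extremal role of the Chebyshev polynomials, the Python sketch below evaluates \(T_n'(1)\) and \({\Vert T_n\Vert }_{[-1,\,1]}\), and estimates the quotient \({\Vert P'\Vert }/\big ((\deg P)^2{\Vert P\Vert }\big )\) on \([-1,\,1]\). It is our own illustration using NumPy's `numpy.polynomial` module; the helper names `chebyshev_data` and `markov_quotient` are hypothetical. Sup norms are approximated on a finite grid (which contains both endpoints), so this illustrates the inequality rather than proving anything.

```python
import numpy as np
from numpy.polynomial import Polynomial
from numpy.polynomial import chebyshev as C

GRID = np.linspace(-1.0, 1.0, 20001)  # includes the endpoints -1 and 1

def chebyshev_data(n):
    """Return (T_n'(1), grid estimate of the sup norm of T_n on [-1, 1])."""
    coeffs = np.zeros(n + 1)
    coeffs[n] = 1.0  # T_n written in the Chebyshev basis
    deriv_at_1 = C.chebval(1.0, C.chebder(coeffs))
    sup_norm = np.abs(C.chebval(GRID, coeffs)).max()
    return float(deriv_at_1), float(sup_norm)

def markov_quotient(p):
    """Grid estimate of ||p'|| / ((deg p)^2 ||p||) on [-1, 1]; Theorem 1.1 says <= 1.

    `p` is any numpy.polynomial object of degree >= 1 (Polynomial, Chebyshev, ...).
    """
    num = np.abs(p.deriv()(GRID)).max()
    den = p.degree() ** 2 * np.abs(p(GRID)).max()
    return float(num / den)

# Usage: T_7 attains equality, random polynomials of degree 6 stay below 1.
rng = np.random.default_rng(1)
worst_random = max(markov_quotient(Polynomial(rng.standard_normal(7))) for _ in range(200))
print(chebyshev_data(7), markov_quotient(C.Chebyshev.basis(7)), worst_random)
```

Equality for \(T_n\) occurs at the endpoints \(\pm 1\), which is why the grid is chosen to contain them.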

Definition 1.2

We say that a compact set \(\emptyset \ne E\subset \mathbb {C}^N\) satisfies Markov’s inequality (or: is a Markov set) if there exist \(\varepsilon , C>0\) such that, for each polynomial \(P\in \mathbb {C}[z_1,\ldots ,z_N]\) and each \(\alpha =(\alpha _1,\ldots ,\alpha _N)\in \mathbb {N}_0^N\),

$$\begin{aligned} {\Vert D^{\alpha }P\Vert }_{E}\le \big (C(\deg P)^{\varepsilon }\big )^{|\alpha |}\, {\Vert P\Vert }_{E}, \end{aligned}$$

where \(D^{\alpha }P:=\displaystyle \frac{\partial ^{|\alpha |}P}{\partial z_1^{\alpha _1}\ldots \partial z_N^{\alpha _N}}\) and \(|\alpha |:=\alpha _1+\cdots +\alpha _N\).

Clearly, by iteration, it is enough to consider in the above definition multi–indices \(\alpha \) with \(|\alpha |=1\). We begin by giving some examples.

-

Obviously, if \(\emptyset \ne E_1,\ldots , E_p\subset \mathbb {C}^N\) are compact sets satisfying Markov’s inequality, then the union \(E_1\cup \cdots \cup E_p\) satisfies Markov’s inequality as well. In general, this is no longer so for the intersection \(E_1\cap \cdots \cap E_p\).

-

It is straightforward to show that the Cartesian product of Markov sets is a Markov set. More precisely, if \(\emptyset \ne E_j\subset \mathbb {C}^{N_j}\) (\(N_j\in \mathbb {N}\)) is a compact set satisfying Definition 1.2 with \(\varepsilon _j, C_j>0\) (\(j=1,\ldots , p\)), then \(E_1\times \cdots \times E_p\subset \mathbb {C}^{N_1+\cdots +N_p}\) satisfies this definition with \(\varepsilon :=\max \{\varepsilon _1,\ldots ,\varepsilon _p\}\) and \(C:=\max \{C_1,\ldots ,C_p\}\).

-

In Sect. 5, we give a sufficient condition for a subset of a Markov set to be a Markov set—see Theorem 5.1 and Corollary 5.2.

-

Let \(\emptyset \ne E\subset \mathbb {C}\) be a compact set such that, for each connected component K of E, we have \(\text {diam}(K)\ge \eta \) with some \(\eta >0\) being independent of K. Then E is a Markov set—see Lemma 3.1 in [56] and Sect. 3.

-

By Theorem 3.1 in [43], each compact UPC set satisfies Markov’s inequality. Recall that a set \(E\subset \mathbb {R}^N\) is UPC (uniformly polynomially cuspidal) if there exist \(\upsilon ,\, \theta > 0\) and \(d\in \mathbb {N}\) such that, for each \(x \in \overline{E}\), we can choose a polynomial map \(\displaystyle S_x : \mathbb {R} \longrightarrow \mathbb {R}^N\) with \(\deg S_x\le d\) satisfying the following conditions:

-

\(S_x (0) = x\),

-

\(\displaystyle \mathrm{dist} \big (S_x (t),\, \mathbb {R}^N {\setminus } E\big ) \ge \theta t^{\upsilon }\) for each \(t \in [0,\, 1]\).

Note that a UPC set is in particular fat, that is, \(\overline{E} = \overline{\mathrm{Int} E}\). In [43, 44, 46], some large classes of UPC sets (and hence of Markov sets) are given. These classes include, for example, all compact, fat and semialgebraic subsets of \(\mathbb {R}^N\) (see Sect. 2 for the definition).

-

The following result is due to Baran and Pleśniak (cf. [3]).

Theorem 1.3

(Baran, Pleśniak) Let \(E\subset \mathbb {R}^N\) be a compact UPC set. Suppose that \(h:\mathbb {R}^N\longrightarrow \mathbb {R}^N\) is a polynomial map such that \(\mathrm{{Jac}}\,h(\zeta )\ne 0\) for each \(\zeta \in \mathrm{{Int}}E\). Then h(E) satisfies Markov’s inequality.

Since each compact UPC set satisfies Markov’s inequality, the Baran–Pleśniak theorem says that, under a certain assumption on a Markov set \(E\subset \mathbb {R}^N\) and under a certain assumption on a polynomial map \(h:\mathbb {R}^N\longrightarrow \mathbb {R}^N\), the image h(E) also satisfies Markov’s inequality.

Among other things, our aim is to show that in Theorem 1.3:

-

The very strong UPC assumption on the Markov set E is superfluous.

-

The assumption that \(\mathrm{{Jac}}\,h(\zeta )\ne 0\) for each \(\zeta \in \mathrm{{Int}}E\) can be replaced by the much weaker assumption that \(h:\mathbb {R}^N\longrightarrow \mathbb {R}^{N'}\) and

$$\begin{aligned} \mathrm{{rank}}\,h:= \max \left\{ \mathrm{{rank}}\,d_{\zeta } h:\, \zeta \in \mathbb {R}^N\right\} =N'. \end{aligned}$$

Moreover, this condition on the polynomial map h cannot be weakened, since it is in fact necessary (see Lemma 2.3).
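The quantity \(\mathrm{rank}\,h\) is easy to estimate numerically: the set where \(\mathrm{rank}\,d_{\zeta }h\) equals its maximum is the complement of a proper algebraic subset (given by the vanishing of minors of the Jacobian matrix), so sampling \(d_{\zeta }h\) at a few random points recovers \(\mathrm{rank}\,h\) with probability one. The Python sketch below assumes the Jacobian is supplied by hand; the maps \(h_1(x,y)=(x+y,(x+y)^2)\) and \(h_2(x,y)=(x,xy)\) and the helper `generic_rank` are hypothetical examples of our own choosing. By Lemma 2.3, \(h_1\) (rank 1) maps every compact set onto a non-Markov set, whereas Theorem 1.4 applies to \(h_2\) (rank 2).

```python
import numpy as np

def generic_rank(jacobian, n_vars, trials=5, seed=0):
    """Estimate rank h = max over zeta of rank d_zeta h by sampling random points.

    The maximal rank is attained off a proper algebraic subset, so random
    points hit it with probability one (up to matrix_rank's tolerance).
    """
    rng = np.random.default_rng(seed)
    return max(int(np.linalg.matrix_rank(jacobian(rng.standard_normal(n_vars))))
               for _ in range(trials))

def jac_h1(z):
    """Jacobian of the hypothetical map h1(x, y) = (x + y, (x + y)**2)."""
    x, y = z
    s = x + y
    return np.array([[1.0, 1.0], [2.0 * s, 2.0 * s]])

def jac_h2(z):
    """Jacobian of the hypothetical map h2(x, y) = (x, x * y)."""
    x, y = z
    return np.array([[1.0, 0.0], [y, x]])

print(generic_rank(jac_h1, 2), generic_rank(jac_h2, 2))  # prints: 1 2
```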

More precisely, we will prove the following result in Sect. 2.

Theorem 1.4

Suppose that \(\emptyset \ne E\subset \mathbb {K}^N\) is a compact set satisfying Markov’s inequality and \(h:\mathbb {K}^N\longrightarrow \mathbb {K}^{N'}\) is a polynomial map such that

$$\begin{aligned} \mathrm{{rank}}\,h=N' \end{aligned}$$

(\(N,N'\in \mathbb {N}\)). Then h(E) also satisfies Markov’s inequality.

It is worth noting that there is a holomorphic version of Theorem 1.3 in [3], which reads as follows. Suppose that \( E\subset \mathbb {C}^N\) is a compact, polynomially convex set satisfying Markov’s inequality. If \(h:U \longrightarrow \mathbb {C}^N\) is a holomorphic map in a neighbourhood U of E such that h(E) is nonpluripolar and \(\mathrm{{Jac}}\,h(\zeta )\ne 0\) for each \(\zeta \in E\), then h(E) also satisfies Markov’s inequality. (The notion of a polynomially convex set and the notion of a nonpluripolar set are defined in Sect. 3.)

In connection with Theorem 1.4, the following question naturally arises.

Question 1.5

Suppose that \(\emptyset \ne E\subset \mathbb {K}^{N'}\) is a compact set satisfying Markov’s inequality and \(g:\mathbb {K}^N\longrightarrow \mathbb {K}^{N'}\) is a polynomial map (\(N,N'\in \mathbb {N}\)). Under what conditions is it true that \(g^{-1}(E)\) satisfies Markov’s inequality?

We do not know the precise answer. However, we address this issue in Sects. 3 and 4. In particular, we give some specific examples illustrating the variety of situations encountered when exploring this problem. Finally, we give a result (Theorem 3.8) which provides a partial answer to Question 1.5.

2 A proof of Theorem 1.4

We will need the notion of a semialgebraic set and the notion of a semialgebraic map.

Definition 2.1

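A subset of \(\mathbb {R}^N\) is said to be semialgebraic if it is a finite union of sets of the form

$$\begin{aligned} \left\{ x\in \mathbb {R}^N:\, \xi (x)=0,\ \xi _1(x)>0,\ldots ,\xi _q(x)>0\right\} , \end{aligned}$$

where \(\xi ,\, \xi _1,\, \dots ,\, \xi _q \in \mathbb {R}[x_1, \ldots ,x_N]\) (cf. [9, 59]).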

Definition 2.2

A map \(f: A \longrightarrow \mathbb {R}^{N'}\), where \(A\subset \mathbb {R}^N\), is said to be semialgebraic if its graph is a semialgebraic subset of \(\mathbb {R}^{N+N'}\).

All semialgebraic sets constitute the simplest polynomially bounded o-minimal structure (see [59, 60] for the definition and properties of o-minimal structures). However, the knowledge of o-minimal structures is not necessary to follow the present paper. Whenever we say “a set (a map) definable in a polynomially bounded o-minimal structure”, the reader who is not familiar with the basic notions of o-minimality can just think of a semialgebraic set (map).

Before going to the proof of Theorem 1.4, it is worth noting that the assumption that \(\mathrm{{rank}}\,h=N'\) is necessary in this theorem, as is seen by the following lemma.

Lemma 2.3

Suppose that \(h:\mathbb {K}^N\longrightarrow \mathbb {K}^{N'}\) is a polynomial map such that \(\mathrm{{rank}}\,h<N'\) (\(N,N'\in \mathbb {N}\)). Then for each compact set \(\emptyset \ne E\subset \mathbb {K}^N\) the image h(E) does not satisfy Markov’s inequality.

Proof

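Since \(\mathrm{{rank}}\,h<N'\), every point of \(\mathbb {K}^N\) is a critical point of h. Hence, by Sard’s theorem, the set \(h(\mathbb {K}^N)\) has Lebesgue measure zero in \(\mathbb {K}^{N'}\).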

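Case 1: \(\mathbb {K}=\mathbb {R}\). By the Tarski–Seidenberg theorem (cf. [8, 9, 40]), the set \(h(\mathbb {R}^N)\) is semialgebraic. Therefore \(h(\mathbb {R}^N)=\bigcup _{j=1}^sH_j\), where \(s\in \mathbb {N}\) and

$$\begin{aligned} H_j=\left\{ w\in \mathbb {R}^{N'}:\, P_j(w)=0,\ P_{i,j}(w)>0 \text{ for } i=1,\ldots ,q_j\right\} \end{aligned}$$

with some \(q_j\in \mathbb {N}_0\) and \(P_j, P_{i,j}\in \mathbb {R}[w_1, \ldots ,w_{N'}]\). We can clearly assume that each \(H_j\) is nonempty. Put \(P:=P_1\cdot \ldots \cdot P_s\). Note that \(P\not \equiv 0\) Footnote 1 and \(P|_{h(\mathbb {R}^N)}\equiv 0\). Take a point \(a\in h(E)\). For each \(w\in \mathbb {R}^{N'}\), we have

$$\begin{aligned} P(w)=\sum _{\alpha \in \mathbb {N}_0^{N'}}\frac{D^{\alpha }P(a)}{\alpha !}(w-a)^{\alpha }, \end{aligned}$$

and therefore \(D^{\alpha }P(a)\ne 0\) for some \(\alpha \in \mathbb {N}_0^{N'}\). Since \({\Vert P\Vert }_{h(E)}=0\), whereas \({\Vert D^{\alpha }P\Vert }_{h(E)}\ge |D^{\alpha }P(a)|>0\), the inequality of Definition 1.2 fails for P and this \(\alpha \), whatever \(\varepsilon , C>0\) are. It follows that h(E) does not satisfy Markov’s inequality.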

Case 2: \(\mathbb {K}=\mathbb {C}\). By Chevalley’s theorem, the set \(h(\mathbb {C}^N)\) is constructible (see [41, pp. 393–396] for the definition and details). Moreover, \(\overline{h(\mathbb {C}^N)}\ne \mathbb {C}^{N'}\) Footnote 2 and \(\overline{h(\mathbb {C}^N)}\) is a complex algebraic set (see [41, p. 394]), that is, the set of common zeros of some collection of complex polynomials. In particular, there exists \(P\in \mathbb {C}[w_1, \ldots ,w_{N'}]\) such that \(P\not \equiv 0\) and \(P|_{h(\mathbb {C}^N)}\equiv 0\). Arguing as in Case 1, we see that h(E) does not satisfy Markov’s inequality. Footnote 3 \(\square \)
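For a concrete rank-one map, the mechanism of Case 1 can be made completely explicit. Take the hypothetical map \(h_1(x,y)=(x+y,(x+y)^2)\) (our choice, for illustration only): its image is the parabola \(w_2=w_1^2\), so \(P(w)=w_2-w_1^2\) is a nonzero polynomial vanishing on \(h_1(\mathbb {R}^2)\), while \(\partial P/\partial w_2\equiv 1\). The Python sketch below samples the arbitrarily chosen compact set \(E=[0,\,1]^2\) and confirms that \({\Vert P\Vert }_{h_1(E)}=0\) while \({\Vert \partial P/\partial w_2\Vert }_{h_1(E)}=1\), so no \(\varepsilon , C>0\) can satisfy Definition 1.2.

```python
import numpy as np

def h1(x, y):
    """Hypothetical polynomial map R^2 -> R^2 of rank 1: (x + y, (x + y)**2)."""
    s = x + y
    return s, s**2

def P(w1, w2):
    """A nonzero polynomial vanishing on h1(R^2), the parabola w2 = w1**2."""
    return w2 - w1**2

def dP_dw2(w1, w2):
    """Partial derivative of P with respect to w2 (identically 1)."""
    return np.ones_like(w2)

# Sample the compact set E = [0, 1]^2 (an arbitrary choice) and push it forward.
xs, ys = np.meshgrid(np.linspace(0.0, 1.0, 101), np.linspace(0.0, 1.0, 101))
w1, w2 = h1(xs, ys)
sup_P = float(np.abs(P(w1, w2)).max())        # ||P|| on h1(E)
sup_dP = float(np.abs(dP_dw2(w1, w2)).max())  # ||dP/dw2|| on h1(E)
print(sup_P, sup_dP)
```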

We will try to keep the exposition as self-contained as possible. It should be stressed, however, that our proof of Theorem 1.4 is influenced by ideas from the original proof of Theorem 1.3 by Baran and Pleśniak.

Proof of Theorem 1.4. Clearly, it suffices to consider the case \(\mathbb {K}=\mathbb {C}\). Put

Take an open and bounded set \(I\subset \mathbb {C}^N\) such that \(E\subset \overline{I}\) and \(\chi ^{-1}(I)\) is semialgebraic (for example, a sufficiently large open polydisk).

Put \(A:=I{\setminus } T\), where

Since the set T is (complex) algebraic and nowhere dense (see [41, p. 158]), it follows that \(\chi ^{-1}(A)\subset \mathbb {R}^{2N}\) is open, semialgebraic and \(\overline{\chi ^{-1}(A)}=\chi ^{-1}(\overline{I})\). Consequently, by Corollary 6.6 in [43], \(\chi ^{-1}(A)\) is UPC. Therefore there exist \(\upsilon ,\, \theta > 0\) and \(d\in \mathbb {N}\) such that, for each \(x \in \overline{\chi ^{-1}(A)}\), we can choose a polynomial map \(\displaystyle S_x : \mathbb {R} \longrightarrow \mathbb {R}^{2N}\) satisfying the following conditions:

-

(i)

\(\deg S_x\le d\),

-

(ii)

\(S_x (0) = x\),

-

(iii)

\(\displaystyle \mathrm{dist} \big (S_x (t),\, \mathbb {R}^{2N} {\setminus } \chi ^{-1}(A)\big ) \ge \theta t^{\upsilon }\) for each \(t \in [0,\, 1]\).

By Lemma 3.1 in [44], the maps \(G_0,G_1,\ldots ,G_d: \overline{\chi ^{-1}(A)}\longrightarrow \mathbb {R}^{2N}\), defined by

are bounded. Thus there exists \(C_1>0\) such that, for each \(z\in \overline{A}\) and \(t \in [0,\,1]\),

where

(Use the fact that \(S_{\chi ^{-1}(z)}(0)=\chi ^{-1}(z)\).)

By [41, p. 243], there exist \(C_2,\kappa >0\) such that, for each \(\zeta \in \overline{I}\),

(Use the fact that \(T\subset \mathbb {C}^N{\setminus } A\) and consider two cases: \(T=\emptyset \) and \(T\ne \emptyset \).)

Take \(\varepsilon , C>0\) such that, for each polynomial \(P\in \mathbb {C}[z_1,\ldots ,z_N]\) and each \(\alpha \in \mathbb {N}_0^N\),

(see Definition 1.2). Put

where \(k:=\deg h\ge 1\).

Let \(w_1, \ldots , w_{N'}\) denote the variables in \(\mathbb {C}^{N'}\). We will show that, for each polynomial \(Q\in \mathbb {C}[w_1,\ldots ,w_{N'}]\) with \(\deg Q\le n \) (\(n\in \mathbb {N}\)), each \(l\in \{1,\ldots , N'\}\) and each \(a\in E\),

Obviously, the above estimate proves the required assertion that h(E) satisfies Markov’s inequality.

Fix Q, l and a as above. First, we will show that, for each \(\zeta \in \mathbb {C}^N\) and each \(j\in \{1,\ldots , N\}\),

By Taylor’s formula and (4),

which completes the proof of (6).

We will show moreover that, for each \(\zeta \in A\),

To this end, take the integers \(j_1=j_1(\zeta ), \ldots , j_{N'}=j_{N'}(\zeta )\) such that \(1\le j_1< \cdots < j_{N'}\le N\) and

(see (3)). Consider the system of equations:

Now it is enough to apply Cramer’s rule, Hadamard’s inequality Footnote 4 and the estimates (6) and (8).
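The two linear-algebra facts used in this step can be illustrated in isolation. Cramer’s rule writes each unknown of a square system \(Mx=b\) as \(\det M_i/\det M\), where \(M_i\) is M with its i-th column replaced by b, and Hadamard’s inequality bounds \(|\det M_i|\) by the product of the Euclidean norms of its columns; together they give \(|x_i|\le \mathrm{hadamard}(M_i)/|\det M|\). In the proof these facts are combined with the estimates (6) and (8); the generic Python sketch below (hypothetical helper names, NumPy, real matrices) does not reproduce the paper's specific system and only checks the resulting bound on a random \(4\times 4\) system.

```python
import numpy as np

def cramer_solve(M, b):
    """Solve M x = b via Cramer's rule: x_i = det(M_i) / det(M)."""
    M = np.asarray(M, dtype=float)
    b = np.asarray(b, dtype=float)
    det_M = np.linalg.det(M)
    x = np.empty(M.shape[1])
    for i in range(M.shape[1]):
        Mi = M.copy()
        Mi[:, i] = b  # replace the i-th column by the right-hand side
        x[i] = np.linalg.det(Mi) / det_M
    return x

def hadamard_bound(M):
    """Hadamard's inequality: |det M| <= product of the Euclidean column norms."""
    return float(np.prod(np.linalg.norm(np.asarray(M, dtype=float), axis=0)))

def cramer_hadamard_bounds(M, b):
    """Upper bounds |x_i| <= hadamard_bound(M_i) / |det M| for the solution of M x = b."""
    M = np.asarray(M, dtype=float)
    det_M = abs(np.linalg.det(M))
    bounds = np.empty(M.shape[1])
    for i in range(M.shape[1]):
        Mi = M.copy()
        Mi[:, i] = b
        bounds[i] = hadamard_bound(Mi) / det_M
    return bounds

rng = np.random.default_rng(0)
M = rng.standard_normal((4, 4))
b = rng.standard_normal(4)
x = cramer_solve(M, b)
print(x, cramer_hadamard_bounds(M, b))
```

The bound degenerates as \(|\det M|\rightarrow 0\), which is why a lower bound for the relevant determinant is needed in the proof.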

For each \(t\in [0,\,1]\), we have by (iii) and (2)

Combining this with (1) and (7), we get for each \(t\in (0,\,1]\) the following estimate:

Note that \(\displaystyle \frac{\partial Q}{\partial w_l}\circ h\circ P_a\) is the restriction to \(\mathbb {R}\) of a polynomial \(\Upsilon : \mathbb {C}\longrightarrow \mathbb {C}\) of degree \(\le dk(n-1)\). Put \(\delta :=n^{-\varepsilon }\) and

By Schur’s inequality,Footnote 5

Therefore either \(\displaystyle \left| \frac{\partial Q}{\partial w_l}(h(a)) \right| =0\), or \(\displaystyle \left| \frac{\partial Q}{\partial w_l}(h(a)) \right| >0\) and then

which establishes the estimate (5) and hence completes the proof of the theorem. \(\square \)

3 Markov’s inequality and polynomial preimages

In this section, we will look at Markov’s inequality from the point of view of polynomial preimages.

We begin with a brief discussion of another concept related to Markov’s inequality, called the HCP property. For a compact set \(\emptyset \ne E\subset \mathbb {C}^N\), the function

$$\begin{aligned} \Phi _{E}(z):=\sup \left\{ |P(z)|^{1/\deg P}:\, P\in \mathbb {C}[z_1,\ldots ,z_N],\ \deg P\ge 1,\ {\Vert P\Vert }_{E}\le 1\right\} \end{aligned}$$

(\(z\in \mathbb {C}^N\)) is called the Siciak extremal function (cf. [34, 37, 38, 49, 54, 55]). It is elementary to check that \(\Phi _{E}\ge 1 \) in \(\mathbb {C}^N\), \(\Phi _E\equiv 1\) in E and \(\Phi _E\le \Phi _K\) provided that \(\emptyset \ne K\subset E\) and K is compact. However, except for some very special cases, no explicit expression for \(\Phi _E\) is known.
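One of the very special cases where \(\Phi _E\) is explicit is \(E=[-1,\,1]\subset \mathbb {C}\), for which \(\Phi _E(z)=|z+\sqrt{z^2-1}|\) on the branch of modulus \(\ge 1\). Since \({\Vert T_n\Vert }_{[-1,\,1]}=1\), each Chebyshev polynomial gives the lower bound \(|T_n(z)|^{1/n}\le \Phi _E(z)\) directly from the definition, and these bounds converge to \(\Phi _E(z)\) off the interval. Near the endpoint 1 one has \(\Phi _E(1+\delta )-1\approx \sqrt{2\delta }\), the square-root (Hölder exponent 1/2) behaviour relevant to the HCP property of Definition 3.1. The Python sketch below (hypothetical helper names, NumPy) checks these three facts numerically.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def siciak_interval(z):
    """Phi_E for E = [-1, 1]: |z + sqrt(z^2 - 1)| on the branch of modulus >= 1.

    The two branches z +/- sqrt(z^2 - 1) have product 1, so we take the larger modulus.
    """
    z = np.asarray(z, dtype=complex)
    r = np.sqrt(z * z - 1)
    return np.maximum(np.abs(z + r), np.abs(z - r))

def chebyshev_lower_bound(z, n):
    """|T_n(z)|^(1/n) <= Phi_E(z), because ||T_n|| = 1 on [-1, 1] and deg T_n = n."""
    coeffs = np.zeros(n + 1)
    coeffs[n] = 1.0
    return np.abs(C.chebval(np.asarray(z, dtype=complex), coeffs)) ** (1.0 / n)

def holder_exponent_at_endpoint(d1=1e-6, d2=1e-8):
    """Log-log slope of Phi_E(1 + d) - 1 between d1 and d2 (expected close to 1/2)."""
    f1 = siciak_interval(1 + d1) - 1
    f2 = siciak_interval(1 + d2) - 1
    return float(np.log(f1 / f2) / np.log(d1 / d2))

z0 = 2 + 1j
print(siciak_interval(z0), chebyshev_lower_bound(z0, 200), holder_exponent_at_endpoint())
```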

There is a very simple formula (with a nontrivial proof) connecting the function \(\Phi _E\) with potential and pluripotential theory: \(\log \Phi _{E}=V_{E}\), where

$$\begin{aligned} V_{E}(z):=\sup \left\{ \phi (z):\, \phi \in \mathcal {L}(\mathbb {C}^N),\ \phi \le 0 \text{ in } E\right\} \qquad (z\in \mathbb {C}^N) \end{aligned}$$

and \(\mathcal {L}(\mathbb {C}^N)\) denotes the class of plurisubharmonic Footnote 6 functions \(\phi \) in \(\mathbb {C}^N\) satisfying the condition

$$\begin{aligned} \sup _{z\in \mathbb {C}^N}\big (\phi (z)-\log (1+|z|)\big )<+\infty \end{aligned}$$

(cf. Theorem 4.12 in [55] or Theorem 5.1.7 in [34]). The upper semicontinuous regularization \(V_{E}^*\) of \(V_{E}\) is often called the pluricomplex Green function, because for a compact set \(E\subset \mathbb {C}\) with positive logarithmic capacity, \(V_{E}^*\) is the Green function, with pole at infinity, of the unbounded component of \(\mathbb {C}{\setminus } E\).

If \(\emptyset \ne E\subset \mathbb {C}^N\) is a compact set and \(\Phi _{E}\) is continuous at every point of E, then \(\Phi _E\) is continuous in the whole of \(\mathbb {C}^N\) (cf. Proposition 6.1 in [55] or Corollary 5.1.4 in [34]); such a set E is called L-regular.

Definition 3.1

We say that a compact set \(\emptyset \ne E\subset \mathbb {C}^N\) has the HCP property if \(\Phi _E\) is Hölder continuous in the following sense: there exist \(\varpi , \mu >0\) such that

We will also need the notion of a pluripolar set.

Definition 3.2

(see [34]) A set \(A\subset \mathbb {C}^N\) is said to be pluripolar if one of the following two equivalent conditions holds:

-

(i)

For each point \(a\in A\), there exists an open neighbourhood U of a such that \(A\cap U\subset \{z\in U:\, u(z)=-\infty \}\) for some plurisubharmonic function \(u: U\longrightarrow [-\infty ,+\infty )\).

-

(ii)

There exists a plurisubharmonic function \(\psi \) in \(\mathbb {C}^N\) such that \(A \subset \{z\in \mathbb {C}^N:\, \psi (z)=-\infty \}\).

Let us add that the implication (i)\(\implies \) (ii) is the content of Josefson’s theorem (saying that every locally pluripolar set in \(\mathbb {C}^N\) is globally pluripolar). We have moreover the following characterization of pluripolar sets in terms of the pluricomplex Green function: for each set \(\emptyset \ne A\subset \mathbb {C}^N\),

(cf. Corollary 3.9 and Theorem 3.10 in [55]). Recall also that pluripolar sets have Lebesgue measure zero (cf. Corollary 2.9.10 in [34]) and countable unions of pluripolar sets are pluripolar (cf. Corollary 4.7.7 in [34]).

There is a close relation between Markov’s inequality and the HCP property. Namely,

-

HCP \(\implies \) Markov’s inequality (see [43]).

-

It is still an open question whether the reverse implication holds (see [48], where this problem was posed by Pleśniak).

Furthermore, it is worth noting that, for each compact subset of \(\mathbb {R}^N\), UPC \(\implies \) HCP (see [43]), yet the implication cannot be reversed.

It should come as no surprise that the inverse image of a Markov set under a polynomial map need not be a Markov set, even if it is a compact set. Consider for example the map

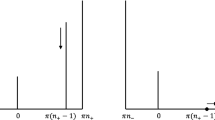

and the set \(E:=[-1,\,0]\). Then \(h^{-1}(E)=\{0\}\) does not satisfy Markov’s inequality (no one-point set does: for \(P(z):=z\), we have \({\Vert P\Vert }_{\{0\}}=0\), whereas \(P'\equiv 1\)). Clearly, the inverse image of an interval under any polynomial map \(h:\mathbb {R}\longrightarrow \mathbb {R}\) is a finite union of intervals (with infinite endpoints allowed) and points. Markov’s inequality for sets consisting of finitely many intervals was studied in depth by Totik in [57].
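The structural fact just stated also suggests how to compute \(h^{-1}([a,\,b])\) for a real polynomial h of degree \(\ge 1\): the real roots of \(h-a\) and \(h-b\) cut \(\mathbb {R}\) into finitely many open gaps, on each of which membership in \([a,\,b]\) is constant, while the roots themselves always belong to the preimage. The Python sketch below (a hypothetical helper `preimage_of_interval`, NumPy, coefficients in highest-degree-first order as in `numpy.roots`) tests one sample point per gap and merges the pieces. We use \(h(x)=x^2\) and \(h(x)=x^3-x\) purely for illustration; roots are rounded to 9 decimals, so nearly coincident roots are merged.

```python
import numpy as np

def preimage_of_interval(coeffs, a, b, tol=1e-9):
    """Return h^{-1}([a, b]) as a sorted list of closed pieces (lo, hi); points have lo == hi.

    `coeffs` lists the coefficients of h, highest degree first; deg h >= 1 is assumed.
    """
    h = np.poly1d(coeffs)
    # Real roots of h - a and h - b: the only places where h can enter or leave [a, b].
    pts = set()
    for c in (a, b):
        for r in np.roots(np.polysub(coeffs, [c])):
            if abs(r.imag) < tol:
                pts.add(round(float(r.real), 9))
    pts = sorted(pts)

    def inside(x):
        return a - tol <= h(x) <= b + tol

    pieces = [[x, x] for x in pts]  # every root lies in the preimage
    bounds = [-np.inf] + pts + [np.inf]
    for lo, hi in zip(bounds[:-1], bounds[1:]):
        # One sample point per open gap decides the whole gap.
        if np.isinf(lo) and np.isinf(hi):
            mid = 0.0
        elif np.isinf(lo):
            mid = hi - 1.0
        elif np.isinf(hi):
            mid = lo + 1.0
        else:
            mid = (lo + hi) / 2
        if inside(mid):
            pieces.append([lo, hi])
    # Merge pieces that overlap or touch at a common endpoint.
    pieces.sort()
    merged = []
    for lo, hi in pieces:
        if merged and lo <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], hi)
        else:
            merged.append([lo, hi])
    return [tuple(m) for m in merged]

print(preimage_of_interval([1, 0, 0], -1, 0))  # h(x) = x**2: a single point
print(preimage_of_interval([1, 0, 0], 0, 1))   # h(x) = x**2: the interval [-1, 1]
```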

The situation is quite different in the complex case (\(\mathbb {K}=\mathbb {C}\)). But even in this case, the claim that the polynomial preimage of a Markov set is a Markov set is far from true.

Example 3.3

Consider the polynomial map

and the set \(E:=\Gamma \cup \{(\alpha ,\beta )\}\), where \(\Gamma := \left\{ z\in \mathbb {C}^2:\, |z_1|=|z_2|=1\right\} \), \(\alpha ,\beta \in \mathbb {C}\) and \(0<|\alpha |<|\beta |\le 1\). Recall that:

-

The Shilov boundary of the open polydisk \(\mathbb {D}_N:=\{z\in \mathbb {C}^N:\, |z|<1\}\) Footnote 7 is its skeleton, that is, the set \(\{z\in \mathbb {C}^N:\, |z_1|=\cdots =|z_N|=1\}\) (see [53, p. 22]).

-

The closed polydisk \( \overline{\mathbb {D}}_N\) satisfies Markov’s inequality. Indeed, for \(N=1\), this is the content of Bernstein’s theorem: for each complex polynomial Q of one variable,

$$\begin{aligned} {\Vert Q'\Vert }_{\overline{\mathbb {D}}}\le (\deg Q) \, {\Vert Q\Vert }_{\overline{\mathbb {D}}}, \end{aligned}$$

where \(\mathbb {D}:=\mathbb {D}_1\) (see [10, p. 233]). For \(N>1\), it is enough to use the fact that the Cartesian product of Markov sets is a Markov set (see Sect. 1).
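Bernstein’s inequality can be checked numerically in the same spirit. By the maximum modulus principle, both \({\Vert Q\Vert }_{\overline{\mathbb {D}}}\) and \({\Vert Q'\Vert }_{\overline{\mathbb {D}}}\) are attained on the unit circle, so the Python sketch below (a hypothetical helper `bernstein_quotient`, NumPy `Polynomial`, circle sampled at finitely many points) estimates \({\Vert Q'\Vert }/\big ((\deg Q){\Vert Q\Vert }\big )\). The value 1 is reached by \(Q(z)=z^n\), which shows that the constant \(\deg Q\) cannot be improved.

```python
import numpy as np
from numpy.polynomial import Polynomial

CIRCLE = np.exp(2j * np.pi * np.arange(4096) / 4096)  # sample points with |z| = 1

def bernstein_quotient(q):
    """Estimate ||q'|| / (deg q * ||q||) over the closed unit disk.

    By the maximum modulus principle both sup norms are attained on |z| = 1,
    so it suffices to sample the unit circle.
    """
    num = np.abs(q.deriv()(CIRCLE)).max()
    den = q.degree() * np.abs(q(CIRCLE)).max()
    return float(num / den)

rng = np.random.default_rng(2)
random_quotients = [
    bernstein_quotient(Polynomial(rng.standard_normal(9) + 1j * rng.standard_normal(9)))
    for _ in range(100)
]
monomial_quotient = bernstein_quotient(Polynomial([0, 0, 0, 0, 0, 0, 1]))  # Q(z) = z**6
print(max(random_quotients), monomial_quotient)
```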

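It follows that the set E satisfies Markov’s inequality. Indeed, \(\Gamma \subset E\subset \overline{\mathbb {D}}_2\), so by the maximum principle \({\Vert P\Vert }_{E}={\Vert P\Vert }_{\Gamma }={\Vert P\Vert }_{\overline{\mathbb {D}}_2}\) for each polynomial P; hence \({\Vert D^{\alpha }P\Vert }_{E}\le {\Vert D^{\alpha }P\Vert }_{\overline{\mathbb {D}}_2}\) and Markov’s inequality for \(\overline{\mathbb {D}}_2\) carries over to E. However, the set \(g^{-1}(E)=\Gamma \cup \left\{ \left( \alpha , {\beta }/{\alpha } \right) \right\} \) does not satisfy Markov’s inequality. Indeed, suppose otherwise and take \(\varepsilon , C\) of Definition 1.2. Then, for polynomials \(\Psi _n\in \mathbb {C}[w_1,w_2]\) (\(n\in \mathbb {N}\)) defined by \(\Psi _n (w_1,w_2):=(\beta -\alpha w_2)w_2^n\), we have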

which is impossible. \(\square \)
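The growth behind this contradiction can be made quantitative for sample values \(\alpha =1/2\), \(\beta =1\) (our choice; any \(0<|\alpha |<|\beta |\le 1\) behaves the same way). At the isolated point \(p=(\alpha ,\beta /\alpha )\) we have \(\Psi _n(p)=0\) and \(\frac{\partial \Psi _n}{\partial w_2}(p)=-\alpha (\beta /\alpha )^n\), while \(\sup _{\Gamma }|\Psi _n|=|\beta |+|\alpha |\). So the quotient \(\big |\frac{\partial \Psi _n}{\partial w_2}(p)\big |/{\Vert \Psi _n\Vert }_{g^{-1}(E)}\), a lower bound for the Markov quotient, grows like \((\beta /\alpha )^n\), faster than any power of \(\deg \Psi _n=n+1\). The Python sketch below (hypothetical helper names, NumPy, supremum over \(\Gamma \) approximated on a grid of the unit circle) computes it.

```python
import numpy as np

ALPHA, BETA = 0.5, 1.0  # sample values with 0 < |ALPHA| < |BETA| <= 1
CIRCLE = np.exp(2j * np.pi * np.arange(4096) / 4096)  # |w2| = 1, the w2-part of Gamma

def psi(n, w2):
    """Psi_n(w1, w2) = (beta - alpha*w2) * w2**n; it does not depend on w1."""
    return (BETA - ALPHA * w2) * w2**n

def dpsi_dw2(n, w2):
    """Partial derivative of Psi_n with respect to w2 (product rule)."""
    return -ALPHA * w2**n + (BETA - ALPHA * w2) * n * w2 ** (n - 1)

def markov_ratio_lower_bound(n):
    """|dPsi_n/dw2(p)| / ||Psi_n|| on g^{-1}(E), where p = (alpha, beta/alpha).

    The numerator is only the value at p, so this is a lower bound for
    ||dPsi_n/dw2|| / ||Psi_n|| on g^{-1}(E) = Gamma u {p}.
    """
    p2 = BETA / ALPHA  # second coordinate of the isolated point p
    sup_norm = max(np.abs(psi(n, CIRCLE)).max(), abs(psi(n, p2)))
    return float(abs(dpsi_dw2(n, p2)) / sup_norm)

for n in (10, 50, 100):
    print(n, markov_ratio_lower_bound(n), (n + 1) ** 10)  # exponential vs polynomial
```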

The situation described in the above example is special, because the set \(g^{-1}(E)\) is not L-regular.Footnote 8 This is no longer the case in the next example (Example 3.6).

It will be convenient to state beforehand, for easy reference, two results. The first one gives a necessary and sufficient condition for a bounded set \(A \subset \mathbb {R}^2\) definable in some polynomially bounded o-minimal structure to be UPC (cf. [44], Theorem B).

Theorem 3.4

Let \(A \subset \mathbb {R}^2\) be bounded and definable in some polynomially bounded o-minimal structure (for example, semialgebraic). Then the following two statements are equivalent:

-

A is UPC.

-

A is fat and, for each \(a\in \overline{A}\), \(\rho >0\) and any connected component S of the set \(\mathrm{Int} A\cap {B}(a,\,\rho )\) such that \(a\in \overline{S}\), there is a polynomial arc \(\gamma :(0,\,1) \longrightarrow S\) such that \(\displaystyle \lim \limits _{t \rightarrow 0} \gamma (t) = a\), where \({B}(a,\,\rho )=\{x\in \mathbb {R}^2 :\, |x-a|<\rho \}\).

The second result is a special case of the (semi)analytic accessibility criterion due to Pleśniak (cf. [47]).Footnote 9

Theorem 3.5

Let \(K\subset \mathbb {K}^N\) be a compact set. Suppose that there exists a polynomial mapping \(\gamma : \mathbb {K}\longrightarrow \mathbb {K}^N\) such that \(\gamma ((0,\,1])\subset \mathrm{Int}K\). Then K is L-regular at \(\gamma (0)\), i.e., \(\Phi _K\) is continuous at \(\gamma (0)\).

Example 3.6

Suppose that a continuous function \(f: [0, \,R]\longrightarrow [0,+\infty )\), where \(R>0\), has the following properties:

-

\(f>0\) in \((0,\,R]\),

-

\(\displaystyle \lim _{t\rightarrow 0}\frac{f(t)}{t^r}=0\) for each \(r>0\),

-

there exists \(R_0\in (0,\,R]\) such that f is nondecreasing in \([0,\,R_0]\),

-

there exists \(R_1\in [0,\,R_0)\) such that \(f|_{[R_1,\,R]}\) is definable in a certain polynomially bounded o-minimal structure (for simplicity, \(f|_{[R_1,\,R]}\) can be thought of as a semialgebraic map).

Consider the map

and the set

Note that \(F^{-1}(E)\) is compact. We will show that:

-

E satisfies Markov’s inequality,

-

\(F^{-1}(E)\) is L-regular,

-

\(F^{-1}(E)\) does not satisfy Markov’s inequality for \(\mathbb {K}=\mathbb {R}\) but does satisfy Markov’s inequality for \(\mathbb {K}=\mathbb {C}\).

To this end, put

For each \(x=(x_1,x_2)\in E_1\), denote by \(H_x\) the rectangle with the vertices at \((x_1,x_2)\), \((R_0,x_2)\), \((x_1,-1)\), \((R_0,-1)\). Moreover, define \(S_x:\mathbb {R}\longrightarrow \mathbb {R}^2\) by

Note that \([0,\,1]\ni t\longmapsto S_x(t)\in \mathbb {R}^2\) is a parametrization of the line segment joining the point x to the centre of \(H_x\) (the common midpoint of its diagonals). Since \(H_x\subset E\), it follows that, for each \(t\in [0,\,1]\),

On the other hand, by Theorem 3.4, the set \(E_2\) is UPC.Footnote 10 Therefore, there exist \(\upsilon ,\, \theta > 0\) and \(d\in \mathbb {N}\) such that, for each \(x \in E_2\), we can choose a polynomial map \(\displaystyle \tilde{S}_x : \mathbb {R} \longrightarrow \mathbb {R}^2\) with \(\deg \tilde{S}_x\le d\) satisfying the following conditions:

-

\(\tilde{S}_x (0) = x\),

-

\(\displaystyle \mathrm{dist} \big (\tilde{S}_x (t),\, \mathbb {R}^2 {\setminus } E_2\big ) \ge \theta t^{\upsilon }\) for each \(t \in [0,\, 1]\).

Note that, for each \(x \in E_2\) and each \(t \in [0,\, 1]\),

Upon combining the above estimates for the sets \(E_1\) and \(E_2\), it is straightforward to show that \(E=E_1\cup E_2\) is UPC and hence, by Theorem 3.1 in [43], is a Markov set.

Case 1: \(\mathbb {K}=\mathbb {R}\). Note first that

By Theorem 3.5, the set \(F^{-1}(E)\) is L-regular.Footnote 11 Suppose, to derive a contradiction, that \(F^{-1}(E)\) is a Markov set. In particular, there exist \(\varepsilon , C>0\) such that, for each polynomial \(P\in \mathbb {C}[w_1,w_2]\),

For each \(n\in \mathbb {N}\), put

Moreover, take \(r>\varepsilon \) and set

Note that

Combining this with (12), we get

which is impossible, because the right-hand side tends to zero as \(n\rightarrow \infty \).

Case 2: \(\mathbb {K}=\mathbb {C}\). Note that, for each \(w\in \mathbb {C}^2\),

Therefore

By Theorem 5.3.1 in [34], for each \(w\in \mathbb {C}^2\),

Since E is UPC, it has the HCP property: there exist \(M_1, \mu >0\) such that, for each \(z\in E_{(1)}\),

Put

where \(K:=F^{-1}(E)_{(1)}\). By (14), for each \(z\in F(K)\),

Take \(M_3>0\) such that \(F|_K\) is Lipschitz with constant \(M_3\), that is,

for all \(w,w'\in K\). For each \(w\in K\), we have

which yields the HCP property for the set \(F^{-1}(E)\). Consequently, \(F^{-1}(E)\) is a Markov set and is L-regular. \(\square \)
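The Lipschitz step used above can be spelled out as follows (a sketch that relies only on the Lipschitz property of \(F|_K\) and on the inclusion \(F^{-1}(E)\subset K\)). For \(w\in K\), choose \(w'\in F^{-1}(E)\) with \(|w-w'|=\mathrm{dist}\big (w,\,F^{-1}(E)\big )\), which is possible since \(F^{-1}(E)\) is compact. Then \(F(w')\in E\), and therefore

$$\begin{aligned} \mathrm{dist}\big (F(w),\,E\big )\le |F(w)-F(w')|\le M_3\,|w-w'|=M_3\,\mathrm{dist}\big (w,\,F^{-1}(E)\big ). \end{aligned}$$

Consequently, any bound of the form \(\psi (z)\le M\,\mathrm{dist}(z,\,E)^{\mu }\) (here \(\psi \) and M are generic placeholders) evaluated at \(z=F(w)\) yields \(\psi (F(w))\le MM_3^{\mu }\,\mathrm{dist}\big (w,\,F^{-1}(E)\big )^{\mu }\), with the same Hölder exponent \(\mu \).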

The previous examples may suggest that a compact, L-regular set which is the inverse image of a Markov set under a complex (i.e., holomorphic) polynomial map is also a Markov set. However, this claim is false.

Example 3.7

Let \(f: [0, \,R]\longrightarrow [0,+\infty )\) be as in Example 3.6. Set \(D:=D_1\times D_2\), where

In the same way as we handled the set E of Example 3.6, we can show that \(D_1, D_2\) satisfy Markov’s inequality. Moreover, put

Note that

After repeating the argument from Case 1 of Example 3.6, we conclude that \(G^{-1}(D)\) is L-regular and does not satisfy Markov’s inequality. On the other hand, the set D, as a Cartesian product of Markov sets, is a Markov set. \(\square \)

Having given the above examples illustrating various situations which arise naturally when we consider Markov’s inequality in the context of polynomial preimages, we conclude this section with the following result, to be proved in the next section.

Theorem 3.8

Assume that \(g:U\longrightarrow \mathbb {C}^{N'}\) is a holomorphic mapping, where \(U\subset \mathbb {C}^N\) is open (\(N,N'\in \mathbb {N}\)). Suppose that a compact set \(\emptyset \ne E \subset \mathbb {C}^{N'}\) has the HCP property, \(\hat{E}\subset g(U)\) and \(g^{-1}(\hat{E})\) is compact. Then

-

\(N=N'\),

-

\(g^{-1}(E)\) has the HCP property and, in particular, is a Markov set.

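Recall that, for a compact set \(E\subset \mathbb {C}^N\), \(\hat{E}\) denotes the polynomially convex hull of E:

$$\begin{aligned} \hat{E}:=\left\{ z\in \mathbb {C}^N:\, |P(z)|\le {\Vert P\Vert }_{E} \text{ for each } P\in \mathbb {C}[z_1,\ldots ,z_N]\right\} . \end{aligned}$$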

If \(\hat{E} =E\), then we say that E is polynomially convex. For example, each compact subset of \(\mathbb {R}^N\) is polynomially convex in \(\mathbb {C}^N\) (cf. Lemma 5.4.1 in [34]).

4 A proof of Theorem 3.8

For the convenience of the reader we recall first the relevant notions and results from [41].

Definition 4.1

For a set \(A\subset \mathbb {C}^N\), we define its complex dimension by the formula

(We assume here that the maximum on the empty set is equal to \(-\infty \).)

Definition 4.2

Let \(\Omega \subset \mathbb {C}^N\) be an open set. A set \(A\subset \Omega \) is called an analytic subset (of \(\Omega \) or in \(\Omega \)) if, for each point \(a\in \Omega \), there is an open neighbourhood U of a and there exist holomorphic functions \(\xi _1,\ldots ,\xi _k:U\longrightarrow \mathbb {C}\) such that

$$\begin{aligned} A\cap U=\left\{ z\in U:\, \xi _1(z)=\cdots =\xi _k(z)=0\right\} . \end{aligned}$$

Definition 4.3

A set \(A\subset \mathbb {C}^N\) is called a locally analytic set (in \(\mathbb {C}^N\)) if, for each point \(a\in A\), there is an open neighbourhood U of a and there exist holomorphic functions \(\xi _1,\ldots ,\xi _k:U\longrightarrow \mathbb {C}\) such that

$$\begin{aligned} A\cap U=\left\{ z\in U:\, \xi _1(z)=\cdots =\xi _k(z)=0\right\} . \end{aligned}$$

The subsequent proofs make use of the following two results.

Theorem 4.4

Assume that \(f:W\longrightarrow \mathbb {C}^{N'}\) is a holomorphic mapping, where \(W\subset \mathbb {C}^N\) is open (\(N,N'\in \mathbb {N}\)). Suppose that \(B\subset W\) is a locally analytic set such that, for some \(m\in \mathbb {N}\),

Then f(B) is a countable union of submanifolds of dimension \(\le m\).

Proof

See [41, p. 254]. \(\square \)

Theorem 4.5

Every compact analytic subset of \(\mathbb {C}^N\) is finite.

Proof

See [41, p. 235]. \(\square \)

Before proceeding with the proof of Theorem 3.8, let us also state the following lemma.

Lemma 4.6

Assume that \(f:W\longrightarrow \mathbb {C}^{N'}\) is a holomorphic mapping, where \(W\subset \mathbb {C}^N\) is open (\(N,N'\in \mathbb {N}\)). Suppose that a set \( A \subset f(W)\) is nonpluripolar. Then \(f^{-1}(A)\) is nonpluripolar as well.

Proof

We will consider two cases.

Case 1: \(N<N'\). Obviously, \(\mathrm{{rank}}\,d_{w} f\le N\) for each \(w\in W\). By Theorem 4.4, f(W) is a countable union of submanifolds of dimension \(\le N<N'\). Each such submanifold is pluripolar and countable unions of pluripolar sets are pluripolar, so the set f(W) (and hence \(A\subset f(W)\)) is pluripolar, which is a contradiction. The case \(N<N'\) therefore cannot occur.

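Case 2: \(N\ge N'\). We have \(W=B\cup W_0\), where

$$\begin{aligned} B:=\left\{ w\in W:\, \mathrm{{rank}}\,d_{w} f<N'\right\} ,\qquad W_0:=\left\{ w\in W:\, \mathrm{{rank}}\,d_{w} f=N'\right\} . \end{aligned}$$

Clearly, the set B is an analytic subset of W (it is the common zero set of all \(N'\times N'\) minors of the Jacobian matrix of f). As in Case 1, we show via Theorem 4.4 that f(B) is pluripolar. Since \(A\subset f(B)\cup f(W_0)\) and A is nonpluripolar, it follows that the set \(A\cap f(W_0)\) is nonpluripolar.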

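By the rank theorem, for each \(a\in W_0\), there exists an open set \(U_a\) such that \(a\in U_a\subset W_0\), \(f(U_a)\) is open, and there exist biholomorphic mappings \(\varphi _a: U_a\longrightarrow \Delta _a\times \Omega _a\), \(\psi _a: f(U_a)\longrightarrow \Delta _a\), where \(\Delta _a\subset \mathbb {C}^{N'}\), \(\Omega _a\subset \mathbb {C}^{N-N'}\) are open sets, such that the mapping

$$\begin{aligned} \psi _a\circ f\circ \varphi _a^{-1}:\ \Delta _a\times \Omega _a\ni (x,y)\longmapsto x\in \Delta _a \end{aligned}$$

is the natural projection. Since \(W_0\) is an open subset of \(\mathbb {C}^N\) (and hence a Lindelöf space), there is a sequence \(a_j\in W_0\) (\(j\in \mathbb {N}\)) such that

$$\begin{aligned} W_0=\bigcup _{j=1}^{\infty }U_{a_j}. \end{aligned}$$

Since countable unions of pluripolar sets are pluripolar, at least one of the sets \(A\cap f(U_{a_j})\) is nonpluripolar.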

Take \(l\in \mathbb {N}\) such that \(A\cap f(U_{a_l})\) is nonpluripolar. Then the set \(\psi _{a_l}(A\cap f(U_{a_l}))\) is also nonpluripolar.

Suppose, to derive a contradiction, that \(f^{-1}(A)\) is pluripolar. Then \(\varphi _{a_l}(f^{-1}(A)\cap U_{a_l})\) is also pluripolar and therefore

for some plurisubharmonic function u in \(\mathbb {C}^N\). Note that, for each \(y\in \Omega _{a_l}\),

Since \(\psi _{a_l}(A\cap f(U_{a_l}))\) is nonpluripolar, it follows that \(u\equiv -\infty \) in \(\mathbb {C}^{N'}\times \Omega _{a_l}\), which is impossible.Footnote 12 \(\square \)

In the proof of Theorem 3.8, we will use the notion of the relative extremal function. Suppose that \(\Omega \subset \mathbb {C}^N\) is an open set and \(A\subset \Omega \). The relative extremal function for A in \(\Omega \) is defined as follows:

$$\begin{aligned} u_{A,\Omega }(z):=\sup \left\{ u(z):\, u\in {\text {PSH}}(\Omega ),\ u\le 0 \text{ in } \Omega ,\ u\le -1 \text{ in } A\right\} \end{aligned}$$

(\(z\in \Omega \)), where \({\text {PSH}}(\Omega )\) denotes the class of plurisubharmonic functions in \(\Omega \).

Proof of Theorem 3.8. We will consider three cases.

Case 1: \(N>N'\). Take \(b=(b_1,\ldots ,b_{N'})\in \hat{E}\subset g(U)\). By the formula on p. 169 of [41],

On the other hand, \(g^{-1}(b)=g^{-1}(b)\cap g^{-1}(\hat{E})\) is a compact analytic set and hence is finite (cf. Theorem 4.5), in contradiction with (17). This means that the case \(N>N'\) cannot occur.

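Case 2: \(N<N'\). It follows from Theorem 4.4 that g(U) is a countable union of submanifolds of dimension \(\le N<N'\). In particular, g(U) is pluripolar, and hence so is \(E\subset \hat{E}\subset g(U)\). This is a contradiction, because E has the HCP property, so \(V_E=\log \Phi _E\) is continuous (in particular, finite) in \(\mathbb {C}^N\), and therefore E is nonpluripolar by the characterization of pluripolar sets recalled in Sect. 3. The case \(N<N'\) therefore cannot occur.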

Case 3: \(N=N'\). Put \(K:=g^{-1}(\hat{E})\) and take \(\lambda >0\) such that \(K_{(\lambda )}\subset U\).Footnote 13 Note that there exists \(\epsilon >0\) such that

Suppose, towards a contradiction, that this is not the case and take a sequence \(a_j\in U\) (\(j\in \mathbb {N}\)) such that

Passing to a subsequence if necessary, we can assume that \(a_j\rightarrow a \in K_{(\lambda )}{\setminus } K_{\lambda }\). Consequently, \(\mathrm{dist}(a,\,K)=\lambda >0\), so \(a\notin K\). On the other hand, \( \mathrm{dist}\big (g(a),\,\hat{E}\big )=0\) and \(\hat{E}\) is closed, so \(g(a)\in \hat{E}\), that is, \(a\in g^{-1}(\hat{E})=K\), a contradiction.

Put \(\Omega := K_{\lambda }\cap g^{-1}(\hat{E}_{\epsilon })\), where \(\epsilon >0\) is of (18). For each compact set \(T\subset \hat{E}_{\epsilon }\), we have

and therefore the set \(g^{-1}(T)\cap \Omega \) is compact. It follows that

is a proper holomorphic map. Since the set \(\Omega ':=g(\Omega )\) is open (cf. [52], Theorem 15.1.6), it follows that

is also a proper holomorphic map. Note moreover that \(g^{-1}(E)\subset K\subset \Omega \), \(\hat{E}=g(K)\subset \Omega '\) and \(\Omega , \Omega '\) are bounded. (Use the fact that \(\Omega '\subset \hat{E}_{\epsilon }\).)

By Lemma 4.6, the set \(g^{-1}(E)\) is nonpluripolar and hence \(V_{g^{-1}(E)}^*\in \mathcal {L}(\mathbb {C}^N)\) (see (11)). In particular,

for some \(M_1>0\). It is clear that

Moreover, by Proposition 5.3.3 in [34], there exists \(M_2>0\) such that

Let \(M_3,\mu >0\) be such that, for each \(z\in E_{(1)}\),

(see Definition 3.1). Obviously, for each \(z\in \Omega '\),

where \(M_4:=\max \big \{M_3,\, \sup _{\Omega '{\setminus } E_{(1)}} V_E \big \}\). (If \(\Omega '\subset E_{(1)}\), then we set \(M_4:=M_3\).)

By Proposition 4.5.14 in [34],

Consequently, for each \(w\in \Omega \),

and therefore

where \(M_5>0\) is such that \(|g(\zeta )-g(\zeta ')|\le M_5|\zeta -\zeta '|\) for all \(\zeta ,\zeta '\in \Omega \). Put

where

The estimate (23) implies that, for each \(w\in g^{-1}(E)_{(1)}\),

Hence

where \(M>0\) is such that \(e^{M_6t}\le 1+Mt\) for \(t\in [0,\,1]\). The case \(N=N'\) is therefore settled, and the proof of the theorem is complete. \(\square \)
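The existence of M in the last step is elementary. Whatever the value of \(M_6>0\) (its defining display is not reproduced in this copy), the function \(t\mapsto e^{M_6t}\) is convex, so on \([0,\,1]\) it lies below its chord: \(e^{M_6t}\le 1+(e^{M_6}-1)t\). Hence \(M:=e^{M_6}-1\) works, and it is the smallest admissible choice, since \(t=1\) forces \(M\ge e^{M_6}-1\). The Python sketch below checks the chord bound on a grid for a few sample values of \(M_6\); these values are arbitrary illustrations, not constants from the proof.

```python
import math


def chord_constant(m6: float) -> float:
    """M = e^{m6} - 1: slope of the chord of t -> e^{m6 t} over [0, 1]."""
    return math.expm1(m6)


def chord_bound_holds(m6: float, samples: int = 1001) -> bool:
    """Check e^{m6 t} <= 1 + M t at `samples` equally spaced t in [0, 1]."""
    big_m = chord_constant(m6)
    tol = 1e-12 * (1.0 + big_m)  # absorbs floating-point rounding at t = 1
    for i in range(samples):
        t = i / (samples - 1)
        if math.exp(m6 * t) > 1.0 + big_m * t + tol:
            return False
    return True


for m6 in (0.1, 1.0, 5.0, 20.0):  # arbitrary sample values of M_6
    print(m6, chord_constant(m6), chord_bound_holds(m6))
```

Using the chord rather than, say, a Taylor bound keeps the constant explicit and optimal on the whole interval \([0,\,1]\).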

With regard to Theorem 3.8, we have the following remark.

Remark 4.7

In Theorem 3.8, even if \(U=\mathbb {C}^N\) and \(g:\mathbb {C}^N\longrightarrow \mathbb {C}^{N}\) is a polynomial map, the assumption that \(\hat{E}\subset g(U)\) and \(g^{-1}(\hat{E})\) is compact cannot be replaced by the assumption that \(E\subset g(U)\) and \(g^{-1}(E)\) is compact and L-regular.

Proof

Set

Take a compact set \(K\subset \mathbb {R}^2\subset \mathbb {C}^2\) such that:

- \(K\subset \left( 0,\,\frac{1}{2}\right] \times (1,\,2]\),
- K is L-regular,
- K does not satisfy Markov’s inequality.

(For instance, we can take a suitable translate of the set \(F^{-1}(E)\) from Case 1 of Example 3.6 with an appropriately chosen function f.)

Moreover, put \(E:=\Gamma \cup g(K)\), where \(\Gamma := \left\{ z\in \mathbb {C}^2:\, |z_1|=|z_2|=1\right\} \). Clearly, \(E\subset {\overline{\mathbb {D}}_2}\), \(E\subset g(\mathbb {C}^2)= \big ( (\mathbb {C}{\setminus } \{0\})\times \mathbb {C}\big ) \cup \{(0,0)\}\) and \(g^{-1}(E)= \Gamma \cup K\) (see footnote 14). It is well known that, for each \(u\in \mathbb {C}\),

where \(\mathbb {D}:=\mathbb {D}_1\). Combining this with Proposition 5.9 in [55], we get, for each \(z=(z_1,z_2)\in \mathbb {C}^2\), the following estimates

Therefore

In particular,

- E has the HCP property: \(\Phi _{E}(z)\le 1+\mathrm{dist}(z,\,E)\) for each \(z\in \mathbb {C}^2\),
- \(g^{-1}(E)= \Gamma \cup K\) is L-regular, because \(\Gamma \) and K are L-regular.

Suppose, towards a contradiction, that \(g^{-1}(E)\) is a Markov set. Since \(\Gamma \) is the Shilov boundary of \(\mathbb {D}_2\) (see Example 3.3), it follows that \({\overline{\mathbb {D}}}_2\cup K\) is a Markov set as well. Put \(\Pi :\mathbb {C}^2\ni (w_1,w_2)\longmapsto w_2\in \mathbb {C}\). Note that \(\Pi ( {\overline{\mathbb {D}}}_2 )= \overline{\mathbb {D}}\subset \mathbb {C}\) and \(\Pi (K)\subset (1,\,2]\subset \mathbb {R}\subset \mathbb {C}\). Consequently, the sets \(\Pi ( {\overline{\mathbb {D}}}_2 )\) and \(\Pi (K)\) are disjoint and polynomially convex. Clearly, \({\overline{\mathbb {D}}}_2\) and K are also polynomially convex. Therefore, by Kallin’s separation lemma (cf. [33, p. 302]), we obtain the polynomial convexity of the set \({\overline{\mathbb {D}}}_2\cup K\). On account of Corollary 5.2, we get a contradiction, because K is not a Markov set. \(\square \)

5 Subsets of Markov sets

In this section, we will prove the following result announced in the Introduction.

Theorem 5.1

Let \(E\subset \mathbb {C}^N\) be a compact and polynomially convex set satisfying Markov’s inequality. Assume that \(K\subset E\) is compact, nonpluripolar and open in E. Then K is a Markov set. Furthermore, if E satisfies Markov’s inequality with an exponent \(\varepsilon >0\) (see Definition 1.2), then K satisfies Markov’s inequality with the exponent \(\varepsilon \) as well.

Proof

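Choose \(\lambda >0\) such that \( K_{(\lambda )}\cap (E{\setminus } K)=\emptyset \); this is possible because K is open in E, so that \(E{\setminus } K\) is compact and disjoint from K. In particular, we have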

Moreover, take a compact and polynomially convex set \(\mathcal {Z}\subset \mathbb {C}^N\) such that \(E\subset \mathrm{Int}\,\mathcal {Z}\) and \( \mathcal {Z}\subset K_{\lambda }\cup \left( \mathbb {C}^N{\setminus } K_{(\lambda )} \right) \) (see the proof of Lemma 2.7.4 in [32]). Define \(g: K_{\lambda }\cup \left( \mathbb {C}^N{\setminus } K_{(\lambda )} \right) \longrightarrow \mathbb {C}\) by the formula

By Theorem 8.5(1) in [55], there exist \(M>0\), \(\rho \in (0,\,1)\) with the following property: for each \(\mu \in \mathbb {N}\), we can choose a polynomial \(R_{\mu }\in \mathbb {C}[z_1,\ldots ,z_N]\) with \(\deg R_{\mu }\le \mu \) and such that

We can clearly assume that \(M\ge 1\).
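The approximation estimate from Theorem 8.5(1) in [55] is not reproduced in this copy. Results of this kind give uniform approximation of a function holomorphic in a neighbourhood of a polynomially convex compact set (here the locally constant function g) by polynomials of degree \(\le \mu \), with an error of order \(M\rho ^{\mu }\). The Python/NumPy sketch below is only a one-variable illustration of that geometric decay, not Siciak's construction: on the hypothetical set \([-1,\,-1/2]\cup [1/2,\,1]\) it fits the function equal to 0 on the left piece and 1 on the right piece by least squares in the Chebyshev basis and reports the sup-norm error on a fine grid. For this particular set the error should decay roughly like \(3^{-\mu /2}\).

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb

# Hypothetical one-variable stand-in for the set Z: two disjoint intervals.
left = np.linspace(-1.0, -0.5, 1000)
right = np.linspace(0.5, 1.0, 1000)
x = np.concatenate([left, right])
g = (x > 0).astype(float)  # locally constant: 0 on the left piece, 1 on the right


def sup_error(deg: int) -> float:
    """Sup-norm error on the grid of the least-squares fit of degree <= deg."""
    coef = cheb.chebfit(x, g, deg)
    return float(np.max(np.abs(cheb.chebval(x, coef) - g)))


errors = {deg: sup_error(deg) for deg in (4, 8, 16, 24)}
for deg, err in errors.items():
    print(f"degree {deg:2d}: sup error {err:.2e}, 3^(-deg/2) = {3.0 ** (-deg / 2):.2e}")
```

Least squares is used only because it is short and stable; it is not the best uniform approximation, so the printed errors are upper bounds for the best possible ones.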

By (11), \(V_K\) (and hence \(\Phi _K\)) is bounded on each compact subset of \(\mathbb {C}^N\). Thus we may choose \(k\in \mathbb {N}\) such that

Moreover, let \(\varepsilon , C>0\) be the constants from Definition 1.2 for the set E, so that, for each polynomial \(P\in \mathbb {C}[z_1,\ldots ,z_N]\) and each \(\alpha \in \mathbb {N}_0^N\),

Take also \(C_1>0\) such that

and put

We will show that, for each polynomial \(Q\in \mathbb {C}[z_1,\ldots ,z_N]\) with \(\deg Q\le n\) \((n\in \mathbb {N})\) and each \(a\in K_{\left( C_1(k+1)^{-\varepsilon } n^{-\varepsilon }\right) }\), we have

To this end, fix Q, a as above and take \(b\in K\) such that \(|a-b|=\mathrm{dist}(a,\,K)\). Clearly, \(a\in \mathcal {Z}\cap K_{\lambda }\) and

Put \(P:=R_{nk}\cdot Q\). Then

and hence

We will check now that

To this end, fix \(y\in E\).

Case 1: \(y\in K\). Then

Case 2: \(y\in E{\setminus } K\). Then \(y\in \mathcal {Z}{\setminus } K_{(\lambda )}\) and

which completes the proof of (30).

Consequently,

and hence

which completes the proof of (27).

By Cauchy’s inequalities, for each \(Q\in \mathbb {C}[z_1,\ldots ,z_N]\), \(\alpha \in \mathbb {N}_0^N\), \(z\in \mathbb {C}^N\) and each \(r>0\),

where \(\mathbb {B}(z,r):=\{w\in \mathbb {C}^N:\, |w-z|\le r\}\). Combining this with (27) we get, for each \(Q\in \mathbb {C}[z_1,\ldots ,z_N]\) and each \(\alpha \in \mathbb {N}_0^N\) with \(|\alpha |=1\), the following estimate

Consequently, K satisfies Markov’s inequality with the exponent \(\varepsilon \). \(\square \)
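The display with Cauchy's inequalities is not reproduced in this copy. In their standard form for the closed polydisc \(\mathbb {B}(z,r)\) they read \(|D^{\alpha }Q(z)|\le \alpha !\, r^{-|\alpha |}{\Vert Q\Vert }_{\mathbb {B}(z,r)}\), and in the proof above they are applied with \(|\alpha |=1\), presumably with \(r=C_1(k+1)^{-\varepsilon }n^{-\varepsilon }\), matching the neighbourhood in (27). The Python/NumPy sketch below checks the one-variable case \(|Q'(z)|\le r^{-1}\max _{|w-z|=r}|Q(w)|\) for an arbitrary random test polynomial, point and radius. It samples the circle at \(m\ge \max \{\deg Q+1,2\}\) equally spaced points; for such m the trapezoidal rule applied to Cauchy's integral formula for \(Q'(z)\) is exact, so the sampled maximum already yields a valid bound.

```python
import numpy as np


def cauchy_bound(coeffs, z, r, m=None):
    """One-variable check of Cauchy's inequality |Q'(z)| <= max_{|w-z|=r} |Q(w)| / r.

    coeffs: coefficients of Q, highest degree first (numpy.polyval order).
    Returns (|Q'(z)|, max of |Q| over m sample points of the circle divided by r,
    Q'(z) recovered from the discretized Cauchy integral formula).
    """
    d = len(coeffs) - 1
    if m is None:
        m = max(d + 1, 2)  # enough points for the trapezoidal rule below to be exact
    omega = np.exp(2j * np.pi * np.arange(m) / m)  # m-th roots of unity
    values = np.polyval(coeffs, z + r * omega)  # Q on the circle |w - z| = r
    exact = np.polyval(np.polyder(coeffs), z)  # Q'(z) computed directly
    # Q'(z) = (1 / (2 pi r)) * integral over [0, 2 pi] of Q(z + r e^{it}) e^{-it} dt
    discrete = np.sum(values / omega) / (r * m)
    return abs(exact), float(np.max(np.abs(values)) / r), discrete


rng = np.random.default_rng(0)
coeffs = rng.normal(size=8) + 1j * rng.normal(size=8)  # arbitrary degree-7 test polynomial
lhs, rhs, discrete = cauchy_bound(coeffs, z=0.3 + 0.2j, r=0.5)
print(lhs <= rhs, abs(discrete - np.polyval(np.polyder(coeffs), 0.3 + 0.2j)))
```

The discrete formula shows directly why the bound holds: \(Q'(z)\) is an average of the values \(Q(z+r\omega _k)/(r\omega _k)\), each of modulus at most the sampled maximum divided by r.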

Corollary 5.2

Assume that \(E_1,\ldots , E_p\subset \mathbb {C}^N\) (\(p\in \mathbb {N}\)) are compact, nonpluripolar and pairwise disjoint sets such that \(E:=E_1\cup \cdots \cup E_p\) is polynomially convex. Let \(\varepsilon >0\). Then the following two statements are equivalent:

1. E satisfies Markov’s inequality with the exponent \(\varepsilon \).
2. For each \(j\le p\), the set \(E_j\) satisfies Markov’s inequality with the exponent \(\varepsilon \).

We conclude this section with the following example concerning Corollary 5.2.

Example 5.3

Set \(E_1:=\{0\}\cup \left[ 1/3,\,2/3\right] \), \(E_2:=\partial \mathbb {D}\), where \(\mathbb {D}:=\mathbb {D}_1\), and \(E:=E_1\cup E_2\). Note that \(E_1\) does not satisfy Markov’s inequality. Indeed, suppose otherwise and take \(\varepsilon , C\) as in Definition 1.2. Then, for the polynomials \(P_n(z):=z(1-z)^n\) (\(n\in \mathbb {N}\)), we have

which is impossible.
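The display with the estimate is not reproduced in this copy, but the computation is elementary. Since \(P_n'(z)=(1-z)^n-nz(1-z)^{n-1}\), we have \(P_n'(0)=1\). Moreover \(P_n(0)=0\), and for \(n\ge 2\) the function \(t\mapsto t(1-t)^n\) is decreasing on \([1/3,\,2/3]\) (its only critical point in \((0,\,1)\) is \(t=1/(n+1)\le 1/3\)), so \({\Vert P_n\Vert }_{E_1}=\frac{1}{3}\left( \frac{2}{3}\right) ^n\). Assuming only that Definition 1.2 bounds first derivatives by \(C(\deg P)^{\varepsilon }{\Vert P\Vert }_{E}\), we would get \(3\left( \frac{3}{2}\right) ^n\le C(n+1)^{\varepsilon }\) for all n, which fails for large n. The Python sketch below checks the ratio \(|P_n'(0)|/{\Vert P_n\Vert }_{E_1}\) numerically.

```python
import numpy as np


def p_n(n: int, t: np.ndarray) -> np.ndarray:
    """P_n(t) = t (1 - t)^n from Example 5.3."""
    return t * (1.0 - t) ** n


def markov_ratio(n: int) -> float:
    """|P_n'(0)| / ||P_n||_{E_1} for E_1 = {0} U [1/3, 2/3], sup taken on a fine grid."""
    grid = np.linspace(1.0 / 3.0, 2.0 / 3.0, 20001)
    # P_n(0) = 0, so the isolated point 0 does not contribute to the sup norm.
    sup_norm = float(np.max(np.abs(p_n(n, grid))))
    derivative_at_0 = 1.0  # P_n'(z) = (1-z)^n - n z (1-z)^(n-1), so P_n'(0) = 1
    return derivative_at_0 / sup_norm


for n in (5, 10, 20, 40):
    # The ratio equals 3 (3/2)^n and outgrows any fixed power (n + 1)^eps.
    print(n, markov_ratio(n), 3.0 * 1.5 ** n, markov_ratio(n) / (n + 1) ** 3)
```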

By Bernstein’s theorem (see Example 3.3) and the maximum principle, for each complex polynomial Q of one variable,

Hence, E satisfies Markov’s inequality. On the other hand, E is not polynomially convex, since its polynomially convex hull is \(\overline{\mathbb {D}}\). Therefore the assumption in Corollary 5.2 that E is polynomially convex cannot be dropped, even if \(N=1\). \(\square \)
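The omitted display presumably records the chain \({\Vert Q'\Vert }_{E}\le {\Vert Q'\Vert }_{\overline{\mathbb {D}}}={\Vert Q'\Vert }_{\partial \mathbb {D}}\le (\deg Q){\Vert Q\Vert }_{\partial \mathbb {D}}\le (\deg Q){\Vert Q\Vert }_{E}\), which uses \(E\subset \overline{\mathbb {D}}\), the maximum principle, Bernstein's inequality on the unit circle and \(\partial \mathbb {D}=E_2\subset E\). The Python/NumPy sketch below checks Bernstein's inequality numerically on a grid of the unit circle: an arbitrary random polynomial stays below the bound, while the monomial \(z^{10}\) attains it.

```python
import numpy as np


def bernstein_ratio(coeffs: np.ndarray, m: int = 4096) -> float:
    """||Q'|| / (deg Q * ||Q||) on the unit circle, sup norms taken over m
    equally spaced points; coeffs are highest degree first (numpy.polyval order)."""
    z = np.exp(2j * np.pi * np.arange(m) / m)
    sup_q = np.max(np.abs(np.polyval(coeffs, z)))
    sup_dq = np.max(np.abs(np.polyval(np.polyder(coeffs), z)))
    degree = len(coeffs) - 1  # assumes a nonzero leading coefficient
    return float(sup_dq / (degree * sup_q))


rng = np.random.default_rng(1)
random_q = rng.normal(size=11) + 1j * rng.normal(size=11)  # degree 10
monomial = np.zeros(11, dtype=complex)
monomial[0] = 1.0  # Q(z) = z^10, the extremal case of Bernstein's inequality
print(bernstein_ratio(random_q), bernstein_ratio(monomial))
```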

Notes

Because otherwise \(\emptyset \ne H_j\subset \mathrm{{Int}\,h}(\mathbb {R}^N)\) for some \(j\le s\).

In fact, we could first consider the case \(\mathbb {K}=\mathbb {C}\) and then notice that the real case follows from the complex case.

If \(B=[b_{ij}]\) is a \(q\times q\) matrix of complex numbers, then

$$\begin{aligned} |\det B|^2 \le \prod _{i=1}^q \sum _{j=1}^q |b_{ij}|^2. \end{aligned}$$

Schur’s inequality: For each polynomial R of one variable,

$$\begin{aligned} {\Vert R\Vert }_{[-1,\,1]}\le \left( \deg R+1\right) {\big \Vert \sqrt{1-\tau ^2}R(\tau ) \big \Vert }_{[-1,\,1]} \qquad \qquad (\lozenge ) \end{aligned}$$

(see [10, p. 233], where this inequality is stated for real polynomials). If, however, \(R\in \mathbb {C}[\tau ]\) and \(R=R_1+iR_2\) with \(R_1,R_2\in \mathbb {R}[\tau ]\), then for \(\tau _0\in [-1,\,1]\) such that \({\Vert R\Vert }_{[-1,\,1]}=|R(\tau _0)|\), we have

$$\begin{aligned} |R(\tau _0)|^2\le & {} {\Vert R_1(\tau _0)R_1+ R_2(\tau _0)R_2 \Vert }_{[-1,\,1]}\\\le & {} \left( \deg R+1\right) {\big \Vert \sqrt{1-\tau ^2} \big ( R_1(\tau _0)R_1(\tau )+ R_2(\tau _0)R_2(\tau )\big ) \big \Vert }_{[-1,\,1]}\\\le & {} |R(\tau _0)|\left( \deg R+1\right) {\big \Vert \sqrt{1-\tau ^2}R(\tau ) \big \Vert }_{[-1,\,1]}, \end{aligned}$$which proves \((\lozenge )\).
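The constant \(\deg R+1\) in \((\lozenge )\) cannot be improved: for the Chebyshev polynomial of the second kind \(U_n\) one has \(U_n(\cos \theta )=\sin ((n+1)\theta )/\sin \theta \), so \({\big \Vert \sqrt{1-\tau ^2}U_n(\tau )\big \Vert }_{[-1,\,1]}=1\) while \({\Vert U_n\Vert }_{[-1,\,1]}=U_n(1)=n+1\). The Python/NumPy sketch below checks this equality case and the inequality for an arbitrary random polynomial with complex coefficients, on the grid \(\tau =\cos \theta \) of \([-1,\,1]\).

```python
import numpy as np

theta = np.linspace(0.0, np.pi, 200001)
tau = np.cos(theta)  # grid on [-1, 1]; includes tau = 1 and tau = -1
weight = np.sqrt(np.clip(1.0 - tau ** 2, 0.0, None))  # sqrt(1 - tau^2)


def schur_sides(values: np.ndarray, degree: int) -> tuple:
    """Given R sampled on the grid, return (||R||, (degree + 1) * ||sqrt(1 - tau^2) R||)."""
    a = np.abs(values)
    return float(a.max()), float((degree + 1) * (weight * a).max())


def chebyshev_u(n: int, t: np.ndarray) -> np.ndarray:
    """Chebyshev polynomial of the second kind: U_0 = 1, U_1 = 2t, U_{k+1} = 2t U_k - U_{k-1}."""
    u_prev, u = np.ones_like(t), 2.0 * t
    if n == 0:
        return u_prev
    for _ in range(n - 1):
        u_prev, u = u, 2.0 * t * u - u_prev
    return u


# Equality case: ||U_5|| = 6 and ||sqrt(1 - tau^2) U_5|| = ||sin(6 theta)|| = 1.
print(schur_sides(chebyshev_u(5, tau), 5))

# A random polynomial with complex coefficients, as in the extension argument above.
rng = np.random.default_rng(2)
coeffs = rng.normal(size=8) + 1j * rng.normal(size=8)  # degree 7
print(schur_sides(np.polyval(coeffs, tau), 7))
```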

See [34] for the definition and basic properties of plurisubharmonic functions.

That is, a closed set \(S\subset \partial \mathbb {D}_N\) such that:

(i) for each continuous function \(f: \overline{\mathbb {D}}_N\longrightarrow \mathbb {C}\), holomorphic in \(\mathbb {D}_N\),

$$\begin{aligned}{\Vert f\Vert }_{\overline{\mathbb {D}}_N}={\Vert f\Vert }_{S}, \end{aligned}$$

(ii) any closed set \(\tilde{S}\subset \partial \mathbb {D}_N\) satisfying (i) contains S.

-

(i)

For the polynomial \(Q(w_1,w_2):=w_2\), we have \({\Vert Q\Vert }_{\Gamma }=1\) and \(\left| Q\left( \alpha ,{\beta }/{\alpha } \right) \right| >1\). Consequently, \(\Phi _{\Gamma }\left( \alpha , {\beta }/{\alpha } \right) >1\) and combining this with Corollary 5.2.5 in [34] we obtain

$$\begin{aligned} \Phi _{g^{-1}(E)}^*\left( \alpha , {\beta }/{\alpha } \right) = \Phi _{\Gamma }^*\left( \alpha , {\beta }/{\alpha } \right) \ge \Phi _{\Gamma }\left( \alpha , {\beta }/{\alpha } \right) >1 = \Phi _{g^{-1}(E)}\left( \alpha , {\beta }/{\alpha } \right) \end{aligned}$$(recall that \(\phi ^*\) denotes the upper semicontinuous regularization of \(\phi \)). Since \(\Phi _{g^{-1}(E)}^*\left( \alpha , {\beta }/{\alpha } \right) >\Phi _{g^{-1}(E)}\left( \alpha , {\beta }/{\alpha } \right) \), it follows that \(\Phi _{g^{-1}(E)}\) is not continuous at the point \((\alpha , \beta /\alpha )\).

An alternative proof can also be found in [45, Corollary 2.8].

The assumption that \(f|_{[R_1,\,R]}\) is definable in a certain polynomially bounded o-minimal structure is used here to guarantee definability of \(E_2\) in a polynomially bounded o-minimal structure and to guarantee the existence of a polynomial arc \(\gamma :(0,\,1) \longrightarrow \mathrm{{Int}}E\) such that \(\displaystyle \lim \limits _{t \rightarrow 0} \gamma (t) = (R,f(R))\). An explicit example of such an arc is \(\gamma : (0,\,1)\ni t\longmapsto \big (R-(\eta t)^m, f(R)-\eta t\big )\in \mathbb {R}^2\), where \(\eta >0\) is sufficiently small and \(m\in \mathbb {N}\) is sufficiently large, which follows from the definition of a polynomially bounded o-minimal structure.

The only problem here is to see that there exists a polynomial arc \(\varphi :(0,\,1) \longrightarrow \mathrm{{Int}}F^{-1}(E)\) such that \(\displaystyle \lim \limits _{t \rightarrow 0} \varphi (t) = \big (R,\sqrt{f(R)}\big )\). However, this immediately follows from the assumption that \(f|_{[R_1,\,R]}\) is definable in a certain polynomially bounded o-minimal structure.

Recall that pluripolar sets have Lebesgue measure zero.

Recall that \(K_{(\lambda )}:=\{w\in \mathbb {C}^N:\, \mathrm{dist}(w,\,K) \le \lambda \}\) and \( K_{\lambda }:=\{w\in \mathbb {C}^N:\, \mathrm{dist}(w,\,K) < \lambda \}. \)

Recall that \(\mathbb {D}_N:=\{z\in \mathbb {C}^N:\, |z|<1\}\).

References

Baran, M.: Markov inequality on sets with polynomial parametrization. Ann. Polon. Math. 60, 69–79 (1994)

Baran, M., Białas-Cież, L.: Hölder continuity of the Green function and Markov brothers’ inequality. Constr. Approx. 40, 121–140 (2014)

Baran, M., Pleśniak, W.: Markov’s exponent of compact sets in \(\mathbb{C}^n\). Proc. Am. Math. Soc. 123, 2785–2791 (1995)

Baran, M., Pleśniak, W.: Bernstein and van der Corput-Schaake type inequalities on semialgebraic curves. Studia Math. 125, 83–96 (1997)

Baran, M., Pleśniak, W.: Polynomial inequalities on algebraic sets. Studia Math. 141, 209–219 (2000)

Białas-Cież, L., Eggink, R.: Equivalence of the local Markov inequality and a Kolmogorov type inequality in the complex plane. Potential Anal. 38, 299–317 (2013)

Białas-Cież, L., Kosek, M.: How to construct totally disconnected Markov sets? Ann. Mat. Pura Appl. 190, 209–224 (2011)

Bierstone, E., Milman, P.: Semianalytic and subanalytic sets. Inst. Hautes Études Sci. Publ. Math. 67, 5–42 (1988)

Bochnak, J., Coste, M., Roy, M.-F.: Real Algebraic Geometry. Springer, Berlin (1998)

Borwein, P., Erdélyi, T.: Polynomials and Polynomial Inequalities. Springer, New York (1995)

Bos, L.P., Brudnyi, A., Levenberg, N.: On polynomial inequalities on exponential curves in \(\mathbb{C}^n\). Constr. Approx. 31, 139–147 (2010)

Bos, L.P., Brudnyi, A., Levenberg, N., Totik, V.: Tangential Markov inequalities on transcendental curves. Constr. Approx. 19, 339–354 (2003)

Bos, L., Levenberg, N., Milman, P.D., Taylor, B.A.: Tangential Markov inequalities characterize algebraic submanifolds of \(\mathbb{R}^N\). Indiana Univ. Math. J. 44, 115–138 (1995)

Bos, L., Levenberg, N., Milman, P.D., Taylor, B.A.: Tangential Markov inequalities on real algebraic varieties. Indiana Univ. Math. J. 47, 1257–1272 (1998)

Bos, L.P., Milman, P.D.: On Markov and Sobolev type inequalities on sets in \(\mathbb{R}^n\). In: Rassias, M. Th., Srivastava, H. M., Yanushauskas, A. (eds.) Topics in Polynomials of One and Several Variables and Their Applications, pp. 81–100, World Sci., Singapore (1993)

Bos, L.P., Milman, P.D.: Sobolev–Gagliardo–Nirenberg and Markov type inequalities on subanalytic domains. Geom. Funct. Anal. 5, 853–923 (1995)

Bos, L.P., Milman, P.D.: Tangential Markov inequalities on singular varieties. Indiana Univ. Math. J. 55, 65–73 (2006)

Brudnyi, A.: On local behavior of holomorphic functions along complex submanifolds of \(\mathbb{C}^N\). Invent. Math. 173, 315–363 (2008)

Brudnyi, A., Brudnyi, Y.: Methods of Geometric Analysis in Extension and Trace Problems, vol. 1, Monographs in Mathematics 102, Birkhäuser, Boston (2012)

Brudnyi, A., Brudnyi, Y.: Methods of Geometric Analysis in Extension and Trace Problems, vol. 2, Monographs in Mathematics 103, Birkhäuser, Boston (2012)

Burns, D., Levenberg, N., Ma’u, S., Révész, S.: Monge–Ampère measures for convex bodies and Bernstein–Markov type inequalities. Trans. Am. Math. Soc. 362, 6325–6340 (2010)

Coman, D., Poletsky, E.: Transcendence measures and algebraic growth of entire functions. Invent. Math. 170, 103–145 (2007)

Erdélyi, T., Kroó, A.: Markov-type inequalities on certain irrational arcs and domains. J. Approx. Theory 130, 111–122 (2004)

Fefferman, C., Narasimhan, R.: Bernstein’s inequality on algebraic curves. Ann. Inst. Fourier (Grenoble) 43, 1319–1348 (1993)

Fefferman, C., Narasimhan, R.: On the polynomial-like behaviour of certain algebraic functions. Ann. Inst. Fourier (Grenoble) 44, 1091–1179 (1994)

Fefferman, C., Narasimhan, R.: Bernstein’s inequality and the resolution of spaces of analytic functions. Duke Math. J. 81, 77–98 (1995)

Fefferman, C., Narasimhan, R.: A local Bernstein inequality on real algebraic varieties. Math. Z. 223, 673–692 (1996)

Frerick, L.: Extension operators for spaces of infinite differentiable Whitney jets. J. Reine Angew. Math. 602, 123–154 (2007)

Frerick, L., Jordá, E., Wengenroth, J.: Tame linear extension operators for smooth Whitney functions. J. Funct. Anal. 261, 591–603 (2011)

Goncharov, A.: A compact set without Markov’s property but with an extension operator for \(\cal C^{\infty }\)-functions. Studia Math. 119, 27–35 (1996)

Goncharov, A.: Weakly equilibrium Cantor-type sets. Potential Anal. 40, 143–161 (2014)

Hörmander, L.: An Introduction to Complex Analysis in Several Variables. North-Holland Mathematical Library. North-Holland, Amsterdam (1990)

Kallin, E.: Polynomial Convexity: The Three Spheres Problem, Conference on Complex Analysis Held in Minneapolis, 1964, pp. 301–304. Springer, Berlin (1965)

Klimek, M.: Pluripotential Theory. Oxford University Press, Oxford (1991)

Kroó, A., Révész, S.: On Bernstein and Markov-type inequalities for multivariate polynomials on convex bodies. J. Approx. Theory 99, 134–152 (1999)

Kroó, A., Szabados, J.: Markov–Bernstein type inequalities for multivariate polynomials on sets with cusps. J. Approx. Theory 102, 72–95 (2000)

Levenberg, N.: Approximation in \(\mathbb{C}^N\). Surv. Approx. Theory 2, 92–140 (2006)

Levenberg, N.: Ten lectures on weighted pluripotential theory. Dolomites Res. Notes Approx. 5, 1–59 (2012)

Levenberg, N., Poletsky, E.: Reverse Markov inequality. Ann. Acad. Sci. Fenn. Math. 27, 173–182 (2002)

Łojasiewicz, S.: Ensembles Semi-Analytiques. Lecture Notes, IHES, Bures-sur-Yvette, France (1965)

Łojasiewicz, S.: Introduction to Complex Analytic Geometry. Birkhäuser Verlag, Boston (1991)

Markov, A. A.: On a problem of D.I. Mendeleev. Zap. Im. Akad. Nauk. 62, 1–24 (1889)

Pawłucki, W., Pleśniak, W.: Markov’s inequality and \(\cal C^{\infty }\) functions on sets with polynomial cusps. Math. Ann. 275, 467–480 (1986)

Pierzchała, R.: UPC condition in polynomially bounded o-minimal structures. J. Approx. Theory 132, 25–33 (2005)

Pierzchała, R.: Siciak’s extremal function of non-UPC cusps. I. J. Math. Pures Appl. 94, 451–469 (2010)

Pierzchała, R.: Markov’s inequality in the o-minimal structure of convergent generalized power series. Adv. Geom. 12, 647–664 (2012)

Pleśniak, W.: \(L\)-regularity of subanalytic sets in \(\mathbb{R}^n\). Bull. Acad. Polon. Sci. 32, 647–651 (1984)

Pleśniak, W.: Markov’s inequality and the existence of an extension operator for \(\cal C^{\infty }\) functions. J. Approx. Theory 61, 106–117 (1990)

Pleśniak, W.: Siciak’s extremal function in complex and real analysis. Ann. Polon. Math. 80, 37–46 (2003)

Pleśniak, W.: Inégalité de Markov en plusieurs variables. Int. J. Math. Math. Sci. 14, 1–12 (2006)

Révész, S.: Turán type reverse Markov inequalities for compact convex sets. J. Approx. Theory 141, 162–173 (2006)

Rudin, W.: Function Theory in the Unit Ball of \(\mathbb{C}^n\). Springer, Berlin (2008)

Shabat, B.V.: Introduction to Complex Analysis. Part II. Functions of Several Variables, Translations of Mathematical Monographs. American Mathematical Society, Providence (1992)

Siciak, J.: On some extremal functions and their applications in the theory of analytic functions of several complex variables. Trans. Am. Math. Soc. 105, 322–357 (1962)

Siciak, J.: Extremal plurisubharmonic functions in \(\mathbb{C}^n\). Ann. Polon. Math. 39, 175–211 (1981)

Siciak, J.: Rapid polynomial approximation on compact sets in \(\mathbb{C}^N\). Univ. Iagel. Acta Math. 30, 145–154 (1993)

Totik, V.: Polynomial inverse images and polynomial inequalities. Acta Math. 187, 139–160 (2001)

Totik, V.: On Markoff inequality. Constr. Approx. 18, 427–441 (2002)

van den Dries, L.: Tame Topology and O-minimal Structures, LMS Lecture Note Series, vol. 248. Cambridge University Press, Cambridge (1998)

van den Dries, L., Miller, C.: Geometric categories and o-minimal structures. Duke Math. J. 84, 497–540 (1996)

Acknowledgments

I am very grateful to the referee for the comments and suggestions which improved the exposition.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.


Cite this article

Pierzchała, R. Markov’s inequality and polynomial mappings. Math. Ann. 366, 57–82 (2016). https://doi.org/10.1007/s00208-015-1294-9
