Abstract

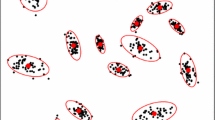

The expectation–maximization (EM) algorithm is a commonly used method for finding maximum likelihood estimates of the parameters of a mixture model via coordinate ascent. A serious pitfall of the algorithm is that, when the likelihood function is multimodal, it can become trapped at a local maximum. This problem often occurs when sub-optimal starting values are used to initialize the algorithm. Bayesian initialization averaging (BIA) is proposed as an ensemble method to generate high-quality starting values for the EM algorithm. Competing sets of trial starting values are combined as a weighted average, which is then used as the starting position for a full EM run. The method can also be extended to variational Bayes methods, a class of algorithms similar to EM that are based on an approximation of the model posterior. The BIA method is demonstrated on real continuous, categorical and network data sets, and the convergent log-likelihoods and associated clustering solutions are presented. These compare favorably with the output of competing initialization methods such as random starts, hierarchical clustering and deterministic annealing: the highest available maximum likelihood estimates are obtained in a higher percentage of cases, at reasonable computational cost. For the stochastic block model for network data, promising results are demonstrated even when the likelihood is unavailable. The implications of the different clustering solutions obtained at local maxima are also discussed.
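The abstract describes BIA only at a high level: trial starting values are combined as a weighted average, and that average seeds a full EM run. The sketch below illustrates the idea for a univariate Gaussian mixture in plain Python. It is not the authors' implementation, and several details are assumptions made for illustration: the trial starting values come from short EM runs initialized at randomly chosen data points, each run's weight is taken proportional to the exponential of its log-likelihood (by analogy with Bayesian model averaging weights under equal prior probabilities), and label switching is handled crudely by ordering components by their means, which is only adequate for one-dimensional data. The function names (`bia_start`, `run_em`, `sort_by_mean` and so on) are hypothetical.

```python
import math
import random


def log_normal(x, mu, var):
    """Log density of a univariate normal N(mu, var) evaluated at x."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mu) ** 2 / var)


def log_sum_exp(vals):
    """Numerically stable log(sum(exp(v) for v in vals))."""
    m = max(vals)
    return m + math.log(sum(math.exp(v - m) for v in vals))


def log_likelihood(data, params):
    pis, mus, vs = params
    return sum(log_sum_exp([math.log(p) + log_normal(x, m, v)
                            for p, m, v in zip(pis, mus, vs)]) for x in data)


def em_step(data, params, var_floor=1e-6):
    """One EM iteration for a univariate Gaussian mixture."""
    pis, mus, vs = params
    n = len(data)
    # E-step: posterior membership probabilities, computed in log space
    resp = []
    for x in data:
        logs = [math.log(p) + log_normal(x, m, v) for p, m, v in zip(pis, mus, vs)]
        lse = log_sum_exp(logs)
        resp.append([math.exp(lv - lse) for lv in logs])
    # M-step: update mixing proportions, means and variances
    new_pis, new_mus, new_vs = [], [], []
    for j in range(len(pis)):
        nj = max(sum(r[j] for r in resp), 1e-12)
        mu = sum(r[j] * x for r, x in zip(resp, data)) / nj
        var = sum(r[j] * (x - mu) ** 2 for r, x in zip(resp, data)) / nj
        new_pis.append(nj / n)
        new_mus.append(mu)
        new_vs.append(max(var, var_floor))  # floor guards against collapse
    return new_pis, new_mus, new_vs


def run_em(data, params, iters):
    for _ in range(iters):
        params = em_step(data, params)
    return params, log_likelihood(data, params)


def sort_by_mean(params):
    """Crude 1-D label alignment: reorder components by increasing mean."""
    order = sorted(range(len(params[1])), key=lambda j: params[1][j])
    return tuple([p[j] for j in order] for p in params)


def bia_start(data, k, n_starts=10, short_iters=5, seed=0):
    """Weighted average of short EM runs, used as a starting value (sketch)."""
    rng = random.Random(seed)
    mean = sum(data) / len(data)
    pooled_var = sum((x - mean) ** 2 for x in data) / len(data)
    runs = []
    for _ in range(n_starts):
        # Random start: k distinct data points as means, equal proportions
        init = ([1.0 / k] * k, rng.sample(data, k), [pooled_var] * k)
        params, ll = run_em(data, init, short_iters)
        runs.append((sort_by_mean(params), ll))
    # ASSUMPTION: weight of each run proportional to exp(log-likelihood)
    best = max(ll for _, ll in runs)
    w = [math.exp(ll - best) for _, ll in runs]
    total = sum(w)
    w = [wi / total for wi in w]
    # Average each parameter vector (proportions, means, variances) per component
    avg = tuple([sum(wi * run[0][p][j] for wi, run in zip(w, runs))
                 for j in range(k)] for p in range(3))
    return avg, w


# Usage on synthetic, well-separated data
rng = random.Random(1)
data = [rng.gauss(0, 1) for _ in range(100)] + [rng.gauss(5, 1) for _ in range(100)]
start, weights = bia_start(data, k=2)
params, ll = run_em(data, start, iters=200)
print("means:", [round(m, 2) for m in params[1]], "log-likelihood:", round(ll, 2))
```

Because the weights are exponentiated log-likelihoods, a trial run that is several log-likelihood units better than the others dominates the average. Tempering the weights, or basing them on a penalized criterion such as BIC, would spread influence across more trial runs; those variants are likewise assumptions here rather than details taken from the source.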


Notes

Using \(r = 0.94 \text{ or } 0.95\) regularly resulted in a saddle point with the value of the log-likelihood converging to \(-\,770\). These results have been omitted from Fig. 12.


Acknowledgements

The authors would like to acknowledge Dr. Jason Wyse, who provided many helpful insights as well as C++ code for the label-switching methodology employed in this paper.

About this article

Cite this article

O’Hagan, A., White, A. Improved model-based clustering performance using Bayesian initialization averaging. Comput Stat 34, 201–231 (2019). https://doi.org/10.1007/s00180-018-0855-2

DOI: https://doi.org/10.1007/s00180-018-0855-2