Abstract

Multiple testing refers to any instance that involves the simultaneous testing of more than one hypothesis. If decisions about the individual hypotheses are based on the unadjusted marginal p-values, then there is typically a large probability that some of the true null hypotheses will be rejected. Unfortunately, such a course of action is still common. In this article, we describe the problem of multiple testing more formally and discuss methods which account for the multiplicity issue. In particular, recent developments based on resampling result in an improved ability to reject false hypotheses compared to classical methods such as Bonferroni.

Multiple testing refers to any instance that involves the simultaneous testing of several hypotheses. This scenario is quite common in much empirical research in economics. Some examples include: (i) one fits a multiple regression model and wishes to decide which coefficients are different from zero; (ii) one compares several forecasting strategies to a benchmark and wishes to decide which strategies are outperforming the benchmark; (iii) one evaluates a program with respect to multiple outcomes and wishes to decide for which outcomes the program yields significant effects.

If one does not take the multiplicity of tests into account, then the probability that some of the true null hypotheses are rejected by chance alone may be unduly large. Take the case of S = 100 hypotheses being tested at the same time, all of them being true, with the size and level of each test exactly equal to α. For α = 0.05, one expects five true hypotheses to be rejected. Further, if all tests are mutually independent, then the probability that at least one true null hypothesis will be rejected is given by \( 1-{0.95}^{100}=0.994 \).
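The arithmetic in this example can be reproduced in a few lines. The snippet below is only an illustrative check; the variable names are chosen here and are not part of the article.

```python
# Worked version of the S = 100, alpha = 0.05 example (all null hypotheses true).
S = 100
alpha = 0.05

# Expected number of false rejections when each test has size exactly alpha.
expected_false_rejections = S * alpha

# Probability of at least one false rejection under mutual independence.
fwe_independent = 1 - (1 - alpha) ** S

print(expected_false_rejections)
print(round(fwe_independent, 3))
```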

Of course, there is no problem if one focuses on a particular hypothesis, and only one of them, a priori. The decision can still be based on the corresponding marginal p-value. The problem only arises if one searches the list of p-values for significant results a posteriori. Unfortunately, the latter case is much more common.

Notation

Suppose data X is generated from some unknown probability distribution P. In anticipation of asymptotic results, we may write X = X(n), where n typically refers to the sample size. A model assumes that P belongs to a certain family of probability distributions, though we make no rigid requirements for this family; it may be a parametric, semiparametric, or nonparametric model.

Consider the problem of simultaneously testing a hypothesis Hs against the alternative hypothesis H′s for s = 1, …, S. A multiple testing procedure (MTP) is a rule which makes some decision about each Hs. The term false discovery refers to the rejection of a true null hypothesis. Also, let I(P) denote the set of true null hypotheses, that is, s ∈ I(P) if and only if (iff) Hs is true.

We also assume that a test of the individual hypothesis Hs is based on a test statistic Tn,s, with large values indicating evidence against Hs. A marginal p-value for testing Hs is denoted by \( \widehat{p} \)n,s.

Familywise Error Rate

Accounting for the multiplicity of individual tests can be achieved by controlling an appropriate error rate. The traditional or classical familywise error rate (FWE) is the probability of one or more false discoveries:

\( {\mathrm{FWE}}_P=P\left\{\text{reject at least one } {H}_s:\ s\in I(P)\right\} \)

Control of the FWE means that, for a given significance level α,

\( {\mathrm{FWE}}_P\le \alpha \quad \text{for any } P. \) (1)

Control of the FWE allows one to be 1 − α confident that there are no false discoveries among the rejected hypotheses.

Note that ‘control’ of the FWE is equated with ‘finite-sample’ control: (1) is required to hold for any given sample size n. However, such a requirement can often only be achieved under strict parametric assumptions or for special permutation setups. Instead, we then settle for asymptotic control of the FWE:

\( \underset{n\to \infty }{\lim \sup }\ {\mathrm{FWE}}_P\le \alpha \quad \text{for any } P. \) (2)

Methods Based on Marginal p-values

MTPs falling in this category are derived from the marginal or individual p-values. They do not attempt to incorporate any information about the dependence structure between these p-values. There are two advantages to such methods. First, we might only have access to the list of p-values from a past study, but not to the underlying complete data set. Second, such methods can be very quickly implemented. On the other hand, as discussed later, such methods are generally sub-optimal in terms of power.

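To show that such methods control the desired error rate, we need a condition on the p-values corresponding to the true null hypotheses:

\( {H}_s \text{ true} \Rightarrow P\left\{{\widehat{p}}_{n,s}\le u\right\}\le u \quad \text{for any } u\in \left(0,1\right). \) (3)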

Condition (3) merely asserts that, when testing Hs alone, the test that rejects Hs when \( \widehat{p} \)n,s ≤ u has level u, that is, \( \widehat{p} \)n,s is a proper p-value.

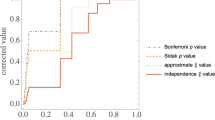

The classical method to control the FWE is the Bonferroni method, which rejects Hs iff \( \widehat{p} \)n,s ≤ α/S. More generally, the weighted Bonferroni method rejects Hs iff \( \widehat{p} \)n,s ≤ ws ⋅ α, where the constants ws, satisfying ws ≥ 0 and Σs ws = 1, reflect the ‘importance’ of the individual hypotheses; equal weights ws = 1/S recover the Bonferroni method.
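A minimal sketch of the (weighted) Bonferroni rule follows. It assumes the convention that the weights sum to one and that Hs is rejected when its p-value is at most ws ⋅ α, so that equal weights 1/S give the plain cut-off α/S. The function name `bonferroni` and its signature are hypothetical, chosen only for this illustration.

```python
from typing import List, Optional, Sequence


def bonferroni(p_values: Sequence[float], alpha: float = 0.05,
               weights: Optional[Sequence[float]] = None) -> List[bool]:
    """Return one reject/retain decision per hypothesis.

    Assumed convention: weights are non-negative and sum to one, and
    H_s is rejected iff p_s <= w_s * alpha. With weights=None, equal
    weights 1/S are used, which is the unweighted Bonferroni method.
    """
    S = len(p_values)
    if weights is None:
        weights = [1.0 / S] * S
    if len(weights) != S:
        raise ValueError("need exactly one weight per hypothesis")
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to one")
    return [p <= w * alpha for p, w in zip(p_values, weights)]


p = [0.001, 0.012, 0.02, 0.3]
plain = bonferroni(p)  # common cut-off 0.05 / 4 = 0.0125
weighted = bonferroni(p, weights=[0.1, 0.1, 0.7, 0.1])  # third hypothesis prioritized
print(plain, weighted)
```

Putting more weight on one hypothesis raises its cut-off at the expense of the others, which is why the third hypothesis is rejected only in the weighted call.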

An improvement is obtained by the method of Holm (1979). The marginal p-values are ordered from smallest to largest: \( \widehat{p} \)n,(1) ≤ \( \widehat{p} \)n,(2) ≤ … ≤ \( \widehat{p} \)n,(S) with their corresponding null hypotheses labeled accordingly: H(1), H(2), …, H(S). Then, H(s) is rejected iff \( \widehat{p} \)n,(j) ≤ α/(S − j + 1) for j = 1, …, s. In other words, the method starts with testing the most significant hypothesis by comparing its p-value to α/S, just as in the Bonferroni method. If the hypothesis is rejected, the method moves on to the second most significant hypothesis by comparing its p-value to α/(S − 1), and so on, until the procedure comes to a stop. Necessarily, all hypotheses rejected by Bonferroni will also be rejected by Holm, and potentially a few more will be too. So the method is at least as powerful as Bonferroni, yet it still controls the FWE under (3).
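The stepdown rule just described can be sketched as follows. This is an illustrative implementation under the description above, not code from the article; the function name `holm` is hypothetical.

```python
from typing import List, Sequence


def holm(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Holm stepdown decisions, returned in the original order of the hypotheses."""
    S = len(p_values)
    # Indices of the hypotheses, from most to least significant.
    order = sorted(range(S), key=lambda s: p_values[s])
    reject = [False] * S
    for j, s in enumerate(order, start=1):
        # The j-th smallest p-value is compared with alpha / (S - j + 1).
        if p_values[s] <= alpha / (S - j + 1):
            reject[s] = True
        else:
            break  # stop at the first non-rejection
    return reject


p = [0.02, 0.3, 0.001, 0.012]
decisions = holm(p)
print(decisions)
```

With these four p-values the Bonferroni cut-off 0.0125 would reject only the hypotheses with p-values 0.001 and 0.012, whereas the Holm method also rejects the one with p-value 0.02, because by the third step the cut-off has risen to 0.05/2.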

If it is known that the p-values are suitably positive dependent, then further improvements can be obtained with the use of Simes identity; see Sarkar (1998).

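So far, we have assumed ‘finite-sample validity’ of the null p-values expressed by (3). However, often p-values are derived by asymptotic approximations or resampling methods, only guaranteeing ‘asymptotic validity’ instead:

\( {H}_s \text{ true} \Rightarrow \underset{n\to \infty }{\lim \sup }\ P\left\{{\widehat{p}}_{n,s}\le u\right\}\le u \quad \text{for any } u\in \left(0,1\right). \) (4)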

Under this more realistic condition, the MTPs presented in this section only provide asymptotic control of the FWE in the sense of (2).

Single-step Versus Stepwise Methods

In single-step MTPs, individual test statistics are compared to their critical values simultaneously, and after this simultaneous ‘joint’ comparison, the multiple testing method stops. Often there is only one common critical value, but this need not be the case. More generally, the critical value for the sth test statistic may depend on s. An example is the weighted Bonferroni method discussed above.

Often single-step methods can be improved in terms of power via stepwise methods, while still maintaining control of the desired error rate. Stepdown methods start with a single-step method but then continue by possibly rejecting further hypotheses in subsequent steps. This is achieved by decreasing the critical values for the remaining hypotheses depending on the hypotheses already rejected in previous steps. As soon as no further hypotheses are rejected, the method stops. The Holm (1979) method discussed above is a stepdown method.

Stepdown methods therefore improve upon single-step methods by possibly rejecting ‘less significant’ hypotheses in subsequent steps. In contrast, there also exist stepup methods that start with the least significant hypotheses, having the smallest test statistics, and then ‘step up’ to further examine the remaining hypotheses having larger test statistics.

More general methods that control the FWE can be obtained by the closure method; see Hochberg and Tamhane (1987).

Resampling Methods Accounting for Dependence

Methods based on p-values often achieve (asymptotic) control of the FWE by assuming (i) a worst-case dependence structure or (ii) a ‘convenient’ dependence structure (such as mutual independence). This has two potential disadvantages. In case of (i), the method can be quite sub-optimal in terms of power if the true dependence structure is quite far away from the worst-case scenario. In case of (ii), if the convenient dependence structure does not hold, even asymptotic control may not result. As an example for case (i), consider the Bonferroni method. If the p-values were perfectly dependent, then the cut-off value could be changed from α/S to α. While perfect dependence is rare, this example serves to make a point. In the realistic scenario of ‘strong cross-dependence’, the cut-off value could be changed to something a lot larger than α/S while still maintaining control of the FWE. Hence, it is desirable to account for the underlying dependence structure.
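The perfect-dependence example can be checked with a small Monte Carlo experiment. The sketch below assumes that all S null hypotheses are true and that the null p-values are uniform on (0, 1); the function name, the number of simulations, and the seed are arbitrary choices made for illustration.

```python
import random


def simulated_fwe(cutoff: float, S: int, perfectly_dependent: bool,
                  n_sim: int = 20000, seed: int = 0) -> float:
    """Share of simulated data sets with at least one p-value <= cutoff."""
    rng = random.Random(seed)
    runs_with_false_discovery = 0
    for _ in range(n_sim):
        if perfectly_dependent:
            u = rng.random()
            p_values = [u] * S  # all S p-values coincide
        else:
            p_values = [rng.random() for _ in range(S)]  # mutually independent
        if min(p_values) <= cutoff:
            runs_with_false_discovery += 1
    return runs_with_false_discovery / n_sim


alpha, S = 0.05, 10
fwe_dep_bonferroni = simulated_fwe(alpha / S, S, perfectly_dependent=True)
fwe_dep_alpha = simulated_fwe(alpha, S, perfectly_dependent=True)
fwe_indep_bonferroni = simulated_fwe(alpha / S, S, perfectly_dependent=False)
print(fwe_dep_bonferroni, fwe_dep_alpha, fwe_indep_bonferroni)
```

Under perfect dependence the Bonferroni cut-off α/S yields an FWE near α/S, far below the nominal level, while the cut-off α yields an FWE near α. Under independence the Bonferroni cut-off is close to exhausting the level.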

Of course, this dependence structure is unknown and must be (implicitly) estimated from the available data. Consistent estimation, in general, requires that the sample size grow to infinity. Therefore, in this subsection, we will settle for asymptotic control of the FWE. In addition, we will specialize to making simultaneous inference on the elements of a parameter vector \( \theta ={\left({\theta}_1,\dots, {\theta}_S\right)}^T \). Assume the individual hypotheses are one-sided of the form:

\( {H}_s:\ {\theta}_s\le 0 \quad \text{versus} \quad {H}_s^{\prime }:\ {\theta}_s>0. \)

Modifications for two-sided hypotheses are straightforward.

The test statistics are of the form \( {T}_{n,s}={\widehat{\theta}}_{n,s}/{\widehat{\sigma}}_{n,s} \). Here, \( {\widehat{\theta}}_{n,s} \) is an estimator of θs computed from X(n). Further, \( {\widehat{\sigma}}_{n,s} \) is either a standard error for \( {\widehat{\theta}}_{n,s} \) or simply equal to \( 1/\sqrt{n} \) in case such a standard error is not available or is very difficult to obtain.

We start by discussing a single-step method. An idealized method would reject all Hs for which Tn,s ≥ d1, where d1 is the 1 − α quantile under P of the random variable \( {\max}_s\left({\widehat{\theta}}_{n,s}-{\theta}_s\right)/{\widehat{\sigma}}_{n,s} \). Naturally, the quantile d1 depends not only on the marginal distributions of the centered statistics \( \left({\widehat{\theta}}_{n,s}-{\theta}_s\right)/{\widehat{\sigma}}_{n,s} \) but, crucially, also on their dependence structure.

Since P is unknown, the idealized critical value d1 is not available. But it can be estimated consistently under weak regularity conditions as follows. Take \( \widehat{d} \)1 as the 1 − α quantile under \( \widehat{P} \)n of \( {\max}_s\left({\widehat{\theta}}_{n,s}^{\ast }-{\widehat{\theta}}_{n,s}\right)/{\widehat{\sigma}}_{n,s}^{\ast } \). Here, \( \widehat{P} \)n is an unrestricted estimate of P. Further, \( {\widehat{\theta}}_{n,s}^{\ast } \) and \( {\widehat{\sigma}}_{n,s}^{\ast } \) are the estimator of θs and its standard error (or simply \( 1/\sqrt{n} \)), respectively, computed from X(n),*, where X(n),* ~ \( \widehat{P} \)n. In other words, we use the bootstrap to estimate d1. The particular choice of \( \widehat{P} \)n depends on the situation. In particular, if the data are collected over time, a suitable time series bootstrap needs to be employed; see Davison and Hinkley (1997) and Lahiri (2003).

We have thus described a single-step MTP. However, a stepdown improvement is possible. In any given step j, we simply discard the hypotheses that have been rejected so far and apply the single-step MTP to the remaining universe of non-rejected hypotheses. The resulting critical value \( \widehat{d} \)j necessarily satisfies \( \widehat{d} \)j ≤ \( \widehat{d} \)j−1 so that new rejections may result; otherwise the method stops.
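The single-step method and its stepdown refinement can be sketched for a simple special case. The code below assumes i.i.d. rows of data, takes θs to be the mean of column s, uses the usual standard error of the mean, and resamples whole rows so that the cross-sectional dependence is preserved. These choices, the function names, and the simulated data are assumptions made for illustration; time series data would need a different resampling scheme.

```python
import math
import random
import statistics
from typing import List, Set, Tuple


def mean_and_se(column: List[float]) -> Tuple[float, float]:
    """Sample mean and its standard error."""
    return statistics.fmean(column), statistics.stdev(column) / math.sqrt(len(column))


def bootstrap_stepdown(data: List[List[float]], alpha: float = 0.05,
                       n_boot: int = 500, seed: int = 0) -> Set[int]:
    """Indices s for which H_s: theta_s <= 0 is rejected (theta_s = column mean)."""
    rng = random.Random(seed)
    n, S = len(data), len(data[0])
    columns = [[row[s] for row in data] for s in range(S)]
    estimates = [mean_and_se(col) for col in columns]
    t_stats = [theta / se for theta, se in estimates]

    # Centered, studentized bootstrap statistics; rows are resampled jointly.
    # (Assumes continuous data, so a bootstrap standard error of zero is not expected.)
    boot_stats = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        draw = []
        for s in range(S):
            theta_star, se_star = mean_and_se([columns[s][i] for i in idx])
            draw.append((theta_star - estimates[s][0]) / se_star)
        boot_stats.append(draw)

    # Position of the empirical 1 - alpha quantile among n_boot sorted values.
    k = max(0, min(n_boot - 1, math.ceil((1 - alpha) * n_boot) - 1))

    rejected: Set[int] = set()
    while len(rejected) < S:
        active = [s for s in range(S) if s not in rejected]
        # Critical value: quantile of the maximum over non-rejected hypotheses only.
        maxima = sorted(max(draw[s] for s in active) for draw in boot_stats)
        d_hat = maxima[k]
        newly_rejected = {s for s in active if t_stats[s] >= d_hat}
        if not newly_rejected:
            break  # no new rejections: the method stops
        rejected |= newly_rejected
    return rejected


# Simulated example: column 0 has mean 2, the other three columns have mean 0.
data_rng = random.Random(1)
data = [[2.0 + data_rng.gauss(0, 1)] + [data_rng.gauss(0, 1) for _ in range(3)]
        for _ in range(40)]
rejected = bootstrap_stepdown(data)
print(sorted(rejected))
```

Because the same bootstrap draws are reused in every step and the maximum is taken over a shrinking set of hypotheses, the critical value cannot increase from one step to the next.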

This bootstrap stepdown MTP provides asymptotic control of the FWE under remarkably weak regularity conditions. Mainly, it is assumed that \( \sqrt{n}\left(\widehat{\theta}-\theta \right) \) converges in distribution to a (multivariate) continuous limit distribution and that the bootstrap consistently estimates this limit distribution. In addition, if standard errors are employed for \( {\widehat{\sigma}}_{n,s} \), as opposed to simply using \( 1/\sqrt{n} \), it is assumed that they converge to the same non-zero limiting values in probability, both in the ‘real world’ and in the ‘bootstrap world’. Under even weaker regularity conditions, a subsampling approach could be used instead; see Romano and Wolf (2005). Furthermore, when a randomization setup applies, randomization methods can be used as an alternative; see Romano and Wolf (2005) again.

Related methods are developed in White (2000) and Hansen (2005). However, both works are restricted to single-step methods. In addition, White (2000) does not consider studentized test statistics. Stepwise bootstrap methods to control the FWE were already proposed in Westfall and Young (1993). An important difference in their approach is that they bootstrap under the joint null, that is, they use a restricted estimate of P where the constraints of all null hypotheses jointly hold. This approach requires the so-called subset pivotality condition and is less generally valid than the approaches discussed so far, which are based on an unrestricted estimate of P; for instance, see Example 4.1 of Romano and Wolf (2005).

Generalized Error Rates

So far, attention has been restricted to the FWE. Of course, this criterion is very strict; not even a single true hypothesis is allowed to be rejected. When S is very large, the corresponding MTP might result in low power, where we loosely define ‘power’ as the ability to reject false null hypotheses.

Let F denote the number of false rejections and let R denote the total number of rejections. The false discovery proportion (FDP) is defined as FDP = (F/R) ⋅ 1{R > 0}, where 1{⋅} denotes the indicator function. Instead of the FWE, we may consider the probability of the FDP exceeding a small, pre-specified proportion: P{FDP > γ}, for some γ ∈ [0,1). The special choice of γ = 0 simplifies to the traditional FWE. Another alternative to the FWE is the false discovery rate (FDR), defined to be the expected value of the FDP: \( {\mathrm{FDR}}_P={E}_P\left(\mathrm{FDP}\right) \).
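For a single realized set of rejections, the FDP can be computed directly when the set of true null hypotheses is known, as it is in a simulation study. The helper below is a hypothetical illustration; in applications I(P) is unknown, so the FDP itself is not observable.

```python
from typing import Set


def false_discovery_proportion(rejected: Set[int], true_nulls: Set[int]) -> float:
    """FDP = (F / R) * 1{R > 0} for one realized set of rejections."""
    R = len(rejected)               # total number of rejections
    F = len(rejected & true_nulls)  # number of false rejections
    return F / R if R > 0 else 0.0


rejected = {0, 1, 2, 5}
true_nulls = {2, 3, 4, 5}
fdp = false_discovery_proportion(rejected, true_nulls)
gamma = 0.1
print(fdp, fdp > gamma)
```

Averaging the FDP over many simulated data sets approximates the FDR, while the share of data sets with FDP > γ approximates P{FDP > γ}; with γ = 0 that share approximates the FWE.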

By allowing for a small (expected) fraction of false discoveries, one can generally gain a lot of power compared with FWE control, especially when S is large. For the discussion of MTPs to provide (asymptotic) control of the FDP and the FDR, the reader is referred to Romano et al. (2008a, b) and the references therein.

Bibliography

Davison, A.C., and D.V. Hinkley. 1997. Bootstrap Methods and their Application. Cambridge: Cambridge University Press.

Hansen, P.R. 2005. A test for superior predictive ability. Journal of Business & Economic Statistics 23: 365–80.

Hochberg, Y., and A. Tamhane. 1987. Multiple comparison procedures. New York: Wiley.

Holm, S. 1979. A simple sequentially rejective multiple test procedure. Scandinavian Journal of Statistics 6: 65–70.

Lahiri, S.N. 2003. Resampling methods for dependent data. New York: Springer.

Romano, J.P., and M. Wolf. 2005. Exact and approximate stepdown methods for multiple hypothesis testing. Journal of the American Statistical Association 100: 94–108.

Romano, J.P., A.M. Shaikh, and M. Wolf. 2008a. Control of the false discovery rate under dependence using the bootstrap and subsampling (with discussion). Test 17: 417–42.

Romano, J.P., A.M. Shaikh, and M. Wolf. 2008b. Formalized data snooping based on generalized error rates. Econometric Theory 24: 404–47.

Sarkar, S.K. 1998. Some probability inequalities for ordered MTP2 random variables: A proof of the Simes conjecture. Annals of Statistics 26: 494–504.

Westfall, P.H., and S.S. Young. 1993. Resampling-based multiple testing: Examples and methods for p-value adjustment. New York: Wiley.

White, H.L. 2000. A reality check for data snooping. Econometrica 68: 1097–126.

Copyright information

© 2018 Macmillan Publishers Ltd.

About this entry

Cite this entry

Romano, J.P., Shaikh, A.M., Wolf, M. (2018). Multiple Testing. In: The New Palgrave Dictionary of Economics. Palgrave Macmillan, London. https://doi.org/10.1057/978-1-349-95189-5_2914

DOI: https://doi.org/10.1057/978-1-349-95189-5_2914

Publisher Name: Palgrave Macmillan, London

Print ISBN: 978-1-349-95188-8

Online ISBN: 978-1-349-95189-5

eBook Packages: Economics and Finance; Reference Module Humanities and Social Sciences; Reference Module Business, Economics and Social Sciences