Abstract

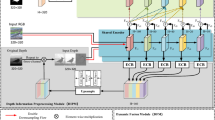

Feature matching is an active research topic in salient object detection, because the information involved is complex to define and an effective matching strategy is difficult to find. In this paper, we propose a Dual-Path Feature Match Network (DPFMN) to improve the efficiency of cross-modal and global-local matching. For cross-modal matching, we propose the Auxiliary-enhanced Module (AEM) to mine the auxiliary information. For global-local matching, we propose the Capsule Correlation Module (CCM), which stores information hierarchically in sub-capsules and thereby strengthens the correlation from global to local features. We also design the Guided Fusion Module (GFM) to integrate global-local features in a distributed manner and preserve information integrity. To improve the quality and detail of the saliency map, we introduce the Saliency Reconstruct Module (SRM), which reconstructs the image progressively to avoid the unstable reconstruction information caused by excessively large gradients. We demonstrate the effectiveness of the method through a fair comparison with 12 RGB-D and 7 RGB-T networks on 8 public datasets.

Acknowledgements

This research was supported by the Project of China West Normal University under Grant 17YC046.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Wen, X., Feng, Z., Lin, J., Xiao, X. (2023). DPFMN: Dual-Path Feature Match Network for RGB-D and RGB-T Salient Object Detection. In: Yongtian, W., Lifang, W. (eds) Image and Graphics Technologies and Applications. IGTA 2023. Communications in Computer and Information Science, vol 1910. Springer, Singapore. https://doi.org/10.1007/978-981-99-7549-5_13

DOI: https://doi.org/10.1007/978-981-99-7549-5_13

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-7548-8

Online ISBN: 978-981-99-7549-5

eBook Packages: Computer Science, Computer Science (R0)