Abstract

Two models, the Simple Ballot Model (SBM) and the Component Ballot Model (CBM), are proposed for resolving uncertainty in an election when two candidates gain the same number of votes under the approval rule. The SBM establishes a framework to support counting. To separate the two candidates, it is essential to extract additional information from the predominantly valid votes. The CBM uses probability matrices, probability vectors and permutation groups as components. On this basis, a stable voting mechanism that is invariant under permutations can be created to distinguish candidates. The results of the chapter establish a voting authority for resolving uncertainty between two candidates under the approval rule.

This work was supported by the Yunnan Advanced Overseas Scholar Project and the Yunnan National Science & Technology Foundation (2004F0009R).



1 Introduction

As a common practice in modern democratic societies, voting is a practical way to resolve a contest in which each candidate seeks to gain maximal support from the electors. Approval voting is a voting procedure in which electors can vote for as many candidates as they wish. Each approved candidate receives one vote, and the candidate with the most votes wins. Unlike more complicated ranking systems, approval voting is simple for electors to understand and use. This voting method is widely used today by various governments and organizations around the world (including by the United Nations to elect the secretary-general).

To sustain healthy economic and political progress in modern democratic societies, it is necessary to apply reliable and convenient voting methodologies and tools that ensure fairness, efficiency and transparency and that overcome paradoxes and difficulties in elections.

1.1 Brief Review of Voting Systems

Interesting voting-based models and practices can be found in many ancient stories, from Chinese literature to Roman and Greek history. Just before the French Revolution, de Borda [1] and de Condorcet [2] of the French Academy proposed the Borda rule and the Condorcet procedure. They wanted to use new voting methods to resolve the difficulties and unfair results produced by traditional plurality-based voting rules in elections for the Academy. In the 1920s, Hotelling [3] investigated the equilibrium of spatial economic competition between two firms in location and price. During World War II, von Neumann and Morgenstern [4] developed the theory of games to investigate complicated competitive behavior. This theoretical foundation has strongly influenced the development of analytical methodologies and tools, from the design of strategic policies to the prediction of practical election outcomes. Under a set of fairness conditions, Arrow [5] proved his famous Impossibility Theorem, which states that no single election procedure can fairly decide the outcome of an election involving three or more candidates. Various ideas, methods and technologies have since emerged to resolve voting difficulties [6,7,8,9].

1.2 Problems in the 2000 American Election

The most contentious question in the 2000 American presidential election (the 2K-election) was:

Should the machine-rejected ballots be manually recounted?

In practice, the 2K-election problem was finally settled by the votes of the nine justices of the US Supreme Court, in litigation arising from rulings of the Florida Supreme Court.

This indicates that current voting theories and vote-counting models all failed to serve as an authority for resolving the problem.

Although the 2K-election was held under the plurality rule rather than the approval rule, the approval rule cannot guarantee that similar uncertainty is avoided when a large number of electors are involved. It is therefore necessary to establish a relevant theoretical structure to avoid such problems in the future.

1.3 Structure of the Chapter

This chapter proposes two models that together construct a voting theory for resolving 2K-election-like problems and other paradoxes in voting practice. Only voting systems under the approval rule are considered.

In Sect. 2, a Simple Ballot Model (SBM) is proposed. Using the SBM, the separable and uncertain conditions for ballot papers are established. To illustrate practical strategies and the problems of current voting methodologies, four additional policies that are commonly used in practical voting processes (reducing error probability, merging other candidate votes, re-election, and court decision) are discussed.

In Sect. 2.8, the error margin of the 2K-election problem is analyzed. Voting practice is not an exact science, but the error margin of 0.233% in this event still could not be accepted as sufficiently accurate. Although almost 99.8% of the votes were valid and counted, there was still no way to determine the winner. Attention therefore shifted to the 0.2% of votes that had already been deemed invalid. This problem highlights that the voting system needs to be improved, and that a method of extracting additional information from valid votes, to separate the two candidates under uncertain conditions, is essential.

In Sect. 3, a new voting model, the Component Ballot Model (CBM), is defined and constructed to provide the essential structure for extracting more information from votes for comparison. Using multiple feature matrices (similar to contingency tables in classical statistics), probability feature vectors, permutation invariant groups and other mathematical tools, multiple pairs of feature index families are constructed for two candidates. This mechanism establishes a voting authority to make a decision for an election. After the mathematical definitions and constructions of the feature matrix, feature vector, probability feature vector and feature index, the most important results are summarized in the Two-D Separable Proposition and the Voting Authority Proposition.

Because only valid votes are taken into account, the election model has intrinsic stability and yields reliable results immediately after the election. Confusion, frustration and dissatisfaction such as those experienced in the 2K-election can thus be avoided.

In the light of this research, some further research directions are suggested in Sect. 4.

2 Simple Ballot Model

2.1 Key Words in Election

Key words used in an election event can be defined as follows.

- Election: a special event based on counting votes for a winner (normally, whoever attracts the most votes wins the election)
- Candidate: a person who has been nominated in an election
- Elector: a person who may legally vote in an election
- Ballot: a pre-designed form used to record the choices of an elector
- Vote: a ballot on which the choices of an elector are recorded
- Poll: the collection of votes from all legal electors
- Decision: a result on who wins the election.

The Simple Ballot Model simulates the simplest scenario of the whole voting procedure, based on all ballots collected directly from an election under the approval rule. In this scenario, each elector can create only one vote, on which any number of candidates may be selected from the list of candidates.

2.2 Definitions

For an ideal election involving n (≥2) candidates, let \( C = \left\{ {c_{1} ,c_{2} , \ldots ,c_{n} } \right\} \) be a set of the selected candidates. A ballot \( B = \left\langle {c_{1} ,c_{2} , \ldots ,c_{n} } \right\rangle \) is a pre-designed form containing the list of candidates for whom the electors may vote.

A vote is a record of a ballot B; let a vote be denoted by \( v \). It is valid if \( v = \left\langle v_{1}, v_{2}, \ldots, v_{n} \right\rangle \) with \( v_{i} \in \{0,1\} \) for all \( i \in [1,n] \) and \( \sum_{i=1}^{n} v_{i} > 0 \). Otherwise, if \( \exists i \in [1,n],\ v_{i} = x \notin \{0,1\} \), or if \( \sum_{i=1}^{n} v_{i} = 0 \) (a null selection), the vote \( v \) is invalid. Here \( v_{i} = 1 \) indicates that candidate \( c_{i} \) is selected, \( v_{i} = 0 \) that \( c_{i} \) is not selected, and \( v_{i} = x \) an invalid selection of \( c_{i} \). In general, a recorded vote takes values ranging from \( \left\langle 0,0, \ldots ,0 \right\rangle \) through \( \left\langle 1,1, \ldots ,1 \right\rangle \) to \( \left\langle x,x, \ldots ,x \right\rangle \).
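The validity rule can be checked mechanically. The following is a minimal Python sketch, assuming a vote is stored as a list whose entries are 0, 1, or the string `'x'` for an invalid mark; the encoding and the function name `is_valid_vote` are hypothetical illustrations, not part of the chapter.

```python
from typing import Sequence, Union

# Hypothetical encoding: 0 = not selected, 1 = selected, 'x' = invalid mark.
Mark = Union[int, str]


def is_valid_vote(vote: Sequence[Mark]) -> bool:
    """SBM rule: every v_i is in {0, 1} and at least one candidate is selected."""
    if any(v not in (0, 1) for v in vote):
        return False          # some v_i = x: invalid selection
    return sum(vote) > 0      # sum == 0 is a null selection, also invalid


# Usage with n = 6 candidates.
print(is_valid_vote([0, 0, 1, 1, 0, 0]))    # True: selects c_3 and c_4
print(is_valid_vote([0, 0, 'x', 0, 0, 0]))  # False: invalid mark on c_3
print(is_valid_vote([0, 0, 0, 0, 0, 0]))    # False: null selection
```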

Each elector can create only one vote, and there are N (≫ n) votes in total in the election.

A poll V is a vote collection in which all votes can be arranged as an array with N entries:

\( V = \left( v(1), v(2), \ldots, v(t), \ldots, v(N) \right),\quad t \in [1,N] \)

where \( v(t) \) denotes the vote of the tth elector. As each candidate has a number, let \( k \in v(t) \) denote that the tth elector selected the kth candidate on the vote.

For example, \( n = 6,N = 8, \) a poll V is: \( V = \;\left( {v(1), \ldots ,v(t), \ldots ,v(8)} \right),\quad t \in [1,8] \)

In this poll, \( \left\{ v(1), v(2), v(3), v(5), v(6), v(7) \right\} \) are valid votes (\( v_{3}(1) = v_{4}(1) = 1 \) indicates that the first vote selected the third and fourth candidates). In addition, \( v(4) \) contains an uncertain selection (\( v_{3}(4) = x \)) and \( v(8) \) is a null selection; both votes are invalid.

Let \( V_{0} \) denote the invalid poll in the election; it collects all invalid votes from the poll V. Let \( Vc \) denote the valid sub-poll in the election. The two sub-polls \( Vc \) and \( V_{0} \) partition the poll V, i.e.

\( V = Vc \cup V_{0},\quad Vc \cap V_{0} = \varnothing \)

Let \( V_{k} \) denote a sub-poll in the election. For any \( k \in [1,n],V_{k} \) collects all valid votes from the poll V for the kth candidate.

Let \( \tilde{V} \) denote a poll vector,

\( \tilde{V} = \left( V_{0}, V_{1}, \ldots, V_{k}, \ldots, V_{n} \right),\quad k \in [1,n] \)

An SBM is the collection of a ballot form, all votes, the poll and the poll components for an election.

Let \( N_{Vc} \) denote the number of votes in the valid poll \( Vc \), \( N_{Vc} = |Vc| \). Let \( N_{k} \) denote the number of votes in the valid poll \( V_{k} \), \( N_{k} = |V_{k} |,k \in [1,n] \) and \( N_{0} \) denote the number of votes in the invalid poll \( V_{0} \).

The total number of votes in an election, N, is equal to the number of valid votes \( N_{Vc} \) plus the number of invalid votes \( N_{0} \), i.e.

\( N = N_{Vc} + N_{0} \)

Let \( p_{Vc} = |Vc|/|V| = N_{Vc} /N \) denote a measure of the valid votes.

For any poll vector \( \tilde{V} \), let \( p_{k} = |V_{k}|/|V| = N_{k}/N \), \( 1 \le k \le n \), denote the measure of the kth candidate, and let \( p_{0} = |V_{0}|/|V| = N_{0}/N \) denote the measure of the invalid votes.
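The measures can be computed directly from a poll. The sketch below is illustrative only: the six-vote poll is hypothetical, votes use the same 0/1/`'x'` encoding as before, and exact fractions are used so the identities hold without rounding. For this poll \( p_{Vc} = 2/3 \), \( (p_1, p_2, p_3) = (1/2, 1/3, 1/3) \) and \( p_0 = 1/3 \); the candidate measures sum to 7/6, more than \( p_{Vc} \), because approval votes overlap.

```python
from fractions import Fraction


def is_valid_vote(vote):
    """SBM validity: all marks in {0, 1} and at least one selection."""
    return all(v in (0, 1) for v in vote) and sum(vote) > 0


def poll_measures(poll, n):
    """Return (p_Vc, [p_1, ..., p_n], p_0) for a poll given as a list of votes."""
    N = len(poll)
    valid = [v for v in poll if is_valid_vote(v)]          # the valid poll Vc
    n_vc = len(valid)                                       # N_Vc
    n_0 = N - n_vc                                          # N_0, since N = N_Vc + N_0
    n_k = [sum(v[k] for v in valid) for k in range(n)]      # N_k = |V_k|
    return Fraction(n_vc, N), [Fraction(c, N) for c in n_k], Fraction(n_0, N)


# Hypothetical poll with n = 3 candidates and N = 6 votes.
poll = [
    [1, 0, 0],
    [1, 1, 0],
    [0, 1, 1],
    [0, 'x', 1],   # invalid mark
    [0, 0, 0],     # null selection
    [1, 0, 1],
]
p_vc, p, p_0 = poll_measures(poll, 3)
print(p_vc, p, p_0)
```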

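Under the approval rule, there are many overlaps among different sub-polls. Considering two candidate sub-polls and their common part, if \( \exists k,l \in [1,n] \) with \( V_{k}, V_{l} \subseteq Vc \) and \( V_{k} \cap V_{l} \ne \varnothing \), then

\( |V_{k} \cup V_{l}| = |V_{k}| + |V_{l}| - |V_{k} \cap V_{l}| < |V_{k}| + |V_{l}| \)

In general, we have

\( N_{Vc} = \left| \bigcup_{k=1}^{n} V_{k} \right| \le \sum_{k=1}^{n} N_{k} \)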

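Let \( \widetilde{\Psi} \) denote a frequency vector,

\( \widetilde{\Psi} = \left( p_{0}, p_{1}, \ldots, p_{k}, \ldots, p_{n} \right),\quad k \in [1,n] \)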

2.3 One-Dimensional Feature Distribution

The frequency vector \( \widetilde{\Psi} \) corresponds to a density distribution, which satisfies the following equations.

Because there is no further partition among sub-polls, the vector \( \widetilde{\Psi} \) constitutes a one-dimensional frequency feature histogram.

Combining inequalities (2.6), (2.8) and (2.9) gives the following inequality.

If the sub-polls partition the poll, then \( \sum_{k=0}^{n} p_{k} = 1 \). In the worst case scenario, if there are no invalid votes and every valid vote selects all candidates, then

\( \sum_{k=1}^{n} p_{k} = n \)
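A short derivation from the definitions above bounds the sum of the candidate measures in every case. It is offered as a supporting check, not as a reconstruction of the chapter's own numbered inequality. Each valid vote selects at least one and at most n candidates, so:

```latex
\sum_{k=1}^{n} N_k = \sum_{v \in Vc} \sum_{k=1}^{n} v_k ,
\qquad 1 \le \sum_{k=1}^{n} v_k \le n \quad (v \in Vc)
\;\Longrightarrow\;
N_{Vc} \le \sum_{k=1}^{n} N_k \le n\,N_{Vc}
\;\Longrightarrow\;
p_{Vc} \le \sum_{k=1}^{n} p_k \le n\,p_{Vc} = n\,(1 - p_0).
```

The upper bound is attained exactly in the worst case just described: with \( p_0 = 0 \) and every valid vote selecting all n candidates, \( \sum_{k=1}^{n} p_k = n \).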

2.4 Separable Condition

When \( \exists i,j \in [1,n],p_{i} ,p_{j} > p_{0} \), a decision between the candidates i and j can be made if and only if

This is the separable condition.

2.5 Uncertain Condition

However, it is intrinsically difficult to decide between candidates i and j from their measures \( p_{i} \) and \( p_{j} \) alone if

This is the uncertain condition.

Under the uncertain condition, there are no simple solutions to distinguish signals clearly between \( p_{i} \) and \( p_{j} \) under the interference of \( p_{0} \).
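The decision step can be sketched as a small function. Because the separable condition itself is not reproduced above, this sketch ASSUMES it has the form \( |p_i - p_j| > p_0 \), with the uncertain condition as its complement \( |p_i - p_j| \le p_0 \). The intuition is that the invalid votes, had they all counted for the trailing candidate, could close a gap of at most \( p_0 \). The function name `decide` is hypothetical.

```python
from fractions import Fraction
from typing import Optional


def decide(p_i: Fraction, p_j: Fraction, p_0: Fraction) -> Optional[str]:
    """Return 'i' or 'j' if the pair is separable, or None if uncertain.

    ASSUMPTION: separable means |p_i - p_j| > p_0, i.e. even if every invalid
    vote had gone to the trailing candidate, the gap could not be closed.
    """
    if abs(p_i - p_j) > p_0:
        return 'i' if p_i > p_j else 'j'
    return None  # uncertain condition: |p_i - p_j| <= p_0


print(decide(Fraction(48, 100), Fraction(45, 100), Fraction(2, 100)))             # i
print(decide(Fraction(4885, 10000), Fraction(4884, 10000), Fraction(23, 10000)))  # None
```

Exact fractions are used so that borderline comparisons are not disturbed by floating-point rounding.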

2.6 Balanced Opposites

It is extremely hard to make any decision when both candidates gain the same number of votes in an election. However, for any equilibrium dynamic system involving two balanced opposites in competition, the most probable state is \( p_{i} = p_{j} \). In general, when more complicated feedback mechanisms are involved, balanced events occur more frequently [10, 11].

2.7 Four Additional Policies

To resolve conflicts in an election, four additional policies may be useful: reducing error probability \( \left( {p_{0} \to 0} \right) \), merging other candidate votes (\( V_{i} \cup V_{l} \to V_{i} \) or \( V_{j} \cup V_{l} \to V_{j} ;i,j,l \in [1,n] \)), re-election (new \( p_{i} ,p_{j} \)) and court decision.

The reducing error probability policy works well under certain conditions involving only a small number of electors. Using various control methods, e.g. an odd total number of seats in Parliament or additional votes granted to Parliament Leaders, the worst case scenario, in which both candidates hold equal votes and no decision can be made, can be eliminated. However, when an election involves a large number of electors, on the scale of the 2K-election, the voting system becomes a naturally complex dynamic system and there is no way to make the error margin negligible \( \left( p_{0} \to 0 \right) \).

The merging other votes policy works under simple conditions at a single location. Combining votes for candidates from multiple locations is more difficult under the approval rule than under plurality rules, since there are many overlaps among sub-polls. There is no guarantee that the policy works: in the best cases, old difficulties may be temporarily solved, but new similar uncertainties could immediately emerge.

From the viewpoint of a complex dynamic system, a re-election is the same as the original election. Therefore, the re-election policy cannot improve the separability of the two candidates.

If no other solution can be found because of time constraints or other issues, it is feasible to ask the courts to decide. The court decision policy results in efficient decision-making, but it breaks down the election procedure and may lose the fairness, transparency, self-determination and other advantages of the election process.

2.8 How Accurate Is Accurate?

It is well known that all measurements in physics and in all exact sciences are inaccurate to some degree. So what is sufficient for an election to be deemed accurate? Can we accept a 10% margin of error as accurate? What about 1%, or even 0.1%?

In real life, an error margin of 1% would be highly commendable and one of 0.1% would be considered highly accurate.

Although voting and polling are not meant to be an exact science, polls and other pre-election statistics had error margins of almost 5–10%. In the actual election, the margin of error was far smaller: in the disputed counties, e.g. Miami-Dade and Palm Beach, only 14,000 votes out of a total of six million were rejected, a margin of error of only 0.233%. Usually this would be deemed negligible, as almost 99.8% of the votes were valid. However, it was not enough to separate the two candidates: to do so, the number of rejected votes would have had to be reduced from 14,000 to 100. That would require an error margin of about 0.00167% (100 out of six million), which is highly improbable to achieve because of cost, time and other factors.
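The percentages in this paragraph follow from simple arithmetic on the figures quoted in the text (14,000 rejected votes, six million in total, and a target of 100 rejected votes). The sketch only reproduces that arithmetic; note that 100 out of six million is about 0.00167%.

```python
rejected = 14_000        # rejected votes quoted in the text
total = 6_000_000        # total votes quoted in the text
target_rejected = 100    # rejected votes that could have been tolerated

margin = rejected / total
print(f"error margin:    {margin:.4%}")        # 0.2333%
print(f"valid share:     {1 - margin:.2%}")    # 99.77%

required = target_rejected / total
print(f"required margin: {required:.6%}")      # 0.001667%
print(f"reduction factor: {rejected // target_rejected}")  # 140
```

In other words, the rejected-vote count would have had to shrink about 140-fold.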

2.9 Shifting Attentions from Invalid Votes to Valid Votes

Almost 99.8% of votes are valid. This indicates that, to determine the winner under the uncertain condition, it is necessary to extract additional information from the valid votes rather than to reduce the error margin by handling invalid votes. Because the total number of votes is far greater than the number of candidates, additional information can be extracted using cross-classification methods based on contingency-table-like techniques across multiple categories. Cross-classification is a powerful toolkit in modern statistics [12, 13, 14, 15].

Under additional categories such as location, age group and sex, valid votes can be categorized into two-dimensional classified feature distributions in the respective contingency tables. Such spatial or histogram-like feature distributions provide invaluable information for improving the separability of two uncertain candidates. To represent this idea, a new model is proposed in the next section.

3 Component Ballot Model

To overcome the intrinsic complexities and uncertain problems in approval voting practices, a new model—the Component Ballot Model—is proposed in this chapter to use multiple variables on a ballot for a better description and an easier comparison.

3.1 Definitions

To be consistent with the previous notation, similar symbols (ballot paper) are used. However, the contents of the ballot paper and other notations will be compounded into vector forms.

Let \( C = \left\{ {C_{1} ,C_{2} , \ldots ,C_{m} } \right\} \) be a set of the selected conditions. The i-th item contains \( n_{i} \) distinct values for selections, \( C_{i} = \left\langle {c_{1}^{i} , \ldots ,c_{j}^{i} , \ldots ,c_{{n_{i} }}^{i} } \right\rangle ,j \in [1,n_{i} ],i \in [1,m] \).

A ballot B (or a component ballot) is a vector composed of m items:

\( B = \left\langle C_{1}, C_{2}, \ldots, C_{m} \right\rangle \)

Component items in a ballot add information about the elector to the paper, such as sex, voting time, location, age group, minority status, living area, social security and employment situation.

For example, the first item contains 10 candidates, the second item presents 100,000 locations, the third item has 3 sex groups (male, female, neutral), the fourth item contains 150 age groups, and the fifth item indicates \( 10^{10} \) social security numbers. Under the above conditions, a ballot paper could be

A vote v (or a component vote) is a record of a component ballot B in which at least one value has been assigned to each of the m items:

where \( n_{i} \) is the upper limit of \( v^{i} \); \( v_{l}^{i} = 1 \) (or 0) means that the value \( c_{l}^{i} \) (a candidate, in the first item) is selected (or not selected), and \( v_{l}^{i} = x \) indicates that \( c_{l}^{i} \) has an invalid value.

More items are provided on each ballot to include more information, so further distinctions of their valid regions are necessary. If, for a vote v, the first item satisfies \( \sum_{l=1}^{n_{1}} v_{l}^{1} \ge 1 \) (at least one value selected) and every additional item satisfies \( v_{l}^{i} \in \{0,1\},\ l \in [1,n_{i}],\ i \in [2,m] \), with \( \sum_{l=1}^{n_{i}} v_{l}^{i} = 1 \) (one and only one value selected), then v is a valid vote. However, if \( \exists i \in [1,m],\ l \in [1,n_{i}] \) with \( v_{l}^{i} = x \), or if an additional item is assigned multiple values, i.e. \( \exists i \in [2,m] \) with \( v_{l}^{i} \in \{0,1\},\ l \in [1,n_{i}] \), and \( \sum_{l=1}^{n_{i}} v_{l}^{i} > 1 \), then v is an invalid vote.

Normally, the valid first item in a vote has a value region from \( \left\langle 0,0, \ldots ,0,1 \right\rangle \) to \( \left\langle 1,1, \ldots ,1 \right\rangle \): a total of \( 2^{n_{1}} - 1 \) combinations are valid, allowing one, two or more candidates to be selected. For each additional item, however, one and only one value is selected, from \( \left\langle 0,0, \ldots ,0,1 \right\rangle \) to \( \left\langle 1,0, \ldots ,0,0 \right\rangle \), so only \( n_{i} \) selections are allowed for item \( i \in [2,m] \).
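The component-vote rule and the count of \( 2^{n_1} - 1 \) valid candidate selections can both be checked in a few lines. This is a minimal sketch, assuming each item is stored as a list of 0/1/`'x'` marks; the function names and the item sizes in the usage lines are hypothetical.

```python
from itertools import product
from typing import Sequence, Union

Mark = Union[int, str]  # hypothetical encoding: 0, 1, or 'x' for an invalid mark


def is_valid_component_vote(vote: Sequence[Sequence[Mark]]) -> bool:
    """CBM rule: item 1 (candidates) selects at least one value, every
    additional item selects exactly one value, and no mark is invalid."""
    if any(mark not in (0, 1) for item in vote for mark in item):
        return False
    first, *additional = vote
    return sum(first) >= 1 and all(sum(item) == 1 for item in additional)


def count_valid_first_items(n1: int) -> int:
    """Brute-force count of valid candidate selections (should equal 2**n1 - 1)."""
    return sum(1 for bits in product((0, 1), repeat=n1) if sum(bits) >= 1)


# m = 3 items: 3 candidates, 2 sex groups, 3 age groups (hypothetical sizes).
print(is_valid_component_vote([[1, 0, 1], [0, 1], [0, 0, 1]]))    # True
print(is_valid_component_vote([[1, 0, 1], [1, 1], [0, 0, 1]]))    # False: two sexes
print(is_valid_component_vote([[0, 0, 0], [0, 1], [1, 0, 0]]))    # False: no candidate
print(count_valid_first_items(10), 2**10 - 1)                     # 1023 1023
```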

Additional information about electors may be accessed from existing election databases; there is no technical difficulty in merging it automatically into a compound vote using modern information technology.

A vote has enough room to record the various parameters of an elector, and a total of N electors take part in the voting.

A poll V is a vote collection in which all votes can be arranged as an array with N entries:

\( V = \left( v(1), v(2), \ldots, v(t), \ldots, v(N) \right),\quad t \in [1,N] \)

Considering each vote has m items, a poll V can be represented as a 2D m × N array.

3.2 Feature Partition

Let \( Vc \) denote the valid poll and \( V_{0} \) the invalid poll; \( Vc \) and \( V_{0} \) partition the poll V, i.e.

\( V = Vc \cup V_{0},\quad Vc \cap V_{0} = \varnothing \)

Let \( V^{i} \) denote a sub-poll in the election. For any \( i \in [1,m] \), \( V^{i} \) collects all valid votes of the poll V for the ith item.

Zero-D Feature Lemma

All \( \left\{ V^{i} \right\}_{i=1}^{m} \) sub-polls contain the same votes as the poll Vc:

\( V^{1} = V^{2} = \cdots = V^{m} = Vc \)

Proof

By Eqs. (3.5) and (3.6), a valid vote contains at least one valid value in each category, so projecting the valid votes onto any single item yields the same group of votes.□

Let \( V_{k}^{i} \) denote a sub-poll in the election. For any \( i \in [1,m] \), \( V_{k}^{i} \) collects all valid votes of the poll Vc whose ith item takes the kth value.

One-D Feature Lemma

All \( \left\{ V_{k}^{i} \right\}_{k \in [1,n_{i}]} \) sub-polls dissect the sub-poll \( V^{i} \):

\( V^{i} = \bigcup_{k=1}^{n_{i}} V_{k}^{i} \)

Proof

By Eqs. (3.5)–(3.8), each vote has at least one identified value in the ith item. Collecting, for each value, all votes that carry it gives the result.□

One-D Feature Corollary

If each vote contains only one value in the category item, then the sub-polls \( \left\{ V_{k}^{i} \right\}_{k \in [1,n_{i}]} \) partition the sub-poll \( V^{i} \):

\( V^{i} = \bigcup_{k=1}^{n_{i}} V_{k}^{i},\quad V_{k}^{i} \cap V_{l}^{i} = \varnothing \ (k \ne l) \)

Proof

By Eq. (3.9), each vote has exactly one identified value, so there is no overlap among the sub-polls for this category item.□

Note that, under the approval rule, only the candidate category fails to satisfy the One-D Feature Corollary; all additional categories satisfy it.

Different from the Zero-D feature lemma, the One-D feature corollary provides non-trivial partition of the votes into multiple sub polls.
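The difference between dissection and partition can be seen on a toy example. In this sketch (hypothetical data and names), each valid vote stores, per item, the set of selected value indices; item 0 is the approval-style candidate item and item 1 a single-valued location item. Both families of sub-polls cover the valid poll, but only the location sub-polls are pairwise disjoint.

```python
from collections import defaultdict

# Hypothetical valid votes; each item is the set of selected value indices.
# Item 0: candidates (approval, one or more); item 1: location (exactly one).
votes = [
    ({1}, {1}),
    ({1, 2}, {2}),
    ({2}, {1}),
    ({1, 3}, {3}),
    ({3}, {2}),
]


def one_d_subpolls(votes, item):
    """V_k^i: map each value k of `item` to the set of vote indices carrying it."""
    subpolls = defaultdict(set)
    for t, vote in enumerate(votes):
        for k in vote[item]:
            subpolls[k].add(t)
    return dict(subpolls)


def covers(subpolls, universe):
    """Dissection: the union of the sub-polls is the whole sub-poll V^i."""
    return set().union(*subpolls.values()) == universe


def partitions(subpolls, universe):
    """Partition: a cover whose sub-polls are pairwise disjoint."""
    return covers(subpolls, universe) and sum(map(len, subpolls.values())) == len(universe)


V_c = set(range(len(votes)))
candidates = one_d_subpolls(votes, 0)   # {1: {0, 1, 3}, 2: {1, 2}, 3: {3, 4}}
locations = one_d_subpolls(votes, 1)    # {1: {0, 2}, 2: {1, 4}, 3: {3}}
print(covers(candidates, V_c), partitions(candidates, V_c))  # True False
print(covers(locations, V_c), partitions(locations, V_c))    # True True
```

The disjointness test relies on the fact that a cover is a partition exactly when the sub-poll sizes add up to the size of the whole.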

Let \( V^{0} \) denote an invalid-poll in the election. It collects all invalid votes of the poll V.

Since votes in \( V^{0} \) are not distinguished any further, all votes in this poll correspond to discarded votes.

Let \( V_{k,l}^{i,j} \) denote a sub poll. It can be described as

For any \( i,j \in [1,m],k \in [1,n_{i} ],l \in \left[ {1,n_{j} } \right] \), collected votes of \( V_{k,l}^{i,j} \) are the same as the votes in \( V_{l,k}^{j,i} \).

If \( l \ne k \), then votes in \( V_{k,l}^{i,j} \) are different from the votes in \( V_{k,l}^{j,i} \).

Two-D Feature Lemma

All votes in \( \left\{ {V_{k,l}^{i,j} } \right\}_{{k \in \left[ {1,n_{i} } \right],l \in \left[ {1,n_{j} } \right]}} \) dissect either \( V_{k}^{i} \) or \( V_{l}^{j} \).

or

Proof

By Eq. (3.12) and the One-D Feature Lemma, each vote in the sub-polls also has identified values in the other item. Collecting the votes with each value in the relevant sub-polls gives the result.□

Two-D Feature Corollary

If a valid vote contains a single value in the selected category item, then all votes in \( \left\{ {V_{k,l}^{i,j} } \right\}_{{k \in \left[ {1,n_{i} } \right],l \in \left[ {1,n_{j} } \right]}} \) partition either \( V_{k}^{i} \) or \( V_{l}^{j} \). For j category,

Or for i category,

Proof

When each vote in the sub-polls has only a single value in relation to the selected category item, the sub-polls partition the selected poll.□

Under this construction, all votes in \( \left\{ V_{k,l}^{i,j} \right\}_{k \in [1,n_{i}],\, l \in [1,n_{j}]}^{i,j \in [1,m]} \) dissect the valid poll Vc. When the single-value condition is satisfied, the sub-polls partition the valid poll.

3.3 Feature Matrix Representation

For a given pair \( i,j \in [1,m] \), let k index the rows and l index the columns. Then the sub-polls \( \left\{ V_{k,l}^{i,j} \right\}_{k \in [1,n_{i}],\, l \in [1,n_{j}]} \) have a unique feature matrix representation.

3.3.1 Feature Matrix

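Let \( V^{i,j} \) denote a feature matrix,

\( V^{i,j} = \left[ V_{k,l}^{i,j} \right]_{k \in [1,n_{i}],\; l \in [1,n_{j}]} \)

that is, an \( n_{i} \times n_{j} \) matrix whose (k, l) element is the sub-poll \( V_{k,l}^{i,j} \).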

Using a statistical language, a feature matrix \( V^{i,j} \) may correspond to a contingency table based on cross-classified categorical data under two selected categories [13, 16, 17]. Each element of the matrix collects a sub-set of votes in a respective cross-categorical meaning.
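Counting the votes in each element turns a feature matrix into an ordinary contingency table. The defining equation of \( V_{k,l}^{i,j} \) is not reproduced in Sect. 3.2, so this sketch ASSUMES \( V_{k,l}^{i,j} = V_k^i \cap V_l^j \), which is consistent with the stated symmetry \( V_{k,l}^{i,j} = V_{l,k}^{j,i} \). The toy votes and function names are hypothetical.

```python
# Hypothetical valid votes: (selected candidates, location), 1-based value indices.
votes = [({1}, {1}), ({1, 2}, {2}), ({2}, {1}), ({1, 3}, {3}), ({3}, {2})]


def subpoll(votes, item, k):
    """V_k^i as a set of vote indices."""
    return {t for t, v in enumerate(votes) if k in v[item]}


def feature_matrix(votes, i, j, n_i, n_j):
    """Count matrix with entry (k, l) = |V_k^i ∩ V_l^j|.

    ASSUMPTION: V_{k,l}^{i,j} is taken to be V_k^i ∩ V_l^j.
    """
    return [[len(subpoll(votes, i, k) & subpoll(votes, j, l))
             for l in range(1, n_j + 1)]
            for k in range(1, n_i + 1)]


M = feature_matrix(votes, 0, 1, 3, 3)   # candidates x locations
for row in M:
    print(row)   # [1, 1, 1] then [1, 1, 0] then [0, 1, 1]
# Rows sum to |V_k^1| because location is single-valued (Two-D corollary);
# columns may sum to more than |V_l^2| because candidate selections overlap.
print([sum(r) for r in M], [len(subpoll(votes, 0, k)) for k in (1, 2, 3)])
```

Under the same assumption, swapping the roles of i and j yields the transpose, matching the symmetry noted in Sect. 3.2.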

3.3.2 Feature Matrix Set

For a given \( \left\{ V_{k,l}^{i,j} \right\}_{k \in [1,n_{i}],\, l \in [1,n_{j}]}^{i,j \in [1,m]} \), there are a total of \( 2\binom{m}{2} = m(m - 1) \) distinct feature matrices. These compose a matrix set VS,

For a given pair \( i \ne j,\ i,j \in [1,m] \) in the set, each \( \left\{ V_{k,l}^{i,j} \right\}_{k \in [1,n_{i}],\, l \in [1,n_{j}]} \) or \( \left\{ V_{k,l}^{j,i} \right\}_{k \in [1,n_{j}],\, l \in [1,n_{i}]} \) corresponds to a unique matrix or its transpose. However, for a given pair \( i = j \), the matrix is equal to its transpose. So there are a total of \( m \cdot m - m \) different matrix representations.

For a fixed item (e.g. \( i = 1 \)) as the first index, there are a total of \( m = \binom{m}{1} \) different matrices in the system recording the different relations among the \( \left\{ V_{k,l}^{i,j} \right\}_{k \in [1,n_{i}],\, l \in [1,n_{j}]}^{i,j \in [1,m]} \) sub-polls.

Let \( VSC(i) \) denote the matrix set with the first index fixed at i,

Selecting one category for both the row and column values, consider a given \( VSC(i) \) and \( V_{k,l}^{i,i} \in V^{i,i} \) in \( VSC(i) \). If a vote in the ith category contains only one valid value, then \( V_{k,l}^{i,i} \) is determined as follows:

\( V_{k,l}^{i,i} = \begin{cases} V_{k}^{i}, & k = l \\ \varnothing, & k \ne l \end{cases} \)

In this case, the matrix \( V^{i,i} \) is a diagonal matrix.

However, if a vote in the ith category contains multiple distinguishable values, then \( \left\{ V_{k,l}^{i,i} \right\} \) provides cross-classified sub-polls.

In this case, the matrix \( V^{i,i} \) is a symmetric matrix.
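Both cases can be reproduced with the toy data used for the feature matrix. Again ASSUMING \( V_{k,l}^{i,i} = V_k^i \cap V_l^i \), the sketch counts the votes in \( V^{i,i} \) for a single-valued item (location) and for the approval item (candidates); names and data are hypothetical.

```python
# Same hypothetical votes: (selected candidates, location).
votes = [({1}, {1}), ({1, 2}, {2}), ({2}, {1}), ({1, 3}, {3}), ({3}, {2})]


def subpoll(votes, item, k):
    """V_k^i as a set of vote indices."""
    return {t for t, v in enumerate(votes) if k in v[item]}


def self_matrix(votes, i, n_i):
    """V^{i,i} as counts: entry (k, l) = |V_k^i ∩ V_l^i| (assumed form)."""
    return [[len(subpoll(votes, i, k) & subpoll(votes, i, l))
             for l in range(1, n_i + 1)]
            for k in range(1, n_i + 1)]


def is_symmetric(a):
    return all(a[r][c] == a[c][r] for r in range(len(a)) for c in range(len(a)))


def is_diagonal(a):
    return all(a[r][c] == 0 for r in range(len(a)) for c in range(len(a)) if r != c)


loc = self_matrix(votes, 1, 3)    # single-valued item: [[2, 0, 0], [0, 2, 0], [0, 0, 1]]
cand = self_matrix(votes, 0, 3)   # approval item: [[3, 1, 1], [1, 2, 0], [1, 0, 2]]
print(is_diagonal(loc), is_symmetric(loc))    # True True
print(is_diagonal(cand), is_symmetric(cand))  # False True
```

The off-diagonal entries of the candidate matrix count votes that approve both candidates k and l.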

For a given \( VSC(i) \) and \( V_{k,l}^{i,j} \in V^{i,j} \) in \( VSC(i) \), the following equation holds.

3.3.3 Probability Feature Matrix

Let \( P^{i,j} \) denote the probability feature matrix corresponding to the matrix \( V^{i,j} \), and let \( \left\{ p_{k,l}^{i,j} \right\} \) denote its element set; for any \( p_{k,l}^{i,j} \in P^{i,j} \),

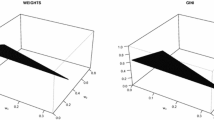

For example, with \( n_{1} = 6 \) and \( n_{2} = 4 \), a probability feature matrix may be as follows:

3.4 Probability Feature Vector

For any \( P^{i,j} \), only at most \( n_{i} \) row vectors in the matrix need to satisfy Eq. (3.22).

The Eq. (3.22) can be established from Eq. (3.13c), if the column items partition the sub-polls for the given row.

Because no restriction is imposed across the columns of the probability feature matrix \( P^{i,j} \), different categories can be selected flexibly to partition a given vote set \( \left\{ p_{k,l}^{i,j} \right\} \) into multiple distributions in larger selection spaces, meeting the requirements of complicated dynamic systems.

For a given \( P^{i,j} \), if the ith item is a categorical index of candidates, then any candidate \( k \in [1,n_{i} ] \) has a probability feature vector corresponding to its probability densities relevant to item j and denoted by \( \Psi _{k}^{i,j} \).
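A probability feature vector is then one row of the probability feature matrix. The element-wise definition of \( p_{k,l}^{i,j} \) is not reproduced above, so this sketch ASSUMES each row of counts is normalized by its total, which equals \( |V_k^i| \) when item j is single-valued and makes every row sum to 1. The counts (2 candidates by 4 locations) are hypothetical.

```python
from fractions import Fraction


def probability_feature_matrix(counts):
    """Row-normalise a feature (count) matrix: p[k][l] = N[k][l] / sum_l N[k][l].

    ASSUMPTION: each row is normalised by its total, which equals |V_k^i|
    when item j carries exactly one value per vote. Empty rows stay zero.
    """
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([Fraction(c, total) if total else Fraction(0) for c in row])
    return matrix


# Hypothetical counts: 2 candidates (rows) x 4 locations (columns).
counts = [[300, 100, 400, 200],
          [250, 250, 250, 250]]
P = probability_feature_matrix(counts)
psi_1, psi_2 = P                    # probability feature vectors Ψ_1, Ψ_2
print([str(p) for p in psi_1])      # ['3/10', '1/10', '2/5', '1/5']
print([sum(row) for row in P])      # each row sums to 1
```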

3.5 Differences Between Two Probability Vectors

Let \( \left\{ {V_{l}^{i} } \right\}_{{l \in [1,n_{i} ]}} \) sub-polls denote a vector \( \tilde{V}^{i} = \left( {V_{0} ,V_{1}^{i} , \ldots ,V_{l}^{i} , \ldots ,V_{{n_{i} }}^{i} } \right),\,l \in [1,n_{i} ] \), this vote vector corresponds to a probability vector

\( \widetilde{{\Psi^{i} }} = \left( {\tilde{p}^{0} ,\tilde{p}_{1}^{i} , \ldots ,\tilde{p}_{l}^{i} , \ldots ,\tilde{p}_{{n_{i} }}^{i} } \right),\,l \in [1,n_{i} ] \), let

and

Let \( \left\{ {V_{l}^{i} } \right\}_{{l \in [1,n_{i} ]}} \) sub-polls denote a vector \( V^{i} = \left( {V_{1}^{i} , \ldots ,V_{l}^{i} , \ldots ,V_{{n_{i} }}^{i} } \right),\,l \in [1,n_{i} ] \) and

A vector \( V^{i} \) is corresponding to a probability vector \( \Psi^{i} \),

If the ith item of a vote indicates an ordinal number of candidates in an election, a probability vector \( \widetilde{\Psi }^{i} \) is a special case of a linear spectral distribution.

For any lth candidate, if \( 1 \ge \tilde{p}_{l}^{i} \gg \tilde{p}^{0} \ge 0 \), then \( \tilde{p}_{l}^{i} \cong p_{l}^{i} \).

Considering the difference between the two probability measures,

Equation (3.28) indicates that the probability measure of invalid votes is small compared with the candidate measures, so there is no significant difference between the measures \( \tilde{p}_{l}^{i} \) and \( p_{l}^{i} \) of a candidate in the two probability vectors \( \widetilde{\Psi}^{i} \) and \( \Psi^{i} \), respectively.

If the lth and gth candidates gain similar numbers of votes, so that the uncertain condition is satisfied, then the difference between the probability measures \( p_{l}^{i} \) and \( p_{g}^{i} \) is also restricted by the uncertain condition.

Under the uncertain condition, the difference between these probability measures is

Equation (3.31) indicates that the new probability vector does not solve the uncertain problem. To overcome the difficulty, other techniques need to be employed.
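A numerical sketch shows why dropping the invalid votes cannot help. It ASSUMES that \( \tilde{p} \) divides candidate counts by \( |V| \) (invalid votes included) while \( p \) divides by \( |Vc| \). In that case \( p_l - p_g = (\tilde{p}_l - \tilde{p}_g)/(1 - \tilde{p}^0) \), so the gap is only rescaled by a factor close to 1. The counts are hypothetical, not the chapter's data.

```python
from fractions import Fraction

# Hypothetical counts (not the chapter's data): two near-tied candidates l and g.
N, N_0 = 6_000_000, 14_000
N_l, N_g = 2_910_000, 2_909_500
N_vc = N - N_0

# ASSUMPTION: tilde-p divides by |V| (invalid votes included), p divides by |Vc|.
tp_l, tp_g, tp_0 = Fraction(N_l, N), Fraction(N_g, N), Fraction(N_0, N)
p_l, p_g = Fraction(N_l, N_vc), Fraction(N_g, N_vc)

gap_tilde = tp_l - tp_g      # gap in the vector that still contains p^0
gap = p_l - p_g              # gap after the invalid votes are dropped

print(gap_tilde < tp_0)                          # True: uncertain condition holds
print(gap == gap_tilde / (1 - tp_0))             # True: dropping V_0 only rescales
print(f"rescaling factor: {float(gap / gap_tilde):.4f}")   # 1.0023
```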

3.6 Permutation Invariant Group

For any \( \Psi_{k}^{i,j} \), a permutation invariant group \( \Psi(i,j|k) \) can be constructed that collects all vectors obtained by permuting the elements of \( \Psi_{k}^{i,j} \).

3.6.1 Feature Index and Permutation Invariant Family

For a vector \( \Xi \in \Psi(i,j|k) \), if a numeric measure (or feature index) \( \lambda \) can be defined such that all vectors \( \Phi \in \Psi(i,j|k) \) have the same index, then the feature index \( \lambda \) is an invariant of \( \Psi(i,j|k) \).

For all \( \Phi \in \Psi(i,j|k) \),

\( \lambda(\Phi) = \lambda(\Xi) \)
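The group \( \Psi(i,j|k) \) and the invariance test can be made concrete. In standard terms, \( \Psi(i,j|k) \) as described here is the set of all rearrangements (the orbit under coordinate permutations) of one vector. The sketch enumerates it with `itertools.permutations` and checks whether a candidate index is constant on it. The vector is hypothetical, and exact fractions are used because floating-point sums can differ in the last bit across orderings.

```python
from fractions import Fraction
from itertools import permutations


def permutation_group(psi):
    """Ψ(i,j|k): every distinct rearrangement of the entries of psi."""
    return set(permutations(psi))


def is_invariant(index, psi):
    """True if `index` takes a single value on every vector in Ψ(i,j|k)."""
    return len({index(phi) for phi in permutation_group(psi)}) == 1


psi = tuple(Fraction(x, 10) for x in (3, 1, 4, 2))   # hypothetical Ψ_k^{i,j}
print(len(permutation_group(psi)))                    # 24 distinct vectors
print(is_invariant(max, psi))                         # True
print(is_invariant(lambda phi: sum(p * p for p in phi), psi))  # True
print(is_invariant(lambda phi: phi[0], psi))          # False: depends on order
```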

3.6.2 Polynomial Feature Index Family

For any probability vector \( \Psi = \left( {p_{1} , \ldots ,p_{j} , \ldots ,p_{m} } \right) \) with m items and \( \exists k \in [1,m],\,p_{k} > 0 \) a family of polynomial indexes \( \left\{ {\lambda_{n} } \right\} \) is defined by Eqs. (3.33)–(3.36).

…

For example, using the sample probability matrix \( P^{1,2} \) of Eq. (3.21), its polynomial indexes \( \left\{ {\lambda_{n} } \right\} \) are
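Eqs. (3.33)–(3.36) are not reproduced here. This sketch therefore ASSUMES the polynomial indexes are the power sums \( \lambda_n(\Psi) = \sum_j p_j^{\,n} \). That form matches the properties stated in Sect. 3.6.3: \( \lambda_0 \) gives the length of the vector (taking \( 0^0 = 1 \)), and \( \lambda_1 \) gives the normalized measure 1. The vector below is hypothetical, not the chapter's \( P^{1,2} \).

```python
from fractions import Fraction


def polynomial_index(psi, n):
    """Hypothetical λ_n(Ψ) = Σ_j p_j**n (power sums), using 0**0 == 1."""
    return sum(p ** n for p in psi)


psi = [Fraction(3, 10), Fraction(1, 10), Fraction(4, 10), Fraction(2, 10)]  # hypothetical
for n in range(4):
    print(n, polynomial_index(psi, n))   # 0 4, then 1 1, then 2 3/10, then 3 1/10
```

Every power sum is a symmetric function of the entries, so each \( \lambda_n \) is automatically a permutation invariant in the sense of Sect. 3.6.1.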

3.6.3 Entropy Feature Index

For a probability vector \( \Psi = \left( {p_{1} , \ldots ,p_{j} , \ldots ,p_{m} } \right) \) with m items, an entropy feature index \( \lambda_{E} \) is defined by Eq. (3.37).

In the polynomial index family \( \left\{ \lambda_{n}(\Psi) \right\}_{n \ge 0} \), \( \lambda_{0}(\Psi) \) gives the length of the vector and \( \lambda_{1}(\Psi) \) gives the normalized measure. In addition to the \( \left\{ \lambda_{n}(\Psi) \right\}_{n \ge 0} \) family, \( \lambda_{E}(\Psi) \) provides another type of index, related to the entropy measure. Using one of these indexes, it is feasible to distinguish two probability vectors belonging to different permutation groups.

For example, using the same probability matrix \( P^{1,2} \) of Eq. (3.21), its entropy index \( \lambda_{E} \) is
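Eq. (3.37) is not reproduced here either. The sketch ASSUMES the entropy feature index is the Shannon entropy \( \lambda_E(\Psi) = -\sum_j p_j \log p_j \), with \( 0 \log 0 \) taken as 0. Base 2 is an arbitrary choice, since any other base only rescales the index. The vectors are hypothetical.

```python
import math


def entropy_index(psi, base=2.0):
    """Hypothetical λ_E(Ψ) = -Σ p log p (Shannon entropy), with 0·log 0 = 0."""
    return -sum(p * math.log(p, base) for p in psi if p > 0)


psi_a = [0.3, 0.1, 0.4, 0.2]         # hypothetical feature vectors
psi_b = [0.25, 0.25, 0.25, 0.25]
print(entropy_index(psi_a))           # about 1.846 bits
print(entropy_index(psi_b))           # 2 bits (up to rounding), the maximum for 4 items
print(math.isclose(entropy_index(psi_a), entropy_index([0.1, 0.2, 0.3, 0.4])))  # True
```

Like the power sums, the entropy depends only on the multiset of entries and is therefore invariant on each permutation group.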

3.7 Two Probability Vectors and Their Feature Indexes

If two probability vectors \( \Psi_{k}^{i,j} \) and \( \Psi_{l}^{i,j} \) have distinct index families \( \left\{ \lambda_{n}(\Psi_{k}^{i,j}) \right\}_{n \ge 0} \) and \( \left\{ \lambda_{n}(\Psi_{l}^{i,j}) \right\}_{n \ge 0} \), i.e. \( \exists \tau,\ 1 < \tau \le \lambda_{0}(\Psi_{l}^{i,j}) \), with \( \lambda_{\tau}(\Psi_{k}^{i,j}) \ne \lambda_{\tau}(\Psi_{l}^{i,j}) \), then the two vectors belong to two different permutation groups.

Conversely, if two probability vectors \( \Psi_{k}^{i,j} \) and \( \Psi_{l}^{i,j} \) belong to different permutation groups, so that neither can be generated from the other, then \( \exists n,\ 1 < n \le \lambda_{0}(\Psi_{l}^{i,j}) \), with \( \lambda_{n}(\Psi_{k}^{i,j}) \ne \lambda_{n}(\Psi_{l}^{i,j}) \).

Under such conditions, two vectors with different index families lie in different permutation groups; equivalently, when neither vector can be generated from the other, at least one index distinguishes them.
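Under the power-sum assumption for \( \lambda_n \), both directions hold by Newton's identities. Over the reals, the first m power sums of m numbers determine their elementary symmetric polynomials and hence the multiset of entries. Comparing \( \lambda_1, \ldots, \lambda_{\lambda_0} \) is therefore equivalent to comparing sorted vectors. This sketch checks that equivalence on hypothetical vectors with exact fractions; floating-point data would need a tolerance.

```python
from fractions import Fraction


def power_sum(psi, n):
    """λ_n(Ψ) = Σ p**n, the polynomial index assumed earlier."""
    return sum(p ** n for p in psi)


def same_group_by_sorting(a, b):
    """Ground truth: a is a permutation of b iff their sorted entries agree."""
    return sorted(a) == sorted(b)


def same_group_by_indexes(a, b):
    """Compare λ_n for n = 1..λ_0. By Newton's identities the first m power sums
    fix the multiset of m entries, so this agrees with sorting. For probability
    vectors λ_1 = 1 on both sides, so only 1 < n <= λ_0 can differ."""
    if len(a) != len(b):
        return False
    return all(power_sum(a, n) == power_sum(b, n) for n in range(1, len(a) + 1))


F = Fraction
a = [F(3, 10), F(1, 10), F(4, 10), F(2, 10)]
b = [F(1, 10), F(2, 10), F(3, 10), F(4, 10)]   # a permutation of a
c = [F(1, 4)] * 4                                # a different group
for x, y in [(a, b), (a, c)]:
    print(same_group_by_sorting(x, y), same_group_by_indexes(x, y))
# True True
# False False
```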

3.8 CBM Construction

Let CBM denote a Component Ballot Model. A CBM for an election is the collection of the following components:

- a ballot form;
- vote sequences;
- a poll and its poll component matrix collection;
- probability matrix collections with normalized probability vectors;
- the selected index family.
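This collection can be pictured as a plain container. The sketch below is hypothetical. Its field names are assumptions for illustration, not the definition in Eq. (3.38), and so is the layout of the component counts, which holds one row of vote counts per candidate for each extra category. Its single method normalizes one row of a poll component matrix into a probability vector.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Sequence

@dataclass
class ComponentBallotModel:
    """Hypothetical container mirroring the CBM components listed above.

    ballot_form: candidate names followed by extra category names
    vote_sequences: one record per valid ballot (approved set, category values)
    component_counts: category -> candidate -> vote counts per category value
    index_family: name -> feature index applied to a probability vector
    """
    ballot_form: List[str]
    vote_sequences: List[dict] = field(default_factory=list)
    component_counts: Dict[str, Dict[str, List[int]]] = field(default_factory=dict)
    index_family: Dict[str, Callable[[Sequence[float]], float]] = field(default_factory=dict)

    def probability_vector(self, category: str, candidate: str) -> List[float]:
        # Normalize one row of a poll component matrix by its total votes.
        row = self.component_counts[category][candidate]
        total = sum(row)
        return [count / total for count in row]

# Hypothetical tie: A and B each have 50 votes, split differently by region.
cbm = ComponentBallotModel(
    ballot_form=["A", "B", "region"],
    component_counts={"region": {"A": [30, 10, 10], "B": [20, 20, 10]}},
    index_family={"lambda_2": lambda psi: sum(p ** 2 for p in psi)},
)
print(cbm.probability_vector("region", "A"))  # [0.6, 0.2, 0.2]
```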

Comparing the SBM (Eq. 2.3) with the CBM (Eq. 3.38) shows that the SBM is the simplest case of the CBM. The CBM provides more powerful properties for refined descriptions and comparisons in complicated voting applications.

Two-D Separable Proposition

Suppose two candidates gain the same number of votes, so that the result is uncertain. Other categorical information (e.g., location or age group) can always be used to re-partition each candidate's poll into sub-polls. If the two refined probability feature vectors belong to different permutation groups, then in most scenarios the uncertainty can be resolved with the polynomial feature index family or the entropy feature index.
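The following toy example illustrates the re-partitioning step. In these hypothetical ballots, candidates A and B each receive 50 approvals, but they draw them from regions in different proportions. The helper names and the region category are assumptions made for the example. So are the power-sum and Shannon forms of the two indexes.

```python
import math
from collections import Counter

def category_vector(ballots, candidate, category_values):
    """Share of a candidate's approvals falling in each category value."""
    counts = Counter(value for approved, value in ballots if candidate in approved)
    total = sum(counts[v] for v in category_values)
    return [counts[v] / total for v in category_values]

def lambda_2(psi):
    # Assumed power-sum polynomial index of order 2: sum of squared shares.
    return sum(p * p for p in psi)

def entropy(psi):
    # Assumed Shannon form of the entropy feature index (natural log).
    return -sum(p * math.log(p) for p in psi if p > 0)

# Hypothetical ballots: (set of approved candidates, voter's region).
ballots = (
    [({"A"}, "north")] * 30
    + [({"A"}, "south")] * 10
    + [({"A", "B"}, "east")] * 10
    + [({"B"}, "north")] * 20
    + [({"B"}, "south")] * 20
)
regions = ["north", "south", "east"]

psi_a = category_vector(ballots, "A", regions)
psi_b = category_vector(ballots, "B", regions)
print(psi_a, psi_b)                      # [0.6, 0.2, 0.2] [0.4, 0.4, 0.2]
print(lambda_2(psi_a), lambda_2(psi_b))  # about 0.44 vs 0.36
print(entropy(psi_a), entropy(psi_b))    # about 0.950 vs 1.055
```

Here \( \Psi_{A} = (0.6, 0.2, 0.2) \) and \( \Psi_{B} = (0.4, 0.4, 0.2) \) are not permutations of each other. Both indexes therefore separate the tied candidates.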

Proof

In most scenarios, cross-classified categorical data give the corresponding probability feature vectors significantly different density distributions. Because the categories have no simple correspondences among them, this mechanism allows the same strategy to be applied to the votes of each candidate. A party may be very strong on certain policies and relatively weak on others. These differences produce probability feature vectors that are more likely to lie in different permutation groups. Even in an election that is perfectly balanced from a global viewpoint, strongly distinguishable distributions exist in local regions. This is the main reason the two probability feature vectors yield a pair of significantly distinct feature indexes.□

In a complex dynamic system, equilibrium is the most probable state when the system is in dynamic balance. However, significant differences among local areas persist even under the most balanced equilibrium conditions. Exploiting these local differences is the most powerful aspect of the proposed model for resolving uncertainty in complex dynamic systems in general.

To avoid uncertainty, and the frustration caused by an undecided result, an election should pre-select an odd number \( m - 1 \ge 1 \) of additional categories other than the candidates. The main conclusion can then be stated as follows.

Voting Authority Proposition

If two candidates in an election under the approval rule are in uncertainty, then an odd number \( m - 1 \ge 1 \) of additional categories, agreed upon in advance, can be used. These categories create \( m - 1 \) pairs of feature indexes that decide who will be the winner.

Proof

By the Two-D Separable Proposition, each additional category can provide a pair of significantly distinct feature indexes that separates the two candidates, and all \( m - 1 \) selected pairs have this property. Each pair of indexes acts as one authority vote. Since \( m - 1 \) is odd, applying the majority rule to these votes always produces a decision.□
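The majority step can be sketched as follows. The rule deciding which candidate a pair of indexes "votes" for must be fixed in advance. The rule used here, where the larger index wins the category, is a hypothetical convention, and the function name `authority_decision` is illustrative. The function requires an odd number of categories and rejects pairs that fail to separate the candidates, which guarantees a strict majority.

```python
from collections import Counter

def authority_decision(index_pairs, candidates=("A", "B")):
    """Majority rule over authority votes, one per pre-selected category.

    index_pairs: one (index for candidates[0], index for candidates[1]) pair
    per category. "Larger index wins the category" is a hypothetical
    pre-agreed convention, not taken from the chapter.
    """
    if len(index_pairs) % 2 == 0:
        raise ValueError("use an odd number m - 1 of categories")
    votes = Counter()
    for first, second in index_pairs:
        if first == second:
            raise ValueError("this category does not separate the candidates")
        votes[candidates[0] if first > second else candidates[1]] += 1
    # With an odd number of votes split between two candidates, the top count is unique.
    winner, _ = votes.most_common(1)[0]
    return winner, dict(votes)

# Hypothetical index pairs for m - 1 = 3 categories.
pairs = [(0.44, 0.36), (0.30, 0.41), (0.52, 0.47)]
print(authority_decision(pairs))  # ('A', {'A': 2, 'B': 1})
```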

4 Conclusion and Further Work

The proposed Component Ballot Model employs multiple probability-feature matrix collections. It also places component categories other than the candidates on ballot papers, which overcomes confusion and frustration when two candidates are in uncertainty.

The voting authority applies invariant constructions to probability feature vectors and uses the distinguishing properties of the polynomial and entropy feature index families. This gives a stable indexing mechanism whose entire calculation is based on valid votes. The distinguishing and invariant properties of these index families provide reliable measurements for election outcomes.

The basic ideas, tools and technologies in this chapter originate from the author's research in the 1990s on advanced content-based information retrieval and image feature indexing [18,19,20].

The approval rule is only one of the rules used in practical voting systems. Readers may consult the author's other paper, which discusses related aspects of voting theory under plurality and majority rules [21]. It remains an open question whether the proposed model applies consistently to other voting systems, such as the Borda rule, proportional-representation systems and preference voting systems. Similar uncertainty exists in these voting mechanisms, so this is a natural extension of the current study.

To meet the needs of practical voting systems, testing frameworks should be established to recommend specific invariant properties from the proposed or new indexing families. Different voting systems will likely require different combinations of feature indexing schemes to achieve their optimal properties. More case studies linking theoretical models and practical applications should be conducted to solve complicated voting paradoxes and similar problems.

References

[1] J.C. de Borda, Mémoire sur les élections au scrutin (Histoire de l'Académie Royale des Sciences, Paris, 1781)

[2] M.-J. Condorcet, Essai sur l'application de l'analyse à la probabilité des décisions rendues à la pluralité des voix (Paris, 1785)

[3] H. Hotelling, Stability in competition. Econ. J. 39, 41–57 (1929)

[4] J. von Neumann, O. Morgenstern, Theory of Games and Economic Behavior (Princeton, 1944)

[5] K.J. Arrow, Social Choice and Individual Values. Cowles Commission Monographs, no. 12 (New York and London, 1951; 2nd edn., 1963)

[6] S.J. Brams, P.C. Fishburn, Approval voting. Am. Polit. Sci. Rev. 72(3), 831–847 (1978)

[7] S. Galam, Application of statistical physics to politics. Phys. A 274, 132–139 (1999)

[8] V. Merlin et al., On the probability that all decision rules select the same winner. J. Math. Econ. 33, 183–207 (2000)

[9] M. Regenwetter, Probabilistic preferences and topset voting. Math. Soc. Sci. 34, 91–105 (1997)

[10] C. Robinson, Dynamical Systems: Stability, Symbolic Dynamics, and Chaos, 2nd edn. (CRC Press, Boca Raton, 1999)

[11] M.J. Zechman, Dynamic Models of Voting Behavior and Spatial Models of Party Competition. Working Papers in Methodology, No. 10 (Institute for Research in Social Science, University of North Carolina at Chapel Hill, 1978)

[12] M.R. Anderberg, Cluster Analysis for Applications (Academic Press, 1973)

[13] B.S. Everitt, The Analysis of Contingency Tables, 2nd edn. (Chapman & Hall, London, 1992)

[14] J.L. Devore, Probability and Statistics for Engineering and the Sciences (Duxbury Press, 1995)

[15] N.L. Johnson, N. Balakrishnan, Advances in the Theory and Practice of Statistics (Wiley, Hoboken, 1997)

[16] W.J. Conover, Practical Nonparametric Statistics, 2nd edn. (Wiley, Hoboken, 1980)

[17] S.E. Fienberg, The Analysis of Cross-Classified Categorical Data, 2nd edn. (The MIT Press, Cambridge, 1994)

[18] Z.J. Zheng, Conjugate visualisation of global complex behaviour, in Complex Systems: From Local Interactions to Global Phenomena, ed. by R. Stocker, H. Jelinek, B. Durnota, T. Bossomaier (IOS Press, Amsterdam, 1996), pp. 57–67

[19] Z.J. Zheng, C.H.C. Leung, Visualising global behaviour of 1D cellular automata image sequence in 2D map. Phys. A 233(3–4), 785–800 (1996)

[20] Z.J. Zheng, C.H.C. Leung, Graph indexes of 2D-thinned images for rapid content-based image retrieval. J. Vis. Commun. Image Represent. 8(2), 121–134 (1997)

[21] J.Z.J. Zheng, Voting Theory for Two Parties, submitted to SIAM Review (2001)

Acknowledgements and Disclaimer

The author would like to express his gratitude to Dr. Wilson Wen for identifying the relationship between a feature matrix and a contingency table. Sincere thanks also go to Dr. Gangjun Liu, Dr. Grahame Smith, Ms. Wilna Macmillan and Dr. Wen Dai for their invaluable comments, suggestions, modifications and careful proofreading of the manuscript.

The constructions and conclusions in this chapter are solely the personal opinions of the author, as a scientist working from a complex-dynamic-systems viewpoint. The author takes full responsibility for the contents. No government agency or company bears any responsibility for this chapter.


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2019 The Author(s)

About this chapter

Cite this chapter

Zheng, J. (2019). Voting Theory for Two Parties Under Approval Rule. In: Zheng, J. (eds) Variant Construction from Theoretical Foundation to Applications. Springer, Singapore. https://doi.org/10.1007/978-981-13-2282-2_10


DOI: https://doi.org/10.1007/978-981-13-2282-2_10


Publisher Name: Springer, Singapore

Print ISBN: 978-981-13-2281-5

Online ISBN: 978-981-13-2282-2

eBook Packages: Engineering, Engineering (R0)