Abstract

Image quality has a great influence on the performance of non-contact biometric identification systems. To acquire high-quality palm vein images, an image quality assessment algorithm for palm vein images based on natural scene statistics features is presented. Moreover, the effect of uneven illumination caused by palm tilt is taken into account. Extensive experiments are presented, and the results of the proposed algorithm are in accordance with human subjective assessment.


1 Introduction

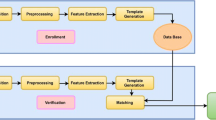

In recent years, biometric identification technology has received extensive attention in both research and application. Examples of biometric identifiers include fingerprint, face, iris, voice and palm print features. Identification systems based on these biometric features have been applied to mobile phones, personal computers and access control. Because these identifiers are exposed to the environment and are likely to be damaged or forged, the palm vein pattern, as an internal identifier, is more reliable than these external ones. Palm vein images are captured contactlessly with an infrared camera, so they tend to have poor contrast and nonuniform brightness. Since palm vein image quality has a considerable influence on recognition performance, quality assessment is the primary task of palm vein image preprocessing [1,2,3].

Many scholars and institutes have proposed hand vein image quality evaluation methods. Sheng et al. [4, 5] used the mean gray value and gray variance as quality indices for dorsal hand vein images. In [6, 7], four parameters were selected as references for image quality: gray variance, information entropy, cross points and effective area; these parameters were combined with weights to obtain an image quality score. Wang et al. [8] focused on how palm vein preprocessing and matching are affected as the palm moves from far to near. They used Tenengrad to evaluate image clarity and SSIM to measure the structural difference between images of the same palm as the distance changes. Wang et al. [9] proposed relative contrast and relative definition for dorsal hand vein image quality assessment.

The above studies have not considered the influence of uneven illumination on palm vein images. In this paper, statistics of Haar-like features are introduced, and the natural scene statistics (NSS) features presented in [10] are used to estimate image quality. In addition, the effect of uneven illumination caused by palm tilt is taken into account.

2 Quantitative Evaluation of Palm Vein

In a palm vein identification system, palm vein images are captured contactlessly with a near-infrared camera. These images usually have poor contrast and strong noise, and some of them are unevenly illuminated. Our goal is to quantify the quality of palm vein images so that low-quality images can be rejected.

2.1 Illumination Uniformity of ROI Image

First, the vein ROI image is partitioned into patches and the gray mean of each patch is calculated. Then, the difference between the maximum and the minimum patch gray mean is used to evaluate the uniformity of image brightness: the larger the difference, the more uneven the brightness of the ROI image.

We extract the ROI image F from the palm vein image and partition it into equal patches. Let the \(\mathrm{M} \times \mathrm{N}\) matrix F represent the gray ROI image, and let F(i, j) denote the gray value of the pixel in the \(i^{th}\) row and the \(j^{th}\) column. The mean gray value M[n] of each patch is

\[ M[n] = \frac{1}{k^2}\sum_{(i,j)\in F_n} F(i,j) \tag{1} \]

where k denotes the size of each patch, n is the patch index, and \(F_n\) stands for the patch of the ROI image whose index is n.

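The brightness uniformity score \(Q_m\), for which larger values indicate less uniform brightness, is defined as

\[ Q_m = \frac{M_{\max} - M_{\min}}{M(F)} \tag{2} \]

where \({{M_{\max }}}\) and \({{M_{\min }}}\) are the maximum and minimum patch gray means M[n] of the ROI image, and M(F) is the gray mean of the whole ROI image.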

Figure 1 shows the brightness nonuniformity of 30 palm vein images of the same person, with the palm tilted from 0\(^\circ \) to 60\(^\circ \). The ROI image is \(128\times 128\) pixels and is partitioned into 64 equal patches, so k equals 16 and n takes values in \(N = \{0, 1, 2, \dots, 63\}\). The vertical axis represents the magnitude of \(Q_m\) and the horizontal axis represents the tilt angle of the palm. Brightness nonuniformity increases gradually with the tilt angle, so \(Q_m\) can be used as an index of brightness uniformity.
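A minimal NumPy sketch of the brightness-uniformity index is given below. It assumes the ROI is a 2-D gray array whose sides are multiples of the patch size k, and it assumes the normalized form \(Q_m = (M_{\max} - M_{\min})/M(F)\); the function names `patch_means` and `brightness_nonuniformity` are hypothetical, not taken from the paper.

```python
import numpy as np


def patch_means(roi: np.ndarray, k: int = 16) -> np.ndarray:
    """Return the gray mean M[n] of every non-overlapping k x k patch.

    Patches are indexed row by row, so a 128 x 128 ROI with k = 16
    yields 64 values (n = 0, ..., 63).
    """
    rows, cols = roi.shape
    if rows % k or cols % k:
        raise ValueError("ROI size must be a multiple of the patch size k")
    # View the ROI as (rows/k, k, cols/k, k) blocks and average inside each block.
    blocks = roi.astype(np.float64).reshape(rows // k, k, cols // k, k)
    return blocks.mean(axis=(1, 3)).ravel()


def brightness_nonuniformity(roi: np.ndarray, k: int = 16) -> float:
    """Q_m under the ASSUMED form (M_max - M_min) / M(F); larger = less uniform."""
    m = patch_means(roi, k)
    return float((m.max() - m.min()) / roi.astype(np.float64).mean())
```

For the 128 × 128 ROI described above, the default k = 16 produces the 64 patch means; a perfectly uniform ROI gives a score of 0, and the score grows as one side of the ROI becomes brighter than the other, which is the behavior expected under palm tilt.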

2.2 Statistics of Pixel Intensities and Their Products

Mittal et al. [10] proposed the Natural Image Quality Evaluator (NIQE). They selected 90 high-quality natural images and learned distribution models of their pixel intensities and the pairwise products of those intensities; the parameters of these distribution models serve as quality-aware NSS features. These NSS features are then fitted with a multivariate Gaussian (MVG) model, called the natural MVG model. An MVG model is fitted in the same way to the image whose quality is to be analyzed, and the quality of this distorted image is expressed as the distance between the natural MVG model and the MVG model of the distorted image.
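The final NIQE step, comparing the two MVG models, can be sketched as follows. Following Mittal et al. [10], the distance pools the two covariance matrices: \(D = \sqrt{(\nu_1-\nu_2)^T\left(\frac{\varSigma_1+\varSigma_2}{2}\right)^{-1}(\nu_1-\nu_2)}\), where \(\nu_1, \varSigma_1\) and \(\nu_2, \varSigma_2\) are the mean vectors and covariance matrices of the natural and distorted-image models. Feature extraction and model fitting are not shown; the function name is hypothetical, and using a pseudo-inverse is a choice of this sketch so that a singular pooled covariance does not raise an error.

```python
import numpy as np


def niqe_distance(mu_nat, cov_nat, mu_test, cov_test) -> float:
    """Distance between the natural MVG model and the test-image MVG model."""
    diff = np.asarray(mu_nat, dtype=np.float64) - np.asarray(mu_test, dtype=np.float64)
    # Average the two covariance matrices, as in the NIQE distance.
    pooled = (np.asarray(cov_nat, dtype=np.float64) + np.asarray(cov_test, dtype=np.float64)) / 2.0
    # pinv tolerates a singular pooled covariance (a choice of this sketch).
    return float(np.sqrt(diff @ np.linalg.pinv(pooled) @ diff))
```

A larger distance means the test image's feature statistics are further from those of the pristine training set, i.e. lower predicted quality.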

NIQE needs neither distorted images nor human subjective scores to train its model, and it performs well on several natural image databases, so it is well suited to real-time identification systems. We incorporate NIQE into our quality assessment algorithm and choose 120 high-quality palm vein images from the PolyU database [11] to train the natural MVG model.

In our algorithm, the NIQE patch size is \(16\times 16\) pixels, and \(Q_n\) denotes the resulting NIQE score.
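In Mittal et al.'s formulation, the "pixel intensities and their products" that NIQE models are mean-subtracted contrast-normalized (MSCN) coefficients and products of horizontally, vertically and diagonally adjacent MSCN coefficients. The sketch below computes both, assuming the 7 × 7 Gaussian weighting window with σ = 7/6 and stabilizing constant C = 1 commonly used in NIQE/BRISQUE implementations; reflect padding at the borders and all function names are choices of this sketch. It does not reproduce NIQE's patch selection or its distribution fitting. `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gaussian_window(size: int = 7, sigma: float = 7 / 6) -> np.ndarray:
    """Normalized size x size Gaussian weighting window."""
    ax = np.arange(size) - (size - 1) / 2
    g = np.exp(-(ax ** 2) / (2 * sigma ** 2))
    w = np.outer(g, g)
    return w / w.sum()


def local_mean(img: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Gaussian-weighted local mean; reflect padding keeps the input shape."""
    r = w.shape[0] // 2
    padded = np.pad(img, r, mode="reflect")
    return np.einsum("ijkl,kl->ij", sliding_window_view(padded, w.shape), w)


def mscn(img: np.ndarray, c: float = 1.0) -> np.ndarray:
    """MSCN coefficients (I - mu) / (sigma + C)."""
    img = np.asarray(img, dtype=np.float64)
    w = gaussian_window()
    mu = local_mean(img, w)
    # abs() guards against tiny negative variances caused by rounding.
    sigma = np.sqrt(np.abs(local_mean(img * img, w) - mu * mu))
    return (img - mu) / (sigma + c)


def paired_products(m: np.ndarray) -> dict:
    """Products of neighbouring MSCN coefficients in four orientations."""
    return {
        "horizontal": m[:, :-1] * m[:, 1:],
        "vertical": m[:-1, :] * m[1:, :],
        "main_diagonal": m[:-1, :-1] * m[1:, 1:],
        "anti_diagonal": m[:-1, 1:] * m[1:, :-1],
    }
```

The distributions of `mscn(img)` and of each entry of `paired_products(...)` are what NIQE fits with parametric models to obtain its feature vector.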

2.3 Statistics of Haar-Like Features

Haar-like features are digital image features used in object recognition [12]. A simple rectangular Haar-like feature is defined as the difference between the sums of pixel intensities in adjacent rectangular regions; the rectangle can be at any position and scale within the original image. In a gray image, the palm vein pattern consists of dark lines with different orientations, so three-rectangle Haar-like features can be used to detect palm veins. Figure 2 illustrates the process flow for computing the quality index based on statistics of Haar-like features.

The four Haar-like feature windows we use are shown in Fig. 3. Since palm veins are about 6 pixels wide, windows of \(12\times 12\) pixels are chosen so that the black part of each window is about 6 pixels wide. The Haar-like feature is computed as

\[ h = \frac{S_w}{N_w} - \frac{S_b}{N_b} \tag{3} \]

where h is the Haar-like feature, \(S_w\) and \(S_b\) stand for the sums of pixel intensities in the white and black parts of the window respectively, and \(N_w\) and \(N_b\) denote the numbers of white and black pixels in the window respectively.
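A minimal sketch of this feature, evaluated at every window position (that is, with a one-pixel step), is shown below. It takes h to be the mean intensity of the white part minus the mean intensity of the black part; this sign convention is an assumption, and reversing it would only mirror the feature distribution. The exact four windows of Fig. 3 are not reproduced: the hypothetical helper `three_rect_window` builds only a vertical or horizontal 12 × 12 layout with a 6-pixel black stripe between two white stripes.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def three_rect_window(size: int = 12, black: int = 6, vertical: bool = True) -> np.ndarray:
    """Hypothetical three-rectangle mask: +1 marks white pixels, -1 black pixels."""
    mask = np.ones((size, size))
    start = (size - black) // 2
    if vertical:
        mask[:, start:start + black] = -1  # dark vertical stripe in the middle
    else:
        mask[start:start + black, :] = -1  # dark horizontal stripe in the middle
    return mask


def haar_responses(img: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """h = mean(white) - mean(black) at every position of a one-pixel-step sweep.

    An H x W image and an s x s mask give (H - s + 1) x (W - s + 1) responses.
    """
    white = mask > 0
    black = mask < 0
    # Per-pixel weights: 1/N_w on white pixels and -1/N_b on black pixels.
    weights = white / white.sum() - black / black.sum()
    windows = sliding_window_view(img.astype(np.float64), mask.shape)
    return np.einsum("ijkl,kl->ij", windows, weights)
```

Flattening the response map, e.g. `haar_responses(img, mask).ravel()`, gives one feature vector per window type, corresponding to H1 to H4 when four windows are used.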

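Then, the window is moved over the palm vein image with a step of one pixel, and the Haar-like feature is calculated for each subsection of the image. The four kinds of windows yield four vectors of Haar-like features, denoted H1, H2, H3 and H4; their distributions are shown in Fig. 4. As Fig. 4 shows, the distribution of the Haar-like features can be well modeled by a zero-mode asymmetric generalized Gaussian distribution (AGGD) [13]:

\[ f(x;\gamma,\beta_l,\beta_r)=\begin{cases} \dfrac{\gamma}{(\beta_l+\beta_r)\,\Gamma(1/\gamma)}\exp\!\left(-\left(\dfrac{-x}{\beta_l}\right)^{\gamma}\right), & x<0\\[2ex] \dfrac{\gamma}{(\beta_l+\beta_r)\,\Gamma(1/\gamma)}\exp\!\left(-\left(\dfrac{x}{\beta_r}\right)^{\gamma}\right), & x\ge 0 \end{cases} \tag{4} \]

where \(\gamma\) is the shape parameter, \(\beta_l\) and \(\beta_r\) are the left and right scale parameters, and \(\Gamma(\cdot)\) is the gamma function.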

The mean of the distribution is

\[ \eta = (\beta_r - \beta_l)\frac{\Gamma(2/\gamma)}{\Gamma(1/\gamma)} \tag{5} \]

Different palm vein images have different AGGD parameters, so the parameter vector \(x = ({\gamma _1},{\beta _{l1}},{\beta _{r1}},{\eta _1},\dots,{\gamma _4},{\beta _{l4}},{\beta _{r4}},{\eta _4})\), estimated from H1 to H4, is used as the NSS feature to quantify quality.
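The paper does not state how the AGGD parameters are estimated. The sketch below uses the moment-matching estimator commonly used for AGGD features in BRISQUE/NIQE-style methods: it matches the ratio \((E|x|)^2/E[x^2]\), corrected for left/right asymmetry, to the theoretical ratio \(\rho(\gamma)=\Gamma(2/\gamma)^2/(\Gamma(1/\gamma)\Gamma(3/\gamma))\) by grid search, then derives \(\beta_l\), \(\beta_r\) and \(\eta\). The grid range and step and the name `fit_aggd` are assumptions of this sketch, and the input must contain both negative and positive values.

```python
import math

import numpy as np


def fit_aggd(x: np.ndarray):
    """Moment-matching AGGD fit; returns (gamma, beta_l, beta_r, eta)."""
    x = np.asarray(x, dtype=np.float64).ravel()
    sigma_l = np.sqrt(np.mean(x[x < 0] ** 2))  # left-side RMS
    sigma_r = np.sqrt(np.mean(x[x > 0] ** 2))  # right-side RMS
    lr_ratio = sigma_l / sigma_r
    r_hat = np.mean(np.abs(x)) ** 2 / np.mean(x ** 2)
    # Remove the asymmetry factor so r_norm can be compared with rho(gamma).
    r_norm = r_hat * (lr_ratio ** 3 + 1) * (lr_ratio + 1) / (lr_ratio ** 2 + 1) ** 2

    # Grid search for the shape parameter gamma whose rho(gamma) best matches r_norm.
    shapes = np.arange(0.2, 10.0, 0.001)
    rho = np.array([
        math.gamma(2 / s) ** 2 / (math.gamma(1 / s) * math.gamma(3 / s)) for s in shapes
    ])
    gamma = float(shapes[np.argmin((rho - r_norm) ** 2)])

    # Convert the side RMS values into AGGD scale parameters.
    scale = math.sqrt(math.gamma(1 / gamma) / math.gamma(3 / gamma))
    beta_l = float(sigma_l * scale)
    beta_r = float(sigma_r * scale)
    # Mean of the fitted AGGD.
    eta = (beta_r - beta_l) * math.gamma(2 / gamma) / math.gamma(1 / gamma)
    return gamma, beta_l, beta_r, eta
```

Applying `fit_aggd` to each of the four Haar-feature vectors and concatenating the results yields the 16-dimensional feature vector x. As a sanity check, Gaussian samples should give a shape close to 2, equal scales close to \(\sqrt{2}\,\sigma\), and a mean close to 0.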

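We choose 120 high-quality palm vein images from the PolyU database [11] to learn the multivariate Gaussian model:

\[ f(x_1,\dots,x_k)=\frac{1}{(2\pi)^{k/2}\left|\varSigma\right|^{1/2}}\exp\!\left(-\frac{1}{2}(x-\mu)^T\varSigma^{-1}(x-\mu)\right) \tag{6} \]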

where \(({x_1},\dots,{x_k})\) are the NSS features computed in (4)-(5), and \(\mu \) and \(\varSigma \) denote the mean vector and covariance matrix of the MVG model, which are estimated with a standard maximum likelihood procedure. The distortion level of a palm vein image is measured by the Mahalanobis distance:

\[ Q_h=\sqrt{(y-\mu)^T\varSigma^{-1}(y-\mu)} \tag{7} \]

where y denotes the NSS feature vector of the distorted palm vein image. The greater the distance, the worse the image quality.
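Fitting the MVG model and scoring a test image can be sketched in a few lines. Maximum likelihood estimates of the mean and covariance are the sample mean and the covariance normalized by n (not n - 1), which `np.cov(..., bias=True)` provides. The function names are hypothetical, and using a pseudo-inverse (to tolerate a nearly singular covariance when features are correlated) is a choice of this sketch.

```python
import numpy as np


def fit_mvg(features: np.ndarray):
    """ML estimates of the MVG mean and covariance from an (n_images, k) array."""
    features = np.asarray(features, dtype=np.float64)
    mu = features.mean(axis=0)
    # bias=True divides by n, which is the maximum likelihood estimate.
    sigma = np.cov(features, rowvar=False, bias=True)
    return mu, sigma


def mahalanobis_score(y, mu, sigma) -> float:
    """Q_h = sqrt((y - mu)^T Sigma^{-1} (y - mu)); larger means worse quality."""
    d = np.asarray(y, dtype=np.float64) - mu
    return float(np.sqrt(d @ np.linalg.pinv(sigma) @ d))
```

In the setting described above, `features` would hold one row of AGGD parameters per high-quality training image, and `y` would be the same kind of feature vector computed from the image under test.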

2.4 Fusion of Quality Indexes

We combine \({Q_m}\), \({Q_n}\) and \({Q_h}\) to quantify the quality of a palm vein image; the overall score Q is defined as follows:

where \(\alpha \) and \(\beta \) are the weights of \({Q_n}\) and \({Q_h}\) respectively; we choose \(\alpha = \beta = 0.5\). Since larger values of \({Q_m}\), \({Q_n}\) and \({Q_h}\) all indicate lower image quality, a larger Q also indicates lower image quality.

3 Experimental Analysis

We use a vein image acquisition system to obtain palm vein images; the system consists of three modules: a near-infrared light source, a camera and a filter. Palm vein images of 21 colleagues were collected, with 60 images taken under different conditions for each person. The proposed algorithm has been tested on a variety of images, and only a few of the results are shown in this paper. Note that a higher score indicates lower image quality.

To study the effect of uneven illumination caused by palm tilt, a set of ROI images of the same person with different tilt angles is chosen. We compute each quality index and the overall score Q; the results are shown in Table 1.

Table 1 shows that the image quality indicated by Q and \(Q_m\) decreases as the tilt angle of the palm increases, while \(Q_n\) and \(Q_h\) fluctuate. These results indicate that \(Q_m\) reflects well the effect of palm-tilt-induced uneven illumination on image quality.

We choose a series of palm vein images of the same person with different qualities; these images have different kinds and levels of distortion. The results of testing our algorithm on these images are shown in Table 2.

Table 2 shows that the results of the proposed algorithm are in accordance with human subjective assessment. From the \(Q_m\) values of image 2 and image 3, we can see that the two images have similar illumination uniformity. However, image 2 contains more useful details than image 3, so the quality of image 2 is better than that of image 3. Image 3 and image 4 have similar \(Q_h\), which means they contain similar detail information, but they differ greatly in illumination uniformity, so the quality of image 3 is better than that of image 4.

As reported in [10], NIQE performs well on natural scene images. However, it is not suitable for palm vein images on its own. The method presented in this paper combines NIQE with two additional indices based on the characteristics of palm vein images, and therefore performs better on palm vein images.

4 Conclusion

In this paper, an image quality assessment algorithm for palm vein images based on natural scene statistics features is presented. In addition, the gray mean difference between different parts of a palm vein image is used to estimate brightness uniformity. Experimental results show that the image quality scores of the proposed algorithm are in accordance with human subjective assessment.

References

Chen, P., Lan, X., Jin, F., Shi, J.: Research of high quality palm vein image acquisition and ROI extraction. Chin. J. Sens. Actuators 28(7), 7 (2015)

Kauba, C., Uhl, A.: Robustness evaluation of hand vein recognition systems. In: 2015 International Conference of the Biometrics Special Interest Group (BIOSIG), pp. 1–5. IEEE (2015)

Nayar, G.R., Bhaskar, A., Satheesh, L., Kumar, P.S., Aneesh, R.P.: Personal authentication using partial palmprint and palmvein images with image quality measures. In: 2015 International Conference on Computing and Network Communications (CoCoNet), pp. 191–198. IEEE (2015)

Sheng, M.-Y., Zhao, Y., Zhang, D.-W., Zhuang, S.-L.: Quantitative assessment of hand vein image quality with double spatial indicators. In: 2011 International Conference on Multimedia Technology (ICMT), pp. 642–645. IEEE (2011)

Zhao, Y., Sheng, M.-Y.: Application and analysis on quantitative evaluation of hand vein image quality. In: 2011 International Conference on Multimedia Technology (ICMT), pp. 5749–5751. IEEE (2011)

Cui, J.J., Li, Q., Jia, X.: An image quality assessment algorithm for palm-dorsa vein based on multi-feature fusion. In: Advanced Materials Research, vol. 508, pp. 96–99. Trans Tech Publications (2012)

Jia, X., Cui, J., Xue, D., Pan, F.: Near infrared vein image acquisition system based on image quality assessment. In: 2011 International Conference on Electronics, Communications and Control (ICECC), pp. 922–925. IEEE (2011)

Wang, J., Yu, M., Qu, H., Li, B.: Analysis of palm vein image quality and recognition with different distance. In: 2013 Fourth International Conference on Digital Manufacturing and Automation (ICDMA), pp. 215–218. IEEE (2013)

Wang, Y.-D., Yang, C.-Y., Duan, Q.-Y.: Multiple indexes combination weighting for dorsal hand vein image quality assessment. In: 2015 International Conference on Machine Learning and Cybernetics (ICMLC), vol. 1, pp. 239–245. IEEE (2015)

Mittal, A., Soundararajan, R., Bovik, A.C.: Making a completely blind image quality analyzer. IEEE Signal Process. Lett. 20(3), 209–212 (2013)

Lasmar, N.-E., Stitou, Y., Berthoumieu, Y.: Multiscale skewed heavy tailed model for texture analysis. In: 2009 16th IEEE International Conference on Image Processing (ICIP), pp. 2281–2284. IEEE (2009)


Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Wang, C., Sun, X., Dong, W., Zhu, Z., Zheng, S., Zeng, X. (2017). Quality Assessment of Palm Vein Image Using Natural Scene Statistics. In: Yang, J., et al. Computer Vision. CCCV 2017. Communications in Computer and Information Science, vol 772. Springer, Singapore. https://doi.org/10.1007/978-981-10-7302-1_21


DOI: https://doi.org/10.1007/978-981-10-7302-1_21


Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7301-4

Online ISBN: 978-981-10-7302-1

eBook Packages: Computer Science, Computer Science (R0)