Abstract

Imbalanced performance usually happens to those classifiers (including deep neural networks) trained on imbalanced training data. These classifiers are more likely to make mistakes on minority class instances than on those majority class ones. Existing explanations attribute the imbalanced performance to the imbalanced training data. In this paper, using deep neural networks, we strive for deeper insights into the imbalanced performance. We find that imbalanced data is a neither sufficient nor necessary condition for imbalanced performance in deep neural networks, and another important factor for imbalanced performance is the distance between the majority class instances and the decision boundary. Based on our observations, we propose a new under-sampling method (named Moderate Negative Mining) which is easy to implement, state-of-the-art in performance and suitable for deep neural networks, to solve the imbalanced classification problem. Various experiments validate our insights and demonstrate the superiority of the proposed under-sampling method.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

In the binary classification problem, it is often the case that one class is much more over-represented than the other one. The more over-presented class is usually referred to as the negative or majority class, and the other one as the positive or minority class (we use these words interchangeably in this paper). Most standard algorithms (such as k-NN, SVM, decision tree and deep neural network) are designed to be trained with balanced data. If given imbalanced training data, they will have difficulty in learning proper decision rules and produce imbalanced performance on test data. In other words, they are more likely to make mistakes on minority class instances than on majority class ones.

Most existing explanations attribute the imbalanced performance phenomenon to the imbalanced training data. Therefore, many approaches which aim to balance the data distribution are proposed. For example, random over-sampling method creates positives by randomly replicating the minority class instances. Although the data distribution can be made balanced in this way, it doesn’t create any new information and easily leads to over-fitted classifiers. SMOTE [1] overcomes the weakness by synthesizing new non-replicated positives. However, it is still believed to be insufficient to solve the imbalanced data problem. Another direction to balance the data distribution is under-sampling the over-presented negatives. For example, random under-sampling, like random over-sampling, selects the negatives randomly to construct the balanced positives and negatives. There are also several other more advanced under-sampling methods, such as One-Sided Selection (OSS) [2] and Nearest Neighbor Cleaning Rule [3]. These methods try to remove those redundant, borderline or mislabeled samples and strive for more reliable ones. However, they are suitable to handle those low-dimensional, highly structured and size limited training data which is often in vector form. For those which are high-dimensional, unstructured and in large quantity, they behave unsatisfactorily.

In this paper, in addition to the imbalanced training data, we explore deeper and more fundamental explanations to imbalanced performance in deep neural networks. We choose deep neural network to study the imbalance problem for two reasons. Firstly, deep neural network has achieved great success in various problems in the last several years, such as image classification [4], object detection [5] and machine translation [6]. It has been the most popular model in the machine learning field. Secondly, usually used as an end-to-end model, it can handle raw input data directly without complex data preprocessing. Thus it is suitable to handle those high-dimensional and unstructured raw training data. Using deep neural networks, we find that imbalanced data is a neither sufficient nor necessary condition for the imbalanced performance. By saying that, we mean:

-

balanced training data can also lead to imbalanced performance on test data (shown in Fig. 1b and c), and

-

classifiers trained on imbalanced training data do not necessarily produce imbalanced performance on test data (shown in Fig. 1d).

Illustration of bias of a linear separator induced over training data in a one-dimensional example. Triangles and circles denote positive and negative samples, respectively. The corresponding latent distributions are shown. The dotted line (\(\hat{w}\)) is the hypothesis induced over training data, while the solid line (\(w^*\)) depicts the optimal separator of the underlying distributions.

Additionally, we find another important factor for imbalanced performance is the distance between the negative samples and the positives (or the latent decision hyperplane). Specifically, sampling those which are distant from the positives will push the learnt decision hyperplane towards the space of the negatives (shown in Fig. 1b). On the other hand, sampling those which are near the positives or aggressive ones (which tend to be mislabeled and invade the space of the positives) will push the learnt decision hyperplane towards the space of the positives (shown in Fig. 1c). Neither of these two sampling ways can balance the performance on the two class test data. Based on these observations, we propose to sample the negatives which are moderately distant from the positives (shown in Fig. 1e). These negatives are less likely to be mislabeled and more reliable than the nearest ones, meanwhile they are more informative than those which are distant from the positives. We call the proposed under-sampling method Moderate Negative Mining (MNM). Experiments conducted on various datasets validate the proposed approach.

2 Related Work

There is large literature on solving imbalanced data problem. Existing approaches can be roughly categorized into two groups: the internal approaches [7, 8] and the external approaches [1,2,3, 9]. The internal approaches modify existing algorithms or create new ones to handle the imbalanced classification problem. For example, cost-sensitive learning algorithms impose heavier penalty on misclassifying the minority class instances than that on misclassifying the majority class ones [8]. Although internal approaches are effective in certain cases, they have the disadvantage of being algorithm specific. That is to say, it might be quite difficult, or sometimes impossible, to transport the modification proposed for one classifier to the others [9]. The external approaches, on the other hand, leave those learning algorithms unchanged and alter the data distribution to solve the imbalanced classification problem. They are also known as data re-sampling methods and can be divided into two groups: over-sampling and under-sampling methods. Over-sampling methods increase the quantity of minority class instances by replicating them or synthesizing new ones to balance the data distribution [1]. They are criticized for the over-fitting problem [10]. Under-sampling methods, in an another direction, under-sample the majority class instances to balance the imbalanced distribution. For example, One-Sided Selection (OSS) [2] adopts the Tomek links [11] to eliminate borderline and noisy examples. Nearest Neighbor Cleaning Rule [3] decreases the size of the negatives by cleaning the unreliable negatives. Both these approaches are based on simple nearest neighbor assumption, thus they have limited performance when used for deep neural networks.

Albeit the large literature on imbalanced problem, few works [12,13,14] study the imbalanced classification problem in deep neural networks. In [12], over-sampling and under-sampling are combined by complementary neural network and SMOTE algorithm to solve the imbalanced classification problem. The effects of sampling and threshold-moving in training cost-sensitive neural networks are studied in [13]. It is demonstrated in [14] that more discriminative deep representations can be learned by enforcing a deep network to maintain both inter-cluster and inter-class margins. In this paper, we explore more insights into learning from imbalanced data by deep neural networks and propose a new under-sampling method to solve the imbalanced classification problem. We believe our approach is a good complement to existing approaches for solving the imbalanced classification problem in deep neural networks. The proposed method can be categorized as an external approach.

3 Imbalanced Data and Imbalanced Performance

In this section, we empirically validate our first observation: imbalanced data is a neither sufficient nor necessary condition for imbalanced performance in deep neural networks. The validation process consists of two stages. In the first stage, we validate that balanced training data can also lead to imbalanced performance. In the second stage, we validate that imbalanced data does not necessarily lead to imbalanced performance. The experimental settings of these two processes are largely kept the same, so we first briefly introduce them as follows.

We use the publicly available CIFAR-10 [15] dataset to construct the imbalanced datasets. CIFAR-10 has 10 classes. To construct imbalanced datasets for binary classification problem, we treat one of them as the positive class and the others as the negative one. In this way, we construct 10 imbalanced datasets (CIFAR10-AIRPLANE, CIFAR10-AUTOMOBILE, CIFAR10-BIRD, CIFAR10-CAT, CIFAR10-DEER, CIFAR10-DOG, CIFAR10-FROG, CIFAR10-HORSE, CIFAR10-SHIP, CIFAR10-TRUCK). Original training images are used for training. The test images are equally split into 2 groups. One for validation, and the other one for test.

In this paper, we study the imbalanced classification problem in deep neural networks. Our deep neural networks follow the architecture of 6 convolutional layers. Each convolutional layer is followed by a RELU [18] nonlinear activation layer. We list the detailed information of the architecture in Table 1. The number following each @ symbol represents the number of kernels in the convolutional layer.

These deep neural networks are optimised by the Stochastic Gradient Descent (SGD) algorithm. The base learning rate is set to be 0.01 and decreases by \(50\%\) every 10 epoches. The maximum epoch is set to be 40. The batch size in the SGD algorithm is set to be 256.

3.1 Balanced Training Data Can Lead to Imbalanced Performance

Balanced training data can also lead to imbalanced performance, as shown in Fig. 1b and c. In Fig. 1b, the negatives which are distant from the positives are sampled. On the contrary, samples which are near or across the boundary are sampled in Fig. 1c. The intuition illustrated in Fig. 1 is simple, but it leaves the distance between two data points unsolved, especially for the high-dimensional raw data, such as images. We propose a simple method to approach this problem. Firstly, we randomly under-sample the negatives to balance the training data. We use the balanced training data to train a basic network, which we call metric network. The metric network follows the same architecture with the final classifier (although it can be of a different one, as shown in [16]), and is used to calculate the distance between the negatives and the decision boundary in their embedding space. Finally, we sample the same number of negatives as the positives to construct the balanced training data. To make the performance of the trained classifier imbalanced, we only sample those which are most (or least) distant from the decision boundary (or the positives).

In the trained embedding space, we assume the optimal decision hyperplane is approximately the decision hyperplane learnt by the classifier (this is a reasonable assumption because the embedding space and the classifier are optimized jointly). For softmax classifier, we usually take 0.5 as the decision threshold for a two-class classification problem. The predicted probability of a softmax classifier is

where i is in \(\{0, 1\}\), W is the learnt weights of the softmax classifier, and \(\text {x}\) is a data point in the embedding space. The decision hyperplane is thus

For any negative data point \(\text {x}\), its signed distance to the decision hyperplane is

Thus the predicted probability is a good indicator of the distance between the data point and the decision hyperplane in the embedding space.

In our experiments, to give better understanding of the above proposed validation method, we categorize the negatives into 9 groups (group 0 to group 8) based on their predicted probabilities, as the negatives is as 9 times large in size as the positives. From group 0 to group 8, predicted probabilities of the target label (\(p_0\)) go from the largest to the smallest, thus the distance between the negatives in them and the decision hyperplane goes from the largest to smallest. Every group has the same number of negatives. Therefore, for every constructed imbalanced dataset, we can construct 9 balanced training datasets with their 9 groups of negatives and all the positives.

We use sensitivity and specificity to measure the imbalanced performance. Sensitivity, also called the true positive rate, is defined as

Specificity, also called true negative rate, is defined as

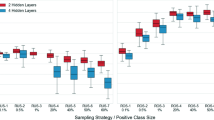

For a fair comparison, during the training of every deep classifier, we keep all experimental settings the same except for the different training data. In Fig. 2, we plot the sensitivity and the specificity of the classifiers trained from the training data which is comprised of the negatives in one group and all the positives.

As shown in Fig. 2, the performance of classifiers trained from the balanced datasets which are comprised of the negatives in group 0 and all the positives and is rather imbalanced, as the sensitivity is generally much larger than the specificity for group 0 in all datasets. As the group number increases within a certain range (i.e. the distance between the negative instances and the decision hyperplane increases), the difference between sensitivity and specificity becomes smaller and the imbalanced performance gradually disappears. However, when it goes further, the difference rebounds and the imbalance phenomenon arises again. From this experiment, we empirically validate that balanced training data can also lead to imbalanced performance on test data.

3.2 Imbalanced Training Data Can Lead to Balanced Performance

It is shown in Fig. 2 that the balanced training data can lead to imbalanced performance. Based on this finding, we can balance the imbalanced performance by altering the data distribution. For example, the classifiers trained from the negatives in group 0 tend to make more mistakes on negatives than on positives. If we increase the size of the negatives in training data, the imbalanced performance can be made balanced. In our experiment, we keep the positives unchanged and increase the size of the negatives in group 0 to balance the performance. Every time increasing the size of the negatives, we add to the training data the most distant negatives which are not contained in the training data. Figure 3 shows how the performance of the trained classifier changes, as the ratio between the size of the negatives and that of the positives in the CIFAR10-AUTOMOBILE dataset increases. As can be seen, when the ratio is about 6 (in this case, the training data is rather imbalanced), the performance becomes balanced. Although here we only plot the results on CIFAR10-AUTOMOBILE dataset, experiments on other datasets give the similar results.

4 Moderate Negative Mining

Although the method in Sect. 3.2 can balance classifiers’ performance on the test data, it requires carefully regulating the size of the negatives to balance the imbalanced performance. In addition, in real life we desire for not only more balanced but also better performance. In this section, we propose a new under-sampling method named Moderate Negative Mining (MNM) to aid deep neural networks in learning from imbalanced data. The proposed under-sampling approach can not only solve the imbalanced problem, but also produce better overall performance in our experiments when compared with other existing under-sampling approaches.

As shown in Sect. 3, both the most and the least distant negatives will lead to imbalanced performance. Two reasons account for that. Firstly, the least distant negatives tend to be unreliable or mislabeled ones. These negatives have been shown to degrade the performance of trained classifiers. Secondly, the most distant negatives are far away from the decision hyperplane, thus they are not informative enough to represent the space of the negatives. Moderate Negative Mining exploits a simple strategy to under-sample the over-presented negatives. We hypothesize the moderately distant negatives which are between the most and the least distant ones, are ideal negatives for training the classifier. This is a reasonable assumption because the moderately distant negatives are less likely to be polluted by mislabeled ones and those unvalued redundant ones.

As described in Sect. 3, we use the predicted probability to measure the distance between a negative instance and the decision hyperplane. To give better comparison, in the same way as Sect. 3.1, we also categorize the negatives into 9 groups (from the most distant negatives in group 0 to the least distant ones in group 8) and give the performance of classifiers trained from negatives in each group. We use deep neural networks of the same architecture as described in Sect. 3. All experimental settings are kept the same during the training process for fair comparisons, except for the different training data.

As the deep classifiers often output a score for both the positive and the negative class, we must specify a score threshold (usually 0.5 for binary classification) to make a trade-off between false positives and false negatives. However, when evaluating the performance, we require a measurement which is independent of the score threshold to measure the overall performance of the trained model. We sidestep the threshold selection problem by employing AUROC (Area Under Receiver Operating Characteristic curve) and AUPR (Area Under Precision-Recall curve). Figure 4 depicts how AUROC and AUPR values change as we choose different group of negatives as training data. As can be seen, for almost all datasets, we can find a group (or several groups) which achieves better performance than random under-sampling.

The proposed Moderate Negative Mining (MNM) method selects the best classifier on the validation data. To give a better comparison with other under-sampling methods, we list the AUROC and AUPR values of the proposed method on test data in Table 2, as well as those of Random Under-sampling (RUS), One-Sided Selection (OSS) [2] and Nearest Neighbor Cleaning Rule (NCL) [3]. These are all well known under-sampling methods to solve the imbalanced data problem. As can be seen, the proposed method achieves better performance on almost all datasets. The superiority shown in PR space is more obvious than that in ROC space. It is because the PR curves are more informative than ROC curves when the data is imbalanced [17].

5 Discussion

Although imbalanced performance is often believed to be caused by imbalanced training data, we have shown that imbalanced training data is a neither sufficient nor necessary condition in Sect. 3. Therefore, in addition to construction of balanced training data, we can also construct imbalanced training data to balance the classifier’s performance on the positives and the negatives. Here we call the former method balance method and the latter imbalance method for brevity.

As deep neural networks usually get better performance given larger training data, we may expect better performance in the imbalance method. However, our experiments show the balance method achieves better performance. It is because although the imbalanced method trains the model with more examples, most of the sampled negatives are easy ones and of little information to learn the decision rule. This is validated further by our proposed Moderate Negative Mining method, in which we abandon those easy negatives and those most hardest ones. Both these two types of negatives can hinder the learning of the classifier.

6 Conclusion

In this paper we empirically prove that imbalanced data is a neither sufficient nor necessary condition for imbalanced performance on test data, although the imbalanced training data is often believed to be the chief culprit. We find another important factor for imbalanced performance is the distance between the majority class instances and the decision boundary. Based on these observations, we propose a new under-sampling, named as Moderate Negative Mining, to solve the imbalanced classification problem in deep neural networks. Several experiments demonstrate the superiority of the proposed method.

For future work, we aim to select the majority class instances directly rather than by boring validation. Furthermore, we desire to formulate the negative under-sampling as a learning problem, which is challenging as the sampled examples are discrete.

References

Chawla, N.V., Bowyer, K.W., Hall, L.O., Kegelmeyer, W.P.: SMOTE: synthetic minority over-sampling technique. J. Artif. Intell. Res. 16, 321–357 (2002)

Kubat, M., Matwin, S.: Addressing the curse of imbalanced training sets: one-sided selection. In: ICML, vol. 97, pp. 179–186, July 1997

Laurikkala, J.: Improving identification of difficult small classes by balancing class distribution. In: Quaglini, S., Barahona, P., Andreassen, S. (eds.) AIME 2001. LNCS (LNAI), vol. 2101, pp. 63–66. Springer, Heidelberg (2001). https://doi.org/10.1007/3-540-48229-6_9

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. In: Advances in Neural Information Processing Systems, pp. 91–99 (2015)

Bahdanau, D., Cho, K., Bengio, Y.: Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473 (2014)

Pazzani, M., Merz, C., Murphy, P., Ali, K., Hume, T., Brunk, C.: Reducing misclassification costs. In: Proceedings of the Eleventh International Conference on Machine Learning, pp. 217–225 (1994)

Tang, Y., Zhang, Y.Q., Chawla, N.V., Krasser, S.: SVMs modeling for highly imbalanced classification. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 39(1), 281–288 (2009)

Estabrooks, A., Jo, T., Japkowicz, N.: A multiple resampling method for learning from imbalanced data sets. Comput. Intell. 20(1), 18–36 (2004)

He, H., Garcia, E.A.: Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 21(9), 1263–1284 (2009)

Tomek, I.: Two modifications of CNN. IEEE Trans. Syst. Man Cybern. 6, 769–772 (1976)

Jeatrakul, P., Wong, K.W., Fung, C.C.: Classification of imbalanced data by combining the complementary neural network and SMOTE algorithm. In: Wong, K.W., Mendis, B.S.U., Bouzerdoum, A. (eds.) ICONIP 2010 Part II. LNCS, vol. 6444, pp. 152–159. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-17534-3_19

Zhou, Z.H., Liu, X.Y.: Training cost-sensitive neural networks with methods addressing the class imbalance problem. IEEE Trans. Knowl. Data Eng. 18(1), 63–77 (2006)

Huang, C., Li, Y., Change Loy, C., Tang, X.: Learning deep representation for imbalanced classification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5375–5384 (2016)

Krizhevsky, A., Hinton, G.: Learning multiple layers of features from tiny images (2009)

Lewis, D.D., Catlett, J.: Heterogeneous uncertainty sampling for supervised learning. In: Proceedings of the Eleventh International Conference on Machine Learning, pp. 148–156 (1994)

Saito, T., Rehmsmeier, M.: The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE 10(3), e0118432 (2015)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp. 1097–1105 (2012)

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China (61572428, U1509206), National Key Research and Development Program (2016YFB1200203), Program of International Science and Technology Cooperation (2013DFG12840), Fundamental Research Funds for the Central Universities (2017FZA5014) and National High-tech Technology R&D Program of China (2014AA015205).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Song, J., Shen, Y., Jing, Y., Song, M. (2017). Towards Deeper Insights into Deep Learning from Imbalanced Data. In: Yang, J., et al. Computer Vision. CCCV 2017. Communications in Computer and Information Science, vol 771. Springer, Singapore. https://doi.org/10.1007/978-981-10-7299-4_56

Download citation

DOI: https://doi.org/10.1007/978-981-10-7299-4_56

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7298-7

Online ISBN: 978-981-10-7299-4

eBook Packages: Computer ScienceComputer Science (R0)