Abstract

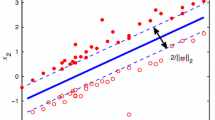

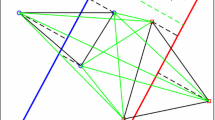

The proximal support vector machine via generalized eigenvalues (GEPSVM) is an effective classifier for binary classification problems. However, GEPSVM measures the point-to-plane distance with the L2-norm, whose square operation amplifies the influence of outliers. To address this, we propose a robust and effective GEPSVM classifier based on the L1-norm distance metric, referred to as L1-GEPSVM. Its optimization goal is to minimize the intra-class distance dispersion and maximize the inter-class distance dispersion simultaneously. The L1-norm distance is widely regarded as a simple and powerful way to reduce the impact of outliers, which improves the generalization ability and flexibility of the model. In addition, we design an effective iterative algorithm to solve the L1-norm optimization problem; it is easy to implement, and its convergence to a local optimum is theoretically guaranteed. As a result, the classification performance of L1-GEPSVM is more robust. Finally, extensive experimental results on both UCI datasets and artificial datasets demonstrate the feasibility and effectiveness of L1-GEPSVM.
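To illustrate why the square operation emphasizes outliers, the following minimal sketch compares how much of the total distance a single outlier accounts for under an L1-norm sum and under a squared L2-norm sum. The distance values and the helper `outlier_share` are hypothetical and chosen only for illustration; this is not the L1-GEPSVM algorithm itself.

```python
def outlier_share(distances, p):
    """Fraction of the summed p-th-power distances due to the largest distance."""
    powered = [abs(d) ** p for d in distances]
    return max(powered) / sum(powered)


# Hypothetical point-to-plane distances: nine inliers close to the plane
# and one outlier far from it (values are assumptions for illustration).
distances = [0.1] * 9 + [2.0]

l1_share = outlier_share(distances, 1)  # L1-norm: sum of |d|
l2_share = outlier_share(distances, 2)  # squared L2-norm: sum of d^2

print(f"outlier share under L1-norm:         {l1_share:.3f}")
print(f"outlier share under squared L2-norm: {l2_share:.3f}")
```

In this toy setting the outlier accounts for a clearly larger share of the squared L2 objective than of the L1 objective, which is the effect the L1-norm distance metric is meant to reduce.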

Acknowledgement

This work was supported in part by the National Foundation for Distinguished Young Scientists under Grant 31125008, the Scientific Research Foundation for Advanced Talents and Returned Overseas Scholars of Nanjing Forestry University under Grant 163070679, and the National Science Foundation of China under Grants 61101197, 61272220, and 61401214.

Copyright information

© 2016 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Yan, H., Ye, Q., Liu, Y., Zhang, T. (2016). The GEPSVM Classifier Based on L1-Norm Distance Metric. In: Tan, T., Li, X., Chen, X., Zhou, J., Yang, J., Cheng, H. (eds) Pattern Recognition. CCPR 2016. Communications in Computer and Information Science, vol 662. Springer, Singapore. https://doi.org/10.1007/978-981-10-3002-4_57

DOI: https://doi.org/10.1007/978-981-10-3002-4_57

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-3001-7

Online ISBN: 978-981-10-3002-4

eBook Packages: Computer Science, Computer Science (R0)