Abstract

A derivational approach to event semantics using pregroup grammars as syntactic framework is defined. This system relies on three crucial components: the explicit introduction of event variables which are linked to the basic types of a lexical item’s grammatical type; the unification of event variables following a concatenation of two expressions and the associated type contraction; and the correspondence between pregroup orderings and the change of the available event variables associated to a lexical item, which the meaning predicates take scope over.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

This project aims at studying implicit event variables over which meaning predicates take scope and their interaction throughout syntactic derivations. A derivational system will be put in place around the pre-existing pregroup grammar framework to handle these variables compositionally using a unification process, while at the same time providing for a very natural semantics for pregroup grammars.

More concretely, it will be shown how by extending the usual pregroup framework with a semantic layer and by assigning explicit event variables to the syntactic categories of an expression, we can get semantic extraction from pregroup derivations without too many complications. The resulting meaning will be neo-davidsonian and conjunctivist in form, that is, the meaning will be analysed in terms of events, and a single logical operator will be used during the combination of meanings: the conjunction \(\wedge \). This is in opposition to the more traditional approach of logical analysis called Functionalism that treats semantic composition as function application: a sentence such as (1a) will not have corresponding logical form (1b) but instead have the form (1c) where what are usually treated as arguments to the verb — John, Mary — are instead related to it by the thematic role they play in the event that the verb characterizes.

Using conjunctions as sole mean of meaning combination makes it harder at first to analyse certain constructions, but this is a small price to pay for the level of generality and overall derivational simplicity that will be obtained in the end by equating syntactic combination (pregroup contractions) with meaning conjunction.

2 Pregroup Grammars

The syntactic framework that will be used for this project is called Pregroup Grammars and is a recent descendant of the original syntactic calculus which arose from the study of resource sensitive logics [2, 8, 9]. They are called as such because their syntactic types form a special mathematical structure called a pregroup. The semantic system defined later could be worked out independently of the pregroup framework, though they seem to work well together for multiple reasons.

A pregroup [9] \(\mathbb {P}=(P,\rightarrow ,^{r},^{l},\cdot , 1)\) is a partially ordered monoid on a set of elements P, the set of basic types, in which to every element \(a\in P\) corresponds a right and a left adjoint — \(a^{r}\in P\) and \(a^{l}\in P\) respectively — subject to

The left sides of the relations are called contractions and the right sides, expansions. More precisely, the types forming a pregroup satisfy the following properties:

The set of types closed under the \(^r\) and \(^l\) adjoint operations is called the set of simple types.

A pregroup grammar \(\mathbb {G} =(\varSigma ,P, \rightarrow , ^r, ^l, 1, \mathbb {T})\) consists of a lexicon \(\varSigma \) and a typing relation \(\mathbb {T}\subseteq \varSigma \times \mathbb {F}\) between the alphabet and the pregroup freely generated by the simple types of P and the ordering relation \(\rightarrow \). This simply means that each element of the lexicon is associated with one or more strings of simple types. For instance, \((want, i\phi ^l\)) will be used in a sentence like (2a) and \((want, i\bar{j}^l)\) in (2b).

Here are common basic types:

and an example derivation:

The fact that the structure is partially ordered also allows us to set a specific ordering of grammatical types such as

\(\bar{n}\rightarrow \pi _3\rightarrow \pi \)\(s_1 \rightarrow s\)

where \(\alpha \rightarrow \beta \) means that \(\alpha \) could also be used as \(\beta \), e.g. a plural noun \(n_2\) such as cats could be used as an object o or plural subject \(\pi _2\), but not as a third person singular subject \(\pi _3\), i.e. \(n_2\rightarrow \pi _2\), but \(n_2\not \rightarrow \pi _3\).

Using the orderings we can now analyse more complex sentences

Note the use of the ordering relations \(\bar{n}\rightarrow o\), \(n_2\rightarrow \bar{n}\rightarrow \pi _3\) and \(N\rightarrow \pi _3\).

As pregroup types are merely concatenation of types, the order of contractions does not really matter. What really matters in this kind of grammar are the derivation links that tell us how the different lexical items combine with eachother in a given sentence:

3 Problems with Semantics in Pregroup Grammars

One of the major inconveniences of using pregroup grammars to do semantics is that complex types can often be contracted in multiple ways, as we’ve seen above. For instance, consider the possible types that could be assigned to the subject position quantifier every in different grammatical formalisms:

In the first two cases, the order in which the types or features are used is well-defined and unique:

-

Traditional Categorial Grammars: Type-elimination follows nestedness, i.e. the quantifier must be joined to a noun phrase, then to a verb phrase

-

Minimalist Grammars: Feature-checking is from left to right, i.e. the quantifier must be joined to a noun phrase, after which it could be used as a determiner and finally moved by being selected by a higher node with a selectional case feature

On the other hand, pregroup types aren’t ordered: any basic type present in a type could theoretically be contracted at any point if it appears on the edge of the type. For instance, in the following sentence, every has the possibility to contract with either of its neighbours, \(John\ knows\ that\) or boy.

The very liberal type structure of pregroups is essentially the reason why traditional approaches to semantics do not work in that framework. For instance, consider the type of a finite transitive verb

In Montagovian semantics [10, 12], such a verb would correspond to a relation between two entities, and would get assigned meaning:

The order in which the subject and object get passed to the verb are very important, as a situation where I kick someone is very different from a situation where I get kicked by someone. But pregroup grammars cannot, in this sense, place constraints on which type gets contracted first, at least without introducing unwanted complexity to the system.

Note that there are already established approaches to doing semantics with pregroup grammars, see [4, 6, 14, 15]. The aim with this project is simply to show that other approaches are also possible, that might also be simpler when doing event semantics from a derivational point-of-view than using the \(\lambda \)-calculus as semantic framework.

4 Quick Overview of Event Semantics and Conjunctivism

Conjunctivism [13] is the idea that as smaller expressions concatenate, their meanings simply conjoin. This approach is somewhat controversial, as for the last hundred years formal semanticists have instead related semantic combination with function application. To understand why and how this idea came to be, it is useful to have an understanding of two of the major developments of this branch of semantics: Davidson’s characterization of action sentences in terms of events [5] and Parsons’ subatomic analysis of events [11].

The traditional way of looking at a verb of action such as kiss is as a logical function that takes in two arguments – the subject and the object – and returns a truth value.

Here \(kiss_{ext}\) is the set of pairs of people kissing.

For instance, if the extension of the verb kiss were \(kiss_{ext} = \{(J, M)\}\) then we could say that the only kissing happening is between John and Mary, John being the kisser and Mary the kissee.

The values of the following sentences could then be found by translating them into the appropriate logical form and checking if the membership conditions hold:

but \( [\![John\ kisses\ Paola]\!] =\bot \), as \((J,P)\not \in kiss_{ext}\).

Another possible analysis would be in terms of events.

i.e. \([\![ John\ kisses\ Mary ]\!] = \exists e.kiss(e,J, M)\).

Letting the verb take an implicit event argument makes it then much easier to deal with questions such as verb arity and sentential adjuncts, as constructions like temporal, locative and manner adjuncts can now be redefined as independent predicates over events. For instance,

This kind of representation makes it also easier to analyse certain cases of entailment relations: it is as simple as using \(\wedge \)-elimination on the denotation of a sentence with adjunct, to get its meaning without adjunct.

It is also possible to go even further [11] and treat subjects and objects not as arguments of the verb, but in a way similar to how adjuncts are handled using conjunctions. One can do so by introducing predicates standing for thematic relations between events and entities, that share the event with the verb:

Having verbs take in event arguments and no other grammatical argument also mirrors the way nouns and adjectives interact; instead of sharing an entity of some sort they are instead sharing an event or a state.

Cases of embedded sentences can also be treated similarly by letting the event of the embedded sentence be treated as object and letting meaning predicates associated to the lexical items be independent of one another:

The next logical step in our will to generalize even more how the meaning of each lexical item can be seen as a piece of information that simply adds constraints to the overall meaning of an expression, is to try to only have the conjunction as mean of combination, and this is what Paul Pietroski brought with Conjunctivism [13], inspired in part by the work of Schein [16, 17] on plurality. Pietroski’s proposal is that the semantics of any expression in natural language consists in a finite conjunction of the meaning of its parts.

Connectives other than the conjunction are often used though in formal semantics, and it is still not clear how sentences that seem to require them could be modelled without. The crux of Pietroski’s argument relies on using a different logic for sentential interpretation, namely, plural logic [1]. Plural quantification is an interpretation of monadic second-order logic, similar to a two-sorted logic, in which the monadic predicate variable is not interpreted as a set of things, but instead as taking multiple values.

The choice of this logic comes from the need to model plurality in cleaner ways than with first-order logic. It has also a greater descriptive power and can model meanings not accessible with first-order logic [1].

For instance, some predicates such as dance or boy are said to be singular and require that the input is a singular value for it to be evaluated. The reasoning behind this is that when someone asks of a group of people if they are boys, they are not asking whether that group as a whole is a boy, but rather, whether each person of that group is a boy. Therefore, singular predicates evaluate plural values by evaluating the singular values it is composed of.

This allows us to represent an expression such as \(two\ blue\ cats\) as

which is then interpreted as:

-

There is a (perhaps) plural entity

-

This plural entity has two values

-

The values of the plural entity are blue

-

The values of the plural entity are cats

-

The entity has to be plural, i.e. has more than one value

Using this logic is a key component of Conjunctivism, as it also allows one to model cases that seem to require extra logical tools like implication \(\rightarrow \), disjunction \(\vee \) or universal quantification \(\forall \). For instance, the quantified sentence \(every\ cat\ sleep\) can be represented as:

The reason why this works lies in the way each predicate contributes a specific condition to the global meaning of the expression:

-

\(Every\_Ag(E,X)\): are the values of X precisely the agents of the events E?

-

cat(X): is every value of X a cat?

-

sleep(E): is every event of E an event of sleeping?

The goal here is not to defend Conjunctivism as a valuable approach to semantics, but only to show that, although it might not look like much at first, it is powerful enough to handle interesting non-trivial cases.

5 Derivational Event Semantics Using Pregroup Grammars

In this section, it will be shown how one could use the implicit event variables instantiated by a lexical item’s corresponding meaning predicate to derive the right neo-davidsonian representation of an expression. The idea is that those variables can be turned into explicit objects that can be unified over as the expressions are combined. To structure the derivations, pregroup grammars will be used as the syntactic framework on top of which will be added the truth-conditional semantic layer and another layer where interaction between event variables take place.

The general process of unifying event variables is not required to take place in the pregroup framework, or even categorial framework, though pregroup grammars do offer some advantages over other syntactic frameworks for this type of analysis, especially since their types are non-functional and some syntactic relations are already defined in the system through type ordering, e.g. \(N\rightarrow \pi _3\).

It would also be possible to adapt this framework to a montagovian one where denotations are \(\lambda \)-terms and where the syntactic types are functional (see [3, 7, 20] for inspiration), but the end goal is really to show that the full power of the \(\lambda \)-calculus is not needed to get a good compositional semantics. We aim to get something that really highlights how events relate to each other and how simple meaning composition can really be. Simplicity is the key here.

The focal point of the analysis will also be different, in a sense, from what is seen in more traditional approaches to semantics, as instead of focusing on ways to combine expressions’ truth conditions and passing around predicates to predicates, the event variables will themselves be the ones moving around the syntactic trees, as they are the main shareable pieces of information in this framework. On the other hand, the semantics predicates’ main role will be to constrain the possibility of events to occur by restraining the possible values the event and entity variables can take.

5.1 Motivation

Let’s start by looking at the event analysis of a simple sentence.

In this case, a single event e is shared by both lexical items. We want to compositionally explain how to get to that denotation so that one could define a derivational system that takes words as input and outputs conjunctivist values.

In the above case, both \(\alpha \) and \(\beta \) stand for the meaning of their respective expressions and take as argument event variables \(e_1\) and \(e_2\). Leaving aside for a moment the question of what the exact values \(\alpha \) and \(\beta \) stand for, an important question to answer is: Where does e come from?

The main property of variables is their mutability, they can take any values assigned to them. One does not have to know from the start what value they will be taking at the end. In the following functional example, x does not have any intrinsic value at the beginning, its raison d’être is to take the value of whatever term gets passed to the expression.

Now, looking back at the event translation, neither the subject John nor dances know what they will be taking scope over when the derivation ends. For instance, in a sentence such as

they would not have the same event as argument: the subject John is related to a first event of knowing, while dances predicates over a totally different event where Sara is the agent instead of John. The goal is to figure out what they could have started with so that they end up with the right argument assignment. Assuming that they start for instance with values Agent(e, John) and dance(e), over the same event e, does not solve the problem: how did they know that they both take the exact same event as argument? Getting the right variables in the right place will be achieved by using unification on the variables.

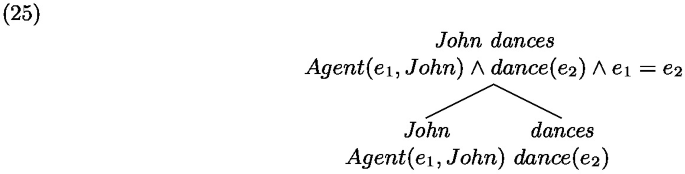

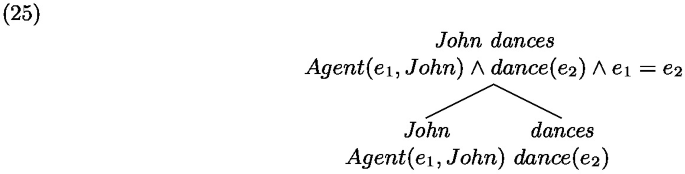

Here is how the derivation of the logical form \(\exists e. Agent(e, John)\wedge dance(e)\) will take place:

-

1.

Distinct variables are instantiated by john and dances’s semantic predicates, to be taken as arguments: \(Agent(e_1, John)\) and \(dance(e_2)\)

-

2.

Lexical items are concatenated, from which it follows that the variables associated with the syntactic categories that allowed the concatenation to take place are unified. In this case, \(e_1\) and \(e_2\) are unified, i.e. \(e_1=e_2\).

-

3.

A final process then takes place that binds the instantiated variables

To improve readability, values of the form \(A[e_1,e_2]\wedge e_1=e_2\) will be automatically replaced by \(A[e_1,e_2/e_1]\).

Note that actually keeping the two variables as distinct and binding each one — hence binding twice instead of once — does not actually make any difference in the meaning:

Now let’s have look at the sentence the cat dances, whose derivation tree looks something like:

It would be nice to have the derivation process be similar to the one described above, but that poses a problem, as doing so exactly the same way would give us this kind of logical form:

The variable taken by the determiner phrase as argument should be a completely different one from the one taken by the verb. The relation between those two variables seems to be exactly what Agent(e, x) is defining: the variable from the determiner phrase is the agent of the variable taken by the verb phrase.

This problem could be approached through two different angles. The first way (see 29), following Pietroski [13] is to assume that the type of the determiner and noun compound contains a unique implicit variable x it can refer to, which, through a transformation from determiner phrase to subject – or by being assigned case – gets a new semantic constraint Agent(e, x) added to its meaning and a new event variable: the verb phrase can now only access e, which is fresh, and not x anymore. In other words, a new grammatical role is now synonymous with a change of available implicit variable and a closure of the old variable. This solution has the advantage of being more theoretically motivated, but also has its downsides when used in a syntactic framework like PG, which does not restrict compounding of expressions as much as other frameworks.

The second way (30) this could be dealt with is by assigning different variables to the syntactic categories that form the type of the, so that when it first concatenates with cat, the syntactic category that allows for the operation to happen will be linked to x, and the one corresponding to the second concatenation will contain a different variable, e.

The variable over which two branches unify is represented in the node.

Looking back at the previous tree, one see that e dominates it and might wonder what it implies. For simplicity, it can be assumed that this node, which is the final one in the tree, is of a basic category, e.g. s or C as opposed to a concatenation of categories, and so that this category corresponds to a single variable, or has a single variable available for further concatenation, e in this case. The reason is that if one were to use this expression within another expression or if one wanted to concatenate extra lexical items to it, what would be shared between the two would be the event variable e, and in no case the entity variable x — assuming the internal structure of that subtree is not modified.

On the left, the sentence is included in the tree as an embedded clause, which semantically would be represented as the variable e now being the theme of \(e'\), the event over which I and think take place. On the right, the expression is concatenated with an adjunct, which shows that the event e is still possibly accessible from the expression \(the\ cat\ dances\).

In a way, no matter how that constituent — the cat dances — is used, the main information that will be shared and that could be quantified over, seems to be the event. This is similar to the way syntactic categories behave, in the sense that no matter how long an expression gets, if its syntactic category is A, it will always be possible to use it anywhere where an expression of category A could be used, no matter what other constituents it might contain. It does not mean that the embedded subtree is completely opaque, but simply that its access is more restricted.

5.2 Semantic Pregroup Types

It will be now shown how to transpose that approach into the pregroup framework.

To stay in the categorial state-of-mind, the system will be as general and require as few rules as possible when it comes to generating the logical form: systematic combination rules will be defined, but constraints as to when and to what lexical items these rules can be applied is mostly left to the lexical items themselves, by carefully specifying their syntactic types. No complexity is added to the syntactic layer from the addition of this new semantic layer, which means that the structure of derivations is no different than that of regular pregroup derivations. This means that the usual parsing algorithms for pregroup grammars can also be used, as long as clauses are added to handle semantics.

Note that the final representation of the meaning one will get at the end of a derivation can be qualified as raw, as some aspects of the meaning of a sentence cannot be reached simply by predicate combination, especially since no pre-derivational thematic assignments are assumed to have taken place. Concretely, this means that one might end up with the representation (33a) for the sentence (33b) but then that extra semantic information could be reached through meaning postulates such as (33c) as thematic roles depend on multiple factors such as voice, grammatical functions and the nature of the verb itself.

The most direct way of building a system to account for the kind of semantics just seen above is to pair lexical items with a syntactic type, a set of available variables and a truth-conditional meaning predicate, which scopes over different values and variables. Those variables will be assumed to be instantiated when the lexical item is first used, and their value will change through the derivation depending on the way types contract.

The full value of a lexical item is then a tuple of the form:

where \(a_i\) is a basic pregroup type, \(x_i\) an available implicit variable, and A a logical formula that stands for the expression’s meaning.

For instance, the relative pronoun whom, will have the form (35a) which will be rewritten as (35b) for clarity, and could be read as: the variable associated with the sentential and subject type is possibly different from the one associated to the noun types. This comes from the fact that whom is usually used as theme predicate over 2 distinct variables, one corresponding to an event and another corresponding to an entity.

This is a good example of why having only one available variable per lexical item does not work well with pregroup types: both the event and entity variable have to appear within its meaning predicate at some point of the derivation, but starting with either and trying to introduce the other at a later point brings many complications. Not to say that it is impossible, just much more painful.

In this case, whom will have semantic value Theme(e, x), sharing the variable e on the right with Caesar stabbed and x, on the left with cat.

Note that the kind or type of the variables is irrelevant in this system. There is no real difference between x, e, or any other variable, and only the constraints put on a variable can say something about it. Using specific characters to represent variables such as x and e only makes reading descriptions easier.

It is tempting to use, for instance, entity types and event types and try to copy what is done in Montagovian semantics, but in the end the types that would end up being required would be very different from the Montagovian ones. For instance, there is not going to be any boolean type passed around.

The reason is simply that the boundaries between entities and events are very blurry: is the crash in \(The\ crash\ was\ brutal\) an event or entity? Similarly, differentiating between activities, accomplishments and other eventualities [19] does not seem necessary: it will be assumed that the kind of eventualities is simply the result of the interplay between features or predicates, e.g.

5.3 Semantic Combinations

This section outlines a method of combining lexical items’ meanings given the new tuple types defined above. As previously mentioned, the Conjunctivist approach is followed here and the meaning of an expression takes the form of a conjunction of the meaning of the parts, scoping over given event variables.

To get the right variables at the right place, the variables will have to get unified over the contraction links. What this means is that whenever two syntactic types contract, their contained variables are forced to take the same value. This also affects the distribution of the other variables contained in those types. Here is a simple example to show how it works:

While contracting the types, the constraint that \(x=y\) is added to the global meaning. The variables could also be replaced automatically.

What is nice about this is that a derivation can now be represented as semantic predicates linked to each other by the variables they share, which also corresponds to the contraction links. Put another way, the contraction links can be labelled using the contained event and entity variables.

5.4 Syntactic/Semantic Hierarchy

Let’s have a look back at example (27) that was discussed at the very beginning of this chapter.

Underlyingly, the variables are layered in this kind of way:

The task at hand now is to find a way of going from the variable that is shared between the and cat to a fresh one that would then get unified with the one coming from dances. There is actually a very simple way of relating this to another pregroup operation and that is by extending the pregroup orderings, or syntactic hierarchy, to take into account semantic constructions.

To remind the reader, since pregroups are ordered structures, some grammatical relations can be explicitly defined as orders. For instance,

A relation such as \(\bar{n}\rightarrow \pi _3\) could then be rewritten to include information about the variables present in the types and about the extra semantic relation they now play under this new syntactic type:

which is to be interpreted as: using a determiner phrase as a third person subject means having its available entity variable used as the agent of the event specified by the verb it will combine with.

In this case, the rule used on the proper name John was:

Note that it is not always necessary to go through a transformation of variable, as potentially distinct variables could be attached to the basic types of an expression, just like for the above case of the relative pronoun whom, or in a case like (45) where all pieces naturally combine and the variables over which expressions are unified varies as the concatenation takes place

In this case the variable at the top of a branching represents the variable that was unified.

5.5 Existential-Closure

Existential-closure is not as straight-forward to implement in this system and will only be glossed over in this article. The main problem with trying to adapt the kind of semantic system that researchers like Pietroski uses is that syntactic types in pregroup grammars can combine in any order they want as long as they are on the edge of the type. This is problematic, as more mainstream syntactic systems usually does not work this way, and a lot of ordering is constrained by the encoding of the grammatical categories, for instance by using internal/external argument relations.

A simple example is how thematic relation assignment is usually dealt with. A generative way of representing how the phrase \(my\ cat\) can play the role of an agent could be as positing a covert agent-node that takes in the clause and changes its domain of predication from an entity to an event

This way, if something is under the scope of agent, it will only have access to x and not e, and on the other hand, x is bound inside the agent-node, hence if one is working outside of it, they will not have access to x, but only to e. By letting the determiner phrase be taken as an argument, the syntactic parsing is also restricted, as the concatenation of my and cat is something that can only happen lower in the tree, than the assignment of the agent role.

In pregroup grammars, the derivation could take multiple forms.

On the left, the ordering is used first on \(\bar{n}\rightarrow \pi \), which corresponds, in a sense, to combining a covert agent node with the determiner, before the latter combines with the noun. On the right, the combination of the determiner and noun takes place before the expression takes on the role of an agent, which is equivalent to the unique generative representation discussed above.

This much more flexible way of combining expressions is the reason it is harder to structure existential closure and is the reason multiple variables per type are needed, as some of those operations take place in parallel, and sometimes multiple variables have to be accessible at the same time. More precisely, in this case, closing the variable as \(\bar{n}\) goes to \(\pi \) before contracting the types (left path) blocks the entity variable coming from the noun to be unified with the determiner, which is dramatic. Restricting the order in which pregroup types could combine is also out of the question here.

Two possible alternatives to \(\exists \)-closure would be:

-

Closing a variable after a contraction of one of the basic types it is present in, only if it does not appear in any other basic type of the complex type it is part of.

-

Closing every variable instantiated at the end of a derivation, similarly to the way \(\exists \)-introduction is used in logic. This works since the only logical symbols we are working with are the conjunction and the existential quantifier.

Here is a complete table of the correspondence between the syntactic and the semantic structures:

Syntax | Semantics |

|---|---|

Concatenation of basic types | Conjunction of logical predicates |

Contraction of types | Unification on available event variables |

Syntactic type ordering | Conjunction of new semantic predicate |

Variable disappears from the types | \(\exists \)-closure |

6 Conclusion

The compositional semantic system defined in this article is an elegant and natural way of defining a semantics for pregroup grammars which relies on light machinery and intuitive operations, which are clearly its key characteristics.

Many questions are left to be answered, especially when it comes to the internal structure of the events and how this kind of system might relate to the typed \(\lambda \)-calculus, which seems to be much more powerful and to have a greater control on how predicates can be moved around and reorganized. The descriptive adequacy of Conjunctivism is also an interesting question to investigate in the future, which was only briefly addressed in this paper.

References

Boolos, G.: To be is to be the value of a variable (or to be some values of some variables). J. Philos. 81, 430–449 (1984)

Buszkowski, W.: Lambek calculus and substructural logics. Linguist. Anal. 36(1), 15–48 (2003)

Champollion, L.: Quantification and negation in event semantics. In: Barbara Partee, M.G., Skilters, J. (eds.) Baltic International Yearbook of Cognition, Logic and Communication, vol. 6, pp. 1–23. New Prairie Press, Manhattan (2010)

Clark, S., Coecke, B., Sadrzadeh, M.: Mathematical foundations for a compositional distributional model of meaning. Linguist. Anal. 36 (2010)

Davidson, D.: The logical form of action sentences. Synthese (1967)

Gaudreault, G.: Bidirectional functional semantics for pregroup grammars. In: Kanazawa, M., Moss, L.S., de Paiva, V. (eds.) Third Workshop on Natural Language and Computer Science, NLCS 2015. EPiC Series in Computer Science, vol. 32, pp. 12–28 (2015)

de Groote, P., Winter, Y.: A type-logical account of quantification in event semantics. In: Murata, T., Mineshima, K., Bekki, D. (eds.) JSAI-isAI 2014. LNCS (LNAI), vol. 9067, pp. 53–65. Springer, Heidelberg (2015). doi:10.1007/978-3-662-48119-6_5

Lambek, J.: Type grammar revisited. In: Lecomte, A., Lamarche, F., Perrier, G. (eds.) LACL 1997. LNCS (LNAI), vol. 1582, pp. 1–27. Springer, Heidelberg (1999). doi:10.1007/3-540-48975-4_1

Lambek, J.: From Word to Sentence: A Computational Algebraic Approach to Grammar. Polimetra, Koper (2008)

Montague, R.: Formal Philosophy: Papers of Richard Montague. Yale University Press, New Haven (1974). Ed. by R.H. Thomason

Parsons, T.: Events in the Semantics of English. The MIT Press, Cambridge (1990)

Partee, B. (ed.): Montague Grammar. Academic Press, New York (1976)

Pietroski, P.: Events and Semantic Architecture. Oxford University Press, Oxford (2005)

Preller, A.: Toward discourse representation via pregroup grammars. J. Logic Lang. Inform. 16(2), 173–194 (2007)

Preller, A., Sadrzadeh, M.: Semantic vector models and functional models for pregroup grammars. J. Logic Lang. Inform. 20(4), 419–443 (2011)

Schein, B.: Plurals and Events. MIT Press, Cambridge (1993)

Schein, B.: Events and the semantic content of thematic relations. In: Peter, G.P.G. (ed.) Logical Form and Language, pp. 263–344. Oxford University Press, Oxford (2002)

Stabler, E.: Derivational minimalism. In: Retoré, C. (ed.) LACL 1996. LNCS, vol. 1328, pp. 68–95. Springer, Heidelberg (1997). doi:10.1007/BFb0052152

Vendler, Z.: Verbs and times. Philos. Rev. 66, 143–160 (1957)

Winter, Y., Zwarts, J.: Event semantics and abstract categorial grammar. In: Kanazawa, M., Kornai, A., Kracht, M., Seki, H. (eds.) MOL 2011. LNCS (LNAI), vol. 6878, pp. 174–191. Springer, Heidelberg (2011). doi:10.1007/978-3-642-23211-4_11

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution-NonCommercial 2.5 International License (http://creativecommons.org/licenses/by-nc/2.5/), which permits any noncommercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2016 The Author(s)

About this paper

Cite this paper

Gaudreault, G. (2016). Compositional Event Semantics in Pregroup Grammars. In: Amblard, M., de Groote, P., Pogodalla, S., Retoré, C. (eds) Logical Aspects of Computational Linguistics. Celebrating 20 Years of LACL (1996–2016). LACL 2016. Lecture Notes in Computer Science(), vol 10054. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-53826-5_7

Download citation

DOI: https://doi.org/10.1007/978-3-662-53826-5_7

Published:

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-53825-8

Online ISBN: 978-3-662-53826-5

eBook Packages: Computer ScienceComputer Science (R0)