Abstract

Cloud services keep gaining popularity despite the security concerns. While non-sensitive data is easily trusted to cloud, security critical data and applications are not. The main concern with the cloud is the shared resources like the CPU, memory and even the network adapter that provide subtle side-channels to malicious parties. We argue that these side-channels indeed leak fine grained, sensitive information and enable key recovery attacks on the cloud. Even further, as a quick scan in one of the Amazon EC2 regions shows, high percentage – 55 % – of users run outdated, leakage prone libraries leaving them vulnerable to mass surveillance.

The most commonly exploited leakage in the shared resource systems stem from the cache and the memory. High resolution and the stability of these channels allow the attacker to extract fine grained information. In this work, we employ the Prime and Probe attack to retrieve an RSA secret key from a co-located instance. To speed up the attack, we reverse engineer the cache slice selection algorithm for the Intel Xeon E5-2670 v2 that is used in our cloud instances. Finally we employ noise reduction to deduce the RSA private key from the monitored traces. By processing the noisy data we obtain the complete 2048-bit RSA key used during the decryption.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Motivation

Cloud computing services are more popular than ever with their ease of access, low cost and real-time scalability. With increasing adoption of cloud, concerns over cloud specific attacks have been rising and so has the number of research studies exploring potential security risks in the cloud domain. A main enabler for cloud security is the seminal work of Ristenpart et al. [40]. The work demonstrated the possibility of co-location as well as the security risks that come with it. The co-location is the result of resource sharing between tenant Virtual Machines (VMs). Under certain conditions, the same mechanism can also be exploited to extract sensitive information from a co-located victim VM, resulting in security and privacy breaches. Methods to extract information from VMs have been intensely studied in the last few years however remain infeasible within public cloud environments, e.g. [29, 37, 41, 48]. The potential impact of attacks on crypto processes can be even more severe, since cryptography is at the core of any security solution. Consequently, extracting cryptographic keys across VM boundaries has also received considerable attention lately. Initial studies explored the Prime and Probe technique on L1 cache [19, 49]. Though requiring the attacker and the victim to run on the same physical CPU core simultaneously, the small number of cache sets and the simple addressing scheme made the L1 cache a popular target. Follow up works have step by step removed restrictions and increased the viability of the attacks. The shared Last Level Cache (LLC) now enables true cross-core attacks [8, 30, 45] where the attacker and the victim share the CPU, but not necessarily the CPU core. Most recent LLC Prime and Probe attacks no longer rely on de-duplication [14, 26] or core sharing, making them more widely applicable.

With the increasing sophistication of attacks, participants of the cloud industry ranging from Cloud Service Providers (CSPs), to hypervisor vendors, up all the way to providers of crypto libraries have fixed many of the newly exploitable security holes through patches [1, 3, 4]—many in response to published attacks. However, many of the outdated cryptographic libraries are still in use, opening the door for exploits. A scan over the entire range of IPs in the South America East region yields that 55 % of TLS hosts installed on Amazon EC2 servers have not been updated since 2015 and are vulnerable to an array of more recently discovered attacks. Consequently, a potential attacker such as a nation state, hacker group or a government organization can exploit these vulnerabilities for bulk recovery of private keys. Besides the usual standard attacks that target individuals, this enables mass surveillance on a population thereby stripping the network from any level of privacy. Note that the attack is enabled by our trust in the cloud. The cloud infrastructure already stores the bulk of our sensitive data. Specifically, when an attacker instantiates multiple instances in a targeted availability zone of a cloud, she co-locates with many vulnerable servers. In particular, an attacker trying to recover RSA keys can monitor the LLC in each of these instances until the pattern expected by the exploited hardware level leakage is observed. Then the attacker can easily scan the cloud network to build a public key database and deduce who the recovered private key belongs to. In a similar approach, Heninger et al. [21] scan the network for public keys with shared or similar RSA modulus factors due to poor randomization. Similarly Bernstein et al. [10] compiled a public key database and scanned for shared factors in RSA modulus commonly caused by broken random number generators.

In this work, we explore the viability of full RSA key recovery in the Amazon EC2 cloud. More precisely, we utilize the LLC as a covert channel both to co-locate and perform a cross-core side-channel attack against a recent cryptographic implementation. Our results demonstrate that even with complex and resilient infrastructures, and with properly configured random number generators, cache attacks are a big threat in commercial clouds.

Our Contribution

This work presents a full key recovery attack on a modern implementation of RSA in a commercial cloud and explores all steps necessary to successfully recover both the key and the identity of the victim. This attack can be applied under two different scenarios:

-

1.

Targeted Co-location: In this scenario, we launch instances until we co-locate with the victim as described in [24, 42]. Upon co-location the secret is recovered by a cache enabled cross-VM attack.

-

2.

Bulk Key Recovery: We randomly create instances and using cross-VM cache attacks recover imperfect private keys. These keys are subsequently checked and against public keys in public key database. The second step allows us to eliminate noise in the private keys and determine the identity of the owner of the recovered key.

Unlike in earlier bulk key recovery attacks [10, 21] we do not rely on faulty random number generators but instead exploit hardware level leakages.

Our specific technical contributions are as follows:

-

We first demonstrate that the LLC contention based co-location detection tools are plausible in public clouds

-

Second, we reverse-engineer the undocumented non-linear slice selection algorithm implemented in Intel Xeon E5-2670 v2 [2] used by our Amazon EC2 instances, and utilize it to automate and accelerate the attack

-

Third, we describe how to apply the Prime and Probe attack to the LLC and obtain RSA leakage information from co-located VMs

-

Last, we present a detailed analysis of the necessary post-processing steps to cope with the noise observed in a real public cloud setup, along with a detailed analysis on the CPU time (at most 30 core-hours) to recover both the noise-free key and the owner’s identity (IP).

2 Related Work

This work combines techniques needed for co-location in a public cloud with state-of-the art techniques in cache based cross-VM side channel attacks.

Co-location Detection: In 2009 Ristenpart et al. [40] demonstrated that a potential attacker has the ability to co-locate and detect co-location in public IaaS clouds. In 2011, Zhang et al. [47] demonstrated that a tenant can detect co-location in the same core by monitoring the L2 cache. Shortly after, Bates et al. [7] implemented a co-location test based on network traffic analysis. In 2015, Zhang et al. [48] demonstrated that de-duplication enables co-location detection from co-located VMs in PaaS clouds. In follow-up to Ristenpart et al.’s work [40], Zhang et al. [44] and Varadarajan et al. [42] explored co-location detection in commercial public cloud in 2015. Both studies use the memory bus contention channel explored by Wu et al. in 2012 [43] to detect co-location. Finally in 2016, İnci et al. [24] explored co-location detection on Amazon EC2, Microsoft Azure and Google Compute Engine using three detection methods namely memory bus locking, LLC covert channel and LLC software profiling.

Recovering Cache Slice Selection Methods: A basic technique based on hamming distances for recovering and exploiting linear cache slice selection was introduced in [23]. Irazoqui et al. [27] and Maurice et al. [36] used a more systematic approach to recover linear slice selection algorithms in a range of processors, the latter pointing out the coincidence of the functions across processors. Recently, Yarom et al. [46] recovered a 6 core slice selection algorithm with a similar technique as the one presented in this work.

Side-Channel Attacks: RSA have been widely studied and explored with regards to diverse covert channels such as time [12, 33], power [32], EM emanations [15, 16], and even acoustic channels [17]. Micro-architectural side-channel attacks also succeeded on recovering secret keys used in symmetric cryptography. After the first theoretical [22, 39] and practical [9, 38] attacks utilizing micro-architectural covert channels, Aciicmez et al. [5, 6] demonstrated the possibility of recovering RSA secret keys using the instruction cache and the branch prediction unit as covert channels. Later, Zhang et al. [49] recovered El-Gamal secret keys across co-located VMs exploiting leakage in the upper level caches.

While all the previously mentioned side-channel attacks used a private core resource (i.e., attacker needs to be running in the same CPU core as the victim), in 2014 Yarom et al. [45] proved to be able to recover RSA secret keys across co-located VMs by using the Flush and Reload attack in the presence of memory de-duplication. Recently Liu et al. [14] implemented an attack against El-Gamal using the Prime and Probe attack in the LLC, whereas Bhattacharya et al. [11] utilized the branch prediction performance counters to recover RSA keys.

In addition to the attacks on public key cryptography schemes cache attacks also have been applied to AES [26, 30], ECDSA [8], TLS messages [31], the number of items in a shopping cart [48] or even the key strokes typed in a keyboard [18]. Even further, they recently have been applied to PaaS clouds [48], across processors [28] and in smartphones [35].

3 Prime and Probe in the LLC

In computer systems, the physical memory is protected and not visible to the user, who only sees the virtual addresses that the data resides at. Therefore a memory address translation stage is required to map virtual addresses to physical. However, there are some bits of the virtual address that remain untranslated, i.e., the least significant \(p_{low}\) bits with \(2^{p_{low}}\) size memory pages. These are called the page offset, while the remaining part of the address is called the page frame number and their combination make the physical address. The location of a memory block in the cache is determined by its physical address. Usually the physical address is divided in three different sections to access n-way caches: the byte field, the set field and the tag field. The length of the byte and set fields are determined by the cache line size and the number of sets in the cache, respectively. The more sets a cache has, more bits are needed from the page frame number to select the set that a memory block occupies in the cache.

The Prime and Probe attack has been widely studied in upper level caches [5, 49], but was first introduced for the LLC in [14, 26] with the use of hugepages. Unlike regular memory pages that reveal only 12 bits of the physical address, hugepages reveal 21 bits, allowing the LLC monitoring. Also, profiling the LLC in contrast to the L1 or L2 cache has various advantages. Firstly, unlike the upper level caches, the LLC is shared across cores, providing a cross-core covert channel. Moreover, the time distinguishability of accesses in upper level caches is much lower than those between the LLC and memory. On the other hand, due to the size of LLCs, we cannot simultaneously profile the whole cache, but rather a small portion of it at a time. In addition to that, modern processors divide their LLC into slices with a non-public hash algorithm, making it difficult to predict where the data will be located. Taking all these into account, the Prime and Probe attack is divided in two main stages:

Prime Stage: The attacker fills a portion of the LLC with his own data and waits for a period of time for the victim to access the cache.

Probe Stage: The attacker probes (reloads) the primed data. If the victim accessed the monitored set of the cache, one (or more) of the attacker’s lines will not reside in the cache anymore, and will have to be retrieved from the memory.

As stated before, profiling a portion of the cache becomes more difficult when the LLC is divided into slices. However, as observed by [14] we can create an eviction set without knowing the algorithm implemented. This involves a step prior to the attack where the attacker finds the memory blocks colliding in a specific set/slice. This can be done by creating a large pool of memory blocks, and access them until we observe that one of them is fetched from the memory. The procedure will be further explained in Sect. 4. A group of memory blocks that fill one set/slice in the LLC will form an eviction set for that set/slice.

4 Co-locating on Amazon EC2

In order to perform our experiments across co-located VMs we first need to make sure that they are running in the same server. We present the LLC as an exploitable covert channel with the purpose of detecting co-location between two instances. For the experiments, we launched 4 accounts (named A, B, C and D) on the South American Amazon EC2 region and launched 20 m3.medium instances in each of these accounts, 80 instances in total.

On these instances, we performed our LLC co-location detection test and obtained co-located instance pairs. In total, out of 80 instances launched from different accounts, we were able to obtain 7 co-located pairs and one triplet. Account A had 5 co-located instances out of 20 while B and C had 4 and 7 respectively. As for the account D, we had no co-location with instances from other accounts. Overall, assuming that the account A is the target, next 60 instances launched in accounts B, C, D have 8.3 % probability of co-location with the target. Note that all co-locations were between machines from different accounts. The experiments did not aim at obtaining co-location with a single instance, for which the probability of obtaining co-location would be lower.

4.1 The LLC Co-location Method

The LLC is shared across all cores of most modern Intel CPUs, including the Intel Xeon E5-2670 v2 used (among others) in Amazon EC2. Accesses to LLC are thus transparent to all VMs co-located on the same machine, making it the perfect domain for covert communication and co-location detection.

Our LLC test is designed to detect cache lines that are needed to fill a specific set in the cache. In order to control the location that our data will occupy in the cache, the test allocates and works with hugepages.Footnote 1 In normal operation with moderate noise, the number of lines to fill one set is equal to LLC associativity, which is 20 in Intel Xeon E5-2670 v2 used in our Amazon EC2 instances. However, with more than one user trying to fill the same set at the same time, one will observe that fewer than 20 lines are needed to fill one set. By running this test concurrently on a co-located VM pair, both controlled by the same user, it is possible to verify co-location with high certainty. The test performs the following steps:

-

Prime one memory block \(b_0\) in a set in the LLC

-

Access additional memory blocks \(b_{1},b_2, \ldots ,b_n\) that occupy the same set, but can reside in a different slice.

-

Reload the memory block \(b_0\) to check whether it has been evicted from the LLC. A high reload time indicates that the memory block \(b_0\) resides in the RAM. Therefore we know that the required m memory blocks that fill a slice are part of the accessed additional memory blocks \(b_{1},b_2, \ldots ,b_n\).

-

Subtract one of the accessed additional memory blocks \(b_{i}\) and repeat the above protocol. If \(b_0\) is still loaded from the memory, \(b_{i}\) does not reside in the same slice. If \(b_0\) is now located in the cache, it can be concluded that \(b_{i}\) resides in the same cache slice as \(b_0\) and therefore fill the set.

-

Count the number of memory blocks needed to fill a set/slice pair. If the number is significantly different than the associativity, it can be concluded that we have cache contention across co-located VMs.

The LLC is not the only method that we have tried in order to verify co-location (see extended version of this paper for more information [25]). However, the experiments show that the LLC test is the only decisive and reliable test that can detect whether two of our instances are running in the same CPU in Amazon EC2. We performed the LLC test in two steps as follows:

-

1.

Single Instance Elimination: The first step of the LLC test is the elimination of single instances i.e. the ones that are not co-located with any other in the instance pool. To do so, we schedule the LLC test to run at all instances at the same time. Instances not detecting co-location is retired. For the remaining ones, the pairs need to be further processed as explained in the next step. Note that without this preliminary step, one would have to perform \(n(n-1)/2\) pair detection tests to find co-located pairs, i.e. 3160 tests for 80 instances. This step yielded 22 co-located instances out of 80.

-

2.

Pair Detection: Next we identify pairs for the possibly co-located instances. The test is performed as a binary search tree where each instance is tested against all the others for co-location.

4.2 Challenges and Tricks of Co-location Detection

During our experiments on Amazon EC2, we have observed various problems and interesting events related to the underlying hardware and software. Here we discuss what to expect when experimenting on Amazon EC2.

Instance Clock Decay: In our experiments using Amazon EC2, we have noticed that instance clocks degrade slowly over time. More interestingly, after detecting co-location using the LLC test, we discovered that co-located instances have the same clock degradation with 50 ns resolution. We believe that this information can be used for co-location detection.

Hardware Complexity: Modern Amazon EC2 instances have much more advanced and complex hardware components like 10 core, 20 thread CPUs and SSDs. Thus, our cache profiling techniques have to be adapted to handle servers with multiple slices that feature non-linear slice selection algorithms.

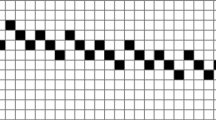

Co-located VM Noise: Compute cloud services including Amazon EC2 maintain a variety of services and servers. Most user-based services, however, quiet down when users quiet down, i.e. after midnight. Especially between 2 a.m. and 4 a.m. Internet traffic as well as computer usage is significantly lower than the rest of the day. We confirmed this assumption by measuring LLC noise in our instances and collected data from 6 instances over the course of 4 week days. Results are shown in Fig. 1. LLC noise and thus server load are at its peak around 8 p.m. and lowest at 4 a.m. We also measured the noise observed in the first 200 sets of the LLC for one day in Fig. 2. The y-axis shows the probability of observing a cache access by a co-located user other than victim during a Prime and Probe interval of the spy process (i.e. the attacker cannot detect the cache access of the victim process). The measurements were taken every 15 min. A constant noise floor at approx. 4.5 % is present in all sets. Sets 0 and 3 feature the highest noise, but high noise (11 %)is observed at the starting points of other pages as well. In fact, certain set numbers (0, 3, 26, 39, 58) mod 64 seem to be predictably more noisy and not well suited for the attack.

Dual Socket Machines: We did not find evidence of dual socket machines among the medium instances that we used in both co-location and attack steps. Indeed once co-located, our LLC co-location test always succeeded over time, even after a year. If the instances were to reside in dual socket machines and the VM processes moved between CPUs, the co-location test would have failed. However, even in that case, repeated experiments would still reveal co-location just by repeating the test after a time period enough to allow a socket migration.

5 Obtaining the Non-linear Slice Selection Algorithm

The LLC attack that will later be performed is based on the ability of generating colliding memory blocks, i.e., blocks that collide for a specific set and slice. In modern processors, each set in the LLC is divided into slices (usually one slice per core) to respond to multiple requests at a time. The usage of a sliced LLC as a covert channel becomes simpler when we deal with a power of two number of slices. In these cases, due to the linearity, the set bits does not affect the slice bits in the eviction set created for one of the slices. Thus, we could create an eviction set for a specific set-slice pair composed by b1, b2, ..bn memory blocks choosing a random set s. If we later want to target a different set, we could still use b1, b2, ...bn by changing only the set bits and they will fill the same slice. This fact was used in [14, 26] to perform LLC side channel attacks. This peculiarity is not observed in non-linear slices, i.e., the same b1, b2, .., bn will only slice-collide for a small number of sets. The slice colliding blocks can either be empirically observed for each set, or guessed if the non-linear slice selection algorithm is known. Our particular EC2 instance type utilizes a Intel Xeon E5-2670 v2, which features a 25 MB LLC distributed over 10 LLC slices (i.e., non power of two). We decide to reverse-engineer the non-linear slice selection algorithm to speed up our eviction set creation algorithm. Note that the approach that we follow can be utilized to reverse engineer any non-linear slice selection algorithm.

We describe the slice selection algorithm as

where each H(p) is a concatenation of 4 different functions corresponding to the 4 necessary bits to represent 10 slices. Note that H(p) will output results from 0000 to 1001 if we label the slices from 0–9. Thus, a non-linear function is needed that excludes outputs 10–15. Further note that p is the physical address and will be represented as a bit string: \(p=p_0p_1\ldots p_{35}\). In order to recover the non-linear hash function implemented by the Intel Xeon E5-2670 v2, we use a fully controlled machine featuring the same Intel Xeon E5-2670 v2 found in Amazon’s EC2 servers. We first generate ten equation systems (one per slice) based on slice colliding addresses by applying the same methodology explained to achieve co-location and generating up to 100,000 additional memory blocks.

Up to this point, one can solve the non-linear function after a re-linearization step given sufficiently many equations. Since we are not be able to recover enough addresses (due to RAM limitations) we take a different approach. Figure 3 shows the distribution of the 100,000 addresses over the 10 slices. Note that 8 slices are mapped to by \(81.25\,\%\) of the addresses, while 2 slices get only about \(18.75\,\%\), i.e., a 3 / 16 proportion. We will refer to these two slices as the non-linear slices.

We proceed to first solve the first 8 slices and the last 2 slices separately using linear functions. For each we try to find solutions to the equation systems

Here \(P_{i}\) is the equation system obtained by arranging the slice colliding addresses into a matrix form, \(\hat{H_i}\) is the matrix containing the slice selection functions and \(\hat{0}\) and \(\hat{1}\) are the all zero and all one solutions, respectively. This outputs two sets of linear solutions both for the first 8 linear slices and the last 2 slices.

Given that we can model the slice selection functions separately using linear functions, and given that the distribution is non-uniform, we model the hash function is implemented in two levels. In the first level a non-linear function chooses between either of the 3 linear functions describing the 8 linear slices or the linear functions describing the 2 non-linear slices. Therefore, we speculate that the 4 bits selecting the slice looks like:

where \(h_0\),\(h_1\) and \(h_2\) are the hash functions selecting bits 0,1 and 2 respectively, \(h_3\) is the function selecting the 3rd bit and nl is a nonlinear function of an unknown degree. We recall that the proportion of the occurrence of the last two slices is 3 / 16. To obtain this proportion we need a degree 4 nonlinear function where two inputs are negated, i.e.:

where nl is 0 for the 8 linear slices and 1 for the 2 non-linear slices. Observe that nl will be 1 with probability 3 / 16 while it will be zero with probability 13 / 16, matching the distributions seen in our experiments. Consequently, to find \(v_0\) and \(v_1\) we only have to solve Eq. (3) for slices 8 and 9 together to obtain a 1 output. To find \(v_2\) and \(v_3\), we first separate those addresses where \(v_0\) and \(v_1\) output 1 for the linear slices \(0-7\). For those cases, we solve Eq. (3) for slices \(0-7\). The result is summarized in Table 1. We show both the non-linear function vectors \(v_0,v_1,v_2,v_3\) and the linear functions \(h_0,h_1,h_2\). These results describe the behavior of the slice selection algorithm implemented in the Intel Xeon E5-2670 v2. With this result, we can now easily predict the slice selection on the target processor in the EC2 cloud.

6 Cross-VM RSA Key Recovery

To prove the viability of the Prime and Probe attack in Amazon EC2 across co-located VMs, we present an expanded version of the attack implemented in [14] by showing its application to RSA. It is important to remark that the attack is not processor specific, and can be implemented in any processor with inclusive last level caches. In order to perform the attack:

-

We make use of the fact that the offset of the address of each table position entry does not change when a new decryption process is executed. Therefore, we only need to monitor a subsection of all possible sets, yielding a lower number of traces.

-

Instead of the monitoring both the multiplication and the table entry set (as in [14] for El-Gamal), we only monitor a table entry set in one slice. This avoids the step where the attacker has to locate the multiplication set and avoids an additional source of noise.

The attack targets a sliding window implementation of RSA-2048 where each position of the pre-computed table will be recovered. We will use Libgcrypt 1.6.2 as our target library, which not only uses a sliding window implementation but also uses CRT and message blinding techniques [34]. The message blinding process is performed as a side channel countermeasure for chosen-ciphertext attacks, in response to studies such as [16, 17].

We use the Prime and Probe side channel technique to recover the positions of the table T that holds the values \(c^3,c^5,c^7,\ldots ,c^{2^{W}-1}\) where W is the window size. For CRT-RSA with 2048 bit keys, \(W=5\) for both exponentiations \(d_p,d_q\). Observe that, if all the positions are recovered correctly, reconstructing the key is a straightforward step.

Recall that we do not control the victim’s user address space. This means that we do not know the location of each of the table entries, which indeed changes from execution to execution. Therefore we will monitor a set hoping that it will be accessed by the algorithm. However, our analysis shows a special behavior: each time a new decryption process is started, even if the location changes, the offset field does not change from decryption to decryption. Thus, we can directly relate a monitored set with a specific entry in the multiplication table.

The knowledge of the processor in which the attack is going to be carried out gives an estimation of the probability that the set/slice we monitor collides with the set/slice the victim is using. For each table entry, we fix a specific set/slice where not much noise is observed. In the Intel Xeon E5-2670 v2 processors, the LLC is divided in 2048 sets and 10 slices. Therefore, knowing the lowest 12 bits of the table locations, we will need to monitor one set/slice that solves \(s \mod 64 = o\), where s is the set number and o is the offset for a table location. This increases the probability of probing the correct set from \(1/(2048 \cdot 10)=1/20480\) to \(1/((2048\cdot 10)/64)=1/320\), reducing the number of traces to recover the key by a factor of 64. Thus our spy process will monitor accesses to one of the 320 set/slices related to a table entry, hoping that the RSA encryption accesses it when we run repeated decryptions. Thanks to the knowledge of the non linear slice selection algorithm, we can easily change our monitored set/slice if we see a high amount of noise in one particular set/slice. Since we also have to monitor a different set per table entry, it also helps us to change our eviction set accordingly. The threshold is different for each of the sets, since the time to access different slices usually varies. Thus, the threshold for each of the sets has to be calculated before the monitoring phase. In order to improve the applicability of the attack the LLC can be monitored to detect whether there are RSA decryptions or not in the co-located VMs as proposed in [24]. After it is proven that there are RSA decryptions the attack can be performed.

In order to obtain high quality timing leakage, we synchronize the spy process and the RSA decryption by initiating a communication between the victim and attacker, e.g. by sending a TLS request. Note that we are looking for a particular pattern observed for the RSA table entry multiplications, and therefore processes scheduled before the RSA decryption will not be counted as valid traces. In short, the attacker will communicate with the victim before the decryption. After this initial communication, the victim will start the decryption while the attacker starts monitoring the cache usage. In this way, we monitor 4,000 RSA decryptions with the same key and same ciphertext for each of the 16 different sets related to the 16 table entries.

We investigate a hypothetical case where a system with dual CPU sockets is used. In such a system, depending on the hypervisor CPU management, two scenarios can play out; processes moving between sockets and processes assigned to specific CPUs. In the former scenario, we can observe the necessary number of decryption samples simply by waiting over a longer period of time. In this scenario, the attacker would collect traces and only use the information obtained during the times the attacker and the victim share sockets and discard the rest as missed traces. In the latter scenario, once the attacker achieves co-location, as we have in Amazon EC2, the attacker will always run on the same CPU as the target hence the attack will succeed in a shorter span of time.

7 Leakage Analysis Method

Once the online phase of the attack has been performed, we proceed to analyze the leakage observed. There are three main steps to process the obtained data. The first step is to identify the traces that contain information about the key. Then we need to synchronize and correct the misalignment observed in the chosen traces. The last step is to eliminate the noise and combine different graphs to recover the usage of the multiplication entries. Among the 4,000 observations for each monitored set, only a small portion contains information about the multiplication operations with the corresponding table entry. These are recognized because their exponentiation trace pattern differs from that of unrelated sets. In order to identify where each exponentiation occurs, we inspected 100 traces and created the timeline shown in Fig. 4(b). It can be observed that the first exponentiation starts after 37 % of the overall decryption time. Note that among all the traces recovered, only those that have more than 20 and less than 100 peaks are considered. The remaining ones are discarded as noise. Figure 4 shows measurements where no correct pattern was detected (Fig. 4(a)), and where a correct pattern was measured (Fig. 4(b)).

In general, after the elimination step, there are 8−12 correct traces left per set. We observe that data obtained from each of these sets corresponds to 2 consecutive table positions. This is a direct result of CPU cache prefetching. When a cache line that holds a table position is loaded into the cache, the neighboring table position is also loaded due to cache locality principle.

For each graph to be processed, we first need to align the creation of the look-up table with the traces. Identifying the table creation step is trivial since each table position is used twice, taking two or more time slots. Figure 5(a) shows the table access position indexes aligned with the table creation. In the figure, the top graph shows the true table accesses while the rest of the graphs show the measured data. It can be observed that the measured traces suffer from misalignment due to noise from various sources e.g. RSA or co-located neighbors.

To fix the misalignment, we take most common peaks as reference and apply a correlation step. To increase the efficiency, the graphs are divided into blocks and processed separately as seen in Fig. 5(a). At the same time, Gaussian filtering is applied to peaks. In our filter, the variance of the distribution is 1 and the mean is aligned to the peak position. Then for each block, the cross-correlation is calculated with respect to the most common hit graph i.e. the intersection set of all graphs. After that, all graphs are shifted to the position where they have the highest correlation and aligned with each other. After the cross-correlation calculation and the alignment, the common patterns are observable as in Fig. 5(b). Observe that the alignment step successfully aligns measured graphs with the true access graph at the top, leaving only the combining and the noise removal steps. We combine the graphs by simple averaging and obtain a single combined graph.

In order to get rid of the noise in the combined graph, we applied a threshold filter as can be seen in Fig. 6. We used 35 % of the maximum peak value observed in graphs as the threshold value. Note that a simple threshold was sufficient to remove noise terms since they are not common between graphs.

Now we convert scaled time slots of the filtered graph to real time slot indexes. We do so by dividing them with the spy process resolution ratio, obtaining the Fig. 7. In the figure, the top and the bottom graphs represent the true access indexes and the measured graph, respectively. Also, note that even if additional noise peaks are observed in the obtained graph, it is very unlikely that two graphs monitoring consecutive table positions have noise peaks at the same time slot. Therefore, we can filter out the noise stemming from the prefetching by combining two graphs that belong to consecutive table positions. Thus, the resulting indexes are the corresponding timing slots for look-up table positions.

The very last step of the leakage analysis is finding the intersections of two graphs that monitor consecutive sets. By doing so, we obtain accesses to a single table position as seen in Fig. 8 with high accuracy. At the same time, we have total of three positions in two graphs. Therefore, we also get the positions of the neighbors. A summary of the result of the leakage analysis is presented in Table 2. We observe that more than 92 % of the recovered peaks are in the correct position. However, note that by combining two different sets, the wrong peaks will disappear with high probability, since the chance of having wrong peaks in the same time slot in two different sets is very low.

8 Recovering RSA Keys with Noise

We divide the section in two different scenarios, i.e., the scenario where the identity and public key of the target is known (targeted co-location) and the scenario where we have no information about the public key (bulk key recovery).

8.1 Targeted Co-location: The Public Key Is Known

In this case we assume that the attacker implemented a targeted co-location against a known server, and that she has enough information about the public key parameters of the target. The leakage analysis described in the previous section recovers information on the CRT version of the secret exponent d, namely \(d_p= d \mod (p-1)\) and \(d_q = d\mod (q-1)\). A noise-free version of either one can be used to trivially recover the factorization of \(N=p q\), since \({\text {gcd}}(m-m^{e d_p},N) = p\) for virtually any m [13].

In cases where the noise on \(d_p\) and \(d_q\) is too high for a direct recovery with the above-mentioned method, their relation to the known public key can be exploited if the used public exponent e is small [20].

Almost all RSA implementations currently use \(e = 2^{16}+1\) due to the heavy performance boost over a random and full size e. For CRT exponents it holds that \(ed_p = 1 \mod (p-1)\) and hence \(ed_p = k_p (p-1) +1\) for some \(1\le k_p<e\) and similarly for \(d_q\), yielding \(k_p p = e d_p + k_p -1\) and \(k_q p = e d_q + k_q -1\).

Multiplying both equations gives us a key equation which we will exploit in two ways

If we consider Eq. (5) modulo e, the unknowns \(d_p\) and \(d_q\) disappear and we obtain \( k_pk_qN = (k_p-1)(k_q-1) \pmod {e}\). Therefore given \(k_p\) we can recover \(k_q\) and vice versa by solving this linear equation. Since \(1\le k_p<e\) represents an exhaustible small space we can simply try all values for \(k_p\) and compute corresponding \(k_q\) as shown above.

Next, assume we are given the first t bits of \(d_p\) and \(d_q\), e.g. \(a = d_p \mod 2^t\) and \(b = d_q \mod 2^t\). For each \(k_p\) we check whether \(\delta (a,b,t)=0\) where

This means we have a simple technique to check the correctness of the least-significant t bits of \(d_p\), \(d_q\) for a choice of \(k_p\). We can

-

Check parts of \(d_p\) and \(d_q\) by verifying if the test \(\delta (d_p(t),d_q(t),t)=0\) holds for \(t\in [1,\lceil \log (p)\rceil ]\).

-

Fix alignment and minor errors by shifting and varying \(d_p(t)\) and \(d_q(t)\), and then sieving working cases by checking if \(\delta (d_p(t),d_q(t),t)=0\),

-

Recover parts of \(d_q\) given \(d_p\) (and vice versa) by solving the error equation \(\delta (d_p(t),d_q(t),t)=0\) in case the data is missing or too noisy to correct.

Note that the algorithm may need to try all \(2^{16}\) values of \(k_p\) in a loop. Further, in the last case where we recover a missing data part using the checking equation we need to speculatively continue the iteration for a few more steps. If we observe too many mistakes we may early terminate the execution thread without reaching the end of \(d_p\) and \(d_q\).

To see how this approach can be adapted into our setting, we need to consider the error distribution observed in \(d_p\) and \(d_q\) as recovered by cache timing. Furthermore, since the sliding window algorithm was used in the RSA exponentiation operation, we are dealing with variable size (1–5 bit) windows with contents wp, wq, and window positions ip, iq for \(d_p\) and \(d_q\), respectively.

The windows are separated by 0 strings. We observed:

-

The window wp contents for \(d_p\) had no errors and were in the correct order. There were slight misalignments in the window positions ip with extra or missing zeros in between.

-

In contrast, \(d_q\) had not only alignment problems but also few windows with incorrect content, extra windows, and missing windows (overwritten by zeros). The missing windows were detectable since we do not expect unusually long zero strings in a random \(d_q\).

-

Since the iterations proceed from the most significant windows to the least we observed more errors towards the least significant words, especially in \(d_q\).

Algorithm 1 shows how one can progressively error correct \(d_p\) and \(d_q\) by processing groups of consecutive \(\ell \) windows of \(d_p\). The algorithm creates new execution threads when an assumption is made, and kills a thread after assumptions when too many checks fail to produce any matching on different windows. However, then the kill threshold has to be increased and the depth of the computation threads and more importantly the number of variations that need to be tested increases significantly. In this case, the algorithm finds the correct private key in the order of microseconds for a noise-free \(d_p\) and needs 4 s for our recovered \(d_p\).

8.2 Bulk Key Recovery: The Public Key Is Unknown

In this scenario, the attacker spins multiple instances and monitors the LLC, looking in all of them for RSA leakages. If viable leakages are observed, the attacker might not know the corresponding public key. However, she can build up a database of public keys by mapping the entire IP range of the targeted Amazon EC2 region and retrieve all the public keys of hosts that have the TLS port open. The attacker then runs the above described algorithm for each of the recovered private keys and the entire public key database. Having the list of ’neighboring’ IPs with an open TLS port also allows the attacker to initiate TLS handshakes to make the servers use their private keys with high frequency.

In the South America Amazon EC2 region, we have found 36000+ IP addresses with the TLS port open (shown in more detail in [25]) using nmap. With a public key database of that size, our algorithm takes between less than a second (for noise-free \(d_p\)s) and 30 CPU hours (noisy \(d_p\)s) to check each private key with the public key database. This approach recovers the public/private key pair, and consequently, the identity of the key owner.

9 Countermeasures

Libgcrypt 1.6.3 Update: Libgcrypt recently patched this vulnerability by making the sliding window multiplication table accesses indistinguishable from each other. Thus, an update to the latest version of the library avoids the leakage exploited in this work albeit only for ciphers using sliding window exponentiation.

Single-Tenant Instances: Although more expensive, in most cloud services, users have the option of having the whole physical machine to themselves, preventing co-location with potentially malicious users.

Live Migration: In a highly noisy environment like the commercial cloud, an attacker would need many traces to conduct a side-channel attack. In the live migration scenario, the attacker would have to perform the attack in the time period when the attacker and the victim share the physical machine.

10 Conclusion

In conclusion, we show that even with advanced isolation techniques, resource sharing still poses security risk to public cloud customers that do not follow the best security practices. The cross-VM leakage is present in public clouds and can be a practical attack vector for data theft. Therefore, users have a responsibility to use latest improved software for their critical cryptographic operations. Even further, we believe that smarter cache management policies are needed both at the hardware and software levels to prevent side-channel leakages.

Notes

- 1.

The co-location test has to be implemented carefully, since the heavy usage of hugepages may yield into performance degradation. In fact, while trying to achieve a quadruple co-location Amazon EC2 stopped our VMs due to performance issues. For a more detailed explanation, see [25].

References

Fix Flush and Reload in RSA. https://lists.gnupg.org/pipermail/gnupg-announce/2013q3/000329.html

Intel Xeon 2670–v2. http://ark.intel.com/es/products/75275/Intel-Xeon-Processor-E5-2670-v2-25M-Cache-2_50-GHz

OpenSSL fix flush and reload ECDSA nonces. https://git.openssl.org/gitweb/?p=openssl.git;a=commitdiff;h=2198be3483259de374f91e57d247d0fc667aef29

Transparent Page Sharing: Additional management capabilities and new default settings. http://blogs.vmware.com/security/vmware-security-response-center/page/2

Acıiçmez, O.: Yet another microarchitectural attack: exploiting I-cache. In: Proceedings of the 2007 ACM Workshop on Computer Security Architecture

Acıiçmez, O., Koç, Ç.K., Seifert, J.-P.: Predicting secret keys via branch prediction. In: Abe, M. (ed.) CT-RSA 2007. LNCS, vol. 4377, pp. 225–242. Springer, Heidelberg (2006)

Bates, A., Mood, B., Pletcher, J., Pruse, H., Valafar, M., Butler, K.: Detecting co-residency with active traffic analysis techniques. In: Proceedings of the 2012 ACM Workshop on Cloud Computing Security Workshop

Benger, N., van de Pol, J., Smart, N.P., Yarom, Y.: “Ooh Aah.. Just a Little Bit” : a small amount of side channel can go a long way. In: Batina, L., Robshaw, M. (eds.) CHES 2014. LNCS, vol. 8731, pp. 75–92. Springer, Heidelberg (2014)

Bernstein, D.J.: Cache-timing attacks on AES (2004). http://cr.yp.to/papers.html#cachetiming

Bernstein, D.J., Chang, Y.-A., Cheng, C.-M., Chou, L.-P., Heninger, N., Lange, T., van Someren, N.: Factoring RSA keys from certified smart cards: coppersmith in the wild. In: Sako, K., Sarkar, P. (eds.) ASIACRYPT 2013, Part II. LNCS, vol. 8270, pp. 341–360. Springer, Heidelberg (2013)

Bhattacharya, S., Mukhopadhyay, D.: Who watches the watchmen?: utilizing performance monitors for compromising keys of RSA on Intel platforms. In: Güneysu, T., Handschuh, H. (eds.) CHES 2015. LNCS, vol. 9293, pp. 248–266. Springer, Heidelberg (2015)

Brumley, D., Boneh, D.: Remote timing attacks are practical. In: Proceedings of the 12th USENIX Security Symposium, pp. 1–14 (2003)

Campagna, M.J., Sethi, A.: Key recovery method for CRT implementation of RSA. Cryptology ePrint Archive, Report 2004/147. http://eprint.iacr.org/

Liu, F., Yarom, Y., Ge, Q., Heiser, G., Lee, R.B.: Last level cache side channel attacks are practical, September 2015

Gandolfi, K., Mourtel, C., Olivier, F.: Electromagnetic analysis: concrete results. In: Koç, Ç.K., Naccache, D., Paar, C. (eds.) CHES 2001. LNCS, vol. 2162, pp. 251–261. Springer, Heidelberg (2001)

Genkin, D., Pachmanov, L., Pipman, I., Tromer, E.: Stealing keys from PCs using a radio: cheap electromagnetic attacks on windowed exponentiation. In: Güneysu, T., Handschuh, H. (eds.) CHES 2015. LNCS, vol. 9293, pp. 207–228. Springer, Heidelberg (2015)

Genkin, D., Shamir, A., Tromer, E.: RSA key extraction via low-bandwidth acoustic cryptanalysis. In: Garay, J.A., Gennaro, R. (eds.) CRYPTO 2014, Part I. LNCS, vol. 8616, pp. 444–461. Springer, Heidelberg (2014)

Gruss, D., Spreitzer, R., Mangard, S.: Cache.: template attacks: automating attacks on inclusive last-level caches. In: 24th USENIX Security Symposium, pp. 897–912. USENIX Association (2015)

Gullasch, D., Bangerter, E., Krenn, S.: Cache games - bringing access-based cache attacks on AES to practice. In: SP 2011, pp. 490–505

Hamburg, M.: Bit level error correction algorithm for RSA keys. Personal Communication. Cryptography Research Inc. (2013)

Heninger, N., Durumeric, Z., Wustrow, E., Halderman, J.A.: Mining your Ps and Qs: detection of widespread weak keys in network devices. In: Presented as Part of the 21st USENIX Security Symposium (USENIX Security 2012), Bellevue, WA. USENIX, pp. 205–220 (2012)

Hu, W.-M.: Lattice scheduling and covert channels. In: Proceedings of the 1992 IEEE Symposium on Security and Privacy

Hund, R., Willems, C.,Holz, T.: Practical timing side channel attacks against kernel space ASLR. In: Proceedings of the 2013 IEEE Symposium on Security and Privacy, pp. 191–205

İncİ, M.S., Gülmezoglu, B., Eisenbarth, T., Sunar, B.: Co-location detection on the cloud. In: COSADE (2016)

İncİ, M.S., Gülmezoglu, B., Irazoqui, G., Eisenbarth, T., Sunar, B.: Cache attacks enable bulk key recovery on the cloud (extended version) (2016). http://v.wpi.edu/wp-content/uploads/Papers/Publications/bulk_extended.pdf

Irazoqui, G., Eisenbarth, T., Sunar, B.: S$A: a shared cache attack that works across cores and defies VM sandboxing and its application to AES. In: 36th IEEE Symposium on Security and Privacy, S&P (2015)

Irazoqui, G., Eisenbarth, T., Sunar, B.: Systematic reverse engineering of cache slice selection in Intel processors. In: Euromicro DSD (2015)

Irazoqui, G., Eisenbarth, T., Sunar, B.: Cross processor cache attacks. In: Proceedings of the 11th ACM Symposium on Information, Computer and Communications Security, ASIA CCS 2016. ACM (2016)

Irazoqui, G., İncİ, M.S., Eisenbarth, T., Sunar, B.: Know thy neighbor: crypto library detection in cloud. Proc. Priv. Enhancing Technol. 1(1), 25–40 (2015)

Irazoqui, G., İncİ, M.S., Eisenbarth, T., Sunar, B.: Wait a minute! a fast, cross-VM attack on AES. In: RAID, pp. 299–319 (2014)

Irazoqui, G., İncİ, M.S., Eisenbarth, T., Sunar, B.: Lucky 13 strikes back. In: Proceedings of the 10th ACM Symposium on Information, Computer and Communications Security, ASIA CCS 2015, pp. 85–96 (2015)

Kocher, P.C., Jaffe, J., Jun, B.: Differential power analysis. In: Wiener, M. (ed.) CRYPTO 1999. LNCS, vol. 1666, pp. 388–397. Springer, Heidelberg (1999)

Kocher, P.C.: Timing attacks on implementations of Diffie-Hellman, RSA, DSS, and other systems. In: Koblitz, N. (ed.) CRYPTO 1996. LNCS, vol. 1109, pp. 104–113. Springer, Heidelberg (1996)

Libgcrypt: The Libgcrypt reference manual. http://www.gnupg.org/documentation/manuals/gcrypt/

Lipp, M., Gruss, D., Spreitzer, R., Mangard, S. ARMageddon : last-level cache attacks on mobile devices. CoRR abs/1511.04897 (2015)

Maurice, C., Scouarnec, N.L., Neumann, C., Heen, O., Francillon, A.: Reverse engineering intel last-level cache complex addressing using performance counters. In: RAID 2015 (2015)

Oren, Y., Kemerlis, V.P., Sethumadhavan, S., Keromytis, A.D.: The spy in the sandbox : practical cache attacks in javascript and their implications. In: Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security, New York, NY, USA, CCS 2015, pp. 1406–1418. ACM (2015)

Osvik, D.A., Shamir, A., Tromer, E.: Cache attacks countermeasures.: the case of AES. In: Proceedings of the 2006 The Cryptographers’ Track at the RSA Conference on Topics in Cryptology, CT-RSA 2006

Page, D.: Theoretical use of cache memory as a cryptanalytic side-channel (2002)

Ristenpart, T., Tromer, E., Shacham, H., Savage, S.: Hey you, get off of my cloud: exploring information leakage in third-party compute clouds. In: Proceedings of the 16th ACM Conference on Computer and Communications Security, CCS 2009, pp. 199–212

Suzaki, K., Iijima, K., Toshiki, Y., Artho, C.: Implementation of a memory disclosure attack on memory deduplication of virtual machines. IEICE Trans. Fundam. Electron., Commun. Comput. Sci. 96, 215–224 (2013)

Varadarajan, V., Zhang, Y., Ristenpart, T., Swift, M.: A placement vulnerability study in multi-tenant public clouds. In: 24th USENIX Security Symposium (USENIX Security 2015), Washington, D.C., August 2015, pp. 913–928. USENIX Association

Wu, Z., Xu, Z., Wang, H.: Whispers in the hyper-space: high-speed covert channel attacks in the cloud. In: USENIX Security Symposium, pp. 159–173 (2012)

Xu, Z., Wang, H., Wu, Z.: A measurement study on co-residence threat inside the cloud. In: 24th USENIX Security Symposium (USENIX Security 2015), Washington, D.C., August 2015, pp. 929–944. USENIX Association

Yarom, Y., Falkner, K.: FLUSH+RELOAD: a high resolution, low noise, L3 cache side-channel attack. In: 23rd USENIX Security Symposium (USENIX Security 2014), pp. 719–732

Yarom, Y., Ge, Q., Liu, F., Lee, R.B., Heiser, G.: Mapping the Intel last-level cache. Cryptology ePrint Archive, Report 2015/905 (2015). http://eprint.iacr.org/

Zhang, Y., Juels, A., Oprea, A., Reiter, M.K.: HomeAlone : co-residency detection in the cloud via side-channel analysis. In: Proceedings of the 2011 IEEE Symposium on Security and Privacy

Zhang, Y., Juels, A., Reiter, M. K., Ristenpart, T.: Cross-tenant side-channel attacks in paas clouds. In: Proceedings of the 2014 ACM SIGSAC Conference on Computer and Communications Security

Zhang, Y., Juels, A., Reiter, M.K., Ristenpart, T.: Cross-VM side channels and their use to extract private keys. In: Proceedings of the 2012 ACM Conference on Computer and Communications Security

Acknowledgments

This work is supported by the National Science Foundation, under grants CNS-1318919 and CNS-1314770.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 International Association for Cryptologic Research

About this paper

Cite this paper

İnci, M.S., Gulmezoglu, B., Irazoqui, G., Eisenbarth, T., Sunar, B. (2016). Cache Attacks Enable Bulk Key Recovery on the Cloud. In: Gierlichs, B., Poschmann, A. (eds) Cryptographic Hardware and Embedded Systems – CHES 2016. CHES 2016. Lecture Notes in Computer Science(), vol 9813. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-53140-2_18

Download citation

DOI: https://doi.org/10.1007/978-3-662-53140-2_18

Published:

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-53139-6

Online ISBN: 978-3-662-53140-2

eBook Packages: Computer ScienceComputer Science (R0)