Abstract

It has been a long-standing open problem whether the strong \(\lambda \)-calculus is a reasonable computational model, i.e. whether it can be implemented within a polynomial overhead with respect to the number of \(\beta \)-steps on models like Turing machines or RAM. Recently, Accattoli and Dal Lago solved the problem by means of a new form of sharing, called useful sharing, and realised via a calculus with explicit substitutions. This paper presents a new abstract machine for the strong \(\lambda \)-calculus based on useful sharing, the Useful Milner Abstract Machine, and proves that it reasonably implements leftmost-outermost evaluation. It provides both an alternative proof that the \(\lambda \)-calculus is reasonable and an improvement on the technology for implementing strong evaluation.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

References

Accattoli, B., Barenbaum, P., Mazza, D.: Distilling abstract machines. In: ICFP 2014, pp. 363–376 (2014)

Accattoli, B., Barenbaum, P., Mazza, D.: A strong distillery. In: Feng, X., Park, S. (eds.) APLAS 2015. LNCS, vol. 9458, pp. 231–250. Springer, Heidelberg (2015). doi:10.1007/978-3-319-26529-2_13

Accattoli, B., Bonelli, E., Kesner, D., Lombardi, C.: A nonstandard standardization theorem. In: POPL, pp. 659–670 (2014)

Accattoli, B., Coen, C.S.: On the relative usefulness of fireballs. In: LICS 2015, pp. 141–155 (2015)

Accattoli, B., Dal Lago, U.: On the invariance of the unitary cost model for head reduction. In: RTA, pp. 22–37 (2012)

Accattoli, B., Lago, U.D.: (Leftmost-Outermost) Beta reduction is invariant, indeed. Logical Methods Comput. Sci. 12(1), 1–46 (2016)

Ariola, Z.M., Bohannon, A., Sabry, A.: Sequent calculi and abstract machines. ACM Trans. Program. Lang. Syst. 31(4), 13:1–13:48 (2009)

Asperti, A., Mairson, H.G.: Parallel beta reduction is not elementary recursive. In: POPL, pp. 303–315 (1998)

Blelloch, G.E., Greiner, J.: Parallelism in sequential functional languages. In: FPCA, pp. 226–237 (1995)

Boutiller, P.: De nouveaus outils pour manipuler les inductif en Coq. Ph.D. thesis, Université Paris Diderot, Paris 7 (2014)

de Carvalho, D.: Execution time of lambda-terms via denotational semantics and intersection types (2009). CoRR abs/0905.4251

Crégut, P.: An abstract machine for lambda-terms normalization. In: LISP and Functional Programming, pp. 333–340 (1990)

Crégut, P.: Strongly reducing variants of the Krivine abstract machine. Higher Order Symbol. Comput. 20(3), 209–230 (2007)

Dal Lago, U., Martini, S.: The weak lambda calculus as a reasonable machine. Theoret. Comput. Sci. 398(1–3), 32–50 (2008)

Danos, V., Regnier, L.: Head linear reduction. Technical report (2004)

Danvy, O., Nielsen, L.R.: Refocusing in reduction semantics. Technical report RS-04-26, BRICS (2004)

Danvy, O., Zerny, I.: A synthetic operational account of call-by-need evaluation. In: PPDP, pp. 97–108 (2013)

Dénès, M.: Étude formelle d’algorithmes efficaces en algèbre linéaire. Ph.D. thesis, Université de Nice - Sophia Antipolis (2013)

Ehrhard, T., Regnier, L.: Böhm trees, Krivine’s machine and the Taylor expansion of lambda-terms. In: Beckmann, A., Berger, U., Löwe, B., Tucker, J.V. (eds.) CiE 2006. LNCS, vol. 3988, pp. 186–197. Springer, Heidelberg (2006)

Fernández, M., Siafakas, N.: New developments in environment machines. Electron. Notes Theoret. Comput. Sci. 237, 57–73 (2009)

Friedman, D.P., Ghuloum, A., Siek, J.G., Winebarger, O.L.: Improving the lazy Krivine machine. Higher Order Symbol. Comput. 20(3), 271–293 (2007)

García-Pérez, Á., Nogueira, P., Moreno-Navarro, J.J.: Deriving the full-reducing Krivine machine from the small-step operational semantics of normal order. In: PPDP, pp. 85–96 (2013)

Grégoire, B., Leroy, X.: A compiled implementation of strong reduction. In: ICFP 2002, pp. 235–246 (2002)

Milner, R.: Local bigraphs and confluence: two conjectures. Electron. Notes Theoret. Comput. Sci. 175(3), 65–73 (2007)

Sands, D., Gustavsson, J., Moran, A.: Lambda calculi and linear speedups. In: Mogensen, T.Æ., Schmidt, D.A., Sudborough, I.H. (eds.) The Essence of Computation. LNCS, vol. 2566, pp. 60–82. Springer, Heidelberg (2002)

Sestoft, P.: Deriving a lazy abstract machine. J. Funct. Program. 7(3), 231–264 (1997)

Smith, C.: Abstract machines for higher-order term sharing. Presented at IFL 2014 (2014)

Wand, M.: On the correctness of the Krivine machine. Higher Order Symbol. Comput. 20(3), 231–235 (2007)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Proofs of the Main Lemmas and Theorems

Proofs of the Main Lemmas and Theorems

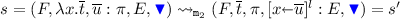

1.1 Proof of One-Step Weak Bisimulation Lemma (Lemma 7, p. 13)

-

1.

Commutative: the proof is exactly as the one for the Checking AM (Lemma 4.2), that can be found in the longer version of this paper on the author’s webpage.

-

2.

Exponential:

-

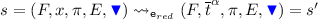

Case

with

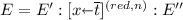

with  Then

Then  for some environments \(E'\), and \(E''\). Remember that terms are considered up to \(\alpha \)-equivalence.

for some environments \(E'\), and \(E''\). Remember that terms are considered up to \(\alpha \)-equivalence.

In the chain of equalities we can replace

with

with  because by well-labeledness the variables bound by \(E'\) are fresh with respect to \(\overline{t}\).

because by well-labeledness the variables bound by \(E'\) are fresh with respect to \(\overline{t}\). -

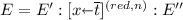

Case

with

with  The proof that

The proof that  is exactly as in the previous case.

is exactly as in the previous case.

-

-

3.

Multiplicative:

-

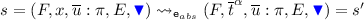

Case

Note that

Note that  is LO by the decoding invariant (Lemma 6.4). Note also that by the name invariant (Lemma 6.3b) \(x\) can only occur in \(\overline{t}\). Then:

is LO by the decoding invariant (Lemma 6.4). Note also that by the name invariant (Lemma 6.3b) \(x\) can only occur in \(\overline{t}\). Then:

-

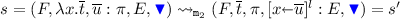

Case

with

with  not a variable. Note that

not a variable. Note that  is LO by the decoding invariant (Lemma 6.4). Note also that by the name invariant (Lemma 6.3b) \(x\) can only occur in \(\overline{t}\). Then:

is LO by the decoding invariant (Lemma 6.4). Note also that by the name invariant (Lemma 6.3b) \(x\) can only occur in \(\overline{t}\). Then:

-

1.2 Proof of the Progress Lemma (Lemma 8, p. 13)

A simple inspection of the machine transitions shows that final states have the form  . Then by the normal form invariant (Lemma 6.2a)

. Then by the normal form invariant (Lemma 6.2a)  is \(\beta \)-normal. \(\square \)

is \(\beta \)-normal. \(\square \)

1.3 Proof of the Weak Bisimulation Theorem (Theorem 2, p. 13)

-

1.

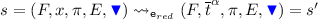

By induction on the length \(|\rho |\) of \(\rho \), using the one-step weak simulation lemma (Lemma 7). If \(\rho \) is empty then the empty derivation satisfies the statement. If \(\rho \) is given by \(\sigma :s\leadsto _{}^*s''\) followed by \(s''\leadsto _{}s'\) then by i.h. there exists \(e:\underline{s}\rightarrow _{\tiny \mathtt {LO}\beta }^*\underline{s''}\) s.t. \(|e|= |\sigma |_{{\mathtt m}}\). Cases of \(s''\leadsto _{}s'\):

-

(a)

Commutative or Exponential. Then \(\underline{s''}= \underline{s'}\) by Lemmas 7.1 and 7.2, and the statement holds taking \(d\mathrel {:=}e\) because \(|d|= |e|=_{\textit{i.h.}} |\sigma |_{{\mathtt m}}= |\rho |_{{\mathtt m}}\).

-

(b)

Multiplicative. Then \(\underline{s''}\rightarrow _{\tiny \mathtt {LO}\beta }\underline{s'}\) by Lemma 7.3 and defining \(d\) as \(e\) followed by such a step we obtain \(|d|= |e|+1 =_{\textit{i.h.}} |\sigma |_{{\mathtt m}}+ 1 = |\rho |_{{\mathtt m}}\).

-

(a)

-

2.

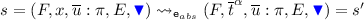

We use \(\mathtt {nf}_{{\mathtt e}{\mathtt c}}(s)\) to denote the normal form of \(s\) with respect to exponential and commutative transitions, that exists and is unique because \(\leadsto _{{\mathtt c}}\cup \leadsto _{{\mathtt e}}\) terminates (termination is given by forthcoming Theorems 3 and 4, that are postponed because they actually give precise complexity bounds, not just termination) and the machine is deterministic (as it can be seen by an easy inspection of the transitions). The proof is by induction on the length of \(d\). If \(d\) is empty then the empty execution satisfies the statement. If \(d\) is given by \(e:\overline{t}\rightarrow _{\tiny \mathtt {LO}\beta }^*w\) followed by \(w\rightarrow _{\tiny \mathtt {LO}\beta }u\) then by i.h. there is an execution \(\sigma :s\leadsto _{}^*s''\) s.t. \(w= \underline{s''}\) and \(|\sigma |_{{\mathtt m}}= |e|\). Note that since exponential and commutative transitions are mapped on equalities, \(\sigma \) can be extended as \(\sigma ':s\leadsto _{}^*s''\leadsto _{{\mathtt e}_{red},{\mathtt e}_{abs},{\mathtt c}_{1,2,3,4,5,6}}^*\mathtt {nf}_{{\mathtt e}{\mathtt c}}(s'')\) with \(\underline{\mathtt {nf}_{{\mathtt e}{\mathtt c}}(s'')} = w\) and \(|\sigma '|_{{\mathtt m}}=|e|\). By the progress property (Lemma 8) \(\mathtt {nf}_{{\mathtt e}{\mathtt c}}(s'')\) cannot be a final state, otherwise \(w= \underline{\mathtt {nf}_{{\mathtt e}{\mathtt c}}(s'')}\) could not reduce. Then \(\mathtt {nf}_{{\mathtt e}{\mathtt c}}(s'') \leadsto _{{\mathtt m}}s'\) (the transition is necessarily multiplicative because \(\mathtt {nf}_{{\mathtt e}{\mathtt c}}(s'')\) is normal with respect to the other transitions). By the one-step weak simulation lemma (Lemma 7.3) \(\underline{\mathtt {nf}_{{\mathtt e}{\mathtt c}}(s'')} = w\rightarrow _{\tiny \mathtt {LO}\beta }\underline{s'}\) and by determinism of \(\rightarrow _{\tiny \mathtt {LO}\beta }\) (Lemma 1) \(\underline{s'}= u\). Then the execution \(\rho \) defined as \(\sigma '\) followed by \(\mathtt {nf}_{{\mathtt e}{\mathtt c}}(s'') \leadsto _{{\mathtt m}}s'\) satisfy the statement, as \(|\rho |_{{\mathtt m}} = |\sigma '|_{{\mathtt m}} +1 = |\sigma |_{{\mathtt m}} +1 = |e|+ 1 = |d|\). \(\square \)

1.4 Proof of the Exponentials vs Multiplicatives Theorem (Theorem 3, p. 15)

-

1.

We prove that \(|\sigma |_{{\mathtt e}}\le |E|\). The statement follows from the environment size invariant (Lemma 9.2), for which \(|E|\le |\rho |_{{\mathtt m}}\).

If \(|\sigma |_{{\mathtt e}}= 0\) it is immediate. Then assume \(|\sigma |_{{\mathtt e}}> 0\), so that there is a first exponential transition in \(\sigma \), i.e. \(\sigma \) has a prefix \(s'\leadsto _{{\mathtt c}}^*\leadsto _{{\mathtt e}}s'''\) followed by an execution \(\tau :s'''\leadsto _{}^* s''\) such that \(|\tau |_{{\mathtt m}}= 0\). Cases of the first exponential transition \(\leadsto _{{\mathtt e}}\):

-

Case \(\leadsto _{{\mathtt e}_{abs}}\): the next transition is necessarily multiplicative, and so \(\tau \) is empty. Then \(|\sigma |_{{\mathtt e}}= 1\). Since the environment is non-empty (otherwise \(\leadsto _{{\mathtt e}_{abs}}\) could not apply), \(|\sigma |_{{\mathtt e}}\le |E|\) holds.

-

Case \(\leadsto _{{\mathtt e}_{(red,n)}}\): we prove by induction on n that \(|\sigma |_{{\mathtt e}}\le n\), that gives what we want because \(n\le |E|\) by Remark 1. Cases:

-

\(n = 1\)) Then \(\tau \) has the form \(s'''\leadsto _{{\mathtt c}}^* s''\) by Lemma 11.1, and so \(|\sigma |_{{\mathtt e}}= 1\).

-

\(n = 2\)) Then \(\tau \) is a prefix of \(\leadsto _{{\mathtt c}}^*\leadsto _{{\mathtt e}_{abs}}\) or \(\leadsto _{{\mathtt c}}^*\leadsto _{{\mathtt e}_{(red,1)}}\) by Lemma 11.2. In both cases \(|\sigma |_{{\mathtt e}}\le 2\).

-

\(n > 2\)) Then by Lemma 11.3 \(\tau \) is either shorter or equal to \(\leadsto _{{\mathtt c}}^*\leadsto _{{\mathtt e}_{(red,n-1)}}\), and so \(|\sigma |_{{\mathtt e}}\le 2\), or it is longer than \(\leadsto _{{\mathtt c}}^*\leadsto _{{\mathtt e}_{(red,n-1)}}\), i.e. it writes as \(\leadsto _{{\mathtt c}}^*\) followed by an execution \(\tau '\) starting with \(\leadsto _{{\mathtt e}_{(red,n-1)}}\). By i.h. \(|\tau '|\le n-1\) and so \(|\sigma |\le n\).

-

-

-

2.

This is a standard reasoning: since by local boundedness (the previous point) \({\mathtt m}\)-free sequences have a number of \({\mathtt e}\)-transitions that are bound by the number of preceding \({\mathtt m}\)-transitions, the sum of all \({\mathtt e}\)-transitions is bound by the square of \({\mathtt m}\)-transitions. It is analogous to the proof of Theorem 7.2.3 in [6]. \(\square \)

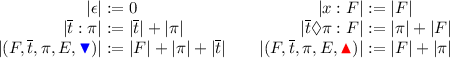

1.5 Proof of Commutatives vs Exponentials Theorem (Theorem 4, p. 15)

-

1.

We prove a slightly stronger statement, namely

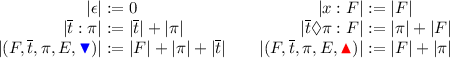

, by means of the following notion of size for stacks/frames/states:

, by means of the following notion of size for stacks/frames/states:

By direct inspection of the rules of the machine it can be checked that:

-

Exponentials Increase the Size: if \(s\leadsto _{{\mathtt e}}s'\) is an exponential transition, then \(|s'| \le |s| + |t|\) where \(|t|\) is the size of the initial term; this is a consequence of the fact that exponential steps retrieve a piece of code from the environment, which is a subterm of the initial term by Lemma 9.1;

-

Non-Exponential Evaluation Transitions Decrease the Size: if \(s\leadsto _{a} s'\) with

then

then  (for

(for  because the transition switches to backtracking, and thus the size of the code is no longer taken into account);

because the transition switches to backtracking, and thus the size of the code is no longer taken into account); -

Backtracking Transitions do not Change the Size: if \(s\leadsto _{a} s'\) with

then

then  .

.

Then a straightforward induction on \(|\rho |\) shows that

i.e. that

. Now note that \(|\cdot |\) is always non-negative and that since \(s\) is initial we have \(|s| = |t|\). We can then conclude with

. Now note that \(|\cdot |\) is always non-negative and that since \(s\) is initial we have \(|s| = |t|\). We can then conclude with

-

-

2.

We have to estimate

. Note that

. Note that-

(a)

, as

, as  pops variables from

pops variables from  , pushed only by

, pushed only by  ;

; -

(b)

, as

, as  pops pairs

pops pairs  from

from  , pushed only by

, pushed only by  ;

; -

(c)

, as

, as  ends backtracking phases, started only by

ends backtracking phases, started only by  .

.

Then

.

. -

(a)

-

3.

We have

. \(\square \)

. \(\square \)

1.6 Proof of the Useful MAM Overhead Bound Theorem (Theorem 5, p. 15)

-

1.

By definition, the length of the execution \(\rho \) simulating \(d\) is given by \(|\rho |= |\rho |_{{\mathtt m}}+ |\rho |_{{\mathtt e}}+|\rho |_c\). Now, by Theorem 3.2 we have \(|\rho |_{{\mathtt e}}= O(|\rho |_{{\mathtt m}}^2)\) and by Theorem 4.3 we have \(|\rho |_c= O((1+|\rho |_{{\mathtt e}})\cdot |t|) = O((1+|\rho |_{{\mathtt m}}^2)\cdot |t|)\). Therefore, \(|\rho |= O((1+|\rho |_{{\mathtt e}})\cdot |t|) = O((1+|\rho |_{{\mathtt m}}^2)\cdot |t|)\). By Theorem 2.2 \(|\rho |_{{\mathtt m}}= |d|\), and so \(|\rho |= O((1+|d|^2)\cdot |t|)\).

-

2.

The cost of implementing \(\rho \) is the sum of the costs of implementing the multiplicative, exponential, and commutative transitions. Remember that the idea is that variables are implemented as references, so that environment can be accessed in constant time (i.e. they do not need to be accessed sequentially):

-

(a)

Commutative: every commutative transition evidently takes constant time. At the previous point we bounded their number with \(O((1+|d|^2)\cdot |t|)\), which is then also the cost of all the commutative transitions together.

-

(b)

Multiplicative: a \(\leadsto _{{\mathtt m}_1}\) transition costs \(O(|t|)\) because it requires to rename the current code, whose size is bound by the size of the initial term by the subterm invariant (Lemma 9.1a). A \(\leadsto _{{\mathtt m}_2}\) transition also costs \(O(|t|)\) because executing the Checking AM on \(\overline{u}\) takes \(O(|\overline{u}|)\) commutative steps (Theorem 1.2), commutative steps take constant time, and the size of \(\overline{u}\) is bound by \(|t|\) by the subterm invariant (Lemma 9.1b). Therefore, all together the multiplicative transitions cost \(O(|d|\cdot |t|)\).

-

(c)

Exponential: At the previous point we bounded their number with \(|\rho |_{{\mathtt e}}= O(|d|^2)\). Each exponential step copies a term from the environment, that by the subterm invariant (Lemma 9.1d) costs at most \(O(|t|)\), and so their full cost is \(O((1+|d|)\cdot {|t|}^2)\) (note that this is exactly the cost of the commutative transitions, but it is obtained in a different way).

Then implementing \(\rho \) on RAM takes \(O((1+|d|)\cdot {|t|}^2)\) steps. \(\square \)

-

(a)

Rights and permissions

Copyright information

© 2016 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Accattoli, B. (2016). The Useful MAM, a Reasonable Implementation of the Strong \(\lambda \)-Calculus. In: Väänänen, J., Hirvonen, Å., de Queiroz, R. (eds) Logic, Language, Information, and Computation. WoLLIC 2016. Lecture Notes in Computer Science(), vol 9803. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-52921-8_1

Download citation

DOI: https://doi.org/10.1007/978-3-662-52921-8_1

Published:

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-52920-1

Online ISBN: 978-3-662-52921-8

eBook Packages: Computer ScienceComputer Science (R0)

with

with  Then

Then  for some environments

for some environments

with

with  because by well-labeledness the variables bound by

because by well-labeledness the variables bound by  with

with  The proof that

The proof that  is exactly as in the previous case.

is exactly as in the previous case. Note that

Note that  is LO by the decoding invariant (Lemma

is LO by the decoding invariant (Lemma

with

with  not a variable. Note that

not a variable. Note that  is LO by the decoding invariant (Lemma

is LO by the decoding invariant (Lemma

, by means of the following notion of size for stacks/frames/states:

, by means of the following notion of size for stacks/frames/states:

then

then  (for

(for  because the transition switches to backtracking, and thus the size of the code is no longer taken into account);

because the transition switches to backtracking, and thus the size of the code is no longer taken into account); then

then  .

.

. Now note that

. Now note that

. Note that

. Note that , as

, as  pops variables from

pops variables from  , pushed only by

, pushed only by  ;

; , as

, as  pops pairs

pops pairs  from

from  , pushed only by

, pushed only by  ;

; , as

, as  ends backtracking phases, started only by

ends backtracking phases, started only by  .

. .

. .

.