Abstract

We revisit the exact round complexity of secure computation in the multi-party and two-party settings. For the special case of two-parties without a simultaneous message exchange channel, this question has been extensively studied and resolved. In particular, Katz and Ostrovsky (CRYPTO ’04) proved that 5 rounds are necessary and sufficient for securely realizing every two-party functionality where both parties receive the output. However, the exact round complexity of general multi-party computation, as well as two-party computation with a simultaneous message exchange channel, is not very well understood.

These questions are intimately connected to the round complexity of non-malleable commitments. Indeed, the exact relationship between the round complexities of non-malleable commitments and secure multi-party computation has also not been explored.

In this work, we revisit these questions and obtain several new results. First, we establish the following main results. Suppose that there exists a k-round non-malleable commitment scheme, and let \(k'=\max (4,k+1)\); then,

- (Two-party setting with simultaneous message transmission): there exists a \(k'\)-round protocol for securely realizing every two-party functionality;

- (Multi-party setting): there exists a \(k'\)-round protocol for securely realizing the multi-party coin-flipping functionality.

As a corollary of the above results, by instantiating them with existing non-malleable commitment protocols (from the literature), we establish that four rounds are both necessary and sufficient for both the results above. Furthermore, we establish that, for every multi-party functionality five rounds are sufficient.

We actually obtain a variety of results offering trade-offs between rounds and the cryptographic assumptions used, depending upon the particular instantiations of underlying protocols.

Research supported in part by a DARPA/ARL SAFEWARE award, AFOSR Award FA9550-15-1-0274, and NSF CRII Award 1464397. The views expressed are those of the author and do not reflect the official policy or position of the Department of Defense, the National Science Foundation, or the U.S. Government. Also, Antigoni Polychroniadou received funding from CTIC under the grant 61061130540 and from CFEM supported by the Danish Strategic Research Council. This work was done in part while the authors were visiting the Simons Institute for the Theory of Computing, supported by the Simons Foundation and by the DIMACS/Simons Collaboration in Cryptography through NSF grant #CNS-1523467.


1 Introduction

The round complexity of secure computation is a fundamental question in the area of secure computation [20, 39, 40]. In the past few years, we have seen tremendous progress on this question, culminating in constant-round protocols for securely computing any multi-party functionality [5, 9, 10, 21, 22, 27, 28, 36, 38]. These works essentially settle the question of the asymptotic round complexity of this problem.

The exact round complexity of secure computation, however, is still not very well understood. For the special case of two-party computation, Katz and Ostrovsky [26] proved that 5 rounds are necessary and sufficient. In particular, they proved that two-party coin-flipping cannot be achieved in 4 rounds, and presented a 5-round protocol for computing every functionality. To the best of our knowledge, the exact round complexity of multi-party computation has never been addressed before.

The standard model for multi-party computation assumes that parties are connected via authenticated point-to-point channels as well as simultaneous message exchange channels where everyone can send messages at the same time. Therefore, in each round, all parties can simultaneously exchange messages.

This is in sharp contrast to the “standard” model for two-party computation where, usually, a simultaneous message exchange framework is not considered. Due to this difference in the communication model, the negative result of Katz-Ostrovsky [26] for 4 rounds does not apply to the multi-party setting. In particular, a 4-round multi-party coin-flipping protocol might still exist!

In other words, the results of Katz-Ostrovsky only hold for the special case of two parties without a simultaneous message exchange channel. The setting of two-party computation with a simultaneous message exchange channel has not been addressed before. Therefore, in this work we address the following two questions:

What is the exact round complexity of secure multi-party computation?

In the presence of a simultaneous message exchange channel, what is the exact round complexity of secure two-party computation?

These questions are intimately connected to the round complexity of non-malleable commitments [12]. Indeed, new results for non-malleable commitments have almost immediately translated to new results for secure computation. For example, the round complexity of coin-flipping was improved by Barak [3], and of every multi-party functionality by Katz et al. [27] based on techniques from non-malleable commitments. Likewise, black-box constructions for constant-round non-malleable commitments resulted in constant-round black-box constructions for secure computation [21, 38]. However, all of these results only focus on asymptotic improvements and do not try to resolve the exact round complexity, thereby leaving the following fundamental question unresolved:

What is the relationship between the exact round complexities of non-malleable commitments and secure computation?

This question is at the heart of understanding the exact round complexity of secure computation in both the multi-party setting and the two-party setting with simultaneous message transmission.

1.1 Our Contributions

In this work we try to resolve the questions mentioned above. We start by focusing on the simpler case of two-party computation with a simultaneous message exchange channel, since it is a direct special case of the multi-party setting. We then translate our results to the multi-party setting.

Lower bounds for Coin-Flipping. We start by focusing on the following question.

How many simultaneous message exchange rounds are necessary for secure two-party computation?

We show that four simultaneous message exchange rounds are necessary. More specifically, we show that:

- Theorem (Informal): Let \(\kappa \) be the security parameter. Even in the simultaneous message model, there does not exist a three-round protocol for the two-party coin-flipping functionality for \(\omega (\log \kappa )\) coins which can be proven secure via black-box simulation.

In fact, as a corollary all of the rounds must be “strictly simultaneous message transmissions”, that is, both parties must simultaneously send messages in each of the 4 rounds. This is because in the simultaneous message exchange setting, security is proven against so-called “rushing adversaries” who, in each round, can decide their message after seeing the messages of all honest parties in that round. Consequently, if only one party sends a message in, for example, the fourth round, this message can be “absorbed” within the third message of this party, resulting in a three-round protocol.

Results in the Two-Party Setting with a Simultaneous Message Exchange Channel. Next, we consider the task of constructing a protocol for coin-flipping (or any general functionality) in four simultaneous message exchange rounds and obtain a positive result. In fact, we obtain our results by directly exploring the exact relationship between the round complexities of non-malleable commitments and secure computation. Specifically, we first prove the following result:

- Theorem (Informal): If there exists a k-round protocol for (parallel) non-malleable commitment, then there exists a \(k'\)-round protocol for securely computing every two-party functionality with black-box simulation in the presence of a malicious adversary in the simultaneous message model, where \(k'=\max (4,k+1)\).

Instantiating this protocol with non-malleable commitments from [36], we get a four round protocol for every two-party functionality in the presence of a simultaneous message exchange channel, albeit under a non-standard assumption (adaptive one-way function). However, a recent result by Goyal et al. [23] constructs a non-malleable commitment protocol in three rounds from injective one-way functions, although their protocol does not immediately extend to the parallel setting. Instantiating our protocol with such a three-round parallel non-malleable commitment would yield a four round protocol under standard assumptions.

Results in the Multi-party Setting. Next, we focus on the case of the multi-party coin flipping functionality. We show that a simpler version of our two-party protocol gives a result for multi-party coin-flipping:

- Theorem (Informal): If there exists a k-round protocol for (parallel) non-malleable commitments, then there exists a \(k'\)-round protocol for securely computing the multi-party coin-flipping functionality with black-box simulation in the presence of a malicious adversary for polynomially many coins, where \(k'=\max (4,k+1)\).

Combining this result with the two-round multi-party protocol of Mukherjee and Wichs [34] (based on LWE [37]), we obtain a \(k'+2\)-round protocol for computing every multi-party functionality. Instantiating these protocols with non-malleable commitments from [36], we obtain a four-round protocol for coin-flipping and a six-round protocol for every functionality.
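As a quick sanity check of the round counts stated above, the following minimal sketch tabulates \(k'=\max (4,k+1)\) and \(k'+2\) for a few values of k. The function names `k_prime` and `general_mpc_rounds` are hypothetical and introduced only for this illustration.

```python
def k_prime(k):
    """Rounds of the coin-flipping protocol built from a k-round nmcom."""
    return max(4, k + 1)


def general_mpc_rounds(k):
    """Rounds after composing coin-flipping with a two-round MPC protocol."""
    return k_prime(k) + 2


if __name__ == "__main__":
    # Any k <= 3 gives the four-round / six-round figures quoted in the text.
    for k in range(1, 6):
        print(f"k={k}: coin-flipping={k_prime(k)}, general MPC={general_mpc_rounds(k)}")
```

For any \(k\le 3\) the formula yields four rounds for coin-flipping and six rounds for general functionalities, matching the instantiation discussed above.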

Finally, we show that the coin-flipping protocol for the multi-party setting can be extended to compute what we call the “coin-flipping with committed inputs” functionality. Using this protocol with the two-round protocol of [16] based on indistinguishability obfuscation [17], we obtain a five round MPC protocol.

1.2 Related Work

The round complexity of secure computation has a rich and long history. We only mention the results that are most relevant to this work in the computational setting. Note that unconditionally secure protocols such as [6, 8] are inherently non-constant round. More specifically, the impossibility result of [11] implies that a fundamentally new approach is needed to construct protocols that are efficient in the circuit size of the evaluated function and whose communication complexity beats that of BGW, CCD, GMW, etc.

For the computational setting and the special case of two-party computation, the semi-honest secure protocol of Yao [33, 39, 40] consists of only three rounds (see Sect. 2). For malicious security, a constant-round protocol based on GMW was presented by Lindell [31]. Ishai et al. [25] presented a different approach which also results in a constant-round protocol.

The problem of exact round complexity of two party computation was studied in the beautiful work of Katz and Ostrovsky [26] who provided a 5 round protocol for computing any two-party functionality. They also ruled out the possibility of a four round protocol for coin-flipping, thus completely resolving the case of two party (albeit without simultaneous message exchange, as discussed earlier). Recently Ostrovsky et al. [35] constructed a different 5-round protocol for the general two-party computation by only relying on black-box usage of the underlying trapdoor one-way permutation.

As discussed earlier, the standard setting for two-party computation does not consider simultaneous message exchange channels, and hence the negative results for the two-party setting do not apply to the multi-party setting where simultaneous message exchange channels are standard. To the best of our knowledge, prior to our work, the case of the two-party setting in the presence of a simultaneous message exchange channel was not explored in the context of the exact round complexity of secure computation.

For the multi-party setting, the exact round complexity has remained open for a long time. The work of [5] gave the first constant-round non-black-box protocol for honest majority (improved by the black-box protocols of [9, 10]). Katz et al. [27] adapted techniques from [3, 5, 7, 12] to construct the first asymptotically round-optimal protocols for any multi-party functionality for the dishonest majority case. The constant-round protocol of [27] relied on non-black-box use of the adversary’s algorithm [2]. Constant-round protocols making black-box use of the adversary were constructed by [21, 28, 36], and making black-box use of one-way functions by Wee in \(\omega (1)\) rounds [38] and by Goyal in constant rounds [21]. Furthermore, based on the non-malleable commitment scheme of [21], the authors of [22] construct a constant-round multi-party coin-tossing protocol. Lin et al. [30] presented a unified approach to construct UC-secure protocols from non-malleable commitments. However, as mentioned earlier, none of the aforementioned works focused on the exact round complexity of secure computation based on the round complexity of non-malleable commitments. For a detailed survey of the round complexity of secure computation in the preprocessing model or in the CRS model we refer to [1].

1.3 An Overview of Our Approach

We now provide an overview of our approach. As discussed earlier, we first focus on the two-party setting with a simultaneous message exchange channel.

The starting point of our construction is the Katz-Ostrovsky (KO) protocol [26], which is a four-round protocol for one-sided functionalities, i.e., functionalities in which only one party gets the output. Recall that this protocol does not assume the presence of a simultaneous message exchange channel. At the cost of an extra round, the KO two-party protocol can be converted to a complete (i.e. both-sided) protocol where both parties get their corresponding outputs via a standard trick [18] as follows: parties compute a modified functionality in which the first party \(P_1\) learns its output as well as the output of the second party \(P_2\) in an “encrypted and authenticated” form. It then sends the encrypted value to \(P_2\) who can decrypt and verify its output.
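The “encrypted and authenticated” trick can be sketched as follows. This is only an illustration under stated assumptions: \(P_2\) is assumed to supply a one-time pad and a MAC key as extra inputs, HMAC-SHA256 stands in for the authentication mechanism, and the names `modified_functionality` and `p2_recover` are hypothetical. The paper does not fix these choices here.

```python
import hashlib
import hmac
import secrets


def modified_functionality(F1, F2, x, y, pad, mac_key):
    """One-sided functionality: P1 learns F1(x, y) plus a masked, tagged F2(x, y).

    Assumption: F2 returns bytes of the same length as `pad`, and (pad, mac_key)
    are extra inputs chosen by P2.
    """
    out1 = F1(x, y)
    out2 = F2(x, y)
    ciphertext = bytes(a ^ b for a, b in zip(out2, pad))           # one-time pad
    tag = hmac.new(mac_key, ciphertext, hashlib.sha256).digest()   # authentication
    return out1, (ciphertext, tag)


def p2_recover(ciphertext, tag, pad, mac_key):
    """Extra round: P2 verifies and unmasks the value forwarded by P1."""
    expected = hmac.new(mac_key, ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        return None  # P1 tampered with the forwarded value
    return bytes(a ^ b for a, b in zip(ciphertext, pad))


if __name__ == "__main__":
    F1 = lambda x, y: max(x, y)
    F2 = lambda x, y: (x * y).to_bytes(4, "big")
    pad, key = secrets.token_bytes(4), secrets.token_bytes(32)
    out1, (ct, tag) = modified_functionality(F1, F2, 6, 7, pad, key)
    print(out1, int.from_bytes(p2_recover(ct, tag, pad, key), "big"))
```

The forwarding step performed by \(P_1\) is exactly the extra (fifth) round that the remainder of this section tries to avoid.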

A natural first attempt is to adapt this simple and elegant approach to the setting of simultaneous message exchange channel, so that the “encrypted/authenticated output” can somehow be communicated to \(P_2\) simultaneously at the same time when \(P_2\) sends its last message, thereby removing the additional round.

It is not hard to see that any such approach would not work. Indeed, in the presence of malicious adversaries while dealing with a simultaneous message exchange channel, the protocol must be proven secure against “rushing adversaries” who can send their messages after looking at the messages sent by the other party. This implies that, if \(P_1\) could indeed send the “encrypted/authenticated output” message simultaneously with the last message from \(P_2\), it could have sent it earlier as well. Now, applying this argument repeatedly, one can conclude that any protocol which does not necessarily use the simultaneous message exchange channel in all of the four rounds is bound to fail (see Sect. 3). In particular, any such protocol can be transformed, by simple rescheduling, into a 3-round protocol, contradicting our lower bound.

This means that we must think of an approach which must use the simultaneous message exchange channel in each round. In light of this, a natural second attempt is to run two executions of a 4-round protocol (in which only one party learns the output) in “opposite” directions. This would allow both parties to learn the output. Unfortunately, such approaches do not work in general since there is no guarantee that an adversarial party would use the same input in both protocol executions. Furthermore, another problem with this approach is that of “non-malleability” where a cheating party can make its input dependent on the honest party’s input: for example, it can simply “replay” back the messages it receives. A natural approach to prevent such attacks is to deploy non-malleable commitments, as we discuss below.
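The replay problem mentioned above can be illustrated with a deliberately naive commit-then-open coin flip. The protocol below is a hypothetical strawman, not a construction from this paper: each party commits to a random string with a plain hash commitment, both open, and the output is the XOR. A rushing adversary that copies the honest commitment and later the honest opening forces the all-zero output.

```python
import hashlib
import secrets


def commit(value):
    """Plain (malleable-by-replay) hash commitment; returns (commitment, opening)."""
    r = secrets.token_bytes(16)
    return hashlib.sha256(r + value).digest(), (r, value)


def verify(commitment, opening):
    r, value = opening
    return hashlib.sha256(r + value).digest() == commitment


def naive_coin_flip_with_replay():
    # Round 1: the honest party commits; the rushing adversary sees the
    # commitment before sending its own message and simply copies it.
    honest_coin = secrets.token_bytes(4)
    com_honest, open_honest = commit(honest_coin)
    com_adv = com_honest

    # Round 2: the honest party opens; the adversary replays that opening.
    open_adv = open_honest
    assert verify(com_honest, open_honest) and verify(com_adv, open_adv)

    # Output is the XOR of both opened strings.
    return bytes(a ^ b for a, b in zip(open_honest[1], open_adv[1]))


if __name__ == "__main__":
    print(naive_coin_flip_with_replay().hex())
```

Every run outputs the all-zero string, so the adversary fully controls the “random” coins. A tag-based non-malleable commitment is meant to rule out exactly this kind of copying across the two directions.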

Simultaneous Executions + Non-malleable Commitments. Following the approach discussed above we observe that:

1. A natural direction is to use two simultaneous executions of the KO protocol (or any other similar 4-round protocol) over the simultaneous message exchange channel in opposite directions. Since we have only 4 rounds, a different protocol (such as some form of 2-round semi-honest protocol based on Yao) is not an option.

2. We must use non-malleable commitments to prevent replay/mauling attacks.

We remark that, the fact that non-malleable commitments come up as a natural tool is not a coincidence. As noted earlier, the multi-party case is well known to be inherently connected to non-malleable commitments. Even though our current focus is solely on the two-party case, this setting is essentially (a special case of) the multi-party setting due to the use of the simultaneous message exchange channel. Prior to our work, non-malleable commitments have been used extensively to design multi-party protocols [21, 22, 29, 33]. However, all of these works result in rather poor round complexity because of their focus on asymptotic, as opposed to exact, number of rounds.

To obtain our protocol, we put the above two ideas together, modifying several components of KO to use non-malleable commitments. These components are then put together in a way such that, even though there are essentially two simultaneous executions of the protocol in opposite directions, messages of one protocol cannot be maliciously used to affect the other messages. In the following, we highlight the main ideas of our construction:

1. The first change we make is to the proof systems used by KO. Recall that KO uses the Feige-Shamir (FS) protocol as a mechanism to “force the output” in the simulation. Our first crucial modification is to consider a variant of the FS protocol in which the verifier gives two non-malleable commitments (\(\mathsf {nmcom}\)) to two strings \(\sigma _1,\sigma _2\) and gives a witness indistinguishable proof-of-knowledge (WIPOK) that it knows one of them. These are essentially the simulation trapdoors, but implemented through \(\mathsf {nmcom}\) instead of a one-way function. This change is actually crucial, and as such, brings in an effect similar to “simulation sound” zero-knowledge.

2. The oblivious transfer protocol based on trapdoor permutations and coin-tossing now performs coin-tossing with the help of \(\mathsf {nmcom}\) instead of simple commitments. This is a crucial change since this allows us to slowly get rid of the honest party’s input in the simulation and still argue that the distribution of the adversary’s input does not change as a result of this.

We note that there are many parallel executions of \(\mathsf {nmcom}\) that take place at this stage, and therefore, we require that \(\mathsf {nmcom}\) should be non-malleable under many parallel executions. This is indeed true for most \(\mathsf {nmcom}\).

3. Finally, we introduce a mechanism to ensure that the two parties use the exact same input in both executions. Roughly speaking, this is done by requiring the parties to prove consistency of messages “across” protocols.

4. To keep the number of rounds to \(k+1\) (or 4 if \(k<3\)), many of the messages discussed above are “absorbed” with other rounds by running in parallel.

Multi-party Setting. The above protocol does not directly extend to the multi-party setting. Nevertheless, for the special case of coin flipping, we show that a (simplified) version of the above protocol works for the multi-party case. This is because the coin-tossing functionality does not really require any computation, and therefore, we can get rid of components such as oblivious transfer. In fact, this can be extended “slightly more” to also realize the “coin-flipping with committed inputs” functionality, since committing the input does not depend on the inputs of other parties.

Next, to obtain our result for general functionalities, we simply invoke known results: using [34] with coin-flipping gives us a six-round protocol, and using [16] gives a five-round result.

2 Preliminaries

Notation. We denote the security parameter by \(\kappa \). We say that a function \(\mu :\mathbb {N}\rightarrow \mathbb {R}\) is negligible if for every positive polynomial \(p(\cdot )\) and all sufficiently large \(\kappa \)’s it holds that \(\mu (\kappa )<\frac{1}{p(\kappa )}\). We use the abbreviation PPT to denote probabilistic polynomial-time. We often use [n] to denote the set \(\{1,...,n\}\). Moreover, we use \(d \leftarrow \mathcal{D}\) to denote the process of sampling d from the distribution \(\mathcal{D}\) or, if \(\mathcal{D}\) is a set, a uniform choice from it. If \(\mathcal{D}_1\) and \(\mathcal{D}_2\) are two distributions, then we denote that they are statistically close by \(\mathcal{D}_1 \approx _{\mathrm {s}}\mathcal{D}_2\); we denote that they are computationally indistinguishable by \(\mathcal{D}_1 \approx _{\mathrm {c}}\mathcal{D}_2\); and we denote that they are identical by \( \mathcal{D}_1 \equiv \mathcal{D}_2\). Let V be a random variable corresponding to the distribution \(\mathcal{D}\). Sometimes we abuse notation by using V to denote the corresponding distribution \(\mathcal{D}\).

We assume familiarity with several standard cryptographic primitives. For notational purposes, we recall here the basic working definitions for some of them. We skip the well-known formal definitions for secure two-party and multi-party computations (see full version for a formal description). It will be sufficient to have notation for the two-party setting. We denote a two party functionality by \(F : \{0,1\}^* \times \{0,1\}^*\rightarrow \{0,1\}^* \times \{0,1\}^*\) where \(F = (F_1, F_2)\). For every pair of inputs (x, y), the output-pair is a random variable \((F_1(x, y), F_2(x, y))\) ranging over pairs of strings. The first party (with input x) should obtain \(F_1(x, y)\) and the second party (with input y) should obtain \(F_2(x, y)\). Without loss of generality, we assume that F is deterministic. The security is defined through the ideal/real world paradigm where for adversary \(\mathcal{A}\) participating in the real world protocol, there exists an ideal world simulator \(\mathcal{S}\) such that for every (x, y), the output of \(\mathcal{S}\) is indistinguishable from that of \(\mathcal{A}\). See the full version for an extended discussion.

We now recall the definitions for non-malleable commitments as well as some components from the work of Katz-Ostrovsky [26].

2.1 Tag Based Non-malleable Commitments

Let \(\mathsf {nmcom}=\langle C,R\rangle \) be a k round commitment protocol where C and R represent (randomized) committer and receiver algorithms, respectively. Denote the messages exchanged by \((\mathsf {nm}_1,\ldots ,\mathsf {nm}_{k})\) where \(\mathsf {nm}_i\) denotes the message in the i-th round.

For some string \(u\in \{0,1\}^\kappa \), tag \(\mathsf {id}\in \{0,1\}^t\), non-uniform PPT algorithm M with “advice” string \(z\in \{0,1\}^*\), and security parameter \(\kappa \), define \((v,\mathsf {view})\) to be the output of the following experiment: M on input \((1^\kappa ,z)\), interacts with C who commits to u with tag \(\mathsf {id}\); simultaneously, M interacts with \(R({1^\kappa ,\,\widetilde{\mathsf {id}}})\) where \(\widetilde{\mathsf {id}}\) is arbitrarily chosen by M (M’s interaction with C is called the left interaction, and its interaction with R is called the right interaction); M controls the scheduling of messages; the output of the experiment is \((v,\mathsf {view})\) where v denotes the value M commits to R in the right execution unless \(\widetilde{\mathsf {id}}=\mathsf {id}\) in which case \(v=\bot \), and \(\mathsf {view}\) denotes the view of M in both interactions.

Definition 1

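(Tag based non-malleable commitments). A commitment scheme \(\mathsf {nmcom}=\langle C,R\rangle \) is said to be non-malleable with respect to commitments if for every non-uniform PPT algorithm M (man-in-the-middle), for every pair of strings \((u_0, u_1)\in \{0,1\}^\kappa \times \{0,1\}^\kappa \), every tag-string \(\mathsf {id}\in \{0,1\}^{t}\), and every (advice) string \(z\in \{0,1\}^{*}\), the following two distributions are computationally indistinguishable:

$$\begin{aligned} (v_0,\mathsf {view}_0) \approx _{\mathrm {c}} (v_1,\mathsf {view}_1), \end{aligned}$$

where, for \(b\in \{0,1\}\), \((v_b,\mathsf {view}_b)\) denotes the output of the experiment above when C commits to \(u_b\) with tag \(\mathsf {id}\).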
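To see what the man-in-the-middle experiment rules out, the following toy sketch runs it against a deliberately malleable commitment. Everything here is an assumption made for illustration: the ElGamal-style commitment over a tiny group, the parameters `P`, `Q`, `G`, the trapdoor `X` used by the (inefficient) experiment to read off the committed value, and the function names. The toy scheme ignores tags, works over \(\mathbb {Z}_Q\) rather than \(\{0,1\}^\kappa \), and is not secure.

```python
import secrets

P, Q, G = 467, 233, 4      # toy group: P = 2*Q + 1, G has prime order Q
X = 57                     # trapdoor used only by the experiment to extract v
H = pow(G, X, P)


def commit(u):
    """Toy commitment Com(u; r) = (G^r, H^r * G^u) mod P."""
    r = secrets.randbelow(Q)
    return (pow(G, r, P), (pow(H, r, P) * pow(G, u, P)) % P)


def committed_value(com):
    """Value v committed to in `com`, found via the trapdoor and brute force."""
    a, b = com
    g_u = (b * pow(a, P - 1 - X, P)) % P          # b / a^X = G^u
    return next(u for u in range(Q) if pow(G, u, P) == g_u)


def mauling_mim(left_com):
    """Man-in-the-middle: turn a commitment to u into a commitment to u + 1."""
    a, b = left_com
    return (a, (b * G) % P)


def experiment(u):
    """Left interaction commits to u; output v from the right interaction.

    The view is omitted; the right tag is assumed to differ from the left tag.
    """
    left = commit(u)
    right = mauling_mim(left)
    return committed_value(right)


if __name__ == "__main__":
    print(experiment(5), experiment(17))
```

Here \(v = u+1 \bmod Q\) in every run, so the outputs for \(u_0\ne u_1\) are trivially distinguishable and the toy scheme fails Definition 1.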

Parallel Non-malleable Commitments. We consider a strengthening of \(\mathsf {nmcom}\) in which M can receive commitments to m strings on the “left”, say \((u_1,\ldots ,u_m)\), with tags \((\mathsf {id}_1,\ldots ,\mathsf {id}_m)\) and makes m commitments on the “right” with tags \((\widetilde{\mathsf {id}}_1,\ldots ,\widetilde{\mathsf {id}}_m)\). We assume that m is a fixed, possibly a-priori bounded, polynomial in the security parameter \(\kappa \). In the following let \(i\in [m],b\in \{0,1\}\): We say that a \(\mathsf {nmcom}\) is m-bounded parallel non-malleable commitment if for every pair of sequences \(\{u^b_i\}\) the random variables \((\{v^0_i\},\mathsf {view}^0)\) and \((\{v^1_i\},\mathsf {view}^1)\) are computationally indistinguishable where \(\{v^b_i\}\) denote the values committed by M in m sessions on right with tags \(\{\widetilde{\mathsf {id}}_i\}\) while receiving parallel commitments to \(\{u^b_i\}\) on left with tags \(\{\mathsf {id}_i\}\), and \(\mathsf {view}^b\) denotes M’s view.

First Message Binding Property. It will be convenient in the notation to assume that the first message \(\mathsf {nm}_1\) of the non-malleable commitment scheme \(\mathsf {nmcom}\) statistically determines the message being committed. This can be relaxed to only require that the message is fixed before the last round if \(k\ge 3\).

2.2 Components of Our Protocol

In this section, we recall some components from the KO protocol [26]. These are mostly standard and recalled here for a better exposition. The only (minor but crucial) change needed in our protocol is to the FLS proof system [13–15] where a non-malleable commitment protocol is used by the verifier. For concreteness, let us discuss how to fix these proof systems first.

Modified Feige-Shamir Proof Systems. We use two proof systems: \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\) and \(\varPi _{\scriptscriptstyle \mathrm {FS}}\). Protocol \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\) is the 3-round, public-coin, witness-indistinguishable proof-of-knowledge based on the work of Feige et al. [14] for proving graph Hamiltonicity. This proof system proves statements of the form \(\mathsf {st}_1\wedge \mathsf {st}_2\) where \(\mathsf {st}_1\) is fixed at the first round of the protocol, but \(\mathsf {st}_2\) is determined only in the last round of the protocol.Footnote 8 For concreteness, this proof system is given in the full version.

Protocol \(\varPi _{\scriptscriptstyle \mathrm {FS}}\) is the 4-round zero-knowledge argument-of-knowledge protocol of Feige and Shamir [15], which allows the prover to prove statement \(\mathsf {thm}\), with the modification that the protocol from verifier’s side is implemented using \(\mathsf {nmcom}\). More specifically,

- Recall that the Feige-Shamir protocol consists of two executions of \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\) in reverse directions. In the first execution, the verifier selects a one-way function f and sets \(x_1=f(w_1)\), \(x_2=f(w_2)\) and proves the knowledge of a witness for \(x_1\vee x_2\). In the second execution, the prover proves the knowledge of a witness to the statement \(\mathsf {thm}\vee (x_1\vee x_2)\) where \(\mathsf {thm}\) is the statement to be proven. The rounds of these systems can be somewhat parallelized to obtain a 4-round protocol.

- Our modified system simply replaces the function f and \(x_1,x_2\) with two executions of \(\mathsf {nmcom}\). For convenience, suppose that \(\mathsf {nmcom}\) has only 3 rounds. Then, our protocol creates the first message of two independent executions of \(\mathsf {nmcom}\) to strings \(\sigma _1,\sigma _2\), denoted by \(\mathsf {nm}_1^1,\mathsf {nm}_1^2\) respectively, and sets \(x_1= \mathsf {nm}_1^1, x_2=\mathsf {nm}_1^2\). The second and third messages of \(\mathsf {nmcom}\) are sent with the second and third messages of the original FS protocol.

If \(\mathsf {nmcom}\) has more than 3 rounds, simply complete the first \(k-3\) rounds of the two executions before the 4 messages of the proof system above are exchanged.

- As before, although \(\varPi _{\scriptscriptstyle \mathrm {FS}}\) proves statement \(\mathsf {thm}\), as noted in [26], it actually proves statements of the form \(\mathsf {thm}\wedge \mathsf {thm}'\) where \(\mathsf {thm}\) can be fixed in the second round, and \(\mathsf {thm}'\) in the fourth round. Usually \(\mathsf {thm}\) is empty and not mentioned. Indeed, this is compatible with the second \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\), which proves statements of the form \(\mathsf {st}_1\wedge \mathsf {st}_2\): just set \(\mathsf {st}_1=\mathsf {thm},\mathsf {st}_2=\mathsf {thm}'\).

For completeness, we describe the full \(\varPi _{\scriptscriptstyle \mathrm {FS}}\) protocol in the full version.
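The round scheduling described in the list above can be sketched as a small helper that lists which messages travel in which round. The function name `fs_round_schedule` and the message labels `fs_j` and `nm_i` are hypothetical; each `nm_i` stands for the i-th message of both \(\mathsf {nmcom}\) executions. The sketch follows the text only for \(k\ge 3\) and does not model which party sends each message.

```python
def fs_round_schedule(k):
    """Per-round message labels for the modified Feige-Shamir protocol.

    Assumes a k-round nmcom with k >= 3, as in the description above.
    """
    if k < 3:
        raise ValueError("this sketch follows the text, which assumes k >= 3")
    rounds = []
    # The first k-3 nmcom rounds are completed before the proof system starts.
    for i in range(1, k - 2):
        rounds.append([f"nm_{i}"])
    # The last three nmcom messages ride along with FS messages 1 to 3.
    for j in range(1, 4):
        rounds.append([f"fs_{j}", f"nm_{k - 3 + j}"])
    # The fourth FS message is sent on its own.
    rounds.append(["fs_4"])
    return rounds


if __name__ == "__main__":
    for k in (3, 5):
        print(k, fs_round_schedule(k))
```

The schedule has \((k-3)+4=k+1\) rounds, which for \(k\ge 3\) agrees with \(k'=\max (4,k+1)\).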

Components of Katz-Ostrovsky Protocol

The remainder of this section is largely taken from [26] where we provide basic notations and ideas for semi-honest secure two-party computation based on Yao’s garbled circuits and semi-honest oblivious transfer (based on trapdoor one-way permutations). Readers familiar with [26] can skip this part without loss in readability.

Semi-honest Secure Two-Party Computation. We view Yao’s garbled circuit scheme [32, 39] as a tuple of PPT algorithms \((\mathsf {GenGC},\mathsf {EvalGC})\), where \(\mathsf {GenGC}\) is the “generation procedure” which generates a garbled circuit for a circuit C along with “labels,” and \(\mathsf {EvalGC}\) is the “evaluation procedure” which evaluates the circuit on the “correct” labels. Each individual wire i of the circuit is assigned two labels, namely \(Z_{i,0}, Z_{i,1}\). More specifically, the two algorithms have the following format (here \(i\in [\kappa ], b\in \{0,1\}\)):

- \((\{ Z_{i,b}\},{\mathsf {GC}}_y ) \leftarrow \mathsf {GenGC}(1^\kappa , F,y)\): \(\mathsf {GenGC}\) takes as input a security parameter \(\kappa \), a circuit F and a string \(y\in \{0,1\}^\kappa \). It outputs a garbled circuit \({\mathsf {GC}}_y\) along with the set of all input-wire labels \(\{Z_{i,b}\}\). The garbled circuit may be viewed as representing the function \(F(\cdot , y)\).

- \(v = \mathsf {EvalGC}({\mathsf {GC}}_y, \{ Z_{i,x_i}\})\): Given a garbled circuit \({\mathsf {GC}}_y\) and a set of input-wire labels \(\{ Z_{i,x_i}\}\) where \(x\in \{0,1\}^\kappa \), \(\mathsf {EvalGC}\) outputs either an invalid symbol \(\bot \), or a value \(v=F(x,y)\).

The following properties are required:

- Correctness. \(\Pr \left[ F(x,y) = \mathsf {EvalGC}({\mathsf {GC}}_y, \{Z_{i,x_i}\})\right] = 1\) for all F, x, y, taken over the correct generation of \({\mathsf {GC}}_y, \{Z_{i,b}\}\) by \(\mathsf {GenGC}\).

- Security. There exists a PPT simulator \(\mathsf {SimGC}\) such that for any (F, x) and uniformly random labels \(\{Z_{i,b}\}\), we have that:

$$\begin{aligned} \left( {\mathsf {GC}}_y, \{ Z_{i,x_i}\}\right) \mathop {\approx }\limits ^\mathrm{c}\mathsf {SimGC}\left( 1^\kappa ,F,v\right) \end{aligned}$$

where \(\left( \{ Z_{i,b}\},{\mathsf {GC}}_y\right) \leftarrow \mathsf {GenGC}\left( 1^\kappa , F,y\right) \) and \(v = F(x,y)\).

In the semi-honest setting, two parties can compute a function F of their inputs, in which only one party, say \({P_1}\), learns the output, as follows. Let x, y be the inputs of \({P_1},{P_2}\), respectively. First, \({P_2}\) computes \((\{ Z_{i,b}\},{\mathsf {GC}}_y ) \leftarrow \mathsf {GenGC}(1^\kappa , F,y)\) and sends \({\mathsf {GC}}_y\) to \({P_1}\). Then, the two parties engage in \(\kappa \) parallel instances of OT. In particular, in the i-th instance, \({P_1}\) inputs \(x_i\), \({P_2}\) inputs \((Z_{i,0},Z_{i,1})\) to the OT protocol, and \({P_1}\) learns the “output” \(Z_{i,x_i}\). Then, \({P_1}\) computes \(v = \mathsf {EvalGC}({\mathsf {GC}}_y, \{ Z_{i,x_i}\})\) and outputs \(v=F(x,y)\).
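To make the \((\mathsf {GenGC},\mathsf {EvalGC})\) interface concrete, here is a minimal sketch that garbles a single two-input gate with a one-bit x and a one-bit y. It is an illustration under assumptions, not the scheme of [26]: SHA-256 is used as the row-encryption pad, 15 zero bytes serve as a validity check, and the names `gen_gc` and `eval_gc` are hypothetical. Because validity is checked by redundancy, correctness holds with overwhelming probability rather than with probability exactly 1.

```python
import hashlib
import secrets

LABEL_BYTES = 16  # toy label length


def _pad(label_x, label_y):
    """Derive a 16-byte one-time pad from a pair of wire labels."""
    return hashlib.sha256(label_x + label_y).digest()[:16]


def gen_gc(gate, y):
    """Garble `gate` with P2's bit y hardwired; return (x-wire labels, GC_y)."""
    z_x = [secrets.token_bytes(LABEL_BYTES) for _ in range(2)]   # Z_{1,0}, Z_{1,1}
    z_y = [secrets.token_bytes(LABEL_BYTES) for _ in range(2)]
    rows = []
    for a in (0, 1):
        for b in (0, 1):
            plaintext = bytes([gate(a, b)]) + bytes(15)           # bit + redundancy
            pad = _pad(z_x[a], z_y[b])
            rows.append(bytes(p ^ k for p, k in zip(plaintext, pad)))
    secrets.SystemRandom().shuffle(rows)                          # hide row order
    return z_x, (rows, z_y[y])        # GC_y carries only the active y-label


def eval_gc(gc, label_x):
    """Evaluate GC_y on one x-wire label; None plays the role of the symbol ⊥."""
    rows, label_y = gc
    pad = _pad(label_x, label_y)
    for row in rows:
        plaintext = bytes(c ^ k for c, k in zip(row, pad))
        if plaintext[1:] == bytes(15) and plaintext[0] in (0, 1):
            return plaintext[0]
    return None


if __name__ == "__main__":
    labels, gc = gen_gc(lambda a, b: a & b, y=1)
    print(eval_gc(gc, labels[0]), eval_gc(gc, labels[1]))
```

In the protocol described above, \(P_1\) would obtain `labels[x]` through oblivious transfer instead of receiving both labels directly.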

A 3-round, semi-honest, OT protocol can be constructed from enhanced trapdoor permutations (TDP). For notational purposes, define TDP as follows:

Definition 2

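(Trapdoor permutations). Let \(\mathcal{F}\) be a triple of \({PPT}\) algorithms \(({\mathrm {Gen}}, {\mathrm {Eval}}, {\mathrm {Invert}})\) such that if \({\mathrm {Gen}}(1^\kappa )\) outputs a pair \((f,\mathsf{td})\), then \({\mathrm {Eval}}(f, \cdot )\) is a permutation over \(\{0,1\}^\kappa \) and \({\mathrm {Invert}}(f,\mathsf{td}, \cdot )\) is its inverse. \(\mathcal{F}\) is a family of trapdoor permutations if for all \({PPT}\) adversaries A:

$$\begin{aligned} \Pr \left[ (f,\mathsf{td})\leftarrow {\mathrm {Gen}}(1^\kappa );\ y\leftarrow \{0,1\}^\kappa ;\ x\leftarrow A(f,y) : {\mathrm {Eval}}(f,x)=y\right] \le \mathsf {negl}(\kappa ), \end{aligned}$$where \(\mathsf {negl}\) denotes a negligible function.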

For convenience, we drop \((f,\mathsf{td})\) from the notation, and write \(f(\cdot ),f^{-1}(\cdot )\) to denote algorithms \({\mathrm {Eval}}(f, \cdot ), {\mathrm {Invert}}(f,\mathsf{td}, \cdot )\) respectively, when \(f,\mathsf{td}\) are clear from the context. We assume that \(\mathcal{F}\) satisfies (a weak variant of ) “certifiability”: namely, given some f it is possible to decide in polynomial time whether \({\mathrm {Eval}}(f,\cdot )\) is a permutation over \(\{0,1\}^\kappa \).

Let \(\mathsf {H}\) be the hardcore bit function for \(\kappa \) bits for the family \(\mathcal{F}\); \(\kappa \) hardcore bits are obtained from a single-bit hardcore function h and \(f\in \mathcal{F}\) as follows: \(\mathsf {H}(z)=h(z)\Vert h(f(z))\Vert \ldots \Vert h(f^{\kappa -1}(z))\). Informally, \(\mathsf {H}(z)\) looks pseudorandom given \(f^\kappa (z)\).

The semi-honest OT protocol based on TDP is constructed as follows. Let \({P_2}\) hold two strings \(Z_0,Z_1 \in \{0,1\}^\kappa \) and \({P_1}\) hold a bit b. In the first round, \({P_2}\) chooses a trapdoor permutation \((f, f ^{-1}) \leftarrow {\mathrm {Gen}}(1^{\kappa })\) and sends f to \({P_1}\). Then \({P_1}\) chooses two random strings \(z_0', z_1'\leftarrow \{0, 1\}^\kappa \), computes \(z_b = f^{\kappa }(z_b')\) and \(z_{1-b}=z'_{1-b}\), and sends \((z_0, z_1)\) to \({P_2}\). In the last round, \({P_2}\) computes \(W_a = Z_a \oplus \mathsf {H}(f^{-\kappa }(z_a))\) for each \(a \in \{0, 1\}\), where \(\mathsf {H}\) is the hardcore bit function, and sends \((W_0,W_1)\) to \({P_1}\). Finally, \({P_1}\) recovers \(Z_b\) by computing \(Z_{b}=W_{b}\oplus \mathsf {H}(z'_{b})\), since \(f^{-\kappa }(z_b)=z'_b\).
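The three OT rounds can be traced in a few lines of Python. This is a toy sketch under loud assumptions: textbook RSA with the tiny modulus \(N = 61\cdot 53\) plays the role of the trapdoor permutation (so the domain is \(\mathbb {Z}_N\) rather than \(\{0,1\}^\kappa \)), the least significant bit plays the role of the hardcore bit h, and all function names are hypothetical. It is insecure and only illustrates the message flow and the fact that the receiver unmasks with the preimage \(z'_b\).

```python
import secrets

P, Q, E = 61, 53, 17                   # toy RSA parameters (assumption, insecure)
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))      # trapdoor: inverse exponent
KAPPA = 8                              # number of hardcore bits / label length


def f(z):                              # Eval(f, .)
    return pow(z, E, N)


def f_inv(z):                          # Invert(f, td, .)
    return pow(z, D, N)


def iterate(fn, z, times):
    for _ in range(times):
        z = fn(z)
    return z


def H(z):
    """H(z) = h(z) || h(f(z)) || ... || h(f^{kappa-1}(z)) with h = lsb."""
    bits = 0
    for _ in range(KAPPA):
        bits = (bits << 1) | (z & 1)
        z = f(z)
    return bits


def receiver_round2(b):
    """P1: z_b = f^kappa(z'_b), z_{1-b} = z'_{1-b}. Returns (z'_b, (z_0, z_1))."""
    z_prime = [secrets.randbelow(N), secrets.randbelow(N)]
    z = list(z_prime)
    z[b] = iterate(f, z_prime[b], KAPPA)
    return z_prime[b], (z[0], z[1])


def sender_round3(Z0, Z1, z):
    """P2: W_a = Z_a xor H(f^{-kappa}(z_a)) for a in {0, 1}."""
    return tuple(Za ^ H(iterate(f_inv, za, KAPPA))
                 for Za, za in ((Z0, z[0]), (Z1, z[1])))


def receiver_output(b, z_prime_b, W):
    """P1: Z_b = W_b xor H(z'_b)."""
    return W[b] ^ H(z_prime_b)
```

Round 1 (sending f) is implicit here because `f` is a module-level function shared by both roles.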

Putting it all together, we obtain the following 3-round, semi-honest secure two-party protocol for the single-output functionality F (here only \(P_1\) receives the output):

Protocol \(\varPi _\mathsf{SH}\) . \({P_1}\) holds input \(x \in \{0,1\}^\kappa \) and \({P_2}\) holds input \(y \in \{0,1\}^\kappa \). Let \(\mathcal{F}\) be a family of trapdoor permutations and let \(\mathsf {H}\) be a hardcore bit function. For all \(i\in [\kappa ]\) and \(b\in \{0,1\}\) the following steps are executed:

-

Round-1: \({P_2}\) computes \((\{ Z_{i,b}\},{\mathsf {GC}}_y ) \leftarrow \mathsf {GenGC}(1^\kappa , F,y)\), chooses trapdoor permutations \((f_{i,b}, f _{i,b}^{-1}) \leftarrow {\mathrm {Gen}}(1^{\kappa })\), and sends \(({\mathsf {GC}}_y,\{f_{i,b}\})\) to \({P_1}\).

-

Round-2: \({P_1}\) chooses random strings \( \{ z'_{i,b}\}\), computes \(z_{i,x_i} = f_{i,x_i}^{\kappa }(z'_{i,x_i})\) and \(z_{i,1-x_i}=z'_{i,1-x_i}\), and sends \( \{ z_{i,b}\}\) to \({P_2}\).

-

Round-3: \({P_2}\) computes \(W_{i,b} = Z_{i,b} \oplus \mathsf {H}(f_{i,b}^{-\kappa }(z_{i,b}))\) and sends \( \{ W_{i,b}\}\) to \({P_1}\).

-

Output: \({P_1}\) recovers the labels \(Z_{i,x_i}=W_{i,x_i}\oplus \mathsf {H}(z'_{i,x_i})\) and computes \(v = \mathsf {EvalGC}({\mathsf {GC}}_y, \{ Z_{i,x_i}\})\), where \(v=F(x,y)\).
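As a sanity check on the Output step, the label recovery can be verified directly. The derivation below assumes that \({P_1}\) unmasks with the preimage \(z'_{i,x_i}\) it sampled in Round-2, so that \(f_{i,x_i}^{-\kappa }(z_{i,x_i}) = z'_{i,x_i}\):

$$\begin{aligned} W_{i,x_i}\oplus \mathsf {H}(z'_{i,x_i}) &= Z_{i,x_i} \oplus \mathsf {H}\big (f_{i,x_i}^{-\kappa }(z_{i,x_i})\big ) \oplus \mathsf {H}(z'_{i,x_i}) \\ &= Z_{i,x_i} \oplus \mathsf {H}(z'_{i,x_i}) \oplus \mathsf {H}(z'_{i,x_i}) = Z_{i,x_i}. \end{aligned}$$

For the other index \(1-x_i\), \({P_1}\) holds no preimage of \(z_{i,1-x_i}\) under \(f_{i,1-x_i}^{\kappa }\), so informally the mask \(\mathsf {H}(f_{i,1-x_i}^{-\kappa }(z_{i,1-x_i}))\) looks pseudorandom to a semi-honest \({P_1}\) and the label \(Z_{i,1-x_i}\) stays hidden.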

Equivocal Commitment Scheme \(\mathsf {Eqcom}\) . We assume familiarity with equivocal commitments, and use the following equivocal commitment scheme \(\mathsf {Eqcom}\) based on any (standard) non-interactive, perfectly binding, commitment scheme \(\mathsf {com}\): to commit to a bit x, the sender chooses coins \(\zeta _1, \zeta _2\) and computes \(\mathsf {Eqcom}(x;\zeta _1, \zeta _2)\mathop {=}\limits ^{\mathrm {def}} \mathsf {com}(x; \zeta _1)||\mathsf {com}(x; \zeta _2)\). It sends \(\mathsf{C}_x = \mathsf {Eqcom}(x;\zeta _1, \zeta _2)\) to the receiver along with a zero-knowledge proof that \(\mathsf{C}_x \) was constructed correctly (i.e., that there exist \(x,\zeta _1, \zeta _2\) such that \(\mathsf{C}_x =\mathsf {Eqcom}(x;\zeta _1, \zeta _2)\)).

To decommit, the sender chooses a bit b at random and reveals x, \(\zeta _b\). Note that a simulator can “equivocate” the commitment by setting \(C = \mathsf {com}(x; \zeta _1)||\mathsf {com}(\overline{x}; \zeta _2)\) for a random bit x, simulating the zero-knowledge proof and then revealing \(\zeta _1\) or \(\zeta _2\) depending on x and the bit to be revealed. This extends to strings by committing bitwise.
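The honest and simulated modes of \(\mathsf {Eqcom}\) can be contrasted in a short Python sketch. It makes two assumptions that depart from the text: a SHA-256 hash stands in for \(\mathsf {com}\) (so it is not perfectly binding), and the zero-knowledge proof of well-formedness is omitted entirely. All function names are hypothetical.

```python
import hashlib
import secrets


def com(bit, coins):
    # Stand-in commitment (assumption): hash of the bit and the coins.
    return hashlib.sha256(bytes([bit]) + coins).digest()


def eqcom(bit):
    """Honest commit: Eqcom(x; z1, z2) = com(x; z1) || com(x; z2)."""
    z1, z2 = secrets.token_bytes(16), secrets.token_bytes(16)
    return (com(bit, z1), com(bit, z2)), (z1, z2)


def open_honest(bit, coins):
    """Honest decommit: pick a random index and reveal the bit with those coins."""
    idx = secrets.randbelow(2)
    return bit, idx, coins[idx]


def verify(commitment, opening):
    bit, idx, coins = opening
    return commitment[idx] == com(bit, coins)


def sim_commit():
    """Simulator: the two halves commit to x and to its complement."""
    x = secrets.randbelow(2)
    z1, z2 = secrets.token_bytes(16), secrets.token_bytes(16)
    return (com(x, z1), com(1 - x, z2)), (x, z1, z2)


def sim_open(state, target):
    """Simulator opens whichever half holds the target bit."""
    x, z1, z2 = state
    return (target, 0, z1) if target == x else (target, 1, z2)
```

The same simulated commitment verifies for both target bits, which is exactly the equivocation property; in the real scheme the zero-knowledge proof is what prevents a sender from committing this way.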

Sketch of the Two-Party KO Protocol. The main component of the two-party KO protocol is Yao’s 3-round protocol \(\varPi _\mathsf{SH}\), described above, secure against semi-honest adversaries. In order to achieve security against a malicious adversary their protocol proceeds as follows. Both parties commit to their inputs; run (modified) coin-tossing protocols to guarantee that each party obtains random coins which are committed to the other party (note that coin flipping for the side of the garbler \({P_2}\) is not needed since a malicious garbler \({P_2}\) gains nothing by using non-uniform coins. To force \({P_1}\) to use random coins the authors use a 3-round sub-protocol which is based on the work of [4]); and run the \(\varPi _\mathsf{SH}\) protocol together with ZK arguments to avoid adversarial inconsistencies in each round. Then, simulation extractability is guaranteed by the use of WI proof of knowledge and output simulation by the Feige-Shamir ZK argument of knowledge.

However, since even a ZK argument for the first round of the protocol alone will already require 4 rounds, the authors use specific proof systems to achieve in total a 5-round protocol. In particular, the KO protocol uses a specific WI proof of knowledge system with the property that the statement to be proven need not be known until the last round of the protocol, yet soundness, completeness, and witness-indistinguishability still hold. Also, this proof system has the property that the first message from the prover is computed independently of the statement being proved. Note that their 4-round ZK argument of knowledge enjoys the same properties. Furthermore, their protocol uses an equivocal commitment scheme to commit to the garbled circuit for the following reason. Party \({P_1}\) may send his round-two message before the proof of correctness for round one given by \({P_2}\) is complete. Therefore, the protocol has to be constructed in a way that the proof of correctness for round one completes in round three and that party \({P_2}\) reveals the garbled circuit in the third round. But since the proof of security requires \({P_2}\) to commit to a garbled circuit at the end of the first round, \({P_2}\) does so using an equivocal commitment scheme.

3 The Exact Round Complexity of Coin Tossing

In this section we first show that it is impossible to construct two-party (simulatable) coin-flipping for a super-logarithmic number of coins in 3 simultaneous message exchange rounds. We first recall the definition of a simulatable coin flipping protocol using the real/ideal paradigm from [27].

Definition 3

([27]). An \(n\)-party protocol \({\varPi }\) is a simulatable coin-flipping protocol if it is an \((n-1)\)-secure protocol realizing the coin-flipping functionality. That is, for every PPT adversary \(\mathcal{A}\) corrupting at most \(n-1\) parties there exists an expected PPT simulator \(\mathcal{S}\) such that the (output of the) following experiments are indistinguishable. Here we parse the result of running protocol \({\varPi }\) with adversary \(\mathcal{A}\) (denoted by \(\textsc {REAL}_{{\varPi },\mathcal{A}}(1^\kappa ,1^\lambda )\)) as a pair \((c,\mathsf {view}_\mathcal{A})\) where \(c\in \{0,1\}^\lambda \cup \{\bot \}\) is the outcome and \(\mathsf {view}_\mathcal{A}\) is the view of the adversary \(\mathcal{A}\).

| \(\textsc {REAL}(1^\kappa , 1^\lambda )\) | \(\textsc {IDEAL}(1^\kappa ,1^\lambda )\) |
|---|---|
| \(c,\mathsf {view}_\mathcal{A}\leftarrow \textsc {REAL}_{{\varPi },\mathcal{A}}(1^\kappa ,1^\lambda )\) | \(c'\leftarrow \{0,1\}^\lambda \) |
| | \(\widetilde{c},\mathsf {view}_\mathcal{S}\leftarrow \mathcal{S}^\mathcal{A}(c',1^\kappa ,1^\lambda )\) |
| Output \((c,\mathsf {view}_\mathcal{A})\) | If \(\widetilde{c}\in \{c',\bot \}\) then output \((\widetilde{c},\mathsf {view}_\mathcal{S})\); else output \(\texttt {fail}\) |
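The output rule of the IDEAL experiment can be written out as a short Python sketch. The simulator is modelled as a plain callable that receives the ideal coins \(c'\) and returns a pair (outcome, view); this interface, the use of `None` for \(\bot \), and the function name `ideal_experiment` are assumptions made only for illustration.

```python
import secrets

BOT = None  # stands for the abort symbol


def ideal_experiment(simulator, lam):
    """Sample c', run the simulator, and apply the output rule of IDEAL."""
    c_prime = secrets.randbits(lam)          # c' <- {0,1}^lambda
    c_tilde, view = simulator(c_prime)       # simulator may force c' or abort
    if c_tilde is BOT or c_tilde == c_prime:
        return (c_tilde, view)
    return "fail"                            # simulator produced a different outcome
```

The point of the rule is that the simulator has no freedom over the outcome: it must either force the externally sampled \(c'\) into the adversary's view or abort.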

We restrict ourselves to the case of two parties (\(n=2\)), which can be extended to any \(n> 2\). Below we denote messages in protocol \({\varPi }\) which are sent by party \({P}_i\) to party \({P}_j\) in the \({\rho }\)-th round by \({\mathsf{m}}_{i,j}^{{\varPi }[{\rho }]}\).

As mentioned earlier, Katz and Ostrovsky [26] showed that a simulatable coin-flipping protocol is impossible in 4 rounds without simultaneous message exchange. Since we will use this result for our proofs in this section, we state it below without proof.

Lemma 1

[26, Theorem 1] Let \(p(\kappa ) = \omega (\log \kappa )\), where \(\kappa \) is the security parameter. Then there does not exist a 4-round protocol without simultaneous message transmission for tossing \(p(\kappa )\) coins which can be proven secure via black-box simulation.

In the following, we state our impossibility result for coin-flipping in 3 rounds of simultaneous message exchange.

Lemma 2

Let \(p(\kappa ) = \omega (\log \kappa )\), where \(\kappa \) is the security parameter. Then there does not exist a 3-round protocol with simultaneous message transmission for tossing \(p(\kappa )\) coins which can be proven secure via black-box simulation.

Proof:

We prove the above statement by showing that a 3-round simultaneous message exchange protocol can be “rescheduled” to a 4-round non-simultaneous protocol which contradicts the impossibility of [26]. Here by rescheduling we mean rearrangement of the messages without violating mutual dependencies among them, in particular without altering the next-message functions.

For the sake of contradiction, assume that there exists a protocol \({\varPi }_\mathsf{flip}^{\Leftrightarrow }\) which realizes simulatable coin-flipping in 3 simultaneous message exchange rounds. Then we can reschedule it to construct a protocol \({\varPi }_\mathsf{flip}^{{\mathop {\rightarrow }\limits ^{\leftarrow }}}\) which realizes simulatable coin-flipping in 4 rounds (see Footnote 9) without simultaneous message exchange, as follows:

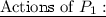

We provide a pictorial presentation of the above rescheduling in Fig. 1 for better illustration.

Now, without loss of generality assume that \({P}_1\) is corrupted. Then we need to build an expected PPT simulator \(\mathcal{S}_{{{P}}_1}\) (or simply \(\mathcal{S}\)) meeting the adequate requirements (according to Definition 3). First note that, since by assumption the protocol \({\varPi }_\mathsf{flip}^{\Leftrightarrow }\) is secure (i.e. achieves Definition 3) the following holds: for any corrupt \({{P}}^\Leftrightarrow _1\) executing the simultaneous message exchange protocol \({\varPi }_\mathsf{flip}^{\Leftrightarrow }\) there exists an expected PPT simulator \(\mathcal{S}^{\Leftrightarrow }\) (let us call it the “inner” simulator and \(\mathcal{S}\) the “outer” simulator) in the ideal world. So, \(\mathcal{S}\) can be constructed using \(\mathcal{S}^{\Leftrightarrow }\) for a corrupted party \({{P}}^\Leftrightarrow _1\) which can be emulated by \(\mathcal{S}\) based on \({{P}}_1\). Finally, \(\mathcal{S}\) just outputs whatever \(\mathcal{S}^{\Leftrightarrow }\) returns. \(\mathcal{S}\) emulates the interaction between \(\mathcal{S}^{\Leftrightarrow }\) and \({{P}}^\Leftrightarrow _1\) as follows:

-

1.

On receiving a value \(c'\in \{0,1\}^\lambda \) from the ideal functionality, \(\mathcal{S}\) runs the inner simulator \(\mathcal{S}^{\Leftrightarrow }(c',1^\kappa ,1^\lambda )\) to get the first message \({\mathsf{m}}_{2,1}^{{\varPi }_\mathsf{flip}^{\Leftrightarrow }[1]}\). Notice that in protocol \({\varPi }_\mathsf{flip}^{\Leftrightarrow }\) the first message from (honest) party \(P^\Leftrightarrow _2\) does not depend on the first message of the corrupted party \({{P}}^\Leftrightarrow _1\). So, the inner simulator must be able to produce the first message even before seeing the first message of party \(P_1\) (or the emulated party \({{P}}^\Leftrightarrow _1\)) (see Footnote 10). Then it runs \(P_1\) to receive the first message \({\mathsf{m}}_{1,2}^{{\varPi }_\mathsf{flip}^{\Leftrightarrow }[1]}\).

-

2.

Then \(\mathcal{S}\) forwards \({\mathsf{m}}_{1,2}^{{\varPi }_\mathsf{flip}^{\Leftrightarrow }[1]}\) to the inner simulator which then returns the second simulated message \({\mathsf{m}}_{2,1}^{{\varPi }_\mathsf{flip}^{\Leftrightarrow }[2]}\). Now \(\mathcal{S}\) can construct the simulated message \({\mathsf{m}}_{2,1}^{{\varPi }_\mathsf{flip}^{{\mathop {\rightarrow }\limits ^{\leftarrow }}}[2]}\) by combining \({\mathsf{m}}_{2,1}^{{\varPi }_\mathsf{flip}^{\Leftrightarrow }[2]}\) and \({\mathsf{m}}_{2,1}^{{\varPi }_\mathsf{flip}^{\Leftrightarrow }[1]}\) received earlier (see above) which \(\mathcal{S}\) then forwards to \(P_1\).

-

3.

In the next step, \(\mathcal{S}\) gets back messages \({\mathsf{m}}_{1,2}^{{\varPi }_\mathsf{flip}^{{\mathop {\rightarrow }\limits ^{\leftarrow }}}[3]} = ({\mathsf{m}}_{1,2}^{{\varPi }_\mathsf{flip}^{\Leftrightarrow }[2]},{\mathsf{m}}_{1,2}^{{\varPi }_\mathsf{flip}^{\Leftrightarrow }[3]})\) from \(P_1\). It then forwards the second message \({\mathsf{m}}_{1,2}^{{\varPi }_\mathsf{flip}^{\Leftrightarrow }[2]}\) to \(\mathcal{S}^{\Leftrightarrow }\), which then returns the third simulated message \({\mathsf{m}}_{2,1}^{{\varPi }_\mathsf{flip}^{\Leftrightarrow }[3]}\). Finally it forwards the third message \({\mathsf{m}}_{1,2}^{{\varPi }_\mathsf{flip}^{\Leftrightarrow }[3]}\) to \(\mathcal{S}^{\Leftrightarrow }\).

-

4.

\(\mathcal{S}\) outputs whatever transcript \(\mathcal{S}^{\Leftrightarrow }\) outputs in the end.

-

5.

Note that, whenever the inner simulator \(\mathcal{S}^{\Leftrightarrow }\) asks to rewind the emulated \({{P}}^\Leftrightarrow _1\), \(\mathcal{S}\) rewinds \(P_1\).

It is not hard to see that the simulator \(\mathcal{S}\) correctly emulates the party \({{P}}^\Leftrightarrow _1\), and hence by the security of \({\varPi }_\mathsf{flip}^{\Leftrightarrow }\), the inner simulator \(\mathcal{S}^{\Leftrightarrow }\) returns a view that is indistinguishable from the real world. The key point is that the rescheduling of the messages from protocol \({\varPi }_\mathsf{flip}^{\Leftrightarrow }\) does not affect the dependencies (hence the corresponding next-message functions), and hence correctness and security remain intact in \({\varPi }_\mathsf{flip}^{{\mathop {\rightarrow }\limits ^{\leftarrow }}}\).

We stress that the proof for the case where \({P}_2\) is corrupted is straightforward given the above. In that case, however, \({{P}}_2\)’s first message depends on the first message of the honest \({P}_1\), so the inner simulator \(\mathcal{S}^{\Leftrightarrow }\) must output its first message before seeing anything, simply in order to run the corrupted \({{P}}_2\); this was not necessary in the case above. As we stated earlier, this is possible because the inner simulator \(\mathcal{S}^{\Leftrightarrow }\) should be able to handle rushing adversaries.

Hence we prove that if the underlying protocol \({\varPi }_\mathsf{flip}^{\Leftrightarrow }\) securely realizes simulatable coin-flipping in 3 simultaneous rounds then \({\varPi }_\mathsf{flip}^{{\mathop {\rightarrow }\limits ^{\leftarrow }}}\) securely realizes coin-flipping in 4 non-simultaneous rounds which contradicts the KO lower bound (Lemma 1). This concludes the proof. \(\square \)
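The rescheduling argument boils down to a dependency check, which the following Python sketch makes explicit. It assumes the schedule implied by the simulator's steps above (\({P}_1\) sends its round-1 message; \({P}_2\) sends its round-1 and round-2 messages together; \({P}_1\) sends its round-2 and round-3 messages together; \({P}_2\) sends its round-3 message) and models a message of simultaneous round \(\rho \) as depending on all of the other party's messages from rounds before \(\rho \). The names `RESCHEDULED` and `respects_dependencies` are hypothetical.

```python
# Non-simultaneous round -> messages sent, each written as (sender, simultaneous round).
RESCHEDULED = {
    1: [("P1", 1)],
    2: [("P2", 1), ("P2", 2)],
    3: [("P1", 2), ("P1", 3)],
    4: [("P2", 3)],
}


def dependencies(sender, rho):
    """Messages of the other party that the round-rho message of `sender` may use."""
    other = "P2" if sender == "P1" else "P1"
    return [(other, r) for r in range(1, rho)]


def respects_dependencies(schedule):
    """True iff each round has one sender and every message is sent only after
    all messages it depends on were delivered in strictly earlier rounds."""
    delivered = set()
    for rnd in sorted(schedule):
        batch = schedule[rnd]
        if len({sender for sender, _ in batch}) != 1:
            return False                      # not a unidirectional round
        for sender, rho in batch:
            if any(dep not in delivered for dep in dependencies(sender, rho)):
                return False
        delivered.update(batch)
    return True
```

Because the check passes without touching any next-message function, the same functions that define the 3-round simultaneous protocol also define the 4-round non-simultaneous one.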

Going a step further, we show that any four-round simultaneous message exchange protocol realizing simulatable coin-flipping must satisfy a necessary property: each round must be a strictly simultaneous message exchange round. In other words, both parties must send some “non-redundant” message in each round, where by “non-redundant” we mean that the next message from the other party must depend on the current message. Otherwise, the messages can again be subjected to a “rescheduling” mechanism similar to the one in Lemma 2 to yield a four-round non-simultaneous protocol, thus contradicting Lemma 1. More specifically,

Lemma 3

Let \(p(\kappa ) = \omega (\log \kappa )\), where \(\kappa \) is the security parameter. Then there does not exist a 4-round protocol with at least one unidirectional round (i.e. a round without simultaneous message exchange) for tossing \(p(\kappa )\) coins which can be proven secure via black-box simulation.

Proof:

(Sketch) We provide a sketch for any protocol with exactly one unidirectional round in which only one party, say \({P}_1\), sends a message to \({P}_2\). Clearly, there are four such cases, one for each of the four rounds in which \({P}_2\)’s message may be omitted. In Fig. 2 we show the case where \({P}_2\) does not send a message in the first round; any such protocol can be rescheduled (similarly to the proof of Lemma 2) to a non-simultaneous 4-round protocol without altering any possible message dependency. This observation can be formalized in a straightforward manner following the proof of Lemma 2, and hence we omit the details. Therefore, combining again with the impossibility of Lemma 1 from [26], such a protocol cannot realize simulatable coin-flipping. The other cases follow from a similar rescheduling trick, and we omit the details for those cases. \(\square \)
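The four cases of the sketch can be checked mechanically. The Python sketch below greedily builds an alternating (non-simultaneous) schedule: in each turn the current sender ships every pending message whose dependencies have already been delivered. It assumes the same dependency model as in the proof of Lemma 2 (a message of simultaneous round \(\rho \) may depend on the other party's messages from rounds before \(\rho \)), tries both choices of first sender, and reports the shorter schedule. The function name and the encoding of messages as (sender, round) pairs are illustrative assumptions.

```python
PARTIES = ("P1", "P2")


def greedy_schedule_length(num_rounds, omitted=()):
    """Rounds used by a greedy alternating schedule for a simultaneous protocol
    with `num_rounds` rounds in which the messages in `omitted` are never sent."""
    present = {(p, r) for p in PARTIES for r in range(1, num_rounds + 1)}
    present -= set(omitted)

    def deps(sender, rho):
        other = PARTIES[1 - PARTIES.index(sender)]
        return {(other, r) for r in range(1, rho)} & present

    best = None
    for first in PARTIES:
        delivered, rounds, sender = set(), 0, first
        while delivered != present:
            batch = []
            for rho in range(1, num_rounds + 1):
                msg = (sender, rho)
                if msg not in present or msg in delivered:
                    continue
                if not deps(sender, rho) <= delivered:
                    break                     # keep each party's messages in order
                batch.append(msg)
            if batch:                         # an empty first turn is not a round
                rounds += 1
                delivered.update(batch)
            sender = PARTIES[1 - PARTIES.index(sender)]
        best = rounds if best is None else min(best, rounds)
    return best
```

Under this model, omitting \({P}_2\)'s message in any single round of a 4-round protocol yields a 4-round alternating schedule, whereas the strictly simultaneous 4-round protocol needs 5 alternating rounds with this greedy strategy.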

4 Two-Party Computation in the Simultaneous Message Exchange Model

In this section, we present our two party protocol for computing any functionality in the presence of a static, malicious and rushing adversary. As discussed earlier, we are in the simultaneous message exchange channel setting where both parties can simultaneously exchange messages in each round. The structure of this protocol will provide a basis for our later protocols as well.

An overview of the protocol appears in the introduction (Sect. 1). At a high level, the protocol consists of two simultaneous executions of a one-sided (single-output) protocol to guarantee that both parties learn the output. The overall skeleton of the one-sided protocol resembles the KO protocol [26] which uses a clever combination of OT, coin-tossing, and \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\) to ensure that the protocol is executed with a fixed input (allowing at the same time simulation extractability of the input), and relies on the zero-knowledge property of \(\varPi _{\scriptscriptstyle \mathrm {FS}}\) to “force the output”. A sketch of the KO protocol is given in Sect. 2.2. In order to ensure “independence of inputs” our protocol relies heavily on non-malleable commitments. To this end, we change the one-sided protocol to further incorporate non-malleable commitments so that similar guarantees can be obtained even in the presence of the “opposite side” protocol, and we further rely on zero-knowledge proofs to ensure that parties use the same input in both executions.

4.1 Our Protocol

To formally define our protocol, let:

-

\((\mathsf {GenGC},\mathsf {EvalGC})\) be the garbled-circuit mechanism with simulator \(\mathsf {SimGC}\); \(\mathcal{F}=({\mathrm {Gen}}, {\mathrm {Eval}}, {\mathrm {Invert}})\) be a family of TDPs with domain \(\{0,1\}^\kappa \); \(\mathsf {H}\) be the hardcore bit function for \(\kappa \) bits; \(\mathsf {com}\) be a perfectly binding non-interactive commitment scheme; \(\mathsf {Eqcom}\) be the equivocal scheme based on \(\mathsf {com}\), as described in Sect. 2;

-

\(\mathsf {nmcom}\) be a tag-based, parallel (see Footnote 11) non-malleable commitment scheme for strings, supporting tags/identities of length \(\kappa \);

-

\(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\) be the witness-indistinguishable proof-of-knowledge for NP as described in Sect. 2;

-

\(\varPi _{\scriptscriptstyle \mathrm {FS}}\) be the proof system for NP, based on \(\mathsf {nmcom}\) and \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\), as described in Sect. 2;

-

Simplifying assumption: for notational convenience only, we assume for now that \(\mathsf {nmcom}\) consists of exactly three rounds, denoted by \((\mathsf {nm}_1,\mathsf {nm}_2,\mathsf {nm}_3)\). This assumption is removed later (see Remark 1).

We also assume that the first round, \(\mathsf {nm}_1\), is from the committer and statistically determines the message to be committed. We use the notation \(\mathsf {nm}_1=\mathsf {nmcom}_1(\mathsf {id}, r; \omega )\) to denote the committer’s first message when executing \(\mathsf {nmcom}\) with identity \(\mathsf {id}\) to commit to string r with randomness \(\omega \).

We are now ready to describe our protocol.

Protocol \(\varPi _{\scriptscriptstyle \mathrm {2PC}}\). We denote the two parties by \({P_1}\) and \({P_2}\); \({P_1}\) holds input \(x \in \{0,1\}^\kappa \) and \({P_2}\) holds input \(y \in \{0,1\}^\kappa \). Furthermore, the identities of \({P_1},{P_2}\) are \(\mathsf {id}_1,\mathsf {id}_2\) respectively where \(\mathsf {id}_1\ne \mathsf {id}_2\). Let \(F:=(F_1,F_2): \{0,1\}^\kappa \times \{0,1\}^\kappa \rightarrow \{0,1\}^\kappa \times \{0,1\}^\kappa \) be the functions to be computed.

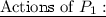

The protocol consists of four (strictly) simultaneous message exchange rounds, i.e., both parties send messages in each round. The protocol essentially consists of two simultaneous executions of a protocol in which only one party learns the output. In the first protocol, \({P_1}\) learns the output and the messages of this protocol are denoted by \((m_1,m_2,m_3,m_4)\) where \((m_1,m_3)\) are sent by \({P_1}\) and \((m_2,m_4)\) are sent by \({P_2}\). Likewise, in the second protocol \({P_2}\) learns the output and the messages of this protocol are denoted by \((\widetilde{m}_1,\widetilde{m}_2,\widetilde{m}_3,\widetilde{m}_4)\) where \((\widetilde{m}_1,\widetilde{m}_3)\) are sent by \({P_2}\) and \((\widetilde{m}_2,\widetilde{m}_4)\) are sent by \({P_1}\). Therefore, messages \((m_j,\widetilde{m}_j)\) are exchanged simultaneously in the j-th round, \(j\in \{1,\ldots ,4\}\) (see Fig. 3).

We now describe how these messages are constructed in each round below. In the following i always ranges from 1 to \(\kappa \) and b from 0 to 1.

-

Round 1. In this round \({P_1}\) sends a message \(m_1\) and \({P_2}\) sends a symmetrically constructed message \(\widetilde{m}_1\). We first describe how \({P_1}\) constructs \(m_1\).

-

1.

\({P_1}\) starts by committing to \(2\kappa \) random strings \(\{(r_{1,0},r_{1,1}),\ldots ,(r_{\kappa ,0},r_{\kappa ,1})\}\) using \(2\kappa \) parallel and independent executions of \(\mathsf {nmcom}\) with identity \(\mathsf {id}_1\). I.e., it uniformly chooses strings \(r_{i,b}\), randomness \(\omega _{i,b}\), and generates \(\mathsf {nm}^{i,b}_1\) which is the first message corresponding to the execution of \(\mathsf {nmcom}(\mathsf {id}_1,r_{i,b};\omega _{i,b})\).

-

2.

\({P_1}\) prepares the first message \(\mathsf {p}_1\) of \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\), as well as the first message \(\mathsf {fs}_1\) of \(\varPi _{\scriptscriptstyle \mathrm {FS}}\).

For later reference, define \(\mathsf {st}_1\) to be the following: \(\exists \{(r_i,\omega _i)\}_{i\in [\kappa ]}\) s.t.:

$$\begin{aligned} \forall i: \big (\mathsf {nm}_1^{i,0}=\mathsf {nmcom}_1(\mathsf {id}_1,r_{i};\omega _{i}) \vee \mathsf {nm}_1^{i,1}=\mathsf {nmcom}_1(\mathsf {id}_1,r_{i};\omega _{i})\big ) \end{aligned}$$Informally, \(\mathsf {st}_1\) represents that \({P_1}\) “knows” one of the decommitment values for every i.

-

3.

Message \(m_1\) is defined to be the tuple \(\left( \{\mathsf {nm}^{i,b}_1\}, \mathsf {p}_1,\mathsf {fs}_1\right) \).

-

\({P_2}\) performs the same actions as \({P_1}\) to sample the values \(\left\{ \big ({\widetilde{{r}}}_{i,b},{\widetilde{\omega }}_{i,b}\big )\right\} \) and constructs \(\widetilde{m}_1:=\left( \{{\widetilde{\mathsf {nm}}}^{i,b}_1\}, {\widetilde{\mathsf {p}}}_1,{\widetilde{\mathsf {fs}}}_1\right) \) where all \({\widetilde{\mathsf {nm}}}^{i,b}_1\) are generated with \({\mathsf {id}_2}\). Define the statement \(\widetilde{\mathsf {st}}_1\) analogously for these values.

-

Round 2. In this round \({P_2}\) sends a message \(m_2\) and \({P_1}\) sends a symmetrically constructed message \(\widetilde{m}_2\). We first describe how \({P_2}\) constructs \(m_2\).

-

1.

\({P_2}\) generates the second messages \(\{\mathsf {nm}^{i,b}_2\}\) corresponding to all executions of \(\mathsf {nmcom}\) initiated by \({P_1}\) (with \(\mathsf {id}_1\)).

-

2.

\({P_2}\) prepares the second message \(\mathsf {p}_2\) of the \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\) protocol initiated by \({P_1}\).

-

3.

\({P_2}\) samples random strings \( \{r'_{i,b}\}\) and \(\big (f_{i,b}, f ^{-1}_{i,b}\big ) \leftarrow {\mathrm {Gen}}(1^{\kappa })\) for the oblivious transfer executions.

-

4.

\({P_2}\) obtains the garbled labels and the circuit for \(F_1\): \(\big (\{Z_{i,b}\},~{\mathsf {GC}}_y\big )= \mathsf {GenGC}\big (1^\kappa , F_1, y ~; ~\varOmega \big )\).

-

5.

\({P_2}\) generates standard commitments to the labels, and an equivocal commitment to the garbled circuit: i.e., \(\mathsf {C}_{\mathsf {lab}}^{i,b}\leftarrow \mathsf {com}(Z_{i,b};\omega '_{i,b})\) and \(\mathsf {C}_{\mathsf {gc}}\leftarrow \mathsf {Eqcom}({\mathsf {GC}}_y;\zeta )\).

-

6.

\({P_2}\) prepares the second message \(\mathsf {fs}_2\) of the \(\varPi _{\scriptscriptstyle \mathrm {FS}}\) protocol initiated by \({P_1}\).

For later reference, define \(\mathsf {st}_2\) to be the following: \(\exists ~\big ( y, \varOmega , {\mathsf {GC}}_y, \{Z_{i,b}, \omega '_{i,b}\},\zeta \big )\) s.t.:

-

(a)

\(\big (\{Z_{i,b}\},~{\mathsf {GC}}_y\big ) = \mathsf {GenGC}\big (1^\kappa , F_1, y ~; ~\varOmega \big )\)

-

(b)

\(\forall (i,b): \mathsf {C}_{\mathsf {lab}}^{i,b} = \mathsf {com}(Z_{i,b};\omega '_{i,b})\)

-

(c)

\(\mathsf {C}_{\mathsf {gc}}= \mathsf {Eqcom}({\mathsf {GC}}_y;\zeta )\)

(Informally, \(\mathsf {st}_2\) is the statement that \({P_2}\) performed this step correctly.)

-

7.

Define message \(m_2:=\Big ( \{ \mathsf {nm}^{i,b}_2, r'_{i,b}, f_{i,b}, \mathsf {C}_{\mathsf {lab}}^{i,b} \}, \mathsf {C}_{\mathsf {gc}}, \mathsf {p}_2, \mathsf {fs}_2 \Big )\).

-

\({P_1}\) performs the same actions as \({P_2}\) in the previous step to construct the message \(\widetilde{m}_2:=\Big ( \{ {\widetilde{\mathsf {nm}}}^{i,b}_2, {\widetilde{{r}}}'_{i,b}, \widetilde{f}_{i,b}, \widetilde{\mathsf {C}}_{\mathsf {lab}}^{i,b} \}, \widetilde{\mathsf {C}}_{\mathsf {gc}}, {\widetilde{\mathsf {p}}}_2, {\widetilde{\mathsf {fs}}}_2 \Big )\) w.r.t. identity \(\mathsf {id}_2\), function \(F_2\), and input x. Define the (remaining) values \(\widetilde{f}^{-1}_{i,b},{\widetilde{Z}}_{i,b},{\widetilde{\omega }}'_{i,b}, {\mathsf {GC}}_x, \widetilde{\varOmega },\widetilde{\zeta }\) and statement \(\widetilde{\mathsf {st}}_2\) analogously.

-

Round 3. In this round \({P_1}\) sends a message \(m_3\) and \({P_2}\) sends a symmetrically constructed message \(\widetilde{m}_3\). We first describe how \({P_1}\) constructs \(m_3\).

-

1.

\({P_1}\) prepares the third message \(\{\mathsf {nm}^{i,b}_3\}\) of \(\mathsf {nmcom}\) (with \(\mathsf {id}_1\)).

-

2.

If any of \(\{f_{i,b}\}\) are invalid, \({P_1}\) aborts. Otherwise, it invokes \(\kappa \) parallel executions of oblivious transfer to obtain the input-wire labels corresponding to its input x. More specifically, \({P_1}\) proceeds as follows:

-

If \(x_i =0\), sample \(z' _{i,0} \leftarrow \{0,1\}^\kappa \), set \(z_{i,0} =f^\kappa _{i,0} (z' _{i,0} )\), and \(z_{i,1}=r_{i,1}\oplus r'_{i,1}\).

-

If \(x_i =1\), sample \(z' _{i,1} \leftarrow \{0,1\}^\kappa \), set \(z_{i,1} =f^\kappa _{i,1} (z' _{i,1} )\), and \(z_{i,0}=r_{i,0}\oplus r'_{i,0}\).

-

3.

Define \(\mathsf {st}_3\) to be the following: \(\exists \{(r_i,\omega _i)\}_{i\in [\kappa ]}\) s.t. \(\forall i\):

-

(a)

\((\mathsf {nm}_1^{i,0}=\mathsf {nmcom}_1(\mathsf {id}_1,r_{i};\omega _{i}) \wedge z_{i,0}=r_{i}\oplus r'_{i,0} )\), or

-

(b)

\((\mathsf {nm}_1^{i,1}=\mathsf {nmcom}_1(\mathsf {id}_1,r_{i};\omega _{i})\wedge z_{i,1}=r_{i}\oplus r'_{i,1})\)

Informally, \(\mathsf {st}_3\) says that \({P_1}\) correctly constructed \(\{z_{i,b}\}\).

-

4.

\({P_1}\) prepares the final message \(\mathsf {p}_3\) of \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\) proving the statement \(\mathsf {st}_1\wedge \mathsf {st}_3\) (see Footnote 12). \({P_1}\) also prepares the third message \(\mathsf {fs}_3\) of \(\varPi _{\scriptscriptstyle \mathrm {FS}}\).

-

5.

Define message \(m_3:=\Big (\{\mathsf {nm}^{i,b}_3,z_{i,b}\}, \mathsf {p}_3,\mathsf {fs}_3\Big )\), which is sent to \({P_2}\).

-

\({P_2}\) performs the same actions as \({P_1}\) in the previous step to construct the message \(\widetilde{m}_3:=\Big (\{{\widetilde{\mathsf {nm}}}^{i,b}_3,{\widetilde{{z}}}_{i,b}\}, {\widetilde{\mathsf {p}}}_3,{\widetilde{\mathsf {fs}}}_3\Big )\) w.r.t. identity \(\mathsf {id}_2\) and input y. The (remaining) values \(\{{\widetilde{{z}}}_{i,b},{\widetilde{{z}}}'_{i,b}\}\) and statement \(\widetilde{\mathsf {st}}_3\) are defined analogously.

-

Round 4. In this round \({P_2}\) sends a message \(m_4\) and \({P_1}\) sends a symmetrically constructed message \(\widetilde{m}_4\). We first describe how \({P_2}\) constructs \(m_4\).

-

1.

If \(\mathsf {p}_3,\mathsf {fs}_3\) are not accepting, \({P_2}\) aborts. Otherwise, \({P_2}\) completes the execution of the oblivious transfers for every (i, b). I.e., it computes \({W}_{i,b} = Z_{i,b} \oplus \mathsf {H}({f_{i,b}^{-\kappa }}(z_{i,b}))\).

-

2.

Define \(\mathsf {st}_4\) to be the following: \(\exists ~ \big (y,\varOmega ,{\mathsf {GC}}_y,\{Z_{i,b},\omega '_{i,b}, z'_{i,b},\widetilde{z}'_i\}_{i\in [\kappa ],b\in \{0,1\}}\big )\) s.t.

-

(a)

\(\forall (i,b)\): \(\left( \mathsf {C}_{\mathsf {lab}}^{i,b}=\mathsf {com}(Z_{i,b};\omega '_{i,b})\right) ~\bigwedge ~\left( f^\kappa _{i,b} (z' _{i,b} )=z_{i,b}\right) ~\bigwedge ~\left( W_{i,b} = Z_{i,b} \oplus \mathsf {H}(z'_{i,b})\right) \)

-

(b)

\(\left( \big (\{Z_{i,b}\},~{\mathsf {GC}}_y\big ) = \mathsf {GenGC}\big (1^\kappa , F_1, y ~; ~\varOmega \big )\right) ~\bigwedge ~\left( \mathsf {C}_{\mathsf {gc}}= \mathsf {Eqcom}({\mathsf {GC}}_y;\zeta )\right) \)

-

(c)

\(\forall i\): \(\widetilde{z}_{i,y_i}=\widetilde{f}^\kappa _{i,y_i}(\widetilde{z}'_{i})\)

Informally, this means that \({P_2}\) performed both oblivious transfers correctly.

-

3.

\({P_2}\) prepares the final message \(\mathsf {fs}_4\) of \(\varPi _{\scriptscriptstyle \mathrm {FS}}\) proving the statement \(\mathsf {st}_2\wedge \mathsf {st}_4\) (see Footnote 13).

-

4.

Define \(m_4:=\Big (\{ W_{i,b}\},\mathsf {fs}_4, {\mathsf {GC}}_y, \zeta \Big )\).

-

\({P_1}\) performs the same actions as \({P_2}\) in the previous step to construct the message \(\widetilde{m}_4:=\Big (\{{\widetilde{W}_{i,b}}\},{\widetilde{\mathsf {fs}}}_4, {\mathsf {GC}}_x, {\widetilde{\zeta }} \Big )\) and the analogously defined statement \(\widetilde{\mathsf {st}}_4\).

-

Output Computation.

-

\({P_1}\)’s output: If any of \((\mathsf {fs}_4,{\mathsf {GC}}_y, \zeta )\) or the openings of \(\{W_{i,b}\}\) are invalid, \({P_1}\) aborts. Otherwise, \({P_1}\) recovers the garbled labels \(\{Z_i:=Z_{i,x_i}\}\) from the completion of the oblivious transfer, and computes \(F_1(x,y)= \mathsf {EvalGC}({\mathsf {GC}}_y, \{Z_{i}\})\).

-

\({P_2}\)’s output: If any of \(({\widetilde{\mathsf {fs}}}_4,{\mathsf {GC}}_x, {\widetilde{\zeta }})\) or the openings of \(\{\widetilde{W}_{i,b}\}\) are invalid, \({P_2}\) aborts. Otherwise, \({P_2}\) recovers the garbled labels \(\{{\widetilde{Z}}_i:={\widetilde{Z}}_{i,y_i}\}\) from the completion of the oblivious transfer, and computes \(F_2(x,y)= \mathsf {EvalGC}({\mathsf {GC}}_x, \{ {\widetilde{Z}}_{i}\})\).

-

Remark 1:

If \(\mathsf {nmcom}\) has \(k>3\) rounds, the first \(k-3\) rounds can be performed before the 4 rounds of \(\varPi _{\scriptscriptstyle \mathrm {2PC}}\) start; this results in a protocol with \(k+1\) rounds. If \(k\le 3\), then the protocol has only 4 rounds. Also, for large k, it suffices if the first \(k-2\) rounds of \(\mathsf {nmcom}\) statistically determine the message to be committed; the notation is then adjusted to use the transcript up to round \(k-2\) to define the statements for the proof systems.
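The round count in this remark can be checked with a small calculation. The following is a minimal sketch, assuming only the formula \(k'=\max (4,k+1)\) stated in the theorems; the function names are hypothetical and chosen for illustration.

```python
def total_rounds(k: int) -> int:
    """Round count k' = max(4, k + 1) of the protocol built from a k-round nmcom."""
    if k < 1:
        raise ValueError("a commitment scheme needs at least one round")
    return max(4, k + 1)


def nmcom_rounds_before_start(k: int) -> int:
    """Number of nmcom rounds executed before the 4 main protocol rounds begin."""
    return max(0, k - 3)


if __name__ == "__main__":
    for k in (2, 3, 4, 6):
        # Columns: k, nmcom rounds run up front, total rounds k'.
        print(k, nmcom_rounds_before_start(k), total_rounds(k))
```

For every k, the rounds run up front plus the 4 main rounds add up to the total, which is how the \(k+1\) bound for \(k>3\) arises.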

Finally, the construction is described for a deterministic F. Known transformations (see [19, Sect. 7.3]) yield a protocol for randomized functionalities, without increasing the rounds.

4.2 Proof of Security

We prove the security of our protocol according to the ideal/real paradigm. We design a sequence of hybrids where we start with the real world execution and gradually modify it until the input of the honest party is not needed. The resulting final hybrid represents the simulator for the ideal world.

Theorem 1

Assuming the existence of a trapdoor permutation family and a k-round parallel non-malleable commitment scheme, protocol \(\varPi _\mathsf {2PC}\) securely computes every two-party functionality \(F=(F_1,F_2)\) with black-box simulation in the presence of a malicious adversary. The round complexity of \(\varPi _\mathsf {2PC}\) is \(k'=\max (4,k+1)\).

Proof:

Due to the symmetric nature of our protocol, it is sufficient to prove security against the malicious behavior of any party, say \({P_1}\). We show that for every adversary \(\mathcal{A}\) who participates as \({P_1}\) in the “real” world execution of \(\varPi _\mathsf {2PC}\), there exists an “ideal” world adversary (simulator) \(\mathcal{S}\) such that for all inputs x, y of equal length and security parameter \(\kappa \in \mathbb {N}\):

We prove this claim by considering hybrid experiments \(H_0,H_1,\ldots \) as described below. We start with \(H_0\) which has access to both inputs x and y, and gradually get rid of the honest party’s input y to reach the final hybrid.

-

\(H_0\): Identical to the real execution. More specifically, \(H_0\) starts the execution of \(\mathcal{A}\) providing it fresh randomness and input x, and interacts with it honestly by performing all actions of \({P_2}\) with uniform randomness and input y. The output consists of \(\mathcal{A}\)’s view.

By construction, \(H_0\) and the output of \(\mathcal{A}\) in the real execution are identically distributed.

-

\(H_1\): Identical to \(H_0\) except that this hybrid also performs extraction of \(\mathcal{A}\)’s implicit input \(x^*\) from \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\); in addition, it also extracts the “simulation trapdoor” \(\sigma \) from the first three rounds \((\mathsf {fs}_1,\mathsf {fs}_2,\mathsf {fs}_3)\) of \(\varPi _{\scriptscriptstyle \mathrm {FS}}\).Footnote 14 More specifically, \(H_1\) proceeds as follows:

-

1.

It completes the first three broadcast rounds exactly as in \(H_0\), and waits until \(\mathcal{A}\) either aborts or successfully completes the third round.

-

2.

At this point, \(H_1\) proceeds to extract the witness corresponding to each proof-of-knowledge completed in the first three rounds.

Specifically, \(H_1\) defines a cheating prover \(P^*\) which acts identically to \(H_0\), simulating all messages for \(\mathcal{A}\), except those corresponding to (each execution of) \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\), which are forwarded outside. It then applies the extractor of \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\) to obtain the “witnesses,” which consist of the following: values \(\{(r_{i}, \omega _{i})\}_{i\in [\kappa ]}\), which form the witness for \(\mathsf {st}_1\wedge \mathsf {st}_3\), and a value \((\sigma ,\omega _\sigma )\), which is the simulation trapdoor for \(\varPi _{\scriptscriptstyle \mathrm {FS}}\).

If extraction fails, \(H_1\) outputs \(\mathsf {fail}\). Otherwise, let \(b_i \in \{0,1\}\) be such that \(\mathsf {nm}^{i,b_i}_1= \mathsf {nmcom}_1(\mathsf {id}_1,r_i;\omega _i)\). \(H_1\) defines a string \(x^* = (x_1^*,\ldots ,x_\kappa ^*)\) as follows:

$$\begin{aligned} {\text {If}}~ z_{i,b_i}=r_{i}\oplus r'_{i,b_i} ~{\text {then}} ~x_i^*=1-b_i; ~{\text {otherwise}}~ x_i^*=b_i \end{aligned}$$

-

3.

\(H_1\) completes the final round and prepares the output exactly as \(H_0\).
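The rule used in step 2 to define \(x^*\) can be illustrated in code. This is a minimal sketch, assuming byte strings stand in for the values \(z_{i,b_i}\), \(r_i\), and \(r'_{i,b_i}\); the names `extract_bit` and `demo`, the toy length `KAPPA`, and the way the non-coin-tossed string is generated are all assumptions made for illustration.

```python
import secrets
from typing import Tuple

KAPPA = 16  # toy string length in bytes; an assumption for illustration only


def xor(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length strings."""
    return bytes(s ^ t for s, t in zip(a, b))


def extract_bit(z_b: bytes, r: bytes, r_prime_b: bytes, b: int) -> int:
    """Return x_i^*: 1 - b_i if z_{i,b_i} = r_i XOR r'_{i,b_i}, and b_i otherwise."""
    return 1 - b if z_b == xor(r, r_prime_b) else b


def demo(x: int) -> Tuple[int, int]:
    """Extract from either position of a receiver whose input bit is x."""
    r = secrets.token_bytes(KAPPA)
    r_prime = secrets.token_bytes(KAPPA)
    z_coin = xor(r, r_prime)  # string at position 1 - x, fixed by coin tossing
    # Stand-in for the string at position x; forced to differ from r XOR r'.
    z_known = xor(z_coin, b"\x01" + bytes(KAPPA - 1))
    from_coin_side = extract_bit(z_coin, r, r_prime, 1 - x)
    from_known_side = extract_bit(z_known, r, r_prime, x)
    return from_coin_side, from_known_side
```

In this toy setting, whichever of the two positions the extracted commitment belongs to, the rule returns the same bit x.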

Claim 1. \(H_1\) is expected polynomial time, and \(H_0,H_1\) are statistically close.

Proof sketch: This is a (completely) standard proof which we sketch here. Let p be the probability with which \(\mathcal{A}\) completes \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\) in the third round, and let \(\mathsf {trans}\) be the transcript. The extractor for \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\) takes expected time \(\mathsf {poly}(\kappa )/p\) and succeeds with probability \(1-\mu (\kappa )\). It follows that the expected running time of \(H_1\) is \(\mathsf {poly}(\kappa )+p\cdot \frac{\mathsf {poly}(\kappa )}{p}=\mathsf {poly}(\kappa )\), and its output is statistically close to that of \(H_0\).Footnote 15 \(\diamond \)
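The running-time bound in the proof sketch can be unpacked as follows. This is only a restatement, assuming that the extractor is invoked exactly when \(\mathcal{A}\) completes the third round (which happens with probability p) and that each occurrence of \(\mathsf {poly}(\kappa )\) may denote a different polynomial; the symbol \(T_{H_1}\) for the running time of \(H_1\) is introduced here for illustration.

$$\begin{aligned} \mathbb {E}[T_{H_1}] &= (1-p)\cdot \mathsf {poly}(\kappa ) + p\cdot \left( \mathsf {poly}(\kappa ) + \frac{\mathsf {poly}(\kappa )}{p}\right) \\ &\le \mathsf {poly}(\kappa ) + \mathsf {poly}(\kappa ) = \mathsf {poly}(\kappa ). \end{aligned}$$

The factor \(1/p\) in the extractor's expected time is cancelled by the probability p that extraction is attempted at all.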

-

\(H_2\): Identical to \(H_1\) except that this hybrid uses the simulation trapdoor \((\sigma ,\omega _\sigma )\) as the witness to compute \(\mathsf {fs}_4\) in the last round. (Recall that \(\mathsf {fs}_4\) is the last round of an execution of \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\)).

It is easy to see that \(H_1\) and \(H_2\) are computationally indistinguishable due to the WI property of \(\varPi _{\scriptscriptstyle \mathrm {WIPOK}}\).

-

\(H_3\): In this hybrid, we get rid of \({P_2}\)’s input y that is implicitly present in values \(\{\widetilde{z}_{i,b}\}\) and \(\{\widetilde{r}_{i,b}\}\) in \(\mathsf {nmcom}\) (but keep it everywhere else for the time being). Formally, \(H_3\) is identical to \(H_2\) except that in round 3 it sets \(\widetilde{z}_{i,b}=\widetilde{r}_{i,b} \oplus \widetilde{r}'_{i,b}\) for all (i, b).

Claim 2. The outputs of \(H_2\) and \(H_3\) are computationally indistinguishable.

Proof. We rely on the non-malleability of \(\mathsf {nmcom}\) to prove this claim. Let D be a distinguisher for \(H_2\) and \(H_3\).

The high-level idea is as follows: first we define two string sequences \(\{u^0_{i,b}\}\) and \(\{u^1_{i,b}\}\) and a man-in-the-middle M which incorporates \(\mathcal{A}\) and receives non-malleable commitments to one of these sequences in parallel. Then we define a distinguisher \(D_{\mathsf {nm}}\) which incorporates both M and D, takes as input the value committed by M and its view, and can distinguish which sequence was committed to M. This violates non-malleability of \(\mathsf {nmcom}\).

Formally, define a man-in-the-middle M who receives \(2\kappa \) \(\mathsf {nmcom}\) commitments on the left and makes \(2\kappa \) commitments on the right as follows:

-

1.

M incorporates \(\mathcal{A}\) internally, and proceeds exactly as \(H_1\) by sampling all messages internally except for the messages of \(\mathsf {nmcom}\) corresponding to \({P_2}\). These messages are received from an outside committer as follows. M samples uniformly random values \(\{\widetilde{z}_{i,b}\}\) and \(\{\widetilde{r}'_{i,b}\}\) and defines \(\{u^0_{i,b}\}\) and \(\{u^1_{i,b}\}\) as:

$$\begin{aligned} u^0_{i,y_i}=\widetilde{z}_{i,y_i}\oplus \widetilde{r}'_{i,y_i},~~u^0_{i,\overline{y_i}}\leftarrow \{0,1\}^\kappa ,~~u^1_{i,b}=\widetilde{z}_{i,b}\oplus \widetilde{r}'_{i,b}~~\forall (i,b) \end{aligned}$$

It forwards \(\{u^0_{i,b}\}\) and \(\{u^1_{i,b}\}\) to the outside committer who commits to one of these sequences in parallel. M forwards these messages to \(\mathcal{A}\), and forwards the message given by \(\mathcal{A}\) corresponding to \(\mathsf {nmcom}\) to the outside receiver.

-

2.

After the first three rounds are finished, M halts by outputting its view. In particular, M does not continue further like \(H_1\), it does not extract any values, and does not complete the fourth round. (In fact, M cannot complete the fourth round, since it does not have the witness).
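The two sequences that M hands to the outside committer in step 1 can be sketched as follows. This is a minimal illustration, assuming byte strings of a toy length `KAPPA` in place of \(\kappa \)-bit strings; the function name `build_sequences` and the dictionary representation indexed by (i, b) are assumptions, not part of the protocol description.

```python
import secrets
from typing import Dict, List, Tuple

KAPPA = 16  # toy string length in bytes; an assumption for illustration only

Seq = Dict[Tuple[int, int], bytes]


def xor(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length strings."""
    return bytes(s ^ t for s, t in zip(a, b))


def build_sequences(y: List[int]) -> Tuple[Seq, Seq]:
    """Return (u0, u1), each indexed by (i, b), for the honest input bits y."""
    u0: Seq = {}
    u1: Seq = {}
    for i, y_i in enumerate(y):
        for b in (0, 1):
            z = secrets.token_bytes(KAPPA)        # plays the role of z~_{i,b}
            r_prime = secrets.token_bytes(KAPPA)  # plays the role of r~'_{i,b}
            # u1 is "consistent" at every position (i, b).
            u1[(i, b)] = xor(z, r_prime)
            # u0 is consistent only at position y_i and uniformly random elsewhere.
            u0[(i, b)] = u1[(i, b)] if b == y_i else secrets.token_bytes(KAPPA)
    return u0, u1
```

The sequences agree at every position \((i,y_i)\) and differ (except with negligible probability) only at positions \((i,\overline{y_i})\), which is exactly where the dependence on y sits.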

Let \(\{v^0_{i,b}\}\) (resp., \(\{v^1_{i,b}\}\)) be the sequence of values committed by M with \(\mathsf {id}_1\) when it receives a commitment to \(\{u^0_{i,b}\}\) (resp., \(\{u^1_{i,b}\}\)) with \(\mathsf {id}_2\).

Define the distinguisher \(D_{\mathsf {nm}}\) as follows: \(D_{\mathsf {nm}}\) incorporates both M and D. It receives as input a pair \((\{v_{i,b}\},\mathsf {view})\) and proceeds as follows:

-

1.

\(D_{\mathsf {nm}}\) parses \(v_{i,b}\) to obtain a string \(\sigma \) corresponding to the “trapdoor witness.”Footnote 16

-

2.

\(D_{\mathsf {nm}}\) starts M, feeds it the view \(\mathsf {view}\), and continues the execution just like \(H_1\). However, it does not rewind \(\mathcal{A}\) (internal to M); instead, it uses \(\sigma \) (which is part of its input) and the values in \(\mathsf {view}\) to complete the last round of the protocol.

-

3.

When \(\mathcal{A}\) halts, \(D_{\mathsf {nm}}\) feeds the view of \(\mathcal{A}\) to D and outputs whatever D outputs.

It is straightforward to verify that if M receives commitments corresponding to \(\{u^0_{i,b}\}\) (resp., \(\{u^1_{i,b}\}\) ) then the output of \(D_\mathsf {nm}\) is identical to that of \(H_2\) (resp., \(H_3\)). The claim follows. \(\diamond \)

-

\(H_4\): Identical to \(H_3\) except that \(H_4\) changes the “inputs of the oblivious transfer” from \((Z_{i,0},Z_{i,1})\) to \((Z_{i,x^*_i},Z_{i,x^*_i})\). Formally, in the last round, \(H_4\) sets \(W_{i,b} = Z_{i,x^*_i} \oplus \mathsf {H}( (z'_{i,b}))\) for every (i, b), but does everything else as \(H_3\).

\(H_3\) and \(H_4\) are computationally indistinguishable due to the (indistinguishability-based) security of oblivious transfer w.r.t. a malicious receiver. This part is identical to the proof in [26], and relies on the fact that one of the two strings for oblivious transfer is obtained by “coin tossing;” therefore its inverse is hidden, which implies that the hardcore bits look pseudorandom.
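The change made in \(H_4\) can be made concrete with a toy example for a single index i. This is a minimal sketch, assuming SHAKE-256 as a stand-in for the hardcore-bit function \(\mathsf {H}\) (the protocol derives these bits from the trapdoor permutation, not from a hash); the function names are hypothetical.

```python
import hashlib
import secrets
from typing import Tuple


def xor(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length strings."""
    return bytes(s ^ t for s, t in zip(a, b))


def H(z_prime: bytes, out_len: int) -> bytes:
    """Stand-in for the hardcore bits of z'; SHAKE-256 is an assumption."""
    return hashlib.shake_256(z_prime).digest(out_len)


def masks_h3(Z0: bytes, Z1: bytes, zp0: bytes, zp1: bytes) -> Tuple[bytes, bytes]:
    """Honest masking: W_{i,b} = Z_{i,b} XOR H(z'_{i,b})."""
    return xor(Z0, H(zp0, len(Z0))), xor(Z1, H(zp1, len(Z1)))


def masks_h4(Z0: bytes, Z1: bytes, zp0: bytes, zp1: bytes, x_star: int) -> Tuple[bytes, bytes]:
    """H_4 masking: both positions carry the label Z_{i,x*_i}."""
    Z = (Z0, Z1)[x_star]
    return xor(Z, H(zp0, len(Z))), xor(Z, H(zp1, len(Z)))


def demo(x_star: int) -> bool:
    """Check that H_3 and H_4 agree on the position the receiver can open."""
    Z0, Z1 = secrets.token_bytes(16), secrets.token_bytes(16)
    zp0, zp1 = secrets.token_bytes(16), secrets.token_bytes(16)
    return masks_h3(Z0, Z1, zp0, zp1)[x_star] == masks_h4(Z0, Z1, zp0, zp1, x_star)[x_star]
```

The two hybrids differ only in the message at position \(1-x^*_i\), whose mask the receiver cannot compute because the corresponding preimage is hidden.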

-

\(H_5\): Identical to \(H_4\) except that now we simulate the garbled circuit and its labels for values \(x^*\) and \(F_1(x^*,y)\). Formally, \(H_5\) starts by proceeding exactly as \(H_4\) up to round 3 except that instead of committing to the correct garbled circuit and labels in round 2, it simply commits to random values. After completing round 3, \(H_5\) extracts \(x^*\) exactly as in \(H_4\). If extraction succeeds, it sends \(x^*\) to the trusted party, receives back \(v_1=F_1(x^*,y)\), and computes \(\left( \{ Z_{i,b}\}, {\mathsf {GC}}^{*}\right) \leftarrow \mathsf {SimGC}(1^\kappa ,F_1,x^*,v_1)\). It uses labels \(\{Z_{i,x^*_i}\}\) to define the values \(\{W_{i,b}\}\) as in \(H_4\), and equivocates \(\mathsf {C}_{\mathsf {gc}}\) to obtain openings corresponding to the simulated circuit \({\mathsf {GC}}^*\). It then computes \(\mathsf {fs}_4\) as before (by using the trapdoor witness \((\sigma ,\omega _\sigma )\)), and constructs \(m_4:=\left( \{W_{i,b}\},\mathsf {fs}_4,{\mathsf {GC}}^{*},\zeta \right) \). It feeds \(m_4\) to \(\mathcal{A}\) and finally outputs \(\mathcal{A}\)’s view and halts.

We claim that \(H_4\) and \(H_5\) are computationally indistinguishable. First observe that the joint distribution of values \((\{\mathsf {C}_{\mathsf {lab}}^{i,b}\},\mathsf {C}_{\mathsf {gc}})\) and \({\mathsf {GC}}_y\) (along with real openings) in \(H_4\) is indistinguishable from the joint distribution of the values \((\{\mathsf {C}_{\mathsf {lab}}^{i,b}\},\mathsf {C}_{\mathsf {gc}})\) and \({\mathsf {GC}}^*\) (along with equivocal openings) in \(H_5\). The two hybrids are identical except for sampling of these values, and can be simulated perfectly given these values from outside. The claim follows.Footnote 17

Observe that \(H_5\) is now independent of the input y. Our simulator \(\mathcal{S}\) is \(H_5\). This completes the proof. \(\square \)

5 Multi-party Coin Flipping Protocol

In this section, we show a protocol for the multi-party coin-flipping functionality. Since we need neither OT nor garbled circuits for coin-flipping, this protocol is simpler than the two-party protocol.

At a high level, the multi-party coin flipping protocol \(\varPi _{\mathsf {MCF}}\) simply consists of each party “committing” to a random string r, which is opened in the last round along with a simulatable proof of correct opening given to all parties independently. The output consists of the \(\oplus \) of all strings. This does not work directly as stated; the proof goes through only with a few more components, such as an equivocal commitment to r. In particular, we prove the following theorem.

Theorem 2

Assuming the existence of a trapdoor permutation family and a k-round protocol for (parallel) non-malleable commitments, the multi-party protocol \(\varPi _{\mathsf {MCF}}\) securely computes the multi-party coin-flipping functionality with black-box simulation in the presence of a malicious adversary for polynomially many coins. The round complexity of \(\varPi _{\mathsf {MCF}}\) is \(k'=\max (4,k+1)\).

The multi-party coin flipping protocol \(\varPi _{\mathsf {MCF}}\) and its security proof can be found in the full version.
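The commit-then-open-then-XOR idea described at the start of this section can be sketched in a few lines. This is only a toy illustration of the naive outline, which the text itself notes does not work directly: the hash-based commitment below is an assumed stand-in that is neither non-malleable nor equivocal, the proofs of correct opening are omitted, and all function names are hypothetical.

```python
import hashlib
import secrets
from functools import reduce
from typing import List, Tuple


def xor(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length strings."""
    return bytes(s ^ t for s, t in zip(a, b))


def xor_all(strings: List[bytes]) -> bytes:
    """XOR of all parties' strings; this is the coin-flipping output."""
    return reduce(xor, strings)


def commit(r: bytes) -> Tuple[bytes, bytes]:
    """Toy commitment: SHA-256 of a random opening value followed by r."""
    opening = secrets.token_bytes(32)
    return hashlib.sha256(opening + r).digest(), opening


def verify(commitment: bytes, r: bytes, opening: bytes) -> bool:
    """Check that (r, opening) is a valid opening of the toy commitment."""
    return hashlib.sha256(opening + r).digest() == commitment


def coin_flip(n_parties: int, n_bytes: int) -> bytes:
    """Run the naive outline with all parties honest and return the coins."""
    strings = [secrets.token_bytes(n_bytes) for _ in range(n_parties)]
    commitments = [commit(r) for r in strings]
    # Last round: every party opens; any invalid opening causes an abort.
    for r, (c, opening) in zip(strings, commitments):
        if not verify(c, r, opening):
            raise ValueError("invalid opening")
    return xor_all(strings)
```

The sketch shows only the data flow with honest parties; security against a malicious adversary relies on the additional components (non-malleable commitments, equivocal commitments, and simulatable proofs) mentioned above.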

5.1 Coin Flipping with Committed Inputs