Abstract

An order-revealing encryption scheme gives a public procedure by which two ciphertexts can be compared to reveal the ordering of their underlying plaintexts. We show how to use order-revealing encryption to separate computationally efficient PAC learning from efficient \((\varepsilon , \delta )\)-differentially private PAC learning. That is, we construct a concept class that is efficiently PAC learnable, but for which every efficient learner fails to be differentially private. This answers a question of Kasiviswanathan et al. (FOCS ’08, SIAM J. Comput. ’11).

To prove our result, we give a generic transformation from an order-revealing encryption scheme into one with strongly correct comparison, which enables the consistent comparison of ciphertexts that are not obtained as the valid encryption of any message. We believe this construction may be of independent interest.

M. Bun—Supported by an NDSEG fellowship and NSF grant CNS-1237235.

M. Zhandry—Work done while the author was a graduate student at Stanford University. Supported by the DARPA PROCEED program.



1 Introduction

Many agencies hold sensitive information about individuals, where statistical analysis of this data could yield great societal benefit. The line of work on differential privacy [20] aims to enable such analysis while giving a strong formal guarantee on the privacy afforded to individuals. Noting that the framework of computational learning theory captures many of these statistical tasks, Kasiviswanathan et al. [37] initiated the study of differentially private learning. Roughly speaking, a differentially private learner is required to output a classification of labeled examples that is accurate, but does not change significantly based on the presence or absence of any individual example.

The early positive results in private learning established that, ignoring computational complexity, any concept class is privately learnable with a number of samples logarithmic in the size of the concept class [37]. Since then, a number of works have improved our understanding of the sample complexity – the minimum number of examples – required by such learners to simultaneously achieve accuracy and privacy. Some of these works showed that privacy incurs an inherent additional cost in sample complexity; that is, some concept classes require more samples to learn privately than they require to learn without privacy [1, 2, 13, 16, 17, 25]. In this work, we address the complementary question of whether there is also a computational price of differential privacy for learning tasks, for which much less is known. The initial work of Kasiviswanathan et al. [37] identified the important question of whether any efficiently PAC learnable concept class is also efficiently privately learnable, but only limited progress has been made on this question since then [1, 44].

Our main result gives a strong negative answer to this question. We exhibit a concept class that is efficiently PAC learnable, but under plausible cryptographic assumptions cannot be learned efficiently and privately. To prove this result, we establish a connection between private learning and order-revealing encryption. We construct a new order-revealing encryption scheme with strong correctness properties that may be of independent learning-theoretic and cryptographic interest.

1.1 Differential Privacy and Private Learning

We first recall Valiant’s (distribution-free) PAC model for learning [54]. Let \(\mathcal {C}\) be a concept class consisting of concepts \(c: X \rightarrow \{0, 1\}\) for a data universe X. A learner L is given n samples of the form \((x_i, c(x_i))\) where the \(x_i\)’s are drawn i.i.d. from an unknown distribution, and are labeled according to an unknown concept c. The goal of the learner is to output a hypothesis \(h: X \rightarrow \{0, 1\}\) from a hypothesis class \(\mathcal {H}\) that approximates c well on the unknown distribution. That is, the probability that h disagrees with c on a fresh example from the unknown distribution should be small – say, less than 0.05. The hypothesis class \(\mathcal {H}\) may be different from \(\mathcal {C}\), but in the case where \(\mathcal {H}\subseteq \mathcal {C}\) we call L a proper learner. Moreover, we say a learner is efficient if it runs in time polynomial in the description size of c and the size of its examples.

Kasiviswanathan et al. [37] defined a private learner to be a PAC learner that is also differentially private. Two samples \(S = \{(x_1, b_1), \dots , (x_n, b_n)\}\) and \(S' = \{(x'_1, b'_1), \dots , (x'_n, b'_n)\}\) are said to be neighboring if they differ on exactly one example, which we think of as corresponding to one individual’s information. A randomized learner \(L: (X \times \{0, 1\})^n \rightarrow \mathcal {H}\) is \((\varepsilon , \delta )\)-differentially private if for all neighboring datasets S and \(S'\) and all sets \(T \subseteq \mathcal {H}\),
\[\Pr [L(S) \in T] \le e^{\varepsilon } \Pr [L(S') \in T] + \delta .\]

The original definition of differential privacy [20] took \(\delta = 0\), a case which is called pure differential privacy. The definition with positive \(\delta \), called approximate differential privacy, first appeared in [19] and has since been shown to enable substantial accuracy gains. Throughout this introduction, we will think of \(\varepsilon \) as a small constant, e.g. \(\varepsilon = 0.1\), and \(\delta = o(1/n)\).
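For a mechanism with finitely many outputs, the condition \(\Pr [L(S) \in T] \le e^{\varepsilon } \Pr [L(S') \in T] + \delta \) holds for every set T exactly when it holds for the single worst-case set, namely the outputs o with \(\Pr [L(S) = o] > e^{\varepsilon } \Pr [L(S') = o]\). The Python sketch below uses this to test one neighboring pair in both directions. The helper names are our own, and randomized response on a single bit is a standard toy mechanism used only for illustration; it is not part of this paper's construction.

```python
import math
from typing import Dict, Hashable


def dp_excess(p: Dict[Hashable, float], q: Dict[Hashable, float], eps: float) -> float:
    """Largest value of P(T) - e^eps * Q(T) over all output sets T.

    The maximizing T is the set of outputs o with p(o) > e^eps * q(o),
    so the supremum is a sum of positive parts."""
    outputs = set(p) | set(q)
    return sum(max(0.0, p.get(o, 0.0) - math.exp(eps) * q.get(o, 0.0)) for o in outputs)


def is_dp_pair(p, q, eps: float, delta: float, tol: float = 1e-12) -> bool:
    """Check the (eps, delta) condition in both directions for one neighboring pair."""
    return max(dp_excess(p, q, eps), dp_excess(q, p, eps)) <= delta + tol


def randomized_response(bit: int, eps: float) -> Dict[int, float]:
    """Output distribution of randomized response: report the true bit w.p. e^eps / (1 + e^eps)."""
    keep = math.exp(eps) / (1.0 + math.exp(eps))
    return {bit: keep, 1 - bit: 1.0 - keep}


# Neighboring one-record datasets: the single bit is 1 in one and 0 in the other.
p, q = randomized_response(1, 0.1), randomized_response(0, 0.1)
print(is_dp_pair(p, q, eps=0.1, delta=0.0))   # True
print(is_dp_pair(p, q, eps=0.05, delta=0.0))  # False
```

The small tolerance `tol` only absorbs floating-point rounding in the equality case; mathematically, randomized response with parameter 0.1 meets the \((0.1, 0)\) bound with equality on one output.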

Kasiviswanathan et al. [37] gave a generic “Private Occam’s Razor” algorithm, showing that any concept class \(\mathcal {C}\) can be privately (properly) learned using \(O(\log |\mathcal {C}|)\) samples. Unfortunately, this algorithm runs in time \(\varOmega (|\mathcal {C}|)\), which is exponential in the description size of each concept. With an eye toward designing efficient private learners, Blum et al. [5] made the powerful observation that any efficient learning algorithm in the statistical queries (SQ) framework of Kearns [39] can be efficiently simulated with differential privacy. Moreover, Kasiviswanathan et al. [37] showed that the efficient learner for the concept class of parity functions based on Gaussian elimination can also be implemented efficiently with differential privacy. These two techniques – SQ learning and Gaussian elimination – are essentially the only methods known for computationally efficient PAC learning. The fact that these can both be implemented privately led Kasiviswanathan et al. [37] to ask whether all efficiently learnable concept classes could also be efficiently learned with differential privacy.
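One standard way to realize a generic private learner of this kind is the exponential mechanism of McSherry and Talwar: sample each hypothesis with probability proportional to \(\exp (-\varepsilon \cdot \mathrm{err}_S(h)/2)\), where \(\mathrm{err}_S(h)\) counts the examples h mislabels. Changing one example changes this count by at most 1, which gives \((\varepsilon , 0)\)-differential privacy. The Python sketch below is our illustration and not necessarily the exact algorithm of [37]; because it enumerates the class explicitly, it makes the \(\varOmega (|\mathcal {C}|)\) running time visible.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Hypothesis = Callable[[int], int]


def private_occam(sample: Sequence[Tuple[int, int]],
                  hypotheses: List[Hypothesis],
                  eps: float,
                  rng: random.Random) -> Hypothesis:
    """Exponential mechanism over an explicitly listed finite class.

    Score = number of sample points a hypothesis mislabels (sensitivity 1),
    so Pr[h] proportional to exp(-eps * err(h) / 2) gives (eps, 0)-DP.
    Runtime is Theta(len(hypotheses) * len(sample))."""
    errs = [sum(1 for x, b in sample if h(x) != b) for h in hypotheses]
    best = min(errs)  # shifting scores leaves the distribution unchanged and avoids underflow
    weights = [math.exp(-eps * (e - best) / 2.0) for e in errs]
    return rng.choices(hypotheses, weights=weights, k=1)[0]


# Usage: thresholds c_t(x) = 1 iff x <= t over the domain {1, ..., 20}.
thresholds = [lambda x, t=t: int(x <= t) for t in range(1, 21)]
sample = [(x, int(x <= 10)) for x in range(1, 21)]
h = private_occam(sample, thresholds, eps=60.0, rng=random.Random(0))
```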

Beimel et al. [1] made partial progress toward this question in the special case of pure differential privacy with proper learning, showing that the sample complexity of efficient learners can be much higher than that of inefficient ones. Specifically, they showed that assuming the existence of pseudorandom generators with exponential stretch, there exists for any \(\ell (d) = \omega (\log d)\) a concept class over \(\{0, 1\}^d\) for which every efficient proper private learner requires \(\varOmega (d)\) samples, but an inefficient proper private learner only requires \(O(\ell (d))\) examples. Nissim [44] strengthened this result substantially for “representation learning,” where a proper learner is further restricted to output a canonical representation of its hypothesis. He showed that, assuming the existence of one-way functions, there exists a concept class that is efficiently representation learnable, but not efficiently privately representation learnable (even with approximate differential privacy). With Nissim’s kind permission, we give the details of this construction in Sect. 5.

Despite these negative results for proper learning, one might still have hoped that any efficiently learnable concept class could be efficiently improperly learned with privacy. Indeed, a number of works have shown that, especially with differential privacy, improper learning can be much more powerful than proper learning. For instance, Beimel et al. [1] showed that under pure differential privacy, the simple class of \(\mathsf {Point}\) functions (indicators of a single domain element) requires \(\varOmega (d)\) samples to privately learn properly, but only O(1) samples to privately learn improperly. Moreover, computational separations are known between proper and improper learning even without privacy considerations. Pitt and Valiant [46] showed that unless \(\mathbf {NP} = \mathbf {RP}\), k-term DNF are not efficiently properly learnable, but they are efficiently improperly learnable [54].

Under plausible cryptographic assumptions, we resolve the question of Kasiviswanathan et al. [37] in the negative, even for improper learners. The assumption we need is the existence of “strongly correct” order-revealing encryption (ORE) schemes, described in Sect. 1.3.

Theorem 1

(Informal). Assuming the existence of strongly correct ORE, there exists an efficiently computable concept class \(\mathsf {EncThresh}\) that is efficiently PAC learnable, but not efficiently learnable by any \((\varepsilon , \delta )\)-differentially private algorithm.

We stress that this result holds even for improper learners and for the relaxed notion of approximate differential privacy. We remark that cryptography has played a major role in shaping our understanding of the computational complexity of learning in a number of models (e.g. [40, 41, 49, 54]). It has also been used before to show separations between what is efficiently learnable in different models (e.g. [4, 50]).

1.2 Our Techniques

We give an informal overview of the construction and analysis of the concept class \(\mathsf {EncThresh}\).

We first describe the concept class of thresholds \(\mathsf {Thresh}\) and its simple PAC learning algorithm. Consider the domain \([N] = \{1, \dots , N\}\). Given a number \(t \in [N]\), a threshold concept \(c_t\) is defined by \(c_t(x) = 1\) if and only if \(x \le t\). The concept class of thresholds admits a simple and efficient proper PAC learning algorithm \(L_{\mathsf {Thresh}}\). Given a sample \(\{(x_1, c_t(x_1)), \dots , (x_n, c_t(x_n))\}\) labeled by an unknown concept \(c_t\), the learner \(L_{\mathsf {Thresh}}\) identifies the largest positive example \(x_{i^*}\) and outputs the hypothesis \(h = c_{x_{i^*}}\). That is, \(L_{\mathsf {Thresh}}\) chooses the threshold concept that minimizes the empirical error on its sample. To achieve a small constant error on any underlying distribution on examples, it suffices to take \(n = O(1)\) samples. Moreover, this learner can be modified to guarantee differential privacy by instead randomly sampling a threshold hypothesis with probability that decays exponentially in the empirical error of the hypothesis [37, 42]. The sampling can be performed in polynomial time, and requires only a modest blow-up in the learner’s sample complexity.
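The exponential-mechanism sampling for thresholds can be carried out without enumerating all N thresholds, because the empirical error of \(c_t\) only changes when t crosses a sample point. The Python sketch below is our own illustration; it assumes integer examples in [N] and uses the number of mislabeled examples as the score. It groups the thresholds into at most n + 1 intervals of constant error, samples an interval with weight (length) times \(\exp (-\varepsilon \cdot \mathrm{err}/2)\), and then picks t uniformly inside it. This yields exactly the exponential mechanism's distribution over [N], in time polynomial in n rather than in N.

```python
import math
import random
from typing import List, Sequence, Tuple


def private_threshold(sample: Sequence[Tuple[int, int]], N: int, eps: float,
                      rng: random.Random) -> int:
    """Sample t in {1, ..., N} with Pr[t] proportional to exp(-eps * err_S(c_t) / 2),
    where c_t(x) = 1 iff x <= t and err_S counts mislabeled examples.

    Assumes every example x lies in {1, ..., N}."""
    # err_S(c_t) is constant for t between consecutive distinct sample values,
    # so the intervals start at 1 and at each distinct example value.
    starts = sorted({1} | {x for x, _ in sample})
    intervals: List[Tuple[int, int]] = []
    for k, lo in enumerate(starts):
        hi = starts[k + 1] - 1 if k + 1 < len(starts) else N
        intervals.append((lo, hi))
    # Every threshold in [lo, hi] has the same error, so evaluate it at t = lo.
    errs = [sum(1 for x, b in sample if int(x <= lo) != b) for lo, _ in intervals]
    best = min(errs)
    weights = [(hi - lo + 1) * math.exp(-eps * (e - best) / 2.0)
               for (lo, hi), e in zip(intervals, errs)]
    lo, hi = rng.choices(intervals, weights=weights, k=1)[0]
    return rng.randint(lo, hi)


xs = [10, 20, 30, 40, 60, 70, 80, 90]
sample = [(x, int(x <= 50)) for x in xs]  # labeled by the target c_50
t_hat = private_threshold(sample, N=100, eps=50.0, rng=random.Random(1))
```

Unlike \(L_{\mathsf {Thresh}}\), this sampler uses the numeric values of its examples (interval lengths), which is the point made in the next paragraph.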

A simple but important observation about \(L_{\mathsf {Thresh}}\) – which, crucially, is not true of the differentially private version – is that it is completely oblivious to the actual numeric values of its examples, or even to the fact that the domain is [N]. In fact, \(L_{\mathsf {Thresh}}\) works equally well on any totally-ordered domain on which it can efficiently compare examples. In an extreme case, the learner \(L_{\mathsf {Thresh}}\) still works when its examples are encrypted under an order-revealing encryption (ORE) scheme, which guarantees that \(L_{\mathsf {Thresh}}\) is able to learn the order of its examples, but nothing else about them. Up to small technical modifications, our concept class \(\mathsf {EncThresh}\) is exactly the class \(\mathsf {Thresh}\) where examples are encrypted under an ORE scheme.
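To make this obliviousness concrete, here is a Python sketch of \(L_{\mathsf {Thresh}}\) that accesses its examples only through a comparison function `leq(a, b)`, a name we introduce for illustration. The usage runs it once on plain integers and once on a toy "disguised" encoding (each value stored as its negation and compared with `>=`). That encoding is merely a stand-in for an order-revealing scheme and provides no security. The text does not specify what happens when the sample has no positive example; this sketch outputs the all-zero hypothesis in that case, which is an assumption.

```python
import operator
from typing import Callable, Sequence, Tuple, TypeVar

T = TypeVar("T")


def learn_threshold(sample: Sequence[Tuple[T, int]],
                    leq: Callable[[T, T], bool]) -> Callable[[T], int]:
    """L_Thresh using only a comparison oracle: output h(x) = 1 iff x <= x*,
    where x* is the largest positively labeled example."""
    positives = [x for x, b in sample if b == 1]
    if not positives:
        return lambda x: 0  # assumption: all-zero hypothesis when no example is positive
    largest = positives[0]
    for x in positives[1:]:
        if leq(largest, x):
            largest = x
    return lambda x: int(leq(x, largest))


# Plain integers, target threshold t = 6.
sample = [(2, 1), (5, 1), (6, 1), (8, 0), (9, 0)]
h = learn_threshold(sample, operator.le)

# Toy "disguised" encoding: store -m and compare with >=, which preserves the order of m.
disguised = [(-x, b) for x, b in sample]
h_disguised = learn_threshold(disguised, operator.ge)
```

The learner's code is identical in both runs; only the comparison oracle changes.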

For \(\mathsf {EncThresh}\) to be efficiently PAC learnable, it must be learnable even under distributions that place arbitrary weight on examples corresponding to invalid ciphertexts. To this end, we require a “strong correctness” condition on our ORE scheme. The strong correctness condition ensures that all ciphertexts, even those that are not obtained as encryptions of messages, can be compared in a consistent fashion. This condition is not met by current constructions of ORE, and one of the technical contributions of this work is a generic transformation from weakly correct ORE schemes to strongly correct ones.

While a learner similar to \(L_{\mathsf {Thresh}}\) is able to efficiently PAC learn the concept class \(\mathsf {EncThresh}\), we argue that it cannot do so while preserving differential privacy with respect to its examples. Intuitively, the security of the ORE scheme ensures that essentially the only thing a learner for \(\mathsf {EncThresh}\) can do is output a hypothesis that compares an example to one it already has. We make this intuition precise by giving an algorithm that traces the hypothesis output by any efficient learner back to one of the examples used to produce it. This formalization builds conceptually on the connection between differential privacy and traitor-tracing schemes (see Sect. 1.4), but requires new ideas to adapt to the PAC learning model.

1.3 Order-Revealing Encryption

Motivated by the task of answering range queries on encrypted databases, an order-revealing encryption (ORE) scheme [7, 8] is a special type of symmetric key encryption scheme where it is possible to publicly sort ciphertexts according to the order of the plaintexts. More precisely, the plaintext space of the scheme is the set of integers \([N] = \{1,\dots ,N\}\), and in addition to the private encryption and decryption procedures \(\mathsf {Enc},\mathsf {Dec}\), there is a public comparison procedure \(\mathsf {Comp}\) that takes as input two ciphertexts and reveals the order of the corresponding plaintexts. The notion of best-possible semantic security, defined in Boneh et al. [8], intuitively captures the requirement that, given a collection of ciphertexts, no information about the plaintexts is learned, except for the ordering.

Known Constructions of Order-Revealing Encryption. Relatively few constructions of order-revealing encryption are known, and all constructions are currently based on strong assumptions. Order-revealing encryption can be seen as a special case of 2-input functional encryption, also known as property preserving encryption [45]. In such a scheme, there are several functions \(f_1,...,f_k\), and given two ciphertexts \(c_0,c_1\) encrypting \(m_0,m_1\), it is possible to learn \(f_i(m_0,m_1)\) for all \(i \in [k]\). General multi-input functional encryption schemes can be obtained from indistinguishability obfuscation [30] or multilinear maps [8]. It is also possible to build ORE from single-input functional encryption with function privacy, which means that f is kept secret. Such schemes can be built from regular single-input schemes without function privacy [12], and such single-input schemes can also be built from obfuscation [27] or multilinear maps [28].

It is known that the forms of functional encryption discussed above actually imply obfuscation [3], meaning that all the assumptions from which we can currently build order-revealing encryption imply obfuscation. However, we stress that ORE appears to be much, much weaker than obfuscation or functional encryption: only a single very simple functionality is supported, namely comparison. In particular the functionality does not support evaluating cryptographic primitives on the plaintext, a feature required of essentially all of the interesting applications of obfuscation/functional encryption. Therefore, we conjecture that ORE can actually be based on significantly weaker assumptions. One way or another, it is important to resolve the status of ORE relative to obfuscation and other strong primitives: if ORE can be based on mild assumptions, it would strengthen our impossibility result, and likely lead to more efficient ORE constructions that can actually be used in practice. If ORE actually implies obfuscation or other similarly strong primitives, then ORE could be a path to building more efficient obfuscation with better security. Our work demonstrates that, in addition to having real-world practical motivations, ORE is also an interesting theoretical object.

Unfortunately, the above constructions are not quite sufficient for our purposes. The issue is that our learner needs to work for any distribution on ciphertexts, even distributions whose support includes malformed ciphertexts. Previous constructions only achieve a weak form of correctness, which guarantees that encrypting two messages and then comparing the ciphertexts using \(\mathsf {Comp}\) produces the same result (with overwhelming probability) as comparing the plaintexts directly. This requirement only specifies how \(\mathsf {Comp}\) works on valid ciphertexts, namely actual encryptions of messages. Moreover, correctness is only guaranteed for these messages with overwhelming probability, meaning that even some valid ciphertexts may cause \(\mathsf {Comp}\) to misbehave.

For our learner, this weak form of correctness means that, for some distributions placing significant weight on bad ciphertexts, the comparison procedure is completely useless, and thus the learner fails on these distributions.

We therefore need a stronger correctness guarantee. We need that, for any two ciphertexts, the comparison procedure is consistent with decrypting the two ciphertexts and comparing the resulting plaintexts. This correctness guarantee is meaningful even for improperly generated ciphertexts.
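The gap between the two notions can be checked by brute force on a toy scheme. In the Python sketch below, everything is hypothetical and insecure: the secret key is an additive offset (so ciphertexts reveal far more than order), a ciphertext is any integer, `dec` returns `None` (standing for \(\bot \)) outside the valid range, and the public `comp` simply compares integers. As an assumption of this sketch, we require a strongly correct comparison to output \(\bot \) whenever either input decrypts to \(\bot \). The toy `comp` is consistent on every honestly generated ciphertext but not on a malformed one.

```python
from itertools import product
from typing import Iterable, Optional, Tuple


def cmp3(a: int, b: int) -> int:
    """Three-way comparison: -1 if a < b, 0 if a == b, 1 if a > b."""
    return (a > b) - (a < b)


def find_violation(comp, dec, ciphertexts: Iterable[int]) -> Optional[Tuple[int, int]]:
    """Return a pair (c0, c1) on which comp disagrees with comparing decryptions,
    or None if comp is consistent on every pair drawn from `ciphertexts`."""
    cts = list(ciphertexts)
    for c0, c1 in product(cts, repeat=2):
        m0, m1 = dec(c0), dec(c1)
        expected = None if m0 is None or m1 is None else cmp3(m0, m1)
        if comp(c0, c1) != expected:
            return (c0, c1)
    return None


# Toy, insecure scheme over messages {1, ..., N}: Enc(m) = m + KEY.
N, KEY = 8, 1000


def enc(m: int) -> int:
    return m + KEY


def dec(c: int) -> Optional[int]:
    m = c - KEY
    return m if 1 <= m <= N else None


comp = cmp3  # public comparison that ignores validity

valid = [enc(m) for m in range(1, N + 1)]
print(find_violation(comp, dec, valid))               # None
print(find_violation(comp, dec, valid + [KEY + 50]))  # (1001, 1050)
```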

We note that none of the existing constructions of order-revealing encryption outlined above satisfy this stronger notion. For the obfuscation-based schemes, ciphertexts consist of obfuscated programs. In these schemes, it is easy to describe invalid ciphertexts where the obfuscated program performs incorrectly, causing the comparison procedure to output the wrong result. In the multilinear map-based schemes, the underlying instantiations use current “noisy” multilinear maps, such as [26]. An invalid ciphertext could, for example, have too much noise, which will cause the comparison procedure to behave unpredictably.

Obtaining Strong Correctness.

We first argue that, for all existing ORE schemes, the scheme can be modified so that \(\mathsf {Comp}\) is correct for all valid ciphertexts. We then give a generic conversion from any ORE scheme with weakly correct comparison, including the tweaked existing schemes, into a strongly correct scheme. We simply modify the ciphertext by adding a non-interactive zero-knowledge (NIZK) proof that the ciphertext is well-formed, with the common reference string added to the public comparison key. Then the decryption and comparison procedures check the proof(s), and only output the result (either decryption or comparison) if the proof(s) are valid. The (computational) zero-knowledge property of the NIZK implies that the addition of the proof to the ciphertext does not affect security. Meanwhile, NIZK soundness implies that any ciphertext accepted by the decryption and comparison procedures must be valid, and the weak correctness property of the underlying ORE implies that for valid ciphertexts, decryption and comparison are consistent. The result is that comparisons are consistent with decryption for all ciphertexts, giving strong correctness.
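Structurally, the transformation is a thin wrapper around the base scheme. In the Python sketch below, `prove` and `verify` are hypothetical placeholders for the prover and verifier of a perfectly sound NIZK proof system for the statement "this ciphertext is well formed" (the prover's witness is simplified to the message). No real NIZK is implemented, and the stand-ins in the usage are not zero-knowledge; the toy verifier even checks validity using the secret offset. The point is only that decryption and comparison return `None` (standing for \(\bot \)) unless every proof verifies, so by soundness they only ever act on valid ciphertexts, where weak correctness applies.

```python
from typing import Any, Optional, Tuple

Ciphertext = Tuple[Any, Any]  # (base ciphertext, proof of well-formedness)


class StronglyCorrectORE:
    """Wrap a weakly correct ORE (base_enc, base_dec, base_comp) with proofs of
    well-formedness. `prove`/`verify` stand in for a NIZK prover/verifier; `crs`
    plays the role of the common reference string in the public comparison key."""

    def __init__(self, base_enc, base_dec, base_comp, prove, verify, crs):
        self.base_enc, self.base_dec, self.base_comp = base_enc, base_dec, base_comp
        self.prove, self.verify, self.crs = prove, verify, crs

    def enc(self, m) -> Ciphertext:
        c = self.base_enc(m)
        return (c, self.prove(self.crs, c, m))  # witness simplified to the message m

    def _ok(self, ct: Ciphertext) -> bool:
        c, proof = ct
        return self.verify(self.crs, c, proof)

    def dec(self, ct: Ciphertext) -> Optional[Any]:
        return self.base_dec(ct[0]) if self._ok(ct) else None

    def comp(self, ct0: Ciphertext, ct1: Ciphertext) -> Optional[int]:
        if not (self._ok(ct0) and self._ok(ct1)):
            return None  # reject unless both proofs verify
        return self.base_comp(ct0[0], ct1[0])


# Toy usage with an insecure additive-offset base scheme over {1, ..., N}.
N, KEY = 8, 1000


def cmp3(a: int, b: int) -> int:
    return (a > b) - (a < b)


scheme = StronglyCorrectORE(
    base_enc=lambda m: m + KEY,
    base_dec=lambda c: c - KEY,
    base_comp=cmp3,
    prove=lambda crs, c, m: "proof",
    verify=lambda crs, c, proof: proof == "proof" and 1 <= c - KEY <= N,
    crs=None,
)
a, b = scheme.enc(3), scheme.enc(5)
forged = (KEY + 50, "proof")   # malformed ciphertext carrying a bogus proof
print(scheme.comp(a, b))       # -1
print(scheme.comp(a, forged))  # None
```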

As we need strong correctness for every ciphertext, even hard-to-generate ones, we need the NIZK proofs to have perfect soundness, as opposed to computational soundness. Such NIZK proofs were built in [32].

We note also that the conversion outlined above is not specific to ORE, and applies more generally to functional encryption schemes.

1.4 Related Work

Hardness of Private Query Release. One of the most basic and well-studied statistical tasks in differential privacy is the problem of releasing answers to counting queries. A counting query asks, “what fraction of the records in a dataset D satisfy the predicate q?”. Given a collection of k counting queries \(q_1, \dots , q_k\) from a family \(\mathcal {Q}\), the goal of a query release algorithm is to release approximate answers to these queries while preserving differential privacy. A remarkable result of Blum et al. [6], with subsequent improvements by [21, 23, 33–35, 48], showed that an arbitrary sequence of counting queries can be answered accurately with differential privacy even when k is exponential in the dataset size n. Unfortunately, all of these algorithms that are capable of answering more than \(n^2\) queries are inefficient, running in time exponential in the dimensionality of the data. Moreover, several works [10, 21, 52] have gone on to show that this inefficiency is likely inherent.
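As a point of comparison, the simplest efficient private query-release method adds independent Laplace noise to each answer. A counting query, expressed as a fraction, changes by at most 1/n when one record changes, so k queries have total (\(\ell _1\)) sensitivity k/n, and Laplace noise of scale \(k/(\varepsilon n)\) per answer gives \((\varepsilon , 0)\)-differential privacy. This Python sketch is our illustration, not one of the algorithms of [6]; its per-answer error grows like \(k/(\varepsilon n)\), so it stays accurate only while k is small compared with \(\varepsilon n\), far fewer queries than the inefficient algorithms cited above can handle.

```python
import random
from typing import Callable, List, Sequence, TypeVar

R = TypeVar("R")


def laplace(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) noise as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def release_counting_queries(data: Sequence[R], queries: Sequence[Callable[[R], bool]],
                             eps: float, rng: random.Random) -> List[float]:
    """Answer each counting query q (fraction of records satisfying q) with Laplace noise.

    Changing one record moves every answer by at most 1/n, so the vector of k answers
    has L1 sensitivity k/n; scale k/(eps*n) gives (eps, 0)-differential privacy."""
    n, k = len(data), len(queries)
    scale = k / (eps * n)
    return [sum(1 for r in data if q(r)) / n + laplace(scale, rng) for q in queries]


data = [3, 7, 7, 12, 20, 25, 31, 40] * 25  # n = 200 toy records
queries = [lambda r: r < 10, lambda r: r % 2 == 0]
answers = release_counting_queries(data, queries, eps=1.0, rng=random.Random(0))
```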

These computational lower bounds for private query release rely on a connection between the hardness of private query release and traitor-tracing schemes, which was first observed by Dwork et al. [21]. Traitor-tracing schemes were introduced by Chor, Fiat, and Naor [18] to help digital content producers identify pirates as they illegally redistribute content. Traitor-tracing schemes are conceptually analogous to the example reidentification scheme we use to obtain our hardness result for private learning. Instantiating this connection with the traitor-tracing scheme of Boneh, Sahai, and Waters [9], which relies on certain assumptions in bilinear groups, Dwork et al. [21] exhibited a family of \(2^{\tilde{O}(\sqrt{n})}\) queries for which no efficient algorithm can produce a data structure which could be used to answer all queries in this family. Very recently, Boneh and Zhandry [10] constructed a new traitor-tracing scheme based on indistinguishability obfuscation that yields the same infeasibility result for a family of \(n\cdot 2^{O(d)}\) queries on records of size d. Extending this connection, Ullman [52] constructed a specialized traitor-tracing scheme to show that no efficient private algorithm can answer more than \(\tilde{O}(n^2)\) arbitrary queries that are given as input to the algorithm.

Dwork et al. [21] also showed strong lower bounds against private algorithms for producing synthetic data. Synthetic data generation algorithms produce a new “fake” dataset, whose rows are of the same type as those in the original dataset, with the promise that the answers to some restricted set of queries on the synthetic dataset well-approximate the answers on the original dataset. Assuming the existence of one-way functions, Dwork et al. [21] exhibited an efficiently computable collection of queries for which no efficient private algorithm can produce useful synthetic data. Ullman and Vadhan [53] refined this result to hold even for extremely simple classes of queries.

Nevertheless, the restriction to synthetic data is significant to these results, and they do not rule out the possibility that other privacy-preserving data structures can be used to answer large families of restricted queries. In fact, when the synthetic data restriction is lifted, there are algorithms (e.g. [15, 22, 36, 51]) that answer queries from certain exponentially large families in subexponential time. One can view the problem of synthetic data generation as analogous to proper learning. In both cases, placing natural syntactic restrictions on the output of an algorithm may in fact come at the expense of utility or computational efficiency.

Efficiency of SQ Learning. Feldman and Kanade [24] addressed the question of whether information-theoretically efficient SQ learners – i.e., those making polynomially many queries – could be made computationally efficient. One of their main negative results showed that unless \(\mathbf {NP} = \mathbf {RP}\), there exists a concept class with polynomial query complexity that is not efficiently SQ learnable. Moreover, this concept class is efficiently PAC learnable, which suggests that the restriction to SQ learning can introduce an inherent computational cost.

We show that the concept class \(\mathsf {EncThresh}\) can be learned (inefficiently) with polynomially many statistical queries. By the result of Blum et al. [5] discussed above, any efficient SQ learner can be efficiently simulated by a differentially private algorithm. Since our main result rules out efficient differentially private learners for \(\mathsf {EncThresh}\), it follows that \(\mathsf {EncThresh}\) also separates SQ learners making polynomially many queries from computationally efficient SQ learners.

Corollary 1

(Informal). Assuming the existence of strongly correct ORE, the concept class \(\mathsf {EncThresh}\) is efficiently PAC learnable and has polynomial SQ query complexity, but is not efficiently SQ learnable.

While our proof relies on much stronger hardness assumptions, it reveals ORE as a new barrier to efficient SQ learning. As discussed in more detail in Sect. 3.3, even though their result is about computational hardness, Feldman and Kanade’s choice of a concept class relies crucially on the fact that parities are hard to learn in the SQ model even information-theoretically. By contrast, our concept class \(\mathsf {EncThresh}\) is computationally hard to SQ learn for a reason that appears fundamentally different from the information-theoretic hardness of SQ learning parities.

Learning from Encrypted Data. Several works have developed schemes for training, testing, and classifying machine learning models over encrypted data (e.g. [11, 31]). In a model use case, a client holds a sensitive dataset, and uploads an encrypted version of the dataset to a cloud computing service. The cloud service then trains a model over the encrypted data and produces an encrypted classifier it can send back to the client, ideally without learning anything about the examples it received. The notion of privacy afforded to the individuals in the dataset here is complementary to differential privacy. While the cloud service does not learn anything about the individuals in the dataset, its output might still depend heavily on the data of certain individuals.

In fact, our non-differentially private PAC learner for the class \(\mathsf {EncThresh}\) exactly performs the task of learning over encrypted data, producing a classifier without learning anything about its examples beyond their order (this addresses the difficulty of implementing comparisons from prior work [31]). Thus one can interpret our results as showing that not only are these two notions of privacy for machine learning training complementary, but that they may actually be in conflict. Moreover, the strong correctness guarantee we provide for ORE (which applies more generally to multi-input functional encryption) may help enable the theoretical study of learning from encrypted data in other PAC-style settings.

2 Preliminaries and Definitions

2.1 PAC Learning and Private PAC Learning

For each \(k \in \mathbb {N}\), let \(X_k\) be an instance space (such as \(\{0, 1\}^k\)), where the parameter k represents the size of the elements in \(X_k\). Let \(\mathcal {C}_k\) be a set of boolean functions \(\{c: X_k \rightarrow \{0, 1\}\}\). The sequence \((X_1, \mathcal {C}_1), (X_2, \mathcal {C}_2), \dots \) represents an infinite sequence of learning problems defined over instance spaces of increasing dimension. We will generally suppress the parameter k, and refer to the problem of learning \(\mathcal {C}\) as the problem of learning \(\mathcal {C}_k\) for every k.

A learner L is given examples sampled from an unknown probability distribution \(\mathcal {D}\) over X, where the examples are labeled according to an unknown target concept \(c\in \mathcal {C}\). The learner must select a hypothesis h from a hypothesis class \(\mathcal {H}\) that approximates the target concept with respect to the distribution \(\mathcal {D}\). More precisely,

Definition 1

The generalization error of a hypothesis \(h:X\rightarrow \{0,1\}\) (with respect to a target concept c and distribution \(\mathcal {D}\)) is defined by \(\mathrm{error}_{\mathcal {D}}(c,h)=\Pr _{x \sim \mathcal {D}}[h(x)\ne c(x)].\) If \(\mathrm{error}_{\mathcal {D}}(c,h)\le \alpha \) we say that h is an \(\alpha \) -good hypothesis for c on \(\mathcal {D}\).
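When \(\mathcal {D}\) can be sampled, \(\mathrm{error}_{\mathcal {D}}(c,h)\) can be estimated by the fraction of fresh draws on which h and c disagree. By Hoeffding's inequality, m draws give an estimate within \(\sqrt{\ln (2/\gamma )/(2m)}\) of the true value with probability at least \(1-\gamma \). The Python sketch below (function names are ours) does this for two thresholds under the uniform distribution on \(\{1, \dots , 100\}\), where the true error of \(h = c_{60}\) with respect to \(c = c_{50}\) is exactly 0.1.

```python
import math
import random
from typing import Callable


def estimate_error(c: Callable[[int], int], h: Callable[[int], int],
                   draw: Callable[[random.Random], int], m: int,
                   rng: random.Random) -> float:
    """Fraction of m fresh draws x ~ D with h(x) != c(x): an unbiased estimate of error_D(c, h)."""
    mismatches = 0
    for _ in range(m):
        x = draw(rng)
        mismatches += h(x) != c(x)
    return mismatches / m


def hoeffding_radius(m: int, gamma: float) -> float:
    """Half-width of a (1 - gamma)-confidence interval from Hoeffding's inequality."""
    return math.sqrt(math.log(2.0 / gamma) / (2.0 * m))


def c(x: int) -> int:   # target concept c_50
    return int(x <= 50)


def h(x: int) -> int:   # hypothesis c_60; disagrees with c exactly on {51, ..., 60}
    return int(x <= 60)


def uniform_100(rng: random.Random) -> int:
    return rng.randint(1, 100)


est = estimate_error(c, h, uniform_100, m=20000, rng=random.Random(0))
radius = hoeffding_radius(20000, gamma=1e-6)  # roughly 0.019
```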

Definition 2

(PAC Learning [54]). Algorithm \(L: (X \times \{0, 1\})^n \rightarrow \mathcal {H}\) is an \((\alpha ,\beta )\) -accurate PAC learner for the concept class \(\mathcal {C}\) using hypothesis class \(\mathcal {H}\) with sample complexity n if for all target concepts \(c \in \mathcal {C}\) and all distributions \(\mathcal {D}\) on X, given an input of n samples \(S =((x_1, c(x_1)),\ldots ,(x_n, c(x_n)))\), where each \(x_i\) is drawn i.i.d. from \(\mathcal {D}\), algorithm L outputs a hypothesis \(h\in \mathcal {H}\) satisfying \(\Pr [\mathrm{error}_{\mathcal {D}}(c,h) \le \alpha ] \ge 1-\beta \). The probability here is taken over the random choice of the examples in S and the coin tosses of the learner L.

The learner L is efficient if it runs in time polynomial in the size parameter k, the representation size of the target concept c, and the accuracy parameters \(1/\alpha \) and \(1/\beta \). Note that a necessary (but not sufficient) condition for L to be efficient is that its sample complexity n is polynomial in the learning parameters.

If \(\mathcal {H}\subseteq \mathcal {C}\) then L is called a proper learner. Otherwise, it is called an improper learner.

Kasiviswanathan et al. [37] defined a private learner as a PAC learner that is also differentially private. Recall the definition of differential privacy:

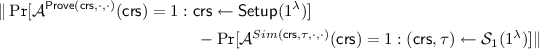

Definition 3

A learner \(L: (X \times \{0, 1\})^n \rightarrow \mathcal {H}\) is \((\varepsilon , \delta )\)-differentially private if for all sets \(T \subseteq \mathcal {H}\), and neighboring sets of examples \(S \sim S'\),
\[\Pr [L(S) \in T] \le e^{\varepsilon } \Pr [L(S') \in T] + \delta .\]

The technical object that we will use to show our hardness results for differential privacy is what we call an example reidentification scheme. It is analogous to the hard-to-sanitize database distributions [21, 53] and re-identifiable database distributions [14] used in prior works to prove hardness results for private query release, but is adapted to the setting of computational learning. In the first step, an algorithm \(\mathsf {Gen_{ex}}\) chooses a concept and a sample S labeled according to that concept. In the second step, a learner L receives either the sample S or the sample \(S_{-i}\) where an appropriately chosen example i is replaced by a junk example, and learns a hypothesis h. Finally, an algorithm \(\mathsf {Trace_{ex}}\) attempts to use h to identify one of the rows given to L. If \(\mathsf {Trace_{ex}}\) succeeds at identifying such a row with high probability, then it must be able to distinguish L(S) from \(L(S_{-i})\), showing that L cannot be differentially private. We formalize these ideas below.

Definition 4

An \((\alpha , \xi )\)-example reidentification scheme for a concept class \(\mathcal {C}\) consists of a pair of algorithms, \((\mathsf {Gen_{ex}}, \mathsf {Trace_{ex}})\) with the following properties.

-

\(\mathsf {Gen_{ex}}(k, n)\) Samples a concept \(c \in \mathcal {C}_k\) and an associated distribution \(\mathcal {D}\). Draws i.i.d. examples

, and a fixed value \(x_0\). Let S denote the labeled sample \(((x_1, c(x_1)), \dots , (x_n, c(x_n))\), and for any index \(i \in [n]\), let \(S_{-i}\) denote the sample with the pair \((x_i, c(x_i))\) replaced with \((x_0, c(x_0))\).

, and a fixed value \(x_0\). Let S denote the labeled sample \(((x_1, c(x_1)), \dots , (x_n, c(x_n))\), and for any index \(i \in [n]\), let \(S_{-i}\) denote the sample with the pair \((x_i, c(x_i))\) replaced with \((x_0, c(x_0))\). -

\(\mathsf {Trace_{ex}}(h)\) Takes state shared with \(\mathsf {Gen_{ex}}\) as well as a hypothesis h and identifies an index in [n] (or \(\bot \) if none is found).

The scheme obeys the following “completeness” and “soundness” criteria on the ability of \(\mathsf {Trace_{ex}}\) to identify an example given to a learner L.

Completeness. A good hypothesis can be traced to some example. That is, for every efficient learner L,

$$\begin{aligned} \Pr [h \text { is } \alpha \text {-good for } c \text { under } \mathcal {D} \ \wedge \ \mathsf {Trace_{ex}}(h) = \bot ] \le \xi , \end{aligned}$$

where h is \(\alpha \)-good if \(\mathrm{error}_{\mathcal {D}}(c, h) \le \alpha \). Here, the probability is taken over \(h \leftarrow L(S)\) and the coins of \(\mathsf {Gen_{ex}}\) and \(\mathsf {Trace_{ex}}\).

Soundness. For every efficient learner L, \(\mathsf {Trace_{ex}}\) cannot trace i from the sample \(S_{-i}\). That is, for all \(i \in [n]\),

$$\begin{aligned} \Pr [\mathsf {Trace_{ex}}(h) = i] \le \xi \end{aligned}$$

for \(h \leftarrow L(S_{-i})\), where the probability is again taken over h and the coins of \(\mathsf {Gen_{ex}}\) and \(\mathsf {Trace_{ex}}\).

We may sometimes relax the completeness condition to hold only under certain restrictions on L’s output (e.g. L is a proper learner or L is a representation learner). In this case, we say that \((\mathsf {Gen_{ex}}, \mathsf {Trace_{ex}})\) is an example reidentification scheme for properly (respectively, representation) learning \(\mathcal {C}\).

Theorem 2

Let \((\mathsf {Gen_{ex}}, \mathsf {Trace_{ex}})\) be an \((\alpha , \xi )\)-example reidentification scheme for a concept class \(\mathcal {C}\). Then for every \(\beta >0\) and polynomial n(k), there is no efficient \((\varepsilon , \delta )\)-differentially private \((\alpha , \beta )\)-PAC learner for \(\mathcal {C}\) using n samples when

$$\begin{aligned} \delta < \frac{1-\beta -\xi }{n} - e^{\varepsilon }\xi . \end{aligned}$$

In a typical setting of parameters, we will take \(\alpha , \beta , \varepsilon = O(1)\) and \(\delta , \xi = o(1/n)\), in which case the inequality in Theorem 2 will be satisfied for sufficiently large n.

Proof

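Suppose instead there were a computationally efficient \((\varepsilon , \delta )\)-differentially private \((\alpha , \beta )\)-PAC learner L for \(\mathcal {C}\) using n samples. Since L outputs an \(\alpha \)-good hypothesis with probability at least \(1-\beta \), completeness implies that \(\mathsf {Trace_{ex}}(L(S)) \ne \bot \) with probability at least \(1-\beta -\xi \). Hence, by averaging over the n possible indices, there exists an \(i \in [n]\) such that \(\Pr [\mathsf {Trace_{ex}}(L(S)) = i] \ge (1-\beta -\xi ) / n\). However, since L is differentially private and S and \(S_{-i}\) differ in a single example,

$$\begin{aligned} \Pr [\mathsf {Trace_{ex}}(L(S_{-i})) = i] \ge e^{-\varepsilon }\left( \frac{1-\beta -\xi }{n} - \delta \right) > \xi , \end{aligned}$$

where the last inequality is the assumed bound on \(\delta \). This contradicts the soundness of \((\mathsf {Gen_{ex}}, \mathsf {Trace_{ex}})\).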
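As a sanity check of the parameter regime, the following Python sketch (function and argument names are our own) chains the three bounds used in the proof: completeness plus averaging gives probability at least \((1-\beta -\xi )/n\) of tracing some fixed i from L(S); \((\varepsilon , \delta )\)-differential privacy transfers this to at least \(e^{-\varepsilon }((1-\beta -\xi )/n - \delta )\) on \(S_{-i}\); and soundness caps the latter at \(\xi \). It only evaluates that arithmetic and says nothing about any particular learner.

```python
import math


def dp_learner_ruled_out(n: int, eps: float, delta: float, beta: float, xi: float) -> bool:
    """Return True if the chained bounds force Pr[Trace_ex(L(S_{-i})) = i] > xi."""
    # Completeness plus averaging over n indices: some i is traced from L(S)
    # with probability at least (1 - beta - xi) / n.
    traced_from_s = (1 - beta - xi) / n
    # (eps, delta)-DP: Pr[E on S_{-i}] >= e^{-eps} * (Pr[E on S] - delta).
    traced_from_s_minus_i = math.exp(-eps) * (traced_from_s - delta)
    # Soundness caps this probability at xi; exceeding it is the contradiction.
    return traced_from_s_minus_i > xi
```

For example, with n = 1000, \(\varepsilon = 1\), \(\beta = 0.1\) and \(\delta = \xi = 10^{-6}\), the lower bound is roughly \(3.3 \times 10^{-4}\), far above \(\xi \), whereas a large \(\delta = 0.01\) makes the bound vacuous.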

2.2 Order-Revealing Encryption

Definition 5

An Order-Revealing Encryption (ORE) scheme is a tuple \((\mathsf {Gen},\mathsf {Enc},\mathsf {Dec},\mathsf {Comp})\) of algorithms where:

-

\(\mathsf {Gen}(1^\lambda ,1^\ell )\) is a randomized procedure that takes as inputs a security parameter \(\lambda \) and plaintext length \(\ell \), and outputs a secret encryption/decryption key \(\mathsf {sk}\) and public parameters \(\mathsf {pars}\).

-

\(\mathsf {Enc}(\mathsf {sk},m)\) is a potentially randomized procedure that takes as input a secret key \(\mathsf {sk}\) and a message \(m\in \{0,1\}^\ell \), and outputs a ciphertext c.

-

\(\mathsf {Dec}(\mathsf {sk},c)\) is a deterministic procedure that takes as input a secret key \(\mathsf {sk}\) and a ciphertext c, and outputs a plaintext message \(m\in \{0,1\}^\ell \) or a special symbol \(\bot \).

-

\(\mathsf {Comp}(\mathsf {pars},c_0,c_1)\) is a deterministic procedure that “compares” two ciphertexts, outputting either “\(>\)”, “\(<\)”, “\(=\)”, or \(\bot \).

Correctness. An ORE scheme must satisfy two separate correctness requirements:

-

Correct Decryption: This is the standard notion of correctness for an encryption scheme, which says that decryption succeeds. We will only consider strongly correct decryption, which requires that decryption always succeeds. For all security parameters \(\lambda \), message lengths \(\ell \), and messages \(m\in \{0,1\}^\ell \),

$$\begin{aligned} \Pr [\mathsf {Dec}(\mathsf {sk},\;\mathsf {Enc}(\mathsf {sk},m)) = m: (\mathsf {sk},\mathsf {pars})\leftarrow \mathsf {Gen}(1^\lambda ,1^\ell )] = 1. \end{aligned}$$

-

Correct Comparison: We require that the comparison function succeeds. We will consider two notions, namely strong and weak. In order to define these notions, we first define two auxiliary functions:

-

\(\mathsf {Comp}_{plain}(m_0,m_1)\) is just the plaintext comparison function. That is, for \(m_0<m_1\), \(\mathsf {Comp}_{plain}(m_0,m_1)=\)“\(<\)”, \(\mathsf {Comp}_{plain}(m_1,m_0)=\)“\(>\)”, and \(\mathsf {Comp}_{plain}(m_0,m_0)=\)“\(=\)”.

-

\(\mathsf {Comp}_{ciph}(\mathsf {sk},c_0,c_1)\) is a ciphertext comparison function which uses the secret key. It first computes \(m_b=\mathsf {Dec}(\mathsf {sk},c_b)\) for \(b=0,1\). If either \(m_0=\bot \) or \(m_1=\bot \) (in other words, if either decryption failed), then \(\mathsf {Comp}_{ciph}\) outputs \(\bot \). If both \(m_0,m_1\ne \bot \), then the output is \(\mathsf {Comp}_{plain}(m_0,m_1)\).

Now we define our comparison correctness notions:

-

Weakly Correct Comparison: This informally requires that comparison is consistent with encryption. For all security parameters \(\lambda \), message lengths \(\ell \), and messages \(m_0,m_1\in \{0,1\}^\ell \),

$$\begin{aligned} \Pr \left[ \begin{array}{c}\mathsf {Comp}(\mathsf {pars},c_0,c_1)\\ =\mathsf {Comp}_{plain}(m_0,m_1)\end{array}:\begin{array}{c}(\mathsf {sk},\mathsf {pars})\leftarrow \mathsf {Gen}(1^\lambda ,1^\ell )\\ c_b\leftarrow \mathsf {Enc}(\mathsf {sk},m_b)\end{array}\right] =1. \end{aligned}$$In particular, for correctly generated ciphertexts, \(\mathsf {Comp}\) never outputs \(\bot \).

-

Strongly Correct Comparison: This informally requires that comparison is consistent with decryption. For all security parameters \(\lambda \), message lengths \(\ell \), and ciphertexts \(c_0,c_1\),

$$\begin{aligned} \Pr \left[ \begin{array}{c}\mathsf {Comp}(\mathsf {pars},c_0,c_1)\\ =\mathsf {Comp}_{ciph}(\mathsf {sk},c_0,c_1)\end{array}:(\mathsf {sk},\mathsf {pars})\leftarrow \mathsf {Gen}(1^\lambda ,1^\ell )\right] =1. \end{aligned}$$
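To make \(\mathsf {Comp}_{plain}\), \(\mathsf {Comp}_{ciph}\), and the two comparison notions concrete, here is a deliberately insecure toy scheme in Python (our own illustration, not a construction from this paper): encryption adds a secret offset, and \(\mathsf {pars}\) publishes the range of valid ciphertexts, which leaks the key entirely. Because its \(\mathsf {Comp}\) outputs \(\bot \) exactly on the ciphertexts where \(\mathsf {Dec}\) fails, it agrees with \(\mathsf {Comp}_{ciph}\) on every pair of integers, i.e. it has strongly correct comparison. The variant without the range check (here called comp_weak, a hypothetical name) is still weakly correct but not strongly correct.

```python
import random
from typing import Optional, Tuple

BOT = None  # stands in for the special symbol ⊥

SecretKey = Tuple[int, int]  # (offset, N)
Params = Tuple[int, int]     # (smallest valid ciphertext, largest valid ciphertext)


def comp_plain(m0: int, m1: int) -> str:
    return "<" if m0 < m1 else (">" if m0 > m1 else "=")


def gen(ell: int, rng: random.Random) -> Tuple[SecretKey, Params]:
    """Toy Gen: a random offset; pars publishes the valid range (so it leaks sk)."""
    n_msgs = 2 ** ell
    offset = rng.randrange(0, 2 ** 16)
    return (offset, n_msgs), (offset + 1, offset + n_msgs)


def enc(sk: SecretKey, m: int) -> int:
    offset, _ = sk
    return m + offset


def dec(sk: SecretKey, c: int) -> Optional[int]:
    offset, n_msgs = sk
    m = c - offset
    return m if 1 <= m <= n_msgs else BOT  # plaintext space is [N] = {1, ..., N}


def comp(pars: Params, c0: int, c1: int) -> Optional[str]:
    lo, hi = pars
    if not (lo <= c0 <= hi and lo <= c1 <= hi):
        return BOT  # exactly the ciphertexts on which Dec fails
    return comp_plain(c0, c1)  # shifting by the offset preserves order


def comp_weak(pars: Params, c0: int, c1: int) -> Optional[str]:
    # Consistent with encryption, but not with decryption on invalid ciphertexts.
    return comp_plain(c0, c1)


def comp_ciph(sk: SecretKey, c0: int, c1: int) -> Optional[str]:
    m0, m1 = dec(sk, c0), dec(sk, c1)
    if m0 is BOT or m1 is BOT:
        return BOT
    return comp_plain(m0, m1)
```

The only difference between the two comparators is the range check, which illustrates why strong correctness constrains behavior on ciphertexts that are not valid encryptions of any message.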

-

Security. For security, we will consider a relaxation of the “best possible” security notion of Boneh et al. [8]. Namely, we only consider static adversaries that submit all queries at once. “Best possible” security is a modification of the standard notion of CPA security for symmetric key encryption to block trivial attacks. That is, since the comparison function always leaks the order of the plaintexts, the left and right sets of challenge messages must have the same order. In our relaxation where all challenge messages are queried at once, we can therefore assume without loss of generality that the left and right sequences of messages are sorted in ascending order. For simplicity, we will also disallow the adversary from querying on the same message more than once. This gives the following definition:

Definition 6

An ORE scheme \((\mathsf {Gen},\mathsf {Enc},\mathsf {Dec},\mathsf {Comp})\) is statically secure if, for all efficient adversaries \(\mathcal {A}\), \(|\Pr [W_0]-\Pr [W_1]|\) is negligible, where \(W_b\) is the event that \(\mathcal {A}\) outputs 1 in the following experiment:

-

\(\mathcal {A}\) produces two message sequences \(m_1^{(L)}<m_2^{(L)}<\dots <m_q^{(L)}\) and \(m_1^{(R)}<m_2^{(R)}<\dots <m_q^{(R)}\)

-

The challenger runs \((\mathsf {sk},\mathsf {pars})\leftarrow \mathsf {Gen}(1^\lambda ,1^\ell )\). It then responds to \(\mathcal {A}\) with \(\mathsf {pars}\), as well as \(c_1,\dots ,c_q\) where

$$c_i={\left\{ \begin{array}{ll} \mathsf {Enc}(\mathsf {sk},m_i^{(L)})&{}\text {if }b=0\\ \mathsf {Enc}(\mathsf {sk},m_i^{(R)})&{}\text {if }b=1 \end{array}\right. }$$

-

\(\mathcal {A}\) outputs a guess \(b'\) for b.

We also consider a weaker definition, which only allows the sequences \(m_i^{(L)}\) and \(m_i^{(R)}\) to differ at a single point:

Definition 7

An ORE scheme \((\mathsf {Gen},\mathsf {Enc},\mathsf {Dec},\mathsf {Comp})\) is statically single-challenge secure if, for all efficient adversaries \(\mathcal {A}\), \(|\Pr [W_0]-\Pr [W_1]|\) is negligible, where \(W_b\) is the event that \(\mathcal {A}\) outputs 1 in the following experiment:

-

\(\mathcal {A}\) produces a sequence of messages \(m_1<m_2<\dots <m_q\), and challenge messages \(m_L,m_R\) such that \(m_i<m_L<m_R<m_{i+1}\) for some \(i\in [q-1]\).

-

The challenger runs \((\mathsf {sk},\mathsf {pars})\leftarrow \mathsf {Gen}(1^\lambda ,1^\ell )\). It then responds to \(\mathcal {A}\) with \(\mathsf {pars}\), as well as \(c_1,\dots ,c_q\) where \(c_i=\mathsf {Enc}(\mathsf {sk},m_i)\) and

$$c^*={\left\{ \begin{array}{ll} \mathsf {Enc}(\mathsf {sk},m_L)&{}\text {if }b=0\\ \mathsf {Enc}(\mathsf {sk},m_R)&{}\text {if }b=1 \end{array}\right. }$$

-

\(\mathcal {A}\) outputs a guess \(b'\) for b.

We now argue that these two definitions are equivalent up to a polynomial loss in security (the hybrid argument below loses a factor of at most 2q in the distinguishing advantage).

Theorem 3

\((\mathsf {Gen},\mathsf {Enc},\mathsf {Dec},\mathsf {Comp})\) is statically secure if and only if it is statically single-challenge secure.

Proof

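We prove that single-challenge security implies many-challenge security through a sequence of hybrids. Each hybrid will only differ in the messages \(m_i\) that are encrypted, and each adjacent hybrid will only differ in a single message. The first hybrid (Hybrid 0) will encrypt \(m_i^{(L)}\), and the last hybrid (Hybrid 2q) will encrypt \(m_i^{(R)}\). Thus, by applying single-challenge security to each adjacent pair of hybrids, we conclude that the first and last hybrids are indistinguishable, which shows many-challenge security. The converse is immediate: the single-challenge experiment is the special case of the static experiment in which the two sequences are \(m_1,\dots ,m_q\) with \(m_L\) or \(m_R\), respectively, inserted into the same gap, so they differ in a single position.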

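Hybrid j for \(j\le q\). In this hybrid, \(m_i=\min (m_i^{(L)},m_i^{(R)})\) for \(i\le j\) and \(m_i=m_i^{(L)}\) for \(i>j\).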

First, notice that all the \(m_i\) are in order since both sequences \(m_i^{(L)}\) and \(m_i^{(R)}\) are in order. Second, the only difference between Hybrid \((j-1)\) and Hybrid j is that \(m_j=m_j^{(L)}\) in Hybrid \((j-1)\) and \(m_j=\min (m_j^{(L)},m_j^{(R)})\) in Hybrid j. Thus, single-challenge security implies that each adjacent hybrid is indistinguishable. Moreover, for j where \(m_j^{(L)}<m_j^{(R)}\), the two hybrids are actually identical.

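Hybrid j for \(j> q\). In this hybrid, \(m_i=\min (m_i^{(L)},m_i^{(R)})\) for \(i\le 2q-j\) and \(m_i=m_i^{(R)}\) for \(i>2q-j\).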

Again, notice that all the \(m_i\) are in order. Moreover, for each index \(j\in [q]\), the only difference between Hybrid \((2q-j)\) and Hybrid \((2q-j+1)\) is that \(m_{j}=\min (m_j^{(L)},m_j^{(R)})\) in Hybrid \((2q-j)\) and \(m_j=m_j^{(R)}\) in Hybrid \((2q-j+1)\). Thus, single-challenge security implies that each adjacent hybrid is indistinguishable. Moreover, for j where \(m_j^{(L)}>m_j^{(R)}\), the two hybrids are actually identical.
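The hybrid sequence can be generated mechanically. The Python sketch below assumes that Hybrid j (for \(j\le q\)) encrypts \(\min (m_i^{(L)},m_i^{(R)})\) in the first j positions and \(m_i^{(L)}\) elsewhere, and that Hybrid j (for \(j>q\)) encrypts the minimum in the first \(2q-j\) positions and \(m_i^{(R)}\) elsewhere. It lets one check the two facts the proof relies on: every hybrid is strictly increasing, and adjacent hybrids differ in at most one position.

```python
from typing import List, Sequence


def hybrids(left: Sequence[int], right: Sequence[int]) -> List[List[int]]:
    """Return Hybrid 0, ..., Hybrid 2q for sorted left/right message sequences."""
    q = len(left)
    mins = [min(a, b) for a, b in zip(left, right)]
    out = []
    for j in range(2 * q + 1):
        if j <= q:
            # First j positions use the pointwise minimum, the rest use the left sequence.
            out.append(mins[:j] + list(left[j:]))
        else:
            # First 2q - j positions use the minimum, the rest use the right sequence.
            k = 2 * q - j
            out.append(mins[:k] + list(right[k:]))
    return out
```

For instance, with left = [1, 5, 9, 12] and right = [3, 4, 10, 20], Hybrid 4 is the pointwise minimum [1, 4, 9, 12], and several adjacent hybrids coincide, matching the remark that half of the steps are trivial.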

3 The Concept Class \(\mathsf {EncThresh}\) and Its Learnability

Let \((\mathsf {Gen}, \mathsf {Enc}, \mathsf {Dec}, \mathsf {Comp})\) be a statically secure ORE scheme with strongly correct comparison. We define a concept class \(\mathsf {EncThresh}\), which intuitively captures the class of threshold functions where examples are encrypted under the ORE scheme. Throughout this discussion, we will take \(N = 2^\ell \) and regard the plaintext space of the ORE scheme to be \([N] = \{1, \dots , N\}\). Ideally we would like, for each threshold \(t \in [N+1]\) and each \((\mathsf {sk}, \mathsf {pars}) \leftarrow \mathsf {Gen}(1^\lambda , 1^\ell )\), to define a concept

$$\begin{aligned} f_{t, \mathsf {sk}}(c) = {\left\{ \begin{array}{ll} 1 & \text {if } \mathsf {Dec}(\mathsf {sk}, c) < t\\ 0 & \text {otherwise.} \end{array}\right. } \end{aligned}$$

However, we need to make a few technical modifications to ensure that \(\mathsf {EncThresh}\) is efficiently PAC learnable.

1. In order for the learner to be able to use the comparison function \(\mathsf {Comp}\), it must be given the public parameters \(\mathsf {pars}\) generated by the ORE scheme. We address this in the natural way by attaching a set of public parameters to each example. Moreover, we define \(\mathsf {EncThresh}\) so that each concept is supported on the single set of public parameters that corresponds to the secret key used for encryption and decryption.

2. Only a subset of binary strings form valid \((\mathsf {sk}, \mathsf {pars})\) pairs that are actually produced by \(\mathsf {Gen}\) in the ORE scheme. To represent concepts, we need a reasonable encoding scheme for these valid pairs. The encoding scheme we choose is the polynomial-length sequence of random coin tosses used by the algorithm \(\mathsf {Gen}\) to produce \((\mathsf {sk}, \mathsf {pars})\).

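We now formally describe the concept class \(\mathsf {EncThresh}\). Each concept is parameterized by a string r, representing the coin tosses of the algorithm \(\mathsf {Gen}\), and a threshold \(t \in [N+1]\) for \(N = 2^\ell \). In what follows, let \((\mathsf {sk}^r, \mathsf {pars}^r)\) be the keys output by \(\mathsf {Gen}(1^\lambda , 1^\ell )\) when run on the sequence of coin tosses r. Let

$$\begin{aligned} f_{t, r}(\mathsf {pars}, c) = {\left\{ \begin{array}{ll} 1 & \text {if } \mathsf {pars}= \mathsf {pars}^r \text { and } \mathsf {Dec}(\mathsf {sk}^r, c) < t\\ 0 & \text {otherwise,} \end{array}\right. } \end{aligned}$$

and let \(\mathsf {EncThresh}_k = \{f_{t, r}\}\) consist of all such concepts.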

Notice that given t and r, the concept \(f_{t, r}\) can be efficiently evaluated. The description length k of the instance space \(X_k = \{0, 1\}^k\) is polynomial in the security parameter \(\lambda \) and plaintext length \(\ell \).

3.1 An Efficient PAC Learner for \(\mathsf {EncThresh}\)

We argue that \(\mathsf {EncThresh}\) is efficiently PAC learnable by formalizing the argument from the introduction. Because we need to include the ORE public parameters in each example, the PAC learner L (Algorithm 1) for \(\mathsf {EncThresh}\) actually works in two stages. In the first stage, L determines whether there is significant probability mass on examples corresponding to some public parameters \(\mathsf {pars}\). Recall that each concept in \(\mathsf {EncThresh}\) is supported on exactly one such set of parameters. If there is no significant mass on any \(\mathsf {pars}\), then the all-zeroes hypothesis is a good hypothesis. On the other hand, if there is a heavy set of parameters, the learner L applies \(\mathsf {Comp}\) using those parameters to learn a good comparator.
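Since Algorithm 1 is not reproduced here, the following is only a hypothetical Python sketch of one learner consistent with the analysis in the proof of Theorem 4, not necessarily Algorithm 1 itself. It treats \(\mathsf {Comp}\) as a black box supplied by the caller. Every positive example carries \(\mathsf {pars}^r\) (each concept is supported on a single set of public parameters), so the sketch reads the parameters off a positive example, keeps the largest positive ciphertext \(c^*\) under \(\mathsf {Comp}\), and outputs the hypothesis "parameters match and \(\mathsf {Comp}(\mathsf {pars}, c, c^*)\) is “\(<\)” or “\(=\)”". With strongly correct comparison this hypothesis is never positive where the target is negative, which is the one-sided error property used in the proof.

```python
from typing import Any, Callable, List, Optional, Tuple

# Hypothetical interface: Comp(pars, c0, c1) -> "<", ">", "=", or None (for ⊥).
CompFn = Callable[[Any, Any, Any], Optional[str]]
LabeledExample = Tuple[Any, Any, int]  # (pars, ciphertext, label)


def learn_encthresh(sample: List[LabeledExample], comp: CompFn) -> Callable[[Tuple[Any, Any]], int]:
    """Sketch of a one-sided-error learner for EncThresh (not necessarily Algorithm 1)."""
    positives = [(pars, c) for pars, c, label in sample if label == 1]
    if not positives:
        return lambda x: 0  # no positive example seen: output the all-zeroes hypothesis
    # Every positive example of f_{t,r} carries pars^r, so read pars off any of them.
    pars_star, c_star = positives[0]
    for _, c in positives[1:]:
        if comp(pars_star, c, c_star) == ">":
            c_star = c  # keep the largest positive ciphertext under Comp

    def h(x: Tuple[Any, Any]) -> int:
        pars, c = x
        # Positive iff the parameters match and c decrypts to at most Dec(c_star).
        # With strongly correct comparison, Comp returns ⊥ (None) on invalid c.
        return int(pars == pars_star and comp(pars_star, c, c_star) in ("<", "="))

    return h
```

Keeping the largest positive example (rather than, say, the midpoint of positive and negative examples) is what guarantees \(h \le f_{t,r}\) pointwise.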

Theorem 4

Let \(\alpha , \beta > 0\). There exists a PAC learning algorithm L for the concept class \(\mathsf {EncThresh}\) achieving error \(\alpha \) and confidence \(1-\beta \). Moreover, L is efficient (running in time polynomial in the parameters \(k, 1/\alpha , \log (1/\beta )\)).

Proof

Fix a target concept \(f_{t, r} \in \mathsf {EncThresh}_k\) and a distribution \(\mathcal {D}\) on examples. First observe that the learner L always outputs a hypothesis with one-sided error, i.e. we always have \(h \le f_{t, r}\) pointwise. Also observe that \(f_{t',r}\le f_{t,r}\) pointwise for any \(t'<t\). These both follow from the strong correctness of the ORE scheme. Let \((\mathsf {sk}^r, \mathsf {pars}^r)\) denote the keys output by \(\mathsf {Gen}(1^\lambda , 1^\ell )\) when run on the sequence of coin tosses r. Let \({\text {POS}}\) denote the set of examples \((\mathsf {pars}, c)\) on which \(f_{t, r}(\mathsf {pars}, c) = 1\). We divide the analysis of the learner into two cases based on the weight \(\mathcal {D}\) places on \({\text {POS}}\).

Case 1: \(\mathcal {D}\) places weight at least \(\alpha \) on \({\text {POS}}\). Define \(\hat{t} \in [N+1]\) as the largest \(\hat{t} \le t\) such that \(\mathrm{error}_{\mathcal {D}}(f_{\hat{t}, r}, f_{t, r}) \ge \alpha \). Such a \(\hat{t}\) is guaranteed to exist since \(f_{0,r}\) is the all-zeros function, and therefore \(\mathrm{error}_{\mathcal {D}}(f_{0,r},f_{t,r})\) is equal to the weight \(\mathcal {D}\) places on \({\text {POS}}\), which is at least \(\alpha \).

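Suppose \(f_{\hat{t}+1,r}\le h\) pointwise. Since h has one-sided error (that is, \(h\le f_{t,r}\) pointwise), we have \(\mathrm{error}_{\mathcal {D}}(f_{\hat{t}+1,r},f_{t,r})=\mathrm{error}_{\mathcal {D}}(f_{\hat{t}+1,r},h)+\mathrm{error}_{\mathcal {D}}(h,f_{t,r})\), or

$$\begin{aligned} \mathrm{error}_{\mathcal {D}}(h, f_{t,r}) \le \mathrm{error}_{\mathcal {D}}(f_{\hat{t}+1,r}, f_{t,r}) < \alpha , \end{aligned}$$

where the last inequality holds by the maximality of \(\hat{t}\) (note that \(\hat{t} < t\), since \(\mathrm{error}_{\mathcal {D}}(f_{t,r}, f_{t,r}) = 0 < \alpha \), so \(\hat{t}+1 \le t\)).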

Therefore, it suffices to show that \(f_{\hat{t}+1,r}\le h\) with probability at least \(1-\beta \). This is guaranteed as long as L receives a sample \((\mathsf {pars}^r, c_i, 1)\) with \(\hat{t} \le \mathsf {Dec}(\mathsf {sk}^r, c_i) < t\). In other words, \(f_{t,r}(\mathsf {pars}^r,c_i)=1\) and \(f_{\hat{t},r}(\mathsf {pars}^r,c_i)=0\). Since \(f_{\hat{t},r}\le f_{t,r}\) pointwise, such samples exactly account for the error between \(f_{\hat{t},r}\) and \(f_{t,r}\). Thus since \(\mathrm{error}_{\mathcal {D}}(f_{\hat{t}, r}, f_{t, r}) \ge \alpha \), for each i it must be that \(\hat{t} \le \mathsf {Dec}(\mathsf {sk}^r, c_i) < t\) with probability at least \(\alpha \). The learner L therefore receives some sample \(c_i\) with \(\hat{t} \le \mathsf {Dec}(\mathsf {sk}^r, c_i) < t\) with probability at least \(1- (1-\alpha )^n \ge 1-\beta \) (since we took \(n \ge \log (1/\beta )/\alpha \)).

Case 2: \(\mathcal {D}\) places less than \(\alpha \) weight on \({\text {POS}}\). Then the identically zero hypothesis has error at most \(\alpha \), so the claim holds because \(0 \le h \le f_{t, r}\).
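The last step of Case 1 uses \((1-\alpha )^n \le e^{-\alpha n} \le \beta \) whenever \(n \ge \log (1/\beta )/\alpha \), reading log as the natural logarithm. A small Python check of that arithmetic (helper names are our own):

```python
import math


def sample_size(alpha: float, beta: float) -> int:
    """Smallest integer n with n >= ln(1/beta) / alpha."""
    return math.ceil(math.log(1 / beta) / alpha)


def miss_probability(alpha: float, n: int) -> float:
    """Chance that n i.i.d. draws all avoid a region of probability mass alpha."""
    return (1 - alpha) ** n
```

For example, \(\alpha = 0.1\) and \(\beta = 0.05\) give n = 30 samples.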

3.2 Hardness of Privately Learning \(\mathsf {EncThresh}\)

We now prove the hardness of privately learning \(\mathsf {EncThresh}\) by constructing an example reidentification scheme for this concept class. Recall that an example reidentification scheme consists of two algorithms, \(\mathsf {Gen_{ex}}\), which selects a distribution, a concept, and examples to give to a learner, and \(\mathsf {Trace_{ex}}\) which attempts to identify one of the examples the learner received.

Our example reidentification scheme yields a hard distribution even for weak-learning, where the error parameter \(\alpha \) is taken to be inverse-polynomially close to 1 / 2.

Theorem 5

Let \(\gamma (n)\) and \(\xi (n)\) be noticeable functions. Let \((\mathsf {Gen}, \mathsf {Enc}, \mathsf {Dec}, \mathsf {Comp})\) be a statically single-challenge secure ORE scheme. Then there exists an (efficient) \((\alpha = \frac{1}{2} - \gamma , \xi )\)-example reidentification scheme \((\mathsf {Gen_{ex}}, \mathsf {Trace_{ex}})\) for the concept class \(\mathsf {EncThresh}\).

We start with an informal description of the scheme \((\mathsf {Gen_{ex}}, \mathsf {Trace_{ex}})\). The algorithm \(\mathsf {Gen_{ex}}\) sets up the parameters of the ORE scheme, chooses the “middle” threshold concept corresponding to \(t = N/2\), and sets the distribution on examples to be encryptions of uniformly random messages (together with the correct public parameters needed for comparison). Let \(m_1 < m_2 < \dots < m_n\) denote the sorted sequence of messages whose encryptions make up the sample produced by \(\mathsf {Gen_{ex}}\) (with overwhelming probability, they are indeed distinct). We can thus break the plaintext space up into buckets of the form \(B_i = [m_i, m_{i+1})\). Suppose L is a (weak) learner that produces a hypothesis h with advantage \(\gamma \) over random guessing. Such a hypothesis h must be able to distinguish encryptions of messages \(m < t\) from encryptions of messages \(m \ge t\) with advantage \(\gamma \). Thus, by a telescoping argument over the n pairs of adjacent buckets, there must be a pair of adjacent buckets \(B_{i-1}, B_i\) for which h can distinguish encryptions of messages from \(B_{i-1}\) from encryptions from \(B_i\) with advantage \(\frac{\gamma }{n}\).

This observation leads to a natural definition for \(\mathsf {Trace_{ex}}\): locate a pair of adjacent buckets \(B_{i-1}, B_i\) that h distinguishes, and output the identity i of the example separating those buckets. Completeness of the resulting scheme, i.e. the fact that some example is reidentified when L succeeds, follows immediately from the preceding discussion. We argue soundness, i.e. that an example absent from L’s sample is not identified, by reducing to the static security of the ORE scheme. The intuition is that if L is not given example i, then it should not be able to distinguish encryptions from bucket \(B_{i-1}\) from encryptions from bucket \(B_{i}\).

To make the security reduction somewhat more precise, suppose for the sake of contradiction that there is an efficient algorithm L that violates the soundness of \((\mathsf {Gen_{ex}}, \mathsf {Trace_{ex}})\) with noticeable probability \(\xi \). That is, there is some i such that even without example i, the algorithm L manages to produce (with probability \(\xi \)) a hypothesis h that distinguishes \(B_{i-1}\) from \(B_{i}\). A natural first attempt to violate the security of the ORE is to construct an adversary that challenges on the message sequences \(m_1 < \dots < m_{i-1} < m_i^{(L)} < m_{i+1} < \dots < m_n\) and \(m_1 < \dots < m_{i-1} < m_i^{(R)} < m_{i+1} < \dots < m_n\), where \(m_i^{(L)}\) is randomly chosen from \(B_{i-1}\) and \(m_i^{(R)}\) is randomly chosen from \(B_{i}\). Then if h can distinguish \(B_{i-1}\) from \(B_i\), the adversary can distinguish the two sequences. Unfortunately, this approach fails for a somewhat subtle reason. The hypothesis h is only guaranteed to distinguish \(B_{i-1}\) from \(B_{i}\) with probability \(\xi \). If h fails to distinguish the buckets (or distinguishes them in the opposite direction), then the adversary’s advantage is lost.

To overcome this issue, we instead rely on the security of the ORE for sequences that differ on two messages. For the “left” challenge, our adversary samples two messages from the same randomly chosen bucket, \(B_{i-1}\) or \(B_{i}\) (in addition to requesting encryptions of \(m_1, \dots , m_{i-1}, m_{i+1}, \dots , m_n\)). For the “right” challenge, it samples one message from each bucket \(B_{i-1}\) and \(B_i\). Let \(c^0\) and \(c^1\) be the ciphertexts corresponding to the challenge messages. If h agrees on \(c^0\) and \(c^1\), then this suggests the messages are from the same bucket, and the adversary should guess “left”. On the other hand, if h disagrees on \(c^0\) and \(c^1\), then the adversary should guess “right”. If h distinguishes the buckets \(B_{i-1}\) and \(B_i\), this adversary does strictly better than random guessing. On the other hand, even if h fails to distinguish the buckets, the adversary does at least as well as random guessing. So overall, it still has a noticeable advantage in the ORE security game.

We now give the formal proof of Theorem 5.

Proof

We construct an example reidentification scheme for \(\mathsf {EncThresh}\) as follows. The algorithm \(\mathsf {Gen_{ex}}\) fixes the threshold \(t = N/2\) and samples random coins r for \(\mathsf {Gen}\), yielding a concept \(f_{t, r}\). Let \(\mathcal {D}\) be the distribution \((\mathsf {pars}^r, \mathsf {Enc}(\mathsf {sk}^r, m))\) for uniformly random \(m \in [N]\). Let \(m_1', \dots , m_n' \in [N]\) be i.i.d. uniformly random messages, and let \(m_1 \le \dots \le m_n\) be the result of sorting the \(m_i'\). Let \(m_0 = 0\) and \(m_{n+1} = N\). Since \(n = \mathrm {poly}(k) \ll N\), these random messages will be well-spaced. In particular, with overwhelming probability, \(|m_{i+1} - m_i| > 1\) for every i, so we assume this is the case in what follows. \(\mathsf {Gen_{ex}}\) then sets the samples to be \((x_1 = (\mathsf {pars}^r, \mathsf {Enc}(\mathsf {sk}^r, m_1')), \dots , x_n = (\mathsf {pars}^r, \mathsf {Enc}(\mathsf {sk}^r, m_n')))\). Let \(x_0 = (\mathsf {pars}^r, \mathsf {Enc}(\mathsf {sk}^r, m_0))\) be a “junk” example.

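The algorithm \(\mathsf {Trace_{ex}}\) creates buckets \(B_i = [m_i, m_{i+1})\). For each i, let

$$\begin{aligned} p_i = \Pr \left[ h(\mathsf {pars}^r, \mathsf {Enc}(\mathsf {sk}^r, m)) = 1\right] , \end{aligned}$$

where m is uniformly random in \(B_i\) and the probability is also over the coins of \(\mathsf {Enc}\).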

By sampling random choices of m in each bucket, \(\mathsf {Trace_{ex}}\) can efficiently compute a good estimate \(\hat{p}_i \approx p_i\) for each i (Lemma 1). It then accuses the least i for which \(\hat{p}_{i-1} - \hat{p}_{i} \ge \frac{\gamma }{n}\), and \(\bot \) if none is found.
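The tracing rule can be sketched in Python as follows. Because \(\mathsf {Trace_{ex}}\) shares state with \(\mathsf {Gen_{ex}}\), it knows \(\mathsf {sk}^r\) and can evaluate h on fresh encryptions of any message; the sketch abstracts this as a caller-supplied function h_on_message(m) standing for \(h(\mathsf {pars}^r, \mathsf {Enc}(\mathsf {sk}^r, m))\). The function names, the endpoint list, and the per-bucket sample count are assumptions of this sketch.

```python
import random
from typing import Callable, List, Optional


def trace_ex(
    h_on_message: Callable[[int], int],  # m -> h(pars^r, Enc(sk^r, m)); Trace_ex knows sk^r
    endpoints: List[int],                # m_0 < m_1 < ... < m_{n+1}
    gamma: float,
    k_samples: int,
    rng: random.Random,
) -> Optional[int]:
    n = len(endpoints) - 2
    p_hat = []
    for i in range(n + 1):  # buckets B_0, ..., B_n
        lo, hi = endpoints[i], endpoints[i + 1]  # B_i = [m_i, m_{i+1})
        hits = sum(h_on_message(rng.randrange(lo, hi)) for _ in range(k_samples))
        p_hat.append(hits / k_samples)
    for i in range(1, n + 1):
        if p_hat[i - 1] - p_hat[i] >= gamma / n:
            return i  # accuse the least index with a large gap
    return None  # stands in for ⊥
```

For instance, with endpoints [0, 10, 20, 30, 40, 50] and a hypothesis that is positive exactly on messages below 20 = \(m_2\), the estimates drop from 1 to 0 between \(B_1\) and \(B_2\), and index 2 is accused.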

Lemma 1

Let \(K = \frac{8n^2}{\gamma ^2}\log (9n/\xi )\). For each \(i = 0, \dots , n\), let

$$\begin{aligned} \hat{p}_i = \frac{1}{K}\sum _{j=1}^{K} h(x_j), \end{aligned}$$

where \(x_j = (\mathsf {pars}^r, \mathsf {Enc}(\mathsf {sk}^r, \tilde{m}_j))\) for i.i.d. uniformly random \(\tilde{m}_j \in B_i\). Then \(|\hat{p}_i - p_i| \le \frac{\gamma }{4n}\) for every i with probability at least \(1-\xi /4\).

Proof

By a Chernoff bound, the probability that any given \(\hat{p}_i\) deviates from \(p_i\) by more than \(\frac{\gamma }{4n}\) is at most \(2\exp (-K\gamma ^2/8n^2) \le \frac{\xi }{4(n+1)}\). The lemma follows by a union bound.
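The constants in Lemma 1 can be checked numerically. The Python sketch below (helper names are our own) uses the Hoeffding form \(2\exp (-2K\epsilon ^2)\) with \(\epsilon = \gamma /(4n)\), which equals \(2\exp (-K\gamma ^2/8n^2)\), and reads log as the natural logarithm. Plugging in K gives exactly \(2\xi /(9n)\), and \(2\xi /(9n) \le \xi /(4(n+1))\) holds precisely when \(n \ge 8\), so the final inequality in the proof is checked here for such n.

```python
import math


def lemma1_k(n: int, gamma: float, xi: float) -> int:
    """K = (8 n^2 / gamma^2) * ln(9 n / xi), rounded up to an integer."""
    return math.ceil(8 * n**2 / gamma**2 * math.log(9 * n / xi))


def per_bucket_failure(k: int, n: int, gamma: float) -> float:
    """Hoeffding bound on Pr[|p_hat_i - p_i| > gamma / (4n)] from k samples."""
    eps = gamma / (4 * n)
    return 2 * math.exp(-2 * k * eps**2)  # equals 2 exp(-k gamma^2 / (8 n^2))
```

Multiplying the per-bucket bound by the n + 1 buckets then gives the overall failure probability of at most \(\xi /4\).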

We first verify completeness for this scheme. Let L be a learner for \(\mathsf {EncThresh}\) using n examples. If the hypothesis h produced by L is \((\frac{1}{2} - \gamma )\)-good, then there exist \(i_0 < i_1\) such that \(p_{i_0} - p_{i_1} \ge 2\gamma \). If this is the case, then there must be an i for which \(p_{i-1} - p_{i} \ge \frac{2\gamma }{n}\). Then with probability all but \(\xi (n)/2\) over the estimates \(\hat{p}_i\), we have \(\hat{p}_{i-1} - \hat{p}_{i} \ge \frac{\gamma }{n}\), so some index is accused.

Now we verify soundness. Fix a PPT L, and let \(j^* \in [n]\). Suppose L violates the soundness of the scheme with respect to \(j^*\), i.e.

$$\begin{aligned} \Pr [\mathsf {Trace_{ex}}(L(S_{-j^*})) = j^*] > \xi . \end{aligned}$$

We will use L to construct an adversary \(\mathcal {A}\) for the ORE scheme that succeeds with noticeable advantage. It suffices to build an adversary for the static (many-challenge) security of ORE, with Theorem 3 showing how to convert it to a single-challenge adversary. This many-challenge adversary is presented as Algorithm 2. (While not explicitly stated, the adversary should halt and output a random guess whenever the messages it samples are not well-spaced.)

Let \(i^*\) be such that \(m_{i^*}=m'_{j^*}\). With probability at least \(\xi \) over the parameters \((\mathsf {sk}^r, \mathsf {pars}^r)\), the choice of messages, the choice of the hypothesis h, and the coins of \(\mathsf {Trace_{ex}}\), there is a gap \(\hat{p}_{i^*-1} - \hat{p}_{i^*} \ge \frac{\gamma }{n}\). Hence, by Lemma 1, there is a gap \(p_{i^* - 1} - p_{i^*} \ge \frac{\gamma }{2n}\) with probability at least \(\frac{\xi }{2}\).

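We now calculate the advantage of the adversary \(\mathcal {A}\). Fix a hypothesis h. For notational simplicity, let \(p = p_{i^* - 1}\) and let \(q = p_{i^*}\). Let \(y_0 = h(\mathsf {pars}^r, c_{i^*}^0)\) and \(y_1 = h(\mathsf {pars}^r, c_{i^*}^1)\). Then the adversary’s success probability is:

$$\begin{aligned} \frac{1}{2}\cdot \frac{p^2 + (1-p)^2 + q^2 + (1-q)^2}{2} + \frac{1}{2}\left( p(1-q) + (1-p)q\right) = \frac{1}{2} + \frac{(p-q)^2}{2}. \end{aligned}$$

Here the first term accounts for the challenge in which both messages come from the same (uniformly chosen) bucket, where \(\mathcal {A}\) is correct when \(y_0 = y_1\), and the second for the challenge with one message from each bucket, where \(\mathcal {A}\) is correct when \(y_0 \ne y_1\). Equivalently, \(|\Pr [W_0] - \Pr [W_1]| = (p-q)^2\).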

Thus if \(p - q \ge \frac{\gamma }{2n}\), then the adversary’s advantage is at least \(\frac{\gamma ^2}{4n^2}\). On the other hand, even for arbitrary values of p, q, the advantage is still nonnegative. Therefore, the advantage of the strategy is at least \(\frac{\xi \gamma ^2}{8n^2} - \mathrm {negl}(k)\) (the \(\mathrm {negl}(k)\) term coming from the assumption that the \(m_i'\) sampled were distinct), which is a noticeable function of the parameter k. This contradicts the static security of the ORE scheme.
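The success-probability identity can be verified with exact rational arithmetic. The Python sketch below assumes, as in the informal description of the adversary, that the "same bucket" and "one from each bucket" challenges are equally likely, that \(y_0\) and \(y_1\) are independent given h, and that the adversary guesses "same bucket" iff \(y_0 = y_1\); p and q are the probabilities defined above.

```python
from fractions import Fraction


def success_probability(p: Fraction, q: Fraction) -> Fraction:
    """Success of the agree/disagree adversary; h is 1 w.p. p on B_{i*-1} and q on B_{i*}."""
    half = Fraction(1, 2)
    agree_in_first = p * p + (1 - p) * (1 - p)   # both challenges drawn from B_{i*-1}
    agree_in_second = q * q + (1 - q) * (1 - q)  # both challenges drawn from B_{i*}
    pr_agree_same_bucket = half * (agree_in_first + agree_in_second)
    pr_disagree_split = p * (1 - q) + q * (1 - p)  # one challenge from each bucket
    # Each challenge type occurs with probability 1/2.
    return half * pr_agree_same_bucket + half * pr_disagree_split
```

Using Fraction rather than floats makes the check of \(\frac{1}{2} + \frac{(p-q)^2}{2}\) an exact equality, and shows directly that the advantage is never negative.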

3.3 The SQ Learnability of \(\mathsf {EncThresh}\)

The statistical query (SQ) model is a natural restriction of the PAC model by which a learner is able to measure statistical properties of its examples, but cannot see the individual examples themselves. We recall the definition of an SQ learner.

Definition 8

(SQ learning [39]). Let \(c : X \rightarrow \{0, 1\}\) be a target concept and let \(\mathcal {D}\) be a distribution over X. In the SQ model, a learner is given access to a statistical query oracle \(\mathsf {STAT}(c, \mathcal {D})\). It may make queries to this oracle of the form \((\psi , \tau )\), where \(\psi : X \times \{0, 1\} \rightarrow \{0, 1\}\) is a query function and \(\tau \in (0, 1)\) is an error tolerance. The oracle \(\mathsf {STAT}(c, \mathcal {D})\) responds with a value v such that \(|v - \Pr _{x \in \mathcal {D}}[\psi (x, c(x)) = 1]| \le \tau \). The goal of a learner is to produce, with probability at least \(1-\beta \), a hypothesis \(h : X \rightarrow \{0, 1\}\) such that \(\mathrm{error}_{\mathcal {D}}(c, h) \le \alpha \). The query functions must be efficiently evaluable, and the tolerance \(\tau \) must be lower bounded by an inverse polynomial in k and \(1/\alpha \).

The query complexity of a learner is the worst-case number of queries it issues to the statistical query oracle. An SQ learner is efficient if it also runs in time polynomial in \(k, 1/\alpha , 1/\beta \).
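For intuition about the oracle, a PAC learner can simulate \(\mathsf {STAT}(c, \mathcal {D})\) from labeled samples: by Hoeffding's inequality, averaging \(\psi \) over \(\lceil \ln (2/\eta )/(2\tau ^2)\rceil \) fresh labeled examples answers a query \((\psi , \tau )\) correctly except with probability \(\eta \). The Python sketch below is that standard simulation; the names draw_labeled and failure_prob (playing the role of \(\eta \)) are our own, and the simulation is not part of Definition 8 itself.

```python
import math
import random
from typing import Callable, Tuple


def simulate_stat(
    draw_labeled: Callable[[random.Random], Tuple[object, int]],  # returns (x, c(x)), x ~ D
    psi: Callable[[object, int], int],
    tau: float,
    failure_prob: float,
    rng: random.Random,
) -> float:
    # Hoeffding: m samples give |v - E[psi]| <= tau except with probability failure_prob.
    m = math.ceil(math.log(2 / failure_prob) / (2 * tau**2))
    return sum(psi(*draw_labeled(rng)) for _ in range(m)) / m
```

This direction (SQ learner to sample-based learner) is the easy one; the interesting point in this section is the gap between query complexity and running time.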

Feldman and Kanade [24] investigated the relationship between query complexity and computational complexity for SQ learners. They exhibited a concept class \(\mathcal {C}\) which is efficiently PAC learnable and SQ learnable with polynomially many queries, but assuming \(\mathbf {NP} \ne \mathbf {RP}\), is not efficiently SQ learnable. Concepts in this concept class take the form

Here, \({\text {PAR}}_y(x')\) is the inner product of y and \(x'\) modulo 2. The concept class \(\mathcal {C}\) consists of \(g_{\phi , y}\) where \(\phi \) is a satisfiable 3-CNF formula and y is the lexicographically first satisfying assignment to \(\phi \). The efficient PAC learner for parities based on Gaussian elimination shows that \(\mathcal {C}\) is also efficiently PAC learnable. It is also (inefficiently) SQ learnable with polynomially many queries: either the all-zeroes hypothesis is good, or an SQ learner can recover the formula \(\phi \) bit-by-bit and determine the satisfying assignment y by brute force. On the other hand, because parities are information-theoretically hard to SQ learn, the satisfying assignment y remains hidden to an SQ learner unless it is able to solve 3-SAT.
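The Gaussian-elimination learner for parities mentioned above can be sketched in Python as follows (function name and input layout are our own). It row-reduces the augmented system \(\langle y, x\rangle = b \pmod 2\) over GF(2) and returns any consistent y, setting free variables to 0, or None if the labels are inconsistent with every parity.

```python
from typing import List, Optional


def learn_parity(xs: List[List[int]], labels: List[int]) -> Optional[List[int]]:
    """Find y in {0,1}^d with <y, x> = label (mod 2) for every example, or None."""
    d = len(xs[0])
    rows = [list(x) + [b] for x, b in zip(xs, labels)]  # augmented matrix over GF(2)
    pivot_cols = []
    r = 0
    for col in range(d):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] == 1), None)
        if pivot is None:
            continue  # free variable
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] == 1:
                rows[i] = [a ^ b for a, b in zip(rows[i], rows[r])]  # XOR clears this column
        pivot_cols.append(col)
        r += 1
    if any(row[d] == 1 and not any(row[:d]) for row in rows):
        return None  # a row reads 0 = 1: no parity is consistent
    y = [0] * d  # free variables set to 0
    for i, col in enumerate(pivot_cols):
        y[col] = rows[i][d]  # fully reduced, so each pivot row fixes one coordinate
    return y
```

Any parity consistent with enough random examples generalizes well, which is why this consistent-hypothesis finder suffices for efficient PAC learning.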

In this section, we show that the concept class \(\mathsf {EncThresh}\) shares these properties with \(\mathcal {C}\). Namely, we know that \(\mathsf {EncThresh}\) is efficiently PAC learnable, and since efficient SQ learners can be converted into efficient differentially private learners [5], the fact that \(\mathsf {EncThresh}\) is not efficiently privately learnable implies that it is not efficiently SQ learnable. We can also show that \(\mathsf {EncThresh}\) has an SQ learner with polynomial query complexity. Making this observation about \(\mathsf {EncThresh}\) is of interest because the hardness of SQ learning \(\mathsf {EncThresh}\) does not seem to be related to the (information-theoretic) hardness of SQ learning parities.

Proposition 1

The concept class \(\mathsf {EncThresh}\) is (inefficiently) SQ learnable with polynomially many queries.

As with \(\mathcal {C}\) there are two cases. In the first case, the target distribution places nearly zero weight on examples with \(\mathsf {pars}= \mathsf {pars}^r\), and so the all-zeroes hypothesis is good. In the second case, the target distribution places noticeable weight on these examples, and our learner can use statistical queries to recover the comparison parameters \(\mathsf {pars}^r\) bit-by-bit. Once the public parameters are recovered, our learner can determine a corresponding secret key by brute force. Lemma 2 below shows that any corresponding secret key – even one that is not actually \(\mathsf {sk}^r\) – suffices. The learner can then use binary search to determine the threshold value t.

Proof

Let \(f_{t, r}\) be the target concept, \(\mathcal {D}\) be the target distribution, and \(\alpha \) be the target error rate. With the statistical query \((x \times b \mapsto b, \alpha /4)\), we can determine whether the all-zeroes hypothesis is accurate. That is, if we receive a value that is less than \(\alpha /2\), then \(\Pr _{x \in \mathcal {D}}[f_{t, r}(x) = 1] \le \alpha \). If not, then we know that \(\Pr _{x \in \mathcal {D}}[f_{t, r}(x) = 1] \ge \alpha /4\), so \(\mathcal {D}\) places significant weight on examples prefixed with \(\mathsf {pars}^r\). Suppose now that we are in the latter case.

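Let \(m = |\mathsf {pars}|\). For \(i = 1, \dots , m\), define \(\psi _i(\mathsf {pars}, c, b) = 1\) if \(\mathsf {pars}_i = 1\) and \(b = 1\), and \(\psi _i(\mathsf {pars}, c, b) = 0\) otherwise. Since positive examples always carry \(\mathsf {pars}^r\), the true value of \(\psi _i\) is \(\Pr _{x \in \mathcal {D}}[f_{t, r}(x) = 1] \ge \alpha /4\) if \(\mathsf {pars}^r_i = 1\), and 0 otherwise. Hence, by asking the queries \((\psi _i, \alpha /16)\) and checking whether each answer exceeds \(\alpha /8\), we can determine each bit \(\mathsf {pars}^r_i\) of \(\mathsf {pars}^r\).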
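The bit-by-bit recovery can be sketched in Python against an abstract oracle. The sketch assumes a caller-supplied function stat(psi, tau) whose answers are within tau of the truth, and represents \(\mathsf {pars}\) as a list of bits; these representations and names are our own. The threshold \(\alpha /8\) separates answers of at least \(3\alpha /16\) (bit 1) from answers of at most \(\alpha /16\) (bit 0).

```python
from typing import Callable, List, Sequence

Query = Callable[[Sequence[int], int, int], int]  # psi(pars, c, b) -> {0, 1}
StatOracle = Callable[[Query, float], float]       # (psi, tau) -> answer v


def recover_pars(stat: StatOracle, num_bits: int, alpha: float) -> List[int]:
    bits = []
    for i in range(num_bits):
        def psi_i(pars: Sequence[int], c: int, b: int, i: int = i) -> int:
            return int(pars[i] == 1 and b == 1)
        v = stat(psi_i, alpha / 16)
        # Bit 1: true value >= alpha/4, so v >= 3*alpha/16. Bit 0: true value 0, so v <= alpha/16.
        bits.append(int(v > alpha / 8))
    return bits
```

The test below uses an oracle that errs by exactly the full tolerance in either direction, which is the worst case the threshold must tolerate.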

Now by brute force search, we determine a secret key \(\mathsf {sk}\) for which \((\mathsf {sk}, \mathsf {pars}^r) \in {\text {Range}}(\mathsf {Gen})\). The recovered secret key \(\mathsf {sk}\) may not necessarily be the same as \(\mathsf {sk}^r\). However, the following lemma shows that \(\mathsf {sk}\) and \(\mathsf {sk}^r\) are functionally equivalent:

Lemma 2

Suppose \((\mathsf {Gen}, \mathsf {Enc}, \mathsf {Dec}, \mathsf {Comp})\) is a strongly correct ORE scheme. Then for any pair \((\mathsf {sk}_1, \mathsf {pars}), (\mathsf {sk}_2, \mathsf {pars}) \in {\text {Range}}(\mathsf {Gen})\), we have that \(\mathsf {Dec}_{\mathsf {sk}_1}(c)= \mathsf {Dec}_{\mathsf {sk}_2}(c)\) for all ciphertexts c.

With the secret key \(\mathsf {sk}\) in hand, we now conduct a binary search for the threshold t. Recall that we have an estimate v for the weight that \(f_{t, r}\) places on positive examples, i.e. \(|v - \Pr _{x \in \mathcal {D}}[f_{t, r}(x) = 1]| \le \alpha /4\). Starting at \(t_1 = N/2\), we issue the query \((\varphi _1, \alpha /4)\), where \(\varphi _1(\mathsf {pars}, c, b) = 1\) iff \(\mathsf {pars}= \mathsf {pars}^r\) and \(\mathsf {Dec}(\mathsf {sk}, c) < t_1\). Let \(h_{t_1}\) denote the hypothesis

$$\begin{aligned} h_{t_1}(\mathsf {pars}, c) = {\left\{ \begin{array}{ll} 1 &{} \text {if } \mathsf {pars}= \mathsf {pars}^r \text { and } \mathsf {Dec}(\mathsf {sk}, c) < t_1,\\ 0 &{} \text {otherwise.} \end{array}\right. } \end{aligned}$$

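Thus, the query \((\varphi _1, \alpha /4)\) approximates the weight that \(h_{t_1}\) places on positive examples. Let the answer to this query be \(v_1\). If \(|v_1 - v| \le \alpha / 2\), then we can halt and output \(h_{t_1}\): by Lemma 2, \(h_{t_1}\) and \(f_{t, r}\) are thresholds on the same decryption function, so they disagree exactly on the examples prefixed with \(\mathsf {pars}^r\) whose plaintexts lie between \(t_1\) and t, and the weight of this set is \(|\Pr [h_{t_1}(x) = 1] - \Pr [f_{t, r}(x) = 1]| \le |v_1 - v| + \alpha /2 \le \alpha \). Otherwise, if \(v_1 < v - \alpha /2\), then \(h_{t_1}\) has too little positive weight, so \(t > t_1\) and we set the next threshold to \(t_2 = 3N/4\); if \(v_1 > v + \alpha /2\), then \(t < t_1\) and we set the next threshold to \(t_2 = N/4\). We recurse, halving the search interval each time, for at most \(\log N = \ell = \mathrm {poly}(k)\) rounds, yielding a good hypothesis for \(f_{t, r}\).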
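The binary-search phase can be sketched in Python as follows. This is a minimal sketch under hypothetical conventions that are not from the text: examples are tuples `(pars, c, b)`, plaintexts are integers in `range(N)`, a callable `dec` stands in for \(\mathsf {Dec}(\mathsf {sk}, \cdot )\) with the recovered key (returning `None` for \(\bot \)), the SQ oracle is simulated by bounded perturbation, and the search uses integer midpoints rather than exactly \(N/2, 3N/4, \ldots \).

```python
import random
from typing import Callable, Optional, Sequence, Tuple

Example = Tuple[str, int, int]  # hypothetical format: (pars, ciphertext, label b)


def make_sq_oracle(dist: Sequence[Tuple[Example, float]], rng: random.Random):
    """Simulated SQ oracle: answers (phi, tol) with a value within tol of E_D[phi]."""
    def oracle(phi: Callable[[Example], int], tol: float) -> float:
        return sum(w * phi(ex) for ex, w in dist) + rng.uniform(-tol, tol)
    return oracle


def find_threshold(oracle, pars_r: str, dec: Callable[[int], Optional[int]],
                   N: int, v: float, alpha: float) -> int:
    """Binary search for t_j such that h_{t_j} has error at most alpha.

    v must satisfy |v - Pr[f_{t,r}(x) = 1]| <= alpha/4.
    """
    lo, hi = 0, N  # invariant: the true threshold t lies in [lo, hi]
    while lo <= hi:
        tj = (lo + hi) // 2

        def h(ex: Example, tj: int = tj) -> int:  # the hypothesis h_{t_j}
            pars, c, _ = ex
            m = dec(c)
            return int(pars == pars_r and m is not None and m < tj)

        vj = oracle(h, alpha / 4)
        if abs(vj - v) <= alpha / 2:
            return tj  # nested thresholds: error = weight gap <= alpha
        if vj < v - alpha / 2:
            lo = tj + 1  # h_{t_j} has too little positive weight, so t > t_j
        else:
            hi = tj - 1  # too much positive weight, so t < t_j
    raise RuntimeError("oracle answers were outside the stated tolerance")
```

When `lo == hi`, the midpoint equals t itself, so the weight gap is zero and the test \(|v_j - v| \le \alpha /2\) must pass; the loop therefore exits through the `return` after roughly \(\log N\) queries whenever the oracle respects its tolerance.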

Proof

(Proof of Lemma 2). Suppose the lemma is not true. First suppose that there exists a ciphertext c such that \(\mathsf {Dec}(\mathsf {sk}_1, c) = p_1 < p_2 = \mathsf {Dec}(\mathsf {sk}_2, c)\) (the case \(p_1 > p_2\) is symmetric, swapping the roles of \(\mathsf {sk}_1\) and \(\mathsf {sk}_2\)). Let \(c' \in \mathsf {Enc}(\mathsf {sk}_1, p_2)\). Then by strong correctness applied to the parameters \((\mathsf {sk}_1, \mathsf {pars})\), we must have \(\mathsf {Comp}(\mathsf {pars}, c, c') =\) “\(<\)”. Now by strong correctness applied to \((\mathsf {sk}_2, \mathsf {pars})\), we must have \(\mathsf {Dec}(\mathsf {sk}_2, c') > p_2\). Thus, \(p_1 < \mathsf {Dec}(\mathsf {sk}_1, c') = p_2 < \mathsf {Dec}(\mathsf {sk}_2, c')\), so \(c'\) satisfies the same hypothesis as c with \((p_1, p_2)\) replaced by \((p_2, p_3)\), where \(p_3 = \mathsf {Dec}(\mathsf {sk}_2, c') > p_2\). Repeating this argument yields an infinite strictly increasing sequence \(p_1 < p_2 < p_3 < \cdots \) of messages, a contradiction because the message space is finite.

Now suppose instead that there is a ciphertext c for which \(\mathsf {Dec}(\mathsf {sk}_1, c) = p \in [N]\), but \(\mathsf {Dec}(\mathsf {sk}_2, c) = \bot \) (again, the case with the roles of the keys swapped is symmetric). Let \(c' \in \mathsf {Enc}(\mathsf {sk}_1, p')\) for any \(p' \in [N]\). Since both c and \(c'\) decrypt to messages under \(\mathsf {sk}_1\), strong correctness applied to \((\mathsf {sk}_1, \mathsf {pars})\) gives \(\mathsf {Comp}(\mathsf {pars}, c, c') \ne \bot \). But \(\mathsf {Comp}(\mathsf {pars},c, c') = \bot \) by strong correctness applied to \((\mathsf {sk}_2, \mathsf {pars})\), again yielding a contradiction.

4 ORE with Strong Correctness

We now explain how to obtain ORE with strongly correct comparison, as all prior ORE schemes only satisfy the weaker notion of correctness. The lack of strong correctness is easiest to see with the scheme of Boneh et al. [8]. The protocol is built from current multilinear map constructions, which are noisy. If the noise terms grow too large, the correctness of the multilinear map is not guaranteed. The comparison function in [8] is computed by performing multilinear operations, and for correctly generated ciphertexts, the operations will give the right answer. However, there exist ciphertexts, namely those with very large noise, for which the comparison function gives an incorrect output. The result is that the comparison operation is not guaranteed to be consistent with decrypting the ciphertexts and comparing the plaintexts.

As described in the introduction, we give a generic conversion from any ORE scheme with weakly correct comparison to a strongly correct scheme. We simply modify the encryption algorithm to attach a non-interactive zero-knowledge (NIZK) proof that the resulting ciphertext is well-formed. The decryption and comparison procedures then check the proof(s), and output a non-\(\bot \) result (either a decryption or a comparison) only if the proof(s) are valid.

Instantiating our Scheme. Our construction requires the (weak) correctness of the underlying ORE scheme to hold with probability 1, whereas existing protocols are correct only with overwhelming probability, so they need minor adjustments. This is easiest to see in the ORE scheme of Boneh et al. [8], which uses noisy multilinear maps [26] that may introduce errors. However, it is straightforward to generate the parameters for that protocol so as to eliminate errors completely. The parameters contain an error term drawn from a (discrete) Gaussian distribution, which has unbounded support. Instead, we truncate the Gaussian, obtaining a noise distribution with bounded support. By truncating sufficiently far from the center, the resulting distribution is statistically close to the full Gaussian, so the security of the protocol with truncated noise follows from the security of the protocol with untruncated noise. With bounded noise, the parameters can then be set so that no errors can occur.
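To make the truncation step concrete, here is a minimal Python sketch. It is not the noise sampler of [8] or [26]; it only assumes the Gaussian weight \(\rho _s(x) = \exp (-\pi x^2/s^2)\), a common convention in lattice-based cryptography. It samples the discrete Gaussian restricted to \([-B, B]\) by rejection, and computes the statistical distance to the untruncated distribution, which equals the tail mass \(\Pr [|x| > B]\). Floating-point rejection sampling is adequate for illustration but is not how a careful cryptographic implementation would sample.

```python
import math
import random


def rho(x: int, s: float) -> float:
    """Gaussian weight rho_s(x) = exp(-pi x^2 / s^2)."""
    return math.exp(-math.pi * x * x / (s * s))


def sample_truncated(s: float, B: int, rng: random.Random) -> int:
    """Rejection sampling from the discrete Gaussian D_{Z,s} conditioned on |x| <= B."""
    while True:
        x = rng.randint(-B, B)        # uniform proposal on the bounded support
        if rng.random() < rho(x, s):  # accept with probability rho_s(x) <= 1
            return x


def truncation_distance(s: float, B: int, slack: float = 40.0) -> float:
    """Statistical distance between D_{Z,s} and its truncation to [-B, B].

    Conditioning on an event E moves a distribution by exactly 1 - Pr[E], so this
    equals the tail mass Pr[|x| > B]. Sums stop at R, beyond which the weights
    underflow to zero in double precision anyway.
    """
    R = B + int(slack * s) + 1
    total = sum(rho(x, s) for x in range(-R, R + 1))
    # Sum the tail directly instead of computing 1 - inside, to avoid cancellation.
    tail = 2 * sum(rho(x, s) for x in range(B + 1, R + 1))
    return tail / total
```

For example, `truncation_distance(4.0, 48)` is below \(2^{-600}\), while every sample from `sample_truncated(4.0, 48, rng)` lies in a finite range; that bounded range is what allows parameters to be chosen so that the noise never causes an error.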

It is similarly straightforward to modify current obfuscation candidates, which are also built from multilinear maps, to obtain perfect (weak) correctness by truncating the noise distributions. Thus, our scheme has instantiations using multilinear maps or iO.

4.1 Conversion from Weakly Correct ORE

We describe our generic conversion, based on NIZKs, from an order-revealing encryption scheme with weakly correct comparison. We will need the following additional tools:

Perfectly Binding Commitments. A perfectly binding commitment \(\mathsf {Com}\) is a randomized algorithm with two properties. The first is perfect binding, which states that if \(\mathsf {Com}(m;r)=\mathsf {Com}(m';r')\), then \(m=m'\). The second requirement is computational hiding, which states that the distributions \(\mathsf {Com}(m)\) and \(\mathsf {Com}(m')\) are computationally indistinguishable for any messages \(m,m'\). Such commitments can be built, say, from any injective one-way function.
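As a concrete illustration of the two properties, here is a toy Python sketch of an ElGamal-style commitment \(\mathsf {Com}(m; r) = (g^r, h^r g^m)\). This is a swapped-in, DDH-based construction rather than the injective-one-way-function construction mentioned above. The group sizes are deliberately tiny and insecure, and committing to a long key such as \(\mathsf {sk}'\) would additionally require a large group or a bit-by-bit encoding, which is an assumption not taken from the text.

```python
from typing import Tuple

# Toy group (INSECURE sizes, illustration only): g = 2 generates the subgroup of
# prime order q = 11 inside Z_23^*, since 2^11 = 2048 = 89 * 23 + 1.
P, Q, G = 23, 11, 2


def commitment_key(x: int) -> int:
    """Commitment key h = g^x. Whoever generates h should erase x."""
    return pow(G, x % Q, P)


def commit(h: int, m: int, r: int) -> Tuple[int, int]:
    """ElGamal-style commitment Com(m; r) = (g^r, h^r * g^m) for m, r in Z_q."""
    return pow(G, r % Q, P), (pow(h, r % Q, P) * pow(G, m % Q, P)) % P
```

Binding is perfect because \(g^r\) determines \(r \bmod q\), after which the second component determines \(g^m\) and hence m; hiding holds only computationally, under DDH in a suitably large group.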

Perfectly Sound NIZK. A NIZK protocol consists of three algorithms:

- \(\mathsf {Setup}(1^\lambda )\) is a randomized algorithm that outputs a common reference string \(\mathsf {crs}\).

- \(\mathsf {Prove}(\mathsf {crs},x,w)\) takes as input a common reference string \(\mathsf {crs}\), an NP statement x, and a witness w, and produces a proof \(\pi \).

- \(\mathsf {Ver}(\mathsf {crs},x,\pi )\) takes as input a common reference string \(\mathsf {crs}\), statement x, and a proof \(\pi \), and outputs either \(\mathsf {accept}\) or \(\mathsf {reject}\).

We make three requirements for a NIZK:

-

Perfect Completeness. For all security parameters \(\lambda \) and any true statement x with witness w,

$$\begin{aligned} \Pr [\mathsf {Ver}(\mathsf {crs},x,\pi )=\mathsf {accept}:\mathsf {crs}\leftarrow \mathsf {Setup}(1^\lambda );\pi \leftarrow \mathsf {Prove}(\mathsf {crs},x,w)]=1. \end{aligned}$$ -

Perfect Soundness. For all security parameters \(\lambda \), any false statement x and any (invalid) proof \(\pi \),

$$\begin{aligned} \Pr [\mathsf {Ver}(\mathsf {crs},x,\pi )=\mathsf {accept}:\mathsf {crs}\leftarrow \mathsf {Setup}(1^\lambda )]=0. \end{aligned}$$ -

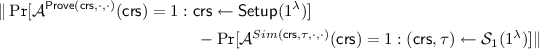

Computational Zero Knowledge. There exists a simulator \(\mathcal {S}_1,\mathcal {S}_2\) such that for any computationally bounded adversary \(\mathcal {A}\), the quantity

is negligible, where \(Sim(\mathsf {crs},\tau ,x,w)\) outputs \(\mathcal {S}_2(\mathsf {crs},\tau ,x)\) if w is a valid witness for x, and \(Sim(\mathsf {crs},\tau ,x,w)=\bot \) if w is invalid.

NIZKs satisfying these requirements can be built from bilinear maps [32].

The Construction. We now give our conversion. Let \((\mathsf {Setup},\mathsf {Prove},\mathsf {Ver})\) be a perfectly sound NIZK and \((\mathsf {Gen}',\mathsf {Enc}',\mathsf {Dec}',\mathsf {Comp}')\) an ORE scheme with weakly correct comparison. We will assume that \(\mathsf {Enc}'\) is deterministic; if not, we can derandomize \(\mathsf {Enc}'\) using a pseudorandom function. Let \(\mathsf {Com}\) be a perfectly binding commitment. We construct a new ORE scheme \((\mathsf {Gen},\mathsf {Enc},\mathsf {Dec},\mathsf {Comp})\) with strongly correct comparison:

- \(\mathsf {Gen}(1^\lambda ,1^\ell )\): run \((\mathsf {sk}',\mathsf {pars}')\leftarrow \mathsf {Gen}'(1^\lambda ,1^\ell )\). Let \(\sigma =\mathsf {Com}(\mathsf {sk}';r)\) for fresh randomness r, and run \(\mathsf {crs}\leftarrow \mathsf {Setup}(1^\lambda )\). Then the secret key is \(\mathsf {sk}=(\mathsf {sk}',r,\mathsf {crs})\) and the public parameters are \(\mathsf {pars}=(\mathsf {pars}',\sigma ,\mathsf {crs})\).

- \(\mathsf {Enc}(\mathsf {sk},m)\): Compute \(c'=\mathsf {Enc}'(\mathsf {sk}',m)\). Let \(x_{c'}\) be the statement \(\exists \hat{m},\hat{\mathsf {sk}}',\hat{r}:\sigma =\mathsf {Com}(\hat{\mathsf {sk}}';\hat{r})\wedge c'=\mathsf {Enc}'(\hat{\mathsf {sk}}',\hat{m})\), where \(\sigma \) can be recomputed from \(\mathsf {sk}'\) and r. Run \(\pi _{c'}\leftarrow \mathsf {Prove}(\mathsf {crs},x_{c'},\;(m,\mathsf {sk}',r))\). Output the ciphertext \(c=(c',\pi _{c'})\).

- \(\mathsf {Dec}(\mathsf {sk},c)\): Write \(c=(c',\pi _{c'})\). If \(\mathsf {Ver}(\mathsf {crs}, x_{c'},\pi _{c'})=\mathsf {reject}\), output \(\bot \). Otherwise, output \(m=\mathsf {Dec}'(\mathsf {sk}',c')\).

- \(\mathsf {Comp}(\mathsf {pars},c_0,c_1)\): Write \(c_b=(c_b',\pi _{c_b'})\) and \(\mathsf {pars}=(\mathsf {pars}',\sigma ,\mathsf {crs})\). If \(\mathsf {Ver}(\mathsf {crs},x_{c_b'},\pi _{c_b'})=\mathsf {reject}\) for either \(b=0,1\), then output \(\bot \). Otherwise, output \(\mathsf {Comp}'(\mathsf {pars}',c_0',c_1')\).
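The conversion is mechanical enough to sketch in a few lines of Python. This is a minimal sketch under loudly stated assumptions: every primitive below (`enc_w`, `dec_w`, `comp_w`, `com`, `prove`, `verify`) is a hypothetical, insecure stand-in. In particular, the "proof" is the witness itself, which is perfectly sound and complete but not zero knowledge, and `com` is binding but not hiding. The toy weak scheme carries a noise term that `comp_w` trusts, mimicking how malformed ciphertexts can mislead comparison. Only the wrapper logic (`gen`, `enc`, `dec`, `comp`) mirrors the construction.

```python
import secrets
from typing import Optional, Tuple

N = 16      # hypothetical message space {0, ..., N-1}
BOT = None  # stands for the symbol "bottom"


def cmp(a: int, b: int) -> str:
    return "<" if a < b else ("=" if a == b else ">")


# ---- Hypothetical INSECURE stand-ins, used only to exercise the wrapper logic ----
def enc_w(k: int, m: int) -> Tuple[int, int]:
    return (m + k, 0)  # deterministic Enc'; honest ciphertexts carry noise e = 0


def dec_w(k: int, c) -> Optional[int]:
    m = c[0] - k  # ignores the noise term
    return m if 0 <= m < N else BOT


def comp_w(pars_w, c0, c1) -> str:
    # Weakly correct only: it trusts the noise term, so malformed c can mislead it.
    return cmp(c0[0] + c0[1], c1[0] + c1[1])


def com(value: int, r: int):
    return ("com", value, r)  # perfectly binding (injective) but NOT hiding


def prove(crs, stmt, witness):
    return witness  # perfectly sound and complete, but NOT zero knowledge


def verify(crs, stmt, proof) -> bool:
    sigma, c_prime = stmt
    if not (isinstance(proof, tuple) and len(proof) == 3
            and all(isinstance(z, int) for z in proof)):
        return False
    m_hat, k_hat, r_hat = proof
    return 0 <= m_hat < N and com(k_hat, r_hat) == sigma and enc_w(k_hat, m_hat) == c_prime


# ---- The conversion: attach a proof that c' is well formed under the committed key ----
def gen():
    k, r, crs = secrets.randbelow(1000), secrets.randbelow(2**32), None
    sigma = com(k, r)
    return (k, r, crs), (None, sigma, crs)  # sk = (sk', r, crs), pars = (pars', sigma, crs)


def enc(sk, m: int):
    k, r, crs = sk
    c_prime = enc_w(k, m)
    return (c_prime, prove(crs, (com(k, r), c_prime), (m, k, r)))


def dec(sk, c) -> Optional[int]:
    k, r, crs = sk
    c_prime, pi = c
    if not verify(crs, (com(k, r), c_prime), pi):  # sigma is recomputed from (k, r)
        return BOT
    return dec_w(k, c_prime)


def comp(pars, c0, c1) -> Optional[str]:
    pars_w, sigma, crs = pars
    if not all(verify(crs, (sigma, cp), pi) for cp, pi in (c0, c1)):
        return BOT
    return comp_w(pars_w, c0[0], c1[0])


def comp_ciph(sk, c0, c1) -> Optional[str]:
    m0, m1 = dec(sk, c0), dec(sk, c1)
    return BOT if m0 is BOT or m1 is BOT else cmp(m0, m1)
```

On any pair of ciphertexts, honest or malformed, `comp(pars, c0, c1)` agrees with `comp_ciph(sk, c0, c1)`, whereas `comp_w` alone reports ">" for the raw ciphertexts `(k + 3, 5)` and `(k + 5, 0)` even though `dec_w` maps them to 3 and 5.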

Correctness. Notice that, for each plaintext m, the ciphertext component \(c'=\mathsf {Enc}'(\mathsf {sk}',m)\) is the unique value such that \(\mathsf {Dec}(\mathsf {sk},(c',\pi ))=m\) for some proof \(\pi \): by perfect soundness and perfect binding, any accepted \(c'\) equals \(\mathsf {Enc}'(\mathsf {sk}',\hat{m})\) for some \(\hat{m}\), and \(\mathsf {Enc}'\) is deterministic. Moreover, the perfect completeness of the NIZK implies that \(\mathsf {Enc}(\mathsf {sk},m)\) outputs a valid proof. Decryption correctness follows.

For strong comparison correctness, consider two ciphertexts \(c_0,c_1\) where \(c_b=(c_b',\pi _{c_b'})\). Suppose both proofs \(\pi _{c_b'}\) are valid, which means that verification passes when running \(\mathsf {Comp}\) and so \(\mathsf {Comp}(\mathsf {pars},c_0,c_1)=\mathsf {Comp}'(\mathsf {pars}',c_0',c_1')\). Verification also passes when decrypting \(c_b\), and so \(\mathsf {Dec}(\mathsf {sk},c_b)=\mathsf {Dec}'(\mathsf {sk}',c_b')\).

Since the proofs are valid, perfect soundness implies that each statement \(x_{c_b'}\) is true, and the perfect binding of \(\mathsf {Com}\) implies that the key in any witness must be \(\mathsf {sk}'\); hence \(c_b'=\mathsf {Enc}'(\mathsf {sk}',m_b)\) for some \(m_b\), for both \(b=0,1\). The weak correctness of comparison for \((\mathsf {Gen}',\mathsf {Enc}',\mathsf {Dec}',\mathsf {Comp}')\) implies that \(\mathsf {Comp}'(\mathsf {pars}',c_0',c_1')=\mathsf {Comp}_{plain}(m_0,m_1)\). The decryption correctness of \((\mathsf {Gen}',\mathsf {Enc}',\mathsf {Dec}',\mathsf {Comp}')\) then implies that \(\mathsf {Dec}'(\mathsf {sk}',c_b')=m_b\), and therefore \(\mathsf {Dec}(\mathsf {sk},c_b)=m_b\). Thus \(\mathsf {Comp}_{ciph}(\mathsf {sk},c_0,c_1)=\mathsf {Comp}_{plain}(m_0,m_1)\). Putting it all together, \(\mathsf {Comp}(\mathsf {pars},c_0,c_1)=\mathsf {Comp}_{ciph}(\mathsf {sk},c_0,c_1)\), as desired.

Now suppose one of the proofs, say \(\pi _{c_b'}\), is invalid. Then \(\mathsf {Comp}(\mathsf {pars},c_0,c_1)=\bot \) and \(\mathsf {Dec}(\mathsf {sk},c_b)=\bot \). This means \(\mathsf {Comp}_{ciph}(\mathsf {sk},c_0,c_1)=\bot =\mathsf {Comp}(\mathsf {pars},c_0,c_1)\), as desired.

Security. To prove security, we first use the zero-knowledge simulator to simulate the proofs \(\pi _{c'}\) without using a witness (namely, the message together with the secret key \(\mathsf {sk}'\) and the commitment randomness r). Then we use the hiding property of the commitment to replace \(\sigma \) with a commitment to 0. At this point, the entire game can be simulated using an \(\mathsf {Enc}'\) oracle, and so the security reduces to the security of \(\mathsf {Enc}'\).

Theorem 6

If \((\mathsf {Gen}',\mathsf {Enc}',\mathsf {Dec}',\mathsf {Comp}')\) is a (statically) secure ORE, \((\mathsf {Setup},\mathsf {Prove},\mathsf {Ver})\) is computationally zero knowledge, and \(\mathsf {Com}\) is computationally hiding, then \((\mathsf {Gen},\mathsf {Enc},\mathsf {Dec},\mathsf {Comp})\) is a statically secure ORE.

Proof

We will prove security through a sequence of hybrids. Let \(\mathcal {A}\) be an adversary with advantage \(\epsilon \) in breaking the static security of \((\mathsf {Gen},\mathsf {Enc},\mathsf {Dec},\mathsf {Comp})\).

Hybrid 0. This is the real experiment, where \(\sigma \leftarrow \mathsf {Com}(\mathsf {sk}')\), \(\mathsf {crs}\leftarrow \mathsf {Setup}(1^\lambda )\), and the proofs \(\pi _{c'}\) are generated using \(\mathsf {Prove}\) and valid witnesses. \(\mathcal {A}\) has advantage \(\epsilon \) in distinguishing the left and right ciphertexts.

Hybrid 1. This is the same as Hybrid 0, except that \(\mathsf {crs}\) is generated as \((\mathsf {crs},\tau )\leftarrow \mathcal {S}_1(1^\lambda )\), and all proofs are generated using \(\mathcal {S}_2(\mathsf {crs},\tau ,\cdot )\). The zero knowledge property of \((\mathsf {Setup},\mathsf {Prove},\mathsf {Ver})\) shows that this is indistinguishable from Hybrid 0.

Hybrid 2. This is the same as Hybrid 1, except that \(\sigma \leftarrow \mathsf {Com}(0)\). Since the randomness used to compute \(\sigma \) is no longer needed once the proofs are simulated, this change is undetectable by the hiding property of \(\mathsf {Com}\).

Thus the advantage of \(\mathcal {A}\) in Hybrid 2 is at least \(\epsilon -\mathrm {negl}\) for some negligible function \(\mathrm {negl}\). Now consider the following adversary \(\mathcal {B}\) that attempts to break the security of \((\mathsf {Gen}',\mathsf {Enc}',\mathsf {Dec}',\mathsf {Comp}')\). \(\mathcal {B}\) simulates \(\mathcal {A}\), and forwards the message sequences \(m_1^{(L)}<m_2^{(L)}<\dots <m_q^{(L)}\) and \(m_1^{(R)}<m_2^{(R)}<\dots <m_q^{(R)}\) produced by \(\mathcal {A}\) to its own challenger. In response, it receives \(\mathsf {pars}'\) and ciphertexts \(c_i'\), where \(c_i'\) encrypts \(m_i^{(L)}\) if \(b=0\) or \(m_i^{(R)}\) if \(b=1\), for a random bit b chosen by the challenger.

\(\mathcal {B}\) now generates \(\sigma \leftarrow \mathsf {Com}(0)\), \((\mathsf {crs},\tau )\leftarrow \mathcal {S}_1(1^\lambda )\), and lets \(\mathsf {pars}=(\mathsf {pars}',\sigma ,\mathsf {crs})\). It also computes \(\pi _{c_i'}\leftarrow \mathcal {S}_2(\mathsf {crs},\tau ,x_{c_i'})\), and defines \(c_i=(c_i',\pi _{c_i'})\), and gives \(\mathsf {pars}\) and the \(c_i\) to \(\mathcal {A}\). Finally when \(\mathcal {A}\) outputs a guess \(b'\) for b, \(\mathcal {B}\) outputs the same guess \(b'\).