Abstract

The hypergraph partitioning problem has many applications in scientific computing and provides a more accurate inter-processor communication model for distributed systems than the equivalent graph problem. In this paper, we propose a sequential multi-level hypergraph partitioning algorithm. The algorithm makes novel use of the technique of rough set clustering in categorising the vertices of the hypergraph. The algorithm treats hyperedges as features of the hypergraph and tries to discard unimportant hyperedges to make better clustering decisions. It also focuses on the trade-off to be made between local vertex matching decisions (which have low cost in terms of the space required and time taken) and global decisions (which can be of better quality but have greater costs). The algorithm is evaluated and compared to state-of-the-art algorithms on a range of benchmarks. The results show that it generates better partition quality.

Keywords

- Hypergraph Partitioning

- Core Vertices

- VLSI Circuit Partitioning

- Higher Average Roughness

- Reduced Information System

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

A hypergraph is a pair: a set of vertices and a set of hyperedges. Each hyperedge is a subset of the vertex set (there is no restriction on its size). The hypergraph partitioning problem asks, roughly speaking, for a partition of the vertex set such that the vertices are evenly distributed amongst the parts and the number of hyperedges that intersect multiple parts is minimised. A tool to solve this problem is called a partitioner. Hypergraph partitioning has applications in many areas of computer science such as data mining and image processing.

The hypergraph partitioning problem is a generalisation of the graph partitioning problem (in which the edges of a graph are subsets of the vertex set of size two contrasting with hyperedges whose size is unbounded), and provides a more natural way of representing the relationships between objects inherent in many problems [14]. The removal of the constraint on edge size, however, increases the practical difficulty of partitioning [13]. As both the graph and hypergraph variants of the partitioning problem are NP-hard [12], a number of heuristic algorithms have been proposed [10, 17]. In this paper, we propose and evaluate a new algorithm.

Our serial Feature Extraction Hypergraph Partitioning (FEHG) algorithm is of a type known as multi-level. It has three distinct phases: coarsening, initial partitioning and uncoarsening. During coarsening, vertices are merged to obtain hypergraphs with progressively smaller vertex sets. In the initial partitioning phase, the partitioning problem is solved on the smallest hypergraph obtained by coarsening. During uncoarsening, the coarsening stage is reversed and the solution obtained on the small hypergraph is used to provide a solution on the input hypergraph. We describe some of the problems of multi-level partitioning that motivate our study:

1. Heuristics for multi-level hypergraph partitioning focus on finding highly-connected clusters of vertices that can be merged to form a coarser hypergraph. This requires a metric of similarity, the evaluation of which requires the recognition of “similar” vertices. As the mean and standard deviation of vertex degrees are usually high (and so the similarity of pairs of vertices is typically low), it is often a problem to define and measure the similarity [9].

2. There can be redundancy in modelling scientific problems with hypergraphs and it is desirable to remove it. In [13], an attempt to reduce the storage overhead of saving and processing hypergraphs is presented, but the strategy can increase either the storage requirement or the running time in some cases.

3. Decision making for matching vertices (that will be merged) is usually done locally. Global decisions are avoided due to their high cost and complexity though they give better results [21]. All proposed heuristics reduce the search domain and try to find the vertices to be matched using some degree of randomness. This degrades the quality of the partitioning by increasing the possibility of getting stuck in a local minimum. A better trade-off is needed between the low cost of local decisions and the high quality of global ones.

Highlights of our contribution:

- We propose a new serial multi-level hypergraph partitioning algorithm which gives significant quality improvements over state-of-the-art algorithms.

- We use rough set based clustering techniques for removing redundant attributes while partitioning and so make better clustering decisions.

- We provide a trade-off between global and local clustering methods by calculating sets of core vertices (a global decision) and then traversing these cores one at a time to find best matchings between vertices (a local decision).

- We show that solely relying on a vertex similarity metric can result in major degradation of the partitioning quality for some hypergraphs and different coarsening methods should be considered.

In the next section, we briefly review partitioning algorithms and software tools. In Sect. 3, we give a technical introduction to the Hypergraph Partitioning Problem. In Sect. 4 we introduce FEHG. In Sect. 5 we evaluate the algorithm and report results of a simulation comparing FEHG to state-of-the-art algorithms. Finally in Sect. 6, we conclude with comments on ongoing and future work.

2 Related Work

We provide a brief review of algorithms, tools, and applications of hypergraph partitioning; the reader is referred to [21] for an extensive survey. We note that, in general, there is no partitioner recognized to perform well for all types of hypergraphs as there are always trade-offs such as those between quality and speed [21]. Partitioning algorithms can be serial [4, 16] or parallel [8], iterative move-based [10] or multi-level [5], static [22] or dynamic [5], recursive [8] or direct [1], and finally they can work directly on hypergraphs [16] or model them as graphs and use graph partitioning algorithms [17].

Few software tools are available for hypergraph partitioning and there is no unified framework for hypergraph processing. One popular tool designed for VLSI circuit partitioning is hMetis [16]. The algorithms are based on multi-level partitioning schemes and support recursive bisectioning (shmetis, hmetis), and direct k–way partitioning (kmetis). Examples of tools that are designed for specific applications are MLPart and Mondriaan, designed for VLSI circuit partitioning and rectangular sparse matrix-vector multiplications, respectively. The emphasis of MLPart is on simplicity of design and Mondriaan uses the idea of 2D matrix partitioning to enhance performance [22]. PaToH [4] is a multi-level recursive bipartitioning tool designed for serial hypergraph partitioning. It supports agglomerative (vertex clusters are formed one at a time) and hierarchical (several clusters of vertices can be formed simultaneously) clustering algorithms. Zoltan [8] is developed for parallel applications. Its library includes a range of tools for problems such as dynamic load balancing and graph and hypergraph colouring and partitioning. Both static and dynamic hypergraph partitioning are supported as are multi-criteria load balancing and processor heterogeneity.

There is a wide range of applications for hypergraph partitioning (see, for example, [20]) including classifying gene expression data, replication management in distributed databases [6] and high dimensional data clustering [15].

3 Definitions

3.1 Hypergraph Partitioning

A hypergraph \(H=(V,E)\) is a pair consisting of a finite set of vertices V, with size \(\left| {V} \right| =n\) and a multi–set \(E \subseteq 2^V\) of hyperedges with size \(\left| {E} \right| = m\). For a hyperedge \(e \in E\) and vertex v, we say e contains v, or is incident to v, if \(v \in e\); this is represented by \(e \triangleright v\). The degree of a vertex is the number of distinct incident hyperedges and the size of a hyperedge \(\left| {e} \right| \) is the number of vertices it contains. The hypergraph is simply a graph if every hyperedge has size two.

Definition 1

Let k be a non–negative integer and let \(H=(V,E)\) be a hypergraph. A k–way partitioning of H is a collection of sets \(\varPi = \left\{ P_1, P_2, \cdots , P_k \right\} \) such that \(\cup _{i=1}^{k} P_i=V\), and \(\forall P_i,P_j \subset V, \; 1 \leqslant i \ne j \leqslant k\), we have \(P_i \ne \emptyset \), \(P_i \cap P_j = \emptyset \).

We say that \(v \in V\) is assigned to a part \(P \in \varPi \) if \(v \in P\). Let \(\omega :V \mapsto \mathbb {N}\) and \(\gamma :E \mapsto \mathbb {N}\) be weight functions for the vertices and hyperedges. The weight of P is defined as \(\omega (P)=\sum \nolimits _{v \in P}{\omega (v)}\). A hyperedge \(e \in E\) is said to be connected to P if \(e \cap P \ne \emptyset \). The connectivity degree of e is the number of parts connected to e and is denoted by \(\lambda _e(H,\varPi )\). A hyperedge is cut if it connects to more than one part. We define the cost of a partition \(\varPi \) of H as

\[\mathrm {cost}(H,\varPi )=\sum _{e \in E} \gamma (e)\left( \lambda _e(H,\varPi )-1 \right) .\]

The connectivity objective is to find a partition \(\varPi \) of low cost. Let \(W_{\mathrm {ave}}\) be the average weight of the parts: that is \(W_{\mathrm {ave}} =\sum \nolimits _{v \in V} \omega (v)/k\). The balancing requirement asks that all parts of the partition have similar weight: that is, given imbalance tolerance \(\epsilon \in (0,1)\), it is required that

\[W_{\mathrm {ave}}\,(1-\epsilon ) \leqslant \omega (P) \leqslant W_{\mathrm {ave}}\,(1+\epsilon ), \quad \forall P \in \varPi . \tag{1}\]

The hypergraph partitioning problem is finding a minimum cost partition \(\varPi \) of H that satisfies the balancing requirement.
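As an illustration of these definitions, the following minimal sketch evaluates a given partition. It assumes the connectivity-minus-one form of the cost, \(\sum _e \gamma (e)(\lambda _e - 1)\), and a two-sided balance test around \(W_{\mathrm {ave}}\); the function names and the data layout (hyperedges as sets of vertex ids, `part_of` as a dictionary) are hypothetical choices made for the example.

```python
from collections import defaultdict


def connectivity_cost(hyperedges, gamma, part_of):
    """Sum of gamma(e) * (lambda_e - 1) over all hyperedges (assumed cost form)."""
    cost = 0
    for pins, weight in zip(hyperedges, gamma):
        # lambda_e: number of distinct parts the hyperedge is connected to
        parts_touched = {part_of[v] for v in pins}
        cost += weight * (len(parts_touched) - 1)
    return cost


def is_balanced(vertex_weight, part_of, k, eps):
    """Check W_ave*(1-eps) <= w(P) <= W_ave*(1+eps) for every part (assumed two-sided)."""
    w_ave = sum(vertex_weight.values()) / k
    part_weight = defaultdict(int)
    for v, w in vertex_weight.items():
        part_weight[part_of[v]] += w
    return all(
        w_ave * (1 - eps) <= part_weight[p] <= w_ave * (1 + eps)
        for p in range(k)
    )


# Small example: six unit-weight vertices split into two parts.
hyperedges = [{0, 1, 2}, {2, 3}, {3, 4, 5}, {0, 5}]
gamma = [1, 1, 1, 2]
part_of = {0: 0, 1: 0, 2: 0, 3: 1, 4: 1, 5: 1}
print(connectivity_cost(hyperedges, gamma, part_of))
print(is_balanced({v: 1 for v in range(6)}, part_of, k=2, eps=0.02))
```

In the example, only the hyperedges {2, 3} and {0, 5} span both parts, so only they contribute to the cost.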

3.2 Rough Set Clustering

Rough set theory was introduced by Pawlak in 1991 as an approach to understanding fuzzy and uncertain knowledge [19]. It provides a mathematical tool to discover hidden patterns in data; it can be used, for example, for feature selection, data reduction, pattern extraction. It can deal efficiently with large data sets [2] by extracting global information that resides in the data.

Definition 2

Let \(\mathbb {U}\) be a non-empty finite set of objects (called the universe). Let \(\mathbf {A}\) be a non-empty finite set of attributes. Let \(\mathbf {V}\) be a multi-set of attribute values such that \(\mathbf {V}_a \in \mathbf {V}\) is a set of values for each \(a \in \mathbf {A}\). Let \(\mathcal {F}\) be a mapping function such that \(\mathcal {F}(u,a) \mapsto \mathbf {V}_a, \forall (a,u) \in \mathbf {A} \times \mathbb {U}\). Then \(\mathfrak {I}=(\mathbb {U},\mathbf {A},\mathbf {V},\mathcal {F} )\) is called an information system.

For any \(\mathbf {B} \subseteq \mathbf {A}\) there is an associated equivalence relation denoted \(\text {IND}(\mathbf {B})\) and called a B-Indiscernibility relation:

\[\text {IND}(\mathbf {B})=\left\{ (u,v) \in \mathbb {U}^2 \mid \forall a \in \mathbf {B},\; \mathcal {F}(u,a)=\mathcal {F}(v,a) \right\} . \tag{2}\]

When \((u,v) \in \text {IND}(\mathbf {B})\), it is said that u and v are indiscernible under B and this is represented as \(u\mathcal {R}v\). Furthermore, the equivalence class of u with respect to B is \([u]_{\mathbf {B}}=\{v \in \mathbb {U} \mid u \mathcal {R} v\}\). The equivalence relation provides a partitioning of the universe and it is represented as \(\mathbb {U}/\text {IND}(\mathbf {B})\) or simply \(\mathbb {U}/\text {IND}\). Thus, for every \(X \subseteq \mathbb {U}\), and with respect to \(\mathbf {B} \subseteq \mathbf {A}\), a B–lower and B–upper approximation can be defined for X, by, respectively, \(\underline{\mathbf {B}X}=\left\{ x \mid [x]_{\mathbf {B}} \subseteq X \right\} \) and \(\overline{\mathbf {B}X}=\left\{ x \mid [x]_{\mathbf {B}} \cap X \ne \emptyset \right\} \). \(\underline{\mathbf {B}X}\) contains objects that belong to X with certainty and \(\overline{\mathbf {B}X}\) contains objects that possibly belong to X. We describe a hypergraph \(H=(V,E)\) with an information system \(\mathcal {I}_{H}=(V,E,\mathbf {V},\mathcal {F})\) such that \(\mathbf {V}_e = \{0,1\}, \forall e \in E\) and the mapping function is defined as:

\[\mathcal {F}(v,e)=\begin{cases} 1 & \text {if } v \in e, \\ 0 & \text {otherwise.} \end{cases}\]
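To make the rough set notions concrete, the sketch below computes the partition \(\mathbb {U}/\text {IND}(\mathbf {B})\) and the lower and upper approximations of a target set. It treats a small hypergraph as an information system in which vertices are objects, hyperedges are attributes, and the attribute value is 1 when the vertex lies in the hyperedge and 0 otherwise (an assumed incidence encoding). The function names are hypothetical.

```python
from collections import defaultdict


def equivalence_classes(universe, attributes, value):
    """Partition U/IND(B): objects that agree on every attribute in B."""
    classes = defaultdict(set)
    for u in universe:
        signature = tuple(value(u, a) for a in attributes)
        classes[signature].add(u)
    return list(classes.values())


def approximations(classes, target):
    """Return the (lower, upper) approximations of `target`."""
    lower, upper = set(), set()
    for c in classes:
        if c <= target:   # class lies entirely inside X: certain members
            lower |= c
        if c & target:    # class overlaps X: possible members
            upper |= c
    return lower, upper


# Hypergraph with two hyperedges over vertices 1..5 (vertex 5 is isolated).
hyperedges = {"e1": {1, 2, 3}, "e2": {3, 4}}
incidence = lambda v, e: 1 if v in hyperedges[e] else 0
classes = equivalence_classes([1, 2, 3, 4, 5], ["e1", "e2"], incidence)
lower, upper = approximations(classes, target={1, 3, 4})
print(classes, lower, upper)
```

Vertices 1 and 2 are indiscernible because they belong to exactly the same hyperedges, so the target set {1, 3, 4} cannot contain vertex 1 "with certainty": its class also holds vertex 2.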

3.3 Hyperedge Connectivity Graph

We use rough set clustering in our algorithm to make better clustering decisions in hypergraphs. We will need a measure of similarity of a pair of hyperedges, a function \(sim(\cdot )\). Different similarity measures, such as Jaccard Index or Cosine Measure, can be used. Similarity is scaled according to the weight of hyperedges: for two \(e_i,e_j \in E\) the scaling factor is \(\frac{\gamma (e_i) + \gamma (e_j)}{2 \times \max _{e \in E}\left( \gamma (e) \right) }\).

Definition 3

For a given similarity threshold \(s \in (0,1)\), the Hyperedge Connectivity Graph (HCG) of a hypergraph \(H=(V,E)\) is a graph \(\mathcal {G}^s(\mathcal {V},\mathcal {E})\) where \(\mathcal {V}=E\) and two vertices \(v_i,v_j \in \mathcal {V}\) are adjacent if, for the corresponding hyperedges \(e_i,e_j \in E\) we have \(sim(e_i,e_j) \geqslant s\).
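A minimal sketch of Definition 3 follows. It uses the plain Jaccard index on hyperedges as \(sim(\cdot )\) and assumes that the weight-based scaling factor multiplies the similarity before comparison with s. It also compares all pairs of hyperedges and stores the graph explicitly, which is a simplification chosen for clarity and is not how a production partitioner would traverse a large hypergraph. All names are hypothetical.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard index of two vertex sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def hyperedge_connectivity_graph(hyperedges, gamma, s):
    """Adjacency sets of the HCG: hyperedges i and j are adjacent
    when their weight-scaled similarity is at least s."""
    max_gamma = max(gamma)
    adj = {i: set() for i in range(len(hyperedges))}
    for i, j in combinations(range(len(hyperedges)), 2):
        # scaling factor (gamma_i + gamma_j) / (2 * max gamma), assumed multiplicative
        scale = (gamma[i] + gamma[j]) / (2 * max_gamma)
        if scale * jaccard(hyperedges[i], hyperedges[j]) >= s:
            adj[i].add(j)
            adj[j].add(i)
    return adj


hyperedges = [{1, 2, 3}, {2, 3, 4}, {5, 6}, {1, 2, 3, 4}]
print(hyperedge_connectivity_graph(hyperedges, gamma=[1, 1, 1, 1], s=0.5))
```

With unit weights the scaling factor is 1, so the first, second and fourth hyperedges become mutually adjacent while {5, 6} stays isolated.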

We discuss the importance of choosing the similarity threshold in Sect. 5.

4 The Algorithm

The proposed algorithm is a recursive multi–level algorithm composed of coarsening, initial partitioning and uncoarsening phases.

4.1 The Coarsening

The process of coarsening involves finding a sequence of hypergraphs \(H=\left( V,E \right) , H^1=\left( V^1,E^1 \right) , \ldots , H^c=\left( V^c,E^c \right) \) such that each hypergraph has fewer vertices than its predecessor and the coarsest hypergraph \(H^c\) has fewer vertices than a predefined threshold. We say \(H^i\) is the hypergraph found at the ith level of coarsening. The compression ratio of successive levels i, j is defined as \(\frac{\left| {V^i} \right| }{\left| {V^j} \right| }\). We use vertex matching to match a pair of vertices and merge them to form a coarser vertex. The best pair is chosen using the Weighted Jaccard Index defined by:

\[J(u,v)=\frac{\sum _{\{e \,\mid \, e \triangleright u \,\wedge \, e \triangleright v\}} \gamma (e)}{\sum _{\{e \,\mid \, e \triangleright u \,\vee \, e \triangleright v\}} \gamma (e)}, \quad u,v \in V. \tag{3}\]
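The matching criterion can be sketched as follows, assuming the usual weighted form of the Jaccard index: the total weight of the hyperedges shared by two vertices divided by the total weight of the hyperedges incident to either. The helper `best_match` and the `incident` mapping (vertex to set of hyperedge ids) are hypothetical names used only for this example.

```python
def weighted_jaccard(u, v, incident, gamma):
    """Weight of shared hyperedges over weight of all hyperedges
    incident to u or v (assumed form of the weighted index)."""
    shared = incident[u] & incident[v]
    either = incident[u] | incident[v]
    total = sum(gamma[e] for e in either)
    return sum(gamma[e] for e in shared) / total if total else 0.0


def best_match(u, candidates, incident, gamma):
    """Candidate with the highest weighted Jaccard index to u, or None."""
    return max(
        candidates,
        key=lambda v: weighted_jaccard(u, v, incident, gamma),
        default=None,
    )


# Vertex -> ids of incident hyperedges; hyperedge 1 has weight 2.
incident = {"a": {0, 1}, "b": {1, 2}, "c": {0, 1, 2}}
gamma = [1, 2, 1]
print(weighted_jaccard("a", "b", incident, gamma))
print(best_match("a", ["b", "c"], incident, gamma))
```

Here vertex a shares weight 2 out of 4 with b and weight 3 out of 4 with c, so c is the preferred partner.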

This is similar to the non-weighted Jaccard index in PaToH, which is called Scaled Heavy Connectivity Matching. The algorithm first constructs the HCG defined above by traversing H using Breadth-First Search (the graph itself does not need to be saved). A partition \(E^{\mathrm {R}}\) of the hyperedges of H is then obtained where each part contains hyperedges that belong to the same connected component of HCG. The size and weight of each \(e_{\mathrm {R}} \in E^{\mathrm {R}}\) is the number of hyperedges it contains and the sum of their weights, respectively. If we represent a hypergraph with an information system, a reduced information system \(\mathcal {I}^{\mathrm {R}}_{H} \left( V,E^{\mathrm {R}},\mathbf {V}^{\mathrm {R}},\mathcal {F}^{\mathrm {R}} \right) \) is constructed based on \(E^{\mathrm {R}}\). A vertex is incident to \(e_{\mathrm {R}} \in E^{\mathrm {R}}\) if at least one of its incident edges \(e \in H\) is in \(e_{\mathrm {R}}\). In addition \(\mathbf {V}^{\mathrm {R}}_{e_{\mathrm {R}}} \subseteq \mathbb {N}, \forall e_{\mathrm {R}} \in E^{\mathrm {R}}\) and the mapping function is defined as:

The next step is to remove superfluous attributes from \(E^{\mathrm {R}}\). A clustering threshold \(c \in [0,1]\) is defined and the mapping function of (4) is transformed to:

At this point we have a reduced information system \(\mathcal {I}^{\mathrm {f}}\) and we use this to find clusters of vertices using rough set clustering techniques. Using the indiscernibility relation defined in (2), the equivalence relation between vertices (Sect. 3.2), and the mapping function \(\mathcal {F}^{\mathrm {f}}\) of (5), \(\mathbb {U}/\text {IND}(E^{\mathrm {R}})\) provides a partitioning of the vertex set V. The parts are called the cores of the hypergraph. Cores of unit size as well as vertices whose \(\mathcal {F}^{\mathrm {f}}(v,e_{\mathrm {R}})=0, \, \forall e_{\mathrm {R}} \in E^{\mathrm {R}}\) are categorised as non–core vertices and they will be processed after core vertices. The cores are visited one at a time and they are searched locally to find the best matching pairs according to (3). The larger the mean vertex degree in the hypergraph is, the larger denominator we get in (5) and this makes it difficult to choose a clustering threshold. As a result, large mean vertex degrees produce more cores of unit size and this causes the number of vertices that belong to cores to be small compared to \(\left| {V} \right| \).
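The core-finding step described above can be sketched roughly as follows. The exact forms of the mapping functions (4) and (5) are not reproduced in this text, so the sketch makes two labelled assumptions: the reduced value of a vertex for a part \(e_{\mathrm {R}}\) is the number of its incident hyperedges that fall in \(e_{\mathrm {R}}\), and an attribute is kept (set to 1) when that count divided by the vertex degree is at least c. The HCG is passed in as adjacency sets, and all function names are hypothetical.

```python
from collections import defaultdict


def connected_components(adj):
    """Connected components of a graph given as adjacency sets."""
    seen, comps = set(), []
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        stack, comp = [start], set()
        while stack:
            x = stack.pop()
            comp.add(x)
            for y in adj[x]:
                if y not in seen:
                    seen.add(y)
                    stack.append(y)
        comps.append(comp)
    return comps


def find_cores(vertices, hyperedges, hcg_adj, c):
    """Return (cores, non_core) under the assumptions stated in the lead-in."""
    comps = connected_components(hcg_adj)        # the parts e_R of E^R
    comp_of = {e: r for r, comp in enumerate(comps) for e in comp}
    groups, non_core = defaultdict(set), set()
    for v in vertices:
        incident = [e for e, pins in enumerate(hyperedges) if v in pins]
        counts = [0] * len(comps)                # assumed form of F^R(v, e_R)
        for e in incident:
            counts[comp_of[e]] += 1
        deg = len(incident)
        # assumed thresholding: keep e_R when count / degree >= c
        row = tuple(1 if deg and n / deg >= c else 0 for n in counts)
        if any(row):
            groups[row].add(v)                   # same row = indiscernible
        else:
            non_core.add(v)                      # all-zero row
    cores = []
    for members in groups.values():
        if len(members) > 1:
            cores.append(members)
        else:
            non_core |= members                  # cores of unit size
    return cores, non_core


hyperedges = [{1, 2, 3}, {1, 2, 3, 4}, {5, 6}, {5, 6, 7}, {4, 7}]
hcg_adj = {0: {1}, 1: {0}, 2: {3}, 3: {2}, 4: set()}
print(find_cores(range(1, 8), hyperedges, hcg_adj, c=0.5))
```

In this example, vertices 4 and 7 each have a unique attribute row and therefore end up as non-core vertices, while {1, 2, 3} and {5, 6} form the two cores that would then be searched locally for matching pairs.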

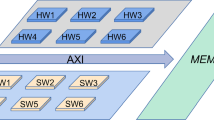

To maintain a certain compression ratio between two successive levels of the coarsening, we perform a random matching of the non–core vertices. An example of the coarsening procedure is given in Fig. 1.

Fig. 1. An example of the coarsening procedure. (a) The sample hypergraph. (b) HCG using weighted Jaccard index in (3) and similarity threshold \(s=0.5\). (c) The reduced information system, and (d) Remaining attributes after removing superfluous attributes for clustering threshold \(c=0.5\).

4.2 Initial Partitioning and Uncoarsening

In the initial partitioning phase, a bipartitioning on the coarsest hypergraph \(H^c\) is found using a number of algorithms. An output is selected to be projected back to the original hypergraph: if many outputs fulfill the balancing requirement then the one with lowest cost is chosen else it is the output that comes closest to meeting the balancing requirement. The algorithms used are random partitioning (randomly assign vertices to parts), linear partitioning (linearly assign vertices to parts), and a modification of the FM algorithm [10]. During uncoarsening, we try to refine the quality of the partitioning by moving the vertices across the partition boundary. A vertex is on the boundary if at least one of its incident edges is cut by the bipartitioning. The FM algorithm and its variants have been shown to be successful for the refinement process [8, 16] and we use a modified version of FM or Boundary FM algorithm.
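The selection rule and the notion of a boundary vertex can be sketched as below. The measure of how close an output comes to the balancing requirement is not specified in the text; the sketch assumes the largest relative deviation of a part weight from the average. The function names and the representation of an output as a `(cost, part_weights)` pair are hypothetical.

```python
def imbalance(part_weights):
    """Largest relative deviation of a part weight from the average (assumed measure)."""
    w_ave = sum(part_weights) / len(part_weights)
    return max(abs(w - w_ave) for w in part_weights) / w_ave


def select_output(outputs, eps):
    """outputs: list of (cost, part_weights). Prefer the cheapest balanced
    output; if none is balanced, take the one closest to balance."""
    feasible = [o for o in outputs if imbalance(o[1]) <= eps]
    if feasible:
        return min(feasible, key=lambda o: o[0])
    return min(outputs, key=lambda o: imbalance(o[1]))


def boundary_vertices(hyperedges, part_of):
    """Vertices with at least one incident hyperedge cut by the bipartitioning."""
    boundary = set()
    for pins in hyperedges:
        if len({part_of[v] for v in pins}) > 1:
            boundary |= set(pins)
    return boundary


outputs = [(12, [50, 50]), (9, [51, 49]), (7, [60, 40])]
print(select_output(outputs, eps=0.05))
print(boundary_vertices([{0, 1}, {1, 2}, {2, 3}], {0: 0, 1: 0, 2: 1, 3: 1}))
```

The cheapest output in the example (cost 7) is rejected because it violates the tolerance, so the balanced output with cost 9 is projected back instead.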

5 Evaluation

We have compared our algorithm (FEHG) with PHG (the Zoltan hypergraph partitioner) [8], hMetis [16], and PaToH [4]. These algorithms achieve k-way partitioning by recursive bipartitioning. The evaluated hypergraphs listed in Table 1 are from the University of Florida Sparse Matrix Collection [7]. They are from a variety of applications with different specifications and include both symmetric and non–symmetric instances, and hypergraphs with different numbers of strongly connected components, etc. Each matrix in the table is treated as a hypergraph. We use the column-net model where each row of the matrix corresponds to a vertex and each column corresponds to a hyperedge [8]. The weights of vertices and hyperedges are set to unity. The evaluated tools have different input parameters that can be selected by the user. For our case, we use default settings for the comparison: shmetis is the default partitioner selected for hMetis, PaToH is initialised by setting SBProbType parameter to PATOH_SUGPARAM_DEFAULT, and the coarsening algorithm for PHG is set to agglomerative. All of them use a variation of FM for the refinement and uncoarsening phase.
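The column-net model used here can be illustrated with a short sketch: each row becomes a vertex and each column becomes a hyperedge containing the rows that have a nonzero in that column. The function name and the input format (a list of `(row, column)` positions of nonzeros) are hypothetical; unit weights are used, as in the evaluation.

```python
def column_net_hypergraph(n_rows, n_cols, nonzeros):
    """Build a hypergraph from a sparse matrix pattern.

    Rows are vertices, columns are hyperedges (nets); a vertex belongs
    to a hyperedge when the matrix has a nonzero at (row, column).
    """
    nets = [set() for _ in range(n_cols)]
    for i, j in nonzeros:
        nets[j].add(i)
    vertices = list(range(n_rows))
    vertex_weight = {v: 1 for v in vertices}   # unit vertex weights
    net_weight = [1] * n_cols                  # unit hyperedge weights
    return vertices, nets, vertex_weight, net_weight


# 3 x 4 pattern with seven nonzeros.
nonzeros = [(0, 0), (0, 2), (1, 0), (1, 1), (2, 1), (2, 2), (2, 3)]
vertices, nets, _, _ = column_net_hypergraph(3, 4, nonzeros)
print(nets)
```

A column with a single nonzero, such as the last column in the example, yields a hyperedge of size one, which can never be cut.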

FEHG has two input parameters: the similarity threshold to construct HCG, and the clustering threshold from (5). The values chosen for these parameters can have a large impact on the quality of the partitioning. We describe how to calculate the similarity threshold when the Jaccard Index is used for measuring the similarity between hyperedges.

The Clustering Coefficient (CC) is a graph theory measure determined by the degree to which a node clusters with other nodes of the graph or hypergraph. Different methods for finding CC in hypergraphs have been proposed [18]. Given a hypergraph \(H=(V,E)\), we define CC for a hyperedge \(e \in E\) as:

The CC of the hypergraph is calculated as the average CC over all hyperedges. We calculate CC at the start of the algorithm. As the structure of the hypergraph changes at each level of coarsening, we readjust its value instead of recalculation. As proposed in [11] to analyse Facebook social networks and theoretically investigated in [3] on sparse random intersection graphs, the clustering of nodes in hypergraphs is inversely correlated with average vertex degree. Based on this, we readjust CC’s value according to the variation of average vertex degree from one level of the coarsening to the next. Finally, CC value of the hypergraph is set as the similarity threshold at each coarsening level.

Figure 2a depicts the variation of the similarity threshold for each coarsening level of the test hypergraph CNR–2000. Both the readjusted value and the actual value are shown. The readjusted value provides a lower bound for the actual value and it is about \(50\,\%\) of its value from the third iteration onward, which is sufficient for feature reduction. In Fig. 2b, the percentage of the edges whose clustering coefficients are at least equal to the similarity threshold is shown along with the normalised variation of edge size and its standard deviation (STD). As the partitioner gets close to the coarsest hypergraph we have small average size of hyperedges (2.09) and small average vertex degrees (2.47) but larger vertex degree standard deviation (14.33); most of the vertices share very few hyperedges so clustering decisions are difficult. As we see, the automatic readjustment still catches the possible similarities. In general we achieve a cut size of 50 for CNR–2000.

Fig. 2. (a) Readjusted similarity threshold s for the test hypergraph CNR–2000 according to (6) compared to its recalculation at each coarsening level. (b) The percentage of the hyperedges whose CC is more than s and comparison to normalised edge size and its standard deviation (STD).

In our evaluation we found that variation of the similarity threshold has a higher impact on the quality of the partitioning than the clustering threshold. The reason is that hyperedges with higher CC value are more likely to cluster with others and they get a higher coefficient in (4) and tend to be included in the final reduced information system in (5). This reduces the effects of clustering threshold variations. Therefore, we remove each \(e_{R} \in E^{R}\) of unit size (refer to Sect. 4.1) and we set the clustering threshold to 0 in (5) for the others. For example, edge partitions \(C_2\) and \(C_4\) are removed from the table in Fig. 1c.

For all tested hypergraphs, the algorithms are each run 20 times and the average and best cut sizes are reported. Simulations are done with \(2\,\%\) imbalance tolerance in (1) and the number of parts is taken from \(\left\{ 2,4,8,16,32 \right\} \). The final imbalance achieved by the algorithms is not reported because the balancing requirement was always met by all algorithms. The simulation results as well as the standard deviation from the average cut are reported in Table 2. The latter could be used as a measure of the robustness of the algorithms, specifically when they give close partitioning quality. All values except the standard deviation are normalised with the best cut generated among all algorithms. According to the results, FEHG performs very well compared to Zoltan and hMetis and it is competitive with PaToH. For example in NotreDame and Patents-Main, FEHG achieves a superior quality improvement compared to Zoltan and hMetis.

In another simulation, we investigate whether relying only on a vertex similarity metric is enough to achieve better partition quality. When two vertices are matched, we refer to their similarity degree as the roughness of the match and it is calculated using (3). Matching pairs of vertices with higher similarity degree at each level of coarsening means higher average roughness of the matched vertices in that level. According to the algorithms that investigate vertex similarity metrics, an algorithm would be better if it yields higher average roughness for levels of coarsening compared to the others [21]. Furthermore, the decision about the vertex similarity is made locally in those algorithms without collecting global information. In FEHG, we refer to the average roughness of core vertices as core roughness.

We consider two scenarios for our test while we find a pair match for non–core vertices. In the first one, a match is allowed for a non–core vertex if the roughness of the match is at least equal to the core roughness. In the second scenario we allow a non–core vertex to be matched to any vertex as long as the roughness of the match is greater than zero. In the first scenario, the emphasis is on finding vertices with higher similarity, as is the case for similarity metric based methods, and it guarantees higher average roughness compared to the second scenario during levels of coarsening. The test is done on the hypergraphs and the result for CNR–2000 is reported in Fig. 3. According to the results, the first scenario causes high fluctuations of vertex degree standard deviations while the second scenario produces a smooth change. We achieve average cuts of 490 and 110 for CNR–2000 for the first and second scenarios, respectively.

The agglomerative clustering of Zoltan and hMetis gives 25.54 and 8.89 times worse quality, respectively. PaToH also produces a good average quality of 81 using absorption clustering using pins and hyperedge clustering. The variations in vertex degree or its standard deviation cause problems for clustering algorithms, making it hard to make good clustering decisions because of the increased conflicts between local and global decisions. Consequently, finding vertices with higher similarity for matching cannot be relied on for every hypergraph and it does not always give a better partitioning cut. In addition, gathering some global information before making clustering decisions can give a major quality improvement and decreases the unexpectedness of the partitioning cut as depicted in Table 2.
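The two acceptance rules for matching non–core vertices compared in this experiment can be written down compactly. The sketch below is illustrative only: it takes the roughness values of the core matches as given, computes core roughness as their mean, and applies the scenario-specific test. The function names are hypothetical.

```python
def core_roughness(core_match_roughness):
    """Average roughness over the matches made among core vertices."""
    if not core_match_roughness:
        return 0.0
    return sum(core_match_roughness) / len(core_match_roughness)


def match_allowed(roughness, threshold, scenario):
    """Scenario 1: roughness must reach the core roughness.
    Scenario 2: any match with non-zero roughness is accepted."""
    if scenario == 1:
        return roughness >= threshold
    return roughness > 0


threshold = core_roughness([0.5, 0.75, 1.0])
for candidate in (0.25, 0.8):
    print(candidate, match_allowed(candidate, threshold, 1),
          match_allowed(candidate, threshold, 2))
```

A candidate match with roughness 0.25 is rejected under the first scenario but accepted under the second, which is why the second scenario leaves fewer non–core vertices unmatched at each level.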

6 Conclusions and Future Work

We have proposed a multi–level hypergraph partitioning algorithm based on feature extraction and attribute reduction using rough set clustering techniques. The algorithm clusters hyperedges using different similarity metrics and a similarity threshold and tries to remove less important hyperedges. An automated calculation of this similarity threshold is proposed. The hypergraph is then transformed into a reduced information system. Employing the idea of rough set clustering, the algorithm calculates the partitioning of the objects in the reduced information system based on indiscernibility relations and core sets of vertices with globally high similarities. Then cores are searched locally for vertex matchings. Evaluating the algorithm in comparison to the state-of-the-art algorithms has shown improvements in quality of the partitioning for tested hypergraphs. Future work is to implement parallel versions of the algorithm. Using a special distribution of vertices and hyperedges among processors and the ideas of rough set theory, we are focusing on proposing a scalable partitioner.

References

Aykanat, C., Cambazoglu, B.B., Uçar, B.: Multi-level direct K-way hypergraph partitioning with multiple constraints and fixed vertices. J. Parallel Distrib. Comput. 68(5), 609–625 (2008)

Bazan, J., Szczuka, M.S., Wojna, A., Wojnarski, M.: On the evolution of rough set exploration system. In: Tsumoto, S., Słowiński, R., Komorowski, J., Grzymała-Busse, J.W. (eds.) RSCTC 2004. LNCS (LNAI), vol. 3066, pp. 592–601. Springer, Heidelberg (2004)

Bloznelis, M., et al.: Degree and clustering coefficient in sparse random intersection graphs. Ann. Appl. Probab. 23(3), 1254–1289 (2013)

Catalyurek, U.V., Aykanat, C.: Hypergraph-partitioning-based decomposition for parallel sparse-matrix vector multiplication. IEEE Trans. Parallel Distrib. Syst. 10(7), 673–693 (1999)

Catalyurek, U., Boman, E., Devine, K., Bozdag, D., Heaphy, R., Riesen, L.: Hypergraph-based dynamic load balancing for adaptive scientific computations. In: Parallel and Distributed Processing Symposium (IPDPS 2007), pp. 1–11 (2007)

Curino, C., Jones, E., Zhang, Y., Madden, S.: Schism: a workload-driven approach to database replication and partitioning. Proc. VLDB Endow. 3(1–2), 48–57 (2010)

Davis, T.A., Hu, Y.: The University of Florida sparse matrix collection. ACM Trans. Math. Softw. 38(1), 1 (2011)

Devine, K.D., Boman, E.G., Heaphy, R.T., Bisseling, R.H., Catalyurek, U.V.: Parallel hypergraph partitioning for scientific computing. In: Proceedings of 20th International Parallel and Distributed Processing Symposium (IPDPS 2006). IEEE (2006)

Ertöz, L., Steinbach, M., Kumar, V.: Finding clusters of different sizes, shapes, and densities in noisy, high dimensional data. In: SDM, pp. 47–58. SIAM (2003)

Fiduccia, C.M., Mattheyses, R.M.: A linear-time heuristic for improving network partitions. In: 19th Conference on Design Automation, pp. 175–181. IEEE (1982)

Foudalis, I., Jain, K., Papadimitriou, C., Sideri, M.: Modeling social networks through user background and behavior. In: Frieze, A., Horn, P., Prałat, P. (eds.) WAW 2011. LNCS, vol. 6732, pp. 85–102. Springer, Heidelberg (2011)

Garey, M.R., Johnson, D.S.: Computers and Intractability, vol. 29. W.H. Freeman, New York (2002)

Heintz, B., Chandra, A.: Beyond graphs: toward scalable hypergraph analysis systems. SIGMETRICS Perform. Eval. Rev. 41(4), 94–97 (2014)

Hendrickson, B., Kolda, T.G.: Graph partitioning models for parallel computing. Parallel Comput. 26(12), 1519–1534 (2000)

Hu, T., Liu, C., Tang, Y., Sun, J., Xiong, H., Sung, S.Y.: High-dimensional clustering: a clique-based hypergraph partitioning framework. Knowl. Inf. Syst. 39(1), 61–88 (2014)

Karypis, G., Aggarwal, R., Kumar, V., Shekhar, S.: Multilevel hypergraph partitioning: applications in VLSI domain. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 7(1), 69–79 (1999)

Kayaaslan, E., Pinar, A., Çatalyürek, Ü., Aykanat, C.: Partitioning hypergraphs in scientific computing applications through vertex separators on graphs. SIAM J. Sci. Comput. 34(2), A970–A992 (2012)

Latapy, M., Magnien, C., Vecchio, N.D.: Basic notions for the analysis of large two-mode networks. Soc. Netw. 30(1), 31–48 (2008)

Pawlak, Z.: Rough Sets: Theoretical Aspects of Reasoning about Data. Kluwer Academic Publishers, Norwell (1991)

Tian, Z., Hwang, T., Kuang, R.: A hypergraph-based learning algorithm for classifying gene expression and arrayCGH data with prior knowledge. Bioinformatics 25(21), 2831–2838 (2009)

Trifunovic, A.: Parallel algorithms for hypergraph partitioning. Ph.D. thesis, University of London (2006)

Vastenhouw, B., Bisseling, R.H.: A two-dimensional data distribution method for parallel sparse matrix-vector multiplication. SIAM Rev. 47(1), 67–95 (2005)

Acknowledgments

This work is supported by the EU FP7 Marie Curie Initial Training Network “SCALUS — Scaling by means of Ubiquitous Storage” under grant agreement No.238808. We thank the Efficient Computing and Storage Group at Johannes Gutenberg Universität Mainz, Germany for their help and support.

© 2015 Springer-Verlag Berlin Heidelberg

Cite this paper

Lotfifar, F., Johnson, M. (2015). A Multi–level Hypergraph Partitioning Algorithm Using Rough Set Clustering. In: Träff, J., Hunold, S., Versaci, F. (eds) Euro-Par 2015: Parallel Processing. Euro-Par 2015. Lecture Notes in Computer Science(), vol 9233. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-48096-0_13

DOI: https://doi.org/10.1007/978-3-662-48096-0_13

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-48095-3

Online ISBN: 978-3-662-48096-0
