Abstract

In the six years since I first researched university research rankings and bibliometrics, much of the world has suffered an economic downturn that has affected research funding. At the same time, open access journals, institutional repositories and self-published material on the web have opened up access to scholarly output and led to new terminology and output measurements. University rankings have expanded beyond the national consumer market to become a research area of global interest for scientometric scholars. Librarians supporting scholarly research have an obligation to understand the background, metrics, sources and rankings so that they can advise their researchers and their institutions.

This chapter updates an article in Taiwan’s Evaluation in Higher Education journal (Pagell 2009) based on a presentation at Concert (Pagell 2008). It includes a brief history of scholarly output as a measure of academic achievement. It focuses on the intersection of bibliometrics and university rankings by updating both the literature and the rankings themselves. Librarians should find it relevant and understandable.

Adapted from Pagell, Ruth A., “University Research Rankings: From Page Counting to Academic Accountability” (2009). Research Collection Library. Paper 1. http://ink.library.smu.edu.sg/library_research/1; Evaluation in Higher Education (高教評鑑) 3:1 (June 2009): 33–63.

Ruth A. Pagell has extensive international experience, writing and speaking on topics such as international business, bibliometrics and information literacy. After working in US libraries, she was founding librarian for the Li Ka Shing Library, Singapore Management University. She currently teaches in the LIS program at University of Hawaii. Email: rpagell@hawaii.edu



Introduction

One result of the internationalization of the education industry is the globalization of university rankings, with a focus on research output. Governments, intergovernmental organizations and funding bodies have shown a growing concern for research accountability, which has moved university rankings from a national to a worldwide playing field.

This chapter examines three research streams underlying today’s university research rankings and demonstrates their impact on those rankings, to help readers understand “… how an arguably innocuous consumer concept has been transformed into a policy instrument, with wide ranging, intentional and unintentional, consequences for higher education and society” (Hazelkorn 2007).

National, Regional and International Policy and Accountability

The increased ability to measure and analyze scholarly output has drawn governmental and funding agencies further into the rankings arena. They seek methodologies that will measure universities’ accountability to their funding sources and their constituencies. Government concern about the spending and impact of its research monies is not new. In 1965, U.S. President Johnson issued a policy statement to ensure that federal support “of research in colleges and universities contribute more to the long run strengthening of the universities and colleges so that these institutions can best serve the Nation in the years ahead” (Johnson 1965).

A growing number of countries have initiated research assessment exercises, either directly or through evaluation bodies. The benchmark is the United Kingdom Research Assessment Exercise (RAE), initiated in 1992, which used peer review. Newer initiatives by the Higher Education Funding Council for England incorporate bibliometric measures of research output and consider measurements of research impact (van Raan et al. 2007; Paul 2008; HEFCE 2013). An OCLC pilot study (Key Perspectives 2009) looks at five countries that have taken different approaches to assessment: the Netherlands, Ireland, the United Kingdom, Denmark and Australia. Hou et al. (2012) examine the higher education excellence programs in four Asian countries: China, Korea, Japan and Taiwan.

Other active agencies are the Higher Education Evaluation and Accreditation Council of Taiwan (HEEACT 2013), the University Grants Committee of Hong Kong (2013) and the Australian Research Quality Framework (Excellence in Research 2013). Most of these incorporate some form of bibliometrics into their evaluation methodology. Italy introduced performance-related funding in 2009 (Abbott 2009), establishing the National Research Council (CNR 2013). Accompanying the new Italian initiative is a series of articles examining many aspects of rankings and productivity (Abramo et al. 2011a, b, 2012, 2013a, b).

Europe has been active in tracking academic rankings at a multi-national level. A group of experienced rankers and ranking analysts, who first met in 2002, created the International Ranking Expert Group (IREG 2013), now called the International Observatory on Rankings and Excellence. In 2006, the Group met in Berlin and issued the Berlin Principles for ranking colleges and universities. The UNESCO-European Centre for Higher Education in Bucharest, Romania and the Institute for Higher Education Policy (IHEP), an independent group based in Washington D.C., co-hosted the meeting. The 16 Berlin Principles for rankings and league tables are grouped into four categories:

A. Purposes and Goals of Rankings
B. Designing and Weighting Indicators
C. Collection and Processing of Data
D. Presentation of Ranking Results

The guidelines aim to ensure that “those producing rankings and league tables hold themselves accountable for quality in their own data collection, methodology, and dissemination” (Bollag 2006; IHEP 2006). As a follow-up, IHEP (2007) issued an evaluation of various existing ranking systems.

The key findings from the proceedings of the three assessment conferences are published in the UNESCO-CEPES journal Higher Education in Europe (“From the Editors,” 2002; Merisotis and Sadlak 2005; “Editorial,” 2007).

The OECD Feasibility Study for the International Assessment of Higher Education Learning Outcomes (AHELO) gauges “whether an international assessment of higher education learning outcomes that would allow comparisons among HEIs across countries is scientifically and practically feasible.” Planning began in 2008, and the final results are presented in several publications (OECD 2013). Seventeen countries representing five continents are included in the study.

Incorporating both the Berlin Principles and the AHELO learning outcomes, the European Commission, Directorate General for Education and Culture, issued a tender to “look into the feasibility of making a multi-dimensional ranking of universities in Europe, and possibly the rest of the world too” (European Commission 2008). A new system, U-Multirank, scheduled for launch in 2014, is the outcome of the feasibility study (van Vught and Ziegele 2011).

At the university level, rankings have been viewed as a game (Dolan 1976; Meredith 2004; Henshaw 2006; Farrell and Van Der Werf 2007). University administrators play the game by making educational policy decisions based on what will improve their standing in the rankings that matter to them. In a 2006 survey conducted under the auspices of the OECD, 63 % of responding leaders and university administrators from 41 countries reported taking strategic, organizational, academic or managerial actions in response to their rankings. The results of this survey are available in a variety of publications (Hazelkorn 2008).

Historical Literature Review

The appearance in 1983 of U.S. News and World Report ratings of U.S. colleges based on a survey of college presidents (Solorzano and Quick 1983) marked the beginning of the modern era in rankings, shifting the emphasis from small studies in scholarly publications to a national comparison for a general audience. By 1990, the magazine’s rankings included university-provided student and faculty measures to accompany the initial “reputational” survey of college presidents. Governments and scholars, however, had been publishing quality or research rankings for over 100 years. Salmi and Saroyan (2007) examine rankings and public accountability and also identify statistical annual reports, published by the Commission of the US Bureau of Education from 1870 to 1890, that classified institutions.

Pagell and Lusk (2002) discuss a series of early scholarly business school rankings. The earliest work they cite, Raymond Hughes’ “A Study of the Graduate Schools of America”, published on behalf of the American Council on Education, rated 19 graduate departments in the U.S., primarily at Ivy League private universities and the major mid-western state universities. All but three of his initial 19 appear on at least one of this article’s lists of the top 30 worldwide universities today (see Table 8 below). Magnoun (1966) compares additional studies using Hughes’ methodology and analyzes the consistencies and changes over the 40-year interval. He emphasizes the importance of the rankings to university administration and the importance of quality graduate programs to the country as a whole. Other studies that Pagell and Lusk examine focus on individual departments and count pages, publications and weighted page counts. The American Educational Research Association sponsored research rankings in the 1970s (Blau and Margulies 1974; Schubert 1979). Kroc introduces citation analysis for schools of education and analyzes early challenges in using the Social Science Citation Index (SSCI), many of which persist today (Kroc 1984).

These earlier rankings focused on specific departments in a limited number of U.S. universities. While scholarly rankings in today’s higher education environment are global, individual disciplines continue to use their own rankings. For example, Jin published two studies on economics rankings in East Asia relying on EconLit and page counts (Jin and Yau 1999; Jin and Hong 2008). The economics open access repository RePEc contains numerous rankings using multiple metrics, based on authors’ deposits in the repository (IDEAS 2013).

No one ranking is “correct”. However, there is consistency at the top of the rankings. In the scholarly surveys this paper cites, spanning 1925 to 2014 and employing peer review and a variety of counting methodologies across different subject categories, only a limited number of schools have been ranked number one, with Harvard leading the way.

Using Bibliometric Methodology

Pritchard (1969) coined the term “bibliometrics” to mean the application of quantitative analysis and statistics to scholarly outputs, such as journal articles, citation counts and journal impact. September 1978 marked the debut of the journal Scientometrics. This broader concept refers to the quantitative features and characteristics of science and scientific research; Hood and Wilson (2001) attribute it to Vasily V. Nalimov. They examine the similarities and differences among bibliometrics, scientometrics, infometrics and informetrics. Webometrics is now considered a different approach to research rankings. First coined by Almind and Ingwersen (1997), the term refers to applying bibliometric techniques to new web metrics. Webometrics entered the mainstream with the December 2004 special issue of Journal of the American Society for Information Science and Technology. Table 1 tracks the leading universities and countries producing bibliometric literature. 1969 marked the first year that WOS included articles on bibliometrics, and the number has increased every year since. Papers on “bibliometrics and university rankings” make up about 10 % of all bibliometric papers.

Since the first article on bibliometrics appeared in 1969, WOS has indexed 4474 articles and SCOPUS 5760, with almost 34,000 citations in WOS and 54,800 in SCOPUS as of October 2, 2013 (using Bibliometric* as a topic in WOS and a keyword in SCOPUS). Fig. 1 illustrates growth by decade.

No matter what term is used, the rankings are only as good as one’s understanding of the underlying measurements described below. Anyone using a ranking should check its documentation and methodology. The earlier rankings used peer review, now referred to as “reputation”, and countable output such as articles in a group of “top” journals, proceedings, number of actual pages, number of normalized pages based on characters per page, or doctoral degrees by school (Cleary and Edwards 1960). Some give full credit to each author, some distribute a share to each school according to its authors, and a few count only the first author. Peer review may cover one to three years; other output measures cover one year to decades. Article counts may include book reviews, editorials and comments. All of these methods have their strengths and weaknesses. To select the international research university ranking that reflects an organization’s needs today, it is necessary to understand the bibliometrics that ranking uses.
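
The three author-counting conventions just described can give different credit for the same set of papers. The Python sketch below is illustrative only: the papers and institution names are hypothetical, and each paper is modeled simply as the list of its authors’ institutions in byline order, which is an assumption about how affiliation data would be prepared.

```python
from collections import defaultdict

# Hypothetical data: each paper is the list of its authors' institutions,
# one entry per author, in byline order.
papers = [
    ["Univ A", "Univ B", "Univ B"],
    ["Univ B"],
    ["Univ C", "Univ A"],
]


def full_counting(papers):
    """Every institution appearing on a paper receives one full credit."""
    credit = defaultdict(float)
    for affiliations in papers:
        for inst in set(affiliations):
            credit[inst] += 1.0
    return dict(credit)


def fractional_counting(papers):
    """Each author carries 1/n of the paper; that share goes to the author's institution."""
    credit = defaultdict(float)
    for affiliations in papers:
        share = 1.0 / len(affiliations)
        for inst in affiliations:
            credit[inst] += share
    return dict(credit)


def first_author_counting(papers):
    """Only the first author's institution receives credit."""
    credit = defaultdict(float)
    for affiliations in papers:
        credit[affiliations[0]] += 1.0
    return dict(credit)


for method in (full_counting, fractional_counting, first_author_counting):
    scores = method(papers)
    print(method.__name__, {k: round(v, 2) for k, v in sorted(scores.items())})
```

With these invented papers, full counting ties Univ A and Univ B at 2.0, fractional counting puts Univ B ahead (about 1.67 versus 0.83), and first-author counting ties all three at 1.0. Fractional credit always sums to the number of papers, whereas full counting can exceed it.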

Eugene Garfield’s proposal for a citation index for science (Garfield 1955) laid the groundwork for the change from qualitative and manually countable scholarly output to the new era of citation metrics. Garfield originally positioned citation indexes as a subject approach to literature and as a way to check the validity of an article through its cited references. In 1963, he wrote about the value of using citation data to evaluate publications (Garfield and Sher 1963). By 1979, in an article in volume one of Scientometrics, he raised concerns about using citations as an evaluation tool that today’s researchers are still examining, such as negative citations and self-citations, the counting of multiple authors and the disambiguation of author names (Garfield 1979).

Today bibliometrics is a primary tool for organizations, such as universities and government bodies, to measure research performance. Widespread use of bibliometrics is possible because of easy access to articles, citations and analytical tools in both Thomson Reuters Scientific’s Web of Science (WOS) and Elsevier’s Scopus. Many individuals turn to Google Scholar.

Measurement in today’s academic environment is evidence-based, and, as Leung (2007) notes, “There is now mounting pressure all over the world for academics to publish in the most-cited journals and rake in as many citations to their work as possible.”

Individuals, researchers, departments, universities and outside bodies are all counting output. Departments employ bibliometrics to evaluate faculty for hiring, tenure and promotion decisions, using number of publications and citation counts, journal impact and additional tools such as the H-Index. Academic output such as articles and citations provides the data for internal and external benchmarking. Universities are making greater use of bibliometrics to report output to governments and stakeholders. Country-level benchmarking and comparisons use bibliometrics as well.

International data in any field pose problems of standardization and cross-country comparison. University research rankings that use both quality measures, such as peer review, and metrics compound these issues. Usher (2009) notes that “as rankings have spread around the world, a number of different rankings efforts have managed to violate every single one of [the] rankings principles.” Federkeil (2009) adds that “The only field typified by valid international indicators is research in the natural and life sciences….” He also notes that there is no “valid concept for a global ranking of teaching quality…”

Even if rankers agree to use a standard source for tracking articles or citations, there is no consensus on how to count multiple authors. Abramo et al. (2013b) studied the multi-author issue and suggested a further weighting based on how much each author contributed to the research. Other counting questions concern authors who have changed universities, and whether to use a total figure, which favors large institutions, or a per-faculty count, which favors smaller institutions. A per-faculty measure, however, has issues of its own: whom to count as faculty and how to calculate full-time equivalents (FTE).
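
The size effect can be made concrete with a small sketch. The three institutions, their article counts and their FTE figures below are entirely hypothetical; the point is only that dividing by faculty can reverse an ordering based on totals.

```python
# Hypothetical institutions: (name, articles in the counting window, faculty FTE).
institutions = [
    ("Large U", 5000, 2500.0),
    ("Medium U", 1800, 600.0),
    ("Small U", 450, 100.0),
]


def rank_by(rows, key):
    """Return institution names ordered from the highest to the lowest key value."""
    return [row[0] for row in sorted(rows, key=key, reverse=True)]


# Total output favors size; output per FTE favors small, productive units.
by_total = rank_by(institutions, key=lambda row: row[1])
by_per_fte = rank_by(institutions, key=lambda row: row[1] / row[2])

print("Total output:  ", by_total)
print("Output per FTE:", by_per_fte)
```

With these invented figures the two orderings are exact reversals. The per-FTE result is also sensitive to the denominator: if Small U counted 150 FTE rather than 100, its figure would fall from 4.5 to 3.0 articles per FTE and tie Medium U.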

It is necessary to understand the strengths and weaknesses of each of the bibliometric tools when analyzing and applying them to real world situations. It is important to check the methodology, including definitions and weightings, when comparing rankings or doing time series comparisons with the same tool. Table 2 organizes the most commonly used bibliometrics for research assessment by what they measure and which sources use them.

The H-Index is a measure of quality relative to quantity based on papers and citations within a given database: it is the largest number h such that h of an author’s papers have at least h citations each. For example, if an author has 44 papers in SCOPUS with 920 citations and the 16th most-cited paper has 16 citations, the H-Index is 16; if the same author has 36 papers in WOS with 591 citations and the 13th most-cited paper has 13 citations, the H-Index in WOS is 13. The same author also created a profile in Google Scholar, which tracks articles and citations. There the author has 65 publications and 1921 citations, and the 21st most-cited article has 21 citations, for an H-Index of 21.
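
The definition can be checked with a few lines of Python. The citation counts below are hypothetical, chosen only to show that the same author can receive different H-Index values when one database indexes fewer papers or citations than another.

```python
def h_index(citations):
    """Largest h such that h of the papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for position, cites in enumerate(ranked, start=1):
        if cites >= position:
            h = position
        else:
            break
    return h


# Hypothetical citation counts for one author's papers as seen in two databases.
database_a = [33, 20, 12, 9, 7, 6, 2, 1]
database_b = [30, 18, 9, 5, 4, 1]

print(h_index(database_a))  # 6
print(h_index(database_b))  # 4
```

Sorting first means the loop can stop at the first paper whose citations fall below its rank; total citations beyond that point do not affect h.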

Other approaches use weighted averages or scores, output per capita, and output normalized by subject or country norms. They may also adjust for multiple authors from different organizations. Metrics should be stable and consistent so that they can measure changes over time, and they should be replicable by users.
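
Normalizing by subject norms can be sketched as follows. This is a minimal version of a mean normalized citation score, the kind of field-normalized indicator associated with CWTS at Leiden; the field baselines and publication lists are hypothetical, and real implementations also normalize by publication year and document type.

```python
from statistics import mean

# Hypothetical world baselines: average citations per paper in each field
# (assumed to be for the same publication year and document type).
field_baseline = {"cell biology": 20.0, "mathematics": 4.0}

# Hypothetical publication lists: (field, citations received).
bio_institute = [("cell biology", 30), ("cell biology", 10)]
math_department = [("mathematics", 6), ("mathematics", 8)]


def raw_mean_citations(papers):
    """Plain average citations per paper, ignoring field differences."""
    return mean(cites for _, cites in papers)


def mean_normalized_citation_score(papers, baseline):
    """Average ratio of each paper's citations to its field's expected citations.
    A value of 1.0 means 'cited at the world average for its fields'."""
    return mean(cites / baseline[field] for field, cites in papers)


for name, papers in [("bio_institute", bio_institute), ("math_department", math_department)]:
    print(name, raw_mean_citations(papers),
          mean_normalized_citation_score(papers, field_baseline))
```

On raw citations the biology institute looks almost three times as strong (20 versus 7 citations per paper), but after field normalization the mathematics department scores higher (1.75 versus 1.0), because citations are scarcer in mathematics.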

One of the most controversial metrics is the Journal Impact Factor from Thomson Reuters’ Journal Citation Reports (JCR) (Werner and Bornmann 2013). Concern among publishers, editors and researchers about the overuse of this metric in evaluating faculty led to DORA, the San Francisco Declaration on Research Assessment (San Francisco 2013), the outcome of the December 2012 meeting of the American Society for Cell Biology. The concern is not only the misuse of the impact factor as a rating instrument but also its effect on scientific research. Alberts (2013) notes that the impact factor encourages publishers to favor high-impact disciplines such as biomedicine and discourages researchers from taking on risky new work, which takes time to publish.
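
The standard two-year impact factor for a census year Y divides the citations received in Y by items the journal published in Y-1 and Y-2 by the number of citable items it published in those two years. The sketch below applies that definition to a hypothetical journal; the counts are invented.

```python
def impact_factor(year, citations_by_pub_year, citable_items_by_pub_year):
    """Two-year journal impact factor for census year `year`.

    citations_by_pub_year: citations received during `year`, keyed by the
        publication year of the cited items.
    citable_items_by_pub_year: number of citable items published each year.
    """
    window = (year - 1, year - 2)
    cites = sum(citations_by_pub_year.get(y, 0) for y in window)
    items = sum(citable_items_by_pub_year.get(y, 0) for y in window)
    if items == 0:
        raise ValueError("no citable items in the two-year window")
    return cites / items


# Hypothetical journal: citations received in 2012, keyed by the cited item's publication year.
cites_2012 = {2011: 300, 2010: 450, 2009: 500}
items = {2011: 120, 2010: 130, 2009: 110}

print(impact_factor(2012, cites_2012, items))  # (300 + 450) / (120 + 130) = 3.0
```

The 500 citations to 2009 items are ignored, which illustrates the short window behind the concern that slow-to-cite work is undervalued. In JCR the numerator counts citations to all items, while the denominator counts only citable items.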

JCR is being challenged by newer measures of journal quality that are appearing in university ranking scores. These include the Eigenfactor, SNIP and SJR, all of which are freely available on the web. The Bergstrom Lab (2013) at the University of Washington developed the Eigenfactor, under which journals are considered influential if they are cited often by other influential journals. The Eigenfactor is now incorporated into Journal Citation Reports. SNIP (Source Normalized Impact per Paper), from Leiden’s CWTS, measures contextual citation impact by weighting citations according to the total number of citations in a subject field: a single citation is given higher value in subject areas where citations are less likely, and vice versa. SCImago’s SJR2 recognizes the value of citations from closely related journals (Journal M3trics 2012).
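
The idea that a journal is influential when other influential journals cite it is an eigenvector calculation, which can be approximated by repeated redistribution of scores. The sketch below is a simplified PageRank-style version, not the published Eigenfactor algorithm, which adds refinements such as a five-year citation window and exclusion of journal self-citations. The citation counts are hypothetical, and the 0.85 damping factor is an assumed conventional value.

```python
def influence_scores(cites, damping=0.85, tol=1e-12, max_iter=1000):
    """PageRank-style influence scores for journals.

    cites[a][b] is the number of citations journal a gives to journal b
    (self-citations omitted). Every journal must cite at least one other.
    Returns a dict of scores that sum to 1.
    """
    journals = list(cites)
    n = len(journals)
    out_totals = {a: sum(cites[a].values()) for a in journals}
    scores = {j: 1.0 / n for j in journals}
    for _ in range(max_iter):
        # The damping term gives every journal a small baseline share.
        new = {j: (1 - damping) / n for j in journals}
        for a in journals:
            for b, count in cites[a].items():
                # Journal a passes its current influence to the journals it cites,
                # in proportion to how often it cites each one.
                new[b] += damping * scores[a] * count / out_totals[a]
        if sum(abs(new[j] - scores[j]) for j in journals) < tol:
            return new
        scores = new
    return scores


# Hypothetical citation counts between three journals.
citations = {
    "Journal A": {"Journal B": 10, "Journal C": 10},
    "Journal B": {"Journal A": 30, "Journal C": 5},
    "Journal C": {"Journal A": 20, "Journal B": 2},
}

scores = influence_scores(citations)
for journal, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{journal}: {score:.3f}")
```

Journal A comes out on top because both other journals direct most of their citations, and therefore most of their influence, to it. A raw citation count would not distinguish a citation from an influential journal from one coming from an obscure journal.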

New tools using webometrics and altmetrics, which incorporate social media, question the old model of scholarly impact (Konkiel 2013). The growing body of literature on webometrics and altmetrics is beyond the scope of this article. Björneborn and Ingwersen, in a special webometrics issue of Journal of the American Society for Information Science and Technology, warned against taking the analogy between citation analyses and link analyses too far (Björneborn and Ingwersen 2004). However, we can no longer ignore the role of the web in academic research.

Despite the rise of alternative measures of scientific output, Web of Science (WOS) and Scopus remain the two major English-language commercial bibliographic sources used by the research rankings. WOS is the current iteration of the original Science Citation Index. The entire suite of databases may include Science Citation Index (SCI-e, from 1900), Social Science Citation Index (SSCI, from 1900) and Arts & Humanities Citation Index (A&HCI, from 1975). Other databases include Conference Proceedings and Books in Sciences and Social Sciences. An institution can subscribe to any or all of the databases, for as many years as it can afford. WOS has two search interfaces: General Search and Cited Reference Search. General Search includes only those articles that WOS indexes. Each record lists the article’s references and the number of times the article has been cited by other WOS publications. The rankings use General Search at the institutional level. Users can create their own rankings using analysis tools for authors, institutions or journals, and rank output by number of articles by subject area, document type, leading authors, source titles, institutions and countries. Each author’s information (institution, country) receives one count. Not all articles include addresses. An H-Index is also calculated. The Cited Reference Search includes all citations in WOS articles from any reference source and is primarily used for data on individual researchers. Until the end of 2011, Thomson provided a listing of highly cited papers that was also used in international rankings; this is now part of Essential Science Indicators, a separate subscription service. Thomson Reuters publishes Science Watch, covering metrics and research analytics (Thomson-Reuters 2013). Registration is required.

Elsevier’s SCOPUS began in late 2004. It includes citations received since 1996. The subscription includes all subject areas and document types for all the years that information is available, across four broad subject areas: Health Sciences, Physical Sciences, Life Sciences and Social Sciences. Added features are author and affiliation searches, analysis of citing journals, authors and institutions, and an H-Index. Elsevier publishes Research Trends, a quarterly newsletter that provides insights into research trends based on bibliometric analysis, with a range of articles on different aspects of ranking, from assessing the ARWU (Shanghai Rankings) to explaining the soon-to-be-released U-Multirank (Research Trends 2008).

Google Scholar is the third and most controversial source of citations. The search engine has improved since authors such as Peter Jacsó exposed the errors that limited its use for comparative evaluation (Jacsó 2008). Today’s Scholar has an advanced search feature to search by author name. It is better at differentiating dates from other numbers; it allows downloading to bibliographic software; it has its own metrics for measuring journal quality; and it now links to article citations on publisher pages. It still lacks the editorial control of WOS and Scopus and a controlled vocabulary of subject terms, and it provides no information on how articles and citations are included. Meho and Yang (2007) discuss the impact of data sources on citation counts and provide a balanced review, while pointing out the thousands of hours required for data cleansing when using Google Scholar.

All three systems have mechanisms for authors to identify themselves, their affiliations and their publications if they choose to do so. Researchers may also create a unique ID through ORCID (http://orcid.org).

WOS and SCOPUS understate the number of articles and citations, especially for universities that are not strong in the sciences. SCOPUS also understates citations for older authors, because it only includes citations from articles written after 1995. Google Scholar is not a viable alternative for quality university rankings. Table 3 compares features in WOS, SCOPUS and Google Scholar.

WOS and SCOPUS offer quality and standardization. However, they are slower to reflect changes in scientific communication.

Factors Limiting the Number of Articles and Citations

Scientific disciplines are the strength of WOS and SCOPUS. This is especially obvious in rankings such as SIR that include research and medical institutes. Subject matter, language, country of origin and publication format lead these databases to understate scholarly output in the social sciences and humanities, and put pressure on authors to publish in high-impact journals at the expense of local research. In these fields, much scholarly output appears in local journals or books in the local language. In an article published in Scientometrics and summarized in Nature, van Raan et al. (2011) reported that the language effect is important across multiple disciplines for articles published in German and French. While rankers now include separate listings for social sciences and humanities universities, these rankings are still based on WOS or SCOPUS publications.

Table 4 displays impact factors for selected fields from the 2012 Journal Citation Reports.

The highest-ranked journal in this selection, Nature Reviews Neuroscience, has an aggregate impact score of 35.9, and the highest-ranked in the social sciences, Journal of Economic Literature, has an aggregate impact score of 10.160. JCR is transparent, showing the calculations for the metrics. Worldwide rankings generally use Essential Science Indicators, which is a subset of Web of Science and requires a separate subscription.

A user can download the entire SNIP and SJR dataset (with minimal registration), allowing an analyst to sort by score, general topic, field or country, but the download does not document the underlying methodology. Among the 50 most impactful journals by SJR, only three were exclusively in the social sciences.

Table 4 in Pagell’s original version (2009) includes data on Chinese social science and humanities articles published abroad, from the Information Network of Humanities and Social Sciences in Chinese Universities. Those data have not been updated, and the Chinese Social Science Citation database ceased a couple of years ago. Table 5 provides data retrieved from WOS and SCOPUS on the same universities. The data estimate the total number of articles in social sciences and humanities in these databases and the number published in Chinese.

The situation for Asian institutions is more positive in the sciences. The U.S. National Science Board tracks the growth of non-U.S. science and engineering (S&E, including social science) output in Science and Engineering Indicators. Below are some of the 2012 data (Academic Research 2012):

- “The United States accounted for 26 % of the world’s total S&E articles in 2009, down from 31 % in 1999. The share for the European Union also declined, from 36 % in 1999 to 32 % in 2009.
- In Asia, average annual growth rates were high: for example, 16.8 % in China and 10.1 % in South Korea. In 2009, China, the world’s second-largest national producer of S&E articles, accounted for 9 % of the world total.
- Coauthored articles grew from 40 % of the world’s total S&E articles in 1988 to 67 % in 2010. Articles with only domestic coauthors increased from 32 % of all articles in 1988 to 43 % in 2010. Internationally coauthored articles grew from 8 to 24 % over the same period.
- U.S.-based researchers were coauthors of 43 % of the world’s total internationally coauthored articles in 2010.”

The appendix to the report includes data by region, country and broad discipline, but not by university.

Jin and Hong (2008), in their article ranking economics departments in East Asian universities, note that “when journal quality and sample periods were adjusted favorably to East Asian schools, the current research productivity of top-tier East Asian universities was found to be close to that of major state universities in the United States a decade ago.”

Figure 2 displays the percentage of articles in WOS from four Asia-Pacific countries. It shows the rapid growth of articles from Taiwan and Korea and the much slower growth for English-language Singapore and New Zealand. The number and percentage of Chinese articles in WOS are growing annually and are up to almost 4 %, similar to the share of articles in Japanese, French and German. The growth of peer-reviewed articles from the Asia-Pacific region, as indicated by data from WOS and Science and Engineering Indicators, should increase the number of Asia-Pacific universities appearing in the research rankings.

Contemporary International University Rankings or League Tables

Many countries publish national rankings, which are tools for their own students, faculty and funding bodies. An example is the ranking of top Chinese universities from the Research Center for Chinese Science Evaluation (RCCSE) at Wuhan University and the Network of Science & Education Evaluation in China (www.nseac.com). ARWU’s Resource page provides a listing of local rankings from 28 different countries. With the internationalization of education at an organizational level, institutions and even countries compete for students and researchers, and, not surprisingly, this has led to international ranking systems. Commercial sources, universities, evaluation authorities and scientometric research organizations compile today’s university rankings. The rankings may incorporate bibliometric data from Thomson Reuters or Scopus, peer review or “reputational surveys”. Some research institutions are creating new algorithms from bibliometric sources or from web metrics.

Some of the better-known rankings include:

- ARWU (Academic Ranking of World Universities) from 2003; Center for World-Class Universities at Shanghai Jiao Tong University (Center, 2013)
- National Taiwan University Rankings, “Performance Rankings of Scientific Papers of World Universities”, from 2012; formerly HEEACT (2007–2011) (National, 2012-)
- THE World University Rankings from 2011 (Times, 2013–14)
- Leiden Rankings from 2008; Center for Science and Technology Studies (CWTS, 2013)
- SIR (SCImago Institutional Rankings) from 2009 (SCImago, 2013)
- QS World University Rankings from 2004 (Quacquarelli Symonds 2013), republished by U.S. News and World Report as World’s Best Colleges and Universities from 2008

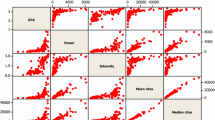

The University of Zurich (2013) presents a clear overview of the rankings listed above. Chen and Liao (2012) statistically analyze the data and calculate correlations among the rankings, especially ARWU, HEEACT (now NTU) and THE.
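
Correlations among league tables are often summarized with Spearman’s rank correlation. The sketch below is not necessarily the procedure Chen and Liao (2012) used. It assumes no tied ranks, compares only institutions that appear in both tables, and uses hypothetical university names and positions.

```python
def spearman_rho(ranking_a, ranking_b):
    """Spearman rank correlation between two rankings (dicts: institution -> rank).

    Only institutions present in both rankings are compared, and they are
    re-ranked 1..n within that shared set. Assumes no tied ranks.
    """
    common = [inst for inst in ranking_a if inst in ranking_b]
    n = len(common)
    if n < 2:
        raise ValueError("need at least two institutions in common")
    rank_a = {inst: i for i, inst in enumerate(sorted(common, key=ranking_a.get), start=1)}
    rank_b = {inst: i for i, inst in enumerate(sorted(common, key=ranking_b.get), start=1)}
    d_squared = sum((rank_a[inst] - rank_b[inst]) ** 2 for inst in common)
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Hypothetical positions of the same universities in two league tables.
table_x = {"Univ P": 1, "Univ Q": 2, "Univ R": 3, "Univ S": 4, "Univ T": 5}
table_y = {"Univ P": 1, "Univ Q": 3, "Univ R": 2, "Univ S": 5, "Univ T": 4, "Univ U": 6}

print(spearman_rho(table_x, table_y))  # 0.8
```

Re-ranking within the shared set matters because league tables rarely list identical institutions. A value of 1 means identical orderings and -1 means exact reversal.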

Shanghai Jiao Tong’s Center for World-Class Universities produces the Academic Ranking of World Universities (ARWU). It has the world’s top 500 and the top 200 in five fields and five subjects. Two indicators based on Nobel Prize winners, Thomson Reuters bibliometric data and articles published in Nature and Science make up the rankings for all but those schools strongest in the social sciences (Liu and Cheng 2005). ARWU is published and copyrighted by Shanghai Ranking Consultancy, which is not affiliated with any university or government agency. Billaut et al. (2010) take a critical look at ARWU, while DoCampo (2011) examines ARWU relative to university systems and country metrics.
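
Composite rankings of this kind typically scale each indicator against the best performer and then combine the indicators with fixed weights. The sketch below illustrates that general approach. The six indicator names and weights follow ARWU’s methodology as it is commonly described (Alumni 10 %, Award 20 %, HiCi 20 %, N&S 20 %, PUB 20 %, PCP 10 %) and should be checked against the current documentation. The raw values are invented, and the proportional redistribution of a skipped indicator’s weight is an assumption made for illustration.

```python
# Illustrative weights patterned on ARWU's six indicators; verify against
# the current ARWU methodology before relying on them.
WEIGHTS = {"alumni": 0.10, "award": 0.20, "hici": 0.20, "ns": 0.20, "pub": 0.20, "pcp": 0.10}

# Hypothetical raw indicator values for three institutions.
raw = {
    "Univ X": {"alumni": 40, "award": 50, "hici": 60, "ns": 80, "pub": 90, "pcp": 50},
    "Univ Y": {"alumni": 20, "award": 10, "hici": 30, "ns": 40, "pub": 100, "pcp": 40},
    "Univ Z": {"alumni": 10, "award": 0, "hici": 20, "ns": 5, "pub": 50, "pcp": 60},
}


def composite_scores(raw, weights, skip=None):
    """Weighted composite with every indicator scaled so the top institution scores 100.

    skip maps an institution to indicators left out for it; their weight is
    spread proportionally over the remaining indicators (an assumption).
    Assumes the best value of each indicator is positive.
    """
    skip = skip or {}
    top = {k: max(values[k] for values in raw.values()) for k in weights}
    totals = {}
    for inst, values in raw.items():
        used = [k for k in weights if k not in skip.get(inst, set())]
        weight_sum = sum(weights[k] for k in used)
        totals[inst] = sum(weights[k] / weight_sum * 100 * values[k] / top[k] for k in used)
    best = max(totals.values())
    # Rescale so the top institution's total is 100.
    return {inst: 100 * total / best for inst, total in totals.items()}


for label, skip in [("all indicators", None), ("Univ Z without N&S", {"Univ Z": {"ns"}})]:
    scores = composite_scores(raw, WEIGHTS, skip)
    print(label, {inst: round(s, 1) for inst, s in scores.items()})
```

With these invented values Univ X scores 100, Univ Y about 57.8 and Univ Z about 31.6. Leaving the Nature and Science indicator out for Univ Z, as a social-science-oriented school might be treated, raises its score to about 37.8.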

Similar to, but not as well known as, ARWU is the former HEEACT (Higher Education Evaluation and Accreditation Council of Taiwan) ranking, now published by National Taiwan University and renamed the NTU Ranking. It presents a worldwide ranking of 500 universities and rankings by six fields and 14 subjects. All the rankings are based on data from Thomson Reuters Essential Science Indicators.

CWTS at Leiden and SCImago expand the measurements used for rankings by experimenting with new metrics. Leiden University’s Center for Science and Technology Studies (CWTS) developed its own ranking system using bibliometric indicators from Thomson Reuters to measure the scientific output of 500 major universities worldwide. It uses no reputational data or data collected from the universities themselves. The researchers derive normalized scores from the existing data and continue to experiment with new measures. The web site provides an overall ranking, and the user can select field, region, country and indicator. These rankings receive little attention in the international press, but Leiden researchers publish the most papers about “bibliometrics”, based on searches in WOS and SCOPUS (searched 10 September 2013).

SIR, SCImago’s Institutions Rankings, uses metrics from SCOPUS. It ranks over 2,700 organizations, including research and medical institutions. Rankings are worldwide, by region and by country. Measures include output, the percentage of international collaboration, normalized citations and the percentage of articles published in the first quartile of their subject categories, as measured by SJR, SCImago’s own journal impact score. SCImago states that SIR reports are not league tables and that their goal is to give policy makers and research managers a tool to evaluate and improve their research results. All reports are in PDF format.

THE and QS have broader target markets, reaching beyond the research community. THE originally published its rankings jointly with QS; in 2010–2011 it began publishing its own rankings, powered by Thomson Reuters. It ranks 400 universities worldwide. Its ranking metrics include teaching, research, knowledge transfer and international outlook. There are rankings by region and broad subject area, as well as separate rankings by reputation and for universities less than 50 years old.

QS continues to publish its rankings, with less emphasis on evidence-based bibliometrics and more on qualitative “Academic reputation”. Recognizing the need to internationalize the market for North American college-bound students, U.S. News and World Report began republishing the then THE-QS rankings in 2008, and it continues to republish the QS rankings. According to Robert Morse (2010), U.S. News is working together with QS. A noticeable feature of the QS rankings is that 20 of the top 50 universities are from Commonwealth or former Commonwealth countries.

The Berlin Principles emphasize accountability for the rankers, not only for the institutions they rank. Enserink (2007), in his Science article “Who Ranks the University Rankers?”, examines the various international rankings. Other authors, writing in such prestigious publications as the Chronicle of Higher Education, Nature and Science, have examined the effect of rankings on university behavior (Declan 2007; Labi 2008; Saisana et al. 2011).

Tables 6 and 7 summarize the methodologies of the selected international rankings described above. They illustrate the differences in the metrics used and in the weights given to the various indicators. More information on methodology is available from the websites listed in the last row of the tables.

QS modifies its metric weightings for rankings by subject and field, putting even more weight on reputation in the social sciences and humanities.

In addition to the modifications of existing Thomson Reuters and Scopus metrics by Leiden and SCImago, the use of web data is now receiving serious consideration. The Consejo Superior de Investigaciones Científicas (CSIC) first issued the semi-annual Ranking Web of Universities in 2004. CSIC describes it as an independent, objective, free, open scientific exercise that provides reliable, multidimensional, updated and useful information about the performance of universities from all over the world, based on their web presence and impact. Built from publicly available web data, it includes almost 12,000 institutions, arranged by world, region and country. Rankings are based on impact (external links to…), presence, openness (including repositories) and one bibliometric element, excellence: the top 10 % of scholarly output, using SCImago data available for about 5,100 institutions, weighted at about 17 % (CSIC http://www.csic.es).

Comparing a variety of rankings and ranking criteria clarifies the importance of understanding their different metrics and weightings.
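To see why weightings matter, here is a minimal sketch of a weighted composite score. The two universities, their indicator scores (0 to 100) and both weighting schemes are hypothetical: one scheme is loosely research-heavy and the other reputation-heavy, and neither reproduces the actual weights of any ranking summarized in Tables 6 and 7.

```python
def composite(scores, weights):
    """Weighted sum of indicator scores; the weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[k] * scores[k] for k in weights)


def rank(universities, weights):
    """Return university names ordered from highest to lowest composite score."""
    return sorted(
        universities,
        key=lambda name: composite(universities[name], weights),
        reverse=True,
    )


# Hypothetical indicator scores on a 0-100 scale.
universities = {
    "University A": {"publications": 95, "citations": 90, "reputation": 60},
    "University B": {"publications": 70, "citations": 75, "reputation": 98},
}

# Hypothetical weighting schemes, not taken from any real ranking.
research_heavy = {"publications": 0.4, "citations": 0.4, "reputation": 0.2}
reputation_heavy = {"publications": 0.2, "citations": 0.2, "reputation": 0.6}

print(rank(universities, research_heavy))    # ['University A', 'University B']
print(rank(universities, reputation_heavy))  # ['University B', 'University A']
```

The underlying data never change; only the weights do, and the order flips (86 versus 77.6 under the first scheme, 73 versus 87.8 under the second).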

Table 8 takes the 2013 Shanghai Jiao Tong (ARWU) top ten as its basis and compares it with the top ten from the 2013 rankings of THE, QS, SCImago, Leiden and Webometrics and from the 2012 NTU ranking.

Eighteen universities make up the top ten on the four main lists (ARWU, NTU, THE and QS). Harvard, Stanford, MIT and Oxford are in the top ten on all of them, and Harvard leads the pack across all the rankings. It is interesting to note the similarities and differences among the schemes, and between the international lists and Hughes’s original 1925 rankings. Of Hughes’s top ten in 1925, only one school, the University of Wisconsin, is not in the top ten of any of the selected rankings, and 16 of the 19 are on at least one top 30 list. Internationalization brings UK universities into the top 20, and time has shifted the U.S. balance away from public institutions in the Midwest. Two top technology universities are in the top tier.

Another interesting feature of the tables is the difference between the SCImago and Leiden rankings for top papers, which highlights differences between the contents of SCOPUS and WOS. Webometrics’ top four are the same four universities that lead ARWU’s research rankings.

The evaluating bodies list universities by rank, based on an underlying scoring system. Table 9 shows why it is important to check the underlying scores to understand what it means to be ranked 1st or 100th. It gives the scores for the universities ranked 1st, 2nd and 100th and the percentage separation between 1st and 100th. For example, in the QS rankings the 1st and 100th universities are 31.6 % apart, while in the NTU rankings they are over 79 % apart.
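The separation figure is simple arithmetic. The sketch below assumes the percentage separation is the gap between the 1st and 100th scores expressed as a share of the 1st score, which is one natural reading of Table 9; the example scores are hypothetical, chosen only so that the first case matches the 31.6 % QS gap quoted above.

```python
def percent_separation(first_score, hundredth_score):
    """Gap between the 1st and 100th published scores, as a percentage of the 1st.

    Assumes this is how the separation is defined; other definitions are possible.
    """
    if first_score <= 0:
        raise ValueError("first_score must be positive")
    return (first_score - hundredth_score) / first_score * 100


# Hypothetical scores on a 0-100 scale (not the actual Table 9 values).
print(round(percent_separation(100.0, 68.4), 1))  # 31.6
print(round(percent_separation(100.0, 20.5), 1))  # 79.5
```

When a ranking compresses its top 100 into a narrow band, as in the first case, a difference of a few places reflects only a small difference in underlying score.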

Only U.S. and U.K. universities are in the top ten lists, but the number of Asian universities in the top 100 has been growing. Table 10 lists Asia’s top ten from four bibliometric rankings and Webometrics. The list includes 24 universities in total, and most of them are now ranked in the world’s top 100. The strongest showings are from Japan and China.

An interesting, specialized addition to scholarly rankings comes from Nature, which publishes a rolling-year ranking of Asia-Pacific institutions and countries based on its own publications. The ranking counts only total publications and uses two calculations to credit institutions when a paper has multiple authors. The University of Tokyo stands out in the Nature ranking for Asia, which is comparable to the rankings listed above but covers more countries (Nature 2013).
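Crediting institutions for multi-authored papers can be done in more than one way. The sketch below contrasts two common approaches: whole counting, where every participating institution gets full credit for a paper, and fractional counting, where each paper’s single unit of credit is split equally among its authors and passed to each author’s institution. That the Nature ranking uses exactly these two calculations is an assumption here, and the papers and institutions are hypothetical.

```python
from collections import Counter
from fractions import Fraction


def whole_counts(papers):
    """Each institution gets 1 for every paper with at least one of its authors."""
    counts = Counter()
    for affiliations in papers:
        for institution in set(affiliations):
            counts[institution] += 1
    return counts


def fractional_counts(papers):
    """Each paper carries 1 credit, split equally among its authors
    and credited to each author's institution."""
    counts = Counter()
    for affiliations in papers:
        share = Fraction(1, len(affiliations))
        for institution in affiliations:
            counts[institution] += share
    return counts


# Hypothetical papers: each list holds one affiliation per author.
papers = [
    ["Inst A", "Inst A", "Inst B", "Inst C"],
    ["Inst A"],
    ["Inst B", "Inst C"],
]

print(whole_counts(papers))
print(fractional_counts(papers))
```

Whole counting credits all three institutions equally with two papers each, while fractional counting gives Inst A 3/2 and Inst B and Inst C 3/4 each. Fractional totals always add up to the number of papers, so large collaborations do not inflate the overall count.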

Beyond Bibliometrics

The European Union’s new U-Multirank and the web-based Altmetrics deserve a mention in any 2013 discussion of global university rankings and metrics. U-Multirank is the outcome of the EU feasibility study mentioned above. It differs from most existing rankings in having no single overall score. According to an overview article in Research Trends (Richardson 2011), it is user-driven and designed to encourage diversity. The ranking components include research, education, international orientation, knowledge exchange and regional engagement. The 2014 U-Multirank will be based on 500 universities worldwide that have agreed to be included; the League of European Research Universities and most US and Chinese universities declined (Rabesandratana 2013). The complete study is available from CHERPA (Consortium for Higher Education and Research Performance Assessment). It is based on the CHE methodology used to rank German universities (van Vught and Ziegele 2011).

Altmetrics, which draw on social media and web content, are currently embedded in SCOPUS results: an Altmetric box pops up for articles that have a score. It is unclear whether altmetrics will be incorporated into global rankings, but they do add another dimension to impact.

Figure 3 shows the Altmetric score in SCOPUS for a 2013 PLoS One article on Altmetrics.

Conclusion

Today’s university rankings combine a variety of methodologies, including traditional research output data, peer review, and faculty or student input data, as well as non-bibliometric measures such as contribution to industry, employer reputation and international orientation. Researchers are also looking for new and different measures available from the web and social media.

Existing research rankings are as narrow as a few journal titles in a discipline or as broad as all publications in Web of Science or Scopus or all links to universities and research institutions on the web. Countries have their own national rankings. International organizations are seeking new approaches to measure learning outcomes and research impact.

Government organizations and funding bodies require measures that evaluate quality of scholarly output as well as quantity. Commercial and academic publishers and faculty researchers are creating new and more complex measuring tools to meet these needs. A higher level of accountability is expected from the research producers. A higher level of accountability is also needed by the consumers of the metrics used to evaluate the outputs.

Librarians need to be aware of the different measures, not only to use as evaluation tools for collection development but also to be able to explain the meaning of these rankings to their researchers and institutions and assist them in interpreting the growing mass of rankings and research in the field.

Despite the different methodologies, the external pressures and internal maneuvering, there are two somewhat conflicting conclusions: many of the historical best continue to dominate the top of the rankings, and many new faces, including a growing presence from Asia, are joining the elite.

References

Abbott A (2009) Italy introduces performance-related funding. Nature 460(7255):559. doi:10.1038/460559a

Abramo G, Cicero T, D’Angelo CA (2013a) The impact of unproductive and top researchers on overall university research performance. J Informetr 7(1):166–175. doi:10.1016/j.joi.2012.10.006

Abramo G, D’Angelo C, Cicero T (2012) What is the appropriate length of the publication period over which to assess research performance? Scientometrics 93(3):1005–1017. doi:10.1007/s11192-012-0714-9

Abramo G, D’Angelo C, Di Costa F (2011a) National research assessment exercises: a comparison of peer review and bibliometrics rankings. Scientometrics 89(3):929–941. doi:10.1007/s11192-011-0459-x

Abramo G, D’Angelo C, Di Costa F (2011b) A national-scale cross-time analysis of university research performance. Scientometrics 87(2):399–413. doi:10.1007/s11192-010-0319-0

Abramo G, D’Angelo CA, Rosati F (2013b) The importance of accounting for the number of co-authors and their order when assessing research performance at the individual level in the life sciences. J Informetr 7(1):198–208

Academic Research and Development (2012) Science and engineering indicators. www.nsf.gov/statistics/seind12/c5/c5s4.htm. Accessed 23 Sept 2013

Alberts B (2013) Impact factor distortions. Science 340(6134):787

Almind TC, Ingwersen P (1997) Informetric analyses on the World Wide Web: methodological approaches to ‘Webometrics’. J Doc 53(4):404–426. doi:10.1108/EUM0000000007205

Bergstrom Lab (2013) Eigenfactor.org: ranking and mapping scientific knowledge. http://www.eigenfactor.org/. Accessed 1 Oct 2013

Björneborn L, Ingwersen P (2004) Toward a basic framework for webometrics. J Am Soc Inf Sci Technol 55(14):121

Billaut JC, Bouyssou D, Vincke P (2010) Should you believe the Shanghai Rankings? Scientometrics 84(1):237–263

Blau PM, Margulies RZ (1974) A research replication: the reputations of American professional schools. Change Winter(74–75):42–47

Bollag B (2006) Group endorses principles for ranking of higher-education. The Chronicle Daily News, 9 June 2006. http://chronicle.com/article/Group-Endorses-Principles-for/25703. Accessed 25 Sept 2013

Center for World-Class Universities at Shanghai Jiao Tong University (2013) Academic Ranking of World Universities (ARWU) 2013. http://www.shanghairanking.com/index.html. Accessed 29 Sept 2013

Chen K, Liao P (2012) A comparative study on world university rankings: a bibliometric survey. Scientometrics 92(1):89–103

Cleary FR, Edwards DJ (1960) The origins of the contributors to the A.E.R. during the ’fifties. Am Econ Rev 50(5):1011–1014

CNR (Consiglio Nazionale delle Ricerche) (2013) http://www.cnr.it/sitocnr/Englishversion/Englishversion.html. Accessed 25 Sept 2013

CWTS (2013) Leiden Rankings. http://www.leidenranking.com/. Accessed 29 Sept 2013

Declan B (2007) Academics strike back at spurious rankings. Nature 447(7144):514

DoCampo D (2011) On using the Shanghai ranking to assess the research performance of university systems. Scientometrics 86(1):77–92

Dolan WP (1976) The ranking game: the power of the academic elite. pp 121. Retrieved from ERIC: ED 129 131

Editorial (2007) Higher Education in Europe 32(1):1–3

Enserink M (2007) Who ranks the university rankers? Science 317(5841):1026–1028

European Commission (2008) Ranking Europe’s universities. European Commission IP/08/1942, 11/12/2008. http://europa.eu/rapid/press-release_IP-08-1942_en.htm. Accessed 25 Sept 2013

Excellence in Research for Australia (ERA) initiative (2013) http://www.arc.gov.au/era/default.htm. Accessed 25 Sept 2013

Farrell EF, Van Der Werf M (2007) Playing the rankings game. Chron High Educ 53(38):A.11

Federkeil G (2009) Ranking: new developments in Europe. 3rd International Symposium on University Rankings, 26 February 2009. http://www.mediabank.leidenuniv.nl/ppt/ics/2009/federkeil.ppt

From the Editors (2002) Higher Education in Europe 27(4):359

Garfield E (1955) Citation indexes for science. Science 122(3159). http://www.jstor.org/stable/1749965

Garfield E (1979) Is citation analysis a legitimate evaluation tool? Scientometrics 1(4):359–375. doi:10.1007/BF02019306

Garfield E, Sher IH (1963) New factors in the evaluation of scientific literature through citation indexing. Am Doc 14(3):195–201

Hazelkorn E (2007) OECD: consumer concept becomes a policy instrument. University World News. http://www.universityworldnews.com/article.php?story=20071108144018483. Accessed 25 Sept 2013

Hazelkorn E (2008) Learning to live with league tables and ranking: the experience of institutional leaders. High Educ Policy 21(2):193–215

HEEACT (2013) Higher Education Evaluation and Accreditation Council of Taiwan. http://www.heeact.edu.tw/mp.asp?mp=4. Accessed 25 Sept 2013

HEFCE (2013) Higher Education Funding Council for England: Research. http://www.hefce.ac.uk/research/. Accessed 25 Sept 2013

Henshaw JT (2006) The ratings game: overall measurement and rankings in Does measurement measure up? How numbers reveal and conceal the truth. Johns Hopkins University Press, Baltimore

Hirsch JE (2005) An index to quantify an individual’s scientific research output. Proc Natl Acad Sci USA 102(46):16569–16572

Hood WW, Wilson CS (2001) The literature of bibliometrics, scientometrics and informetrics. Scientometrics 52(2):291–314

Hou ATC, Ince M, Chiang CL (2012) A reassessment of Asian Pacific excellence programs in higher education: the Taiwan experience. Scientometrics 92(1):23–24

Hughes RM (1925) A study of the graduate schools of America. Miami University, Oxford, Ohio

IDEAS Rankings (2013, updated monthly) http://ideas.repec.org/top/. Accessed 1 Oct 2013

IHEP (Institute for Higher Education Policy) (2006) Berlin principles on ranking of higher education institutions. http://www.ihep.org/assets/files/publications/a-f/BerlinPrinciplesRanking.pdf. Accessed 25 Sept 2013

IHEP (Institute for Higher Education Policy) (2007) College and university ranking systems: global perspectives and American challenges. April 2007, p 67. http://www.ihep.org/Publications/publications-detail.cfm?id=11. Accessed 25 Sept 2013

IREG Observatory on Academic Ranking and Excellence (2013) http://www.ireg-observatory.org/. Accessed 25 Sept 2013

Jacsó P (2008) Google scholar revisited. Online Inf Rev 32(1):102

Jin JC, Hong JH (2008) East Asian rankings of economics departments. J Asian Econ 19(1):74–82

Jin JC, Yau L (1999) Research productivity of the economics profession in East Asia. Econ Inq 37(4):706

Johnson LB (15 September 1965) 514: Statement by the President to the Cabinet and Memorandum on Strengthening Academic Capability for Science. American Presidency Project. http://www.presidency.ucsb.edu/ws/index.php?pid=27257. Accessed 4 Nov 2009

Journal M3trics (2012) Research analytics redefined. http://www.journalmetrics.com/about-journal-metrics.php. Accessed 1 Oct 2013

Key Perspectives Ltd. (2009) A comparative review of research assessment regimes in five countries and the role of libraries in the research assessment process. Report commissioned by OCLC Research. http://www.oclc.org/research/publications/library/2009/2009-09.pdf. Accessed 25 Sept 2013

Konkiel S (2013) Altmetrics: a 21st century solution to determining research quality. Online Searcher, July/August 2013:11–15

Kroc RJ (1984) Using citation analysis to assess scholarly productivity. Educ Res 13(6):17–22

Labi A (2008) Obsession with rankings goes global. Chron High Educ 55(8):A.27

Leung K (2007) The glory and tyranny of citation impact: an East Asian perspective. Acad Manag J 50(3):510–513

Liu NC, Cheng Y (2005) The academic ranking of world universities. High Educ Eur 30(2):127–136

Magoun HW (1966) The Cartter Report on quality in graduate education: institutional and divisional standings compiled from the Report. J High Educ 37(9):481–492

Meho LI, Yang K (2007) Impact of data sources on citation counts and rankings of LIS faculty: Web of Science, Scopus, Google Scholar. J Am Soc Inf Sci Technol 58(13):2105–2125

Meredith M (2004) Why do universities compete in the ratings game? An empirical analysis of the effects of the U.S. News and World Report college rankings. Res High Educ 45(5):443–461

Merisotis J, Sadlak J (2005) Higher education rankings: evolution, acceptance, and dialogue. High Educ Eur 30(2):97–101

Morse R (2010) The world’s best just got bigger and better. Morse Code: Inside the college rankings. http://www.usnews.com/education/blogs/college-rankings-blog/2010/02/25/the-worlds-best-just-got-bigger-and-better. Accessed 2 Oct 2013

Morse R, Space J (1990) What’s behind the rankings? U.S. News & World Report 108(11):48–49

National Taiwan University (2012) NTU Ranking. http://nturanking.lis.ntu.edu.tw/Default.aspx. Accessed 29 Sept 2013

Nature Publishing Index Asia Pacific (2013) Monthly. http://www.natureasia.com/en/publishing-index/asia-pacific/. Accessed 30 Sept 2013

OECD Directorate for Education (2013) Measuring learning outcomes in higher education (AHELO). http://www.oecd.org/site/ahelo/. Accessed 25 Sept 2013

Pagell RA (2008) Bibliometrics: from bean counting to academic accountability. Paper presented at CONCERT. http://library.smu.edu.sg/aboutus/Bibliometricscomplete.pdf. Accessed 25 Sept 2013

Pagell RA (2009) University research rankings: from page counting to academic accountability. Eval High Educ 3(1):71–101. http://ink.library.smu.edu.sg/library_research/1. Accessed 25 Sept 2013

Pagell RA, Lusk EJ (2002) Benchmarking academic business school libraries relative to their business school rankings. J Bus Financ Librariansh 7(4):3

Paul RJ (2008) Measuring research quality: the United Kingdom Government’s Research Assessment Exercise. Eur J Inf Syst 17(4):324–329

Pritchard A (1969) Statistical bibliography or bibliometrics? J Doc 25:348–349

Quacquarelli Symonds (2013) QS world university rankings. http://www.topuniversities.com/qs-world-university-rankings. Accessed Sept 2013

Rabesandratana T (2013) ‘Brussels ranking’ of universities off to a rocky start. Science 339(6118):383

Research Trends (2008) Quarterly newsletter. http://www.researchtrends.com

Richardson M (2011) A “democratization” of university rankings: U-Multirank. Research Trends (24):9

Saisana M, d’Hombres B, Saltelli A (2011) Rickety numbers: volatility of university rankings and policy implications. Res Policy 40(1):165–177

Salmi J, Saroyan A (2007) League tables as policy instruments: uses and misuses. High Educ Manag Policy 19(2):31–68. http://www.oecd.org/edu/imhe/45380007.pdf#page=33. Accessed 25 Sept 2013

San Francisco Declaration on Research Assessment. http://am.ascb.org/dora/. Accessed 30 Sept 2013

Schubert WH (1979) Contributions to AERA annual programs as an indicator of institutional productivity. Educ Res 8(7):13–17

SCImago Research Group (2013) SIR reports. http://www.scimagoir.com/#. Accessed Sept 2013

Solorzano L, Quick BE (1983) Rating the colleges: exclusive national survey. U.S. News & World Report 95:3

Times Higher Education (2013) The world university rankings. http://www.timeshighereducation.co.uk/world-university-rankings/. Accessed 2 Oct 2013

Thomson Reuters (2013) Science watch. http://sciencewatch.com/. Accessed Sept 2013

U-Multirank (2013) http://www.u-multirank.eu/. Accessed 3 Oct 2013

University Grants Committee (2013) http://www.ugc.edu.hk/eng/ugc/about/about.htm. Accessed 25 Sept 2013

University of Zurich (2013) How international rankings work. http://www.uzh.ch/about/portrait/rankings/top_en.html. Accessed 29 Sept 2013

Usher A (2009) Rankings 2.0: the new frontier in institutional comparisons. Aust Univ Rev 51(2):87–89

Van Raan AFJ, Moed H, van Leeuwen TN (2007) Scoping study on the use of bibliometric analysis to measure the quality of research in UK higher education institutions. 131 pp. http://webarchive.nationalarchives.gov.uk/20100202100434/http://www.hefce.ac.uk/pubs/rdreports/2007/rd18_07/rd18_07.pdf. Accessed 30 Sept 2013

Van Raan T, van Leeuwen T, Visser M (2011) Non-English papers decrease rankings. Nature 469:34. doi:10.1038/469034a

Van Vught F, Ziegele F (eds) (2011) U-Multirank: design and testing the feasibility of a multi-dimensional global university ranking. CHERPA Network, 183 pp. http://ec.europa.eu/education/higher-education/doc/multirank_en.pdf. Accessed 25 Sept 2013

Werner M, Bornmann L (2013) Journal impact factor: “the poor man’s citation analysis” and alternative approaches. Eur Sci Ed 39(3):62–63

Rights and permissions

This chapter is published under an open access license. Please check the 'Copyright Information' section either on this page or in the PDF for details of this license and what re-use is permitted. If your intended use exceeds what is permitted by the license or if you are unable to locate the licence and re-use information, please contact the Rights and Permissions team.

Copyright information

© 2014 The Editor(s) (if applicable) and the Author(s)

About this chapter

Cite this chapter

Pagell, R. (2014). Bibliometrics and University Research Rankings Demystified for Librarians. In: Chen, C., Larsen, R. (eds) Library and Information Sciences. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-54812-3_10

DOI: https://doi.org/10.1007/978-3-642-54812-3_10

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-54811-6

Online ISBN: 978-3-642-54812-3

eBook Packages: Business and Economics, Business and Management (R0)